By Zhihao and Zhanyi

An increasing number of enterprises now run AI and big data applications on Kubernetes, which improves resource elasticity and development efficiency. However, decoupling compute from storage introduces challenges such as high network latency, high network costs, and insufficient storage service bandwidth.

Consider high-performance computing workloads such as AI training and gene computing. In these scenarios, a large number of computations must run concurrently within a short timeframe, and multiple computing instances share access to the same data source in a file system. To support this, many enterprises use the Apsara File Storage NAS or CPFS service and mount it to computing tasks running on Alibaba Cloud Container Service for Kubernetes (ACK). This setup provides high-performance shared access for thousands of computing nodes.

As cloud-native machine learning and big data scenarios witness an increase in computing power, model size, and workload complexity, high-performance computing demands faster and more elastic access to parallel file systems.

Consequently, providing an elastic and swift experience for containerized computing engines has become a new storage challenge.

To tackle this challenge, we have launched the Elastic File Client (EFC). EFC builds a cloud-native storage system on the high extensibility, native POSIX interface, and high-performance directory tree structure of Alibaba Cloud's file storage service. We also integrate EFC with Fluid, a cloud-native data orchestration and acceleration system, to enable visibility, auto scaling, data migration, and computing acceleration of datasets. This combination provides a reliable, efficient, and high-performance solution for shared access to file storage in cloud-native AI and big data applications.

Fluid [1] is a cloud-native distributed data orchestration and acceleration system designed for data-intensive applications like big data and AI.

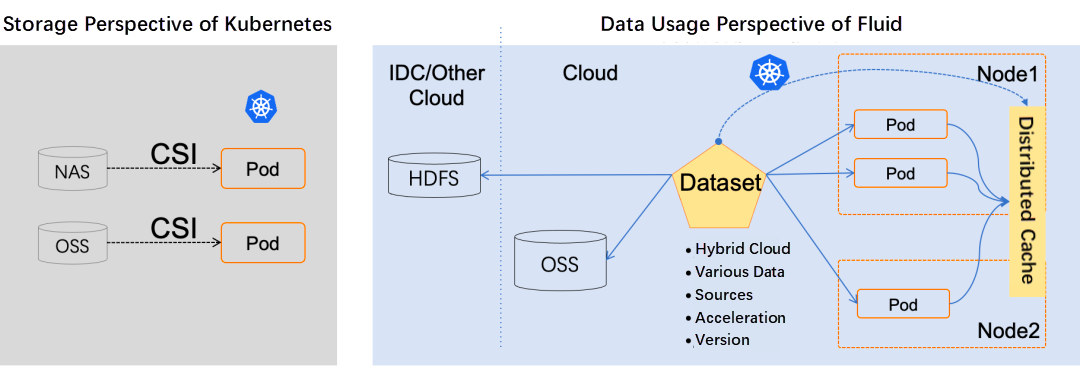

Unlike traditional storage-oriented Persistent Volume Claims (PVCs), Fluid introduces the concept of elastic datasets (Datasets) from the application's perspective, abstracting the "process of utilizing data on Kubernetes."

Fluid enables flexible and efficient movement, replication, eviction, transformation, and management of data between various storage sources (such as NAS, CPFS, OSS, and Ceph) and upper-level Kubernetes applications.

Fluid can implement CRUD operations, permission control, and access acceleration for datasets. Users can directly access abstracted data in the same way as they access native Kubernetes data volumes. Fluid currently focuses on two important scenarios: dataset orchestration and application orchestration.

• In terms of dataset orchestration, Fluid can cache data of a specified dataset to a Kubernetes node with specified features to improve data access speed.

• In terms of application orchestration, Fluid can schedule specified applications to nodes that have stored specified datasets to reduce data transfer costs and improve computing efficiency.

The two can also be combined to form a collaborative orchestration scenario, where dataset and application requirements are considered for node resource scheduling.
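As a rough illustration of the application orchestration idea, the sketch below picks the node that already caches the most data for a dataset. It is not Fluid's scheduler: the input format (one `<node-name> <cached-GiB>` pair per line) and the function name `pick_cache_local_node` are hypothetical and exist only for this example.

```shell
# Hypothetical helper, not part of Fluid: choose the node with the largest
# amount of cached data for a dataset.
# Input (stdin): one "<node-name> <cached-GiB>" pair per line.
pick_cache_local_node() {
  awk '
    # Track the largest cached amount seen so far; the first node wins ties.
    NR == 1 || $2 + 0 > max { max = $2 + 0; node = $1 }
    END { if (NR) print node }
  '
}
```

For example, `printf 'node-a 2\nnode-b 10\nnode-c 0\n' | pick_cache_local_node` selects `node-b`, the node where the application would read the least data over the network.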

Fluid offers an efficient and convenient data abstraction layer for cloud-native AI and big data applications. It encompasses the following core features related to abstracted data:

Fluid abstracts datasets not only by consolidating data from multiple storage sources but also by describing data mobility and characteristics. Additionally, it provides observability features such as total dataset volume, current cache space size, and cache hit ratio. With this information, users can evaluate the need for scaling up or down the cache system.

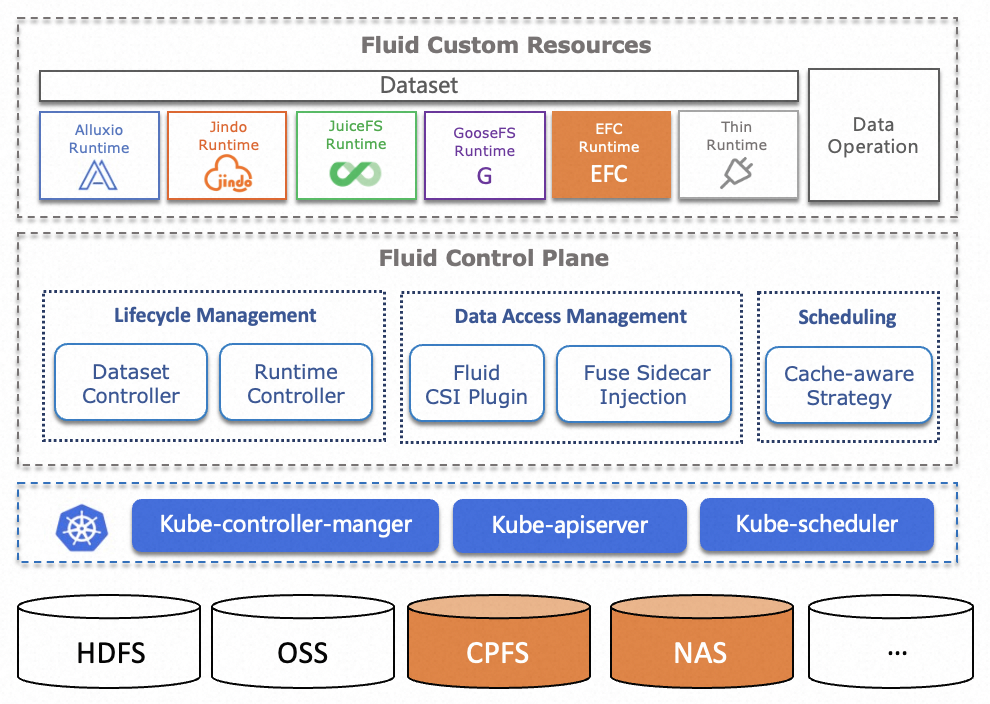
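The observability figures described above (cached size, cache capacity, and cache hit ratio) can feed a simple scaling decision. The following is a minimal sketch of such a rule. The thresholds (50% hit ratio, 90% and 30% cache usage) and the function name `cache_advice` are assumptions chosen for illustration, not values recommended by Fluid.

```shell
# Hypothetical rule of thumb, not part of Fluid.
# Usage: cache_advice <hit_ratio_percent> <cached_gib> <capacity_gib>
cache_advice() {
  awk -v hit="$1" -v cached="$2" -v cap="$3" 'BEGIN {
    # Share of the cache capacity that is currently in use, in percent.
    usage = (cap > 0) ? cached / cap * 100 : 0
    if (hit < 50 && usage >= 90) {
      print "scale-up"      # cache is full and still missing often
    } else if (usage < 30) {
      print "scale-down"    # most of the cache capacity is unused
    } else {
      print "keep"
    }
  }'
}
```

With these assumed thresholds, `cache_advice 40 14 15` suggests scaling up, while `cache_advice 95 2 15` suggests scaling down.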

While Dataset is a unified abstract concept, different storage systems have distinct runtime interfaces, requiring different runtime implementations for actual data operations. Fluid provides Cache Runtime and Thin Runtime. Cache Runtime facilitates cache acceleration using various distributed cache runtimes like Alluxio, JuiceFS, Alibaba Cloud EFC, Jindo, and Tencent Cloud GooseFS. Thin Runtime offers unified access interfaces (e.g., s3fs, nfs-fuse) to integrate with third-party storage systems.

Fluid enables various operations, including data prefetching, data migration, and data backup, using Custom Resource Definitions (CRDs). It supports one-time, scheduled, and event-driven operations, allowing integration with automated operations and maintenance (O&M) systems.
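Fluid's `DataLoad` resource is one of the CRDs used for data prefetching. The sketch below prints a minimal `DataLoad` manifest for a given dataset. The helper name `dataload_manifest` and the `-warmup` name suffix are hypothetical, and whether a particular runtime supports prefetching should be checked against the Fluid documentation rather than assumed from this example.

```shell
# Hypothetical helper: print a minimal DataLoad manifest for a dataset.
# Usage: dataload_manifest <dataset-name> [namespace]
dataload_manifest() {
  dataset="$1"
  namespace="${2:-default}"
  cat <<EOF
apiVersion: data.fluid.io/v1alpha1
kind: DataLoad
metadata:
  name: ${dataset}-warmup
spec:
  dataset:
    name: ${dataset}
    namespace: ${namespace}
EOF
}
```

The output can be piped to the cluster, for example `dataload_manifest my-dataset default | kubectl create -f -`, which makes the operation easy to call from an automated O&M pipeline.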

Combining distributed data caching technology with features like autoscaling, portability, observability, and scheduling capabilities, Fluid improves data access performance.

Fluid supports multiple Kubernetes forms, including native Kubernetes, edge Kubernetes, serverless Kubernetes, and multi-cluster Kubernetes. It can run in diverse environments such as public clouds, edge environments, and hybrid clouds. Depending on the environment, Fluid can be run using either the CSI Plugin or sidecar mode for the storage client.

After cloud-native modernization, enterprise applications can build more flexible services. The question is how the storage of application data can become cloud-native at the same pace.

What is cloud-native storage?

Cloud-native storage is not merely a storage system built on the cloud or deployed in Kubernetes containers. It refers to a storage service that seamlessly integrates with Kubernetes environments to meet the requirements of business elasticity and agility.

Cloud-native storage must fulfill the following requirements:

However, none of the above requirements can be solved independently by relying on storage backend services or clients.

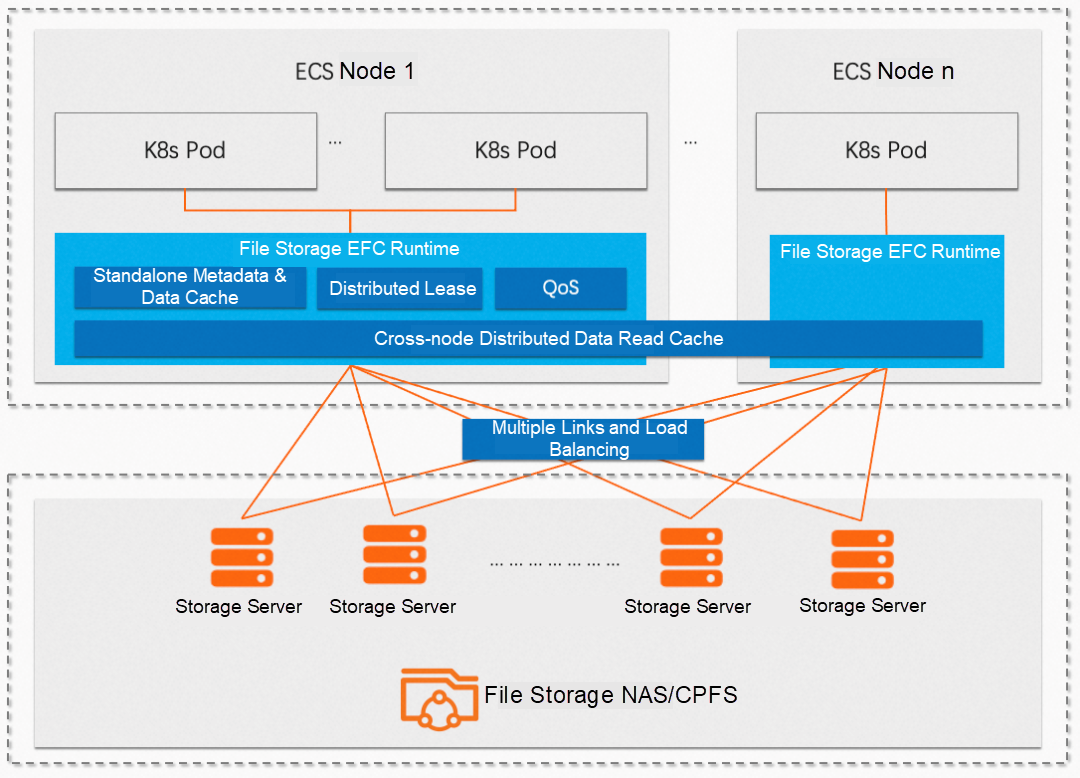

Therefore, Alibaba Cloud launched the Elastic File Client (EFC), which builds a cloud-native storage system on the high extensibility, native POSIX interface, and high-performance directory tree structure of Alibaba Cloud's file storage service. EFC replaces the traditional kernel-mode NFS client of NAS and provides acceleration capabilities such as multi-link access, metadata cache, and distributed data cache. It also provides client-side performance monitoring, QoS, and hot upgrade capabilities.

EFC also avoids a weakness of POSIX clients built on open-source FUSE, which cannot fail over within seconds, and thereby keeps large-scale computing stable.

EFC Runtime is a runtime implementation that accelerates Dataset access and uses EFC as its cache engine. By managing and scheduling EFC Runtime, Fluid enables visibility, auto scaling, data migration, and computing acceleration of datasets. EFC Runtime on Fluid is simple to deploy and use, is compatible with the native Kubernetes environment, and can improve data throughput automatically and in a controlled manner.
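One way to picture the scaling part is as a change to the `replicas` field of the EFCRuntime resource. The sketch below wraps a generic `kubectl patch` call for that purpose. It assumes that changing `spec.replicas` is how the cache workers are scaled, which this article does not state explicitly, and the function name `scale_efc_runtime` is hypothetical.

```shell
# Hypothetical helper: set spec.replicas on an EFCRuntime with a merge patch.
# Usage: scale_efc_runtime <runtime-name> <replicas>
scale_efc_runtime() {
  name="$1"
  replicas="$2"
  # Reject anything that is not a non-negative integer before calling kubectl.
  case "$replicas" in
    ''|*[!0-9]*)
      echo "replicas must be a non-negative integer" >&2
      return 1
      ;;
  esac
  kubectl patch efcruntime "$name" --type merge \
    -p "{\"spec\":{\"replicas\":${replicas}}}"
}
```

For example, `scale_efc_runtime my-runtime 5` would request five cache workers for a runtime named `my-runtime`.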

You can access Apsara File Storage by using EFC Runtime to obtain the following capabilities in addition to the enterprise-level basic features of Apsara File Storage:

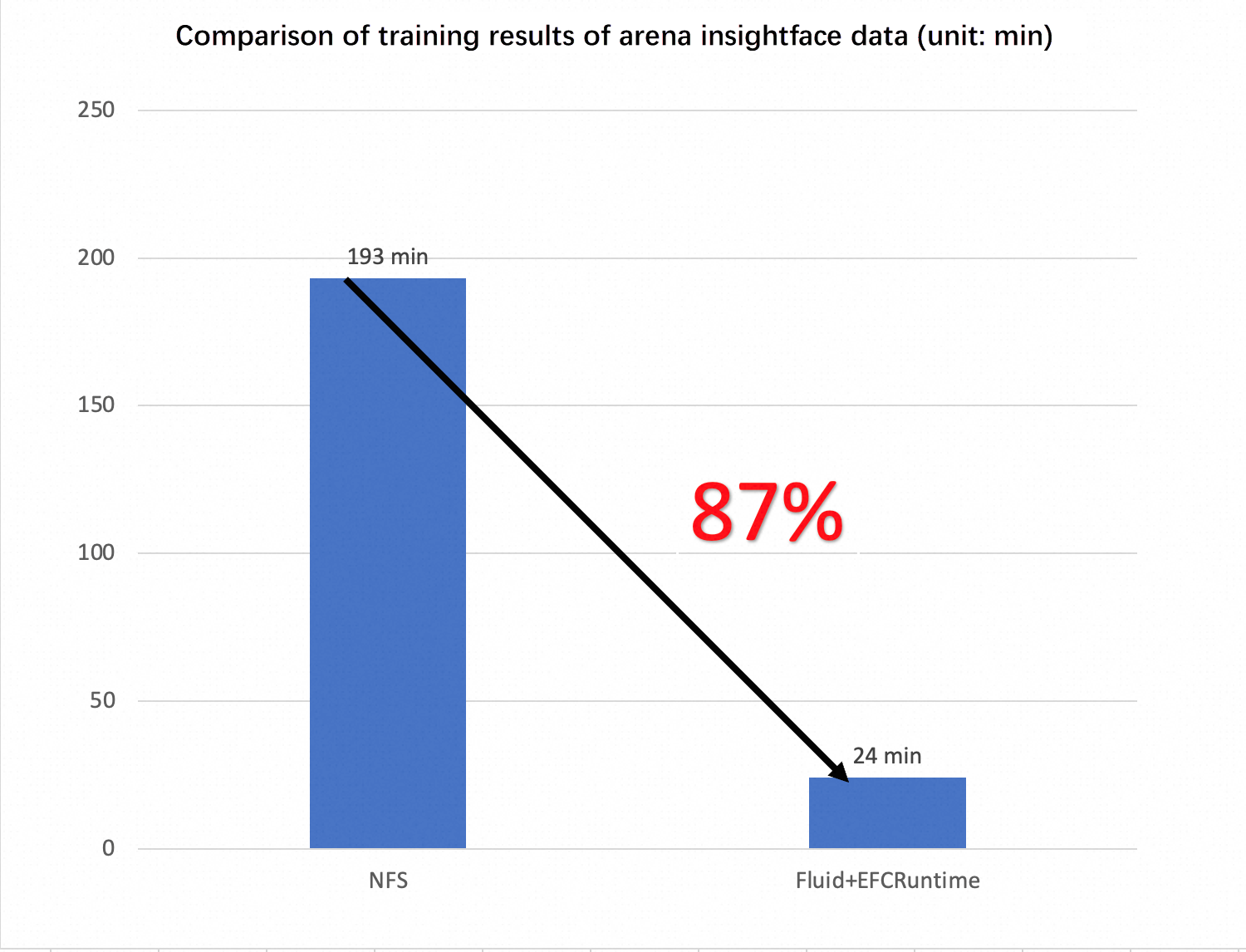

We use the insightface (ms1m-ibug) dataset [2] and Arena [3] on a Kubernetes cluster to verify the concurrent read speed on this dataset. With the local cache enabled, EFC Runtime performs significantly better than the open-source NFS client, and the training time is shortened by 87%.

The following uses Apsara File Storage NAS as an example to show how to use Fluid EFC Runtime to accelerate access to NAS files.

First, prepare an Alibaba Cloud ACK Pro cluster and an Alibaba Cloud NAS file system.

Creating the required EFC Runtime environment then takes only about 5 minutes. Follow the steps below to deploy it.

Create a dataset.yaml file that contains the following two parts:

    apiVersion: data.fluid.io/v1alpha1
    kind: Dataset
    metadata:
      name: efc-demo
    spec:
      placement: Shared
      mounts:
        - mountPoint: "nfs://<nas_url>:<nas_dir>"
          name: efc
          path: "/"
    ---
    apiVersion: data.fluid.io/v1alpha1
    kind: EFCRuntime
    metadata:
      name: efc-demo
    spec:
      replicas: 3
      master:
        networkMode: ContainerNetwork
      worker:
        networkMode: ContainerNetwork
      fuse:
        networkMode: ContainerNetwork
      tieredstore:
        levels:
          - mediumtype: MEM
            path: /dev/shm
            quota: 15Gi

Create the resources:

    kubectl create -f dataset.yaml

To view the Dataset:

    kubectl get dataset efc-demo

The expected output is:

    NAME       UFS TOTAL SIZE   CACHED   CACHE CAPACITY   CACHED PERCENTAGE   PHASE   AGE
    efc-demo                                                                  Bound   24m

You can create an application container to use the EFC acceleration service, or submit machine learning jobs to experience related features.
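Before starting workloads, it can help to wait until the Dataset reports the `Bound` phase shown in the output above. The following is a minimal polling sketch. It assumes the PHASE column is exposed as `.status.phase` on the Dataset resource; the function name `wait_for_dataset_bound` and the default retry values are hypothetical.

```shell
# Hypothetical helper: poll a Fluid Dataset until its phase is "Bound".
# Usage: wait_for_dataset_bound <dataset-name> [attempts] [interval-seconds]
wait_for_dataset_bound() {
  name="$1"
  attempts="${2:-30}"
  interval="${3:-10}"
  phase=""
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Assumption: the PHASE column maps to .status.phase of the Dataset.
    phase="$(kubectl get dataset "$name" -o jsonpath='{.status.phase}' 2>/dev/null || true)"
    if [ "$phase" = "Bound" ]; then
      echo "dataset $name is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "dataset $name did not reach Bound (last phase: ${phase:-unknown})" >&2
  return 1
}
```

For example, `wait_for_dataset_bound efc-demo 30 10` checks every 10 seconds for up to 5 minutes and returns a non-zero status if the Dataset never becomes `Bound`.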

Next, we will create two application containers to access the same 10GB file in the dataset. You can also use another file for testing, which needs to be pre-stored in the NAS file system.

Define the following app.yaml file:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: efc-app
      labels:
        app: nginx
    spec:
      serviceName: nginx
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx
              command: ["/bin/bash"]
              args: ["-c", "sleep inf"]
              volumeMounts:
                - mountPath: "/data"
                  name: data-vol
          volumes:
            - name: data-vol
              persistentVolumeClaim:
                claimName: efc-demo

After deploying app.yaml (for example, with kubectl create -f app.yaml), run the following command to view the size of the data file to be accessed:

    kubectl exec -it efc-app-0 -- du -h /data/allzero-demo

The expected output is:

    10G /data/allzero-demo

Run the following command to check the read time of the file in the first application container. If you use your own data file, replace /data/allzero-demo with its path.

    kubectl exec -it efc-app-0 -- bash -c "time cat /data/allzero-demo > /dev/null"

The expected output is:

    real 0m15.792s
    user 0m0.023s
    sys 0m2.404s

Then, in the other container, measure the time taken to read the same 10G file. If you use your own data file, replace /data/allzero-demo with its path:

    kubectl exec -it efc-app-1 -- bash -c "time cat /data/allzero-demo > /dev/null"

The expected output is:

    real 0m9.970s
    user 0m0.012s
    sys 0m2.283s

The output shows that throughput improves from 648 MiB/s to 1034.3 MiB/s, so the same file is read 59.5% more efficiently.
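The throughput figures can be reproduced from the `real` timings with a short calculation. The sketch below assumes the file is exactly 10 GiB (10240 MiB); the function names are hypothetical. With the timings printed above it yields about 648.4 MiB/s for the first read and about 1027.1 MiB/s for the second, an improvement of about 58.4%. The slightly higher figures quoted in the text would be consistent with the second read time being rounded to 9.9 s.

```shell
# Hypothetical helpers for checking the benchmark arithmetic.

# Usage: throughput_mib_s <size-in-MiB> <seconds>
throughput_mib_s() {
  awk -v size="$1" -v secs="$2" 'BEGIN { printf "%.1f\n", size / secs }'
}

# Usage: speedup_percent <first-read-seconds> <second-read-seconds>
# Relative throughput gain of the second read over the first, in percent.
speedup_percent() {
  awk -v first="$1" -v second="$2" 'BEGIN { printf "%.1f\n", (first / second - 1) * 100 }'
}
```

For example, `throughput_mib_s 10240 15.792` and `throughput_mib_s 10240 9.970` give the two throughput values, and `speedup_percent 15.792 9.970` gives the relative improvement. If you test with your own file, substitute its size in MiB.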

By combining Fluid with EFC, you can better support AI and big data services in cloud-native scenarios. This combination can improve data usage efficiency and enhance the integration of automated O&M through standardized operations such as data preheating and migration.

In addition, we will support running in serverless scenarios to provide a better distributed file storage access experience for serverless containers.

[1] Fluid

https://github.com/fluid-cloudnative/fluid

[2] insightface(ms1m-ibug) dataset

https://github.com/deepinsight/insightface/tree/master/recognition/_datasets_#ms1m-ibug-85k-ids38m-images-56

[3] Arena

https://www.alibabacloud.com/help/en/doc-detail/212117.html

[4] Use EFC to accelerate access to NAS

https://www.alibabacloud.com/help/en/doc-detail/600930.html
