By Xianwei (Team Leader, Alibaba Cloud Serverless Kubernetes) and Yili (Product Line Head, Alibaba Cloud Container Service)

Visit our website to learn more about Serverless Kubernetes and Serverless Container Instances

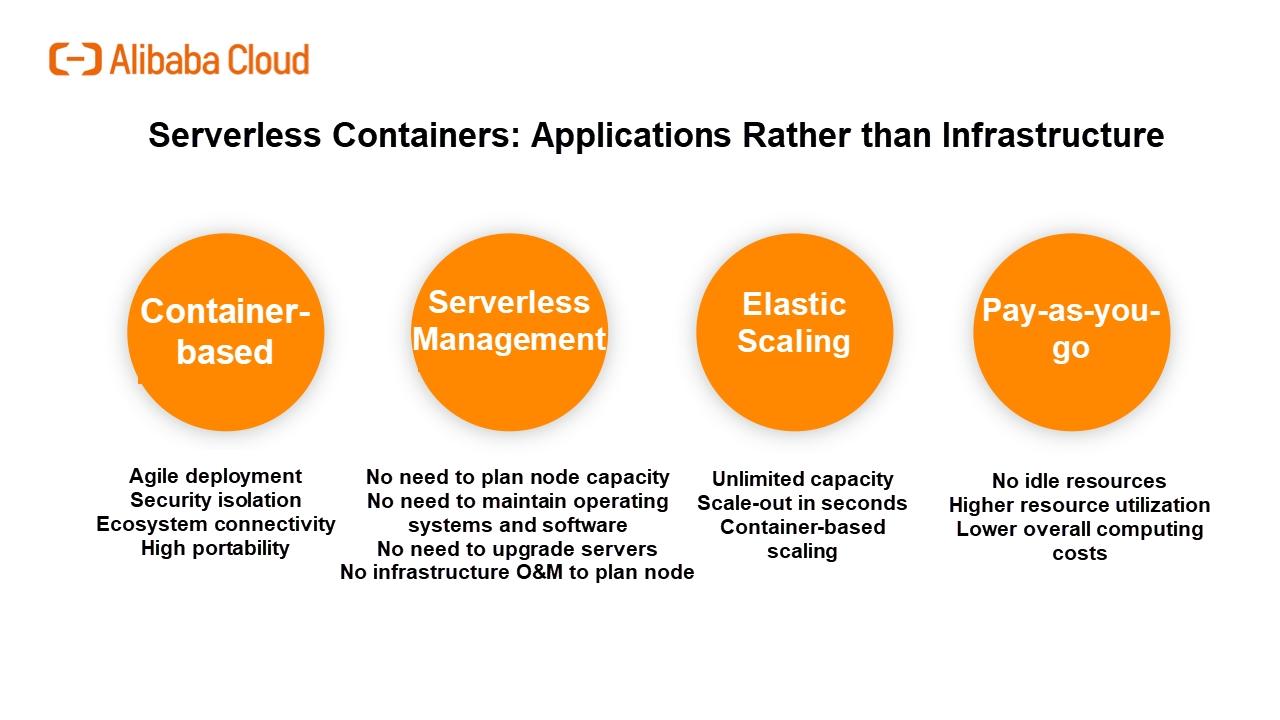

Serverless containers allow deploying container applications without purchasing or managing servers. Serverless containers significantly improve the agility and elasticity of container application deployment and reduce computing costs. This allows users to focus on managing business applications rather than infrastructure, which greatly improves application development efficiency and reduces O&M costs.

Kubernetes has become the de-facto standard for container orchestration systems in the industry. Kubernetes-based cloud-native application ecosystems, such as Helm, Istio, Knative, Kubeflow, and Spark on Kubernetes, use Kubernetes as a cloud operating system. Serverless Kubernetes has attracted the attention of cloud vendors. On the one hand, it simplifies Kubernetes management through a serverless mode, freeing businesses from capacity planning, security maintenance, and troubleshooting for Kubernetes clusters. On the other hand, it further unleashes the capabilities of cloud computing and implements security, availability, and scalability on infrastructure, differentiating itself from the competition.

Alibaba Cloud launched Elastic Container Instance (ECI) and Alibaba Cloud Serverless Kubernetes (ASK) in May 2018. These products have been commercially available since February 2019.

Gartner predicts that, by 2023, 70% of artificial intelligence (AI) tasks will be constructed through computing models such as containers and serverless computing models. According to a survey conducted by AWS, 40% of new AWS Elastic Container Service customers used serverless containers on Fargate in 2019.

Container as a Service (CaaS) is moving in the direction of serverless containers, which complement function Platform as a Service (fPaaS) and Function as a Service (FaaS). FaaS provides an event-driven programming model in which users only need to implement the processing logic of their functions, for example, transcoding and watermarking videos as they are uploaded. FaaS offers high development efficiency and powerful elasticity, but existing applications must be adapted to its development model. Serverless container applications, by contrast, are built on container images, which makes them highly flexible. The scheduling system supports various applications, including stateless applications, stateful applications, and computing tasks, so many existing applications can be deployed in a serverless container environment without modification.
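To make the FaaS programming model concrete, here is a minimal sketch of an event-driven function for the video upload example. The `UploadEvent` type, the `handle_upload` name, and the step strings are hypothetical and do not correspond to any specific FaaS product API; the platform that delivers events and scales the function is assumed and not shown.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UploadEvent:
    """Hypothetical event emitted when an object lands in storage."""
    bucket: str
    key: str


def handle_upload(event: UploadEvent) -> List[str]:
    """Return the processing steps to run for one uploaded object.

    Only the business logic lives here. The platform (assumed, not shown)
    would invoke this function once per event and scale it automatically.
    """
    if not event.key.lower().endswith((".mp4", ".mov")):
        return []  # not a video: nothing to do
    target = f"{event.bucket}/{event.key}"
    return [f"transcode:{target}", f"watermark:{target}"]


print(handle_upload(UploadEvent(bucket="videos", key="demo.mp4")))
```

The function owns no server, queue, or scaling logic, which is what makes FaaS efficient to develop for. An existing long-running service, however, would have to be restructured into handlers of this shape, whereas a container image can run it unchanged.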

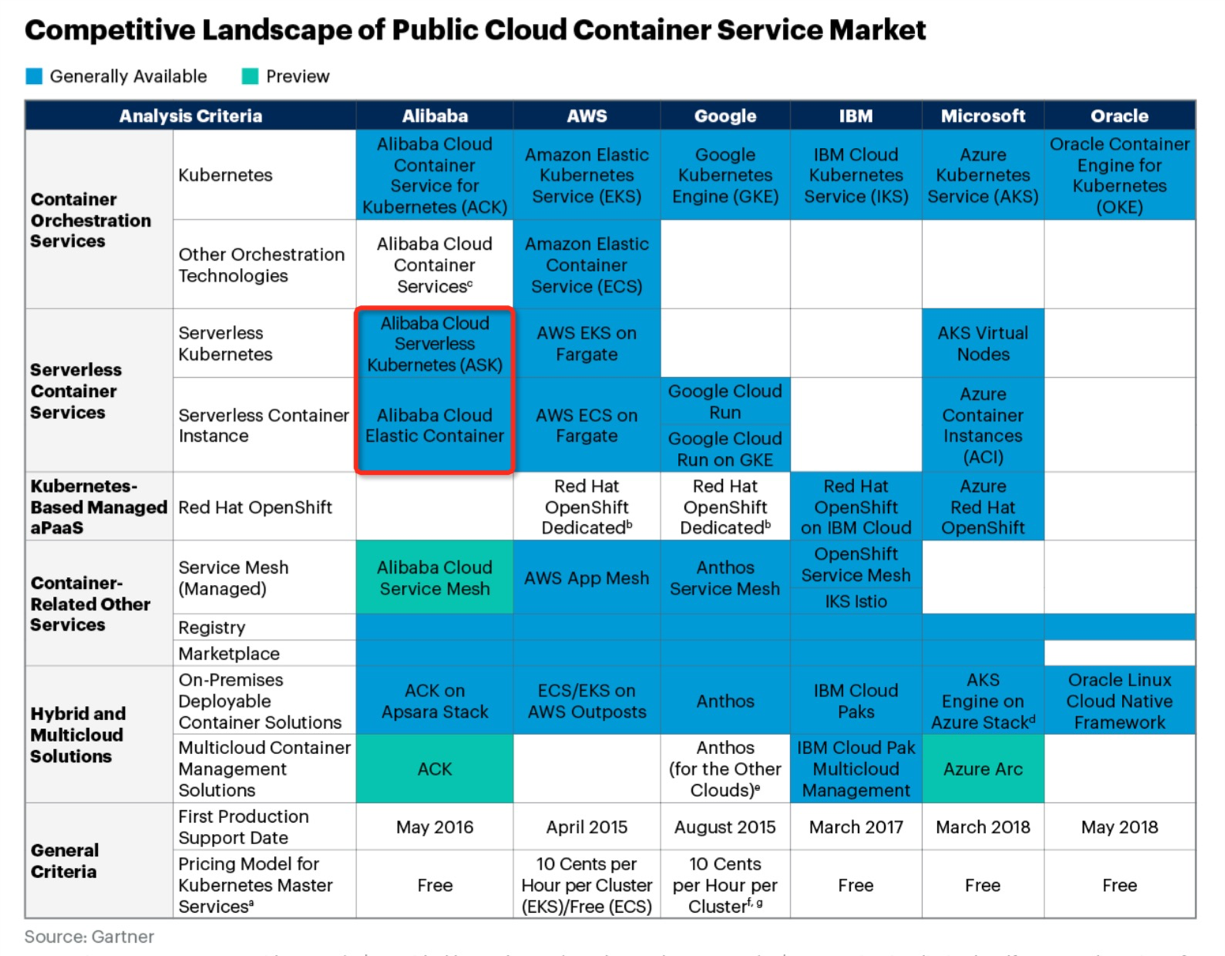

In Gartner's Public Cloud Container Service Market Report released in 2020, serverless containers are viewed as the main difference between the container service platforms of different cloud vendors, and their product capabilities are divided into serverless container instances and serverless Kubernetes. This is highly consistent with the product positioning of Alibaba Cloud ECI and ASK, as shown in the following figure.

According to the Gartner report, there is no industry standard for serverless containers, and cloud vendors currently provide many unique value-added capabilities through technological innovation. Gartner's report gives the following suggestions for cloud vendors:

Since the official public preview of Alibaba Cloud ECI and ASK in May 2018, we have been glad to see that customers are increasingly recognizing the value of serverless containers. Typical scenarios are as follows:

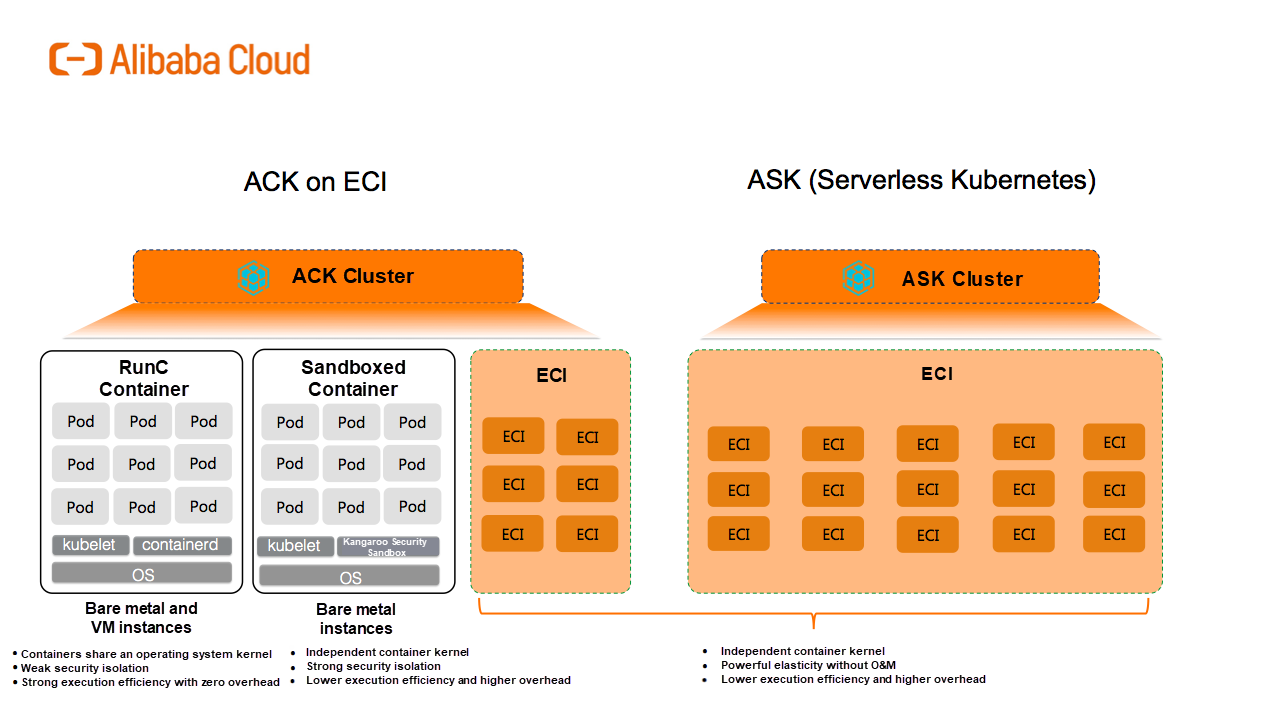

For running serverless containers with Kubernetes on Alibaba Cloud, there are two options: the ACK on ECI solution, which brings serverless containers to Kubernetes clusters, and the ASK product, which is optimized for serverless Kubernetes. The two complement each other and meet the needs of different customers.

Unlike standard Kubernetes, serverless Kubernetes is deeply integrated with Infrastructure as a Service (IaaS). The product modes of serverless Kubernetes help public cloud vendors improve their scale, efficiency, and capabilities through technological innovation. In terms of architecture, serverless containers are divided into two layers: container orchestration and the computing resource pool. Next, we will take a close look at the two layers and share our key ideas about the serverless container architecture and products.

The success of Kubernetes in container orchestration is due to the reputation of Google and the hard work of the Cloud Native Computing Foundation (CNCF). In addition, it is backed by Google Borg's experience and improvements in large-scale distributed resource scheduling and automated O&M. The technical points are as follows:

Serverless Kubernetes must be compatible with the Kubernetes ecosystem, provide the core value of Kubernetes, and be deeply integrated with cloud capabilities.

It allows users to directly use Kubernetes' declarative APIs and is compatible with Kubernetes application definitions, requiring no changes to Deployment, StatefulSet, Job, and Service.

Serverless Kubernetes is fully compatible with the Kubernetes extension mechanism, allowing it to support more workloads. In addition, the components of serverless Kubernetes strictly follow the Kubernetes controller pattern, in which control loops continuously drive the actual state toward the declared desired state.
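The control method mentioned above can be illustrated with a small sketch of a single reconciliation pass. This is a simplified illustration of the general pattern, not the implementation of any Kubernetes or ASK component; the `reconcile` function and the pod naming scheme are hypothetical.

```python
from typing import List


def reconcile(desired: int, pods: List[str]) -> List[str]:
    """One pass of a level-triggered control loop.

    Compares the declared replica count with the observed pods and
    returns the pod list after creating or deleting pods to close the gap.
    """
    pods = list(pods)  # work on a copy of the observed state
    next_id = 0
    while len(pods) < desired:  # too few pods: create the missing ones
        name = f"pod-{next_id}"
        next_id += 1
        if name not in pods:
            pods.append(name)
    while len(pods) > desired:  # too many pods: remove the surplus
        pods.pop()
    return pods


state = reconcile(3, ["pod-1"])
print(state)
print(reconcile(3, state))  # a second pass changes nothing
```

Because each pass looks only at the desired and observed state, running it again after a failure or a missed event is safe, which is why components built this way compose well with the rest of the ecosystem.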

Serverless Kubernetes makes full use of cloud capabilities for functions such as resource scheduling, load balancing, and service discovery. This radically simplifies the design of container platforms, increases their scale, and reduces O&M complexity. These implementations are transparent to users, which ensures portability. As a result, existing applications can be deployed smoothly on serverless Kubernetes, or across both traditional containers and serverless containers.

Conventional Kubernetes adopts a node-centric architecture, where a node is a carrier of pods. The Kubernetes scheduler selects a proper node from the node pool to run pods and uses Kubelet to complete pod lifecycle management and automated O&M. When resources in the node pool are insufficient, users need to expand the node pool and then scale out containerized applications.

The most important feature of serverless Kubernetes is that it decouples the container runtime from specific node runtime environments. As a result, users no longer need to worry about node O&M or node security, which reduces O&M costs. Moreover, scaling containers becomes much simpler: users only create pods for container applications as needed, without any prior capacity planning. In addition, serverless containers are backed by the entire cloud elastic computing infrastructure at runtime, which provides the cost and scale advantages of elasticity.
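The difference between the two models can be sketched as follows. This is an illustrative simplification, not the Kubernetes scheduler or the ASK implementation; the function names and the string standing in for a cloud resource request are hypothetical.

```python
from typing import Dict, Optional


def schedule_on_nodes(pod_cpu: float, free_cpu: Dict[str, float]) -> Optional[str]:
    """Node-centric placement: pick the first node with enough free CPU.

    Returns None when no node fits, which is the point at which a user
    would have to expand the node pool before the pod can run.
    """
    for node, free in free_cpu.items():
        if free >= pod_cpu:
            free_cpu[node] = free - pod_cpu  # reserve capacity on the node
            return node
    return None


def schedule_nodeless(pod_cpu: float) -> str:
    """Nodeless placement: request an instance sized to the pod itself.

    The returned string stands in for a call to the cloud resource pool.
    """
    return f"instance({pod_cpu} vCPU)"


nodes = {"node-a": 1.0, "node-b": 2.0}
print(schedule_on_nodes(2.0, nodes))  # fits on one of the nodes
print(schedule_on_nodes(2.0, nodes))  # pool exhausted, needs scale-out first
print(schedule_nodeless(2.0))         # no node pool to plan or expand
```

In the node-centric path, a failed placement turns into a capacity planning task for the user. In the nodeless path, capacity becomes the cloud platform's responsibility.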

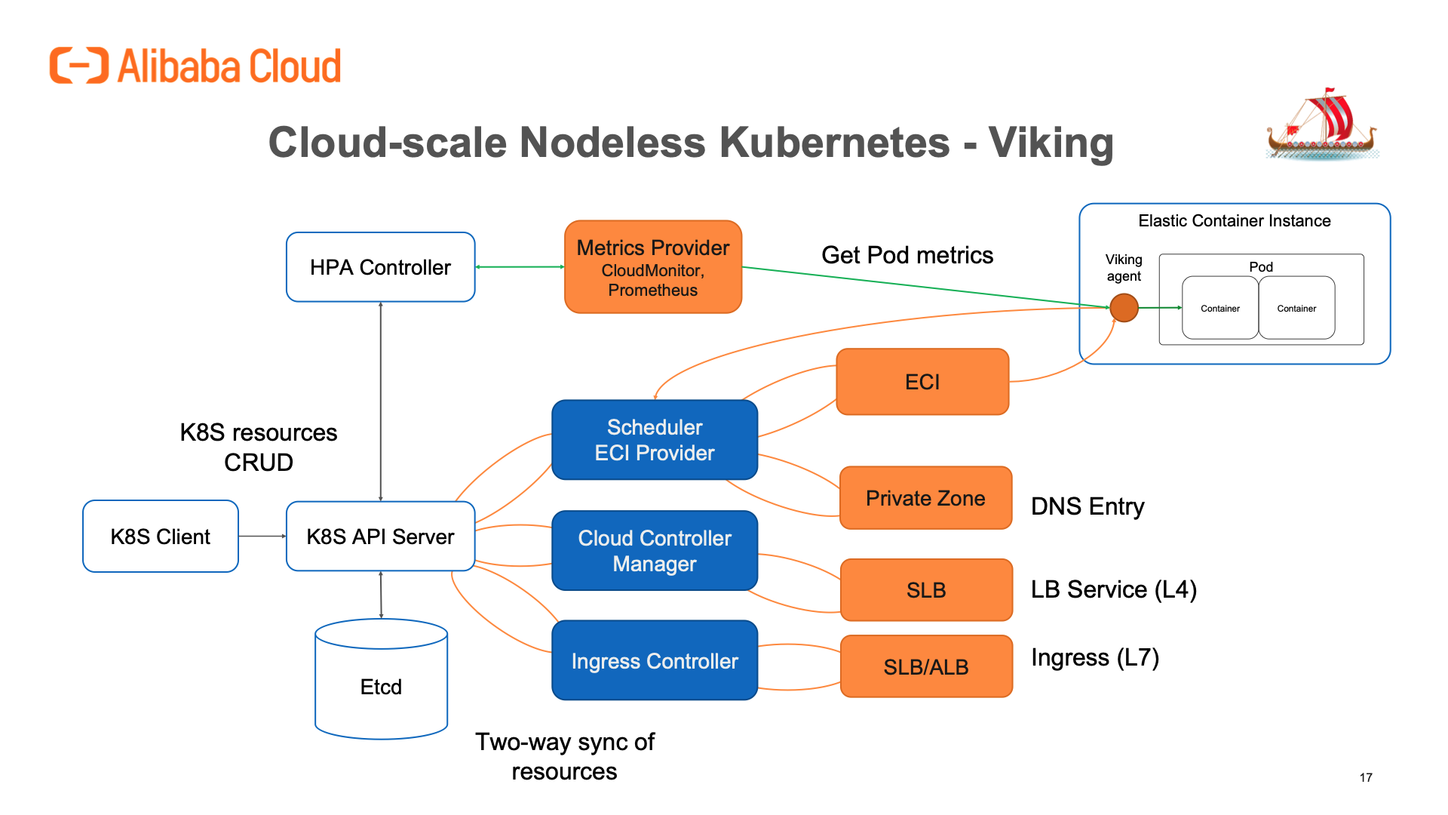

When we started the serverless Kubernetes project at the end of 2017, we thought about how to design its architecture as if it were born in the cloud. We extended and optimized the design and implementation of existing Kubernetes and created a cloud-scale nodeless Kubernetes architecture. Its internal codename is Viking, because Viking warships were famous for their agility and ease of operation.

1) We use the Alibaba Cloud Domain Name System (DNS) PrivateZone to configure DNS server address resolution for container groups, which supports headless services.

2) We use SLB to provide load balancing capabilities.

3) We implement Ingress routing rules through the layer-7 route provided by SLB and Application Load Balancer (ALB).

1) Knative is a serverless application framework in the Kubernetes ecosystem. The serving component supports traffic-based auto-scaling and scale-down to 0. Based on serverless Kubernetes capabilities, Alibaba Cloud Knative provides some new and distinctive features, such as auto-scaling to the minimum-cost container group specifications. This ensures we meet the service-level agreement (SLA) for the cold start time and effectively reduces the computing cost. In addition, SLB and ALB are used to implement Ingress Gateway, effectively reducing system complexity and costs.

2) In large-scale computing scenarios such as Spark, vertical optimization is used to improve the efficiency of batch task creation. These capabilities have been tested in cross-region customer scenarios in Jiangsu.
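The traffic-based auto-scaling and scale-down to 0 described for Knative above can be sketched with a simplified replica calculation. This assumes a concurrency-based target and ignores the averaging windows, panic mode, and scale-down delays of a real autoscaler; the function and parameter names are hypothetical.

```python
import math


def desired_replicas(in_flight: float, target_per_pod: float, max_replicas: int) -> int:
    """Return the replica count for the observed number of in-flight requests.

    Scales to zero when there is no traffic, otherwise provisions enough
    pods that each handles at most `target_per_pod` concurrent requests.
    """
    if target_per_pod <= 0:
        raise ValueError("target_per_pod must be positive")
    if in_flight <= 0:
        return 0  # scale-down to 0 when idle
    return min(max_replicas, math.ceil(in_flight / target_per_pod))


for load in (0, 25, 1000):
    print(load, desired_replicas(load, target_per_pod=10, max_replicas=50))
```

Scaling from zero means the first request after an idle period waits for a pod to start, which is why the cold start time matters for the SLA mentioned above.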

For serverless containers, customers require the following features:

The key technologies of ECI are as follows:

Security is the first consideration for cloud products. Therefore, ECI implements a secure and isolated container runtime based on the Kangaroo cloud-native container engine and lightweight micro virtual machines (VMs). In addition to runtime resource isolation, strict multi-tenant data segregation is also implemented for a series of capabilities such as the network, storage, quota, and elastic service level objective (SLO) based on Alibaba Cloud infrastructure.

In terms of performance, the operating system and containers of the Kangaroo container engine are highly optimized, and ECI is integrated with existing Alibaba Cloud infrastructure capabilities for container execution, such as the straight-through Elastic Network Interface (ENI) and storage object mounting. These capabilities ensure that the application execution efficiency on ECI is equal to or even slightly higher than that of runtime environments on existing ECS instances.

Unlike Azure Container Instances (ACI) and AWS Fargate on ECS, ECI has used pods as the basic scheduling and operation unit of serverless containers since the early stage of its design. This makes it easier to integrate with the upper-layer Kubernetes orchestration system.

ECI provides pod lifecycle management capabilities, such as the create, delete, describe, logs, exec, and metrics capabilities. ECI pods have the same capabilities as Kubernetes pods, except that the sandbox for ECI pods is based on micro VMs rather than cgroups or namespaces. This enables ECI pods to perfectly support various Kubernetes applications, including Istio, which uses sidecar injection.

In addition, standardized APIs shield the implementation of the underlying resource pool and support different underlying forms, architectures, and resource pools as well as production scheduling implementation. The underlying ECI architecture has been optimized and iterated multiple times. For example, Kangaroo security sandbox creation can be offloaded to the MOC card of X-dragon Hypervisor, without affecting the upper-layer application and cluster orchestration.

In addition, the APIs must be available in multiple scenarios, allowing users to use serverless containers in ASK and Alibaba Cloud Container Service for Kubernetes (ACK), user-created Kubernetes, and hybrid cloud scenarios. This is an important difference between Alibaba Cloud ECI and similar products from other vendors.

Through pooling, we can fully integrate the computing power of Alibaba Cloud's elastic computing resource pool, including different purchase options (such as pay-as-you-go, spot, reserved instances (RI), and savings plans), multiple instance types (such as Graphics Processing Unit (GPU), virtual GPU (vGPU), and new CPU architectures), and diversified storage capabilities (such as Enhanced SSD (ESSD) and local disks). This gives ECI more advantages in product features, cost, and scale, allowing it to satisfy the computing cost and scaling requirements of customers.

Creating a serverless container means creating a computing resource and then assembling it with the basic IaaS resources it needs for computing, storage, and networking. However, unlike ECS instances, serverless containers face many unique challenges.

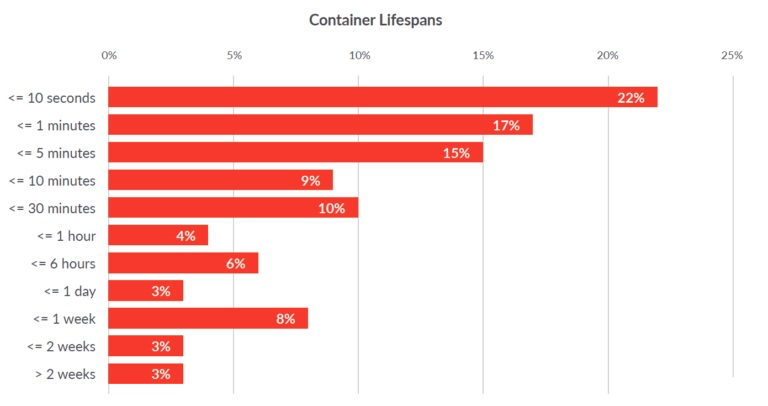

According to the Sysdig 2019 Container Usage Report, more than 50% of containers live for less than 5 minutes. To meet the needs of customers, serverless containers must be started in seconds. The startup speed of serverless containers is affected by the following factors:

In the future, we will work with other teams to further optimize the startup efficiency of serverless containers.

Compared with ECS instance scheduling, serverless container scheduling pays more attention to the certainty of elastic resource supply. Serverless containers are purchased on demand, while ECS instances are purchased in advance. When containers are created at large scale, it is difficult to guarantee the elastic SLO for a single user in a single zone. When we support customers during e-commerce promotions or New Year's activities, or provide service assurance during the current epidemic, customers attach great importance to whether the cloud platform can guarantee the SLO for elastic resource supply. By combining serverless Kubernetes, the upper-layer scheduler, and the ECI elastic supply policy, we give customers more control over elastic resource supply so that they can balance cost, scale, and holding time.

The efficiency of concurrent creation is also crucial for serverless containers. In highly elastic scenarios, customers want to start 500 pod replicas within 30 seconds to handle traffic spikes. Computing services such as Spark and Presto have even higher requirements for concurrent startup.
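A quick calculation shows what the 500 pods in 30 seconds target implies for the creation path. The 10-second per-pod startup time used below is a hypothetical figure chosen for illustration, not a measured ECI value, and the model assumes that each creation slot starts pods one after another.

```python
import math


def required_rate(replicas: int, deadline_s: float) -> float:
    """Average number of pods that must become ready per second."""
    return replicas / deadline_s


def min_concurrency(replicas: int, deadline_s: float, startup_s: float) -> int:
    """Minimum number of parallel creation slots needed to meet the deadline.

    Each slot can complete floor(deadline_s / startup_s) pods in sequence.
    """
    waves = math.floor(deadline_s / startup_s)
    if waves < 1:
        raise ValueError("a single pod cannot start within the deadline")
    return math.ceil(replicas / waves)


print(round(required_rate(500, 30), 1))          # pods per second
print(min_concurrency(500, 30, startup_s=10.0))  # parallel creations needed
```

Under these assumptions the platform must sustain well over a hundred creations in flight at once, so per-pod startup time and creation concurrency have to be optimized together.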

To effectively reduce computing costs, serverless containers need to be deployed at higher density. Because ECI uses microVM technology, each container group has an independent operating system kernel. In addition, some auxiliary processes run inside ECI to ensure compatibility with Kubernetes semantics. Currently, each ECI process has an additional overhead of about 100 MB. Similarly, each pod in EKS on Fargate carries a system overhead of about 256 MB. This overhead reduces the deployment density of serverless containers. We need to sink common overheads from ECI into the infrastructure, or even offload some of them to the MOC cards of ECS Bare Metal Instances.
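The effect of this overhead on density can be estimated with simple arithmetic. The sketch below assumes the overhead applies once per pod and uses a hypothetical 64 GiB host running 512 MB pods; only the 100 MB and 256 MB figures come from the text above.

```python
def pods_per_host(host_mb: int, pod_mb: int, overhead_mb: int) -> int:
    """Number of pods that fit in host memory when each pod adds overhead."""
    return host_mb // (pod_mb + overhead_mb)


def overhead_share(pod_mb: int, overhead_mb: int) -> float:
    """Fraction of each pod's memory footprint that is overhead."""
    return overhead_mb / (pod_mb + overhead_mb)


HOST_MB = 64 * 1024  # hypothetical host size
POD_MB = 512         # hypothetical pod memory request

for overhead in (0, 100, 256):
    density = pods_per_host(HOST_MB, POD_MB, overhead)
    share = overhead_share(POD_MB, overhead)
    print(f"overhead={overhead} MB  pods={density}  share={share:.1%}")
```

The smaller the pod, the larger the share of memory lost to overhead, which is why moving common overheads into the infrastructure matters most for small, short-lived workloads.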

Major cloud vendors will continue to invest in serverless containers to further differentiate their container service platforms. As mentioned above, the cost, compatibility, creation efficiency, and flexible supply guarantee are critical capabilities of the serverless container technology.

We will work with multiple Alibaba Cloud teams to build Alibaba Cloud IaaS infrastructure. We will further optimize existing serverless container formats to provide customers with serverless Kubernetes at lower cost, with a better experience and better compatibility. Based on serverless containers, upper-layer applications can be further developed and innovated in the future. The way forward for us is clear: Cloud-Native First, Serverless First.
