Summary: The presentation is based on vivo's real-world cases and showcases some key decisions and technical practices in building a modern data lakehouse, including component selection, architecture design, performance optimization, and data migration exploration. The content is divided into the following sections:

Component Selection and Architecture

Offline Acceleration

Unified Stream and Batch Link

Message Component Alternative

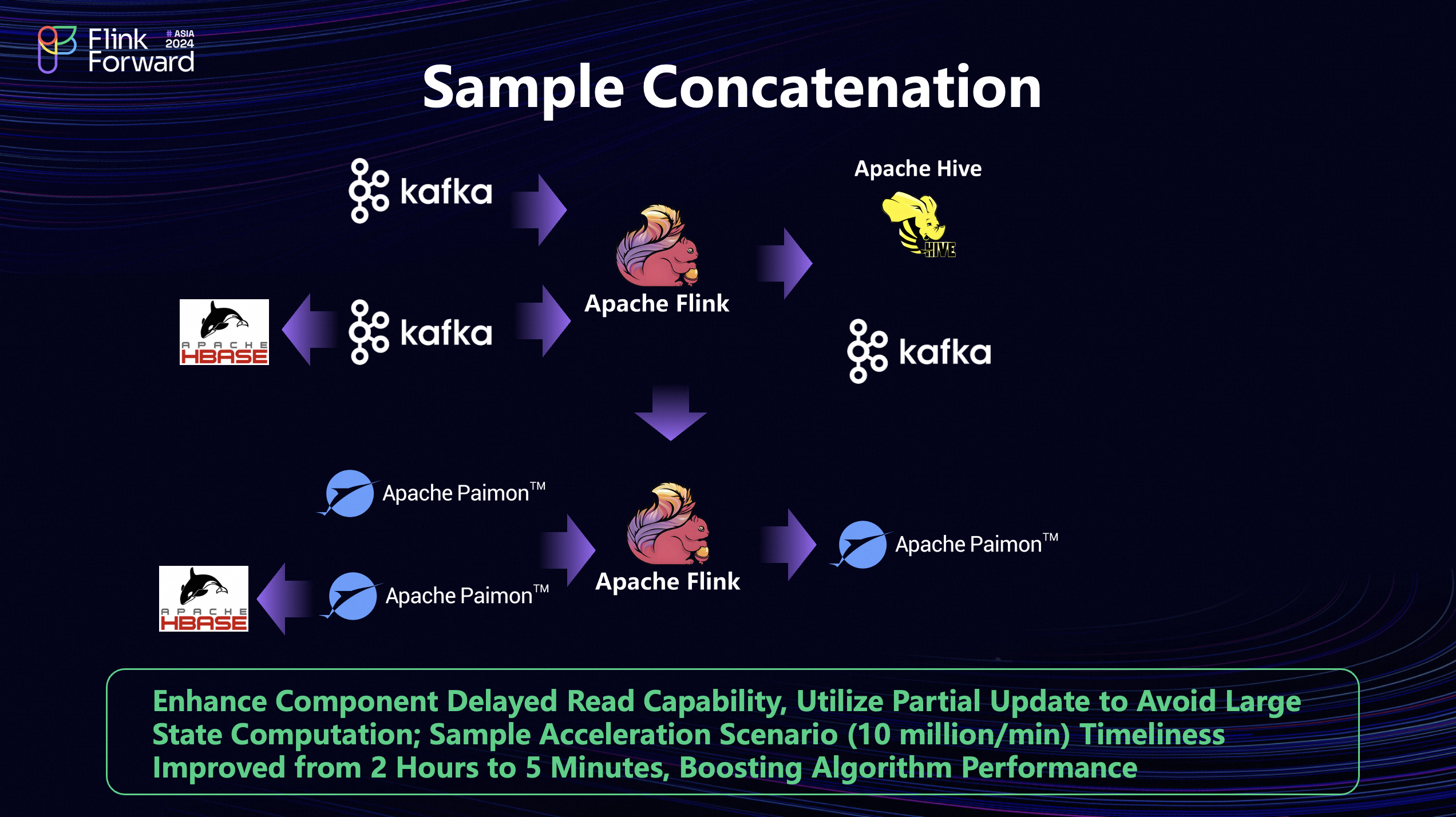

Sample Concatenation

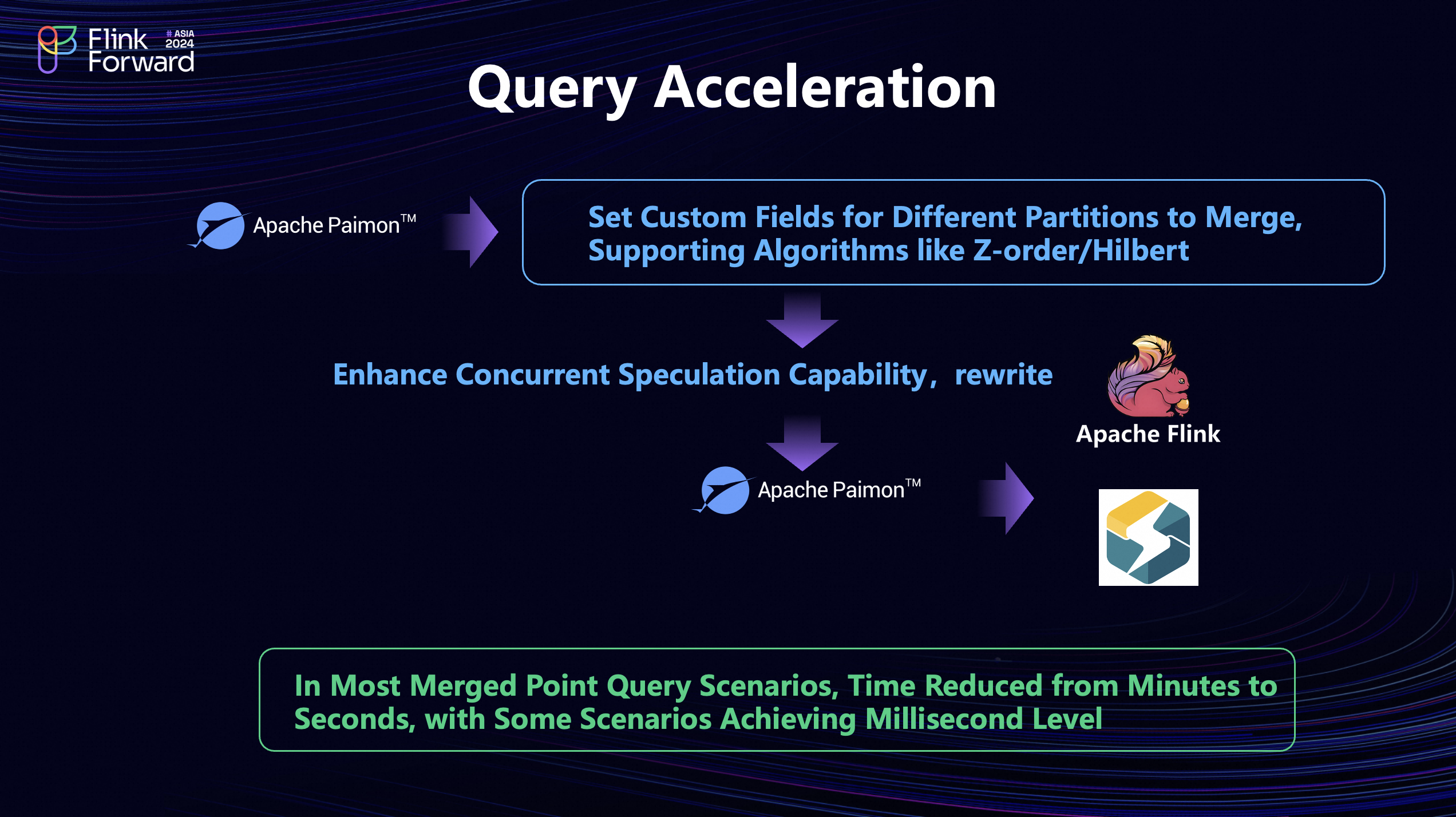

Query Acceleration

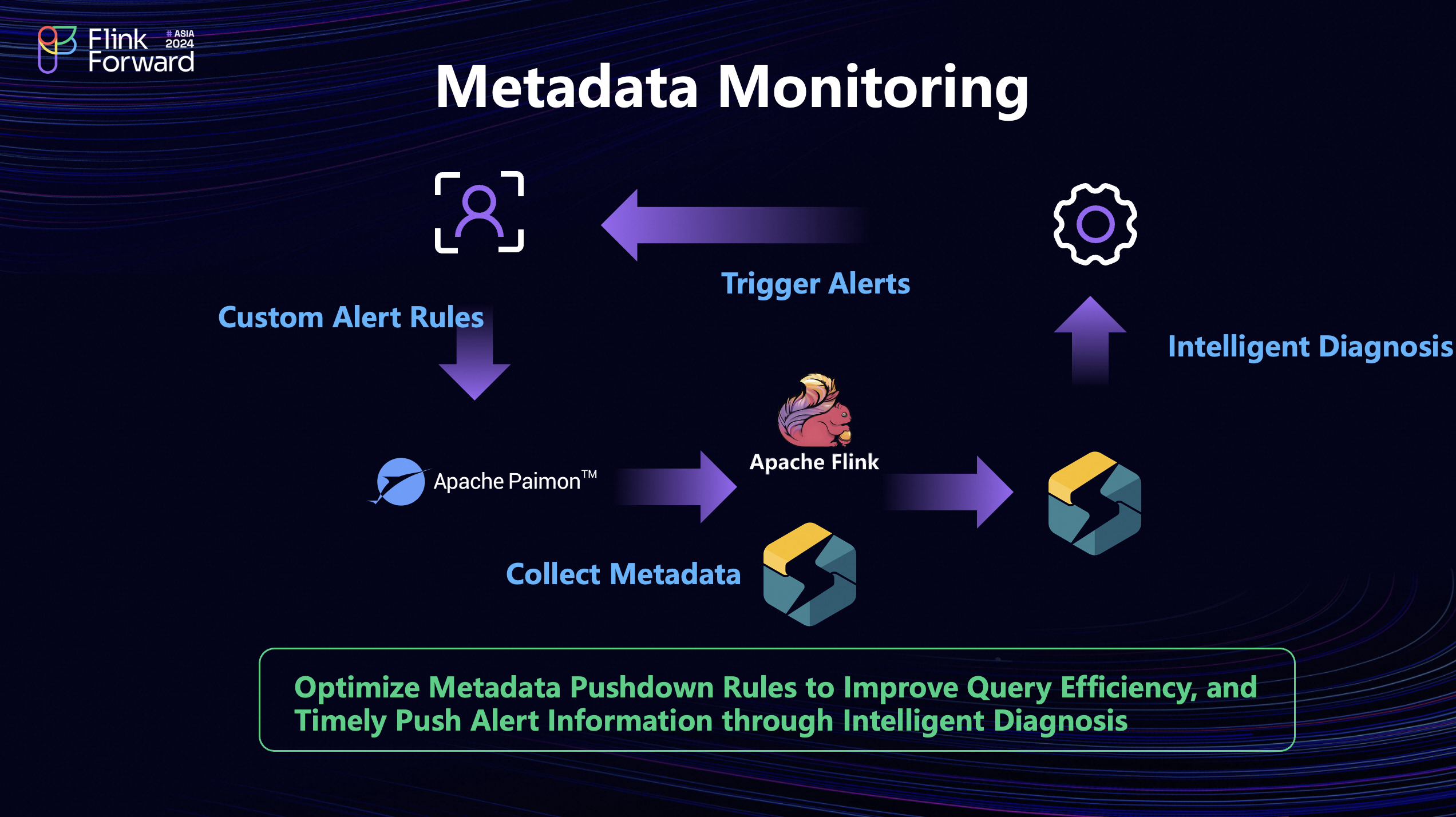

Metadata Monitoring

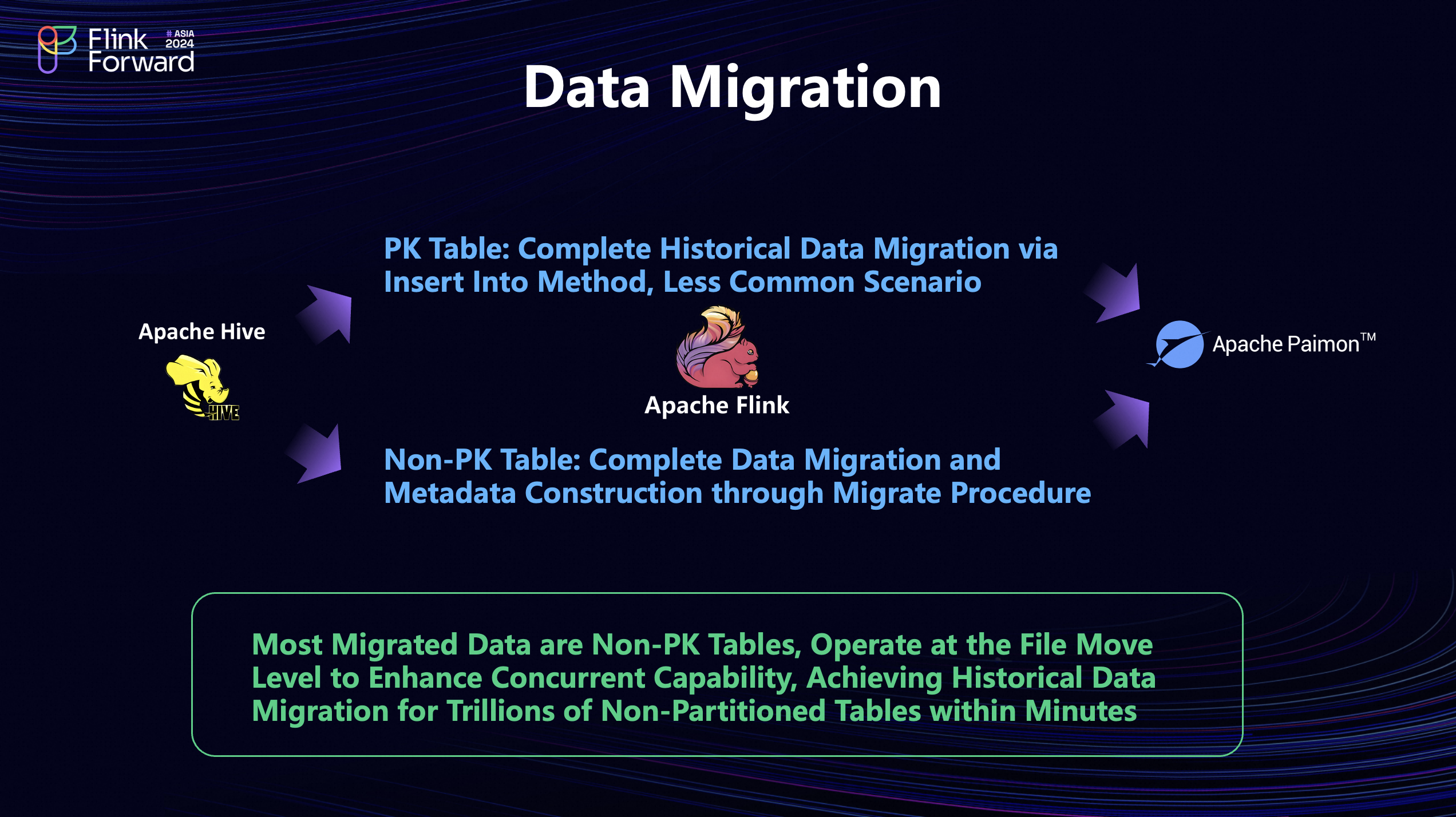

Data Migration

Future Outlook

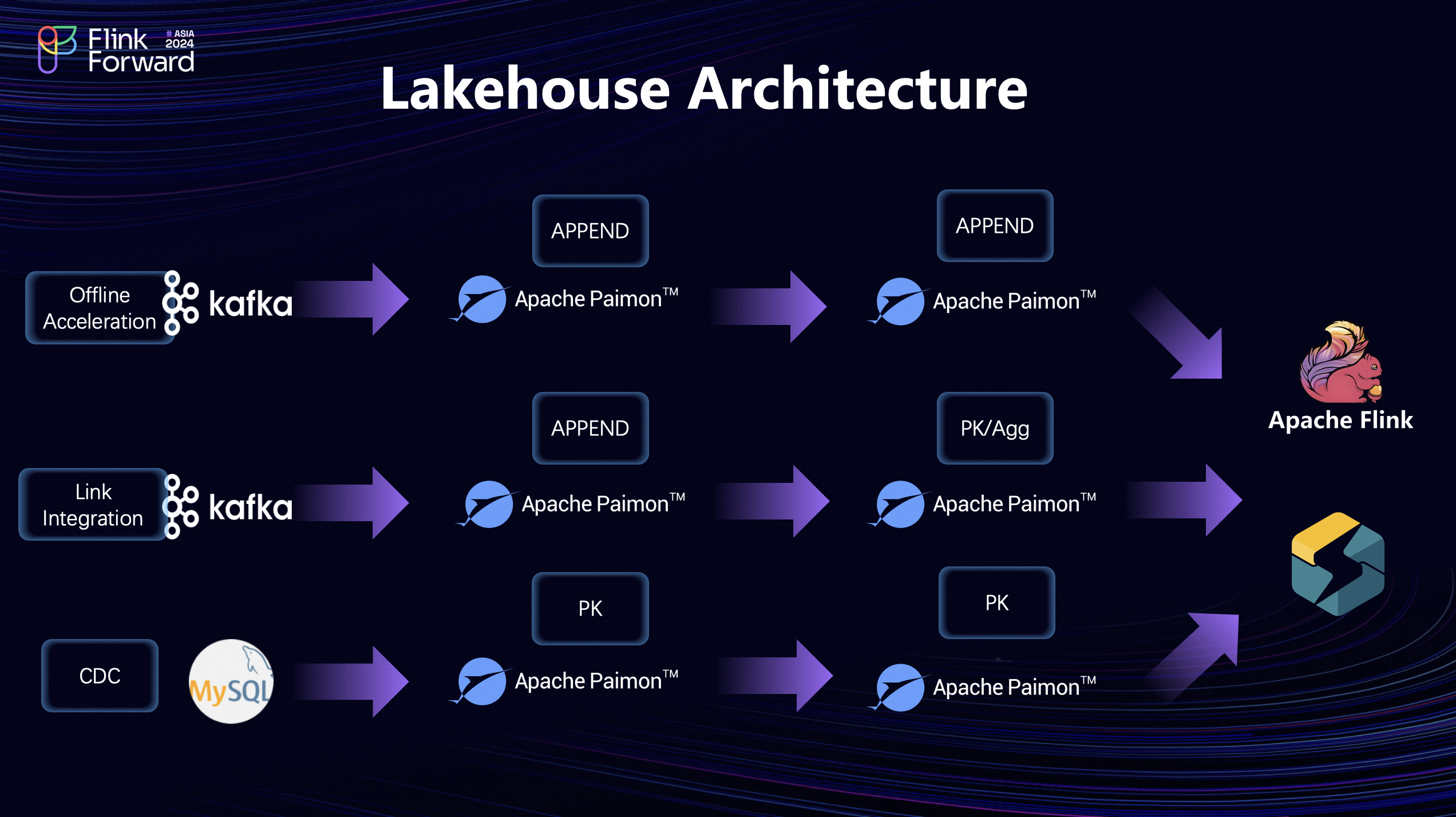

Our technology stack uses Flink as the primary computing engine, combined with StarRocks for federated query acceleration, and Paimon as the core storage layer covering all lakehouse scenarios. In terms of storage format selection, ORC was recommended in older versions. Since version 1.0, Parquet offers more robust features, supporting complex data types. Therefore, you can flexibly choose based on the version in use.

The unified lakehouse architecture has three major applications in realtime processing scenarios: offline acceleration, link merging, and traditional database data analysis optimization.

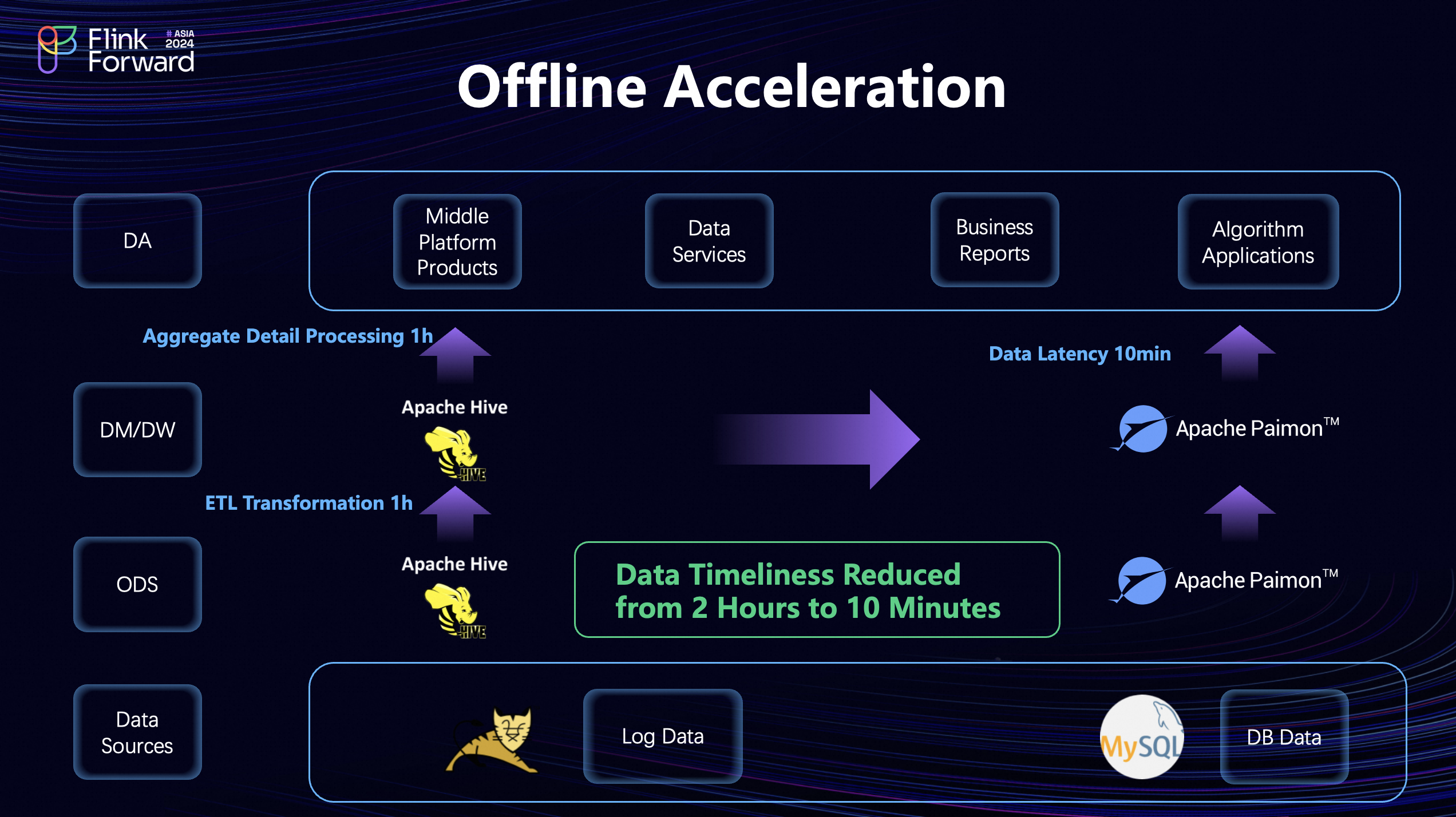

Below is a detailed explanation of the benefits brought by offline acceleration. Compared to traditional data warehouses, the offline acceleration solution using a unified lakehouse architecture significantly enhances the timeliness of data processing. Specifically, this architecture achieves transformative improvements in timeliness through the following means:

Example of Actual Production Link:

Link Integrity and Disaster Recovery:

Application Scenarios:

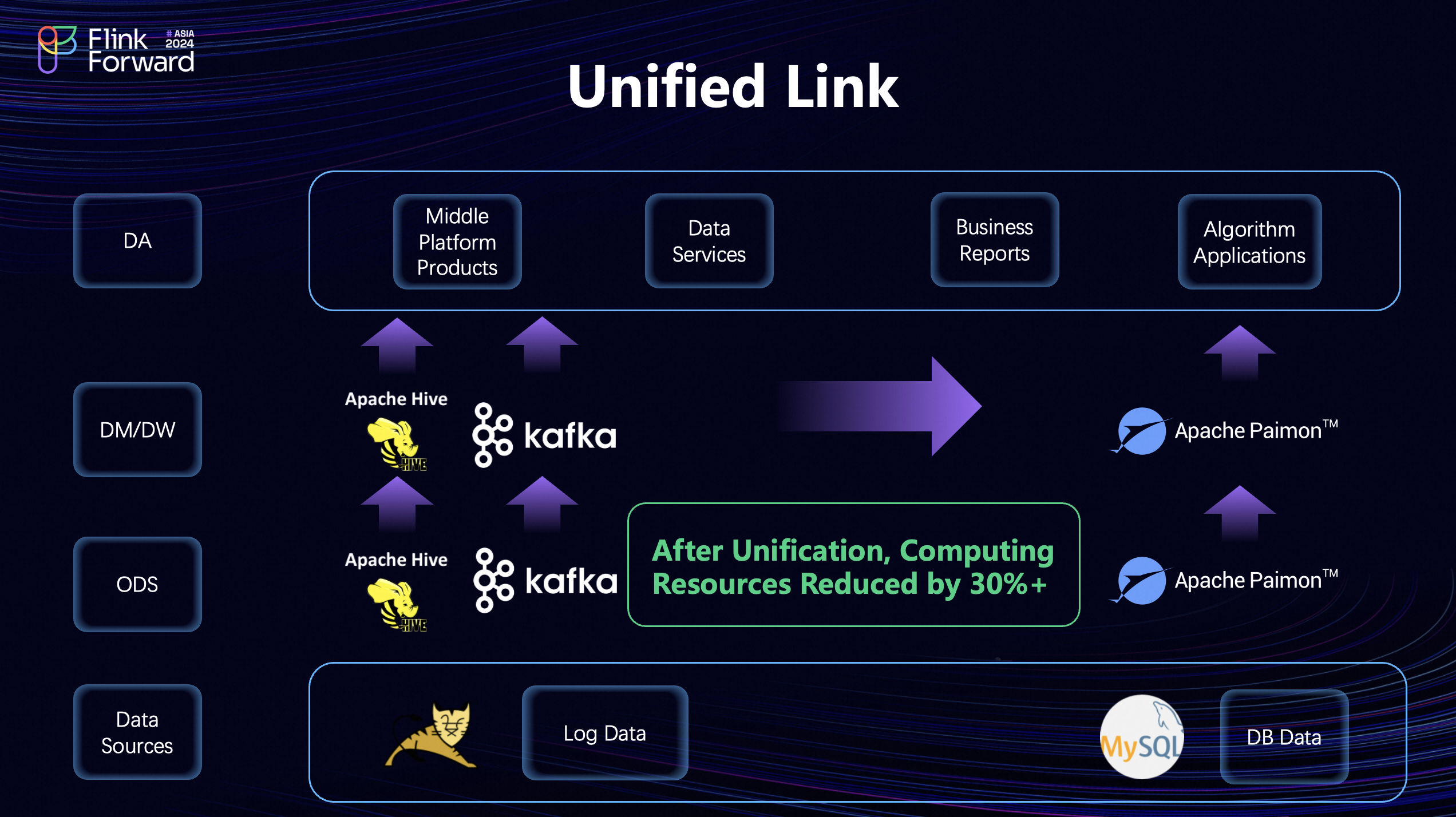

Traditional data processing architectures typically consist of two separate links: one for offline processing based on Spark and Hive, and another for realtime processing based on Kafka and Flink. Although this dual-link design ensures data accuracy and real-time capabilities, it consumes significant resources and lacks flexibility. Additionally, due to Kafka's data storage characteristics, data written to Kafka is often not easily accessible, adding complexity to its usage.

After adopting the Paimon and Flink architecture, all data processing links are completely unified, allowing both realtime and offline data to be written into Paimon tables and analyzed at any time, thereby enhancing flexibility. Furthermore, the merged link can reduce computational resource requirements by approximately 30%. With unified monitoring and comparison of indicators such as memory and CPU cores, more efficient resource management and optimization can be achieved.

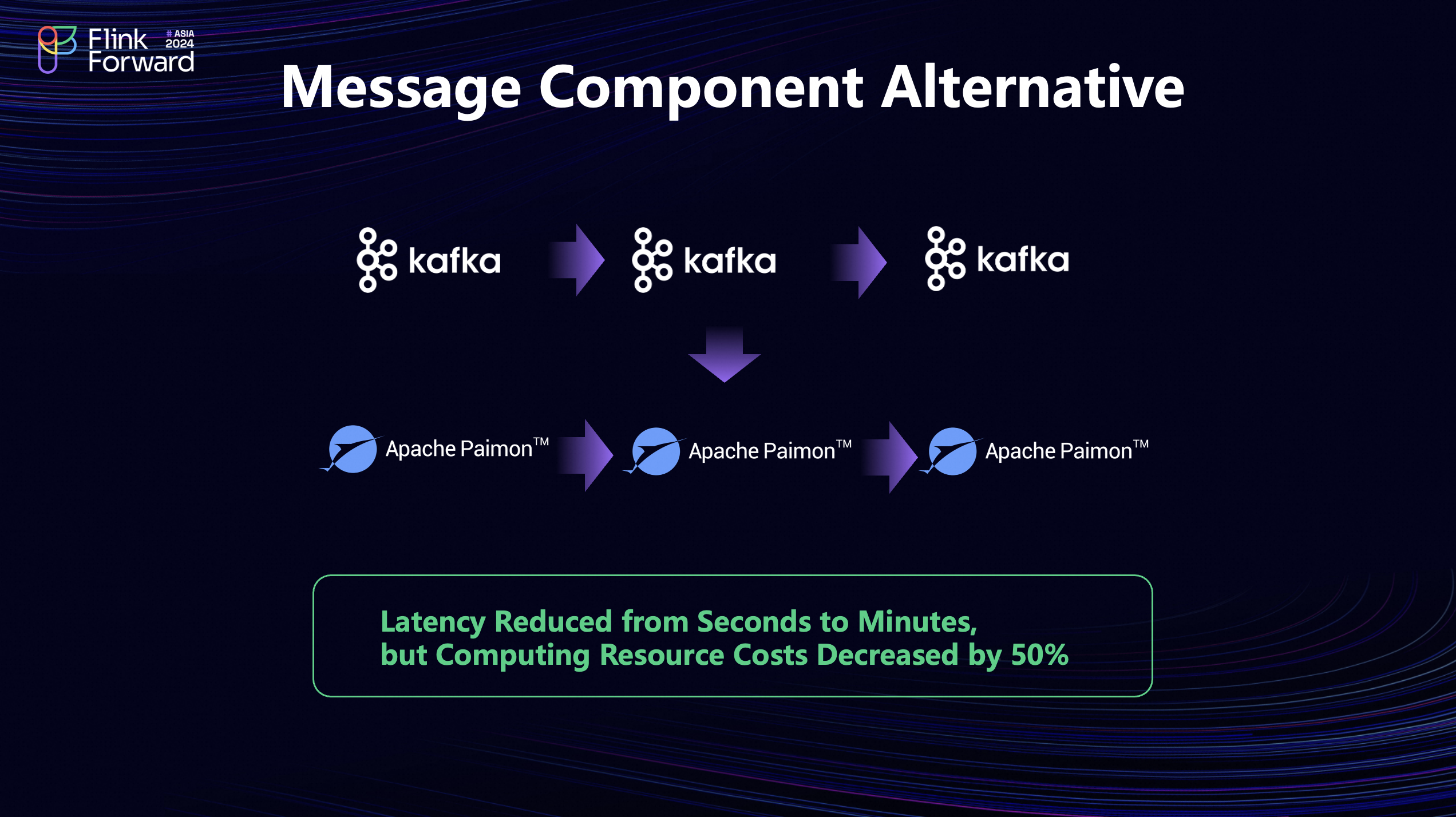

In realtime scenarios, Kafka or PSA is typically used for data streaming and ingestion into real-time data warehouses. However, for cloud users, Kafka resources are valuable and costly, sometimes leading to resource shortages or high load issues. Paimon, as a low-cost alternative to message components, can achieve Kafka-like functionality through its Consumer mechanism. Although its latency is at the minute level, slightly higher than Kafka's second-level delay, it is sufficient for many business scenarios.

By migrating some businesses to Paimon, redundant offline resources can be effectively utilized, enhancing storage utilization and significantly reducing computational and storage costs. In vivo's internal applications, this migration not only optimized the stability of data links but also significantly reduced overall resource costs, achieving a total cost reduction of up to 50%.

In sample concatenation scenarios, both realtime and offline concatenation methods typically need to be handled. Offline concatenation involves full data distribution and insertion operations in specified partitions, leading to wasted computational resources and inefficiency. Realtime concatenation faces the challenge of large state management, which may result in TB-level state data, posing risks to cluster stability.

By utilizing Paimon's Partial Update feature, efficient incremental updates can be achieved, avoiding large state issues. Specifically, A data and B data can be directly written into Paimon tables, using lightweight HASH calculations and incremental writes to ensure high-throughput writing and merging during queries. This approach not only reduces computational resource consumption but also enhances system stability and performance. Additionally, Paimon's delayed read capability can automatically synchronize dimension table data in special scenarios, ensuring data freshness.

In practical applications, this solution can reduce sample concatenation time from one to two hours to five minutes, significantly improving the effectiveness and speed of algorithm training.

In terms of query acceleration, Paimon provides significant performance improvements through federated queries and specific algorithms such as Zorder or Hilbert. For example, when querying different partitions or fields at different times, Paimon can optimize query performance by specifying partitions and using Procedures to merge fields. Compared to Hive, Paimon does not require deduplication and sorting across all partitions, reducing overall costs.

In practical applications, with Paimon and Spark, Flink engines, second-level point queries can be achieved on tables with tens of billions of records. Combined with MPP vectorized query technology, query times can be further compressed to the millisecond level. However, in high concurrency scenarios, lower versions of Paimon (such as version 0.7) lack the Canny Catalog, leading to frequent redundant interactions with Hive Metastore (HMS), which affects query performance. Upgrading to version 0.9 or higher with Canny Catalog allows maintaining millisecond-level responses even with over 200 concurrent queries on tables with tens of billions of records.

Additionally, Paimon supports file management after realtime data writing. Setting shorter Checkpoint times may generate many small files. To avoid putting pressure on the Hive Metastore (HMS) cluster, Paimon regularly performs file merging, ensuring stable read and write performance.

In terms of lakehouse metadata monitoring, Flink tasks may disable certain table management functions, such as setting Read Only to True, to ensure efficient data writing. However, this can lead to oversight of maintenance operations like snapshot cleaning, resulting in slower query speeds and metadata inflation. To address this, a table-level metadata monitoring system can be constructed. This system automatically activates monitoring upon table creation and provides default rules. For instance, when the snapshot count exceeds 200, the system automatically triggers an alert.

The monitoring system is based on Paimon's system tables, with scheduled queries of these system tables via Flink and StarRocks engines, importing data into StarRocks' internal tables. The intelligent diagnostic system checks relevant metrics according to user configurations or system default rules. Once an alert rule is triggered, an alert message is immediately pushed, enabling users to promptly manage and maintain tables, such as performing snapshot cleaning.

This monitoring approach allows for timely detection and resolution of issues before they occur, ensuring the performance and stability of lake tables.

In terms of data migration, Paimon offers simple and effective tools to migrate historical data from Hive tables to Paimon tables, enabling lake table capabilities. For non-Paimon tables (such as default Hive tables), you can create Paimon tables and complete the migration using INSERT INTO or other data import tools. Paimon supports both in-place migration and A-to-B migration. The latter involves moving Hive files to a temporary directory and then constructing metadata (such as Schema, snapshot types, and Manifest files) to complete the process. Once migration is complete, the temporary table is renamed to the existing table name, achieving a seamless migration with minimal user awareness.

This migration method is not only efficient but can also complete the migration of tables with tens of billions of records within minutes, with little user perception. After migration, to ensure compatibility with computing engines like Spark or Flink, it is necessary to adjust relevant dependencies and Catalog injection information to accomplish task-level migration. The overall process includes the migration of both data and tasks, ultimately enabling one-click or low-perception migration of Hive tables to Paimon tables on the platform, thereby activating stream read and write capabilities and reducing computational resource consumption.

Finally, let's look forward to the future together. Future work will focus on algorithm requirements in AI scenarios, particularly supporting the storage, querying, and processing capabilities of unstructured and semi-structured data in AI training and inference scenarios. We will enhance Paimon's storage and query performance for handling complex data types, such as integrated data.

Additionally, we plan to improve the customization capabilities of the Merge Engine, allowing users to flexibly configure according to their specific needs, breaking through the limitations of existing fixed functions. These improvements aim to better support various special scenarios, such as algorithm journeys, thereby creating greater business value.

With this, our sharing session comes to an end. We hope that the content provided above has brought some inspiration and help. Thank you all for your reading and support!

Apache Flink Table API Aggregation Made Easy: FLIP-11 Windowing Guide for Developers

206 posts | 54 followers

FollowApache Flink Community - January 21, 2025

Apache Flink Community - August 21, 2025

Apache Flink Community - September 30, 2025

Apache Flink Community - July 5, 2024

Apache Flink Community - October 17, 2025

Apache Flink Community - November 21, 2025

206 posts | 54 followers

Follow Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn More Data Lake Analytics

Data Lake Analytics

A premium, serverless, and interactive analytics service

Learn MoreMore Posts by Apache Flink Community