This article is compiled from research on Gemini, the enterprise-level state storage engine of Alibaba Cloud Realtime Compute for Apache Flink. The research was conducted by Jinzhong Li, Zhaoqian Lan, and Yuan Mei of the Alibaba Cloud Realtime Compute for Apache Flink storage engine team. The content is divided into the following five parts:

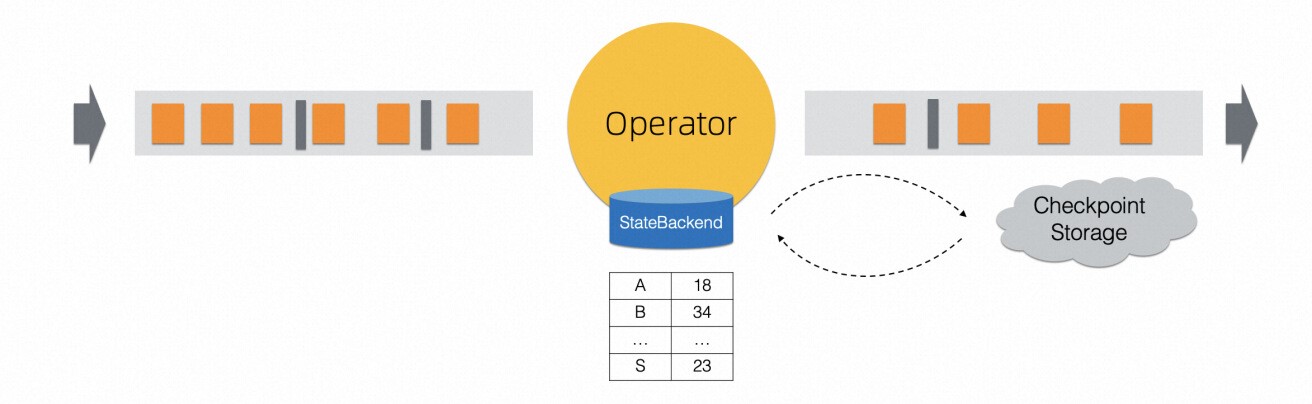

Flink, as a stateful stream computing system, heavily relies on its state storage engine. In Flink, state serves the purpose of storing either intermediate computation results or sequences of historical events, as illustrated in Figure 1-1. Let's examine two typical scenarios:

Figure 1-1 The Flink state is used to store intermediate computing results or the sequence of historical events

When a Flink job has a large state, the state storage engine usually cannot hold all state data in memory, so cold data is often stored on disk. Memory and disk differ greatly in access latency, and I/O can easily become the bottleneck in data processing. If an operator frequently loads state data from disk, it is likely to limit the throughput of the entire job. The state storage engine is therefore often a key factor in the performance of Flink jobs.
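To make the cost of disk access concrete, here is a minimal back-of-the-envelope sketch in Python. The latency figures (0.1 µs for memory, 100 µs for a disk read) are illustrative assumptions, not measurements from this article, and `effective_latency_us` is a hypothetical helper name.

```python
def effective_latency_us(hit_ratio: float, mem_us: float = 0.1, disk_us: float = 100.0) -> float:
    """Average state access latency for a given in-memory hit ratio.

    mem_us and disk_us are assumed, illustrative latencies in microseconds.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be in [0, 1]")
    # Weighted average of the fast path (memory) and the slow path (disk).
    return hit_ratio * mem_us + (1.0 - hit_ratio) * disk_us


for ratio in (1.0, 0.99, 0.9, 0.5):
    print(f"hit ratio {ratio:.2f}: {effective_latency_us(ratio):.2f} us per access")
```

Under these assumed numbers, a miss rate of only 1% already raises the average access latency from 0.1 µs to about 1.1 µs, which is why an operator that frequently falls back to disk can dominate job throughput.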

Currently, the production-ready state storage engine available in the open source community is based on RocksDB. As a general-purpose KV storage engine, RocksDB is not fully suited to stream computing scenarios. In production use and from user feedback, we found the following pain points:

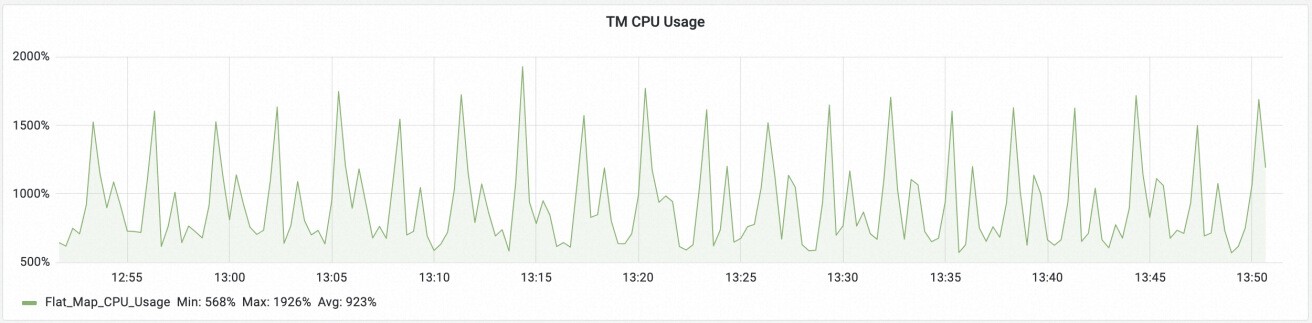

Periodic checkpoints in Flink degrade RocksDB performance and tend to cause CPU spikes, which affects cluster stability. Under Flink's fault tolerance mechanism, jobs periodically trigger checkpoints to generate global state snapshots for fault recovery. Each time Flink triggers a checkpoint, it flushes the in-memory RocksDB data to a new file, which has several negative effects:

Figure 1-2 Periodic checkpoints of Flink jobs cause periodic CPU spikes

Realtime Compute for Apache Flink provides an enterprise-level state storage engine, Gemini, which is designed for state access in stream computing. This engine resolves the pain points of open source state storage engines in terms of performance, checkpoints, and job recovery. As part of this year's comprehensive upgrade of Alibaba Cloud Realtime Compute for Apache Flink, a new version of Gemini has been released with breakthroughs in performance and stability. The new version has undergone extensive production testing by Alibaba Group and Alibaba Cloud customers. Across various scenarios, its performance, ease of use, and stability are significantly better than those of the open source state storage engines.

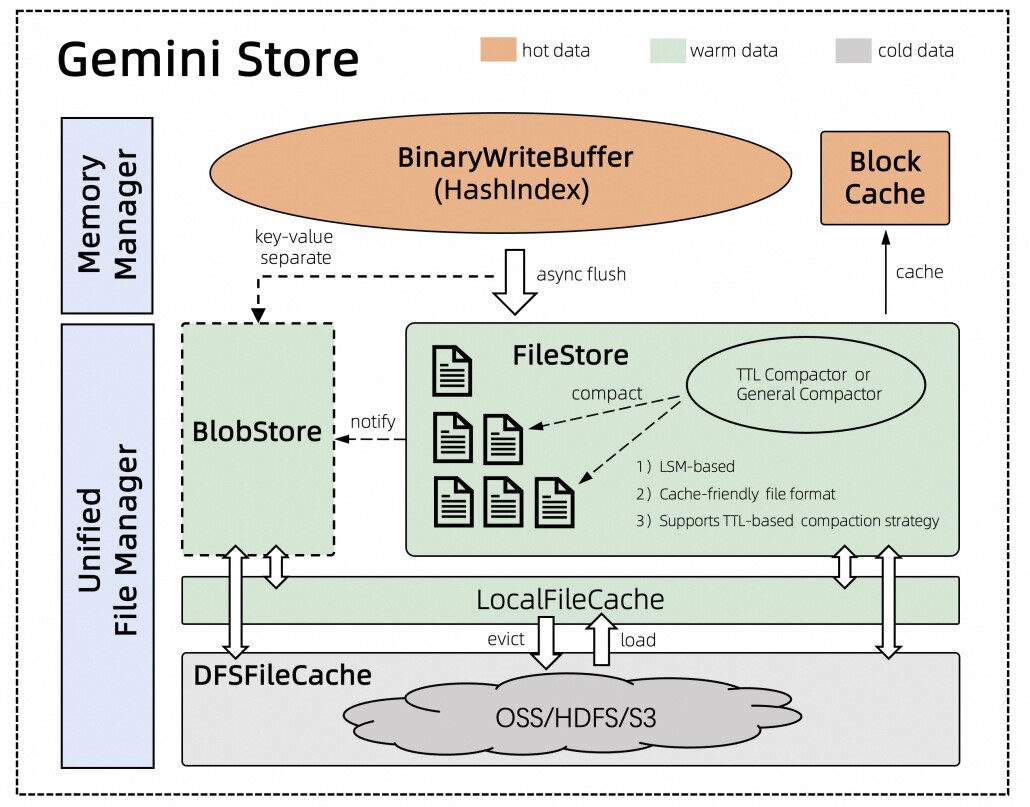

The overall architecture of Gemini still maintains disks as the primary persistent storage, and memory as the cache (as shown in Figure 2-1). Write Buffer utilizes hash indexes with a tight memory structure, providing significant performance advantages over sorted indexes in small and medium-sized states. The new version of Gemini improves the disk data storage structure and focuses on optimizing engine performance in large-state scenarios. It redesigns the file format based on the characteristics of stream computing, supports different state expiration cleanup methods based on common business scenarios, greatly optimizes the compression and encoding efficiency of state data, reduces the state size, and effectively improves the state access performance.

Figure 2-1 Gemini core architecture

In cloud-native deployment environments, local disk capacity is generally limited. RocksDB is designed to store all state data on local disks, so it scales poorly. Gemini supports remote storage of, and access to, state data files. When local disk capacity is insufficient, some cold data can be stored in a remote distributed file system, which removes the capacity limit of the local disk. You no longer need to increase job concurrency merely because local storage is insufficient, which saves considerable cost.

Remote storage is cheap, but remote access is slow. Gemini uses a hot/cold tiered approach to solve this problem. Frequently accessed data is kept on local disks, as determined from historical access information and the characteristics of stream computing, while infrequently accessed data is stored remotely. This approach achieves the best performance available at the existing cost.
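The tiering idea can be illustrated with a small Python sketch. It is not Gemini's actual policy: it assumes placement is decided per file, that "hotness" is a plain access count, and that local capacity is expressed as a number of files. The class and method names are hypothetical.

```python
from collections import Counter


class TieredPlacement:
    """Toy hot/cold placement: keep the most frequently read files on local disk."""

    def __init__(self, local_capacity: int):
        self.local_capacity = local_capacity  # max number of files kept locally (assumed unit)
        self.access_counts = Counter()

    def record_access(self, file_id: str) -> None:
        self.access_counts[file_id] += 1

    def plan(self, files: list[str]) -> tuple[set[str], set[str]]:
        """Return (local, remote) sets; ties are broken by file name for determinism."""
        ranked = sorted(files, key=lambda f: (-self.access_counts[f], f))
        local = set(ranked[: self.local_capacity])
        return local, set(files) - local


placement = TieredPlacement(local_capacity=2)
for f in ["a", "a", "a", "b", "c", "c"]:
    placement.record_access(f)
local, remote = placement.plan(["a", "b", "c", "d"])
print("local:", sorted(local), "remote:", sorted(remote))
```

In this example the two most frequently read files stay local and the rest are candidates for remote storage. A real engine would also have to weigh file sizes, recency, and the stream computing features mentioned above.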

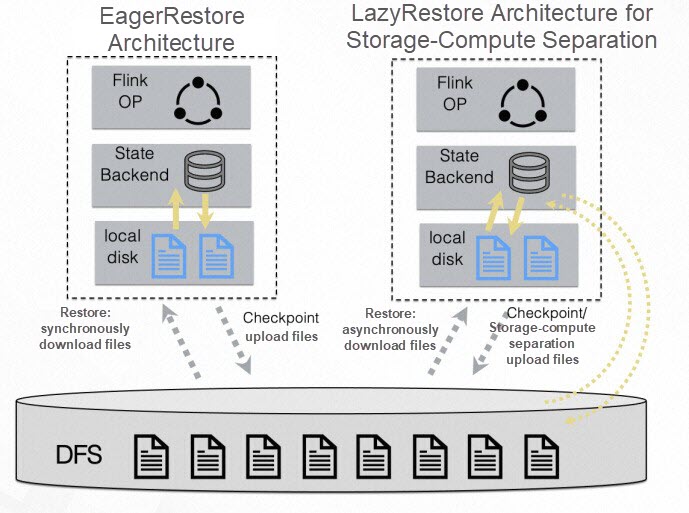

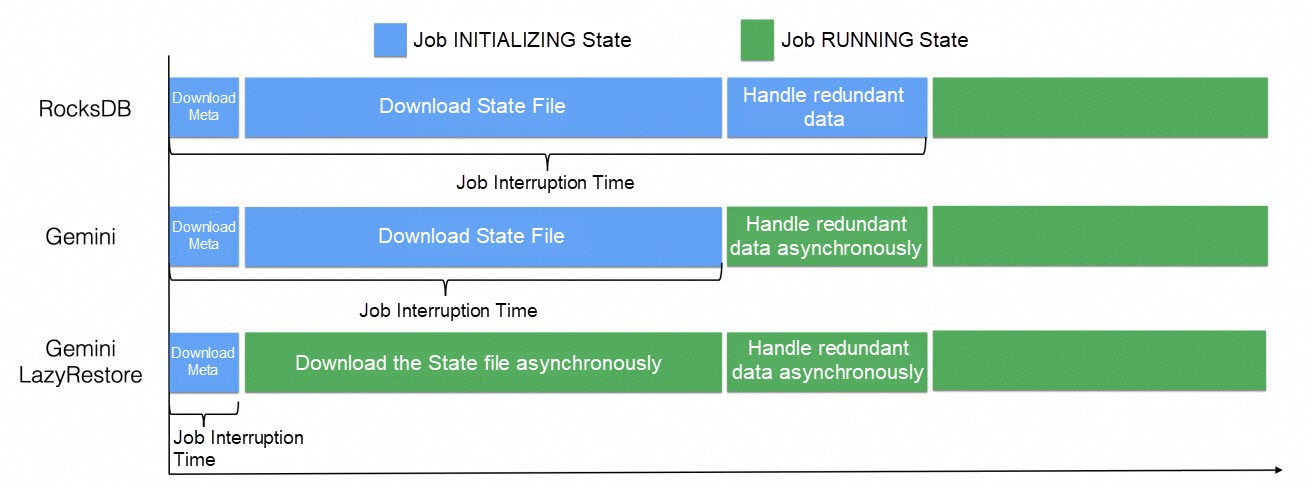

The new version of Gemini provides a state lazy loading feature (LazyRestore) to address long job recovery times and long interruptions in large-state scenarios. As shown in Figure 2-2, in the traditional state recovery mode, a job can resume processing business data only after the remote checkpoint files have been synchronously downloaded to the local disk. In the state lazy loading mode, only a small amount of metadata needs to be downloaded during state recovery before the job can start processing user data. The remote checkpoint files are then downloaded to the local disk asynchronously. During the download, operators can read state data directly from remote storage to complete their computation.
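The following Python sketch models the lazy loading behavior described above in a simplified, single-threaded form. It is an illustration under stated assumptions, not Gemini's implementation: dictionaries stand in for remote checkpoint files and the local disk, and `download_next` stands in for one step of the asynchronous download. All names are hypothetical.

```python
class LazyRestoreStore:
    """Toy model of lazy restore: serve reads immediately, download files in the background."""

    def __init__(self, remote_files: dict[str, dict[str, int]]):
        self.remote = remote_files          # stands in for the remote checkpoint
        self.local: dict[str, dict[str, int]] = {}
        self.pending = list(remote_files)   # only metadata (file names) is needed to start
        self.remote_reads = 0

    def download_next(self) -> bool:
        """One step of the background download; returns False when nothing is left."""
        if not self.pending:
            return False
        name = self.pending.pop(0)
        self.local[name] = dict(self.remote[name])
        return True

    def get(self, file_name: str, key: str) -> int:
        if file_name in self.local:
            return self.local[file_name][key]
        self.remote_reads += 1              # file not local yet: read from remote directly
        return self.remote[file_name][key]


store = LazyRestoreStore({"f1": {"k1": 1}, "f2": {"k2": 2}})
first = store.get("f2", "k2")      # job is already processing; served remotely
while store.download_next():       # background download finishes
    pass
second = store.get("f2", "k2")     # now served from the local copy
print(first, second, store.remote_reads)
```

Both reads return the same value, but only the first one pays the remote access cost. The job never waits for the full download before it starts processing.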

Figure 2-2 Normal state recovery mode (EagerRestore) vs. state lazy loading mode (LazyRestore)

Scaling concurrency is also a common operation for users. Unlike simple job recovery, scaling concurrency involves state tailoring, that is, handling redundant data that no longer belongs to a given subtask. RocksDB needs to traverse the required key-value data to resume a job when concurrency changes. Gemini, by contrast, can reuse the original files directly and splice their metadata to quickly restore the DB instance and start processing user data. The redundant data in the files is cleaned up asynchronously, and the performance of state read and write threads is hardly affected during the cleanup. This feature is called state delayed tailoring.
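A minimal Python sketch of delayed tailoring follows. It assumes, for illustration only, that state entries are addressed by an integer key group and that each subtask owns a contiguous range of key groups after rescaling. The article does not describe Gemini's internal metadata format, so the class and its methods are hypothetical.

```python
class DelayedTailoringState:
    """Toy model: reuse an old file as-is, hide out-of-range entries, clean up later."""

    def __init__(self, inherited: dict[int, str], owned: range):
        self.entries = inherited   # key group -> value, taken over without rewriting
        self.owned = owned         # key groups this subtask owns after rescaling

    def get(self, key_group: int):
        # Metadata check only: redundant entries are invisible to reads.
        if key_group not in self.owned:
            return None
        return self.entries.get(key_group)

    def cleanup_step(self, batch: int = 2) -> int:
        """Drop up to `batch` redundant entries; meant to run off the read/write path."""
        redundant = [kg for kg in self.entries if kg not in self.owned][:batch]
        for kg in redundant:
            del self.entries[kg]
        return len(redundant)


state = DelayedTailoringState({0: "a", 1: "b", 2: "c", 3: "d"}, owned=range(0, 2))
visible = [state.get(kg) for kg in range(4)]
removed = state.cleanup_step()
print(visible, removed, sorted(state.entries))
```

The instance is usable as soon as the ownership range is set, because reads filter by range instead of waiting for the data to be rewritten. The cleanup then reclaims space incrementally.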

Together, state lazy loading and delayed tailoring substantially speed up job recovery in Gemini. Let's compare three different recovery methods (see Figure 2-3):

Figure 2-3 Comparison of interruption time of RocksDB/Gemini/Gemini state lazy loading

Realtime Compute for Apache Flink now supports dynamic updates of job parameters (hot update), so you can update job parameters without stopping and restarting the job. State lazy loading has been released in combination with this feature and reduces the interruption time of user services by more than 90% in parameter update scenarios.

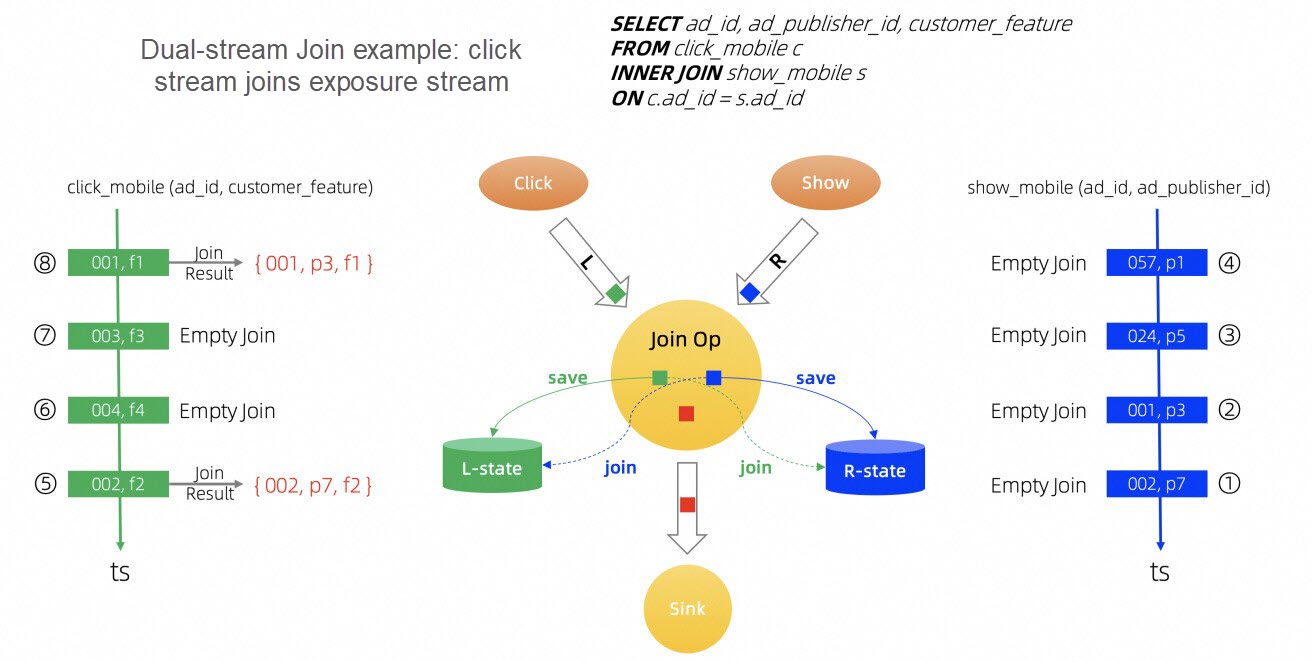

In many dual-stream Join scenarios in Flink, the Join success rate is low or the Value of the state data is long. Key-value separation can improve the performance of such jobs. For example, in risk control scenarios, only abnormal data is joined successfully. In real-time recommendation scenarios (as shown in Figure 2-4), the Join succeeds only when the recommendation algorithm actually takes effect. In such scenarios, the Join success rate of the corresponding Flink job is very low and the business data fields stored in Value are very long, so key-value separation brings significant performance gains.

The advantages of KV separation in Join scenarios come from two aspects:

Figure 2-4 Flink dual-stream Join model

The disadvantages of key-value separation are that it is not well suited to range queries and that it introduces some space amplification. In Flink, state access consists mainly of point queries and range queries are relatively rare, so Flink is a natural fit for key-value separation. To address space amplification, Gemini can combine key-value separation with storage-compute separation, which minimizes the impact of the extra storage space.

The Gemini key-value separation function can be closely combined with the preceding storage-compute separation and cold/hot data separation functions. In scenarios where the local space is insufficient, the separated Value data (cold data) can be preferentially stored at the remote end to ensure that the key read performance is not affected. In the case of low Value access probability, this scheme can provide the performance of a pure local disk storage scheme at a low cost.
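The basic mechanism of key-value separation can be sketched in a few lines of Python. This is a generic illustration of the technique, not Gemini's file format: it assumes a compact in-memory key index that points into an append-only value log, and all names are hypothetical.

```python
class KVSeparatedStore:
    """Toy key-value separation: small key index plus an append-only value log."""

    def __init__(self):
        self.index: dict[bytes, tuple[int, int]] = {}  # key -> (offset, length)
        self.value_log = bytearray()
        self.value_reads = 0

    def put(self, key: bytes, value: bytes) -> None:
        self.index[key] = (len(self.value_log), len(value))
        self.value_log += value

    def contains(self, key: bytes) -> bool:
        # A failed join probe touches only the compact key index.
        return key in self.index

    def get(self, key: bytes):
        pointer = self.index.get(key)
        if pointer is None:
            return None
        offset, length = pointer
        self.value_reads += 1
        return bytes(self.value_log[offset:offset + length])


store = KVSeparatedStore()
store.put(b"order-1", b"x" * 1024)
store.put(b"order-2", b"y" * 2048)
matches = sum(store.contains(k) for k in (b"order-7", b"order-8", b"order-9"))
hit = store.get(b"order-2")
print(matches, len(hit), store.value_reads)
```

Probes that find no match never touch the long values, which is the situation in low-success-rate Joins. Note that overwriting a key in this sketch leaves the old value in the log until it is compacted, which is one source of the space amplification mentioned above.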

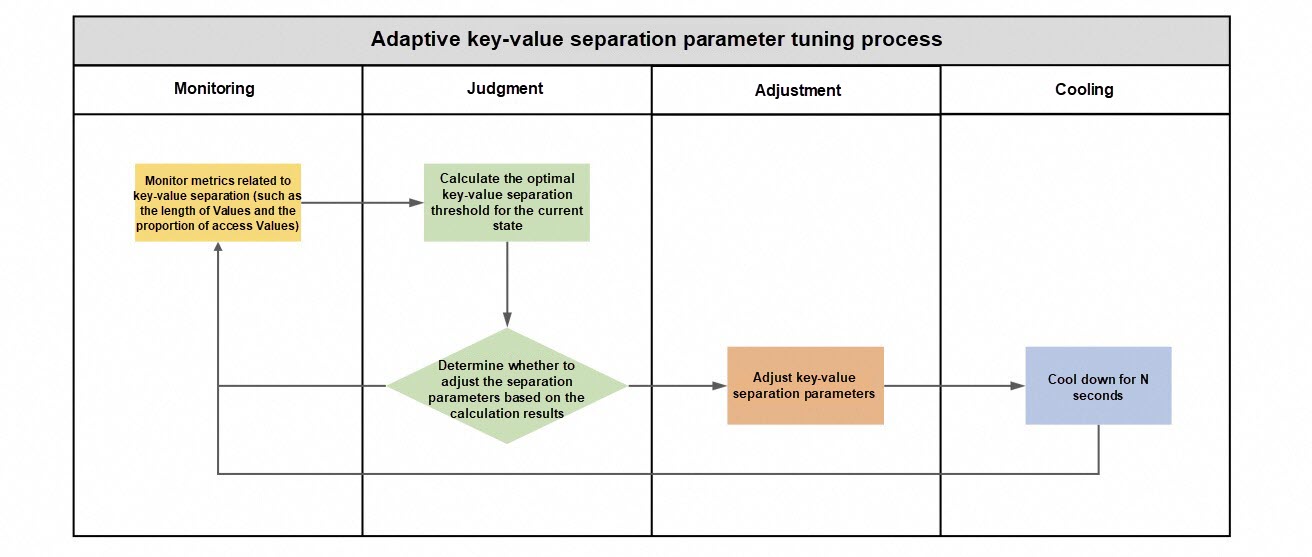

In stream computing scenarios, different jobs have different data characteristics (such as the Value length and the access frequency of Keys and Values). A fixed key-value separation parameter cannot optimize the performance of all jobs. To maximize the benefit of key-value separation, Gemini supports adaptive key-value separation. The storage engine identifies hot and cold data according to the characteristics of the state data and dynamically adjusts the proportion of data stored in key-value separated form to achieve better overall system performance. The parameter tuning process is shown in Figure 2-5. Adaptive key-value separation is enabled by default in SQL Join scenarios, so jobs benefit from it without any additional configuration.
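As a rough illustration of what such a feedback loop could look like, the Python sketch below adjusts the share of separated data based on how often key lookups go on to read the value. The signal, the thresholds, and the step size are all hypothetical assumptions; the article does not specify the actual tuning algorithm shown in Figure 2-5.

```python
def adjust_separation_ratio(current: float, value_read_ratio: float,
                            step: float = 0.1, low: float = 0.2, high: float = 0.6) -> float:
    """Return the new fraction of data stored in separated form.

    value_read_ratio: fraction of key lookups that went on to read the value.
    All thresholds and the step size are hypothetical tuning knobs.
    """
    if value_read_ratio < low:       # values are cold: separate more
        current += step
    elif value_read_ratio > high:    # values are hot: keep more inline
        current -= step
    return min(1.0, max(0.0, current))


ratio = 0.5
for observed in (0.05, 0.10, 0.90, 0.40):
    ratio = adjust_separation_ratio(ratio, observed)
    print(f"observed value-read ratio {observed:.2f} -> separation ratio {ratio:.1f}")
```

The point of the sketch is only that the proportion is driven by observed access patterns rather than by a fixed, user-supplied parameter.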

Figure 2-5 Gemini adaptive KV separation parameter tuning process

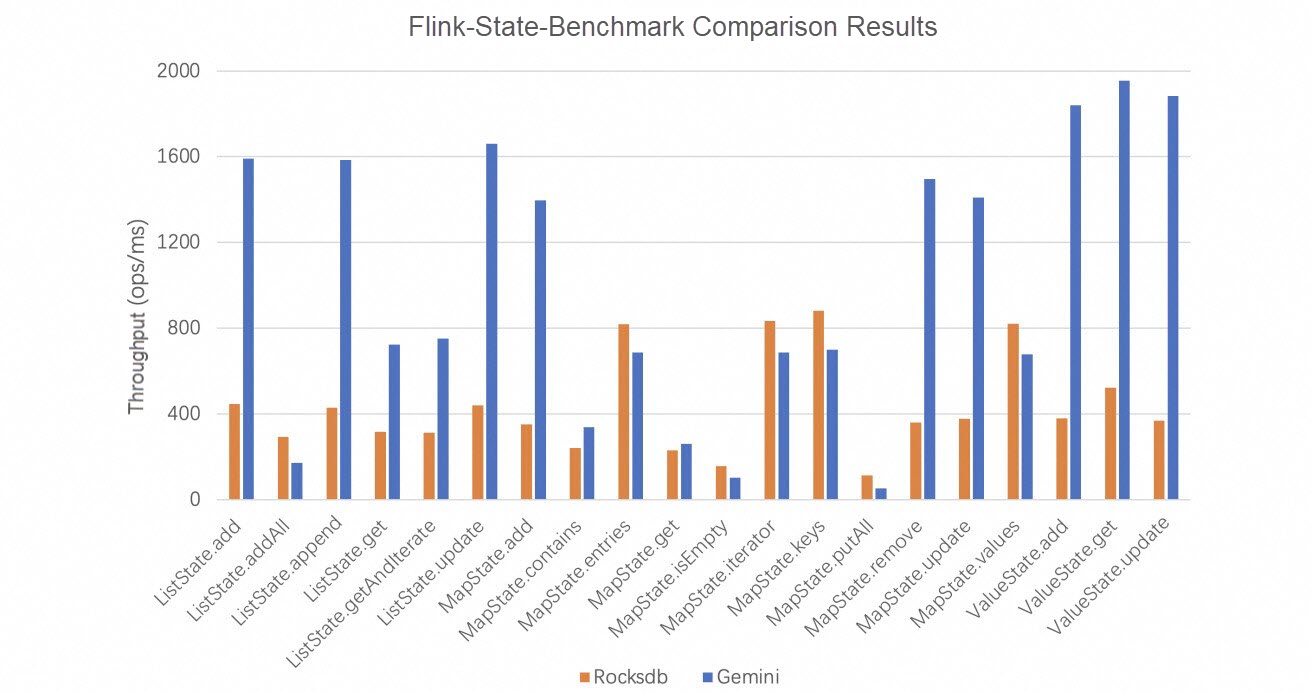

Test environment: An Alibaba Cloud ECS i2.2xlarge instance with 8 vCPUs, 64 GB of memory, and NVMe SSD;

Test settings: Use Flink State Benchmark to compare the pure state operation performance of RocksDB and Gemini. RocksDB settings: two WriteBuffers (the default count) of 64 MB each, and a 512 MB blockCache. Gemini settings: total memory of 64 MB * 2 + 512 MB, that is, the same memory budget as RocksDB.

The test results are shown in Figure 3-1. The throughput of Gemini is two to five times that of RocksDB for most point queries (ValueGet, ListGet, and MapGet) and write operations (ValueUpdate, ListUpdate, and MapUpdate), which account for a large proportion of state operations in Flink stream computing scenarios.

Figure 3-1 Gemini/RocksDB Flink-state-benchmark performance comparison

Test environment: 5 Alibaba Cloud ecs.c7.16xlarge instances (1 JM, 4 TM), each with 64 vCPUs, 128 GB of memory, and PL1 ESSD;

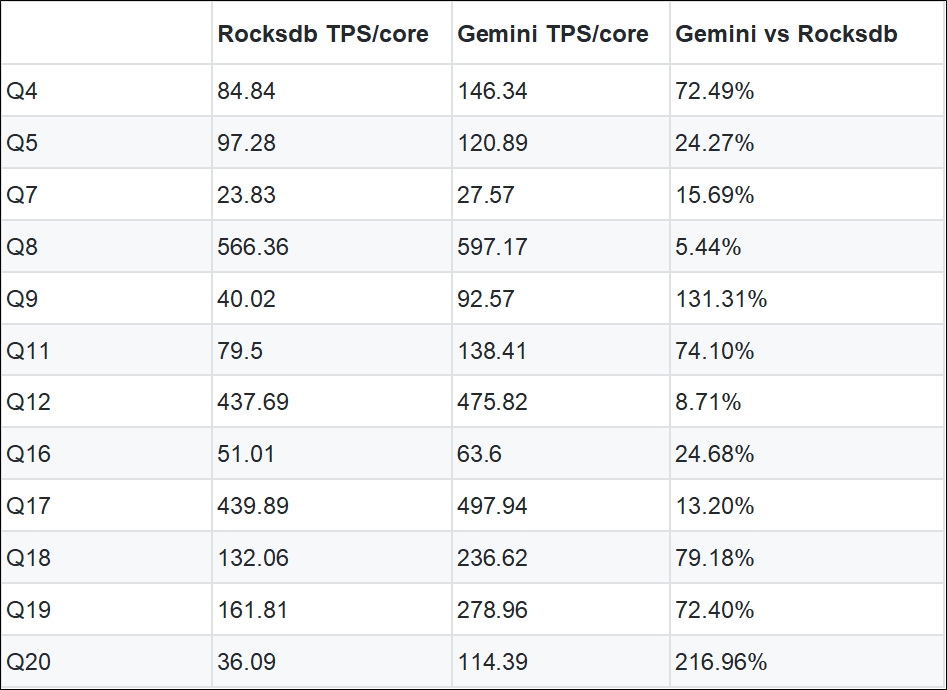

Test settings: Select the stateful Nexmark use cases and, with the default data volume (EventsNum=100MB), use the Nexmark standard configuration (8 concurrencies, 8 TaskManagers, 8 GB of memory per TaskManager) to compare the performance of RocksDB and Gemini.

The test results are shown in Table 3-1. Gemini significantly improves job performance (single-core throughput): it outperforms RocksDB in every use case, and in about half of the use cases by more than 70%.

Table 3-1 Gemini/RocksDB Nexmark performance comparison

Test environment: Fully managed pay-as-you-go Flink is activated in Realtime Compute for Apache Flink.

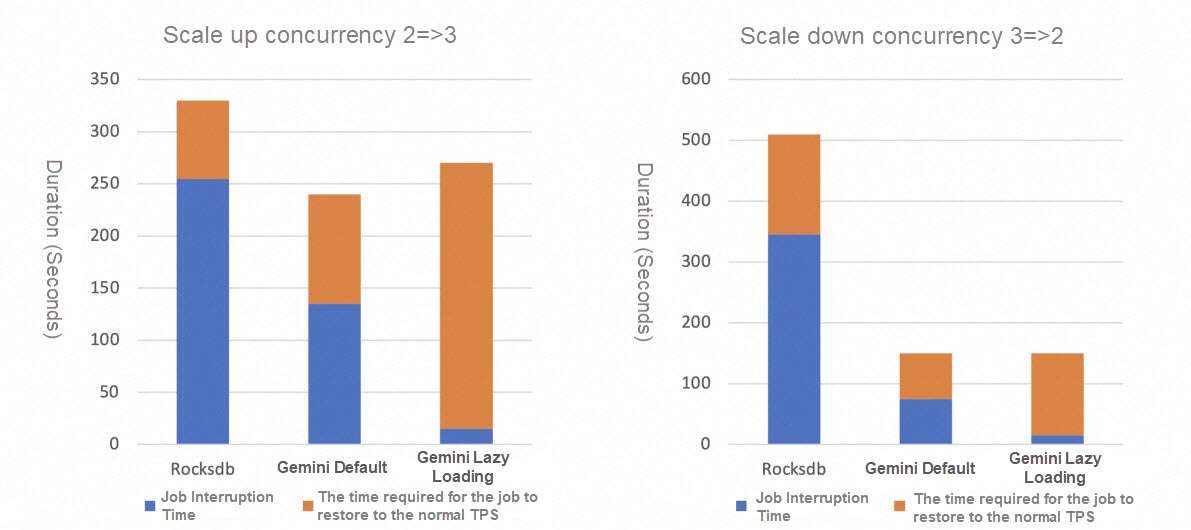

Test settings: Use WordCount Benchmark to compare the job recovery performance of RocksDB, Gemini, and Gemini with state lazy loading. The total state size of the job is about 4 GB, and the source data is generated following a normal distribution. Each TaskManager is allocated 1 CPU and 4 GB of memory.

The test results are shown in Figure 3-2. When concurrency changes, the interruption time of jobs using Gemini with default settings is shorter than with RocksDB (47% shorter when scaling up and 78% shorter when scaling down). With Gemini lazy loading enabled, the interruption time is reduced further relative to RocksDB (94% shorter when scaling up and 96% shorter when scaling down). The time required for a Gemini job to return to normal performance is also significantly shorter than with RocksDB, especially when scaling down, where the reduction exceeds 70%.

Figure 3-2 RocksDB vs. Gemini vs. Gemini lazy loading in concurrency scaling speed

State lazy loading was also tested together with dynamic updates of job parameters (hot update). In a scenario where the job concurrency is 128 and the state size of each concurrent subtask is 5 GB, enabling state lazy loading together with hot update reduces the interruption time of concurrency scaling by more than 90% (scaling up: from 579 s to 13 s; scaling down: from 420 s to 11 s).
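The "more than 90%" figure can be checked directly from the reported interruption times. The short Python calculation below uses only the four numbers quoted above; the helper name is ours.

```python
def reduction_pct(before_s: float, after_s: float) -> float:
    """Percentage reduction in interruption time."""
    return (before_s - after_s) / before_s * 100.0


measurements = {"scaling-up": (579, 13), "scaling-down": (420, 11)}
for name, (before, after) in measurements.items():
    print(f"{name}: {before} s -> {after} s, reduced by {reduction_pct(before, after):.1f}%")
```

Both cases come out at roughly 97 to 98%, which is consistent with the stated reduction of more than 90%.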

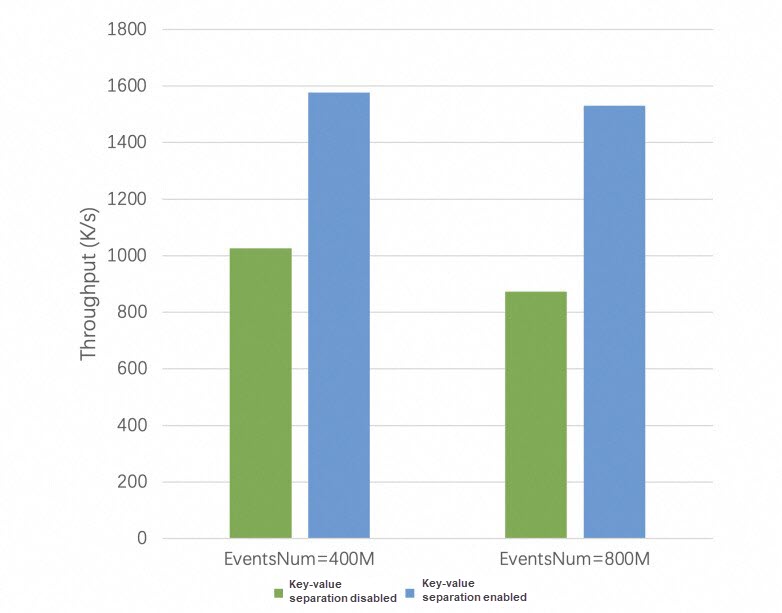

Test settings: Select the Nexmark Q20 Join job as the benchmark for key-value separation, and enlarge the data scale (EventsNum=400MB/800MB) to better match the large-state scenario of dual-stream Join. The test environment and other settings are the same as in the Nexmark test in section 3.2. Gemini is tested with key-value separation disabled and enabled.

The test results are shown in Figure 3-3. In the Q20 dual-stream Join scenario, enabling key-value separation significantly improves Gemini's performance, increasing job throughput by 50% to 70%.

Figure 3-3 Comparison of the throughput of Nexmark Q20 Gemini with key-value separation disabled and enabled

Gemini, as an enterprise-level state storage engine, is designed around the characteristics of stream computing scenarios. After more than three years of continuous optimization and refinement, its performance, stability, and ease of use have steadily improved. Starting with the VVR-8.X version of the enterprise-level engine of Realtime Compute for Apache Flink, the new version of Gemini upgrades the core architecture and features of the previous version. Compared with RocksDB, the new version of Gemini provides better state access performance and faster scaling, and supports key-value separation, storage-compute separation, and state lazy loading.

In the future, we will continue to optimize and improve the Gemini engine to enhance the performance, ease of use, and stability of stream computing products, making it the most suitable state storage engine for stream computing scenarios.

[1] https://nightlies.apache.org/flink/flink-docs-release-1.17/docs/concepts/stateful-stream-processing

[2] https://www.alibabacloud.com/help/en/flink/user-guide/dynamically-update-deployment-parameters

[3] https://github.com/apache/flink-benchmarks/tree/master/src/main/java/org/apache/flink/state/benchmark

[4] https://github.com/nexmark/nexmark

[5] https://www.alibabacloud.com/en/product/realtime-compute

[6] https://www.alibabacloud.com/help/en/flink/product-overview/engine-version
