
By Jia Yuanqiao (Yuanqiao), Senior Data Technology Expert with Cainiao

During the forum on real-time data warehouses at the Flink Forward Asia Conference, Jia Yuanqiao, a senior data technology expert from the Cainiao data and planning department, discussed the evolution of Cainiao's supply chain data team in terms of real-time data technology architecture, covering data models, real-time computing, and data services. He also discussed typical real-time application cases in supply chain scenarios and the Flink implementation solution.

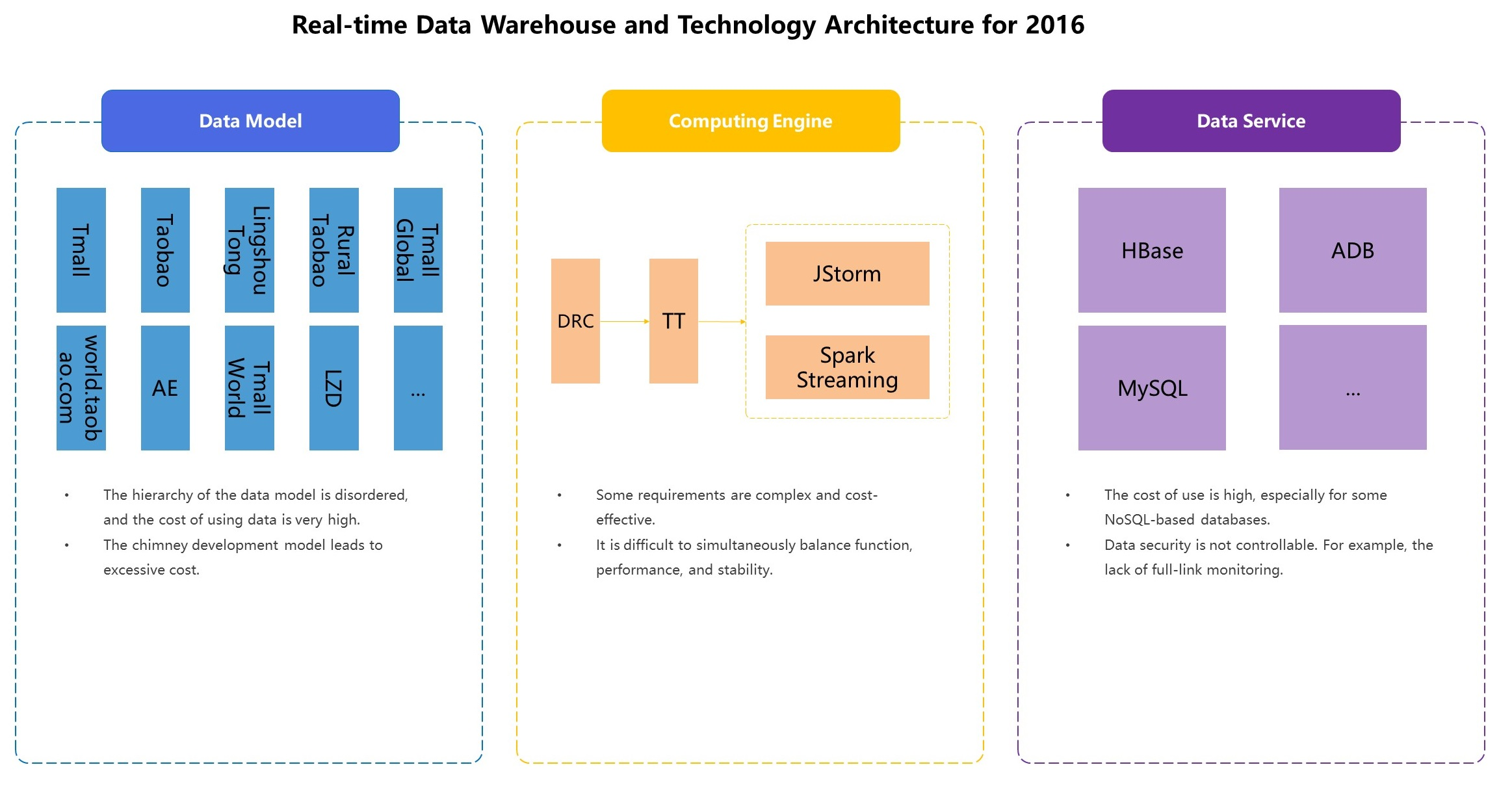

First, let's take a brief look at the technical architecture for real-time data adopted by Cainiao in 2016, which can be broken down into three aspects: data models, real-time computing, and data services.

In response to these problems, Cainiao performed a major upgrade to its data technology architecture in 2017, which will be described in detail below.

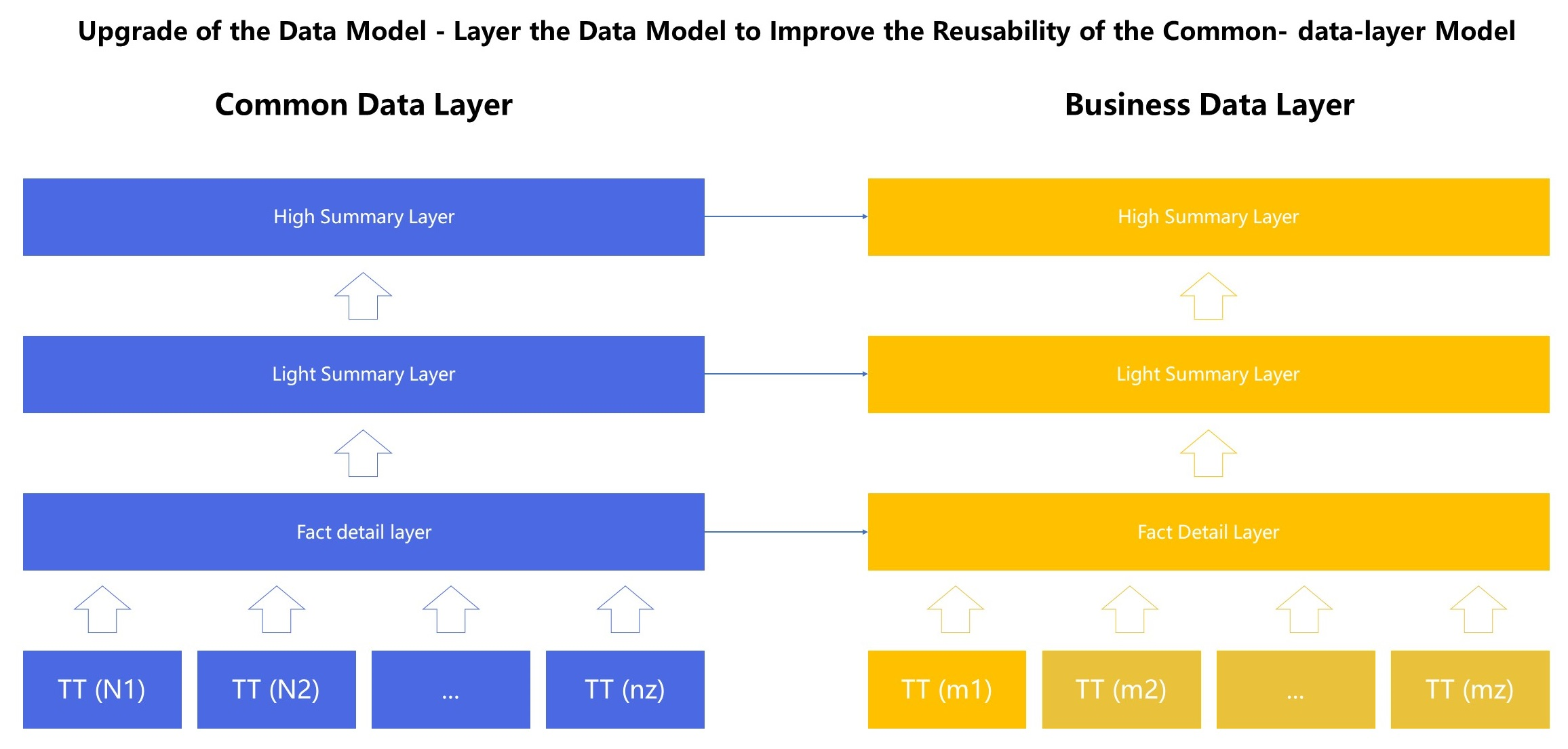

The data model upgrade mainly consisted of layering the models so that common intermediary models can be fully reused. In the previous model, data was extracted from the TT data source (message middleware comparable to Kafka) and processed to generate a single-layer table structure. By comparison, the new data model is layered. The first layer is the data collection layer, which collects data from various databases and writes it to message-oriented middleware. The second layer is the fact detail layer, where TT-based real-time messages are used to generate detailed fact tables, which are then written back to TT's message middleware. These tables are then converged into the third and fourth layers (the light summary layer and the high summary layer) through publishing and subscription. The light summary layer is suitable for scenarios with a large number of dimensions and metrics, such as statistical analysis for a major promotion. Data at this layer is generally stored in Alibaba's proprietary ADB databases, in which you can filter target metrics for aggregation based on your specific needs. In contrast, the high summary layer provides metrics at some common granularities and writes data to HBase. This supports dashboards for real-time data display scenarios, such as media and logistics data visualization.

In the previous development model, business lines were developed independently, and problems common to different business lines were not considered. However, in logistics scenarios, the requirements for many functions are similar, which often resulted in a waste of resources. To address this issue, we first abstracted the common intermediary data layer (highlighted in blue on the left). Then, individual business lines could shunt data to their own intermediary data layers (highlighted in yellow on the right) based on the common intermediary data layer.

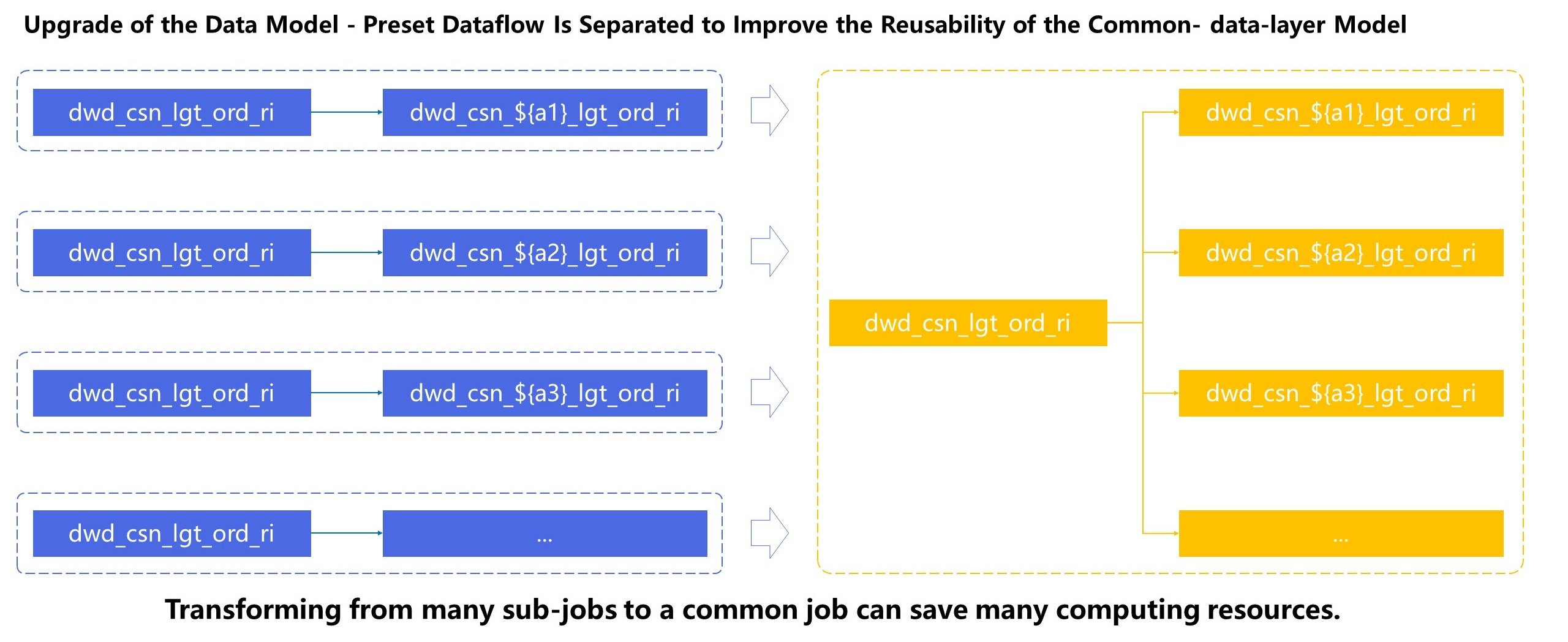

This business line shunting is implemented by a preset common shunting task. The shunting tasks, originally performed downstream, are completed by a common task upstream. This way, the preset common shunting models are fully reused for significant computing resource savings.

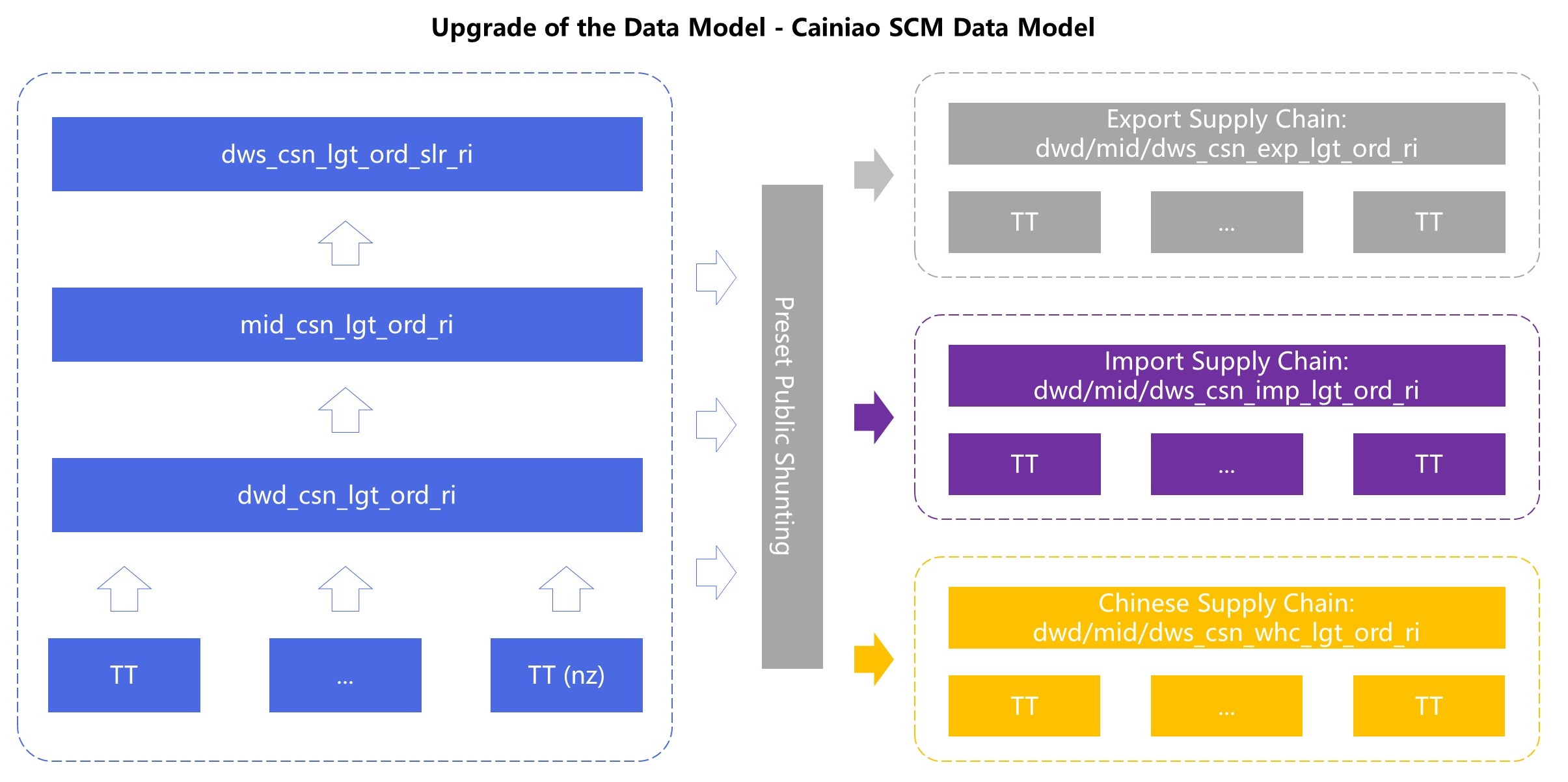

Next, we will look at a data model upgrade case: the real-time data model of Cainiao's supply chain. The left side of the following figure shows the common intermediary data layer, including Cainiao's horizontal logistics orders, dashboard logistics details, and common granularity data. Based on this, Cainiao implements preset common shunting, separating out personalized common intermediary data layers for individual business lines from logistics orders and logistics details. These intermediary layers include domestic supply chains, import supply chains, and export supply chains. Based on the common logic used for shunting and the personalized TT messages of the different business lines, we can produce an intermediary business data layer for each business line. For example, an import supply chain might shunt logistics orders and logistics details from the common business line, but store customs information and trunk line information in its own business line TT. Then, we can use this information to create an intermediary business data layer for this business line. By taking advantage of this design and introducing real-time model design specifications and real-time development specifications, it is much easier to use data models.
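The preset common shunting described above can be pictured with a minimal Python sketch. It routes each record of the common intermediary layer to a per-business-line output in a single upstream pass, so downstream jobs do not each re-filter the full stream. The `biz_line` field, the `shunt` helper, and the sample records are hypothetical; the article does not show the actual routing keys or job code.

```python
from collections import defaultdict


def shunt(records, route_key="biz_line"):
    """Route records from the common layer to per-business-line outputs in one pass."""
    outputs = defaultdict(list)
    for record in records:
        outputs[record[route_key]].append(record)
    return dict(outputs)


# Hypothetical logistics orders in the common intermediary layer.
common_layer = [
    {"order_id": "LP1", "biz_line": "import"},
    {"order_id": "LP2", "biz_line": "domestic"},
    {"order_id": "LP3", "biz_line": "import"},
]

by_line = shunt(common_layer)
import_orders = [r["order_id"] for r in by_line["import"]]
```

Each business line would then combine its shunted records with its own TT messages (customs or trunk line information, for example) to build its intermediary business data layer.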

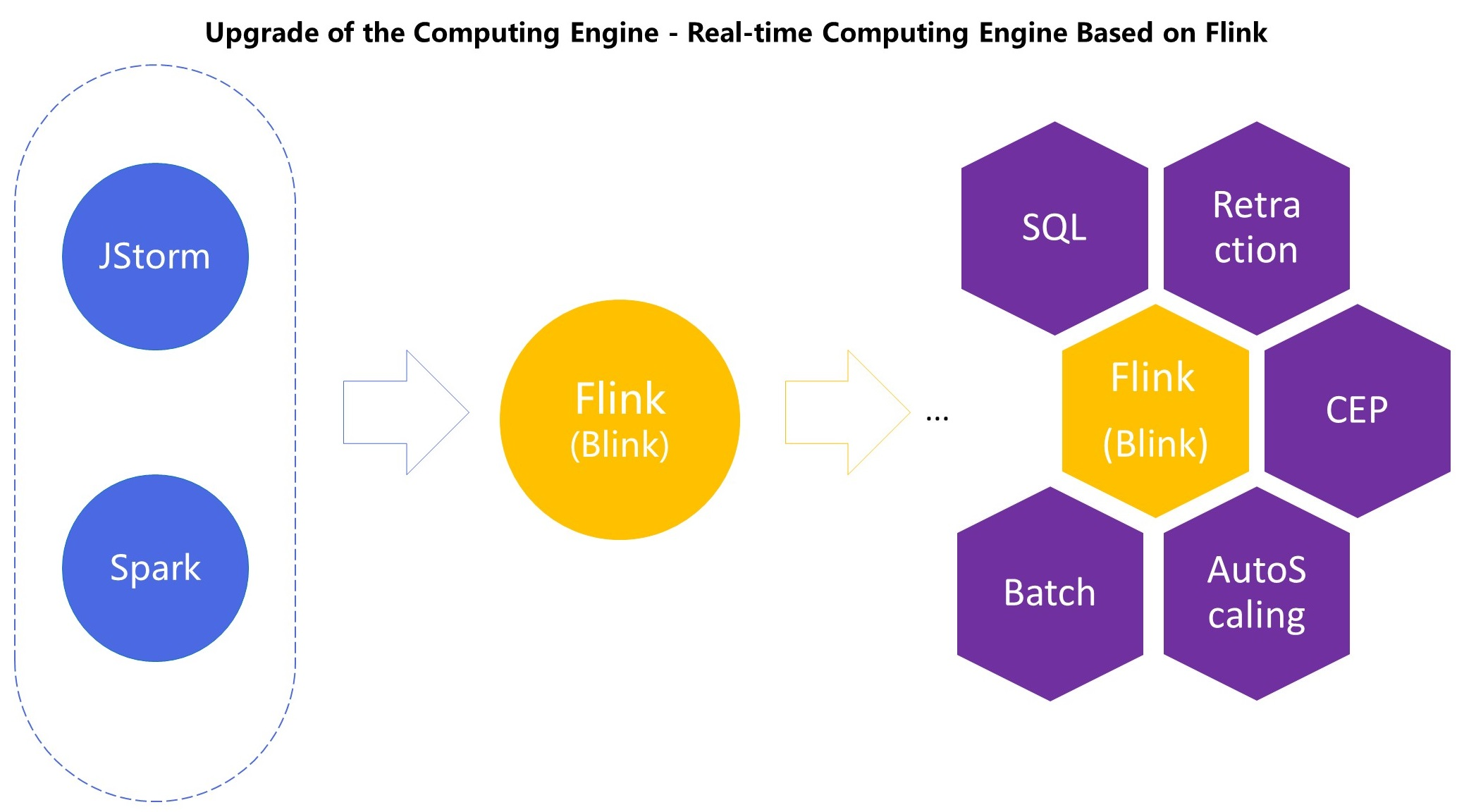

Cainiao originally used JStorm, developed by Alibaba, and Spark Streaming as its computing engines. These services can meet the requirements of most scenarios, but they also create problems in some complex scenarios, such as supply chains and logistics. Therefore, Cainiao fully upgraded to a Flink-based real-time computing engine in 2017. The main reasons for choosing Flink were:

Now, we will discuss three computing engine upgrade cases.

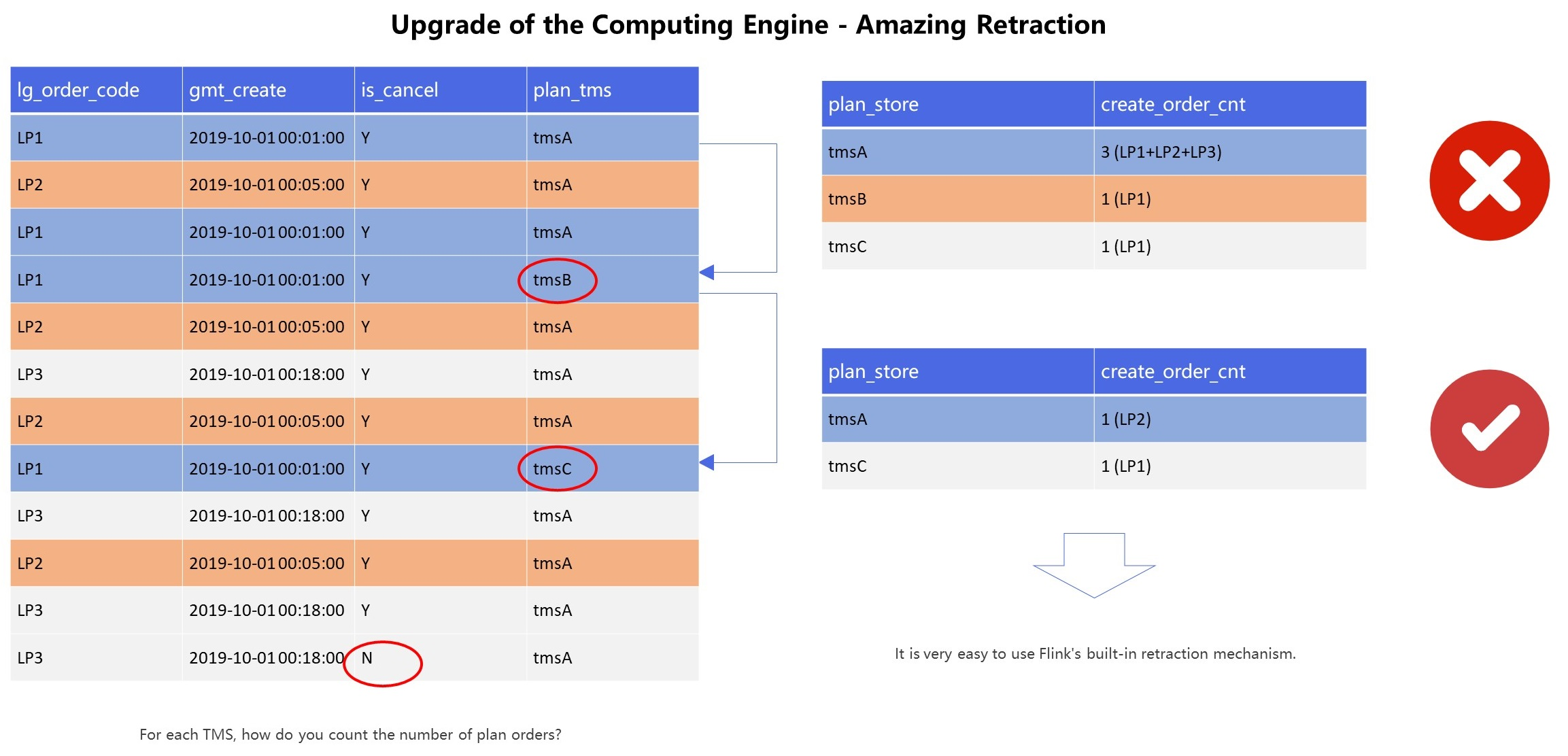

The left side of the following figure shows a logistics order table, which contains four columns of data: the logistics order number, creation time, whether the order was canceled, and assigned delivery company. Counting the number of valid orders assigned to each delivery company seems simple, but the actual implementation involves some considerations.

One issue is that the LP3 order in the table is valid at the beginning but is canceled in the end. (The table in the figure is incorrect on both points: the cancellation state at 00:18:00 should be N, and the cancellation state in the last line should be Y.) In this case, the order is considered invalid and should not be included in the statistics.

In addition, we must pay attention to delivery company changes. The delivery company for LP1 at 00:01:00 was tmsA, but then changed to tmsB and later to tmsC. The result in the upper-right corner is obtained according to offline computing methods (such as Storm or incremental processing). In that result, the LP1 records for tmsA, tmsB, and tmsC are all included in the statistics. However, tmsA and tmsB did not deliver the order, so the result is wrong. The correct result is shown in the table in the lower-right corner of the figure.

To address this scenario, Flink provides a built-in state-based retraction mechanism to help ensure accurate statistics when stream messages are withdrawn.

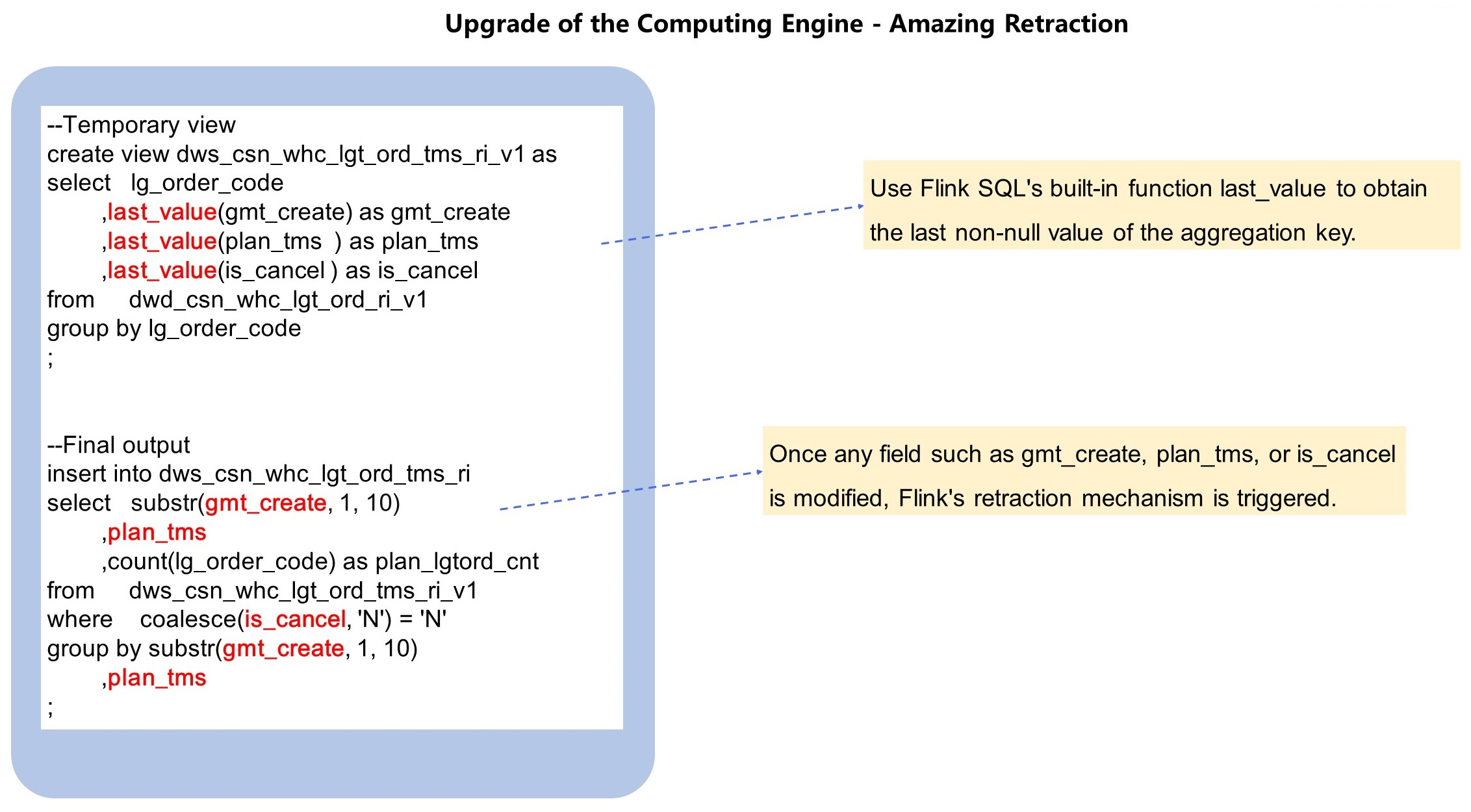

The following figure shows a pseudo-code implementation of the retraction mechanism. First, we use the built-in last_value function in Flink SQL to obtain the last non-null value for each aggregation key. For the LP1 order in the preceding table, the result obtained by using last_value is tmsC, which is the correct value. Note that the retraction mechanism in Flink is triggered when any change is made to the gmt_create, plan_tms, or is_cancel value computed by last_value (shown on the left side).
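To make the effect of retraction concrete, here is a minimal Python simulation of the semantics, not Flink's implementation. It keeps the last known state per order and, whenever an order changes, first withdraws the order's previous contribution from the per-company count before adding the new one. The `ValidOrderCounter` class and the sample messages are illustrative assumptions modeled on the table described above, and null handling in last_value is left out for brevity.

```python
from collections import Counter


class ValidOrderCounter:
    """Counts valid (non-canceled) orders per delivery company with retraction."""

    def __init__(self):
        self.last = {}           # order_id -> (plan_tms, is_cancel), the latest values seen
        self.counts = Counter()  # plan_tms -> number of valid orders

    def on_message(self, order_id, plan_tms, is_cancel):
        previous = self.last.get(order_id)
        if previous is not None:
            old_tms, old_cancel = previous
            if old_cancel == "N":
                # Retract the contribution made by the order's previous state.
                self.counts[old_tms] -= 1
                if self.counts[old_tms] == 0:
                    del self.counts[old_tms]
        self.last[order_id] = (plan_tms, is_cancel)
        if is_cancel == "N":
            self.counts[plan_tms] += 1


counter = ValidOrderCounter()
messages = [
    ("LP1", "tmsA", "N"),
    ("LP2", "tmsB", "N"),
    ("LP1", "tmsB", "N"),  # LP1 is reassigned: tmsA loses the order
    ("LP3", "tmsC", "N"),
    ("LP1", "tmsC", "N"),  # LP1 is reassigned again
    ("LP3", "tmsC", "Y"),  # LP3 is canceled and must no longer be counted
]
for message in messages:
    counter.on_message(*message)

result = dict(counter.counts)
```

Without the retraction step, LP1 would be counted once each for tmsA, tmsB, and tmsC, and the canceled LP3 would still be counted for tmsC.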

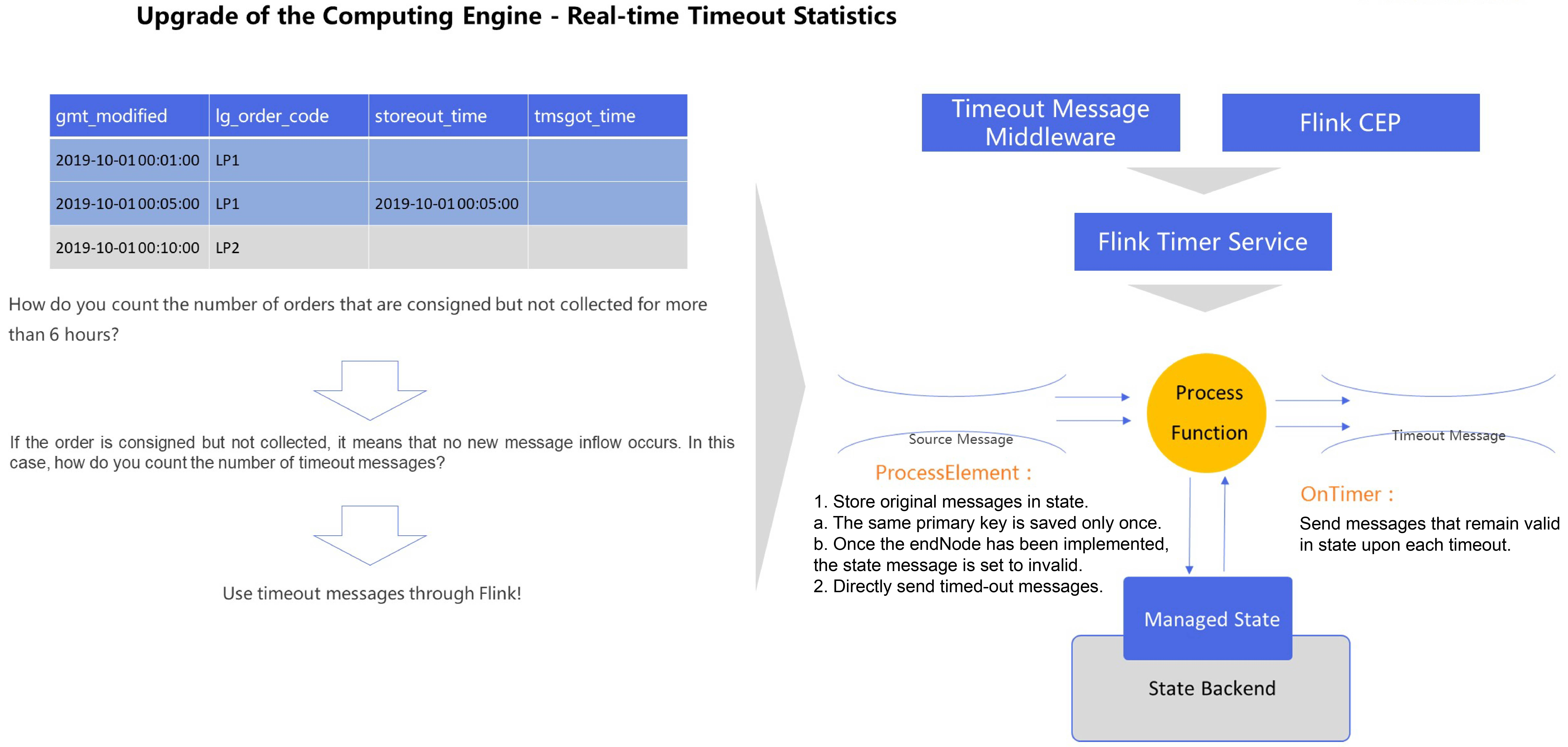

Logistics is a common business scenario in Cainiao. Logistics businesses often require real-time timeout statistics, such as the number of orders that have not been collected more than six hours after leaving the warehouse. The data table used in this example is shown on the left of the following figure. It includes the log time, logistics order number, warehouse exit time, and collection time. It is easier to implement this function in the offline hourly table or daily table. However, in real-time scenarios, we must overcome certain challenges. If an order is not collected after leaving the warehouse, no new message can be received. If this is the case, no timeout messages can be calculated. To address this, Cainiao began exploring potential solutions in 2017. During the exploration, we found that some message-oriented middleware (such as Kafka) and Flink CEP provide timeout message delivery functions. The maintenance cost of introducing messaging middleware is relatively high, whereas the application of Flink CEP would create accuracy problems.

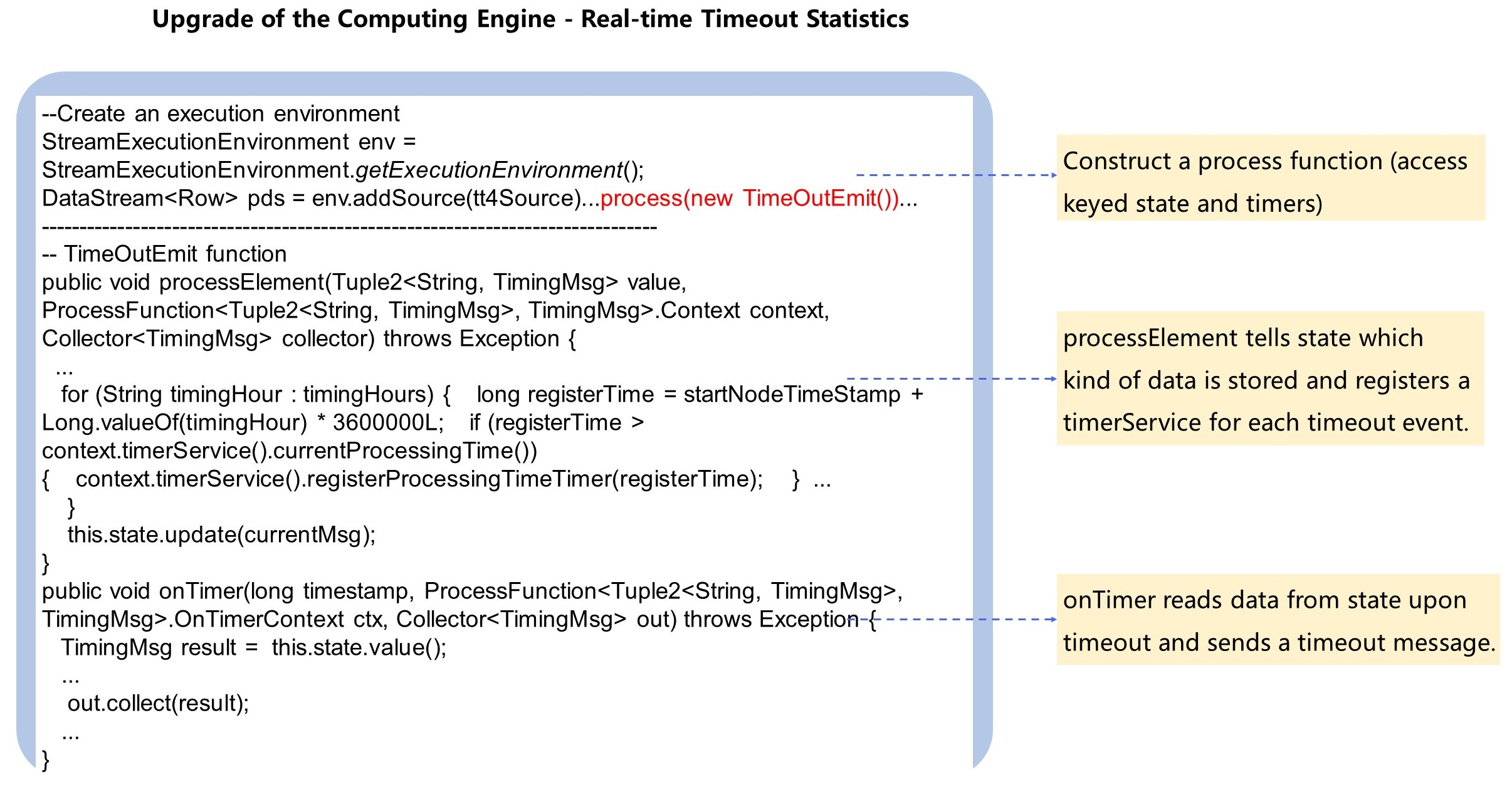

Ultimately, Cainiao chose Flink Timer Service to meet these needs. Specifically, Cainiao overrides the processElement method of ProcessFunction at the underlying Flink layer. In this method, the original message is stored in Flink state, and each primary key is stored only once. Once endNode actually runs, the state message becomes invalid; otherwise, a timeout message is delivered. In addition, we override the onTimer method. This method is mainly responsible for reading the state message when a timer fires and then delivering messages that are still valid in the state. Then, we can count the number of orders with timeout messages by joining the downstream timeout stream with the normal stream.

The following figure shows the pseudo-code implementation of Flink Timer Service timeout statistics.

We must first create an execution environment and construct a ProcessFunction (for accessing keyed state and timers).

Next, we must write the processElement function, which tells the state what kind of data to store and registers a timer with timerService for each message that may time out. In the code, timingHour stores the timeout period, which is six hours in this example. Then, we register the timer.

Finally, we write the onTimer function, which is used to read the state data upon timeout and send timeout messages.
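The three steps above can be imitated in plain Python to show the control flow. This is a simulation under stated assumptions, not Flink API code: the `TimeoutDetector` class, the `advance_to` method that stands in for the passage of time, and the sample orders are all hypothetical, and times are plain seconds.

```python
import heapq

TIMEOUT_SECONDS = 6 * 3600  # six hours, as in the example above


class TimeoutDetector:
    def __init__(self, timeout=TIMEOUT_SECONDS):
        self.timeout = timeout
        self.state = {}   # order_id -> warehouse exit time, for orders not yet collected
        self.timers = []  # min-heap of (fire_time, order_id)

    def process_element(self, order_id, out_time, collect_time=None):
        if collect_time is not None:
            # The order was collected: invalidate its state so its timer emits nothing.
            self.state.pop(order_id, None)
            return
        if order_id not in self.state:  # the same key is stored only once
            self.state[order_id] = out_time
            heapq.heappush(self.timers, (out_time + self.timeout, order_id))

    def on_timer(self, fire_time, order_id):
        out_time = self.state.get(order_id)
        # Emit only if the state is still valid and this timer belongs to it.
        if out_time is None or out_time + self.timeout != fire_time:
            return []
        del self.state[order_id]
        return [{"order_id": order_id, "out_time": out_time, "timeout_at": fire_time}]

    def advance_to(self, now):
        """Fire every timer due at or before `now` and collect the timeout messages."""
        emitted = []
        while self.timers and self.timers[0][0] <= now:
            fire_time, order_id = heapq.heappop(self.timers)
            emitted.extend(self.on_timer(fire_time, order_id))
        return emitted


detector = TimeoutDetector()
detector.process_element("LP1", out_time=0)                        # never collected
detector.process_element("LP2", out_time=3600)
detector.process_element("LP2", out_time=3600, collect_time=7200)  # collected in time
timeouts = detector.advance_to(8 * 3600)
```

Only LP1 produces a timeout message here, because the state for LP2 was invalidated before its timer fired. The key point is that the timer, not a new input message, triggers the output.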

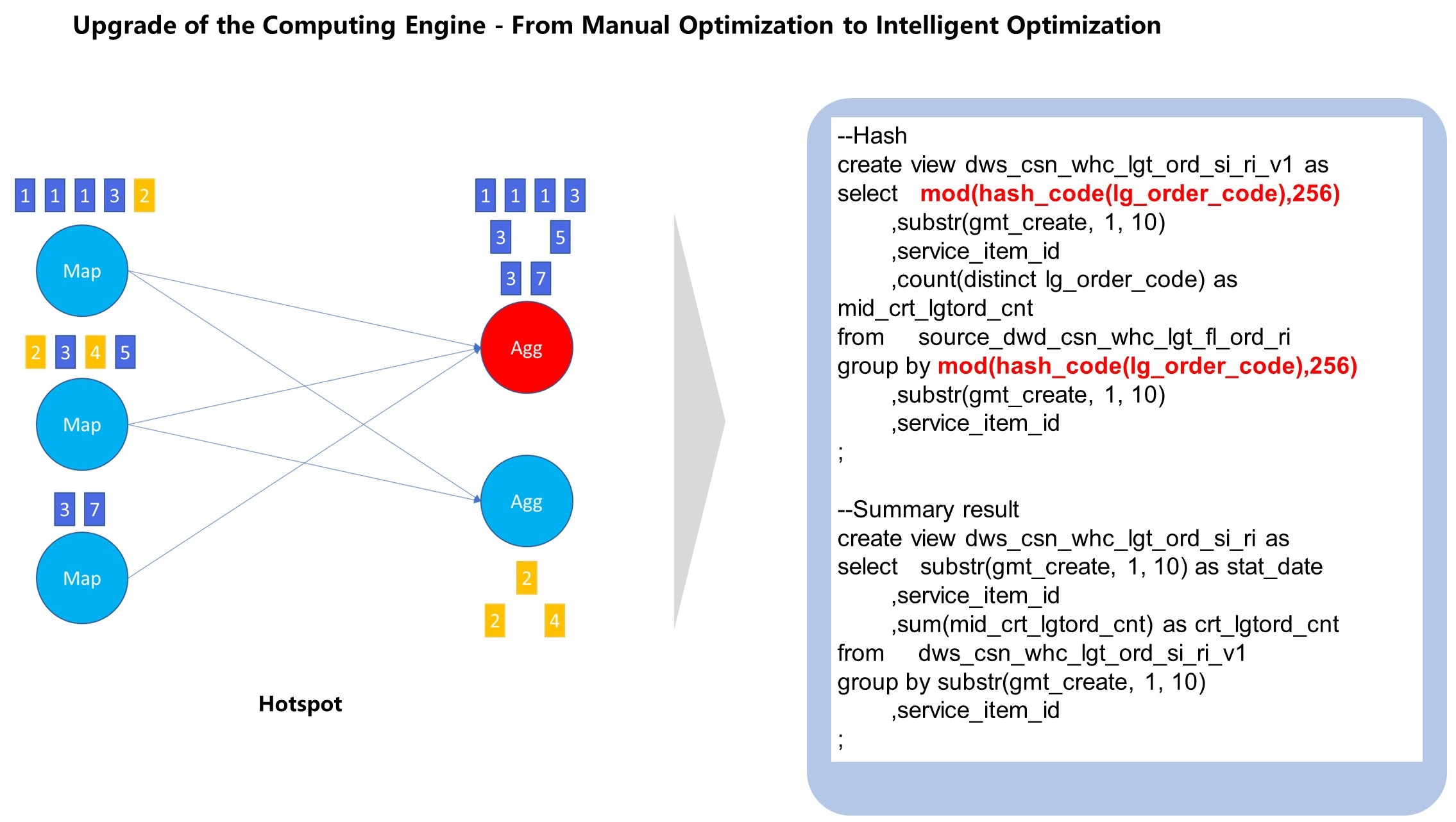

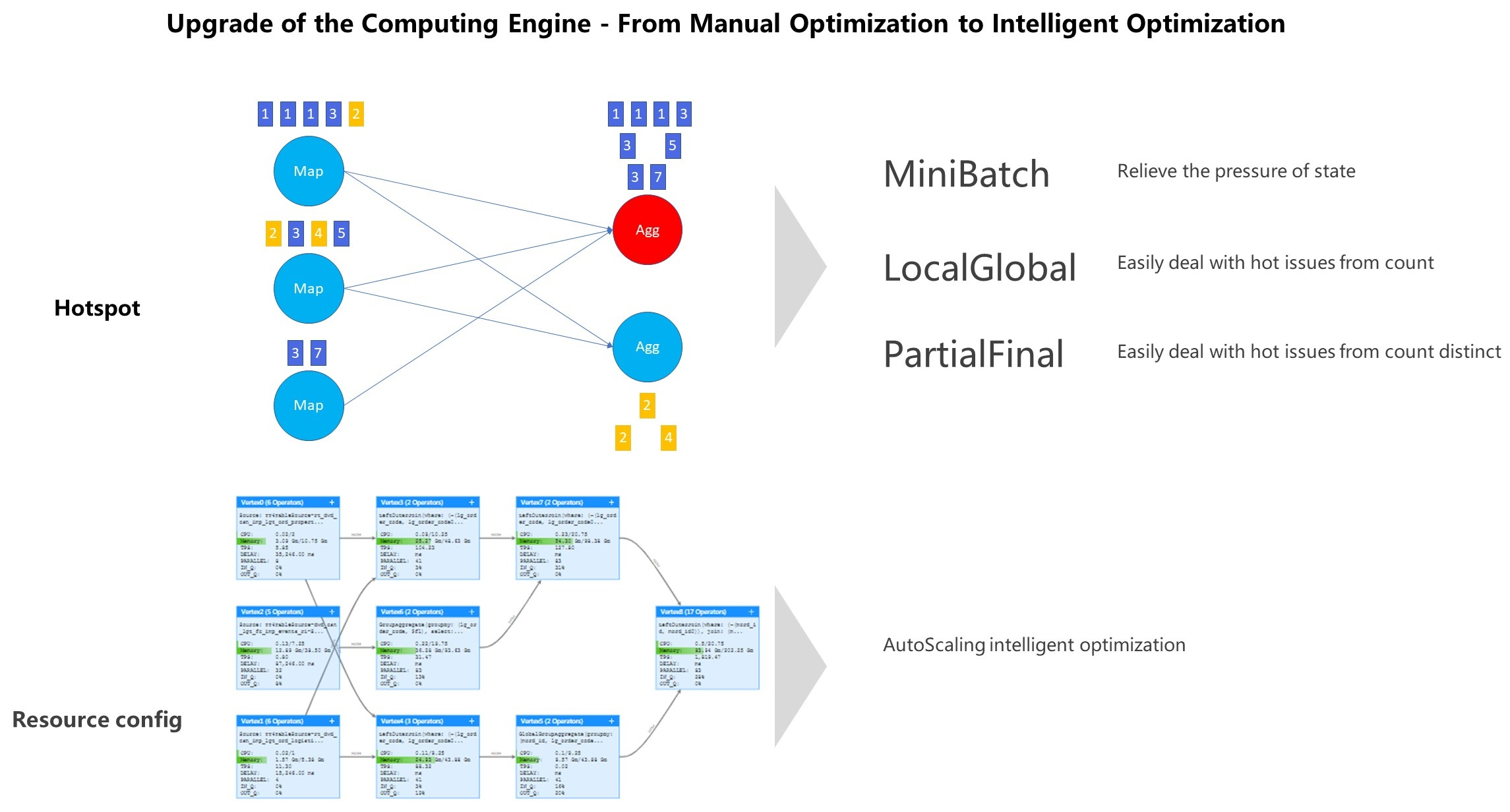

Data hot spots and data cleansing are common problems in real-time data warehouses. Data hot spots are shown on the left of the following figure. After data shuffling is performed in the Map stage (highlighted in blue), data hot spots appear when the data is passed to Agg (highlighted in red). The following figure shows the pseudo-code implementation of Cainiao's initial solution to this problem. To clean lg_order-code, we must first hash it and then perform secondary aggregation on the hashed results. This reduces data skew to a certain extent because there may be another Agg operation.
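The hash-then-aggregate idea in the initial solution can be sketched as a two-stage count in Python. Stage one groups by the hot key plus a bucket derived from a hash of the order code, so the work for one hot key is spread over several partial aggregations. Stage two sums the partial results per key. The `two_stage_count` helper, the bucket count, and the warehouse keys are assumptions for illustration; the article's actual SQL appears only in the figure.

```python
import zlib
from collections import Counter


def two_stage_count(rows, buckets=4):
    """rows: iterable of (group_key, order_code) pairs. Returns (partial, final) counts."""
    # Stage 1: aggregate on (key, bucket) so one hot key is split across buckets.
    partial = Counter()
    for key, order_code in rows:
        bucket = zlib.crc32(order_code.encode("utf-8")) % buckets
        partial[(key, bucket)] += 1

    # Stage 2: aggregate the much smaller partial results on the key alone.
    final = Counter()
    for (key, _bucket), count in partial.items():
        final[key] += count
    return partial, final


# One hot key (wh1) with many orders and one cold key (wh2).
rows = [("wh1", f"LP{i}") for i in range(100)] + [("wh2", "LP100")]
partial, final = two_stage_count(rows)
```

The final counts equal those of a single-stage aggregation. The second stage only sees at most `buckets` rows per key, which is why the skew shrinks.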

Cainiao currently uses the latest version of Flink, which provides intelligent features to solve the data hot spot problem:

Intelligent functions also provide help in resource configuration. During real-time ETL, we need to define the data definition language (DDL), write SQL statements, and then configure resources. To solve the resource configuration problem, Cainiao previously configured each node, including setting the concurrency, whether out-of-order message operations are involved, CPU, and memory. This approach was very complicated, and it is still impossible to predict the resource consumption of some nodes in advance. Currently, Flink provides an ideal optimization solution to this problem:

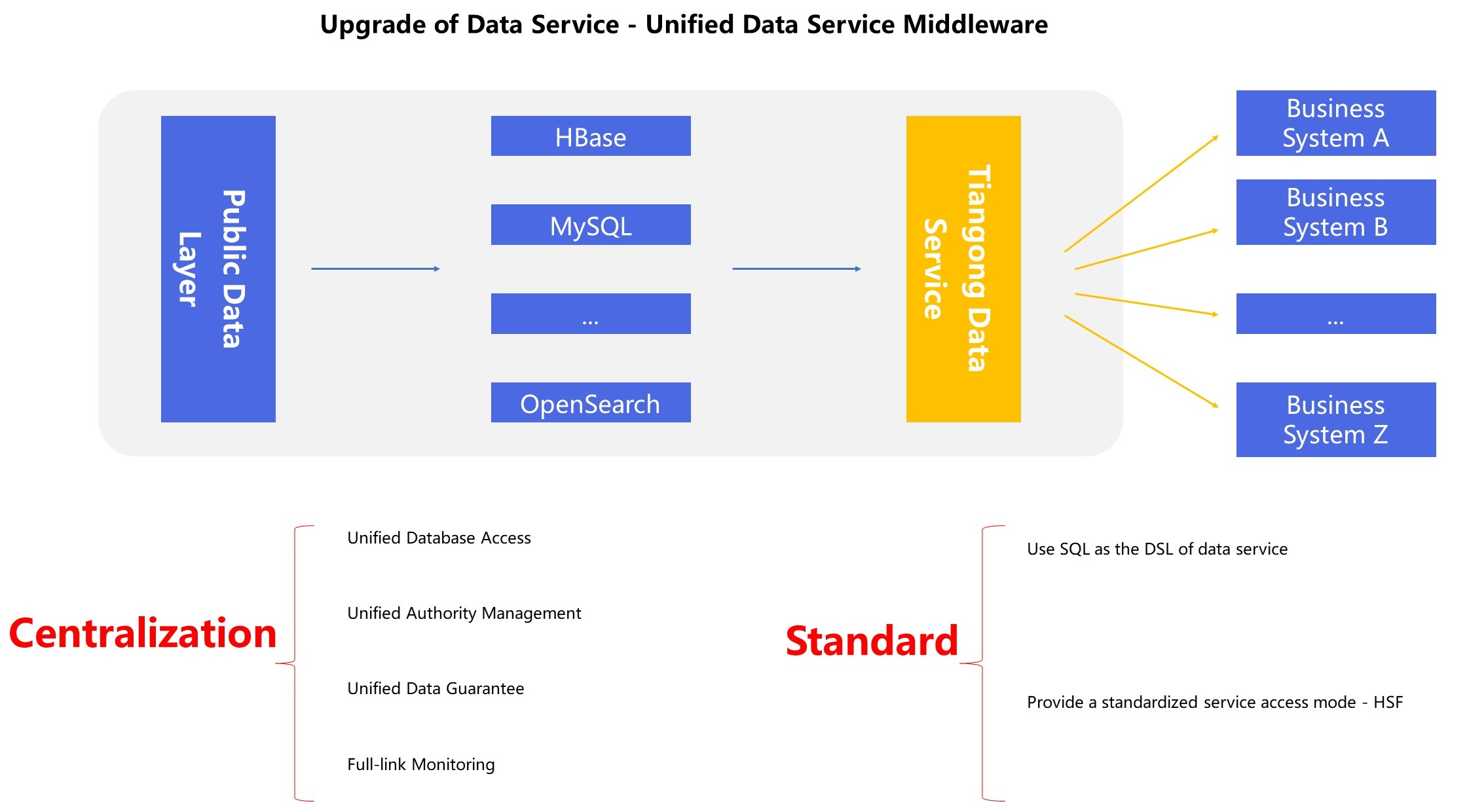

Cainiao also provides a series of data products to offer data services during data warehouse development. Originally, it provided multiple methods for connecting to databases through Java Web. However, in practical applications, the most common database types are HBase, MySQL, and OpenSearch. Therefore, Cainiao worked with the data service team to develop a unified data service middleware called Tiangong Data Service. This service provides centralized functions, such as centralized database access, centralized authority management, centralized data guarantee, and centralized end-to-end monitoring. In addition, this service prioritizes SQL, uses SQL as the domain-specific language (DSL) of the data service, and provides a standardized service access mode (HSF).

As an implementer of Cainiao data services, Tiangong also provides many functions relevant to businesses. Now, we will look at several specific cases.

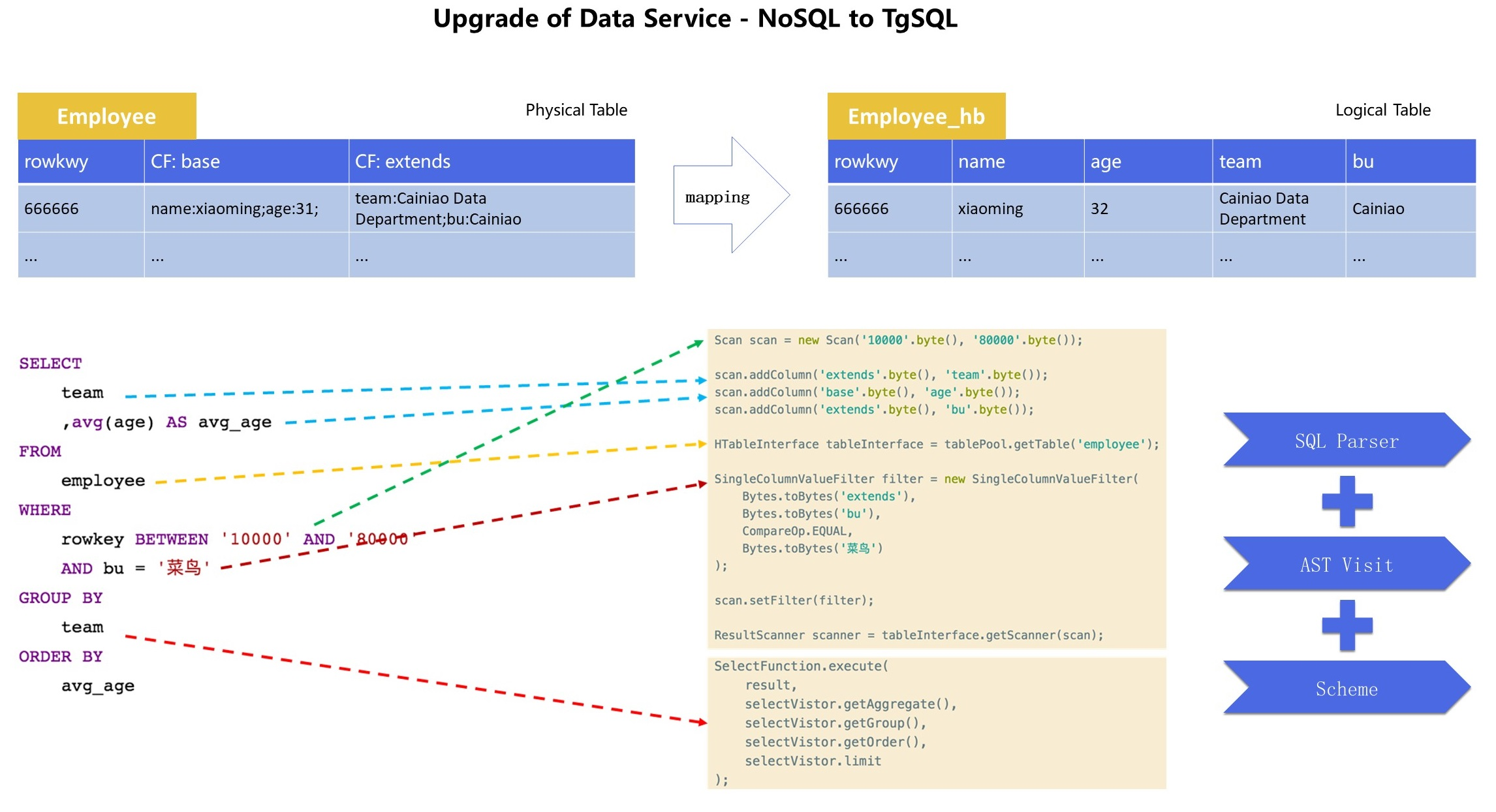

For NoSQL databases such as HBase, it is difficult for operational personnel to write code. As such, a standard syntax is required. To solve this problem, Tiangong provides TgSQL to standardize NoSQL conversion. The following figure shows the conversion process. As shown in the figure, the Employee table is converted to a two‑dimensional table. In this example, the conversion is logical rather than physical. To query data, Tiangong middleware parses SQL and automatically converts it to a query language in the background.

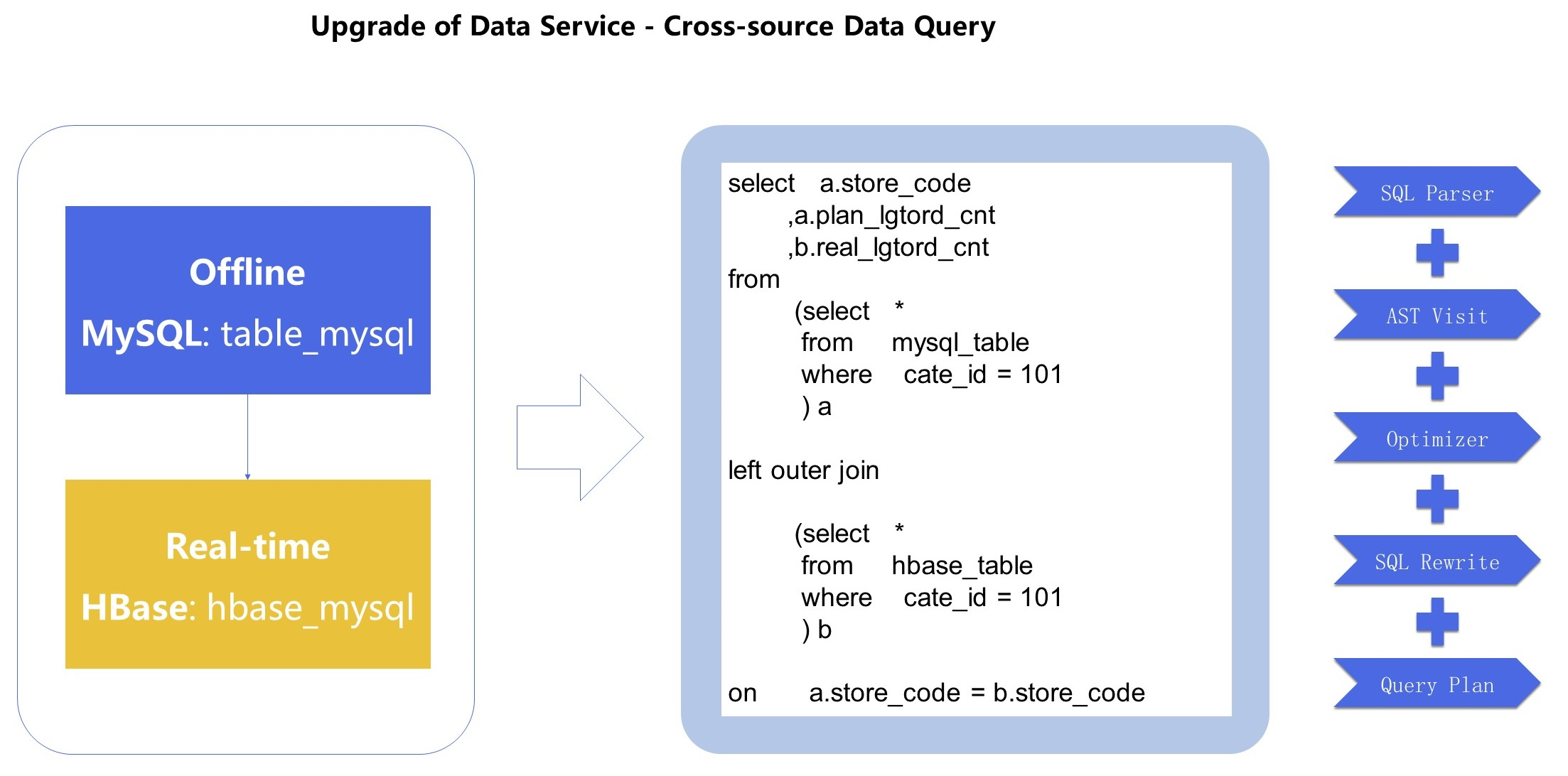

When developing data products, Cainiao often encounters situations that cannot be cleanly separated into real-time and offline cases. For example, Cainiao collects the real-time KPI completion rate every year by using the ratio of completed orders to planned orders. This computing job relies on two data sources: one is the planned offline KPI table, and the other is the calculated real-time table written to HBase. In our original solution, two APIs were called through Java, and then the necessary mathematical operations were performed at the front end. To address this problem, Cainiao provides standard SQL for queries on different data sources, such as offline MySQL tables and HBase real-time tables. This way, you only need to write the query in standard SQL. The upgraded data service parses the query and then retrieves the data from the corresponding databases.
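As an illustration of what a single standard SQL query over both sources might look like, the following sketch uses Python's built-in sqlite3 module as a stand-in. Both tables live in one in-memory SQLite database here, whereas in the scenario above the plan table is an offline MySQL table and the completed-order table is a real-time HBase table behind the data service. The table names, column names, and figures are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Stand-in for the offline KPI plan table.
    CREATE TABLE kpi_plan (biz_line TEXT PRIMARY KEY, planned_orders INTEGER);
    -- Stand-in for the real-time table of completed orders.
    CREATE TABLE rt_completed (biz_line TEXT PRIMARY KEY, completed_orders INTEGER);
    INSERT INTO kpi_plan VALUES ('import', 200), ('export', 100);
    INSERT INTO rt_completed VALUES ('import', 150), ('export', 40);
    """
)

# One query computes the completion rate instead of two API calls plus front-end math.
rows = conn.execute(
    """
    SELECT p.biz_line,
           1.0 * r.completed_orders / p.planned_orders AS completion_rate
    FROM kpi_plan AS p
    JOIN rt_completed AS r ON r.biz_line = p.biz_line
    ORDER BY p.biz_line
    """
).fetchall()
conn.close()
```

The caller sees only the joined result, and the ratio is computed in the query rather than at the front end.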

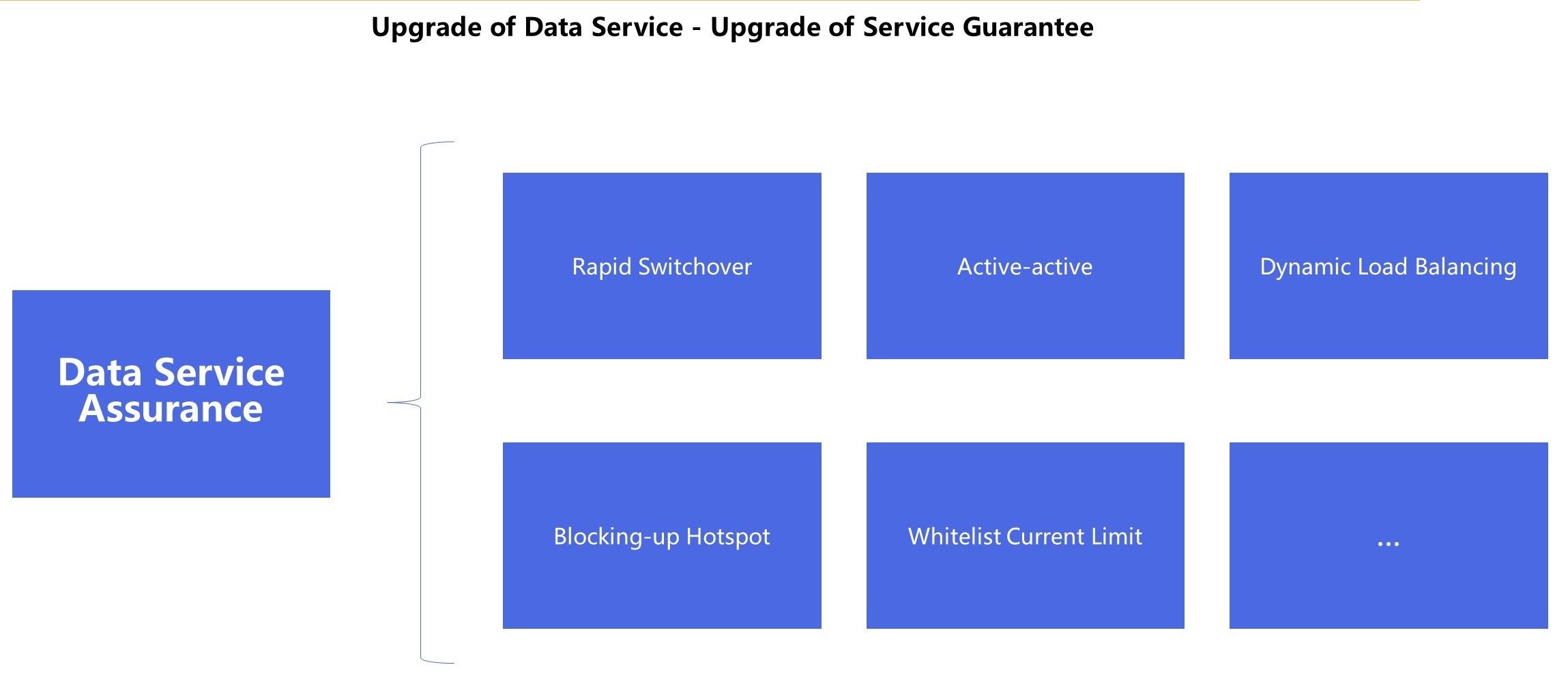

Cainiao initially lacked service assurance capabilities. After a task was released, we could not ensure that it was problem-free, and some problems were not discovered until users reported them. In addition, when concurrency was high, there was no way to promptly take appropriate measures, such as throttling and active/standby switchover.

To address this problem, Tiangong middleware provides a data assurance feature. In addition to active/standby switchover, this feature also provides active-active operation across the active and standby links, dynamic load balancing, hot spot service blocking, and whitelist throttling.

For switchover, consider the scenario shown above, with physical tables on one side and logical tables on the other. One logical table can be mapped to both the active and standby links. When the active link fails, you can switch to the standby link with a single click.
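The mapping from one logical table to two physical links can be pictured with a small Python sketch. The `LogicalTable` class and the table names are hypothetical and are not Tiangong's API. The point is only that callers address the logical name, so a switchover changes the mapping rather than the callers.

```python
class LogicalTable:
    """A logical table that resolves to the physical table on the current link."""

    def __init__(self, name, active, standby):
        self.name = name
        self.links = {"active": active, "standby": standby}
        self.current = "active"

    def switch_over(self):
        # Flip between the active and standby links.
        self.current = "standby" if self.current == "active" else "active"

    def physical_table(self):
        return self.links[self.current]


orders = LogicalTable("logistics_orders", active="hbase_a.orders", standby="hbase_b.orders")
before = orders.physical_table()
orders.switch_over()  # for example, after the active link fails
after = orders.physical_table()
```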

In addition, promotions involve some very important services, such as dashboards and internal statistical analysis. These services use the active and standby links simultaneously. In this case, it is not appropriate to send all reads and writes to only one of the databases, so traffic should be distributed across both links. Tiangong implements an active-active function, which directs heavy traffic to the active link and light traffic to the standby link.

When a task on the active link is affected, the task is moved to the standby link to proceed. When complex and slow queries impact the performance of the overall task, such hot spot services are blocked.

In addition to data models, computing engines, and data services, Cainiao has also explored and made innovations in other areas, including real-time stress testing, process monitoring, resource management, and data quality assurance. Real-time stress testing is commonly used for major promotions. It can be used to simulate the traffic during major promotions and test whether tasks can be successfully executed under specific QPS conditions. Originally, we would restart the jobs on the standby link, and then change the source of the jobs on the standby link to the stress test source and change the sink to the stress test sink. This solution was very complicated to implement when many tasks coexisted. To solve this problem, the Alibaba Cloud team developed a real-time stress testing tool. This tool can start all required stress testing jobs at one time, automatically generate the source and sink items for stress testing, perform automatic stress testing, and generate stress testing reports. Flink also provides job monitoring features, including latency monitoring and alert monitoring. For example, if a job does not respond within a specified period of time, an alert is sent and a TPS or resource alert is triggered.

Cainiao is currently developing a series of features for real-time data warehouses based on Flink. In the future, we plan to move in the direction of hybrid batch and stream operations and artificial intelligence (AI).

With the batch processing function provided by Flink, Cainiao no longer imports many small- and medium-size table analysis jobs to HBase. Instead, we read offline MaxCompute dimension tables directly into the memory during source definition and perform association. This way, data synchronization is removed from many operations.

In some logistics scenarios, the process is relatively long, and some orders may not be signed for until much later, especially orders placed during Double 11. Even worse, if an error is found in the job and the job is restarted, the state of the job is lost, and the upstream source data is retained in TT for only up to three days. This makes it more difficult to solve such problems. Cainiao discovered that the batch function provided by Flink is an ideal solution to this problem. Specifically, Cainiao uses the TT source for real-time data from the last three days, and TT data is also written to the offline database for historical data backup. If a restart occurs, the offline data is read and aggregated. Even if the Flink state is lost, the offline data allows a new state to be generated. This means you do not have to worry about being unable to obtain information for Double 11 orders signed for before the 17th if a restart occurs. Cainiao has encountered many difficulties in its attempts to solve such problems. One example is the problem of data disorder when aggregating real-time data and offline data. Cainiao implemented a series of user-defined functions (UDFs) to address this issue, such as setting read priorities for real-time and offline data.
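One way to picture rebuilding state from the offline backup plus the real-time stream is the following Python sketch. It merges both inputs per order, keeps the record with the latest event time, and lets the real-time record win a tie. That tie-break is an assumed reading of "read priorities"; the article does not describe the actual UDF logic. The `rebuild_state` function, the field names, and the sample rows are hypothetical.

```python
OFFLINE, REALTIME = 0, 1  # a higher value wins when event times are equal (assumption)


def rebuild_state(offline_rows, realtime_rows):
    """Rebuild per-order status from backup and live data, tolerating out-of-order rows."""
    state = {}  # order_id -> ((event_time, priority), status)
    tagged = [(row, OFFLINE) for row in offline_rows]
    tagged += [(row, REALTIME) for row in realtime_rows]
    for row, priority in tagged:
        candidate = (row["event_time"], priority)
        current = state.get(row["order_id"])
        if current is None or candidate >= current[0]:
            state[row["order_id"]] = (candidate, row["status"])
    return {order_id: status for order_id, (_rank, status) in state.items()}


offline_rows = [
    {"order_id": "LP1", "event_time": 1, "status": "created"},
    {"order_id": "LP2", "event_time": 1, "status": "created"},
]
realtime_rows = [
    {"order_id": "LP1", "event_time": 5, "status": "signed"},
    {"order_id": "LP1", "event_time": 3, "status": "shipped"},  # arrives late, is ignored
    {"order_id": "LP2", "event_time": 1, "status": "shipped"},  # tie: real-time wins
]
rebuilt = rebuild_state(offline_rows, realtime_rows)
```

Because each row is ranked by event time rather than arrival order, a late or replayed message cannot overwrite a newer status.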
