PyFlink is the Python API of Apache Flink. Python itself is simple and easy to learn, but a PyFlink development environment is not easy to set up. A small mistake can break the environment, and the cause is often hard to track down. This article introduces Zeppelin Notebook, a PyFlink development tool that helps users solve these problems. The main contents are listed below:


You may have known about Zeppelin for a long time, but previous articles mainly focused on how to develop Flink SQL in Zeppelin. This article shows how to develop PyFlink jobs in Zeppelin efficiently and avoid the environment problems of PyFlink.

To summarize the theme of this article: use Conda in a Zeppelin notebook to create a Python environment and deploy it to a Yarn cluster automatically. You do not need to install any PyFlink packages on the cluster manually, and you can use multiple versions of PyFlink, isolated from each other, in the same Yarn cluster at the same time. Eventually, you will see:

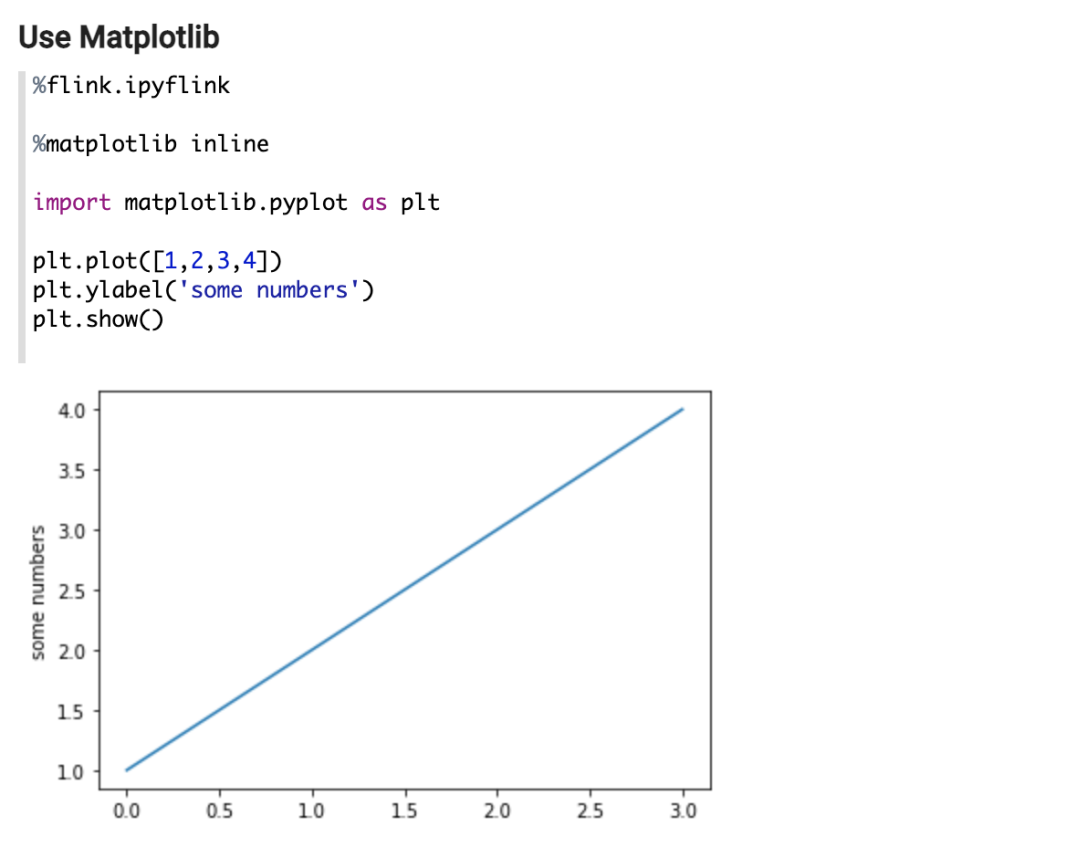

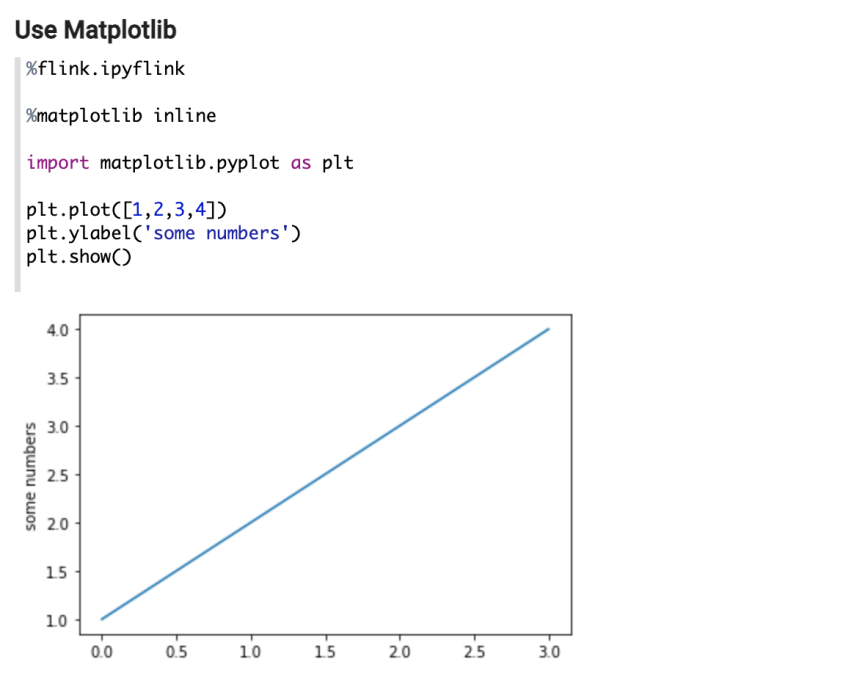

1. A third-party Python library, such as matplotlib, can be used on the PyFlink client:

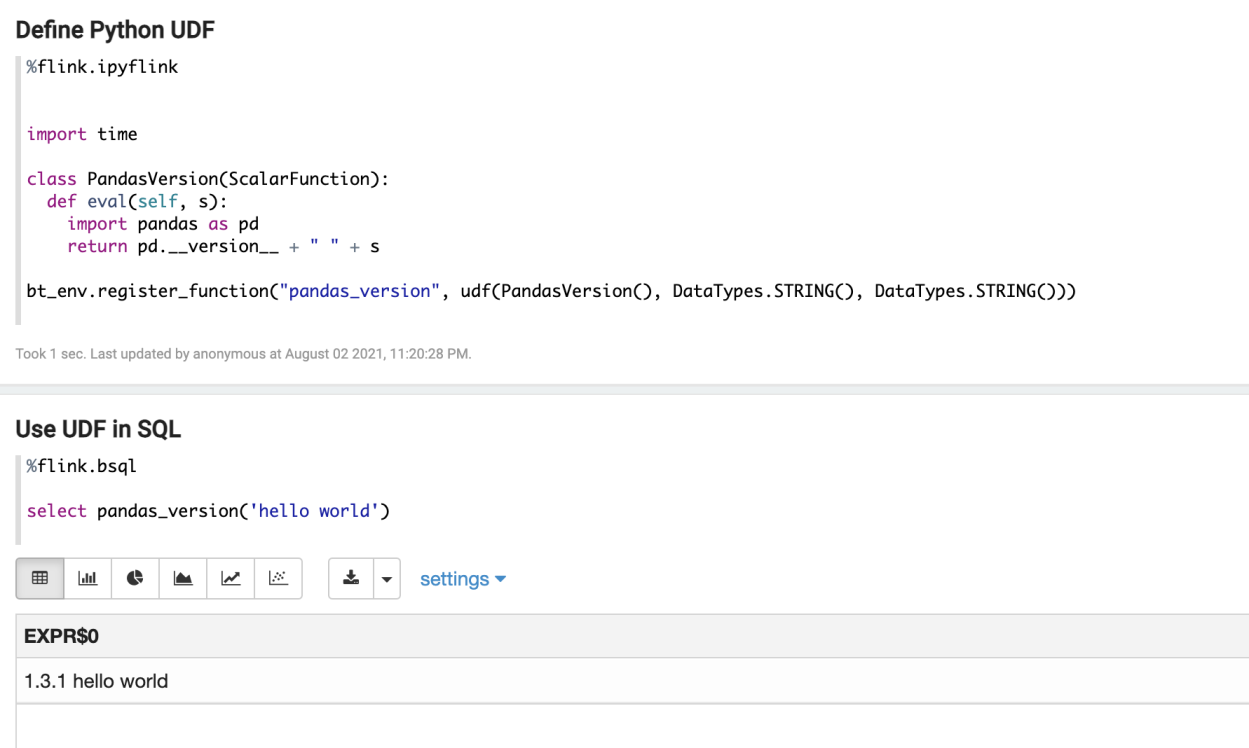

2. Users can use third-party Python libraries in PyFlink UDF, such as:

Next, let's learn how to implement it:

We will not describe the details of building the latest version of Zeppelin here. If you have any questions, you can join the Flink on Zeppelin DingTalk group (34517043) for consultations.

Note: Zeppelin must be deployed on a Linux system. If you use macOS, the Conda environment built on macOS cannot be used in the Yarn cluster because Conda packages are not compatible across operating systems.
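Because of this, it can help to fail early when an environment is about to be packed on the wrong operating system. The following is a minimal sketch, assuming the Yarn nodes run Linux; `can_pack_for_yarn` is a hypothetical helper name, not part of Zeppelin or Flink:

```python
import platform


def can_pack_for_yarn(system_name=None):
    """Return True if a Conda env packed on this OS matches Linux Yarn nodes.

    system_name defaults to the current OS as reported by platform.system()
    ("Linux", "Darwin" for macOS, "Windows").
    """
    if system_name is None:
        system_name = platform.system()
    # Assumption: the Yarn cluster nodes run Linux, as the note above requires.
    return system_name == "Linux"


if __name__ == "__main__":
    if not can_pack_for_yarn():
        print("Warning: pack the Conda environment on Linux, not on", platform.system())
```

The check only looks at the operating system name; it does not compare CPU architectures.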

Download Flink 1.13.

Note: The functions introduced in this article can only be used in Flink 1.13 or above. Then:

Install the following software (which is used to create Conda env):

Next, users can build and use PyFlink in Zeppelin.

Zeppelin has built-in Shell support, so users can use Shell in Zeppelin to create a PyFlink environment. Note: The third-party Python packages here are the ones required by the PyFlink client (JobManager), such as Matplotlib. Please ensure that at least the following packages are installed:

The remaining packages can be specified as needed:

    %sh

    # Make sure conda and mamba are installed.
    # Install miniconda: https://docs.conda.io/en/latest/miniconda.html
    # Install mamba: https://github.com/mamba-org/mamba

    echo "name: pyflink_env
    channels:
      - conda-forge
      - defaults
    dependencies:
      - python=3.7
      - pip
      - pip:
        - apache-flink==1.13.1
      - jupyter
      - grpcio
      - protobuf
      - matplotlib
      - pandasql
      - pandas
      - scipy
      - seaborn
      - plotnine
    " > pyflink_env.yml

    mamba env remove -n pyflink_env
    mamba env create -f pyflink_env.yml

Run the following code to package the Conda environment of PyFlink and upload it to HDFS. Note: The file format packaged here is tar.gz:

    %sh

    rm -rf pyflink_env.tar.gz
    conda pack --ignore-missing-files -n pyflink_env -o pyflink_env.tar.gz

    hadoop fs -rmr /tmp/pyflink_env.tar.gz
    hadoop fs -put pyflink_env.tar.gz /tmp
    # The Conda archive must be publicly readable, so change its permission here.
    hadoop fs -chmod 644 /tmp/pyflink_env.tar.gz

Run the following code to create a PyFlink Conda environment on TaskManager. The PyFlink environment on TaskManager contains at least the following two packages:

The remaining packages are the packages that Python UDF needs to rely on. For example, the pandas package is specified here:

    %sh

    echo "name: pyflink_tm_env
    channels:
      - conda-forge
      - defaults
    dependencies:
      - python=3.7
      - pip
      - pip:
        - apache-flink==1.13.1
      - pandas
    " > pyflink_tm_env.yml

    mamba env remove -n pyflink_tm_env
    mamba env create -f pyflink_tm_env.yml

Run the following code to package the Conda environment of PyFlink and upload it to HDFS. Note: Zip format is used here.

    %sh

    rm -rf pyflink_tm_env.zip
    conda pack --ignore-missing-files --zip-symlinks -n pyflink_tm_env -o pyflink_tm_env.zip

    hadoop fs -rmr /tmp/pyflink_tm_env.zip
    hadoop fs -put pyflink_tm_env.zip /tmp
    # The Conda archive must be publicly readable, so change its permission here.
    hadoop fs -chmod 644 /tmp/pyflink_tm_env.zip

Now, users can use the Conda environments created above in Zeppelin. First, users need to configure Flink in Zeppelin. The main configuration options are:

- Set flink.execution.mode to yarn-application. The methods described in this article apply only to yarn-application mode.
- Set yarn.ship-archives, zeppelin.pyflink.python, and zeppelin.interpreter.conda.env.name to configure the PyFlink Conda environment on the JobManager side.
- Set python.archives and python.executable to specify the PyFlink Conda environment on the TaskManager side.
- Other optional Flink settings, such as flink.jm.memory and flink.tm.memory, can also be specified here.

For example:

    %flink.conf

    flink.execution.mode yarn-application

    yarn.ship-archives /mnt/disk1/jzhang/zeppelin/pyflink_env.tar.gz
    zeppelin.pyflink.python pyflink_env.tar.gz/bin/python
    zeppelin.interpreter.conda.env.name pyflink_env.tar.gz

    python.archives hdfs:///tmp/pyflink_tm_env.zip
    python.executable pyflink_tm_env.zip/bin/python3.7

    flink.jm.memory 2048
    flink.tm.memory 2048

1. The JobManager-side Conda environment created above can be used on the PyFlink client (JobManager side), for example to call Matplotlib:

2. The following example uses a library from the TaskManager-side Conda environment created above, such as pandas, inside a PyFlink UDF:
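A UDF of this kind could look roughly like the sketch below. It is an illustration under stated assumptions, not the original notebook code: the function name `mean_word_length` is hypothetical, and the guarded import exists only so the plain function can be tried on a machine without PyFlink. In Zeppelin, the code would run in a PyFlink paragraph.

```python
try:
    from pyflink.table import DataTypes
    from pyflink.table.udf import udf
except ImportError:
    # Guard only so the plain function below can be tried without PyFlink installed.
    udf = None


def mean_word_length(text):
    """Average length of the whitespace-separated words in text, computed with pandas."""
    # Imported inside the function so the import is resolved in the Python
    # environment that executes the UDF (the TaskManager-side Conda env).
    import pandas as pd

    words = pd.Series(text.split(), dtype="object")
    if words.empty:
        return 0.0
    return float(words.str.len().mean())


if udf is not None:
    # Wrap the plain function as a scalar PyFlink UDF returning a DOUBLE.
    mean_word_length_udf = udf(mean_word_length, result_type=DataTypes.DOUBLE())
```

Once wrapped, the UDF can be registered under a name, for example with `create_temporary_function` on the table environment, and then called from the Table API or SQL. Because pandas is listed in pyflink_tm_env, the import succeeds on the TaskManager without any manual installation on the cluster.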

This article uses Conda in a Zeppelin notebook to create a Python environment and deploy it to a Yarn cluster automatically. Users do not need to install any PyFlink packages on the cluster manually, and they can use multiple versions of PyFlink in a Yarn cluster at the same time.
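Running several PyFlink versions side by side comes down to giving each version its own named Conda environment and archive. The following is a minimal sketch of generating such environment files, assuming the same layout as the YAML files shown earlier; `conda_env_yaml` is a hypothetical helper, and version 1.13.2 appears purely as an illustration:

```python
def conda_env_yaml(name, flink_version, conda_packages=(), python_version="3.7"):
    """Render a Conda environment file that pins one PyFlink version.

    apache-flink is installed through pip; conda_packages are extra
    Conda dependencies, such as libraries needed by Python UDFs.
    """
    lines = [
        f"name: {name}",
        "channels:",
        "  - conda-forge",
        "  - defaults",
        "dependencies:",
        f"  - python={python_version}",
        "  - pip",
        "  - pip:",
        f"    - apache-flink=={flink_version}",
    ]
    lines += [f"  - {pkg}" for pkg in conda_packages]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # One environment per PyFlink version; the second name is illustrative.
    print(conda_env_yaml("pyflink_tm_env", "1.13.1", ["pandas"]))
    print(conda_env_yaml("pyflink_tm_env_1_13_2", "1.13.2", ["pandas"]))
```

Each generated file can then be created, packed, and uploaded with the same mamba, conda pack, and hadoop commands used earlier, under a different archive name.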

Each PyFlink environment is isolated, and you can change its Conda environment at any time. You can download the following note and import it into Zeppelin to review the content introduced in this article: http://23.254.161.240/#/notebook/2G8N1WTTS
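Changing the Conda environment means changing several options together, because the executable paths in the sample configuration start with the archive file names. The following is a minimal sketch of deriving those options from two archive locations. `build_pyflink_conf` and `render_flink_conf` are hypothetical helpers, not Zeppelin APIs, and the derivation rule (archive file name followed by /bin/...) is inferred from the sample configuration in this article:

```python
import posixpath


def build_pyflink_conf(jm_archive, tm_archive, tm_python="python3.7",
                       jm_memory=2048, tm_memory=2048):
    """Derive the archive-related Flink options from two archive locations."""
    jm_name = posixpath.basename(jm_archive)  # e.g. pyflink_env.tar.gz
    tm_name = posixpath.basename(tm_archive)  # e.g. pyflink_tm_env.zip
    return {
        "flink.execution.mode": "yarn-application",
        # JobManager (client) side environment
        "yarn.ship-archives": jm_archive,
        "zeppelin.pyflink.python": f"{jm_name}/bin/python",
        "zeppelin.interpreter.conda.env.name": jm_name,
        # TaskManager side environment
        "python.archives": tm_archive,
        "python.executable": f"{tm_name}/bin/{tm_python}",
        "flink.jm.memory": str(jm_memory),
        "flink.tm.memory": str(tm_memory),
    }


def render_flink_conf(conf):
    """Format the options as the body of a %flink.conf paragraph."""
    return "\n".join(["%flink.conf"] + [f"{key} {value}" for key, value in conf.items()])


if __name__ == "__main__":
    print(render_flink_conf(build_pyflink_conf(
        "/mnt/disk1/jzhang/zeppelin/pyflink_env.tar.gz",
        "hdfs:///tmp/pyflink_tm_env.zip",
    )))
```

Deriving the names from one place keeps the archive name and the executable path from drifting apart when an environment is swapped.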

In addition, there are many areas for improvement:
