PyFlink is a Python API for Apache Flink. It allows users to write Flink programs in Python and execute them on a Flink cluster.

This article introduces PyFlink from the following aspects:

We hope readers will gain a basic understanding of PyFlink and what to do with it after reading this article.

Basically, if you have real-time computing requirements (such as real-time ETL, real-time feature engineering, real-time data warehousing, or real-time prediction), and you are familiar with Python or want to use some handy Python libraries during processing, it may be a good idea to try PyFlink, which connects Flink with the Python world.

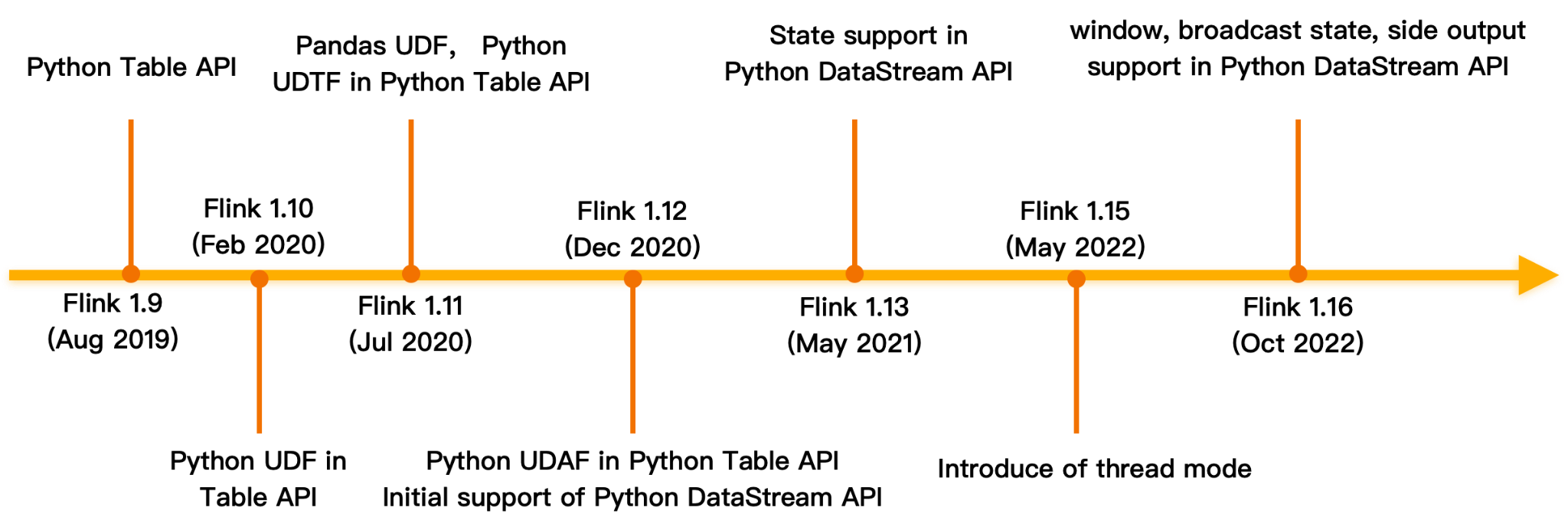

PyFlink was first introduced in Flink 1.9, which dates back to 2019. The functionality supported in that first version was very limited, and the Flink community has been improving it continuously since then. After nearly four years of development, it has become much more mature. It now supports most of the functionality of the Flink Java API, and there is also functionality available only in PyFlink (such as support for Python user-defined functions).

The simplest way to install PyFlink is from PyPI:

```shell
pip install apache-flink
```

The latest version (Flink 1.16) supports only Python 3.6, 3.7, 3.8, and 3.9. Python 3.10 will be supported in the upcoming Flink 1.17.
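Before running pip install, it can be worth checking the interpreter against the version list above. The following sketch hard-codes only the Flink 1.16 range quoted in this article; `SUPPORTED_PYTHON` and `is_supported` are hypothetical helpers, not part of PyFlink, and any Flink version missing from the table is simply reported as unsupported.

```python
import sys

# Python (major, minor) versions supported by PyFlink, as listed in this article.
# Hard-coded for illustration only; check the documentation of your Flink version.
SUPPORTED_PYTHON = {
    "1.16": {(3, 6), (3, 7), (3, 8), (3, 9)},
}


def is_supported(flink_version, python_version=None):
    """Return True if the given (or current) Python version is supported by flink_version."""
    if python_version is None:
        python_version = sys.version_info
    major_minor = tuple(python_version[:2])
    return major_minor in SUPPORTED_PYTHON.get(flink_version, set())


if __name__ == "__main__":
    print(is_supported("1.16"))
```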

Note that if you want to execute PyFlink jobs in a Flink cluster, you must make sure PyFlink is available on all the cluster nodes. You can either preinstall it on all the cluster nodes before submitting PyFlink jobs or attach a Python virtual environment with PyFlink installed when submitting them. Please see the section on submitting PyFlink jobs for more details. The latter option is more flexible, as the Python environments are isolated between jobs. This allows different PyFlink jobs to use different Python libraries and even different Python versions.

Preinstalling PyFlink on all the cluster nodes is not very user-friendly, as it increases the burden of getting started. However, this is unavoidable because PyFlink has many transitive dependencies (such as Apache Beam and Pandas). Besides, PyFlink is optimized with Cython, so it contains native binaries. As a result, users may not be able to simply attach the PyFlink package and all its transitive dependencies when submitting PyFlink jobs.

Besides installing from PyPI, you can build PyFlink from source. This is useful when you want to maintain your own fork of Flink or cherry-pick bug fixes that have not been released yet.

Flink is a distributed computing engine. It has no storage of its own apart from state, which holds intermediate information during processing and is usually not used as the source or sink of a Flink job. To create a Flink/PyFlink job, you therefore need to define where the data you want to process comes from and, optionally, where the execution results will be written.

There are two levels of API in PyFlink (the same as in Java): the Table API (a relational, declarative API) and the DataStream API (an imperative API). Each API stack has its own ways to define sources and sinks, and each offers more than one. Here are a few simple examples:

Reading from Kafka using DataStream API:

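```python
from pyflink.common import Types, WatermarkStrategy
from pyflink.datastream import StreamExecutionEnvironment
from pyflink.datastream.connectors.kafka import KafkaSource, KafkaOffsetsInitializer
# Flink 1.16 module path; older versions use pyflink.common.serialization
from pyflink.datastream.formats.json import JsonRowDeserializationSchema

# Requires the Kafka connector jar (see "Jar Files" below).
source = KafkaSource.builder() \
    .set_bootstrap_servers("localhost:9092") \
    .set_topics("input-topic") \
    .set_group_id("my-group") \
    .set_starting_offsets(KafkaOffsetsInitializer.earliest()) \
    .set_value_only_deserializer(
        # Each JSON message is decoded into a Row(LONG, STRING).
        JsonRowDeserializationSchema.builder()
            .type_info(Types.ROW([Types.LONG(), Types.STRING()]))
            .build()) \
    .build()

env = StreamExecutionEnvironment.get_execution_environment()
ds = env.from_source(source, WatermarkStrategy.no_watermarks(), "Kafka Source")
```

Reading from Kafka using Table API: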

```python
from pyflink.table import DataTypes, EnvironmentSettings, Schema, TableDescriptor, TableEnvironment

env_settings = EnvironmentSettings.in_streaming_mode()
t_env = TableEnvironment.create(env_settings)

# Register a temporary table backed by the Kafka topic; messages are parsed as JSON.
t_env.create_temporary_table(
    'kafka_source',
    TableDescriptor.for_connector('kafka')
        .schema(Schema.new_builder()
                .column('id', DataTypes.BIGINT())
                .column('data', DataTypes.STRING())
                .build())
        .option('properties.bootstrap.servers', 'localhost:9092')
        .option('properties.group.id', 'my-group')
        .option('topic', 'input-topic')
        .option('scan.startup.mode', 'earliest-offset')
        .option('value.format', 'json')
        .build())

table = t_env.from_path("kafka_source")
```

Writing to Kafka using DataStream API:

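```python
from pyflink.datastream.connectors import DeliveryGuarantee
from pyflink.datastream.connectors.kafka import KafkaRecordSerializationSchema, KafkaSink
from pyflink.datastream.formats.json import JsonRowSerializationSchema

# Reuses `ds`, `env` and `Types` from the DataStream source example above.
sink = KafkaSink.builder() \
    .set_bootstrap_servers('localhost:9092') \
    .set_record_serializer(
        KafkaRecordSerializationSchema.builder()
            .set_topic("topic-name")
            .set_value_serialization_schema(
                JsonRowSerializationSchema.builder()
                    .with_type_info(Types.ROW([Types.LONG(), Types.STRING()]))
                    .build())
            .build()
    ) \
    .set_delivery_guarantee(DeliveryGuarantee.AT_LEAST_ONCE) \
    .build()

ds.sink_to(sink)
env.execute()
```

Writing to Kafka using Table API: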

```python
# Reuses `t_env` and `table` from the Table API source example above:
# the sink must be registered in the same TableEnvironment as `table`.
t_env.create_temporary_table(
    'kafka_sink',
    TableDescriptor.for_connector('kafka')
        .schema(Schema.new_builder()
                .column('id', DataTypes.BIGINT())
                .column('data', DataTypes.STRING())
                .build())
        .option('properties.bootstrap.servers', 'localhost:9092')
        .option('topic', 'output-topic')
        .option('value.format', 'json')
        .build())

# execute_insert() submits the job asynchronously; wait() blocks until it finishes.
table.execute_insert('kafka_sink').wait()
```

Here are a few things worth noticing:

Besides the usage above, there are many other ways to define sources and sinks. See the Flink documentation on Table API connectors and on DataStream API connectors for more information.

PyFlink also supports conversion between the Table API and the DataStream API. This means you could define a job that reads data using Table API connectors, converts it to a DataStream, and writes it out using DataStream API connectors, or converts it back to a Table and writes the results out using Table API connectors.

After reading the data from external storage, you can define the transformations to perform on the input elements. Each API stack (Table API or DataStream API) provides many APIs for this.

DataStream API has provided the following functionalities:

The Table API is a relational API that is very similar to SQL. It provides the following functionality:

The section above is a brief introduction to the transformations that could be performed on the data. We will not talk about the details of each functionality in this article. However, there are a few things worth noticing:

Flink is a distributed computing engine. Besides executing jobs locally, it can execute Flink jobs in a standalone cluster, a YARN cluster, or a K8s cluster.

If you already have a PyFlink job (such as word_count.py), you can execute it locally by running python word_count.py, or by right-clicking it and running it in your IDE. This launches a mini Flink cluster in a single process to execute the PyFlink job.

It also supports submitting a PyFlink job to a remote cluster (such as a standalone cluster, YARN, K8s, etc.) via Flink’s command line tool.

This simple example shows how to submit a PyFlink job to a YARN cluster:

```shell
./bin/flink run-application -t yarn-application \
    -Djobmanager.memory.process.size=1024m \
    -Dtaskmanager.memory.process.size=1024m \
    -Dyarn.application.name=<ApplicationName> \
    -Dyarn.ship-files=/path/to/shipfiles \
    -pyarch shipfiles/venv.zip \
    -pyclientexec venv.zip/venv/bin/python3 \
    -pyexec venv.zip/venv/bin/python3 \
    -pyfs shipfiles \
    -pym word_count
```

For more information about job submission, see the Flink documentation on submitting jobs.

Originally, Python user-defined functions were executed in separate Python processes launched during job startup. This made them hard to debug, as users had to modify their Python user-defined functions to enable remote debugging.

Since Flink 1.14, in local mode, Python user-defined functions can be executed in the same Python process as the client. Users can set breakpoints wherever they want to debug (such as in the PyFlink framework code or in Python user-defined functions). This makes debugging PyFlink jobs as easy as debugging any other Python program.

Users can also use logging inside Python user-defined functions for debugging. Note that the log messages appear in the TaskManager log files rather than in the console.

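```python
import logging

from pyflink.table import DataTypes
from pyflink.table.udf import udf

@udf(result_type=DataTypes.BIGINT())
def add(i, j):
    # i and j are integers, so pass them as logging arguments instead of
    # concatenating them to strings (which would raise a TypeError).
    logging.info("i: %s, j: %s", i, j)
    return i + j
```

PyFlink also supports metrics in Python user-defined functions. This is very useful for long-running programs, where metrics can be used to monitor specific statistics and configure alerts.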

For a production job, you will very likely want to use some third-party Python libraries. You may also need connectors whose jar files are not part of the Flink distribution: most connectors (such as Kafka, HBase, Hive, and Elasticsearch) are not bundled with it.

This may not be a big problem for programs that run on a single machine. However, PyFlink jobs are executed in a distributed cluster, so providing an easy way to manage dependencies and distribute them across the cluster is challenging but very useful. PyFlink provides comprehensive solutions for all these kinds of dependencies.

1) Jar Files

Jar files can be specified as follows:

```python
# DataStream API (env is a StreamExecutionEnvironment)
env.add_jars("file:///my/jar/path/connector1.jar", "file:///my/jar/path/connector2.jar")

# Table API (t_env is a TableEnvironment); multiple jars are separated by ";"
t_env.get_config().set("pipeline.jars", "file:///my/jar/path/connector.jar;file:///my/jar/path/udf.jar")
```

Note: All the transitive dependencies must be specified. For connectors, it is therefore advisable to use the fat jar, whose name usually contains sql (for example, flink-sql-connector-kafka-1.16.0.jar instead of flink-connector-kafka-1.16.0.jar for the Kafka connector).
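As in the Table API example above, pipeline.jars takes a semicolon-separated list of file:// URLs. The sketch below shows one way to build that string from absolute local paths and to flag jars that look like the thin connector variant rather than the fat sql jar recommended in the note. Both helpers are hypothetical (not part of PyFlink), and the name check is only a heuristic based on the naming convention quoted above.

```python
from pathlib import PurePosixPath


def build_pipeline_jars(jar_paths):
    """Turn absolute local jar paths into the ';'-separated file:// list used by pipeline.jars."""
    # PurePosixPath.as_uri() raises ValueError for relative paths.
    return ";".join(PurePosixPath(p).as_uri() for p in jar_paths)


def thin_connector_jars(jar_paths):
    """Heuristic: names starting with 'flink-connector-' are usually not the fat sql jars."""
    return [p for p in jar_paths if PurePosixPath(p).name.startswith("flink-connector-")]


jars = [
    "/my/jar/path/flink-sql-connector-kafka-1.16.0.jar",
    "/my/jar/path/udf.jar",
]
print(build_pipeline_jars(jars))
print(thin_connector_jars(jars))
```

The resulting string could then be passed to t_env.get_config().set("pipeline.jars", ...) exactly as in the snippet above.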

2) Third-Party Python Libraries

Third-party Python libraries can be specified as follows:

```python
# DataStream API
env.add_python_file(file_path)

# Table API
t_env.add_python_file(file_path)
```

The specified Python libraries will be distributed to all the cluster nodes and are available to Python user-defined functions during execution.

3) Python Virtual Environment

If many Python libraries are needed, it may be good practice to package a Python virtual environment together with the Python dependencies:

```python
# Table API
t_env.add_python_archive(archive_path="/path/to/venv.zip")
t_env.get_config().set_python_executable("venv.zip/venv/bin/python3")

# DataStream API
env.add_python_archive(archive_path="/path/to/venv.zip")
env.set_python_executable("venv.zip/venv/bin/python3")
```

Besides the APIs, PyFlink also provides configuration options and command line options for all these kinds of dependencies, giving users more flexibility:

| Dependency | Configuration | Command Line Options |
| --- | --- | --- |
| Jar package | pipeline.jars, pipeline.classpaths | --jarfile |
| Python libraries | python.files | -pyfs |
| Python virtual environment | python.archives, python.executable, python.client.executable | -pyarch, -pyexec, -pyclientexec |
| Python requirements | python.requirements | -pyreq |

Please see the Python Dependency Management section of the PyFlink documentation for more details.
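The interpreter path venv.zip/venv/bin/python3 used above assumes that the archive contains a top-level venv/ directory. A minimal standard-library sketch of packaging an existing virtual environment with that layout follows; `zip_venv` is a hypothetical helper and the directory names are assumptions chosen to match the examples above.

```python
import os
import zipfile


def zip_venv(venv_dir, archive_path):
    """Zip venv_dir so that its files live under a top-level 'venv/' folder.

    After the archive is extracted into a directory named 'venv.zip', the
    interpreter ends up at venv.zip/venv/bin/python3, as in the examples above.
    """
    venv_dir = os.path.abspath(venv_dir)
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(venv_dir):
            for name in files:
                full_path = os.path.join(root, name)
                rel_path = os.path.relpath(full_path, venv_dir)
                # Zip entry names always use '/' as the separator.
                zf.write(full_path, "venv/" + rel_path.replace(os.sep, "/"))
    return archive_path
```

Keep in mind that the interpreter inside the archive has to run on the cluster nodes, so the environment should be built for the same OS and CPU architecture; a virtual environment that merely links to a host-specific interpreter may not be portable.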

Besides the aspects above, there are a few other things worth noticing when developing PyFlink jobs.

If you have a big resource (such as a machine learning model to load in your Python user-defined function), it’s advised to load it in a special method named open. This ensures that it will only be loaded once during job initialization.

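```python
import pickle

from pyflink.datastream import MapFunction, RuntimeContext
from pyflink.table import DataTypes
from pyflink.table.udf import ScalarFunction, udf

# resources.zip is assumed to have been registered with add_python_archive(),
# so it is extracted into the working directory of the Python worker.

# DataStream API
class MyMapFunction(MapFunction):

    def open(self, runtime_context: RuntimeContext):
        # Called once per parallel instance, before any element is processed.
        with open("resources.zip/resources/model.pkl", "rb") as f:
            self.model = pickle.load(f)

    def map(self, value):
        return self.model.predict(value)

# Table API
class Predict(ScalarFunction):

    def open(self, function_context):
        with open("resources.zip/resources/model.pkl", "rb") as f:
            self.model = pickle.load(f)

    def eval(self, x):
        return self.model.predict(x)

predict = udf(Predict(), result_type=DataTypes.DOUBLE())
```

Alternatively, you could load the resource outside the function and refer to it in the Python user-defined function: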

```python
import pickle

from pyflink.table import DataTypes
from pyflink.table.udf import udf

# The model is loaded when the job is defined, i.e., on the client.
with open("resources.zip/resources/model.pkl", "rb") as f:
    model = pickle.load(f)

@udf(result_type=DataTypes.DOUBLE())
def predict(x):
    # `model` is captured by the function and serialized together with it.
    return model.predict(x)
```

The difference between this approach and the previous one is that the loaded resource becomes part of the Python user-defined function. During execution, the resource data is serialized and distributed together with the function, which may become a problem if the resource data is very large. Loading the resource data in the open method avoids this problem.
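The following plain-Python sketch illustrates the size difference. It does not use PyFlink: `EagerPredict`, `LazyPredict` and `shipped_size` are hypothetical names, a byte string stands in for the model, and pickling the instance attributes with the standard pickle module only approximates what PyFlink's own serialization (based on cloudpickle) ships to the workers.

```python
import pickle


class EagerPredict:
    """Holds the model from construction time, so it travels with the function."""

    def __init__(self, model_bytes):
        self.model = model_bytes  # stand-in for a large model object

    def eval(self, x):
        return x + len(self.model)


class LazyPredict:
    """Holds only a path; the model is read in open(), i.e., on the worker."""

    def __init__(self, model_path):
        self.model_path = model_path
        self.model = None

    def open(self):
        with open(self.model_path, "rb") as f:
            self.model = f.read()

    def eval(self, x):
        return x + len(self.model)


def shipped_size(fn):
    """Approximate the serialized payload: the pickled instance attributes."""
    return len(pickle.dumps(vars(fn)))
```

With a 1 MB stand-in model, the eager object pickles to more than 1 MB, while the lazy one pickles to a few hundred bytes until open() runs on the worker.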

If event time is enabled, the computation of some operators (such as windows and pattern recognition) is triggered by watermarks. If your job produces no output, check whether you have defined a watermark generator.

Usually, there are a few ways to define watermark:

If the watermark generator is defined correctly, another common cause of this issue is that there is very little test data, so the watermark does not advance as expected (see the Timely Stream Processing section of the Flink documentation for how watermarks work). In this case, you can simply set the parallelism of the job to 1 or configure source idleness to work around the problem during testing.
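The plain-Python model below (not PyFlink code) shows why. A bounded-out-of-orderness watermark trails the largest timestamp seen (Flink's generator emits max timestamp minus the allowed delay minus 1), and a downstream operator's watermark is the minimum over its inputs, so one parallel source subtask that receives no test data holds everything back unless it is marked idle. The function names are hypothetical.

```python
def bounded_watermark(timestamps, max_out_of_orderness):
    """Watermark of one source subtask: max timestamp seen minus the allowed delay, minus 1."""
    if not timestamps:
        return None  # no data yet: this subtask has not produced a watermark
    return max(timestamps) - max_out_of_orderness - 1


def combined_watermark(subtask_watermarks, idle=frozenset()):
    """Downstream watermark: minimum over inputs, ignoring subtasks marked idle."""
    active = [wm for i, wm in enumerate(subtask_watermarks) if i not in idle]
    if not active or any(wm is None for wm in active):
        return None  # some active input has not emitted a watermark yet
    return min(active)


def window_fires(window_end, watermark):
    """An event-time window [start, end) fires once the watermark reaches end - 1."""
    return watermark is not None and watermark >= window_end - 1


if __name__ == "__main__":
    wm_a = bounded_watermark([1000, 5000, 3000], max_out_of_orderness=2000)  # 2999
    wm_b = bounded_watermark([], max_out_of_orderness=2000)  # subtask without test data
    print(combined_watermark([wm_a, wm_b]))            # None: held back by subtask b
    print(combined_watermark([wm_a, wm_b], idle={1}))  # 2999 once b is treated as idle
```

With parallelism 1 there is only one subtask, so the second case is what happens automatically.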

If there is still no output after these changes, you can visit Flink's web UI, which shows detailed statistics for each operator, such as the number of input and output records. (You may need to set pipeline.operator-chaining: false to disable operator chaining.)

If the source is unbounded, the job runs indefinitely. You can check how it is running in Flink's web UI, which shows a lot of useful information, including how long the job has been running, whether there are any exceptions, and the number of input/output elements of each operator (transformation).

Flink’s web UI can be accessed differently in different deployment modes:

When running locally, the address appears in the log:

```
INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint [] - Web frontend listening at http://localhost:55969
```

The port is configured by rest.port, which is 8081 by default.

This section introduces the architecture of PyFlink and its internal design to help users understand how PyFlink works, especially how Python API programs are translated into Flink jobs and how Python user-defined functions are executed.

Understanding this is helpful to answer questions like:

Note: We will not discuss the basic concepts in Flink (such as the architecture of Flink, stateful streaming processing, and event time and watermark) in this article. We advise users to read the official Flink documentation to understand these concepts.

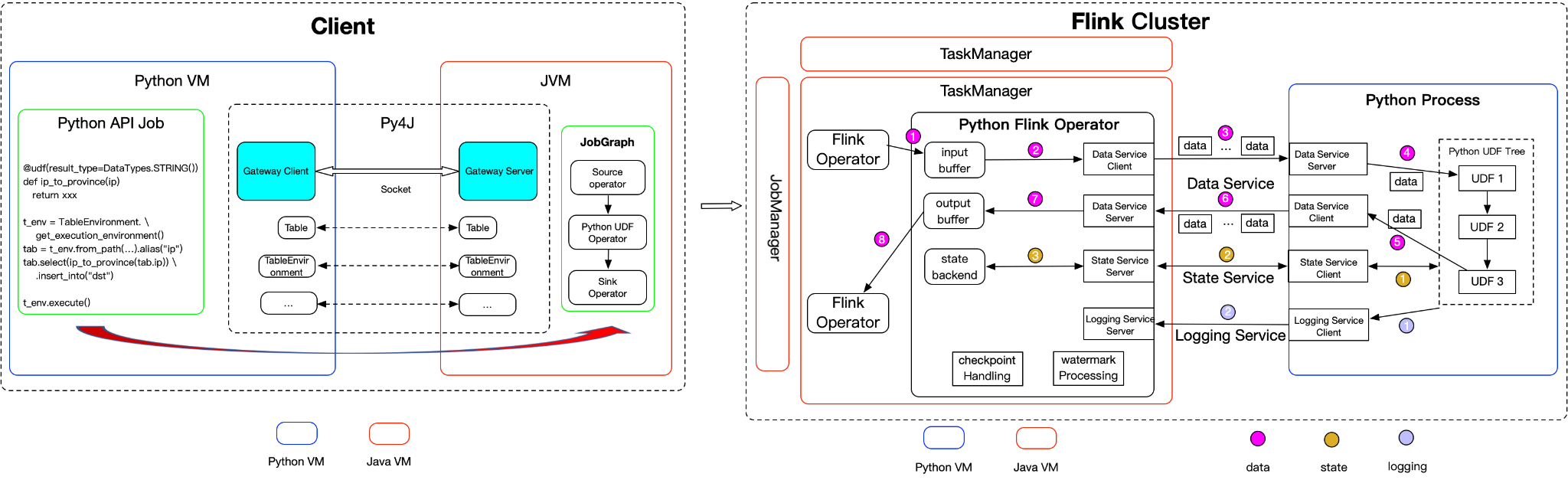

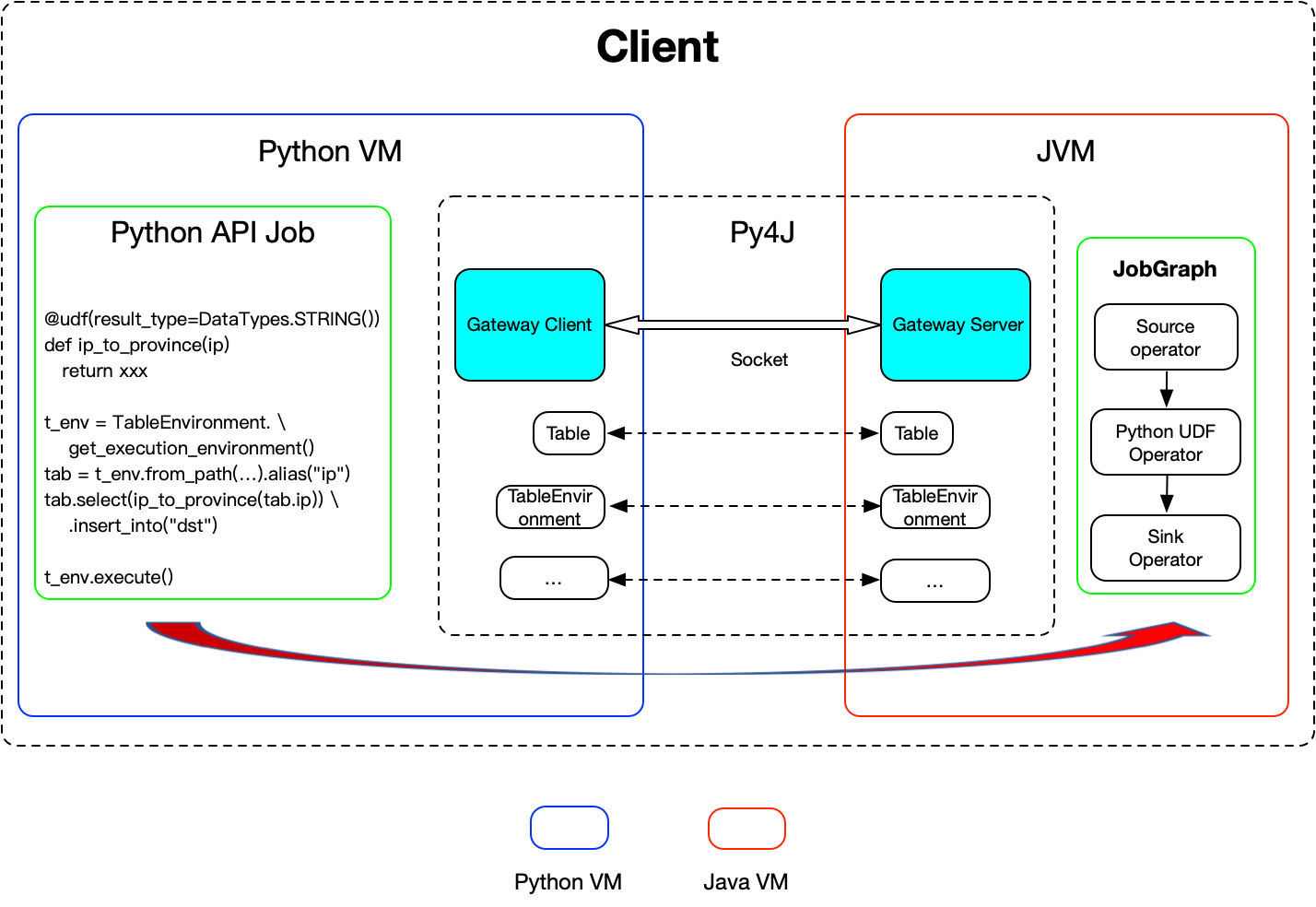

Here is a diagram of the overall architecture of PyFlink. It is mainly composed of two parts:

Architecture of PyFlink

JobGraph is the protocol between a client and a Flink cluster. It contains all the necessary information to execute a job:

Currently, JobGraph has no multi-language support; only a Java representation exists. Generally, there are two possible directions for generating, in PyFlink, a JobGraph that the Flink cluster can recognize:

Considering the huge amount of work (such as SQL parsing and query optimization) that has already been done in the Java Table API, it is infeasible and unnecessary to duplicate all of it in the Python Table API. The community has chosen the second direction. The architecture of job compilation is shown below:

PyFlink leverages Py4J, which enables Python programs running in a Python process to access Java objects in a JVM. Methods are called as if the Java objects resided in the Python process. PyFlink provides a corresponding Python API for each Java API, which simply wraps the Java one. When users call a Python API in PyFlink, it internally creates the corresponding Java object in the JVM and then calls the corresponding API on that Java object. So, it reuses the same job compilation stack as the Java API.

This means:

In the previous section, we saw that the Python API simply wraps the Java API. This works well for the APIs PyFlink provides. However, there are places where this does not work. Let's take a look at the following example:

```python
from pyflink.common import Types, WatermarkStrategy
from pyflink.datastream import StreamExecutionEnvironment
from pyflink.datastream.connectors.kafka import KafkaSource, KafkaOffsetsInitializer
from pyflink.datastream.formats.json import JsonRowDeserializationSchema

source = KafkaSource.builder() \
    .set_bootstrap_servers("localhost:9092") \
    .set_topics("input-topic") \
    .set_group_id("my-group") \
    .set_starting_offsets(KafkaOffsetsInitializer.earliest()) \
    .set_value_only_deserializer(
        JsonRowDeserializationSchema.builder()
            .type_info(Types.ROW([Types.LONG(), Types.STRING()]))
            .build()) \
    .build()

env = StreamExecutionEnvironment.get_execution_environment()
ds = env.from_source(source, WatermarkStrategy.no_watermarks(), "Kafka Source")

# The only step whose logic is a Python function rather than a Java API call.
ds.map(lambda x: x[1]).print()
env.execute()
```

In the example above, all the Python methods can be mapped to Flink's Java API except ds.map(lambda x: x[1]). The reason is that Flink's Java map API takes a Java MapFunction as input, while lambda x: x[1] is a Python function that the Java API cannot recognize. To support it, PyFlink serializes the function during the compilation phase, wraps it in a Java wrapper object, and spawns a Python process to execute it during job execution.

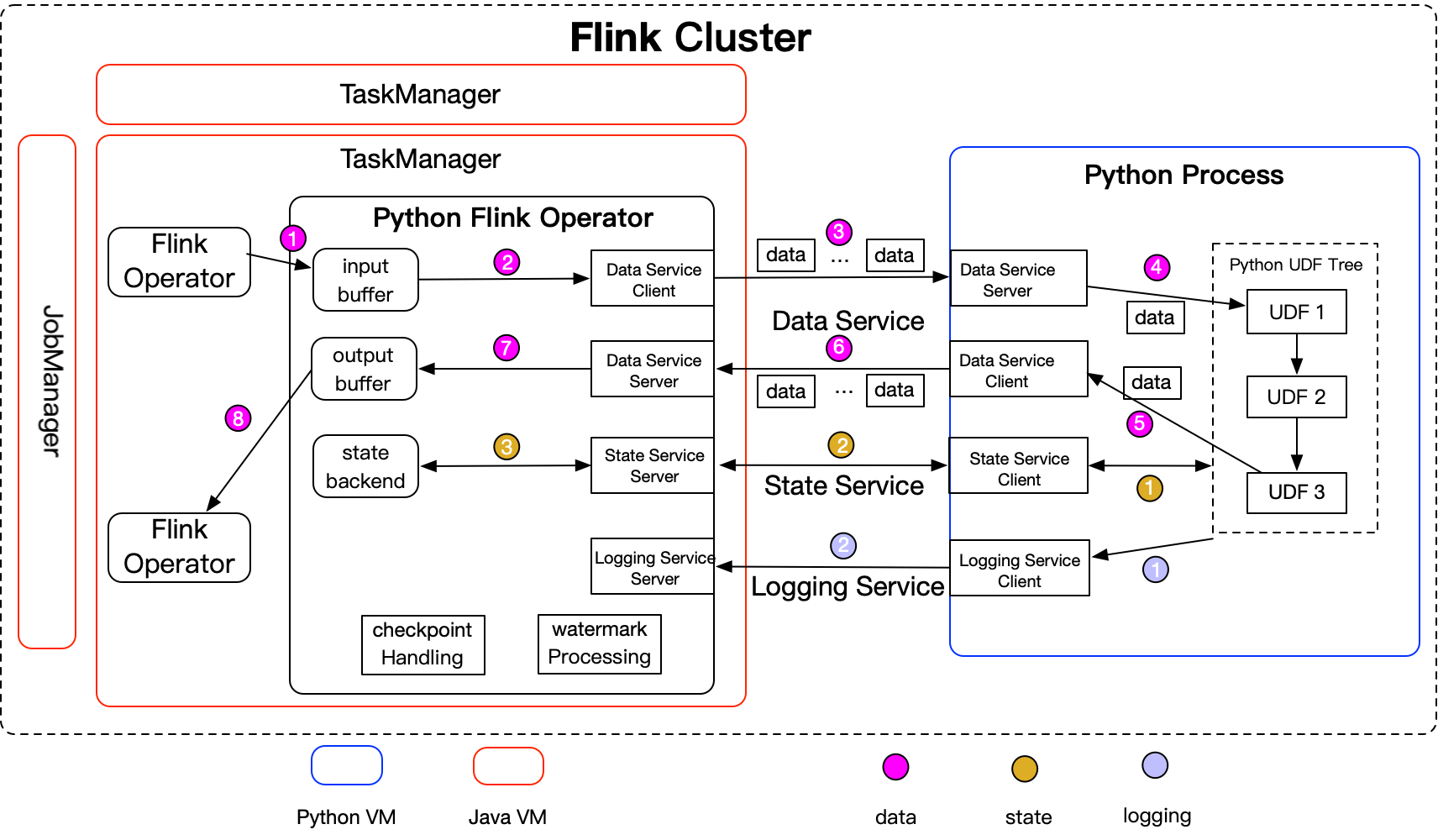

During execution, a Flink job is composed of a series of Flink operators. Each operator accepts inputs from upstream operators, transforms them, and produces outputs to the downstream operators. For transformations where the processing logic is Python, a specific Python operator will be generated:

The following things need to be highlighted:

Launching Python user-defined functions in a separate process works well in most cases, but there are also some problems:

To overcome the problems above, the community introduced thread mode in Flink 1.15, which executes Python user-defined functions in the JVM. It is disabled by default, but you can enable it by setting the configuration python.execution-mode: thread.

When thread mode is enabled, Python user-defined functions are executed very differently from process mode:

However, there are some limitations for thread mode, which is why it’s not enabled by default:

You could refer to the article entitled Exploring the thread mode in PyFlink for more details about thread mode.

In the previous section, we saw that Python user-defined functions can access state. Here is an example that uses state to calculate the running average value of each group:

```python
from pyflink.common.typeinfo import Types
from pyflink.datastream import StreamExecutionEnvironment, RuntimeContext, MapFunction
from pyflink.datastream.state import ValueStateDescriptor


class Average(MapFunction):

    def __init__(self):
        self.sum_state = None
        self.cnt_state = None

    def open(self, runtime_context: RuntimeContext):
        # Keyed state: a separate (sum, cnt) pair is kept for each key.
        self.sum_state = runtime_context.get_state(ValueStateDescriptor("sum", Types.INT()))
        self.cnt_state = runtime_context.get_state(ValueStateDescriptor("cnt", Types.INT()))

    def map(self, value):
        # access the state values (None if this key has not been seen yet)
        total = self.sum_state.value() or 0
        cnt = self.cnt_state.value() or 0
        total += value[1]
        cnt += 1

        # update the state
        self.sum_state.update(total)
        self.cnt_state.update(cnt)

        return value[0], total / cnt


env = StreamExecutionEnvironment.get_execution_environment()
env.from_collection([(1, 3), (1, 5), (1, 7), (2, 4), (2, 2)]) \
    .key_by(lambda row: row[0]) \
    .map(Average()) \
    .print()
env.execute()
```

In the example above, both sum_state and cnt_state are state objects provided by PyFlink. They allow access to state during job execution, and the state can be recovered after a job failover.
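To make the per-key semantics concrete, here is a plain-Python model (not PyFlink) of what the job above computes: key_by gives each key its own sum_state and cnt_state, represented here by a dictionary entry per key. `running_average` is a hypothetical name.

```python
def running_average(records):
    """Emit (key, running average) per record, keeping a separate sum/count per key."""
    state = {}  # key -> (sum, count), standing in for the keyed ValueStates
    out = []
    for key, value in records:
        total, cnt = state.get(key, (0, 0))
        total += value
        cnt += 1
        state[key] = (total, cnt)
        out.append((key, total / cnt))
    return out


print(running_average([(1, 3), (1, 5), (1, 7), (2, 4), (2, 2)]))
# [(1, 3.0), (1, 4.0), (1, 5.0), (2, 4.0), (2, 3.0)]
```

With parallelism greater than 1, the real job may print records of different keys interleaved in another order, but the sequence of results for each key is the same.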

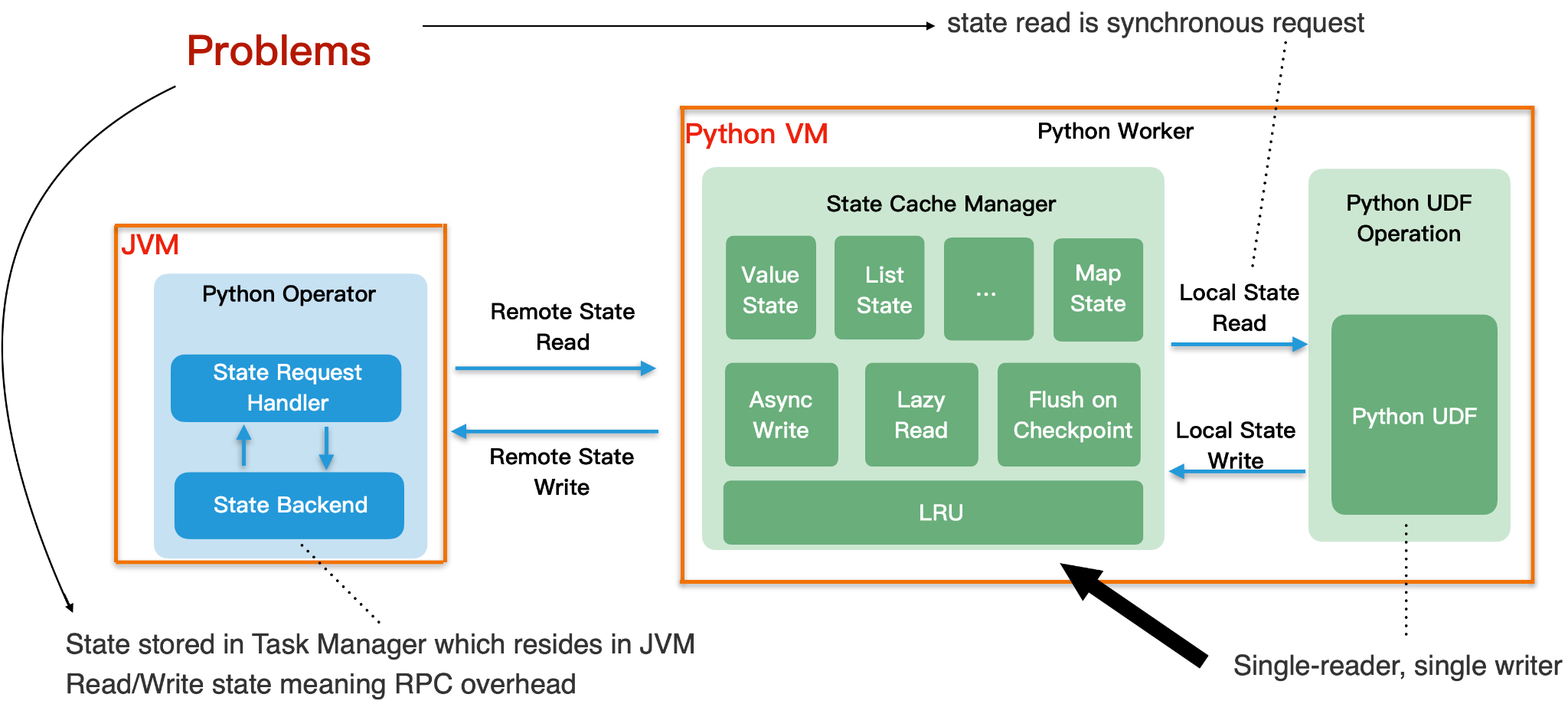

The diagram above shows:

It has made the following optimizations to improve the performance of state access:

Note: The techniques for tuning Flink Java jobs also apply to PyFlink jobs. Since many resources already discuss them, we will not repeat them here. This section only discusses some basic ideas for tuning the performance of PyFlink jobs; more specifically, how to tune the performance of Python operators.

Python operators need to launch a separate Python process to execute Python user-defined functions, and these functions may occupy a lot of memory (for example, when a user loads a very large machine learning model).

If the memory configured for the Python process is lower than required, the job may become unstable. For example, if the job runs in a K8s or YARN deployment, which enforces memory limits, the Python process may crash after exceeding the limit.

The following configurations could be used to tune the memory of the Python process:

In process mode, the Python operator sends data to the Python process in batches. To improve network performance, it buffers the data before sending it to the Python process.

During a checkpoint, the operator must wait until all the buffered data has been processed. If a batch contains many elements and the processing logic in the Python user-defined functions is slow, each checkpoint may take a long time. If you observe that checkpoints take very long (or even fail), try tuning the configuration python.fn-execution.bundle.size.
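A rough model of why the bundle size matters: in the toy sketch below, up to bundle_size - 1 elements can still be waiting when a checkpoint arrives, so the worst-case wait grows with the bundle size times the per-element processing time. `BundleBuffer`, `simulate` and the millisecond costs are hypothetical and this is not PyFlink's implementation; among other differences, the real operator also flushes on a timer (python.fn-execution.bundle.time).

```python
class BundleBuffer:
    """Toy model of process-mode buffering; not PyFlink's actual implementation."""

    def __init__(self, bundle_size):
        self.bundle_size = bundle_size  # plays the role of python.fn-execution.bundle.size
        self.pending = []               # elements buffered but not yet processed
        self.batches_sent = 0

    def add(self, element):
        self.pending.append(element)
        if len(self.pending) >= self.bundle_size:
            self.flush()

    def flush(self):
        # Model: a full bundle is sent to the Python worker and processed.
        if self.pending:
            self.batches_sent += 1
            self.pending = []

    def checkpoint_wait_ms(self, per_element_ms):
        """A checkpoint must wait until all pending elements have been processed."""
        wait = len(self.pending) * per_element_ms
        self.flush()
        return wait


def simulate(bundle_size, n_elements, per_element_ms):
    """Feed n_elements into a buffer, then return the modelled checkpoint wait."""
    buf = BundleBuffer(bundle_size)
    for i in range(n_elements):
        buf.add(i)
    return buf.checkpoint_wait_ms(per_element_ms)


print(simulate(bundle_size=1000, n_elements=2999, per_element_ms=2))  # 1998
print(simulate(bundle_size=100, n_elements=2999, per_element_ms=2))   # 198
```

In this model, shrinking the bundle from 1000 to 100 elements cuts the worst-case wait roughly tenfold, at the price of sending more, smaller batches.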

From the previous section, we know that thread mode may improve performance when the data size is large or when you want lower latency. You can enable it by simply setting the configuration python.execution-mode: thread.

Currently, the functionalities of PyFlink are mostly ready. Next, the community will spend more effort in the following areas:


More Posts by Data Geek
