By Sun Jincheng (nicknamed Jinzhu) at Alibaba. More on the author at the end of this blog.

Apache Flink has supported Python since version 1.9.0, in the form of PyFlink. In Flink 1.10, the latest version, PyFlink adds support for Python user-defined functions, which you can register and use in the Table API and SQL. Still, you may be wondering what PyFlink's architecture looks like and where you, as a developer, can use it. Written as a quick guide to PyFlink, this article answers these questions and provides a quick demo in which PyFlink is used to analyze Content Delivery Network (CDN) logs.

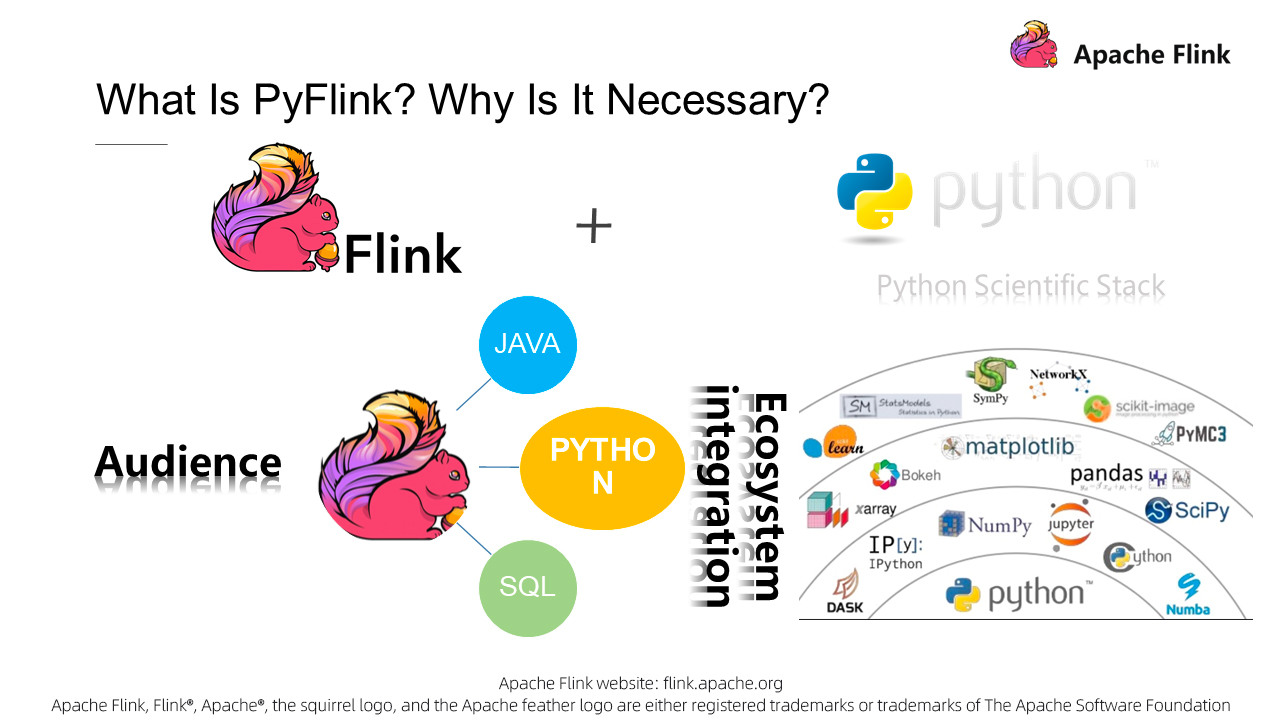

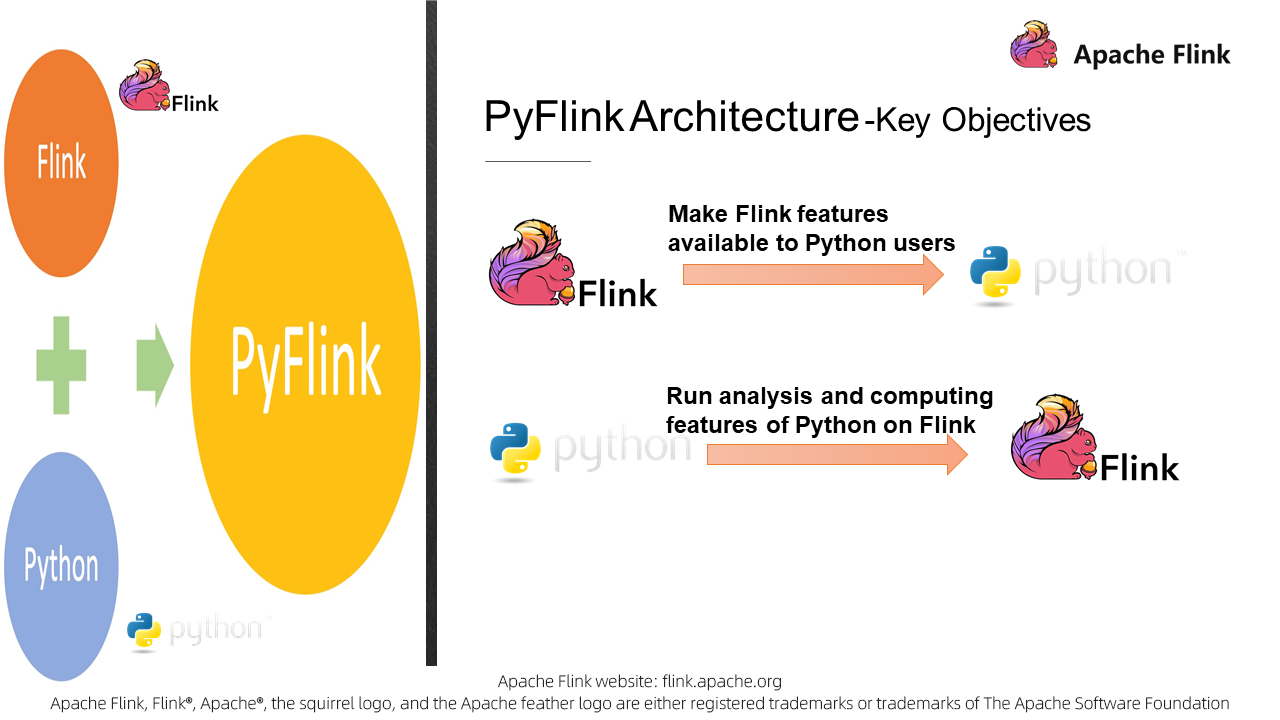

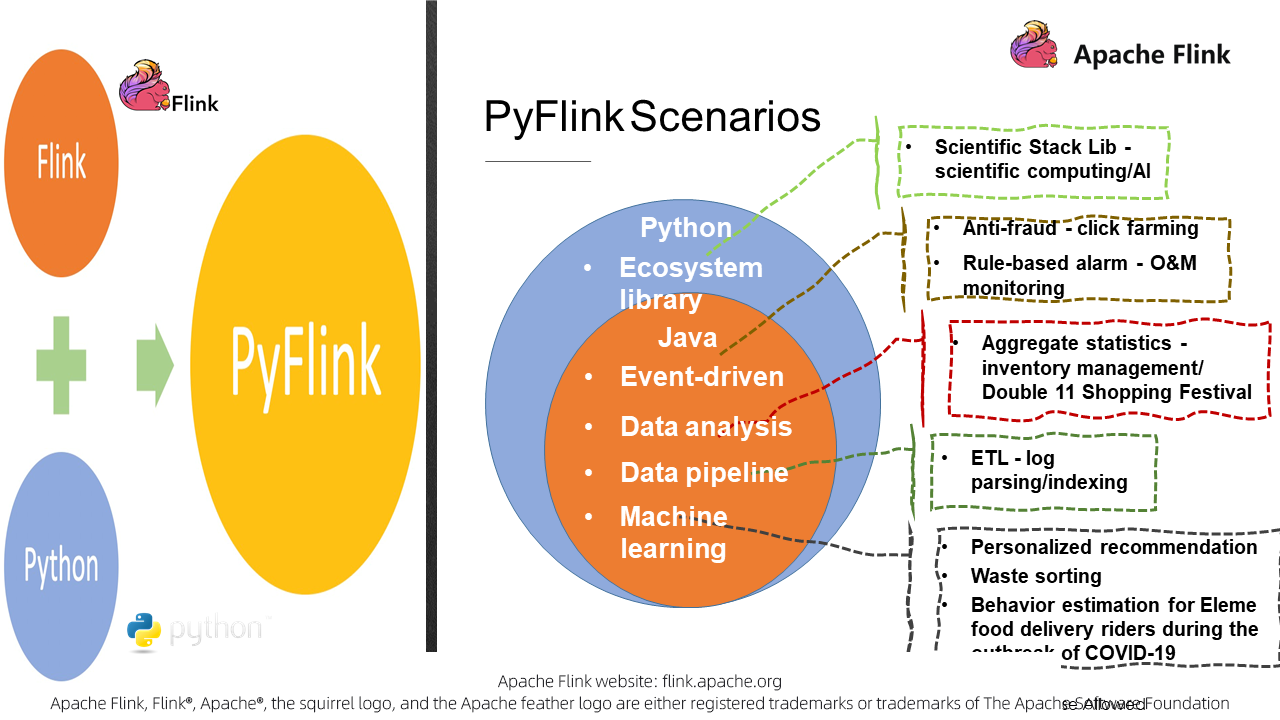

So, what exactly is PyFlink? As its name suggests, PyFlink is simply a combination of Apache Flink with Python, or rather Flink on Python. But what does Flink on Python mean? First, the combination of the two means that you can use all of Flink's features in Python. More importantly, PyFlink also lets you use the computing capabilities of Python's extensive ecosystem on Flink, which in turn helps the Python ecosystem grow. In other words, it's a win-win for both sides. If you dive a bit deeper into this topic, you'll find that the integration of the Flink framework and the Python language is by no means a coincidence.

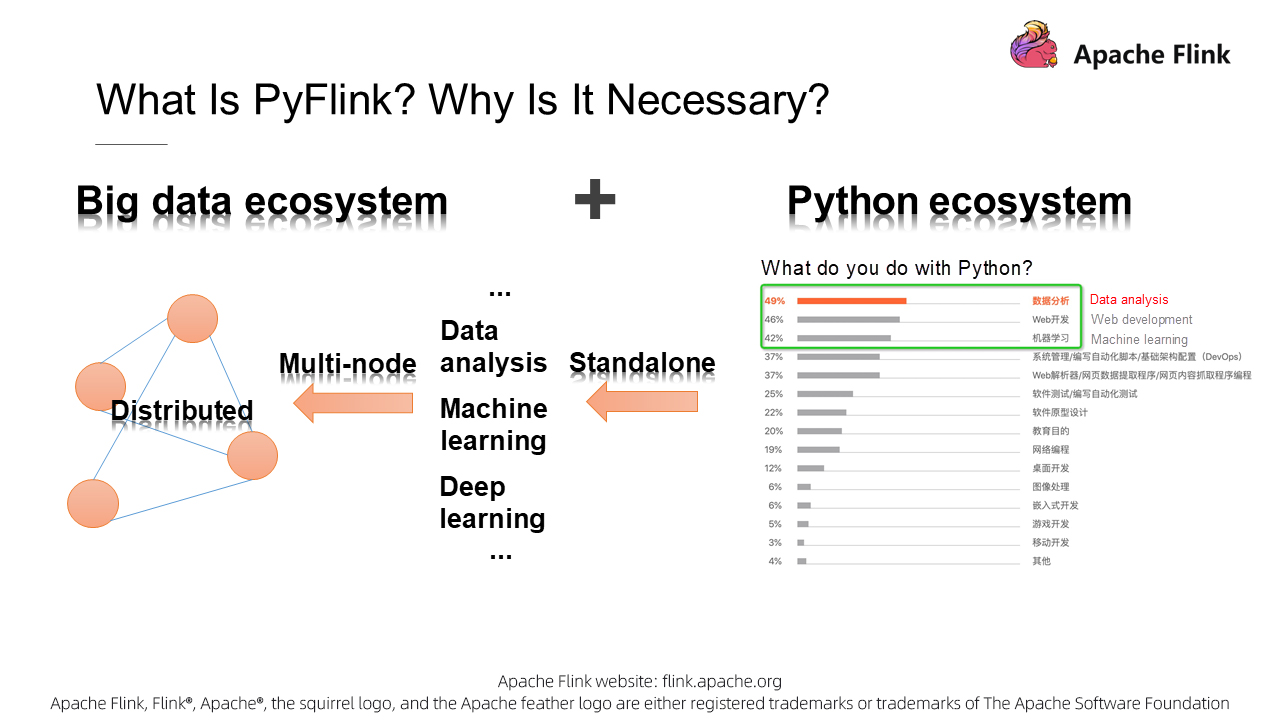

The Python language is closely connected to big data. To understand this, we can take a look at some of the practical problems people are solving with Python. A user survey shows that most people use Python for data analysis and machine learning. The big data space addresses these same scenarios and offers several good solutions for them. Apart from expanding the audience of big data products, integrating Python with big data greatly enhances the capabilities of the Python ecosystem by extending its standalone architecture to a distributed one. This also explains the strong demand for Python in analyzing massive amounts of data.

The integration of Python and big data is in line with several other recent trends. But, again, why does Flink now support Python, as opposed to Go or R or another language? And also, why do most users choose PyFlink over PySpark and PyHive?

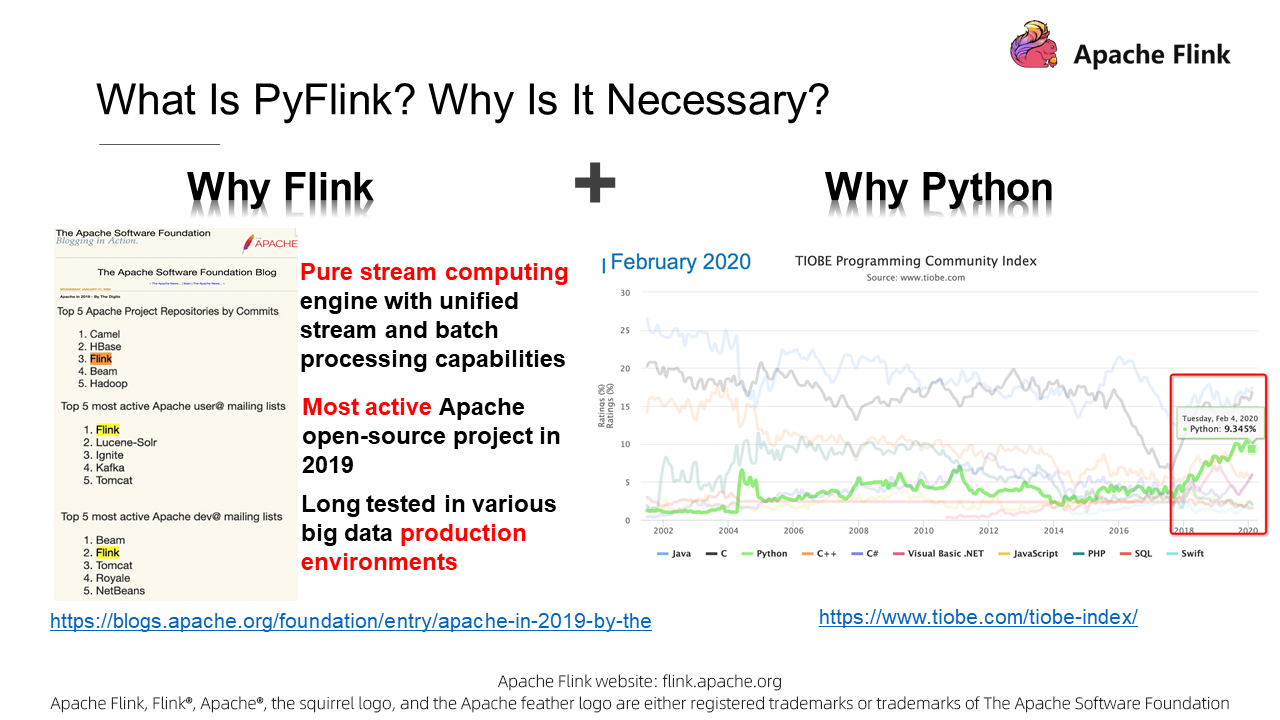

To understand why, let's first consider some of the benefits of using the Flink framework:

Next, let's look at why Flink supports Python instead of other languages. Statistics show that Python is the most popular language after Java and C, and has been rapidly developing since 2018. Java and Scala are Flink's default languages, but it seems reasonable for Flink to support Python.

PyFlink is an inevitable product of the development of related technology. However, understanding the significance of PyFlink is not enough, because our ultimate goal is to benefit Flink and Python users and solve real problems. Therefore, we need to further explore how we can implement PyFlink.

To implement PyFlink, we need to know the key objectives to be achieved and the core issues to be resolved. What are PyFlink's key objectives? In short, there are two: make all Flink features available to Python users, and run Python's analysis and computing functions on Flink.

On this basis, let's analyze the key issues to be resolved for achieving these objectives.

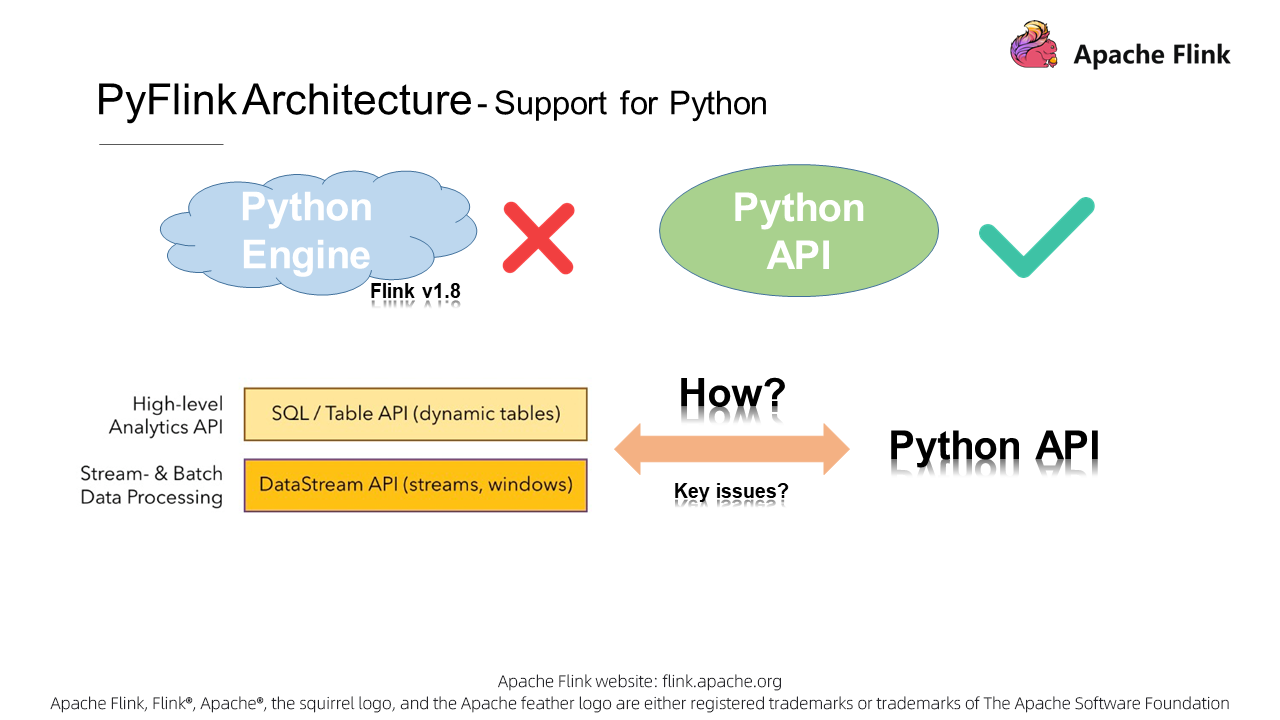

To implement PyFlink, do we need to develop a Python engine on Flink, like the existing Java engine? The answer is no. Attempts were made in Flink versions 1.8 and earlier, but they didn't work well. A basic design principle is to achieve the given objectives at minimal cost. The simplest and best way is to provide one layer of Python APIs and reuse the existing computing engine.

Then, what Python APIs should we provide for Flink? They are familiar to us: the high-level Table API and SQL, and the stateful DataStream API. We are now getting closer to Flink's internal logic, and the next step is to provide a Table API and a DataStream API for Python. But, what exactly is the key issue left to be resolved then?

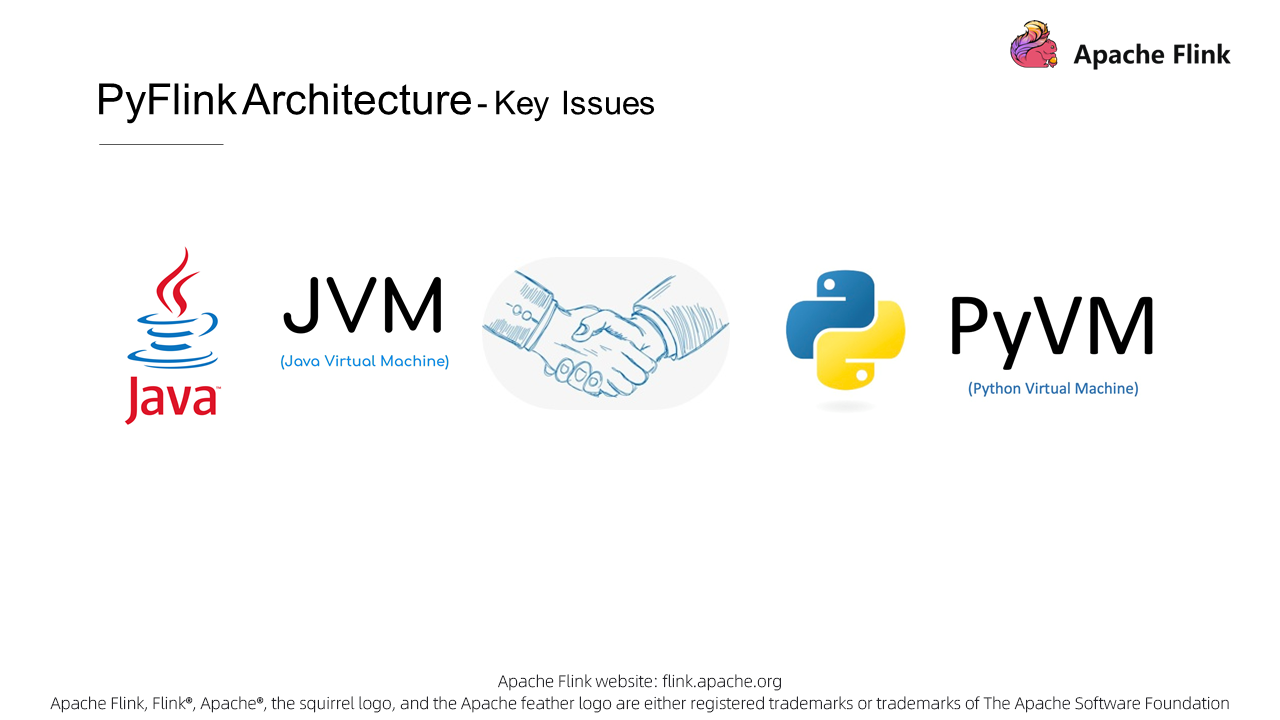

Obviously, the key issue is to establish a handshake between a Python virtual machine (PyVM) and a Java virtual machine (JVM), which is essential for Flink to support multiple languages. To resolve this issue, we must select an appropriate communications technology. So, here we go.

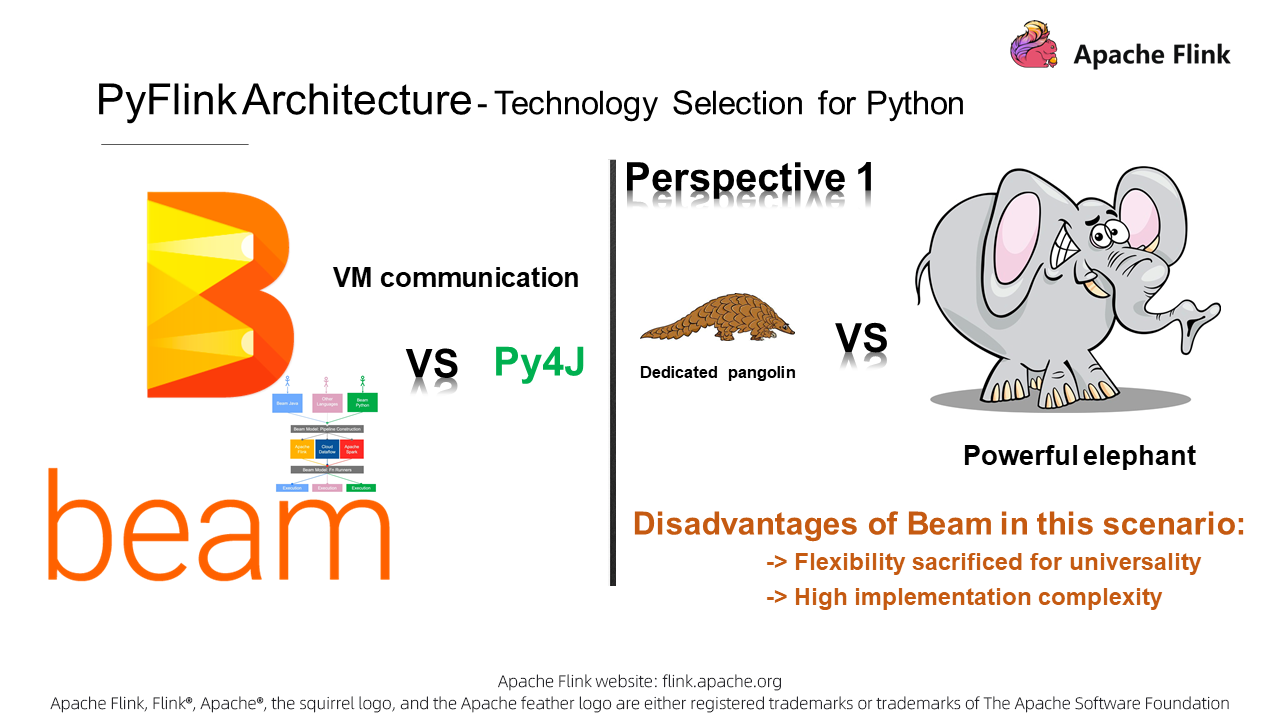

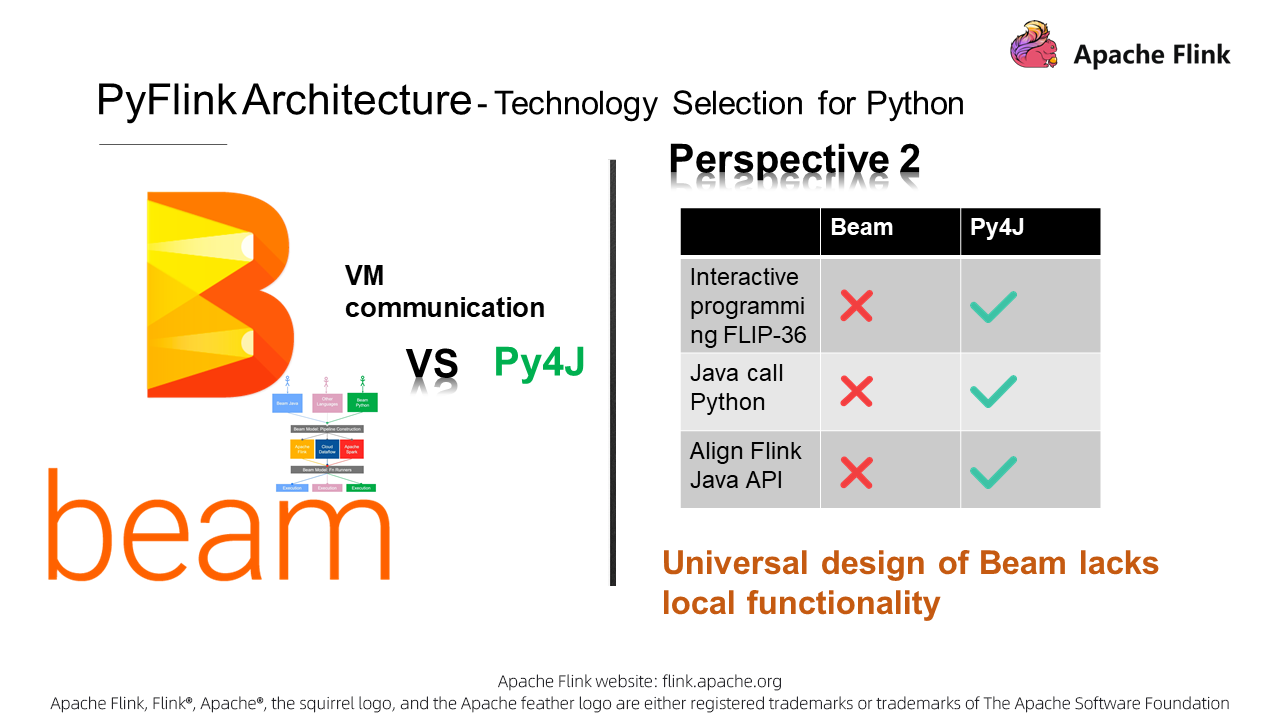

Currently, two solutions are available for implementing communications between PyVMs and JVMs: Apache Beam and Py4J. The former is a well-known project with multi-language and multi-engine support, and the latter is a dedicated solution for communication between a PyVM and a JVM. We can compare Apache Beam and Py4J from a few different perspectives to understand how they differ. First, consider this analogy: to get past a wall, Py4J would dig a hole in it like a mole, while Apache Beam would tear down the entire wall like a big bear. From this perspective, using Apache Beam to implement VM communication is somewhat complicated. In short, this is because Apache Beam focuses on universality and lacks flexibility in extreme cases.

Besides this, Flink needs to support interactive programming, as proposed in FLIP-36. Moreover, for Flink to work properly, we also need to ensure semantic consistency in its API design, especially with regard to its multi-language support. The existing architecture of Apache Beam cannot meet these requirements, so the answer is clear: Py4J is the best option for supporting communications between PyVMs and JVMs.

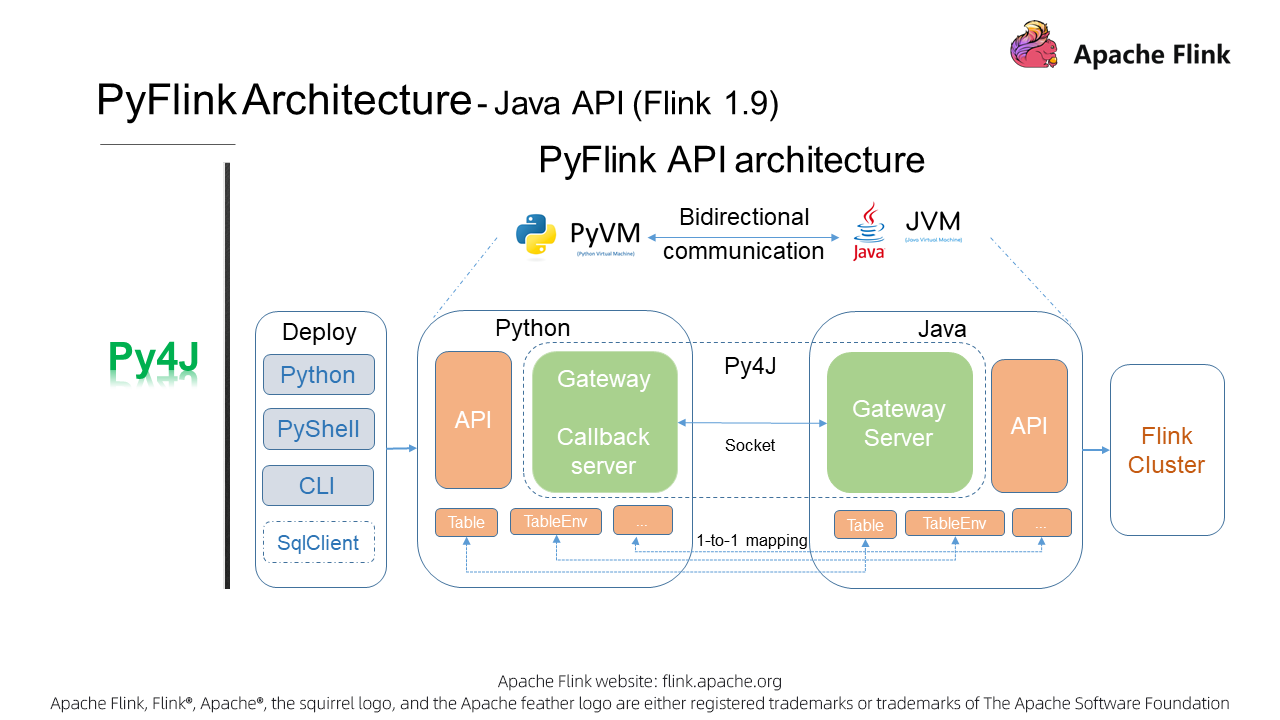

After establishing communications between a PyVM and JVM, we have achieved our first objective for making Flink features available to Python users. We achieved this already in Flink version 1.9. So, now, let's take a look at the architecture of the PyFlink API in Flink version 1.9:

Flink version 1.9 uses Py4J to implement virtual machine communications. We enabled a gateway for the PyVM, and a gateway server for the JVM to receive Python requests. In addition, we provided objects such as TableEnvironment and Table in the Python API, which mirror those provided in the Java API. Therefore, writing the Python API essentially comes down to calling the Java API. Flink version 1.9 also resolved the issue of job deployment. It enables you to submit jobs in various ways, such as running Python commands and using the Python shell and CLI.
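The idea that a Python API object is a thin wrapper around a Java object can be sketched in plain Python. This is only an illustration under stated assumptions: FakeJavaTable is a made-up stand-in for a Java object reached through the gateway, no JVM or Py4J is involved, and the class and attribute names are hypothetical rather than taken from the PyFlink source.

```python
class FakeJavaTable:
    """Made-up stand-in for a Java Table object reached through a gateway."""

    def __init__(self, ops=()):
        self.ops = list(ops)

    def select(self, fields):
        # Record the call the way a Java-side object would build up a plan
        return FakeJavaTable(self.ops + ["select(%s)" % fields])

    def groupBy(self, fields):
        return FakeJavaTable(self.ops + ["groupBy(%s)" % fields])


class Table:
    """Python-side wrapper: keeps a reference to the Java object and delegates."""

    def __init__(self, j_table):
        self._j_table = j_table

    def select(self, fields):
        # Each Python call is forwarded synchronously to the Java-side method
        return Table(self._j_table.select(fields))

    def group_by(self, fields):
        # Pythonic name on the outside, Java method name on the inside
        return Table(self._j_table.groupBy(fields))


table = Table(FakeJavaTable()).select("a, b").group_by("a")
plan = table._j_table.ops
print(plan)
```

Because the wrapper holds no logic of its own, the Python and Java APIs stay semantically consistent: whatever the Java method does is what the Python method does.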

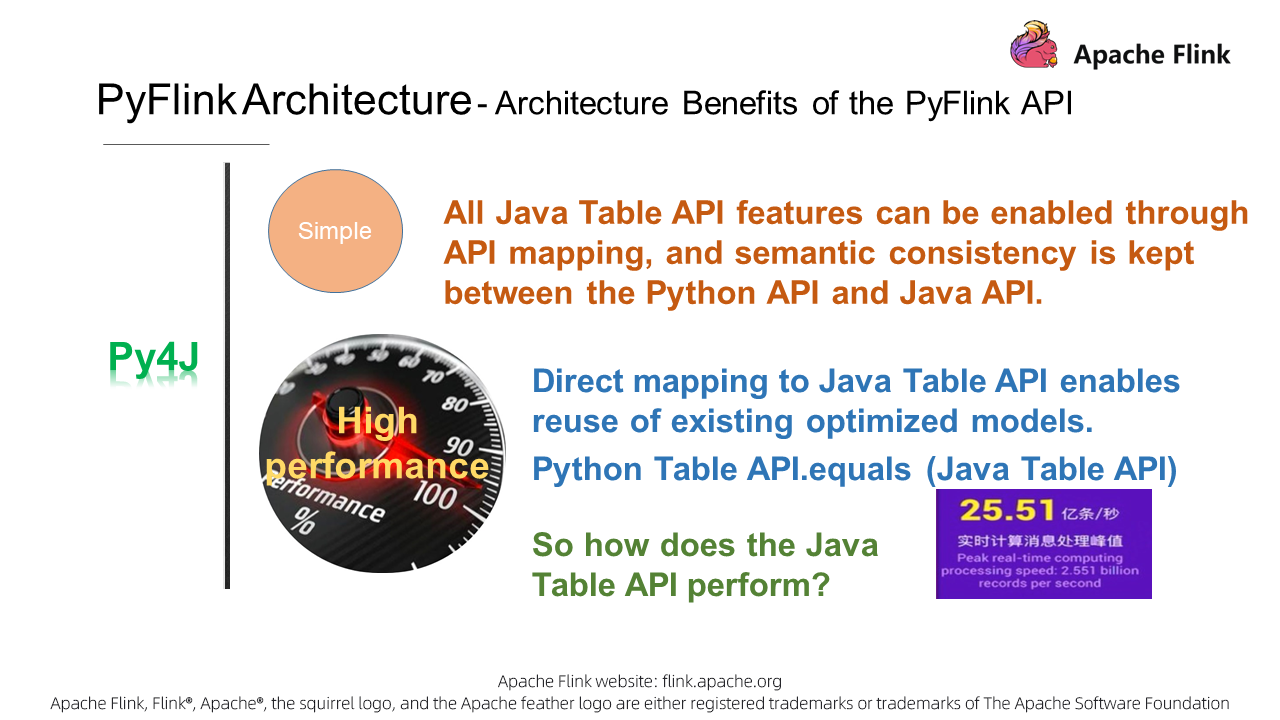

But, what advantages does this architecture provide? First, the architecture is simple, and ensures semantic consistency between the Python API and Java API. Second, it also provides superb Python job handling performance comparable to that of Java jobs. For example, the Flink Java API was able to process 2.551 billion data records per second during last year's Double 11.

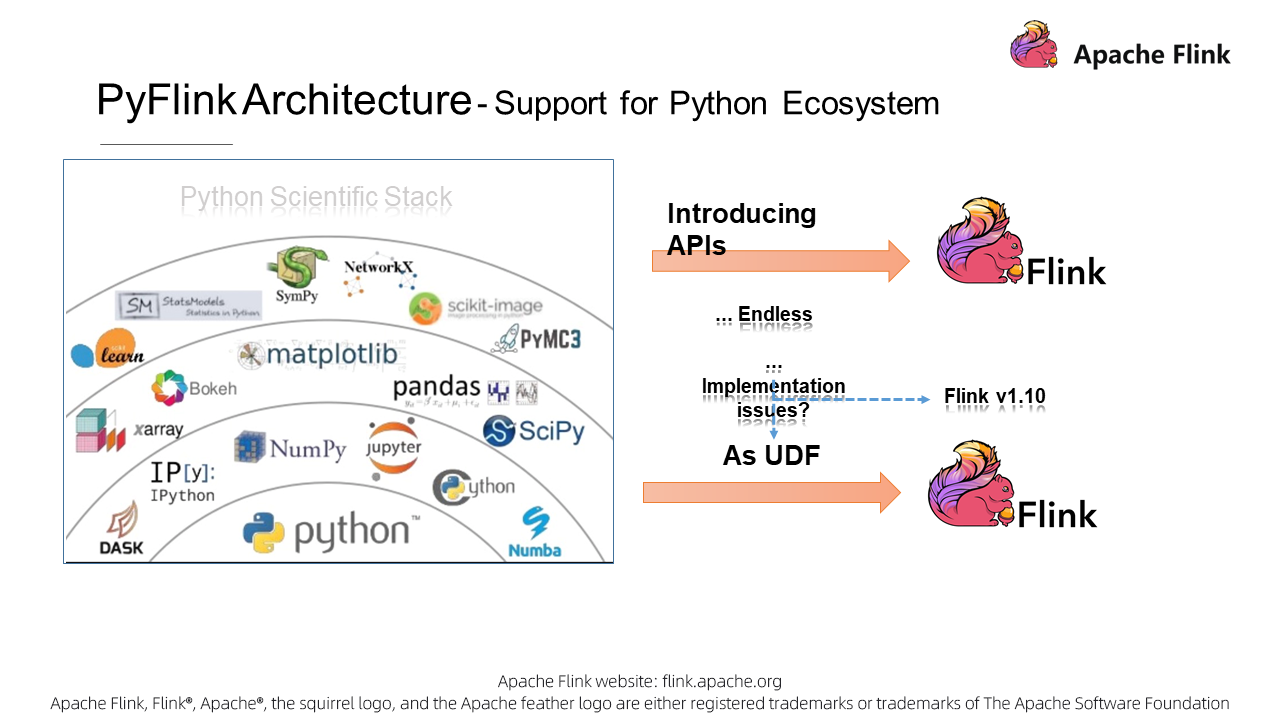

The previous section describes how to make Flink features available to Python users. This section shows you how to run Python functions on Flink. Generally, we can run Python functions on Flink in one of two ways:

Next, let's select a technology for this key issue.

Executing Python user-defined functions is actually quite complex. It involves not only communication between virtual machines, but also all of the following: managing the Python execution environment, parsing business data exchanged between Java and Python, passing the state backends in Flink to Python, and monitoring the execution status. With all of this complexity, this is the time for Apache Beam to come into play. As a big bear that supports multiple engines and languages, Apache Beam can do a lot to help in this kind of situation, so let's see how Apache Beam deals with executing Python user-defined functions.

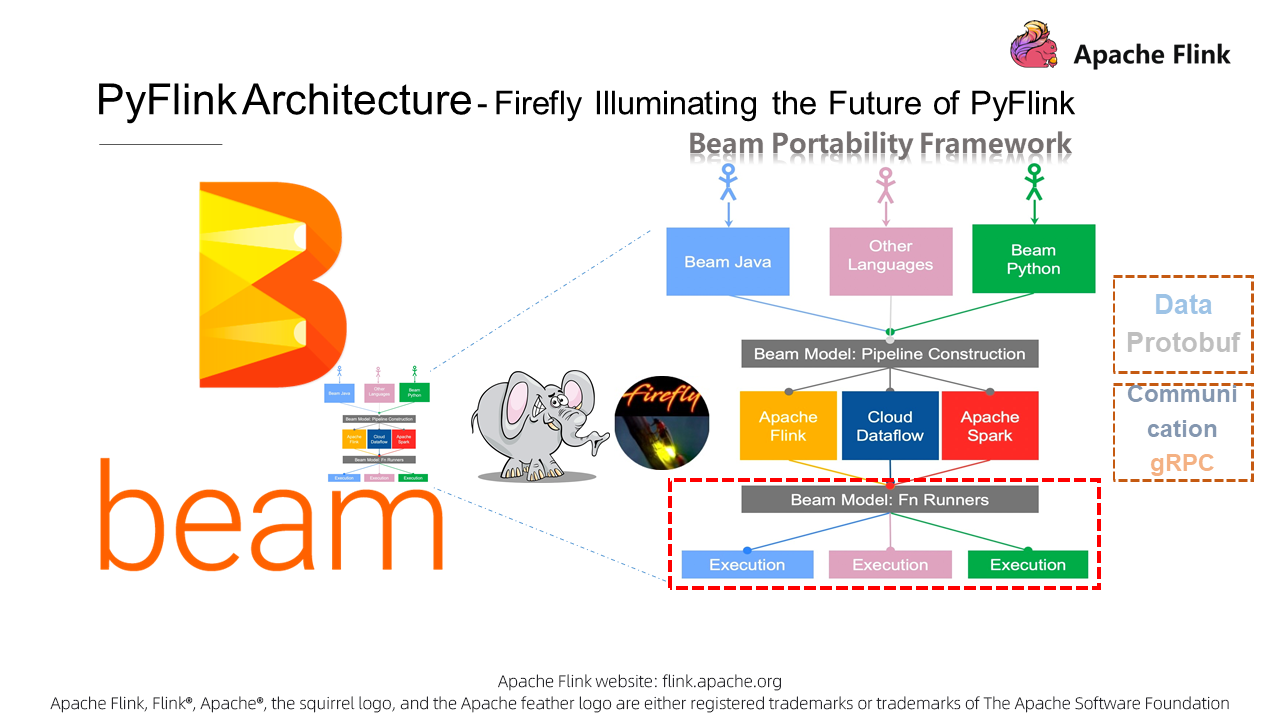

The figure shows the Portability Framework, a highly abstract architecture in Apache Beam that is designed to support multiple languages and engines. Currently, Apache Beam supports several languages, including Java, Go, and Python. Beam Fn Runners and Execution, situated in the lower part of the figure, represent the engines and the user-defined function execution environments. Apache Beam uses Protocol Buffers, often referred to as Protobuf, to abstract the data structures, which enables communication over the gRPC protocol, and it encapsulates the core gRPC services. In this respect, Apache Beam is more like a firefly that illuminates the path of user-defined function execution in PyFlink. Interestingly, the firefly has become Apache Beam's mascot, so perhaps that is no coincidence.

Next, let's take a look at the gRPC services that Apache Beam provides.

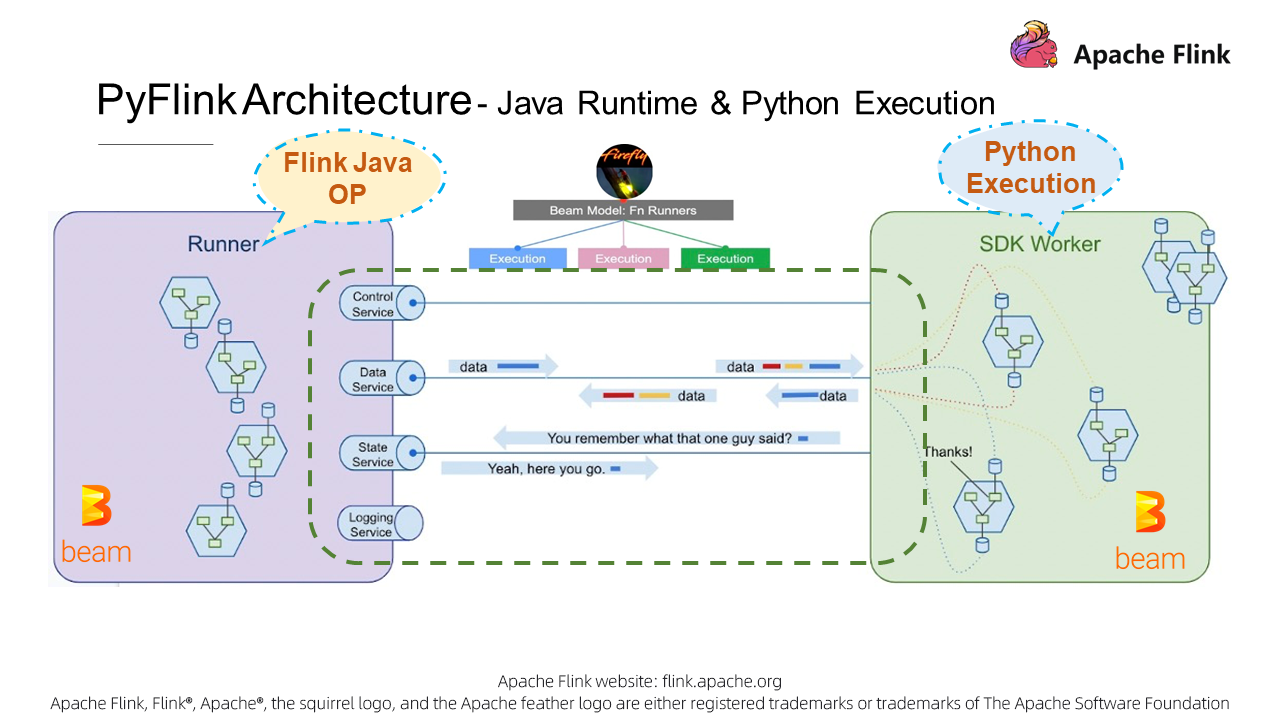

In the figure, a runner represents a Flink Java operator. Runners map to SDK workers in the Python execution environment. Apache Beam has abstracted services such as Control, Data, State, and Logging. In fact, these services have been running stably and efficiently on Beam Flink runners for a long time. This makes PyFlink UDF execution easier. In addition, Apache Beam has solutions for both API calls and user-defined function execution. PyFlink uses Py4J for communications between virtual machines at the API level, and uses Apache Beam's Portability Framework for setting up the user-defined function execution environment.

This shows that PyFlink strictly follows the principle of achieving given objectives at minimal costs in technology selection, and always adopts the technical architecture that best suits long-term development. By the way, during cooperation with Apache Beam, I have submitted more than 20 optimization patches to the Beam community.

The UDF architecture needs not only to implement communication between PyVM and JVM, but also to meet different requirements in the compilation and running stages. In the following PyFlink user-defined function architecture diagram, behavior in the JVM is indicated in green, and behavior in the PyVM is indicated in blue. Let's look at the local design during compilation. The local design relies on pure API mapping calls. Py4J is used for VM communication. Each time we call a Python API, the corresponding Java API is called synchronously, as shown in the following GIF.

To support user-defined functions, a user-defined function registration API (register_function) is required. When defining Python user-defined functions, you also need some third-party libraries. Therefore, a series of add methods such as add_python_file() are required for adding dependencies. When you write a Python job, the Java API is also called to create a JobGraph before you submit the job. You can then submit the job to the cluster in several different ways, for example through the CLI.

Now let's look at how the Python API and Java API work in this architecture. On the Java side, JobMaster assigns jobs to TaskManager like it does with common Java jobs, and TaskManager executes tasks, which involve operator execution in both JVM and PyVM. In Python user-defined function operators, we will design various gRPC services for communication between JVM and PyVM; for example, DataService for business data communication, and StateService for Python UDFs to call Java State backends. Many other services such as Logging and Metrics will also be provided.
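The role of the data service can be pictured with a toy exchange in plain Python. This is a sketch under loud assumptions: real PyFlink uses gRPC and Beam's Fn APIs, whereas here the "transport" is an ordinary function call and the wire format is pickle; the function names jvm_operator and python_worker are hypothetical.

```python
import pickle


def python_worker(udf, request_bytes):
    """Plays the PyVM side: decode a batch, apply the UDF per row, encode results."""
    rows = pickle.loads(request_bytes)
    return pickle.dumps([udf(*row) for row in rows])


def jvm_operator(udf, rows, batch_size=2):
    """Plays the Java operator side: ship rows in batches and collect the results."""
    results = []
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        # In the real architecture this hop crosses a process boundary over gRPC
        response = python_worker(udf, pickle.dumps(batch))
        results.extend(pickle.loads(response))
    return results


outputs = jvm_operator(lambda a, b: a + b, [(1, 2), (3, 4), (5, 6)])
print(outputs)
```

The point of the sketch is the shape of the interaction: the operator never runs Python itself; it only serializes input, hands it across, and deserializes what comes back.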

The gRPC services are built on Beam's Fn APIs. User-defined functions are eventually run in Python workers, and the corresponding gRPC services return the results to the Python user-defined function operators in the JVM. Python workers can run as processes, in Docker containers, and even in external service clusters. This extension mechanism lays a solid foundation for the integration of PyFlink with other Python frameworks, which we will discuss later in the PyFlink roadmap. Now that we have a basic understanding of the Python user-defined function architecture introduced in PyFlink 1.10, let's take a look at its benefits:

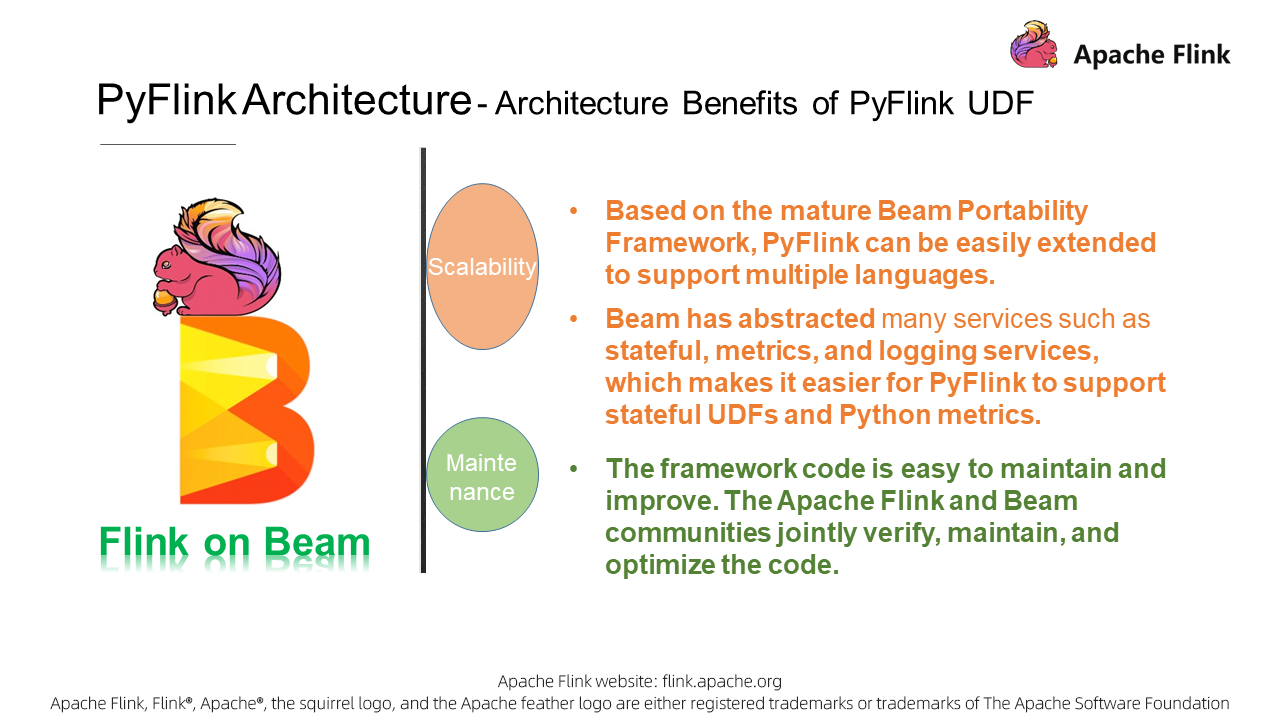

First, it is a mature multi-language support framework: the Beam-based architecture can be extended easily to support other languages. Second, it supports stateful user-defined functions: Beam abstracts stateful services, which makes it easier for PyFlink to support them. Third, it is easy to maintain: two active communities, Apache Beam and Apache Flink, maintain and optimize the same framework.

With the knowledge of PyFlink's architecture and the ideas behind it, let's look at specific application scenarios of PyFlink for a better understanding of the hows and whys behind it.

What are the business scenarios that PyFlink supports? We can analyze its application scenarios from two perspectives: Python and Java. Bear in mind that PyFlink is suitable for all scenarios where Java can apply, too.

You can use PyFlink in all these scenarios. PyFlink also applies to Python-specific scenarios, such as scientific computing. With so many application scenarios, you may wonder what specific PyFlink APIs are available for use now. So let's take a look into that question now, too.

Before using any API, you need to install PyFlink. Currently, to install PyFlink, run the command: pip install apache-flink.

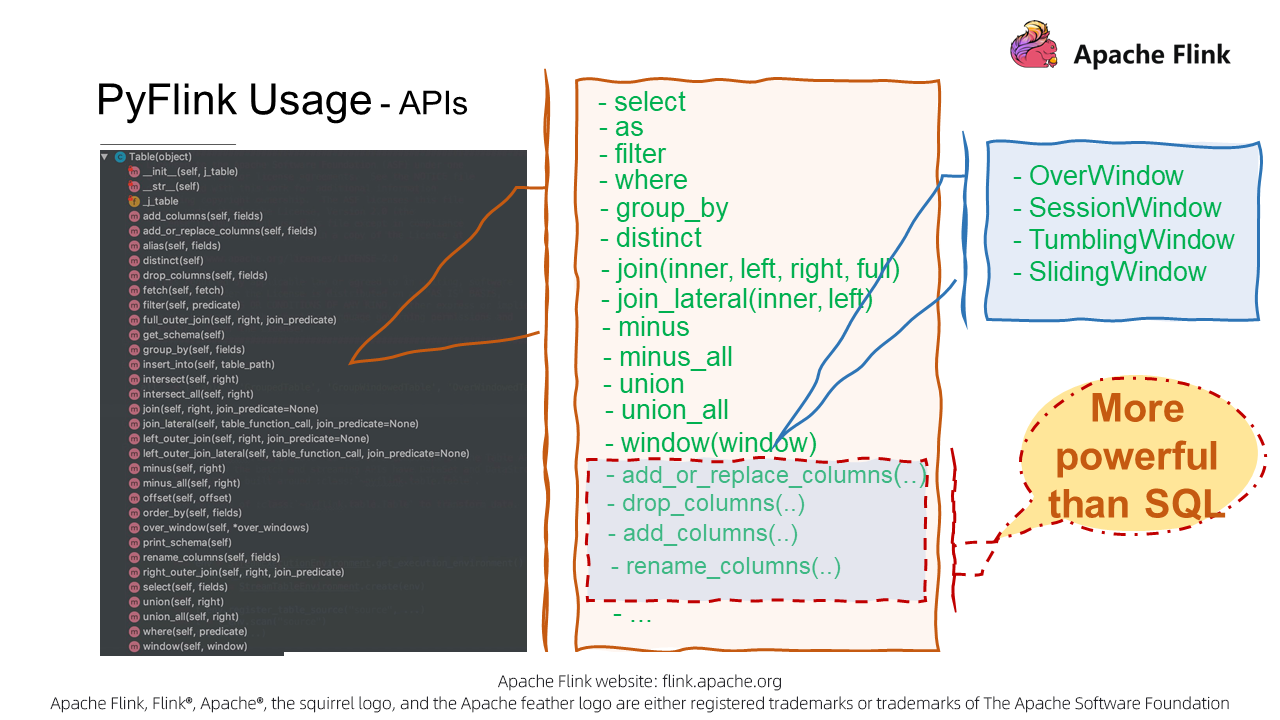

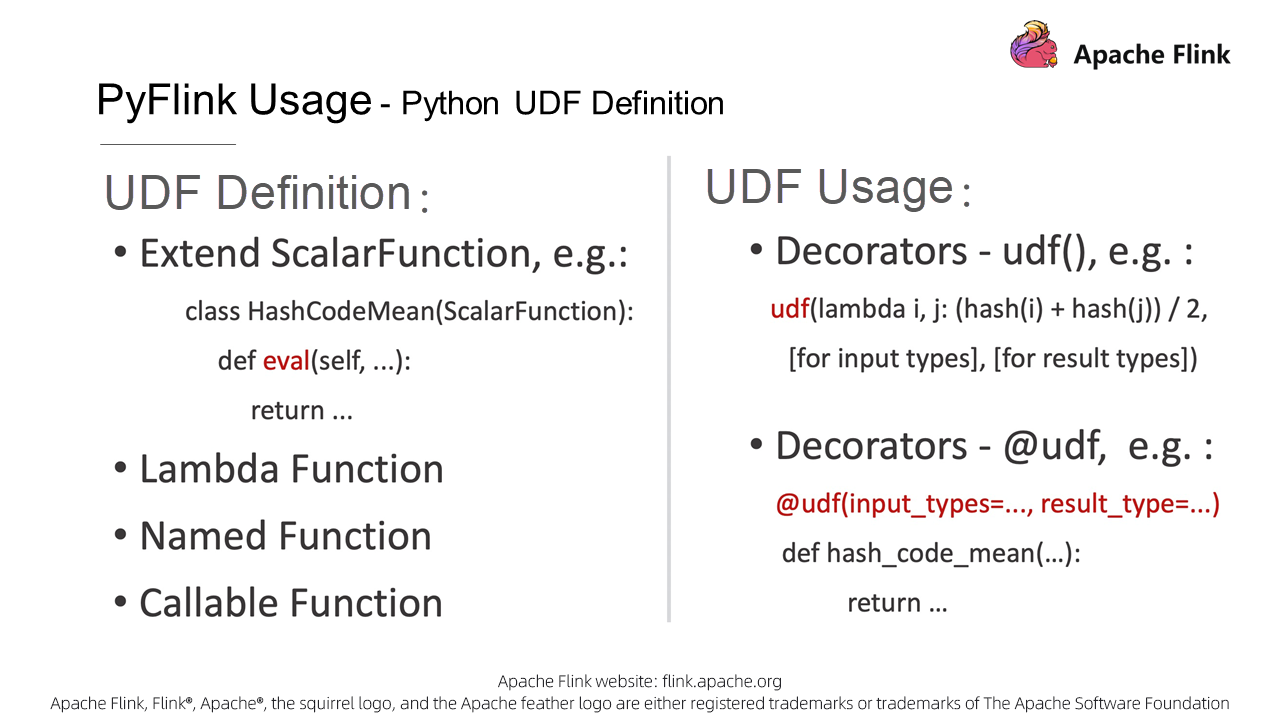

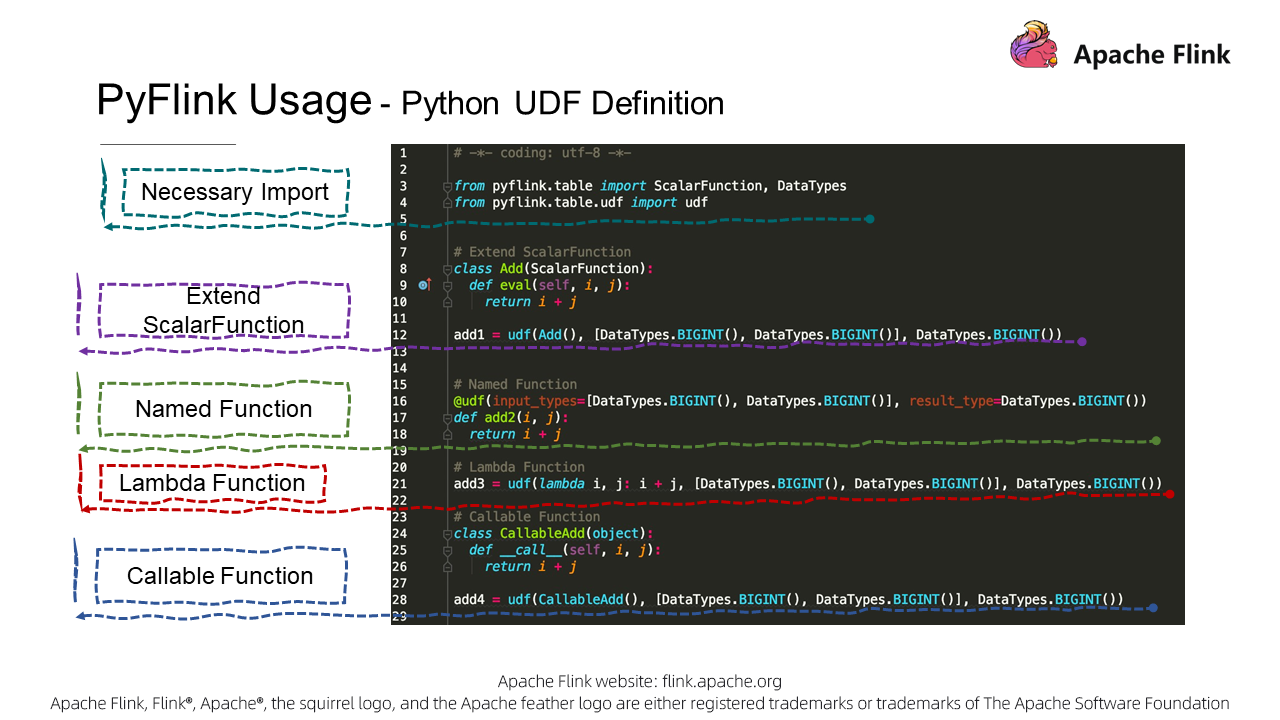

PyFlink APIs are fully aligned with Java Table APIs to support various relational and window operations. Some ease-of-use PyFlink APIs are even more powerful than SQL APIs, such as APIs specific to column operations. In addition to APIs, PyFlink also provides multiple ways to define Python UDFs.

ScalarFunction can be extended (for example, by adding metrics) to provide more auxiliary features. In addition, PyFlink user-defined functions support all the function definition styles that Python supports, such as lambda, named, and callable functions.

After defining these functions, we can tag them with PyFlink decorators and describe the input and output data types. In later versions, this could be streamlined further by deriving the types from Python type hints. The following example will help you better understand how to define a user-defined function.

In this example, we add up two numbers. First, import the necessary classes, and then define the function in each of the ways mentioned previously. This is pretty straightforward, so let's proceed to a practical case.
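For reference, the definition styles mentioned above can be sketched in plain Python. The snippet deliberately leaves out the PyFlink wrapping step so that it runs without a Flink installation: in PyFlink 1.10, each callable would additionally be passed to udf(...) together with its input and result types, as the ip_to_province() example later in this article does. The ScalarFunction class here is a local stand-in written for this sketch, not the real PyFlink class.

```python
# 1. Lambda function
add_lambda = lambda i, j: i + j


# 2. Named function
def add_named(i, j):
    return i + j


# 3. Callable object: any instance whose class defines __call__
class CallableAdd:
    def __call__(self, i, j):
        return i + j


add_callable = CallableAdd()


# 4. ScalarFunction-style class, evaluated through its eval() method.
#    This base class is a local stand-in for illustration only.
class ScalarFunction:
    def eval(self, *args):
        raise NotImplementedError


class Add(ScalarFunction):
    def eval(self, i, j):
        return i + j


results = [
    add_lambda(1, 2),
    add_named(1, 2),
    add_callable(1, 2),
    Add().eval(1, 2),
]
print(results)
```

All four compute the same thing; the class-based style is the one to reach for when the function needs extra behavior around the computation, such as metrics.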

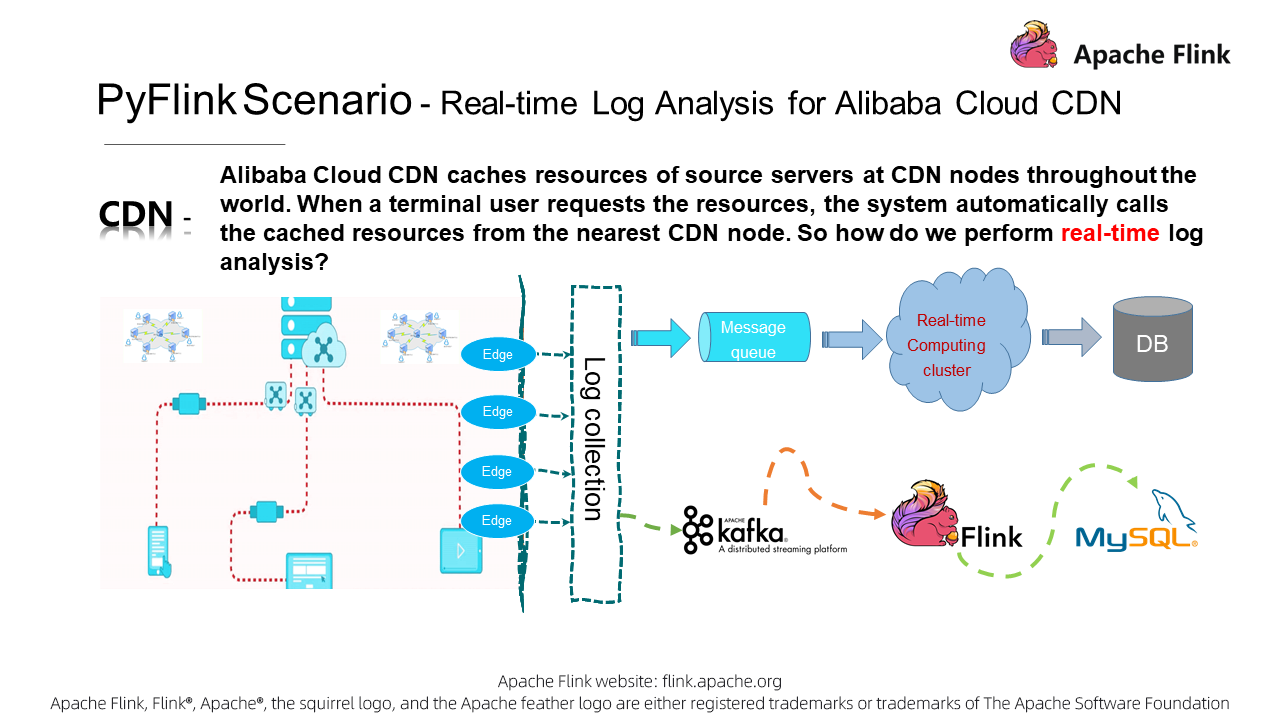

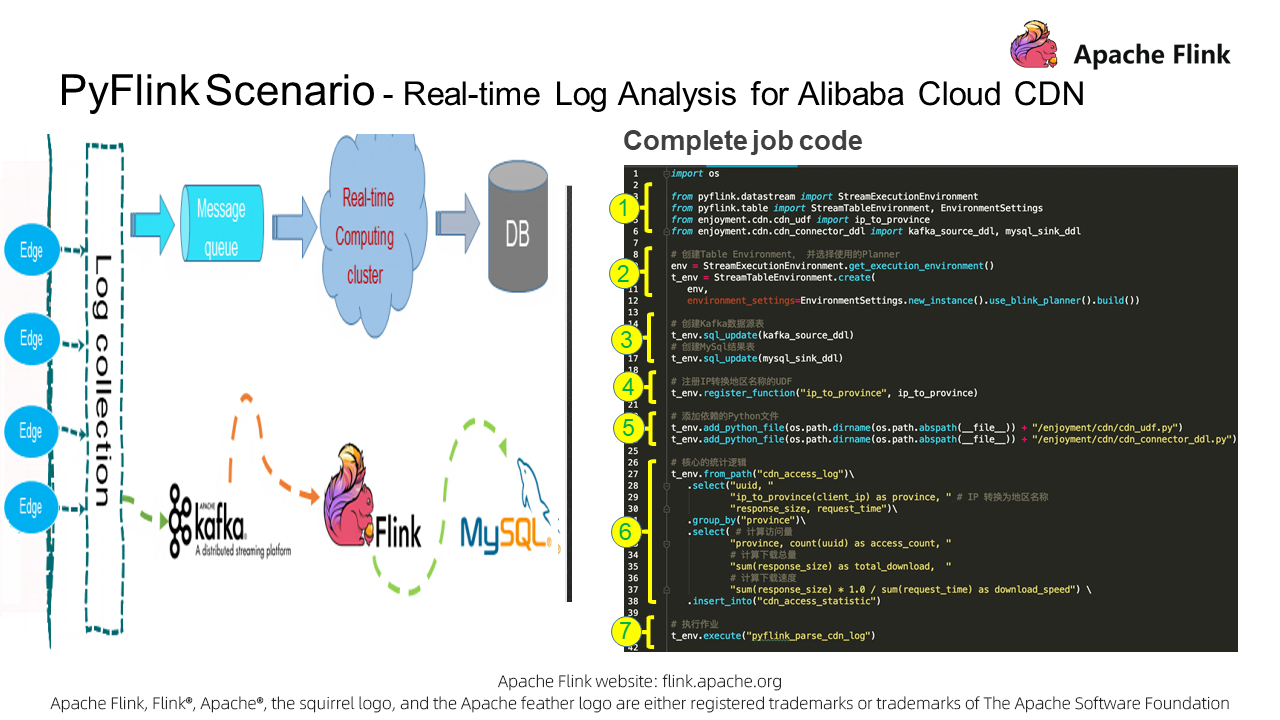

Here I take the real-time log analysis feature of Alibaba Cloud Content Delivery Network (CDN) as an example to show you how to use PyFlink to resolve practical business problems. Alibaba Cloud CDN is used to accelerate resource downloads. Generally, CDN logs are parsed in a common pattern: First, collect log data from edge nodes, and then save that data to message queues. Second, combine message queues and Realtime Compute clusters to perform real-time log analysis. Third, write the analysis results into the storage system. In this example, the architecture is instantiated as follows: Kafka is used as the message queue, Flink is used for real-time computing, and the final data is stored in a MySQL database.

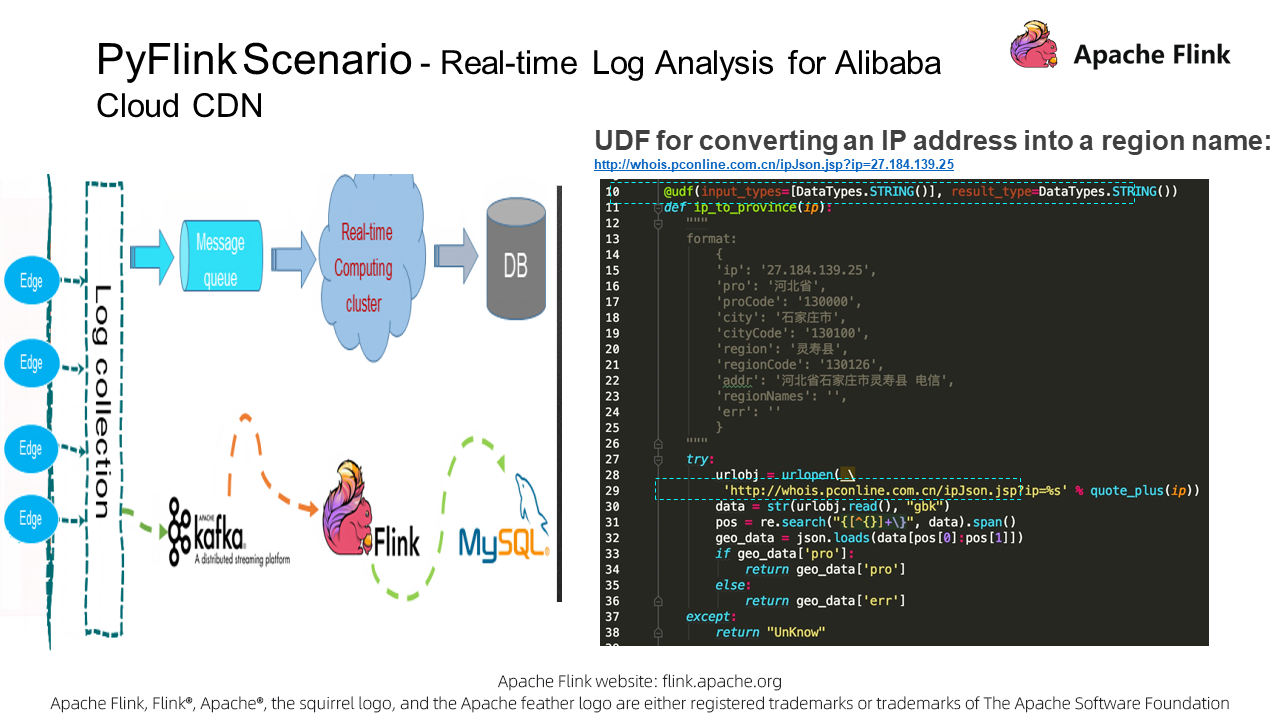

For convenience, we have simplified the actual business statistical requirements. In this example, statistics for page views, downloads, and download speeds are collected by region. In terms of data formats, we have selected only core fields. For example, uuid indicates a unique log ID, client_ip indicates the access source, request_time indicates the resource download duration, and response_size indicates the resource data size. Here, the original logs do not contain a region field despite the requirement to collect statistics by region. Therefore, we need to define a Python UDF to query the region of each data point according to the client_ip. Let's analyze how to define the user-defined function.

Here, the ip_to_province() user-defined function, a named function, is defined. The input is an IP address, and the output is a region name string. Both the input type and the output type are defined as strings. The query service here is for demonstration purposes only. You'll need to replace it with a reliable region query service in your production environment.

```python
import re
import json
from pyflink.table import DataTypes
from pyflink.table.udf import udf
from urllib.parse import quote_plus
from urllib.request import urlopen


@udf(input_types=[DataTypes.STRING()], result_type=DataTypes.STRING())
def ip_to_province(ip):
    """
    format:
        {
        'ip': '27.184.139.25',
        'pro': '河北省',
        'proCode': '130000',
        'city': '石家庄市',
        'cityCode': '130100',
        'region': '灵寿县',
        'regionCode': '130126',
        'addr': '河北省石家庄市灵寿县 电信',
        'regionNames': '',
        'err': ''
        }
    """
    try:
        urlobj = urlopen(
            'http://whois.pconline.com.cn/ipJson.jsp?ip=%s' % quote_plus(ip))
        data = str(urlobj.read(), "gbk")
        # Locate the JSON object embedded in the response body
        pos = re.search(r"\{[^{}]+\}", data).span()
        geo_data = json.loads(data[pos[0]:pos[1]])
        if geo_data['pro']:
            return geo_data['pro']
        else:
            return geo_data['err']
    except Exception:
        # Any network or parsing failure falls back to a placeholder value
        return "UnKnow"
```

So far, we have analyzed the requirements and defined the user-defined function, so now let's proceed to job development. According to the general job structure, we need to define a Source connector to read Kafka data, and a Sink connector to store the computing results in a MySQL database. Last, we also need to write the statistical logic.
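Before moving on to the job itself, the parsing step inside ip_to_province() can be checked offline, without calling the query service. The following is a minimal sketch: extract_province is a hypothetical helper name, and the sample response body, including its callback(...) wrapper, is made up for illustration rather than captured from the real service.

```python
import json
import re


def extract_province(body):
    """Pull the first flat JSON object out of a response body and read 'pro'."""
    match = re.search(r"\{[^{}]+\}", body)
    if match is None:
        # Mirrors the UDF's fallback value when nothing can be parsed
        return "UnKnow"
    geo_data = json.loads(match.group(0))
    # Same rule as the UDF: prefer the province, otherwise return the error text
    return geo_data["pro"] or geo_data["err"]


# Made-up response body; the wrapper around the JSON object is an assumption
sample_body = (
    'callback({"ip": "27.184.139.25", "pro": "河北省", '
    '"city": "石家庄市", "err": ""});'
)

province = extract_province(sample_body)
missing = extract_province("no json here")
print(province, missing)
```

Keeping the parsing logic testable on its own makes it easier to swap in a different region query service later, since only the network call changes.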

Note that PyFlink also supports SQL DDL statements, and we can use a simple DDL statement to define the Source connector. Be sure to set connector.type to kafka. You can also use a DDL statement to define the Sink connector, and set connector.type to jdbc. As you can see, the logic of connector definition is quite simple. Next, let's take a look at the core statistical logic.

kafka_source_ddl = """

CREATE TABLE cdn_access_log (

uuid VARCHAR,

client_ip VARCHAR,

request_time BIGINT,

response_size BIGINT,

uri VARCHAR

) WITH (

'connector.type' = 'kafka',

'connector.version' = 'universal',

'connector.topic' = 'access_log',

'connector.properties.zookeeper.connect' = 'localhost:2181',

'connector.properties.bootstrap.servers' = 'localhost:9092',

'format.type' = 'csv',

'format.ignore-parse-errors' = 'true'

)

"""

mysql_sink_ddl = """

CREATE TABLE cdn_access_statistic (

province VARCHAR,

access_count BIGINT,

total_download BIGINT,

download_speed DOUBLE

) WITH (

'connector.type' = 'jdbc',

'connector.url' = 'jdbc:mysql://localhost:3306/Flink',

'connector.table' = 'access_statistic',

'connector.username' = 'root',

'connector.password' = 'root',

'connector.write.flush.interval' = '1s'

)

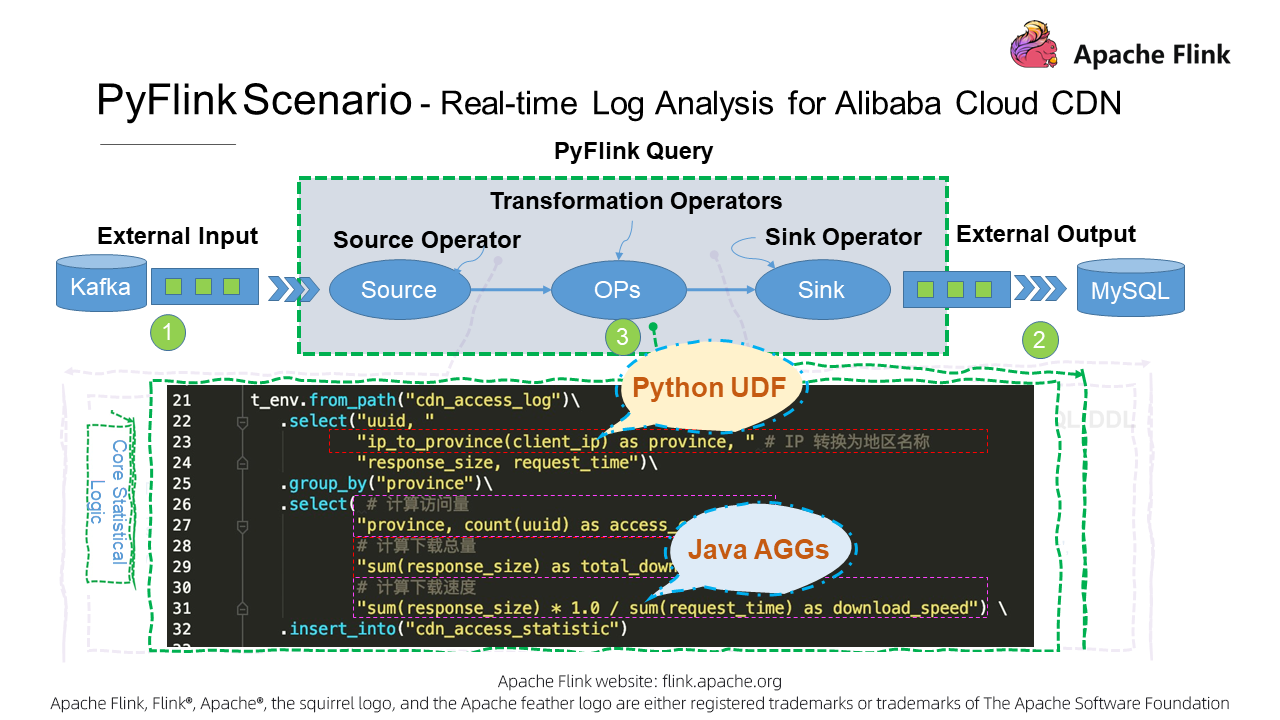

"""For this part, you'll need to first read data from the data source, and then convert the client_ip into a specific region through ip_to_province(ip). Second, collect statistics for page views, downloads, and download speeds by region. Last, store the statistical results are in the result table. In this statistical logic, we not only use the Python user-defined function, but also two built-in Java AGG functions of Flink: sum and count.

```python
# Core statistical logic
t_env.from_path("cdn_access_log")\
   .select("uuid, "
           "ip_to_province(client_ip) as province, "  # convert the IP address to a region name
           "response_size, request_time")\
   .group_by("province")\
   .select(  # compute the number of page views
           "province, count(uuid) as access_count, "
           # compute the total download volume
           "sum(response_size) as total_download, "
           # compute the download speed
           "sum(response_size) * 1.0 / sum(request_time) as download_speed") \
   .insert_into("cdn_access_statistic")
```

Now let's go through the code again. First, import the core dependencies, then create an execution environment, and finally set a planner. Currently, Flink supports the Flink and Blink planners. We recommend that you use the Blink planner.

Second, run DDL statements to register the Kafka source table and MySQL result table that we defined earlier. Third, register the Python UDF. Note that you can specify other dependency files of the UDF in the API request, and then submit them to the cluster together with the job. Finally, write the core statistical logic, and call the executor to submit the job. So far, we have created an Alibaba Cloud CDN real-time log analysis job. Now, let's check the actual statistical results.

```python
import os

from pyflink.datastream import StreamExecutionEnvironment
from pyflink.table import StreamTableEnvironment, EnvironmentSettings
from enjoyment.cdn.cdn_udf import ip_to_province
from enjoyment.cdn.cdn_connector_ddl import kafka_source_ddl, mysql_sink_ddl

# Create the Table Environment and choose the planner to use
env = StreamExecutionEnvironment.get_execution_environment()
t_env = StreamTableEnvironment.create(
    env,
    environment_settings=EnvironmentSettings.new_instance().use_blink_planner().build())

# Create the Kafka source table
t_env.sql_update(kafka_source_ddl)
# Create the MySQL result table
t_env.sql_update(mysql_sink_ddl)

# Register the UDF that converts an IP address to a region name
t_env.register_function("ip_to_province", ip_to_province)

# Add the Python files that the job depends on
t_env.add_python_file(
    os.path.dirname(os.path.abspath(__file__)) + "/enjoyment/cdn/cdn_udf.py")
t_env.add_python_file(os.path.dirname(
    os.path.abspath(__file__)) + "/enjoyment/cdn/cdn_connector_ddl.py")

# Core statistical logic
t_env.from_path("cdn_access_log")\
   .select("uuid, "
           "ip_to_province(client_ip) as province, "  # convert the IP address to a region name
           "response_size, request_time")\
   .group_by("province")\
   .select(  # compute the number of page views
           "province, count(uuid) as access_count, "
           # compute the total download volume
           "sum(response_size) as total_download, "
           # compute the download speed
           "sum(response_size) * 1.0 / sum(request_time) as download_speed") \
   .insert_into("cdn_access_statistic")

# Execute the job
t_env.execute("pyflink_parse_cdn_log")
```

We sent mock data to Kafka as CDN log data. On the right side of the figure below, statistics for page views, downloads, and download speed are collected by region in real time.
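To get a feel for what each output column means, the same grouping and aggregation can be reproduced in plain Python on a handful of rows. This is only an illustration: the rows and region labels are made up, the region is assumed to be already resolved (the step that ip_to_province() performs in the job), and nothing here touches Flink.

```python
from collections import defaultdict

# Made-up rows: (uuid, province, response_size, request_time)
rows = [
    ("u1", "A", 1000, 10),
    ("u2", "A", 3000, 30),
    ("u3", "B", 500, 20),
]

# Per-province accumulators: [access_count, total_download, total_request_time]
totals = defaultdict(lambda: [0, 0, 0])
for _uuid, province, response_size, request_time in rows:
    acc = totals[province]
    acc[0] += 1               # count(uuid)
    acc[1] += response_size   # sum(response_size)
    acc[2] += request_time    # sum(request_time)

# download_speed = sum(response_size) * 1.0 / sum(request_time)
stats = {
    province: (count, download, download * 1.0 / duration)
    for province, (count, download, duration) in totals.items()
}
print(stats)
```

Note that the speed is the ratio of the two sums, not the average of per-request speeds, which is exactly how the Table API expression in the job is written.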

In general, business development with PyFlink is simple. You can easily describe the business logic through SQL or Table APIs without understanding the underlying implementation. Let's take a look at the overall prospects for PyFlink.

The development of PyFlink has always been driven by the goals to make Flink features available to Python users and to integrate Python functions into Flink. According to the PyFlink roadmap shown below, we first established communication between PyVM and JVM. Then, in Flink 1.9, we provided Python Table APIs that opened existing Flink Table API features to Python users. In Flink 1.10, we prepared for integrating Python functions into Flink by doing the following: integrating Apache Beam, setting up the Python user-defined function execution environment, managing Python's dependencies on other class libraries, and defining user-defined function APIs for users to support Python user-defined functions.

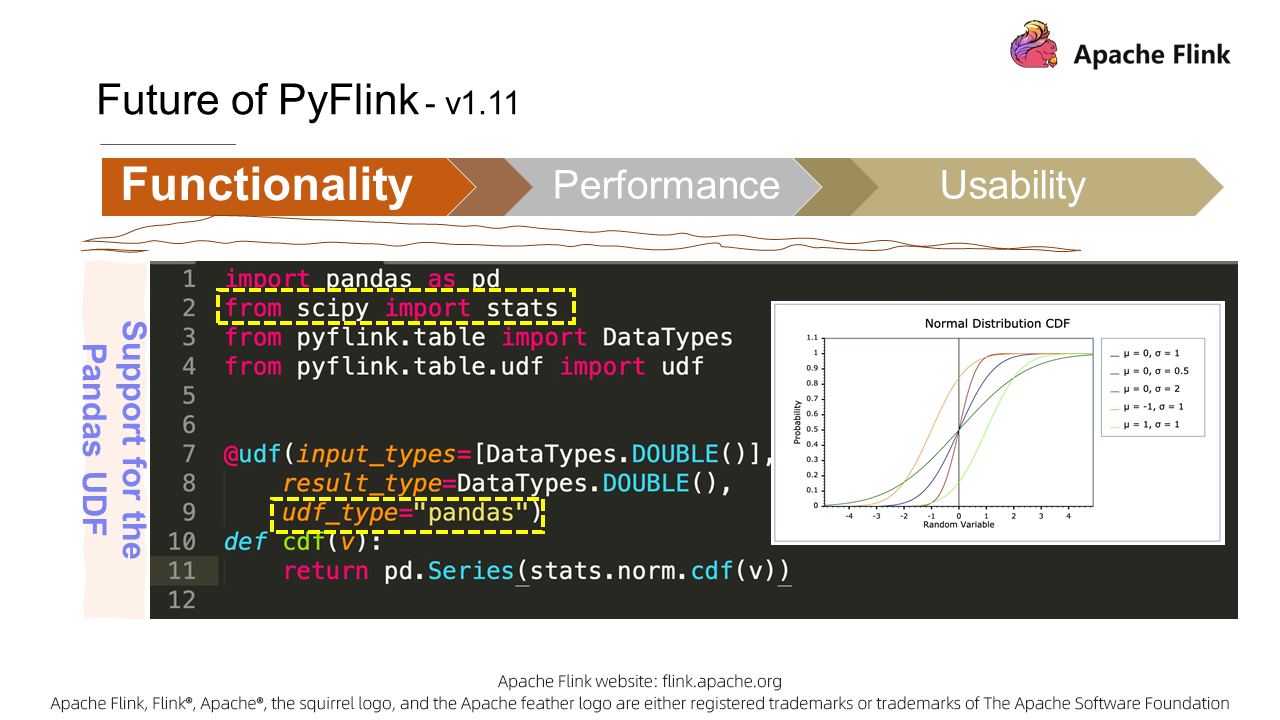

To extend the features of distributed Python, PyFlink will provide support for Pandas Series and DataFrame, so that users can directly use Pandas user-defined functions in PyFlink. In addition, Python user-defined functions will be enabled on SQL clients in the future, to make PyFlink easier to use. PyFlink will also provide the Python ML pipeline API to enable Python users to use PyFlink in machine learning. Monitoring Python user-defined function execution is critical in actual production. Therefore, PyFlink will further provide metric management for Python user-defined functions. These features will be incorporated in Flink 1.11.

However, these are only a part of PyFlink's future development plans. We have more work to do in the future, such as optimizing PyFlink's performance, providing graph computing APIs, and supporting Pandas' native APIs for Pandas on Flink. We will continuously make the existing features of Flink available to Python users, and integrate Python's powerful features into Flink, to achieve our initial goal of expanding the Python ecosystem.

Let's quickly look at the key points of PyFlink in the upcoming version Flink 1.11.

Now, let's take a closer look at the core features of PyFlink based on Flink 1.11. We are working hard on the functionality, performance, and ease-of-use of PyFlink, and will provide support for Pandas user-defined functions in PyFlink 1.11. Thus, Pandas' practical class library features can be used directly in PyFlink, such as the cumulative distribution function.

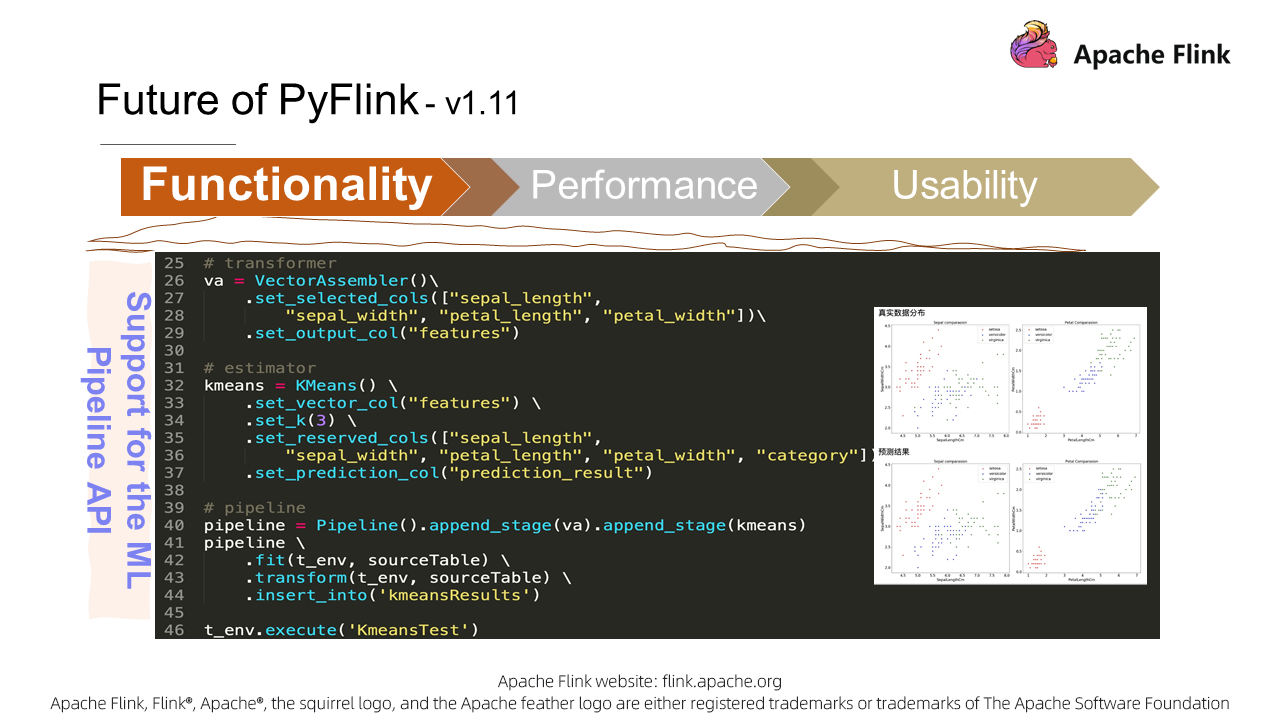

We will also integrate the ML Pipeline API in PyFlink to meet your business needs in machine learning scenarios. Here is an example of using PyFlink to implement the KMeans technique.

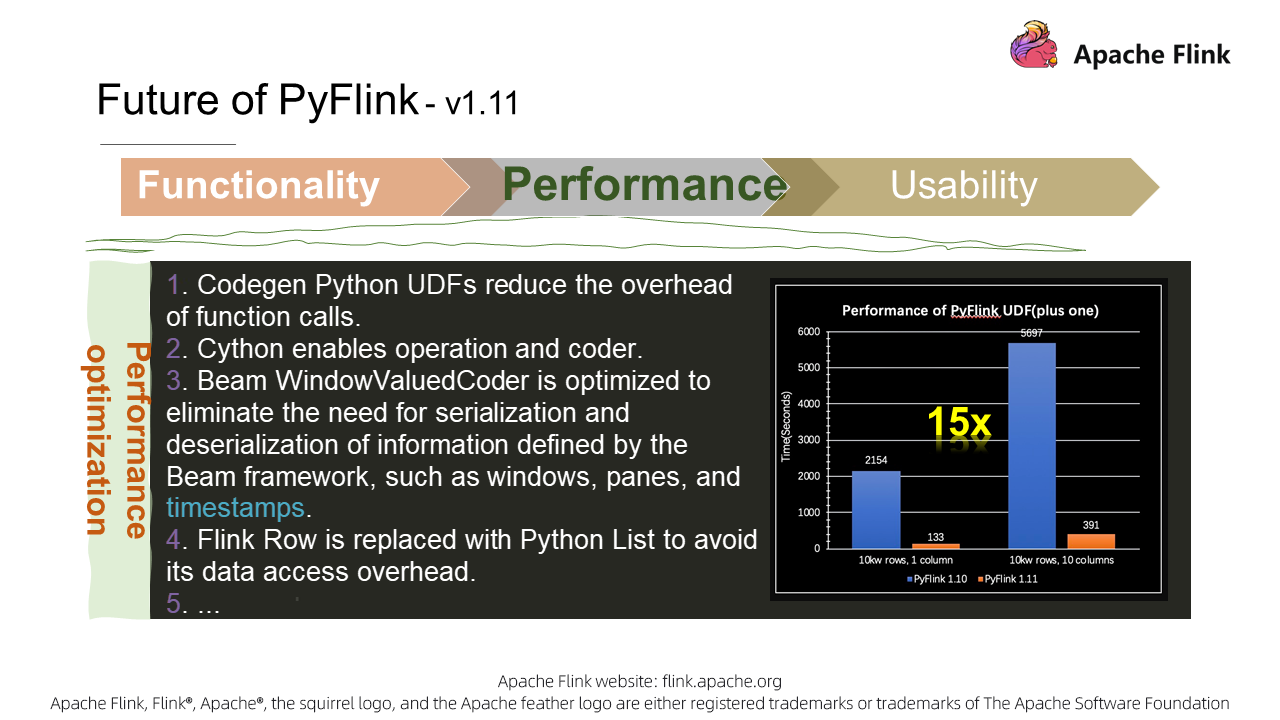

We will also put more effort into improving PyFlink's performance. We will attempt to improve the performance of Python UDF execution through Codegen, Cython, and optimized serialization and deserialization. A preliminary comparison shows that PyFlink 1.11's performance will be approximately 15-fold better than that of PyFlink 1.10.

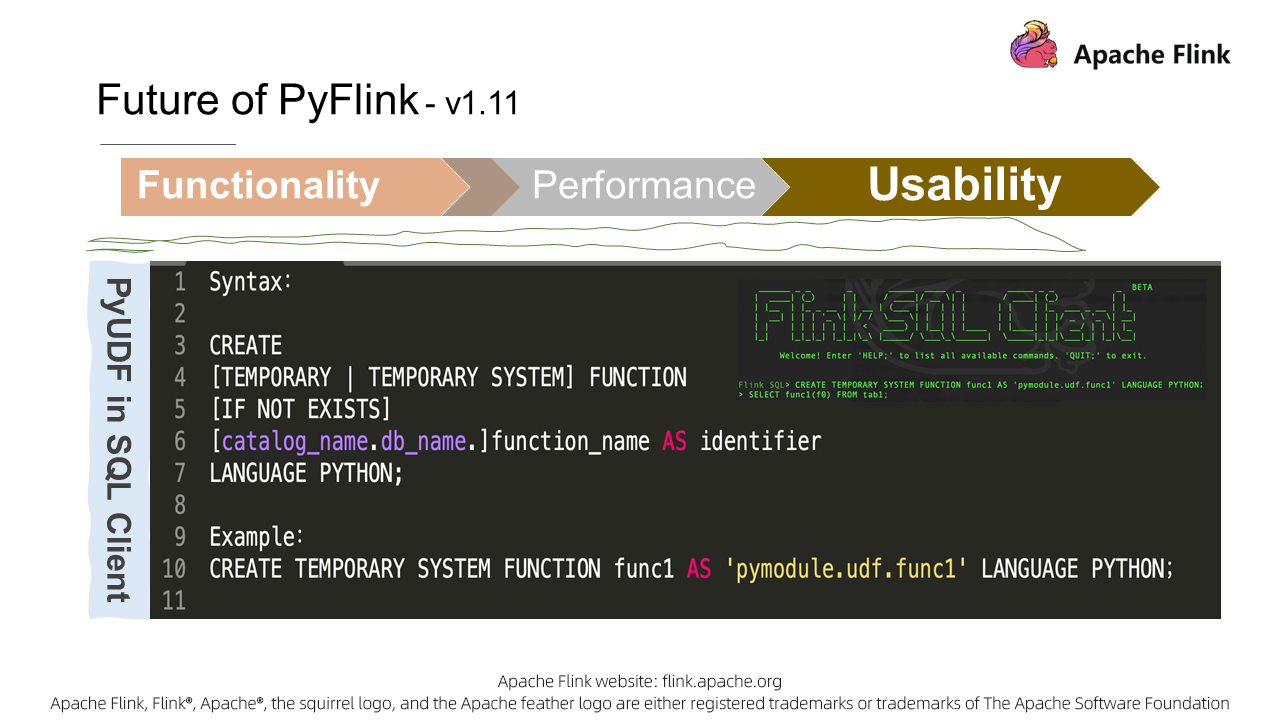

To make PyFlink easier to use, we will provide support for Python user-defined functions in SQL DDLs and SQL clients. This will enable you to use PyFlink through various channels.

We have already defined PyFlink, and described its significance, API architecture, and user-defined function architecture, as well as the trade-offs behind the architecture and the benefits of it. We have gone through the CDN case, PyFlink roadmap, and key points of PyFlink in Flink 1.11. But, what else do we need to know?

Let's take a final look at PyFlink's future. Driven by the mission of making Flink features available to Python users, and running Python functions on Flink, what are the prospects for PyFlink? As you may know, PyFlink is a part of Apache Flink, which involves the Runtime and API layers.

How will PyFlink develop at these two layers? In terms of Runtime, PyFlink will build general gRPC services (such as Control, Data, and State) for communications between JVM and PyVM. In this framework, Java operators for Python user-defined functions will be abstracted, and Python execution containers will be built to support Python execution in multiple ways. For example, PyFlink can run as processes, in Docker containers, and even in external service clusters. In particular, when running in external service clusters, unlimited extension capabilities are enabled in the form of sockets. This all plays a critical role in subsequent Python integration.

In terms of APIs, we will enable Python-based APIs in Flink to fulfill our mission. This also relies on the Py4J VM communication framework. PyFlink will gradually support more APIs, including Java APIs in Flink (such as the Python Table API, UDX, ML Pipeline, DataStream, CEP, Gelly, and State APIs) and the Pandas APIs that are most popular among Python users. Based on these APIs, PyFlink will continue to integrate with other ecosystems for easy development; for example, Notebook, Zeppelin, Jupyter, and Alink, which is Alibaba's open-source machine learning platform built on Flink. As of now, PyAlink has fully incorporated the features of PyFlink. PyFlink will also be integrated with existing AI system platforms, such as the well-known TensorFlow.

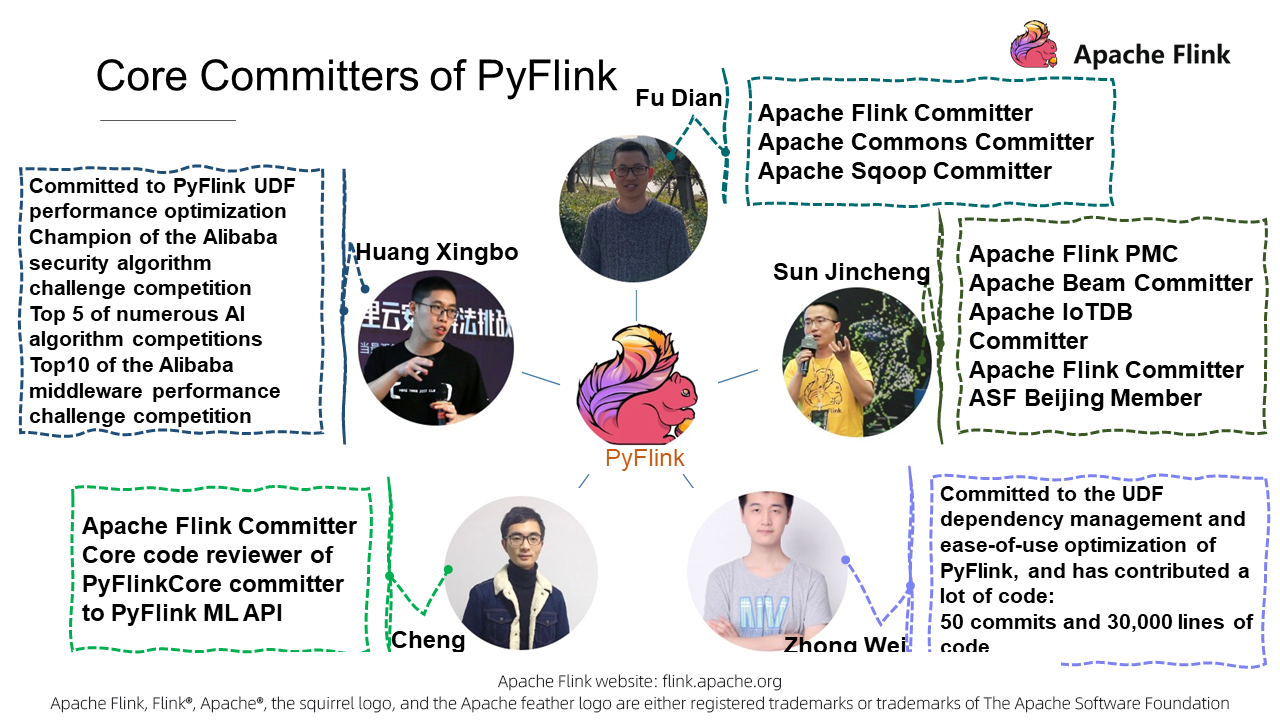

To this end, you will see that mission-driven forces will keep PyFlink alive. Again, PyFlink's mission is to make Flink features available for Python users, and run Python analysis and computing functions on Flink. At present, PyFlink's core committers are working hard in the community with this mission.

Finally, here are the core committers for PyFlink.

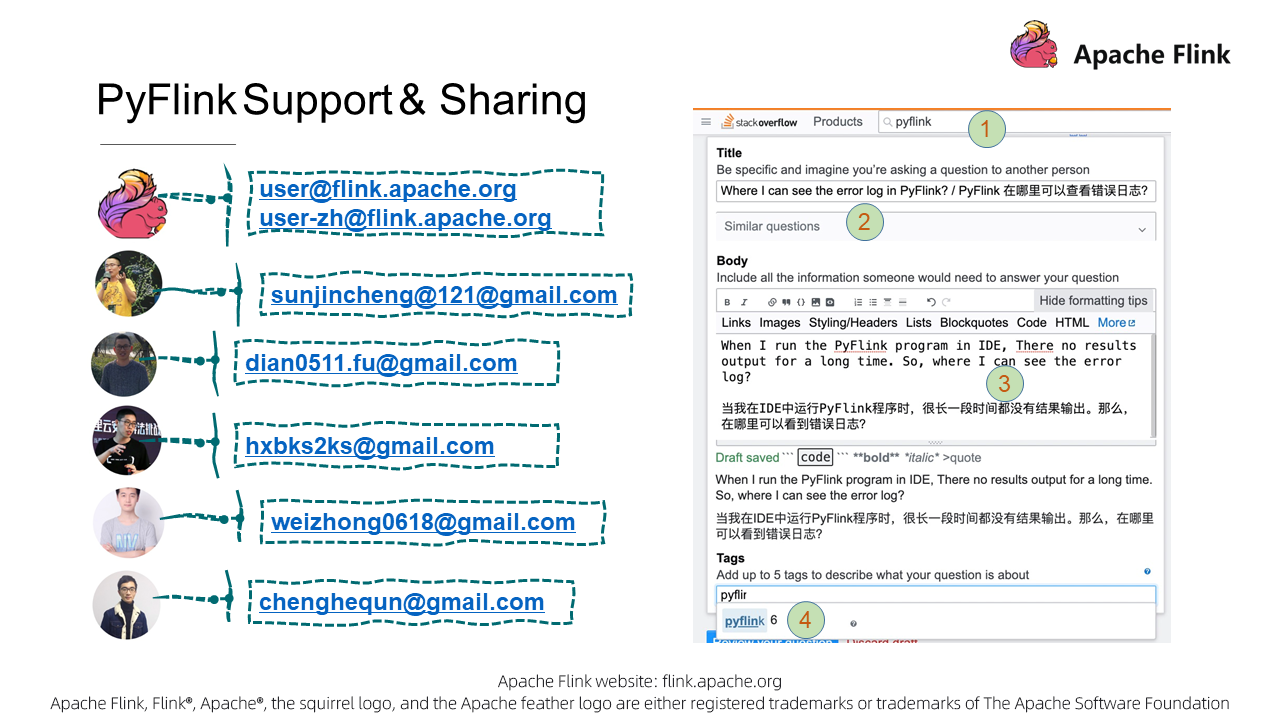

The last committer is me. My introduction is given at the end of this post. If you have any questions about PyFlink, don't hesitate to contact one of our team of committers.

For general problems, we recommend that you send an email to the Flink user mailing list, so that the answers can be shared. You are encouraged to email our committers directly about urgent problems. However, so that answers accumulate and can be shared effectively, you can also ask questions on Stack Overflow. Before raising your question, please search to see whether it has already been answered. If not, describe the question clearly. Finally, remember to add PyFlink tags to your questions, so we can reply promptly.

In this post, we have analyzed PyFlink in depth. In the PyFlink API architecture, Py4J is used for communications between PyVM and JVM, and semantic consistency is kept between Python and Java APIs in their design. In the Python user-defined function architecture, Apache Beam's Portability Framework has been integrated to provide efficient and stable Python user-defined functions. Also, the thoughts behind the architecture, technical trade-offs, and advantages of the existing architecture have been interpreted.

We then introduced applicable business scenarios for PyFlink, and used the real-time log analysis for Alibaba Cloud CDN as an example to show how PyFlink actually works.

After that, we looked at the PyFlink roadmap and previewed the key points of PyFlink in Flink 1.11. It is expected that the performance of PyFlink 1.11 will be approximately 15-fold better than that of PyFlink 1.10. Finally, we analyzed PyFlink's mission: making Flink features available to Python users, and running analysis and computing functions of Python on Flink.

The author of this article, Sun Jincheng, joined Alibaba in 2011. Sun has led the development of many internal core systems during his nine years of work at Alibaba, such as Alibaba Group's behavioral log management system, Alilang, cloud transcoding system, and document conversion system. He got to know the Apache Flink community in early 2016. At first, he participated in community development as a developer. Later, he led the development of specific modules, and then took charge of the construction of the Apache Flink Python API (PyFlink). He is currently a PMC member of Apache Flink and ALC (Beijing) and a committer for Apache Flink, Apache Beam, and Apache IoTDB.
