This topic describes how to add a user-created Spark SQL data source to Quick BI so that Quick BI can connect to a Spark SQL database (version 3.1.2) over the public network or an Alibaba Cloud VPC. After the connection is established, you can analyze and visualize the data in Quick BI.

Prerequisites

Ensure your network is connected:

To connect Quick BI to the Spark SQL database (version 3.1.2) over the public network, add the Quick BI IP addresses to the database whitelist. For more information, see how to add a security group rule.

For internal network connections, use one of the following methods:

If the Spark SQL database is hosted on Alibaba Cloud ECS, establish a connection via Alibaba Cloud VPC.

Alternatively, deploy a jump server and create an SSH tunnel to access the database.

Retrieve the username and password for the user-created Spark SQL database (version 3.1.2).
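Before configuring the data source, it can help to confirm that the database port is reachable from a machine on the same network path. The following is a minimal sketch that uses only the Python standard library. The function name `is_port_reachable` is hypothetical, and the address and port in the comment are placeholders, not values from this topic. A successful TCP connection only shows that the port is open; it does not verify the whitelist entry for Quick BI or the database credentials.

```python
import socket


def is_port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        # create_connection resolves the host name and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Example call with a placeholder address and port:
# is_port_reachable("203.0.113.10", 10000)
```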

Limits

The Spark SQL database must be version 3.1.2, and the Hive MetaStore used for underlying storage must be Hive 2.0 or later.
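The two limits can be expressed as a small check, for example in a deployment script. This is an illustrative sketch only: the function names are hypothetical, and it assumes that both versions are available as plain dotted numeric strings such as `3.1.2` or `2.3.9`, without suffixes.

```python
REQUIRED_SPARK_VERSION = "3.1.2"
MIN_HIVE_METASTORE_VERSION = (2, 0)


def parse_major_minor(version: str) -> tuple:
    """Turn a dotted version string such as '2.3.9' into (major, minor)."""
    parts = tuple(int(part) for part in version.strip().split("."))
    # Pad with zeros so that '2' is treated as 2.0.
    return (parts + (0, 0))[:2]


def meets_limits(spark_version: str, hive_metastore_version: str) -> bool:
    """Check the version limits: Spark SQL 3.1.2 and Hive MetaStore 2.0 or later."""
    spark_ok = spark_version.strip() == REQUIRED_SPARK_VERSION
    hive_ok = parse_major_minor(hive_metastore_version) >= MIN_HIVE_METASTORE_VERSION
    return spark_ok and hive_ok
```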

Procedure

Log on to the Quick BI console.

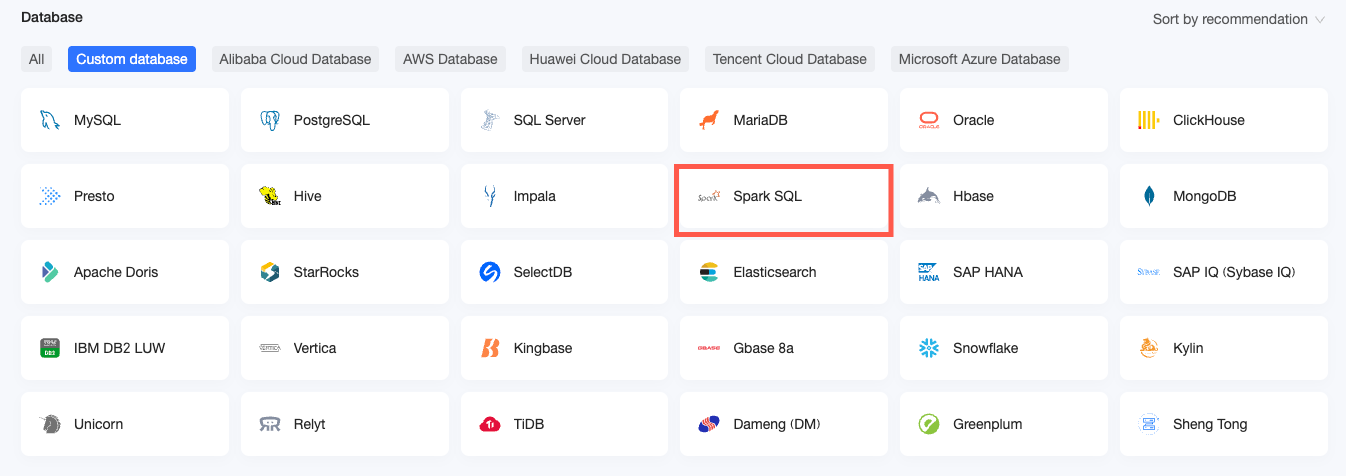

Follow the steps depicted in the figure below to add a user-created Spark SQL data source.

Go to the Create Data Source page from the data source creation entry.

On the User-created Data Source tab, select the Spark SQL data source.

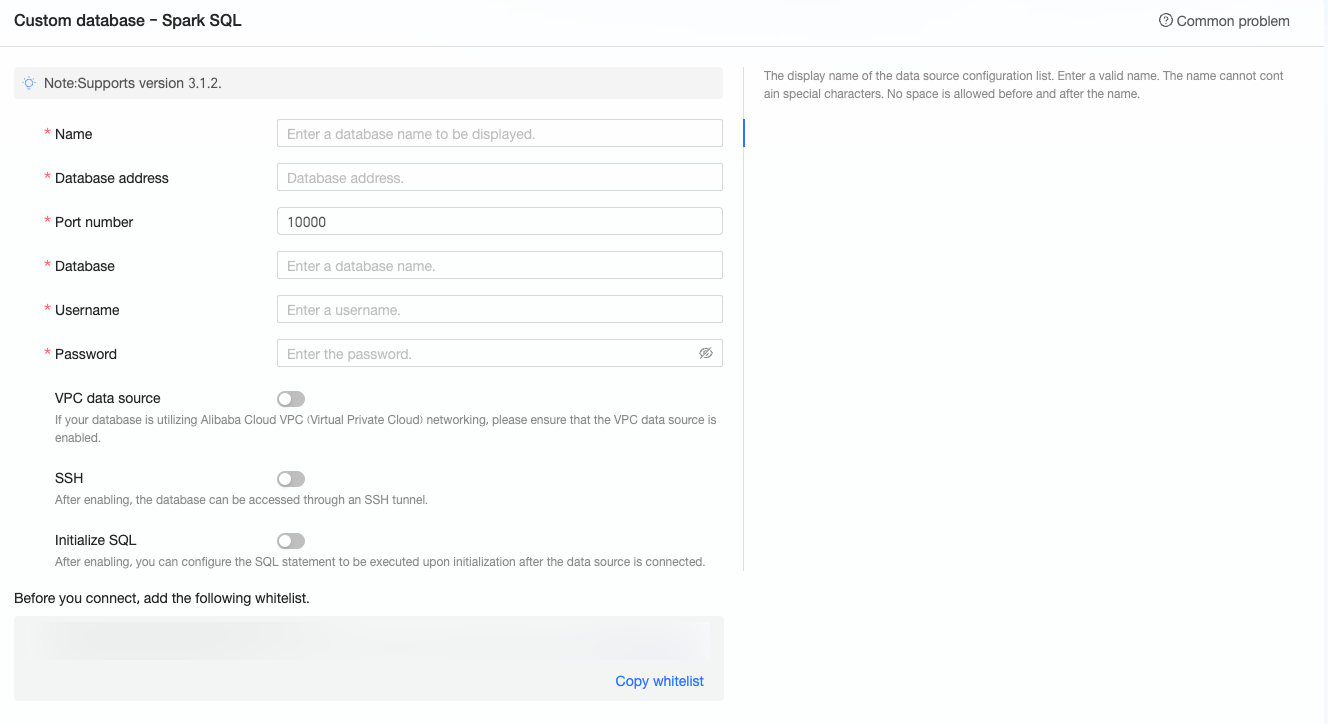

In the Configure Connection dialog box, configure the connection according to your business needs.

Name

Description

Display Name

The display name in the data source configuration list.

Enter a name that contains no special characters and has no leading or trailing spaces.

Database Address

The address where the Spark SQL database is deployed, specified as an IP address or a domain name.

Port

The corresponding port number of the database.

Database

The name of the database that was defined when the Spark SQL database was deployed.

Username and Password

The username and password used to log on to the Spark SQL database. Ensure that the user has create, insert, update, and delete permissions on the tables in the database.

VPC Data Source

If the database is deployed in an Alibaba Cloud VPC, enable VPC Data Source.

If the database is deployed on Alibaba Cloud ECS, select "Instance". If accessed through Classic Load Balancer (CLB), select "CLB".

Instance

Purchaser Accessid: The AccessKey ID of the purchaser of this instance.

For more information, see obtain AccessKey.

Note: Ensure that the AccessKey ID has Read permission on the destination instance. If it also has Write permission on the corresponding security group, the system automatically adds the whitelist entry. Otherwise, you must add it manually. For more information, see create a custom policy.

Purchaser Accesskey: The AccessKey Secret of the purchaser of this instance.

For more information, see obtain AccessKey.

Instance ID: The ECS instance ID. Log on to the ECS console and obtain the instance ID on the instance tab.

For more information, see view instance information.

Area: The region where the ECS instance is located. Log on to the ECS console and obtain the region in the upper left corner.

For more information, see view instance information.

CLB

Purchaser Accessid: The AccessKey ID of the CLB purchaser. Ensure that the account has Read permission on the CLB. Log on to the RAM console to obtain the AccessKey ID.

For more information, see view RAM user's AccessKey information.

Purchaser Accesskey: The AccessKey Secret corresponding to the AccessKey ID. Log on to the RAM console to obtain the AccessKey Secret.

For more information, see view RAM user's AccessKey information.

Instance ID: The instance ID of the CLB. Log on to the Server Load Balancer console and obtain the instance ID in the instance management list.

Area: The region where the CLB instance is located. Log on to the Server Load Balancer console and obtain the region in the upper left corner of the instance management page.

SSH

You can deploy a jump server and access the database over an SSH tunnel. Obtain the jump server information from O&M personnel or your system administrator.

If you select SSH, configure the following parameters:

SSH Host: Enter the IP address of the jump server.

SSH Username: The username for logging on to the jump server.

SSH Password: The password corresponding to the username for logging on to the jump server.

SSH Port: The port of the jump server. Default value: 22.

For more information, see log on to a Linux instance with password authentication.

Initialize SQL

When this option is enabled, you can specify SQL statements that run each time a data source connection is initialized.

Only SET statements are allowed. Separate multiple statements with semicolons.
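The Initialize SQL rule (SET statements only, separated by semicolons) can be checked before the text is pasted into the dialog box. The following sketch is an assumption about how such a pre-check might look, not how Quick BI validates the field. The function names are hypothetical, and the split is naive: a semicolon inside a quoted value would be treated as a separator.

```python
def split_statements(init_sql: str) -> list:
    """Split the text on semicolons and drop empty fragments."""
    return [part.strip() for part in init_sql.split(";") if part.strip()]


def non_set_statements(init_sql: str) -> list:
    """Return every statement whose first keyword is not SET."""
    return [
        statement
        for statement in split_statements(init_sql)
        if statement.split()[0].upper() != "SET"
    ]


# Two Spark SQL settings used purely as sample input.
sample = "SET spark.sql.shuffle.partitions=200; SET spark.sql.adaptive.enabled=true;"
rejected = non_set_statements(sample)  # an empty list means every statement is a SET
```

An empty result indicates that the input follows the rule. Any statement that is returned, such as a DROP or INSERT, should be removed before the data source is saved.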

Click Connection Test to verify the data source connectivity.

Once the test is successful, click OK to finalize the addition of the data source.

The newly created data source will now be listed among the available data sources.
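If the connection test fails, it can be useful to try the same Database Address, Port, and Database values from another JDBC client. The sketch below assembles a HiveServer2-style JDBC URL from those three fields. It assumes that the Spark SQL database is exposed through the Spark Thrift Server, which accepts `jdbc:hive2://` URLs. The connection string that Quick BI builds internally is not described in this topic, and the function name and sample values are hypothetical.

```python
def build_jdbc_url(address: str, port: int, database: str) -> str:
    """Build a jdbc:hive2 URL from the Database Address, Port, and Database fields."""
    if not address or not database:
        raise ValueError("address and database must not be empty")
    if not 1 <= port <= 65535:
        raise ValueError("port must be between 1 and 65535")
    return f"jdbc:hive2://{address}:{port}/{database}"


# Placeholder values; replace them with the values entered in the dialog box.
url = build_jdbc_url("203.0.113.10", 10000, "default")
```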

What to do next

Once the data source is established, you can proceed to create datasets and conduct data analysis.

To incorporate data tables from the Spark SQL database or custom SQL into Quick BI, refer to create and manage datasets.

To add visualization charts and analyze data, see create a dashboard and visualization chart overview.

To delve deeper into data analysis, see drill settings and display.