This topic describes how to access a Hologres database from MaxCompute.

Background information

Hologres is an interactive analytics service for real-time data processing provided by Alibaba Cloud. Hologres allows you to write and query real-time data with high concurrency and analyze data with high performance. You can use Hologres and MaxCompute to build a federated data warehouse solution, thereby eliminating the need for data migration and combining real-time and offline data processing. The solution is suitable for scenarios such as large-scale offline analysis, real-time operational analysis, and interactive querying. To implement the solution, you can use the following methods to access Hologres from MaxCompute:

Prerequisites

The following MaxCompute configurations are complete:

MaxCompute is activated and a MaxCompute project is created.

For information about how to activate MaxCompute, see Activate MaxCompute and DataWorks. For information about how to create a MaxCompute project, see Create a MaxCompute project.

The MaxCompute client is installed.

For more information, see MaxCompute client (odpscmd).

A development environment is set up for Spark on MaxCompute.

In this topic, the Linux operating system and the Spark-2.4.5 package are used. For more information, see Set up a Linux development environment.

DataWorks is activated.

For more information, see Activate DataWorks.

A Hologres instance is purchased and connected to HoloWeb.

For more information, see Purchase a Hologres instance and Connect to HoloWeb and perform queries.

The PostgreSQL Java Database Connectivity (JDBC) driver is downloaded.

In this topic, the

postgresql-42.2.16.jarpackage is used. The package is saved in the /home/postgreSQL path on the Linux operating system. To download a PostgreSQL JDBC driver, go to the official download page.

Use an external table

In the Hologres console, select the instance that you purchased and create a Hologres database named

mc_db_holo.For information about how to create a Hologres database, see Create a database.

In the HoloWeb console, execute the following statement in the

mc_db_holodatabase to create a table namedmc_sql_holoand insert data into the table.For information about how to create a Hologres table, see CREATE TABLE.

CREATE TABLE mc_sql_holo( id INTEGER, name TEXT ); INSERT INTO mc_sql_holo VALUES (1,'zhangsan'), (2,'lisi'), (3,'wangwu') ;In the Resource Access Management (RAM) console, create a RAM role named

AliyunOdpsHoloRoleand modify the trust policy.For more information, see the "Create a Hologres external table in STS mode" section in the Hologres external tables topic.

NoteIn this example, the RAM role is created for a trusted Alibaba Cloud account.

Grant the RAM role

AliyunOdpsHoloRolethe access to the Hologres instance.For more information, see the "Create a Hologres external table in STS mode" section in the Hologres external tables topic.

On the MaxCompute client, execute the following statement to create an external table named

mc_externaltable_holo:create external table if not exists mc_externaltable_holo ( id int , name string ) stored by 'com.aliyun.odps.jdbc.JdbcStorageHandler' with serdeproperties ( 'odps.properties.rolearn'='acs:ram::13969******5947:role/aliyunodpsholorole') LOCATION 'jdbc:postgresql://hgprecn-cn-2r42******-cn-hangzhou-internal.hologres.aliyuncs.com:80/mc_db_holo?currentSchema=public&useSSL=false&table=mc_sql_holo/' TBLPROPERTIES ( 'mcfed.mapreduce.jdbc.driver.class'='org.postgresql.Driver', 'odps.federation.jdbc.target.db.type'='holo', 'odps.federation.jdbc.colmapping'='id:id,name:name' );NoteFor information about the parameters in the statement, see Hologres external tables.

On the MaxCompute client, execute the following statement to obtain data from the external table:

set odps.sql.split.hive.bridge=true; set odps.sql.hive.compatible=true; select * from mc_externaltable_holo limit 10;NoteFor information about the parameters in SET operations, see SET operations.

The following result is returned:

+----+----------+ | id | name | +----+----------+ | 1 | zhangsan | | 2 | lisi | | 3 | wangwu | +----+----------+On the MaxCompute client, execute the following statement to insert data to the external table:

set odps.sql.split.hive.bridge=true; set odps.sql.hive.compatible=true; insert into mc_externaltable_holo values (4,'alice');In the HoloWeb console, query data in the Hologres table

mc_sql_holo. The data you inserted in the previous step is contained in the table.select * from mc_sql_holo;

Use Spark on MaxCompute in local mode

In the HoloWeb console, execute the following statement in the

mc_db_holodatabase to create a table namedmc_jdbc_holo.For information about how to create a Hologres table, see CREATE TABLE.

CREATE TABLE mc_jdbc_holo( id INTEGER, name TEXT );Go to the /home/pythoncode path on the Linux operating system and create a Python script named

holo_local.py.Sample Python script:

from pyspark.sql import SparkSession spark = SparkSession \ .builder \ .appName("Spark_local") \ .config("spark.eventLog.enabled","false") \ .getOrCreate() jdbcDF = spark.read.format("jdbc"). \ options( url='jdbc:postgresql://hgprecn-cn-2r42******-cn-hangzhou.hologres.aliyuncs.com:80/mc_db_holo', dbtable='mc_jdbc_holo', user='LTAI****************', password='********************', driver='org.postgresql.Driver').load() jdbcDF.printSchema()Parameters:

url: the JDBC URL that contains the

jdbc:postgresql://prefix.hgprecn-cn-2r42******-cn-hangzhou.hologres.aliyuncs.com:80: the endpoint used to access the Hologres instance over the Internet. For information about how to obtain the endpoint, see Instance configurations.

mc_db_holo: the name of the Hologres database to which you want to connect. In this example, the Hologres database name is mc_db_holo.

dbtable: the name of the Hologres table from which you want to read data or to which you want to write data. In this example, the Hologres table name is mc_db_holo.

user: the AccessKey ID of an authorized Alibaba Cloud account or RAM user. You can obtain the AccessKey ID on the AccessKey Pair page.

password: the AccessKey secret corresponding to the AccessKey ID that you specified in the user parameter.

driver: the PostgreSQL driver. Set this parameter to org.postgresql.Driver.

Run the following spark-submit command from a directory on the Linux operating system to submit a job in local mode:

spark-submit --master local --driver-class-path /home/postgreSQL/postgresql-42.2.16.jar --jars /home/postgreSQL/postgresql-42.2.16.jar /home/pythoncode/holo_local.pyView the output logs. If the printed schema information is the same as that of the

mc_jdbc_holotable in Hologres, access to Hologres from MaxCompute is successful.

Use Spark on MaxCompute in cluster mode

In the HoloWeb console, execute the following statement in the

mc_db_holodatabase to create a table namedmc_jdbc_holo.For information about how to create a Hologres table, see CREATE TABLE.

CREATE TABLE mc_jdbc_holo( id INTEGER, name TEXT );Go to the /home/pythoncode path on the Linux operating system and create a Python script named

holo_yarncluster.py.Sample Python script:

from pyspark.sql import SparkSession spark = SparkSession \ .builder \ .appName("Spark_yarn") \ .getOrCreate() jdbcDF = spark.read.format("jdbc"). \ options( url='jdbc:postgresql://hgprecn-cn-2r42******-cn-hangzhou-internal.hologres.aliyuncs.com:80/mc_db_holo', dbtable='mc_jdbc_holo', user='LTAI****************', password='********************', driver='org.postgresql.Driver').load() jdbcDF.printSchema()Parameters:

url: the JDBC URL that contains the jdbc:postgresql:// prefix.

hgprecn-cn-2r42******-cn-hangzhou-internal.hologres.aliyuncs.com:80: the endpoint used to access the Hologres instance over the classic network. For information about how to obtain the endpoint, see Instance configurations.

mc_db_holo: the name of the Hologres database to which you want to connect. In this example, the Hologres database name is mc_db_holo.

dbtable: the name of the Hologres table from which you want to read data or to which you want to write data. In this example, the Hologres table name is mc_db_holo.

user: the AccessKey ID of an authorized Alibaba Cloud account or RAM user. You can obtain the AccessKey ID on the AccessKey Pair page.

password: the AccessKey secret corresponding to the AccessKey ID that you specified in the user parameter.

driver: the PostgreSQL driver. Set this parameter to org.postgresql.Driver.

Go to the /home/spark2.4.5/spark-2.4.5-odps0.33.2/conf directory and modify the spark-defaults.conf file.

# Modify the following configurations: spark.hadoop.odps.project.name = <MaxCompute_Project_Name> spark.hadoop.odps.end.point = <Endpoint> spark.hadoop.odps.runtime.end.point = <VPC_Endpoint> spark.hadoop.odps.access.id = <AccessKey_ID> spark.hadoop.odps.access.key = <AccessKey_Secret> spark.hadoop.odps.cupid.trusted.services.access.list = <Hologres_Classic_Network> # Retain the following configurations: spark.master = yarn-cluster spark.driver.cores = 2 spark.driver.memory = 4g spark.dynamicAllocation.shuffleTracking.enabled = true spark.dynamicAllocation.shuffleTracking.timeout = 20s spark.dynamicAllocation.enabled = true spark.dynamicAllocation.maxExecutors = 10 spark.dynamicAllocation.initialExecutors = 2 spark.executor.cores = 2 spark.executor.memory = 8g spark.eventLog.enabled = true spark.eventLog.overwrite = true spark.eventLog.dir = odps://admin_task_project/cupidhistory/sparkhistory spark.sql.catalogImplementation = hive spark.sql.sources.default = hiveParameters:

MaxCompute_Project_Name: the name of the MaxCompute project that you created.

To obtain the project name, log on to the MaxCompute console, select a region in the upper-left corner, and then choose Workspace > Projects in the left-side navigation pane.

access_id: the AccessKey ID used to access the MaxCompute project.

You can obtain the AccessKey ID on the AccessKey Pair page.

AccessKey Secret: the AccessKey secret corresponding to the AccessKey ID that you specified in the access_id parameter.

You can obtain the AccessKey secret on the AccessKey Pair page.

Endpoint: the endpoint used to access the MaxCompute project over the Internet.

For information about the public endpoint of each region, see Endpoints in different regions (Internet).

VPC_Endpoint: the endpoint used to access the Virtual Private Cloud (VPC) where the MaxCompute project resides.

For information about the VPC endpoint of each region, see Endpoints in different regions (VPC).

Hologres_Classic_Network: the endpoint used to access Hologres over the classic network. This configuration specifies a network policy for connecting to the Hologres instance in the MaxCompute sandbox environment. Otherwise, MaxCompute cannot access external services.

Run the following spark-submit command from a directory on the Linux operating system to submit a job in cluster mode:

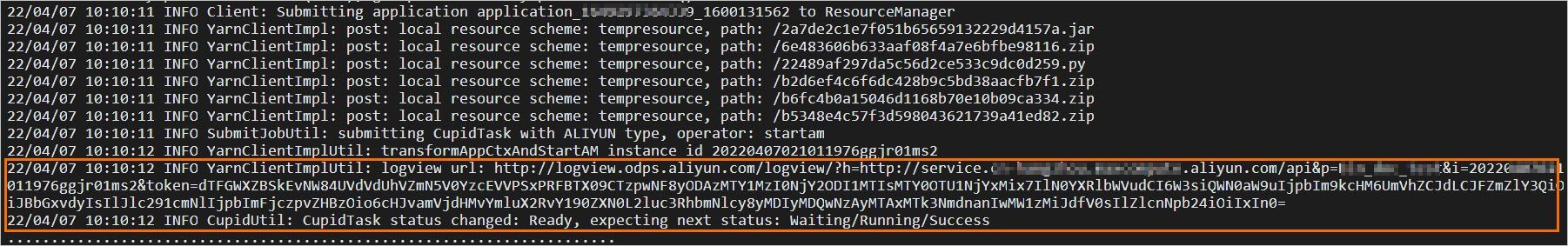

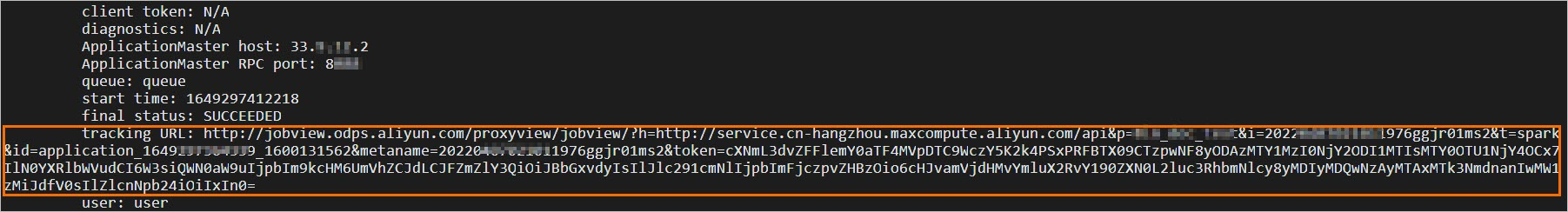

spark-submit --master yarn-cluster --driver-class-path /home/postgreSQL/postgresql-42.2.16.jar --jars /home/postgreSQL/postgresql-42.2.16.jar /home/pythoncode/holo_yarncluster.pyAfter you submit the job, you can use the following information in the output logs to monitor the job:

The LogView URL.

The JobView URL of Spark UI.

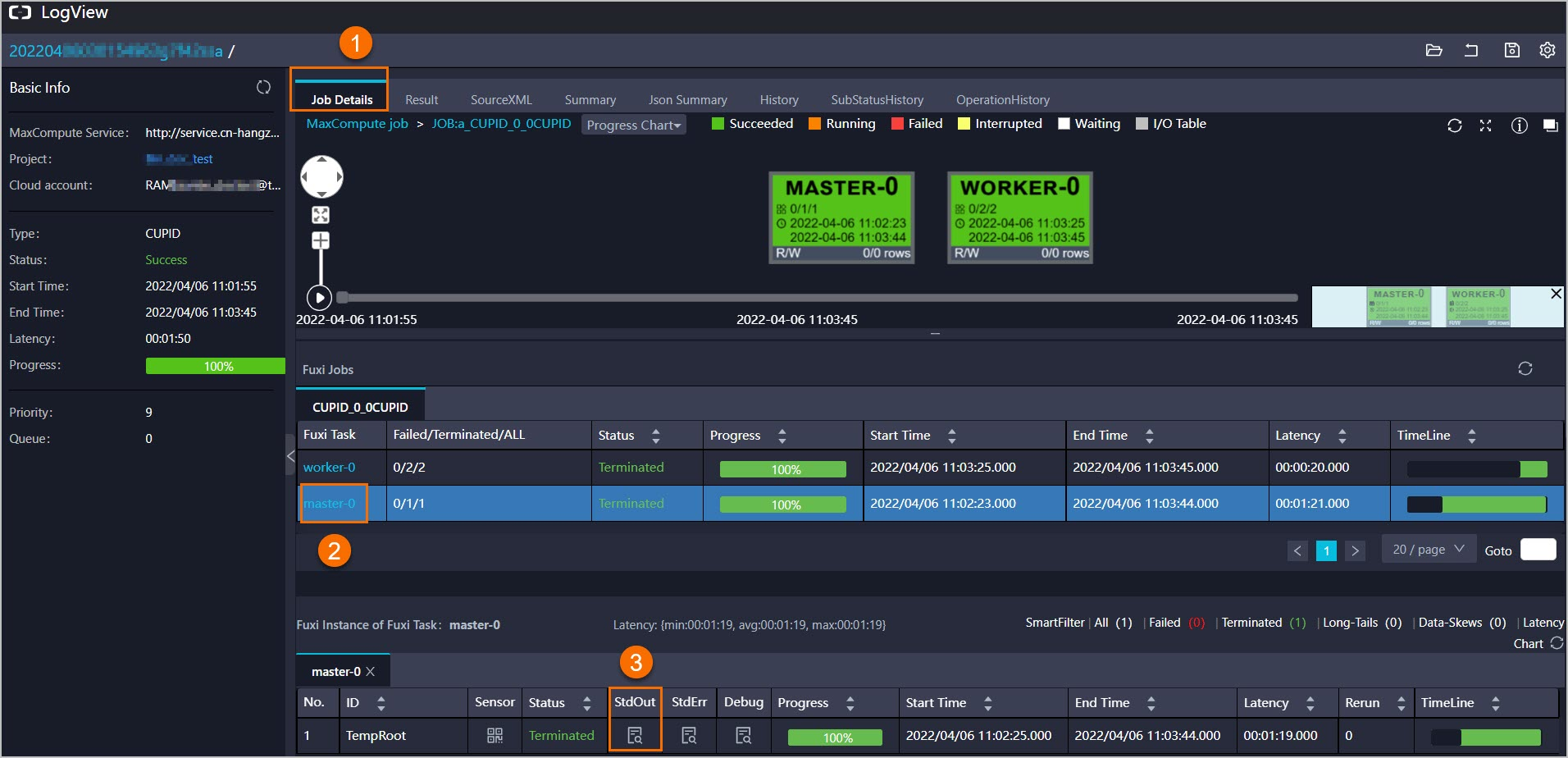

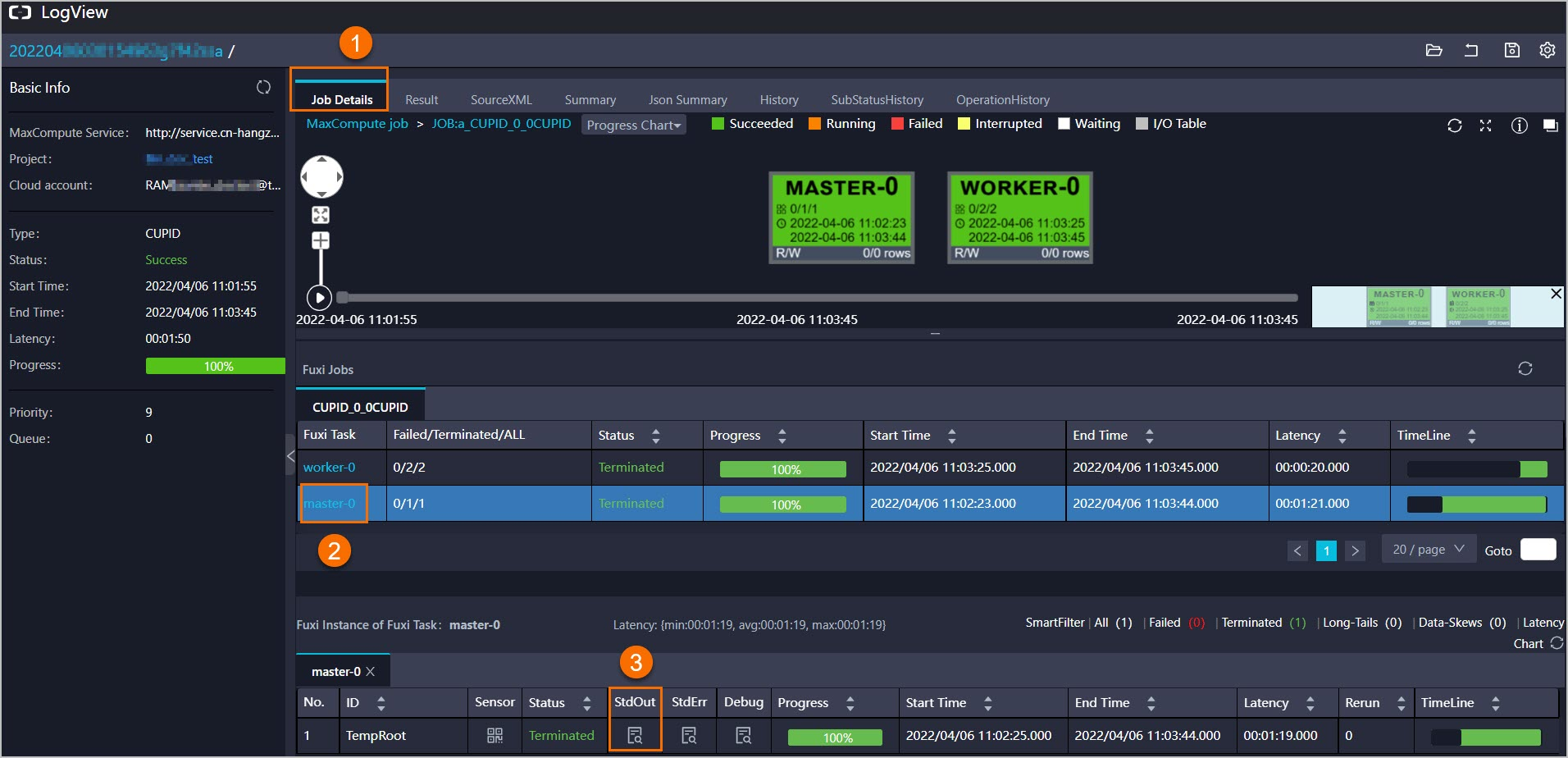

Open the Logview URL. If the job status is success, click to view the returns of the

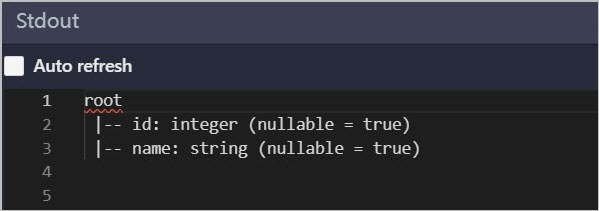

jdbcDF.printSchema()method.

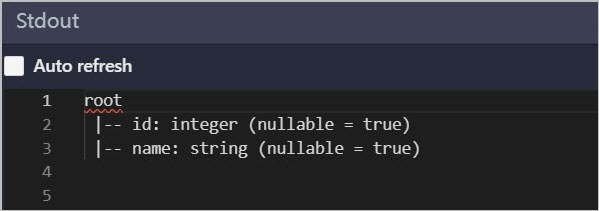

If the printed schema information in the Stdout section is the same as that of the

mc_jdbc_holotable in Hologres, access to Hologres from MaxCompute is successful. Note

NoteYou can also open the Jobview URL of Spark UI to view the job details.

Use Spark on MaxCompute in DataWorks mode

In the HoloWeb console, execute the following statement in the

mc_db_holodatabase to create a table namedmc_jdbc_holo.For information about how to create a Hologres table, see CREATE TABLE.

CREATE TABLE mc_jdbc_holo( id INTEGER, name TEXT );Go to the /home/spark2.4.5/spark-2.4.5-odps0.33.2/conf directory and modify the spark-defaults.conf file.

# Modify the following configurations: spark.hadoop.odps.project.name = <MaxCompute_Project_Name> spark.hadoop.odps.end.point = <Endpoint> spark.hadoop.odps.runtime.end.point = <VPC_Endpoint> spark.hadoop.odps.access.id = <AccessKey_ID> spark.hadoop.odps.access.key = <AccessKey_Secret> spark.hadoop.odps.cupid.trusted.services.access.list = <Hologres_Classic_Network> # Retain the following configurations: spark.master = yarn-cluster spark.driver.cores = 2 spark.driver.memory = 4g spark.dynamicAllocation.shuffleTracking.enabled = true spark.dynamicAllocation.shuffleTracking.timeout = 20s spark.dynamicAllocation.enabled = true spark.dynamicAllocation.maxExecutors = 10 spark.dynamicAllocation.initialExecutors = 2 spark.executor.cores = 2 spark.executor.memory = 8g spark.eventLog.enabled = true spark.eventLog.overwrite = true spark.eventLog.dir = odps://admin_task_project/cupidhistory/sparkhistory spark.sql.catalogImplementation = hive spark.sql.sources.default = hiveParameters:

MaxCompute_Project_Name: the name of the MaxCompute project that you created.

To obtain the project name, log on to the MaxCompute console, select a region in the upper-left corner, and then choose Workspace > Projects in the left-side navigation pane.

access_id: the AccessKey ID used to access the MaxCompute project.

You can obtain the AccessKey ID on the AccessKey Pair page.

AccessKey Secret: the AccessKey secret corresponding to the AccessKey ID that you specified in the access_id parameter.

You can obtain the AccessKey secret on the AccessKey Pair page.

Endpoint: the endpoint used to access the MaxCompute project over the Internet.

For information about the public endpoint of each region, see Endpoints in different regions (Internet).

VPC_Endpoint: the endpoint used to access the Virtual Private Cloud (VPC) where the MaxCompute project resides.

For information about the VPC endpoint of each region, see Endpoints in different regions (VPC).

Hologres_Classic_Network: the endpoint used to access Hologres over the classic network. This configuration specifies a network policy for connecting to the Hologres instance in the MaxCompute sandbox environment. Otherwise, MaxCompute cannot access external services.

Log on to the DataWorks console.

In the left-side navigation pane, click Workspaces.

On the Workspaces page, find the workspace that you want to view and choose Shortcuts > Data Development in the Actions column.

Create a PostgreSQL JDBC resource and an ODPS Spark node.

In the left-side navigation pane, right-click the business flow that you want to use and choose . In the Create Resource dialog box, upload the downloaded PostgreSQL JDBC package and click Create.

NoteFor information about how to create a business flow, see Create a workflow.

For information about how to a create MaxCompute resource, see Create and use MaxCompute resources.

Right-click the business flow that you want to use and choose . In the Create Resource dialog box, enter a resource name in the Name field and click Create.

In this example, the resource name is

read_holo.py.Copy and paste the following code to the

read_holo.pyfile and click the icon.

icon. from pyspark.sql import SparkSession spark = SparkSession \ .builder \ .appName("Spark") \ .getOrCreate() jdbcDF = spark.read.format("jdbc"). \ options( url='jdbc:postgresql://hgprecn-cn-2r42******-cn-hangzhou-internal.hologres.aliyuncs.com:80/mc_db_holo', dbtable='mc_jdbc_holo', user='LTAI****************', password='********************', driver='org.postgresql.Driver').load() jdbcDF.printSchema()Parameters:

url: the JDBC URL that contains the

jdbc:postgresql://prefix.hgprecn-cn-2r42******-cn-hangzhou.hologres.aliyuncs.com:80: the endpoint used to access the Hologres instance over the Internet. For information about how to obtain the endpoint, see Instance configurations.

mc_db_holo: the name of the Hologres database to which you want to connect. In this example, the Hologres database name is mc_db_holo.

dbtable: the name of the Hologres table from which you want to read data or to which you want to write data. In this example, the Hologres table name is mc_db_holo.

user: the AccessKey ID of an authorized Alibaba Cloud account or RAM user. You can obtain the AccessKey ID on the AccessKey Pair page.

password: the AccessKey secret corresponding to the AccessKey ID that you specified in the user parameter.

driver: the PostgreSQL driver. Set this parameter to org.postgresql.Driver.

Right-click the business flow that you want to use and choose . In the Create Node dialog box, enter a node name in the Name field and click Confirm. In this example, the node name is spark_read_holo.

Follow the instructions in the following figure to configure the

spark_read_holonode.Add an entry in the Configuration Items field. Set the key to

spark.hadoop.odps.cupid.trusted.services.access.list.Set the value to

hgprecn-cn-2r42******-cn-hangzhou-internal.hologres.aliyuncs.com:80, which specifies the endpoint used to access Hologres over the classic network.NoteThis configuration specifies a network policy for connecting to the Hologres instance in the MaxCompute sandbox environment. Otherwise, MaxCompute cannot access external services.

On the canvas of the business workflow, right-click the .

After you submit the job, you can view the job details in the output logs, including the diagnostic information, LogView URL, and Jobview URL of Spark UI.

Open the Logview URL. If the job status is success, click to view the returns of the

jdbcDF.printSchema()method.

If the printed schema information in the Stdout section is the same as that of the

mc_jdbc_holotable in Hologres, access to Hologres from MaxCompute is successful. Note

NoteYou can also open the Jobview URL of Spark UI to view the job details.