This topic describes best practices for tuning the performance of internal tables in Hologres.

Update statistics

Statistics are crucial for generating an optimal execution plan. Hologres collects sample statistics about data, such as data distribution, table and column statistics, number of rows, field width, cardinality, frequency, maximum and minimum values, and bucketing distribution features. These statistics help the optimizer estimate the cost of operator execution, reduce the search space, and determine the optimal join order, memory overhead, and degree of parallelism. This process results in a better execution plan. For more information about statistics, see Using Explain.

Statistics collection has limitations. If collection is not real-time, is manually triggered, or is periodically triggered, the statistics may not reflect the most accurate data characteristics. You should first check the information from explain to verify that the statistics are correct. In the statistics, rows represents the number of rows for each operator, and width represents the width.

Check whether statistics are correct

You can view the execution plan:

If statistics are not synchronized in time, a suboptimal execution plan may be generated. For example:

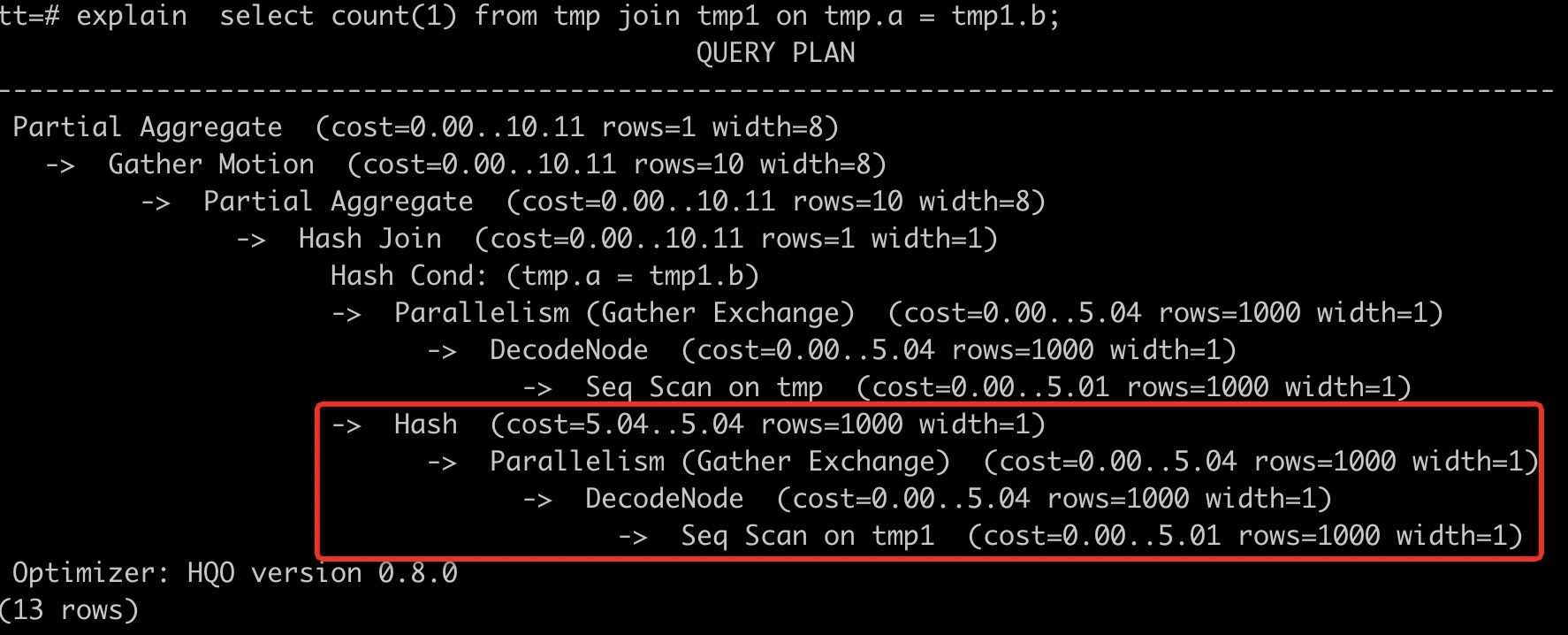

The tmp1 table has 10 million rows, and the tmp table has 1,000 rows. In Hologres, the default number of rows in the statistics is 1,000. If you run an explain SQL statement, the result may show that the number of rows for the tmp1 table does not match the actual number of rows. This indicates that the statistics are not updated.

Seq Scan on tmp1 (cost=0.00..5.01 rows=1000 width=1)

Update statistics

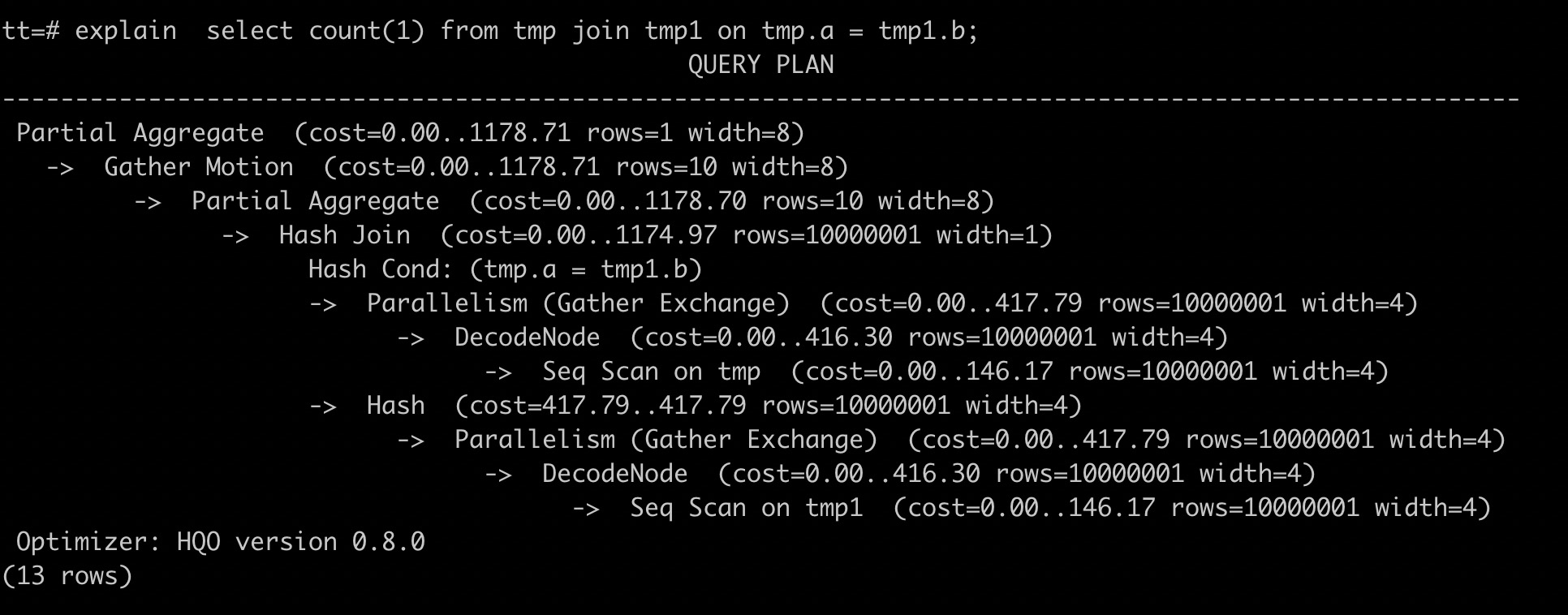

When the tmp1 and tmp tables are joined, the correct explain information should show that the larger table tmp1 is placed above the smaller table tmp, and the smaller table tmp is used for the hash join. Because the statistics for the tmp1 table are not updated in time, Hologres incorrectly selects the tmp1 table to create a hash table for the hash join. This process is inefficient and may cause an out-of-memory (OOM) error. To resolve this, run the analyze command for both tables in the join to collect statistics.

analyze tmp;

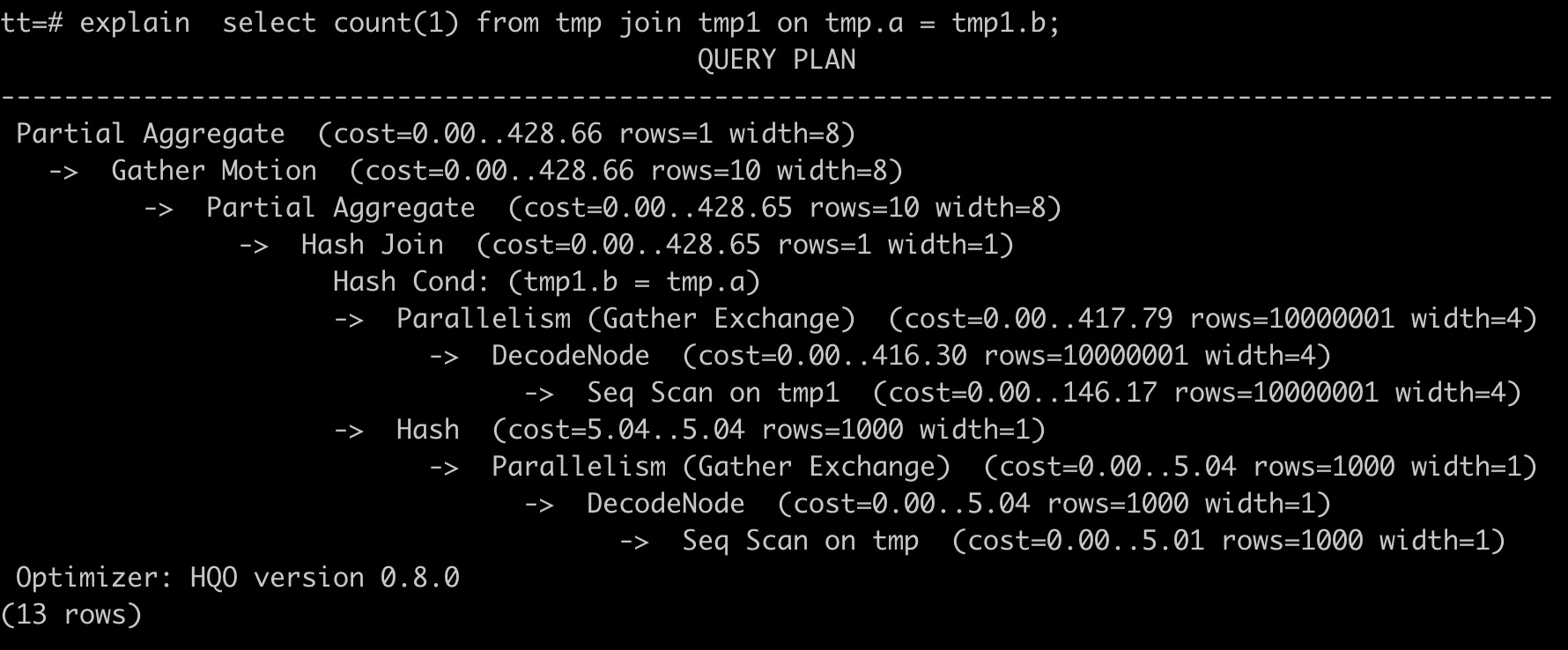

analyze tmp1;After you run the analyze command, the join order is correct. The smaller table tmp is used to create the hash table, and the larger table tmp1 is placed above it, as shown in the following figure. The tmp1 table now shows 10 million rows, which indicates that the statistics are updated.

If the explain result returns rows=1000, it means statistics are missing. Poor performance is often caused by the optimizer lacking statistics. To fix this, update the statistics by running analyze <tablename>. This is a simple and fast way to optimize query performance.

Recommended scenarios for updating statistics

You should run the analyze <tablename> command in the following situations.

After you import data.

After you perform many INSERT, UPDATE, or DELETE operations.

When you need to run ANALYZE for both internal and foreign tables.

When you need to run ANALYZE on a parent table for a partitioned table.

If you encounter the following issues, run

analyze <tablename>before you run the import task to optimize efficiency.An OOM error occurs during a multi-table join.

The error message is typically

Query executor exceeded total memory limitation xxxxx: yyyy bytes used.The import efficiency is low.

When you query or import data in Hologres, the efficiency is low and tasks take a long time to complete.

Set an appropriate shard count

The shard count represents the degree of parallelism for query execution and is critical to query performance. Too few shards can lead to insufficient parallelism. Too many shards can increase query startup overhead, reduce query efficiency, create an excessive number of small files, and consume more memory for metadata management. You should set a shard count that matches the instance type to improve query efficiency and reduce memory overhead.

Hologres sets a default shard count for each instance. This count is approximately equal to the number of cores used for queries in the instance, which is slightly less than the total number of purchased cores. The purchased cores are allocated to different nodes, such as query nodes, access nodes, control nodes, and scheduling nodes. For more information about the default shard count for different instance types, see Instance management. After an instance is scaled out, the default shard count for a database that existed before the scale-out is not automatically changed. You must change the shard count as needed. The shard count for a new database created after the scale-out is the default for the current instance type. The default shard count already considers scale-out scenarios. If resources are scaled out by more than five times, consider resetting the shard count. If the scale-out is less than five times, execution efficiency can still improve without changing the shard count. For more information, see Best practices for table group settings.

You should change the shard count in the following scenarios:

After a scale-out, your existing business has grown and you need to improve its query efficiency. In this case, you can create a new table group and set a larger shard count for it. The original tables and data remain in the old table group. You must re-import the data into the new table group to complete the resharding process.

After a scale-out, you need to launch a new business, but the existing business remains unchanged. In this case, you can create a new table group and set an appropriate shard count for it without changing the structure of the original tables.

You can create multiple table groups in a database. However, the sum of the shard counts of all table groups should not exceed the default shard count recommended by Hologres. This ensures the most effective use of CPU resources.

Optimization for JOIN scenarios

When you join two or more tables, you can use the following optimization methods to improve join performance.

Update statistics

As mentioned previously, if the statistics of tables in a join are not updated in time, the larger table might be used to create a hash table. This reduces join efficiency. You can update the table statistics to improve SQL performance.

analyze <tablename>;Select an appropriate distribution key

A distribution key is used to partition data across multiple shards. Even partitioning prevents data skew. Designing multiple related tables with the same distribution key can accelerate local joins. When you create a table, select an appropriate distribution key based on the following principles:

Suggestions for setting a distribution key

Select the join condition columns from your join queries as the distribution key.

Select columns that are frequently used in `GROUP BY` clauses as the distribution key.

Select columns with evenly and discretely distributed data as the distribution key.

For more information about the principles and usage of distribution keys, see Distribution keys.

Example of setting a distribution key

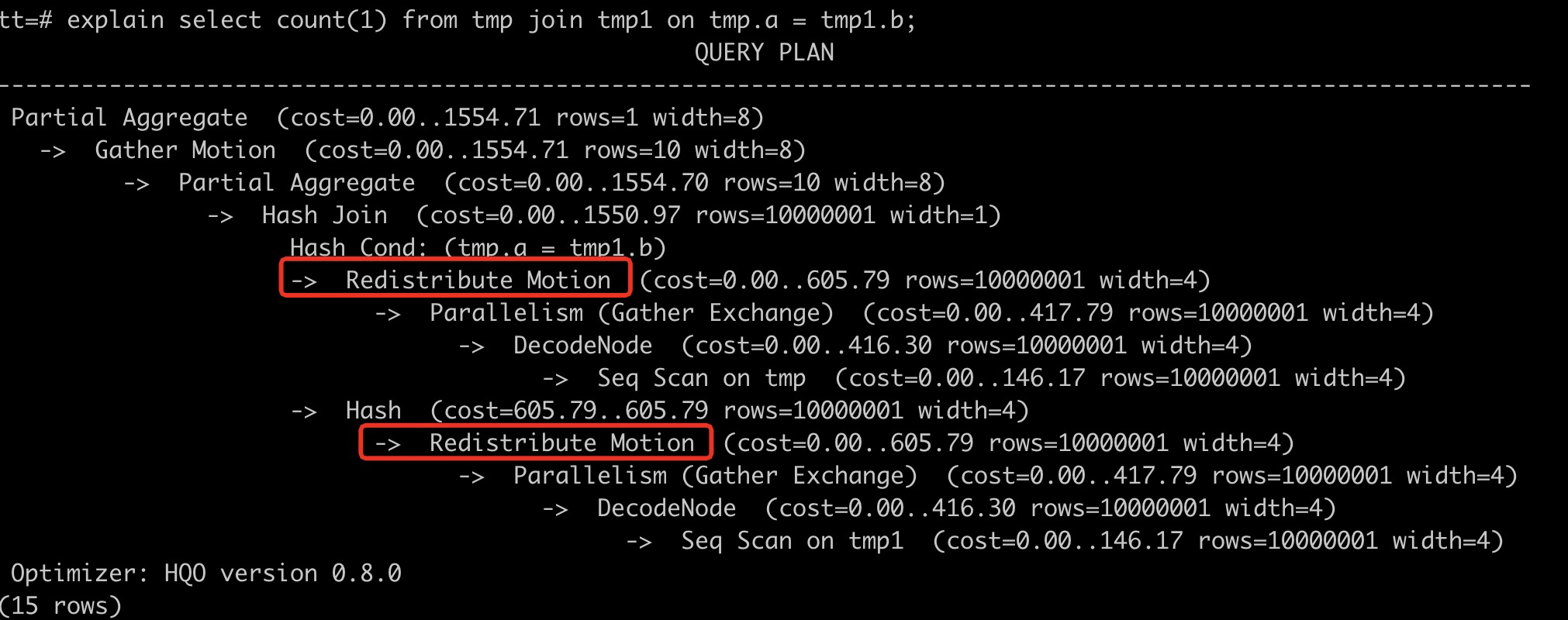

For example, when you join the `tmp` and `tmp1` tables, the execution plan from the `explain` SQL statement may show a `Redistribution Motion`. This means data is being redistributed and there is no local join, which leads to low query efficiency. You need to recreate the tables and set the join key as the distribution key to avoid the extra overhead of data redistribution during multi-table joins.

The following DDL statements are examples for recreating the two tables.

The following DDL statements are examples for recreating the two tables.begin; create table tmp(a int, b int, c int); call set_table_property('tmp', 'distribution_key', 'a'); commit; begin; create table tmp1(a int, b int, c int); call set_table_property('tmp1', 'distribution_key', 'b'); commit; -- Set the distribution key to the join key. select count(1) from tmp join tmp1 on tmp.a = tmp1.b ;After you reset the distribution keys for the tables and run the `explain` SQL statement again, the Motion operator in the red box is removed from the execution plan. The data is distributed among shards using the same hash key. Because the data distribution is the same, the Motion operator is no longer needed. This indicates that data will not be redistributed, which avoids redundant network overhead.

Use Runtime Filter

Starting from V2.0, Hologres supports Runtime Filter. This feature is typically used in multi-table joins, especially in scenarios where a large table is joined with a small table. You do not need to set it manually. The optimizer and execution engine automatically optimize the filtering behavior during the join process at query time. This reduces the amount of data scanned, lowers I/O overhead, and improves join query performance. For more information, see Runtime Filter.

Optimize the Join Order algorithm

When SQL join relationships are complex or many tables are joined, the query optimizer (QO) spends more time selecting the optimal join order. In certain scenarios, adjusting the join order policy can reduce the time spent on query optimization. You can use the following syntax to set the optimizer's join order algorithm.

set optimizer_join_order = '<value>';Parameter description

Parameter

Description

value

The join order algorithm for the optimizer. The options are as follows.

exhaustive2 (default for V2.2 and later): An upgraded and optimized dynamic programming algorithm.

exhaustive (default in earlier versions): Uses a dynamic programming algorithm for join order transformation. It generates the optimal execution plan but has the highest optimizer overhead.

query: Does not perform join order transformation. It executes joins in the order written in the SQL statement and has the lowest optimizer overhead.

greedy: Uses a greedy algorithm to explore the join order. It has a moderate optimizer overhead.

Additional information

The default exhaustive2 algorithm performs a global search for the optimal execution plan. However, for joins with many tables, such as more than 10, the optimization time can be high. Using the `query` or `greedy` algorithm can reduce optimizer time, but may not generate the optimal execution plan.

Optimize Motion operators such as Broadcast

Hologres includes four types of Motion Nodes. Each type corresponds to a data redistribution scenario, as shown in the following table.

Type | Description |

Redistribute Motion | Data is shuffled to one or more shards through hashing or random distribution. |

Broadcast Motion | Data is copied to all shards. Broadcast Motion is most advantageous only when both the number of shards and the number of broadcast tables are small. |

Gather Motion | Data is aggregated into a single shard. |

Forward Motion | Used for federated query scenarios. Data is transferred between an external data source or execution engine and the Hologres execution engine. |

Based on the results of the `explain` SQL statement, consider the following:

If a Motion operator is time-consuming, you should redesign the distribution key.

If incorrect statistics lead to the generation of a Gather Motion or Broadcast Motion, you can use the

analyze <tablename>command to change it to the more efficient Redistribute Motion distribution method.Broadcast Motion is advantageous only in scenarios with a small number of shards and small broadcast tables. Therefore, for small table broadcast scenarios, you should reduce the number of shards for the table. Try to keep the shard count proportional to the number of workers to improve query efficiency. For more information about shard count, see Shard count.

Disable Dictionary Encoding

For queries on character types such as Text, Char, and Varchar, Dictionary Encoding or Decoding reduces the time it takes to compare strings, but it introduces significant Decode or Encode overhead.

By default, Hologres creates Dictionary Encoding for all character type columns. You can set `dictionary_encoding_columns` to empty or disable automatic Dictionary Encoding for specific columns. Note that changing Dictionary Encoding settings causes data files to be re-encoded and stored. This process consumes CPU and memory resources for a period of time. You should change these settings during off-peak hours.

If the Decode operator is time-consuming, you can disable Dictionary Encoding to improve performance.

If a table has many character type fields, select them as needed. You do not need to add all character types to `dictionary_encoding_columns`. The following is a sample statement:

begin;

create table tbl (a int not null, b text not null, c int not null, d int);

call set_table_property('tbl', 'dictionary_encoding_columns', '');

commit;Common performance tuning methods

You can optimize the corresponding SQL statements to improve query efficiency.

Use Fixed Plan

Fixed Plan is suitable for high-throughput scenarios. It uses a simplified execution path to achieve significantly higher performance and throughput. For information on how to configure and use it, see Accelerate SQL execution using Fixed Plan.

Rewrite PQE operators

Hologres has multiple underlying execution engines: the native Hologres Query Engine (HQE), which is a vector engine, and the Postgres Query Engine (PQE), which is a distributed Postgres engine. If an SQL statement contains an operator that HQE does not support, the system sends the operator to PQE for execution. In this case, query performance is not fully optimized, and you need to modify the query statement.

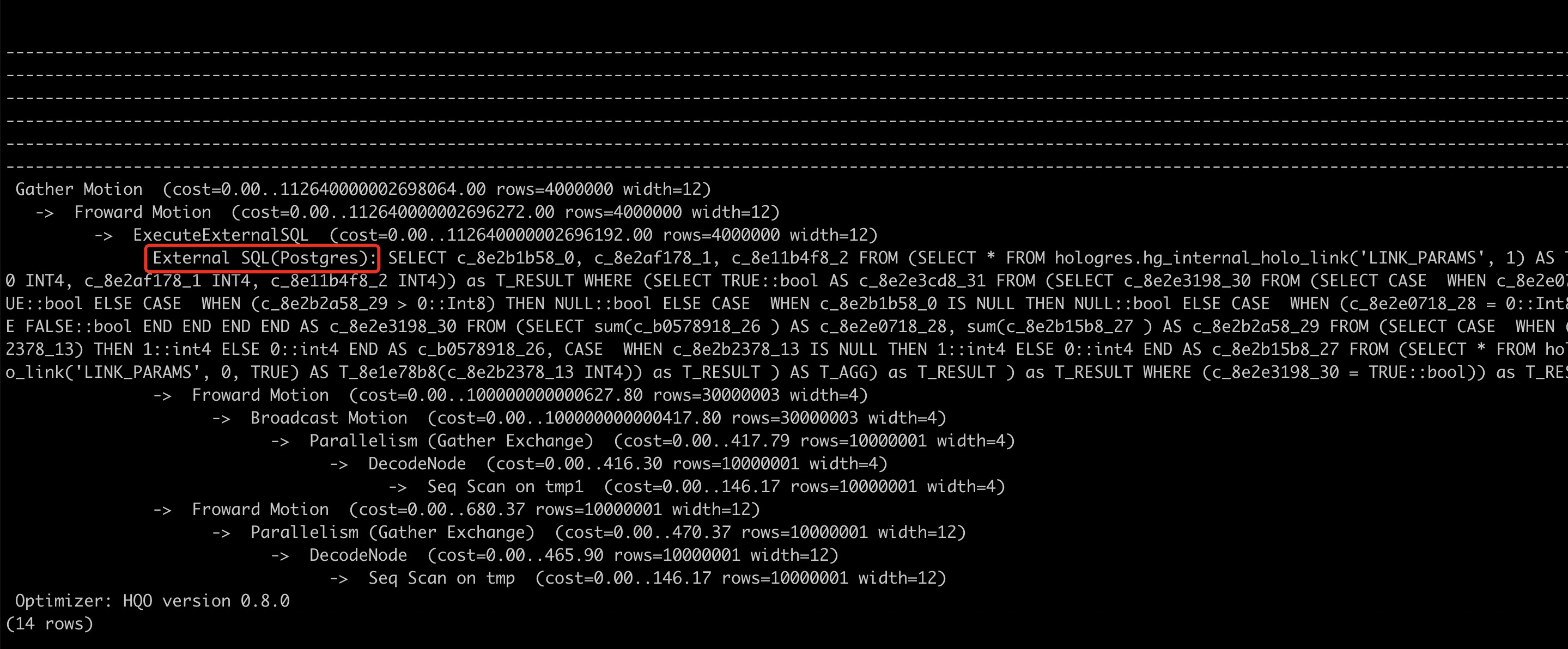

When you check the execution plan using an explain SQL query, if the plan contains External SQL (Postgres), it means that part of the SQL statement is executed in PQE.

For example, HQE does not support not in. The not in operation is sent to the external query engine PQE for execution. You can rewrite not in as not exists. The following is the SQL statement before optimization.

explain select * from tmp where a not in (select a from tmp1);The External operator indicates that this part of the SQL statement is executed in the external engine, which is Postgres.

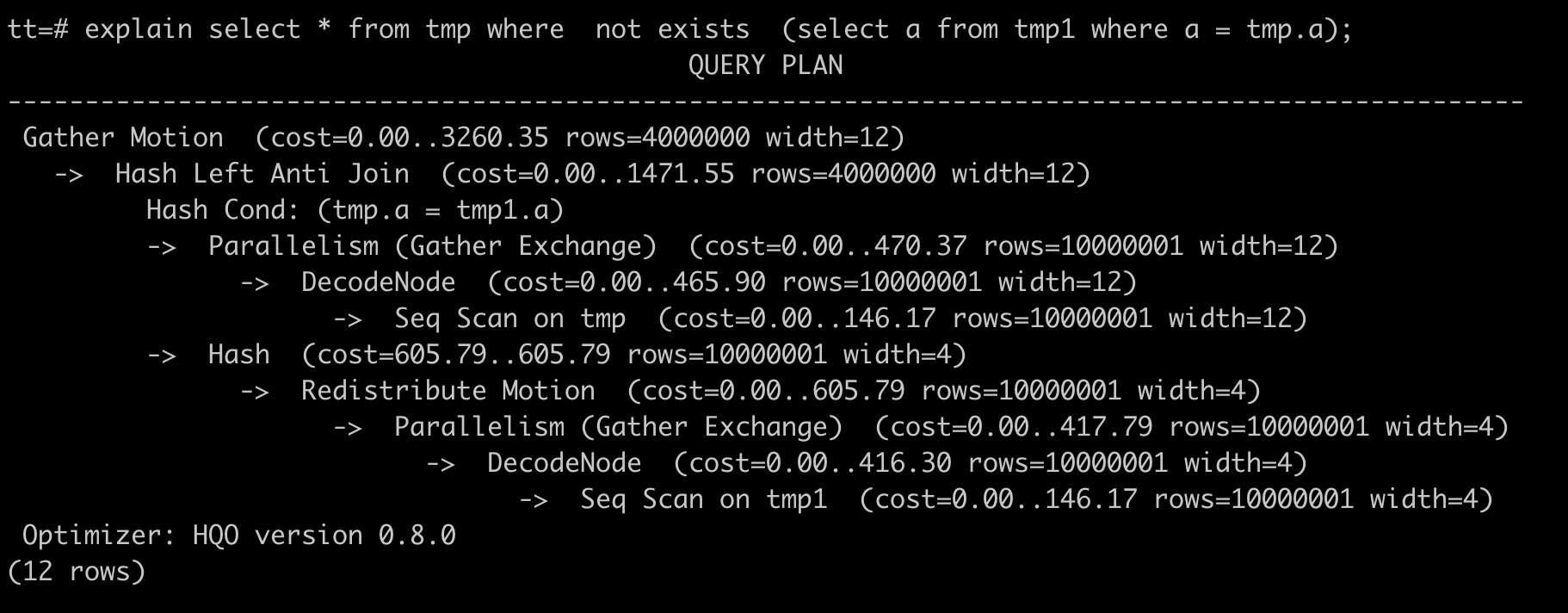

The following is the optimized SQL statement. The external query engine is no longer used.

explain select * from tmp where not exists (select a from tmp1 where a = tmp.a);

You can rewrite functions to run operators in the HQE. The following table provides suggestions for rewriting functions. Each version of Hologres continuously pushes more functions down to HQE. If a function is already supported by HQE in a later version, you can resolve the issue by upgrading your instance. For more information, see Function release notes.

Function not supported by the Hologres native engine (HQE) | Suggested rewrite | Example | Notes |

not in | not exists | | Not applicable. |

regexp_split_to_table(string text, pattern text) | unnest(string_to_array) | | regexp_split_to_table supports regular expressions. Starting from Hologres V2.0.4, HQE supports regexp_split_to_table. You need to enable the GUC with the following command: set hg_experimental_enable_hqe_table_function = on; |

substring | extract(hour from to_timestamp(c1, 'YYYYMMDD HH24:MI:SS')) | Rewrite as: | Some V0.10 versions and earlier versions of Hologres do not support substring. In V1.3 and later, HQE supports non-regular expression input parameters for the substring function. |

regexp_replace | replace | Rewrite as: | replace does not support regular expressions. |

at time zone 'utc' | Delete at time zone 'utc' | Rewrite as: | Not applicable. |

cast(text as timestamp) | to_timestamp | Rewrite as: | Supported by HQE starting from Hologres V2.0. |

timestamp::text | to_char | Rewrite as: | Supported by HQE starting from Hologres V2.0. |

Avoid fuzzy searches

Fuzzy searches, such as the `LIKE` operation, do not use indexes.

Impact of result caching on queries

By default, Hologres caches the results of identical queries or subqueries. Repeated executions of the same query will hit the cache. You can use the following command to disable caching to avoid affecting performance tests:

set hg_experimental_enable_result_cache = off;Optimization methods for OOM

An OOM error usually occurs when the instance has insufficient computing memory. The following is a common error message. OOM errors can be caused by various factors, such as complex calculations and high concurrency. You can perform targeted optimizations based on the specific cause to reduce OOM errors. For more information, see Troubleshooting guide for OOM issues.

Total memory used by all existing queries exceeded memory limitation.

memory usage for existing queries=(2031xxxx,184yy)(2021yyyy,85yy)(1021121xxxx,6yy)(2021xxx,18yy)(202xxxx,14yy); Used/Limit: xy1/xy2 quota/sum_quota: zz/100Optimization for Order By Limit scenarios

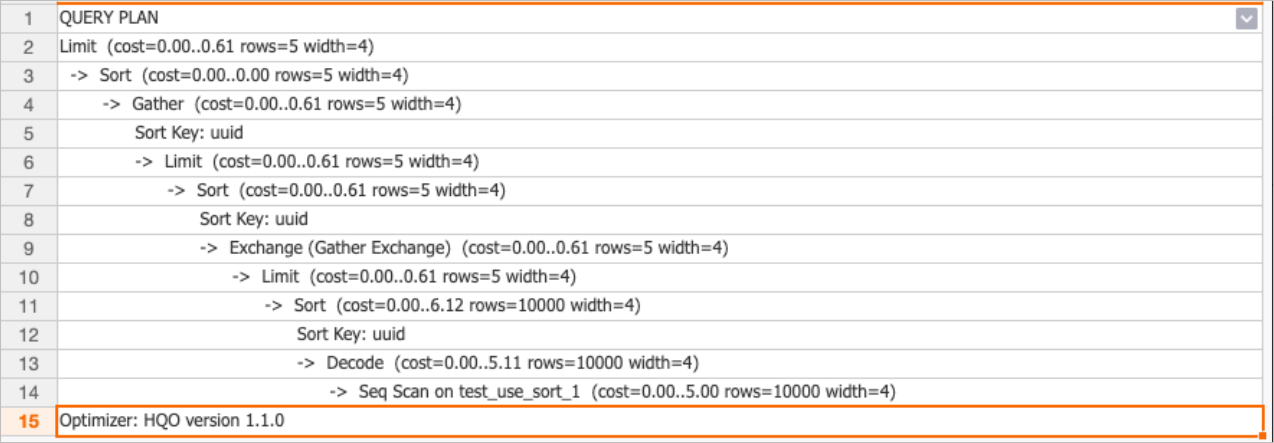

In versions earlier than Hologres V1.3, the Merge Sort operator was not supported for `Order By Limit` scenarios. When an execution plan was generated, an extra sort was performed on the final output, resulting in poor performance. Starting from V1.3, the engine has optimized `Order By Limit` scenarios to support the Merge Sort operator. This enables multi-way merge sort, which eliminates the need for an extra sort and improves query performance.

The following is an optimization example.

Table DDL

begin;

create table test_use_sort_1

(

uuid text not null,

gpackagename text not null,

recv_timestamp text not null

);

call set_table_property('test_use_sort_1', 'orientation', 'column');

call set_table_property('test_use_sort_1', 'distribution_key', 'uuid');

call set_table_property('test_use_sort_1', 'clustering_key', 'uuid:asc,gpackagename:asc,recv_timestamp:desc');

commit;

--Insert data

insert into test_use_sort_1 select i::text, i::text, '20210814' from generate_series(1, 10000) as s(i);

--Update statistics

analyze test_use_sort_1;Query command

set hg_experimental_enable_reserve_gather_exchange_order =on

set hg_experimental_enable_reserve_gather_motion_order =on

select uuid from test_use_sort_1 order by uuid limit 5;Execution plan comparison

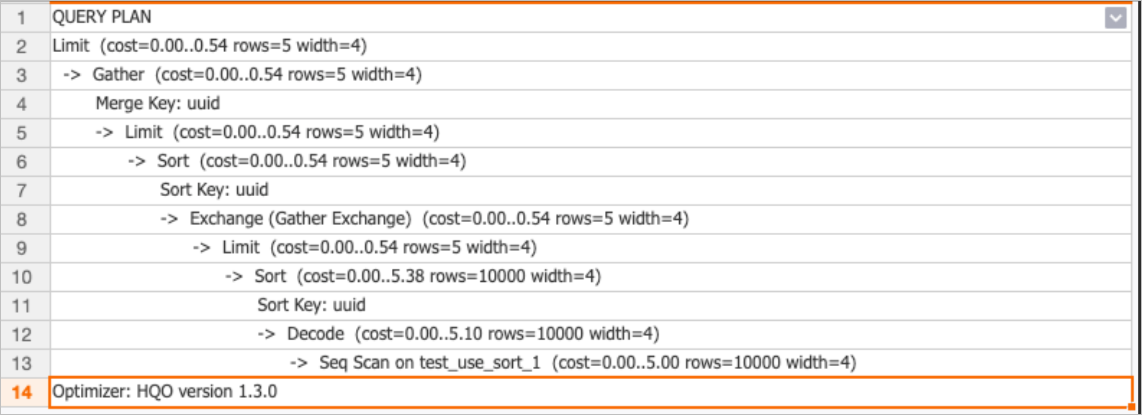

The following is the execution plan for a version earlier than Hologres V1.3 (V1.1).

The following is the execution plan for Hologres V1.3.

The execution plan comparison shows that Hologres V1.3 has one less sort in the final output. It performs a direct multi-way merge, which improves query performance.

Count Distinct optimization

Rewrite as APPROX_COUNT_DISTINCT

Count Distinct is a precise deduplication method. It requires shuffling records with the same key to the same node for deduplication, which is resource-intensive. Hologres provides the `APPROX_COUNT_DISTINCT` extension function, which uses HyperLogLog cardinality estimation for imprecise `COUNT DISTINCT` calculations to improve query performance. The average error rate can be controlled within 0.1% to 1%. You can rewrite your queries as needed. For more information, see APPROX_COUNT_DISTINCT.

Use the UNIQ function

Starting from V1.3, Hologres supports the `UNIQ` function for precise deduplication. When the cardinality of the `GROUP BY` key is high, `UNIQ` performs better and saves more memory than `Count Distinct`. If you encounter an OOM error with `Count Distinct`, you can replace it with `UNIQ`. For more information, see UNIQ.

Set an appropriate distribution key

If you have multiple `Count Distinct` operations with the same key and the data is discretely and evenly distributed, you can set the `Count Distinct` key as the distribution key. This ensures that the same data is distributed to the same shard, which avoids data shuffle.

Count Distinct optimization

Starting from V2.1, Hologres includes many performance optimizations for `Count Distinct` scenarios. These optimizations cover single `Count Distinct`, multiple `Count Distinct`, data skew, and SQL statements without a `Group By` field. You do not need to manually rewrite the statement to use `UNIQ` to achieve better performance. To improve `Count Distinct` performance, upgrade your Hologres instance to V2.1 or a later version.

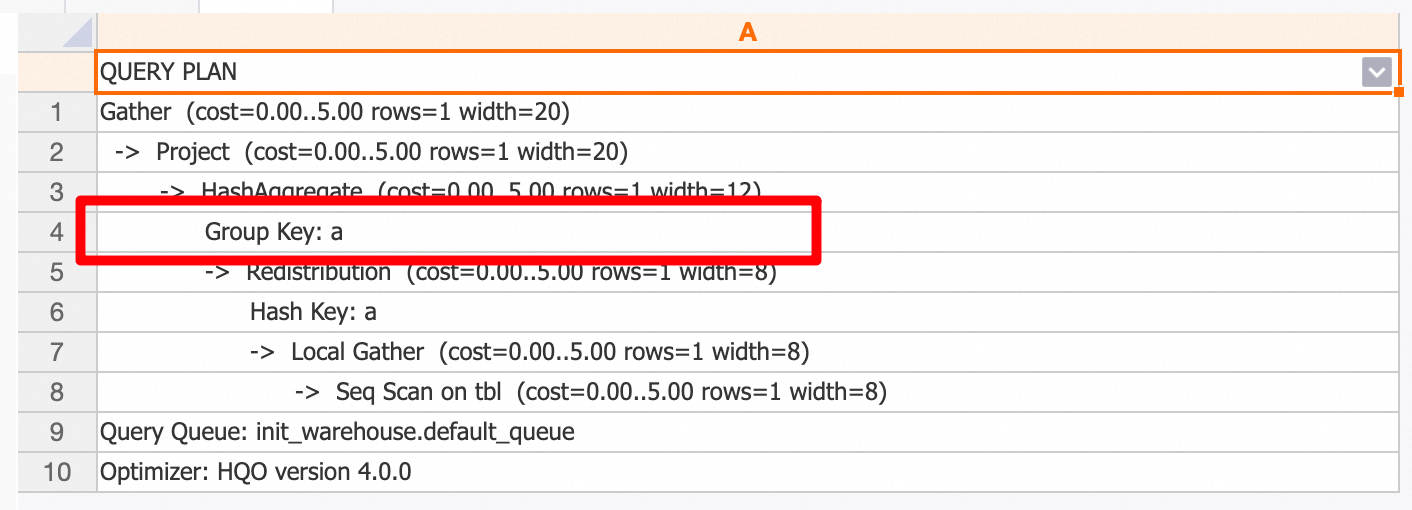

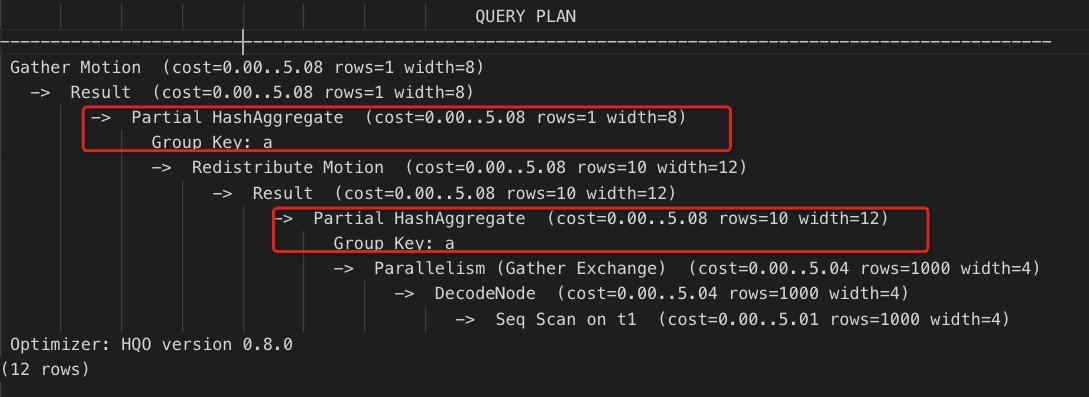

Group By optimization

A `Group By` key causes data to be redistributed based on the key of the grouping column during computation. If the `Group By` operation is time-consuming, you can set the `Group By` column as the distribution key.

-- If data is distributed based on the values in column a, runtime data redistribution is reduced, and the parallel computing capability of shards is fully utilized.

select a, count(1) from t1 group by a; Starting from V4.0, Hologres provides a merge optimization to reduce merges when you group by multiple related columns. For example, a clause such as GROUP BY COL_A, ((COL_A + 1)), ((COL_A + 2)) is rewritten to the equivalent GROUP BY COL_A. Due to the exploration overhead, the search depth is limited to a maximum of five layers. For example:

CREATE TABLE tbl (

a int,

b int,

c int

);

-- Query

SELECT

a,

a + 1 as a1,

a + 2 as a2,

sum(b)

FROM tbl

GROUP BY

a,

a1,

a2;The execution plan is shown in the following figure. You can see that a query rewrite was performed, and the GROUP BY clause contains only column A.

You can disable this feature using the following Grand Unified Configuration (GUC) parameters:

-- Disable the feature at the session level.

SET hg_experimental_remove_related_group_by_key = off;

-- Disable the feature at the database level.

ALTER DATABASE <database_name> SET hg_experimental_remove_related_group_by_key = off; Handle data skew

Uneven data distribution across multiple shards can slow down queries. You can use the following statement to determine whether data skew exists. For more information, see View the worker skew relationship.

-- hg_shard_id is a built-in hidden column in each table that describes the shard where the corresponding row of data is located.

select hg_shard_id, count(1) from t1 group by hg_shard_id;If there is significant data skew, change the `distribution_key`. Select a column with evenly and discretely distributed data as the new distribution key.

NoteChanging the `distribution_key` requires you to recreate the table and re-import the data.

If the data itself is skewed and the skew is unrelated to the `distribution_key`, you must optimize the data from a business perspective to avoid skew.

Optimization for With expressions (Beta)

Hologres is compatible with PostgreSQL and supports Common Table Expressions (CTEs). CTEs are commonly used in recursive queries with `WITH`. Because the implementation is based on inlining, as in PostgreSQL, referencing a CTE multiple times causes repeated computation. In Hologres V1.3, you can use the following GUC parameter to enable CTE Reuse. This allows a CTE to be computed once and referenced multiple times, saving computing resources and improving query performance. If your Hologres instance is a version earlier than V1.3, upgrade your instance.

set optimizer_cte_inlining=off;This feature is in beta and is disabled by default. By default, all CTEs are fully inlined and recomputed. You can enable this feature by manually setting the GUC.

When CTE Reuse is enabled, it relies on the Spill feature in the Shuffle stage. Because downstream consumers process CTEs at different rates, performance may be affected if the data volume is large.

Example

create table cte_reuse_test_t ( a integer not null, b text, primary key (a) ); insert into cte_reuse_test_t values(1, 'a'),(2, 'b'), (3, 'c'), (4, 'b'), (5, 'c'), (6, ''), (7, null); set optimizer_cte_inlining=off; explain with c as (select b, max(a) as a from cte_reuse_test_t group by b) select a1.a,a2.a,a1.b, a2.b from c a1, c a2 where a1.b = a2.b order by a1.b limit 100;Execution plan comparison

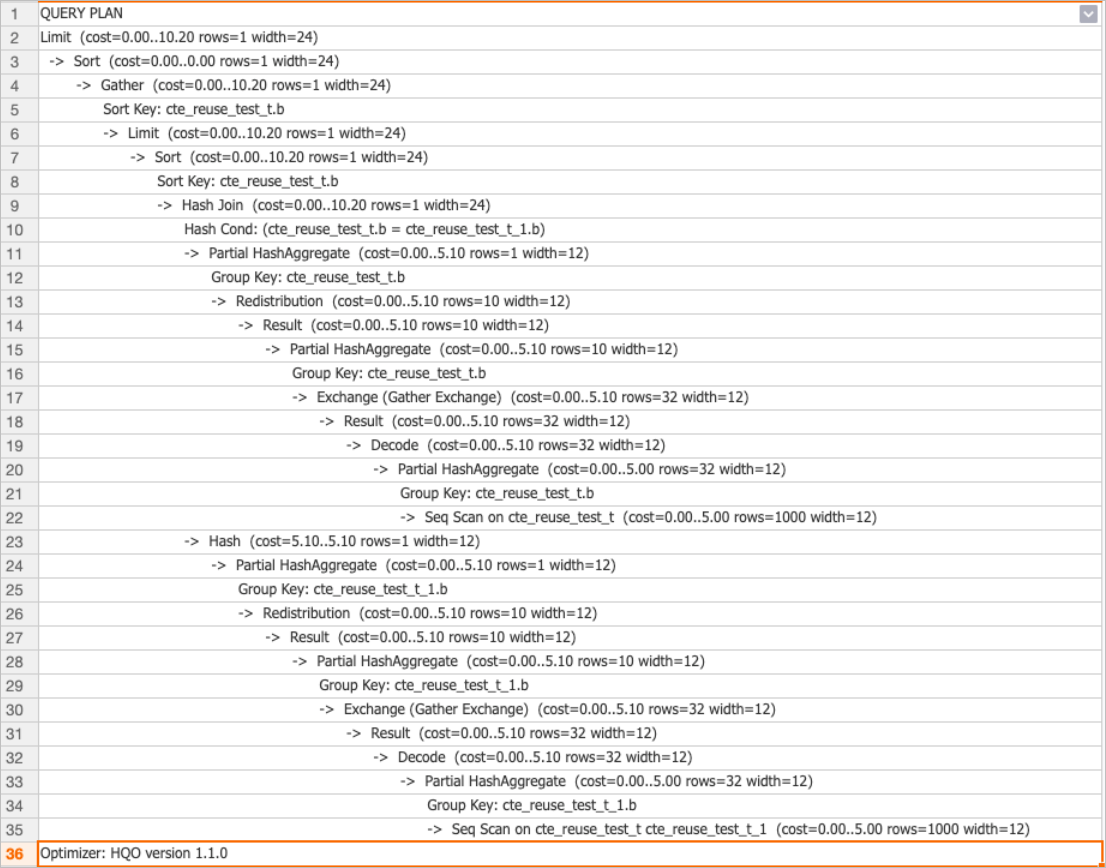

Execution plan for a version of Hologres earlier than V1.3 (V1.1)

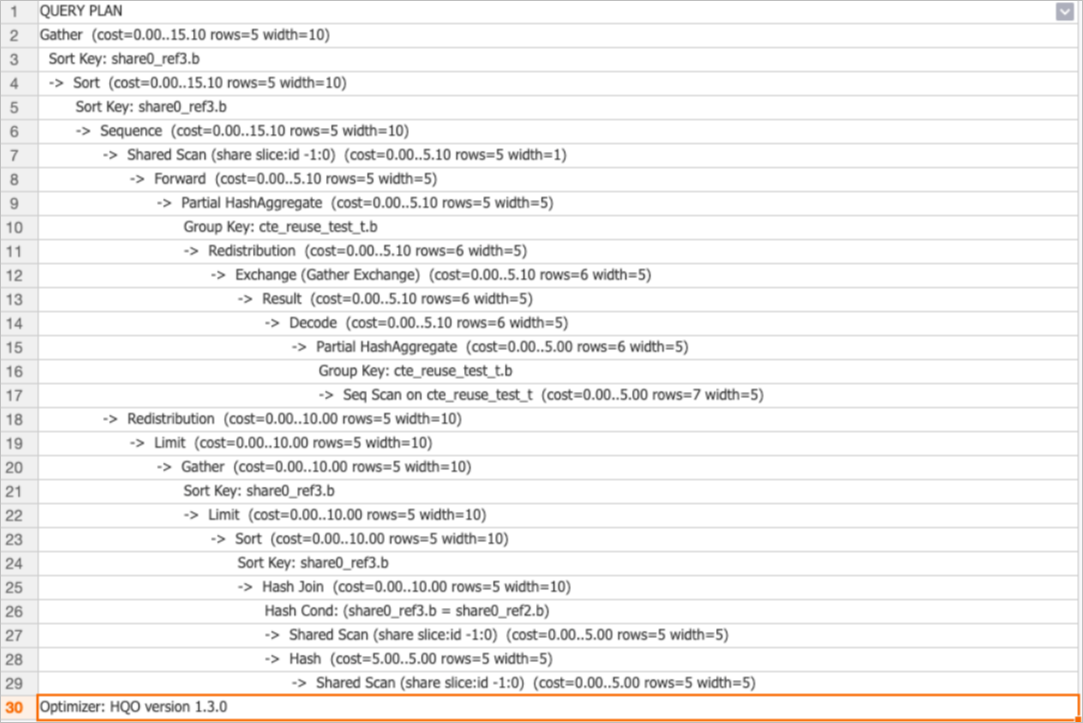

Execution plan for Hologres V1.3

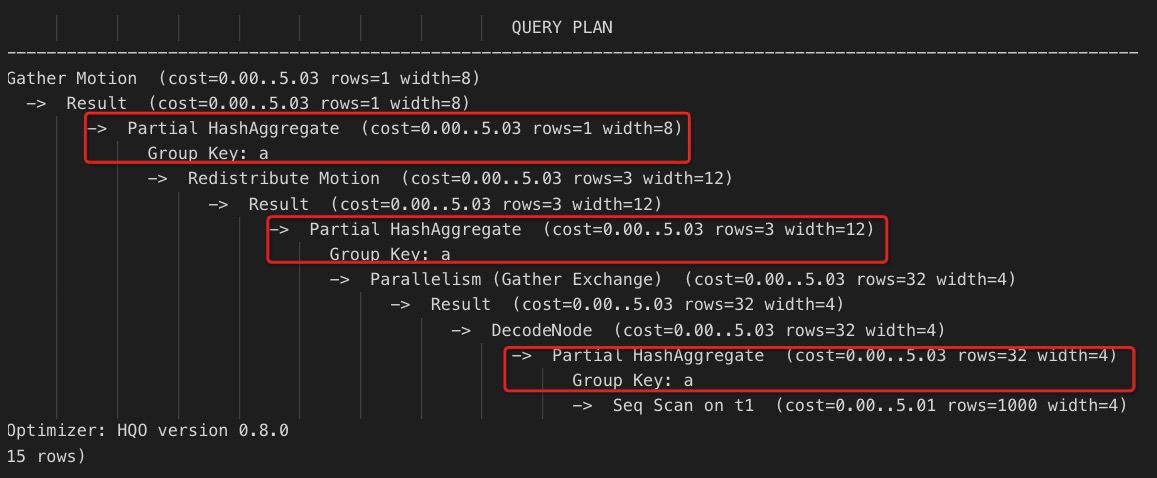

A comparison of the execution plans shows that versions of Hologres earlier than V1.3 perform multiple AGG computations (HashAggregate). In contrast, Hologres V1.3 computes the result only once and reuses it, which improves performance.

Optimize single-stage Agg to multi-stage Agg

If an Agg operator is time-consuming, check whether local shard-level pre-aggregation is being performed.

Performing a local Agg operation within a single shard first can reduce the amount of data for the final aggregation operation and improve performance. The details are as follows:

Three-stage aggregation: Data is first aggregated at the file level, then aggregated within a single shard, and finally the results from all shards are summarized.

Two-stage aggregation: Data is first aggregated within a single shard, and then the results from all shards are summarized.

You can force Hologres to perform a multi-stage aggregation operation using the following statement.

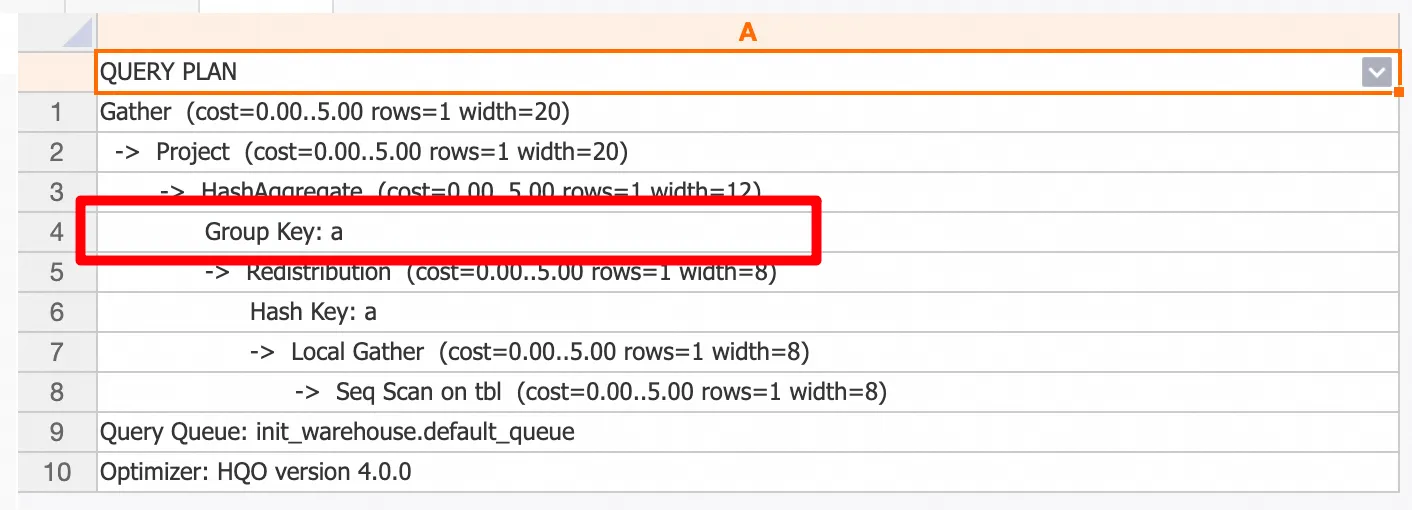

set optimizer_force_multistage_agg = on;Optimization for multiple aggregate functions with the same name

In production scenarios, an SQL statement may contain multiple identical aggregate functions that aggregate the same column. Starting from V4.0, Hologres supports equivalent rewrites to reduce the number of calculations and improve query performance. The following is a usage example:

Prepare data

-- Create a test table. CREATE TABLE tbl(x int4, y int4); -- Insert test data. INSERT INTO tbl VALUES (1,2), (null,200), (1000,null), (10000,20000);Sample query

SELECT sum(x + 1), sum(x + 2), sum(x - 3), sum(x - 4) FROM tbl;The following figure shows the execution plan. You can see that the query is rewritten to optimize the calculations.

You can disable this feature using the following GUC parameters:

-- Disable the feature at the session level. SET hg_experimental_remove_related_group_by_key = off; -- Disable the feature at the database level. ALTER DATABASE <database_name> SET hg_experimental_remove_related_group_by_key = off;

Table property optimization

Select a storage class

Hologres supports row-oriented, column-oriented, and row-column hybrid storage modes. You can select a storage class that suits your business scenario, as described in the following table.

Type | Scenarios | Disadvantages |

Row store |

| Poor performance for large-range queries, full table scans, and aggregation operations. |

Column store | Suitable for data analytics scenarios such as multi-column range queries, single-table aggregations, and multi-table joins. | Slower than row store for UPDATE and DELETE operations and for point queries in scenarios without an index. |

Row-column hybrid | Suitable for scenarios that have both row-oriented and column-oriented use cases. | Higher storage overhead. |

Select a data type

Hologres supports multiple data types. You should select a data type that suits your business scenario and requirements based on the following principles:

Use types that require less storage space.

Use the INT type instead of the BIGINT type.

Use precise DECIMAL/NUMERIC types. Specify the precision (PRECISION, SCALE) and keep it as small as possible. Reduce the use of non-precise types such as FLOAT or DOUBLE PRECISION to avoid errors in statistical summaries.

Do not use non-precise types such as FLOAT or DOUBLE for `GROUP BY` columns.

Use TEXT, which is more versatile. When you use

VARCHAR(N)andCHAR(N), keep the value of N as small as possible.Use TIMESTAMPTZ or DATE for date types. Avoid using TEXT.

Use consistent data types for join conditions.

When you perform multi-table joins, use the same data type for the join columns. This avoids the extra overhead caused by Hologres performing implicit type conversions on columns of different types.

Avoid using non-precise types such as FLOAT/DOUBLE in operations such as UNION or GROUP BY.

Operations such as `UNION` or `GROUP BY` do not currently support DOUBLE PRECISION and FLOAT data types. You must use the DECIMAL type.

Select a primary key

A primary key is mainly used to ensure data uniqueness and is suitable for data import scenarios with duplicate primary keys. When you import data, you can set an option to select a deduplication method:

ignore: Ignores new data.

update: Overwrites old data with new data.

Setting a primary key properly can help the optimizer generate better execution plans in certain scenarios, such as in a query with group by pk,a,b,c.

However, in column store scenarios, setting a primary key can have a significant impact on data write performance. Typically, write performance without a primary key is three times better than with a primary key.

Select a partitioned table

Hologres currently supports only single-level partitioned tables. Setting partitions properly can accelerate query performance. However, improper settings, such as creating too many partitions, can create an excessive number of small files and significantly degrade query performance.

For data that is imported incrementally by day, you can create a partitioned table for each day. The data is stored separately, and only the data for the current day is accessed.

The following are scenarios where setting partitions is applicable:

Deleting an entire partition of a child table does not affect data in other partitions. The `DROP` or `TRUNCATE` statements have better performance than the `DELETE` statement.

For queries with a partition key column in the predicate condition, you can directly index to the corresponding partition using the partition key column. You can also directly query child partitions, which is more flexible.

Creating partitioned tables is suitable for periodically imported real-time data. For example, if new data is imported daily, you can use the date as the partition key column and import data into a child partition each day. The following are sample statements.

begin;

create table insert_partition(c1 bigint not null, c2 boolean, c3 float not null, c4 text, c5 timestamptz not null) partition by list(c4);

call set_table_property('insert_partition', 'orientation', 'column');

commit;

create table insert_partition_child1 partition of insert_partition for values in('20190707');

create table insert_partition_child2 partition of insert_partition for values in('20190708');

create table insert_partition_child3 partition of insert_partition for values in('20190709');

select * from insert_partition where c4 >= '20190708';

select * from insert_partition_child3;Select an index

Hologres supports multiple types of indexes, each with a different purpose. You should select the appropriate index based on your business scenario to improve query performance. Therefore, you should design your table schema in advance based on your business scenario before you write data. The index types are described in the following table.

Type | Name | Description | Usage suggestion | Sample query statement |

clustering_key | Clustering key | An in-file cluster index. Data within a file is sorted by this index. For some range queries, Hologres can directly filter data using the ordered property of the cluster index. | Use a range query or filter query column as the clustering key column. Index filtering follows the leftmost prefix matching principle. Set no more than two columns. |

|

bitmap_columns | Bitmap column | An in-file bitmap index. A bitmap is created for data within a file based on this index column. For equality queries, Hologres can encode the data for each row by value and use bit manipulation to quickly index to the corresponding row. The time complexity is O(1). | Use an equality query column as a bitmap column. |

|

segment_key (also known as event_time_column) | Segment key | A file index. Data is written to files in Append Only mode, and then small files are merged between files based on this index key. The segment_key identifies the boundary range of files. You can use the segment key to quickly index to the object file. The segment_key is designed for ordered, range-based data scenarios such as timestamps and dates, and is therefore strongly correlated with the data write time. | First, perform a quick filter using the segment_key, and then perform an in-file range or equality query using a bitmap or cluster index. It follows the leftmost prefix matching principle and usually has only one column. Set the first non-empty timestamp field as the segment_key. |

|

Both `clustering_key` and `segment_key` must follow the leftmost prefix matching principle of traditional databases such as MySQL. This means indexing is performed by sorting based on the leftmost column written in the index. If the leftmost column is ordered, sorting is performed based on the second column from the left. The following are examples.

call set_table_property('tmp', 'clustering_key', 'a,b,c');

select * from tmp where a > 1 ; --Can use the cluster index.

select * from tmp where a > 1 and c > 2 ; --Only 'a' can use the cluster index.

select * from tmp where a > 1 and b > 2 ; --Both 'a' and 'b' can use the cluster index.

select * from tmp where a > 1 and b > 2 and c > 3 ; --'a', 'b', and 'c' can all use the cluster index.

select * from tmp where b > 1 and c > 2 ; --Neither 'b' nor 'c' can use the cluster index.A bitmap index supports `and` or `or` queries on multiple columns. The following are examples.

call set_table_property('tmp', 'bitmap_columns', 'a,b,c');

select * from tmp where a = 1 and b = 2 ; --The bitmap index can be used.

select * from tmp where a = 1 or b = 2 ; --The bitmap index can be used.`bitmap_columns` can be added after the table is created. `clustering_key` and `segment_key` must be specified when the table is created and cannot be added later.

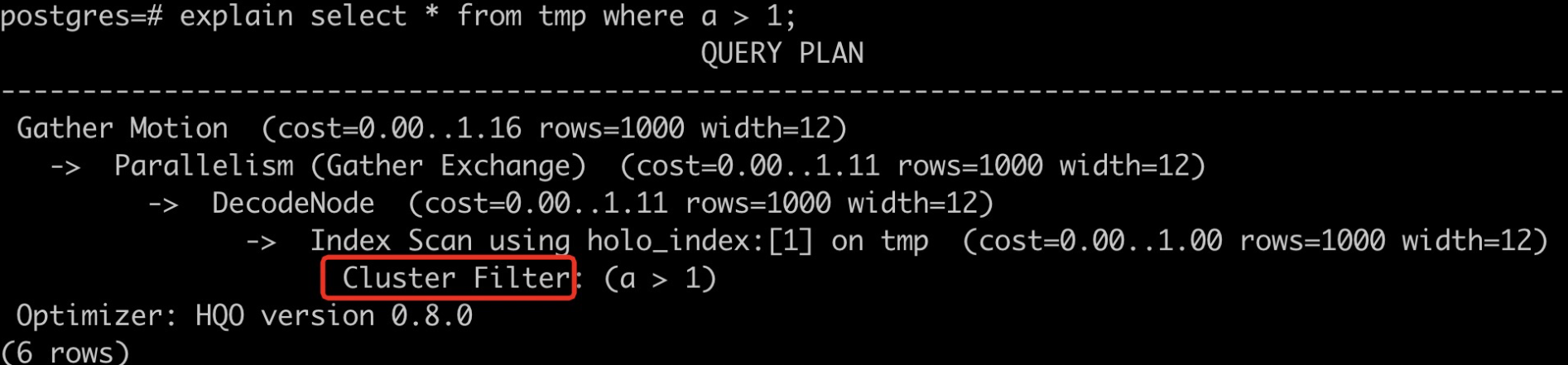

Check whether an index is used

You can create the tmp table and specify the index fields using the following statements.

begin;

create table tmp(a int not null, b int not null, c int not null);

call set_table_property('tmp', 'clustering_key', 'a');

call set_table_property('tmp', 'segment_key', 'b');

call set_table_property('tmp', 'bitmap_columns', 'a,b,c');

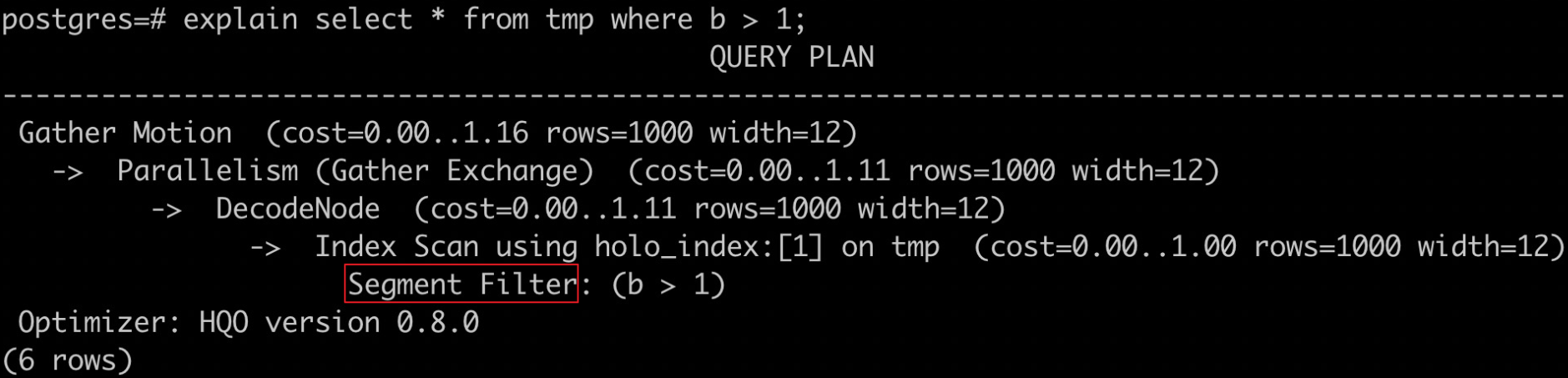

commit;To check whether the cluster index is used, run the following statement.

explain select * from tmp where a > 1;

To check whether the bitmap index is used, run the following statement.

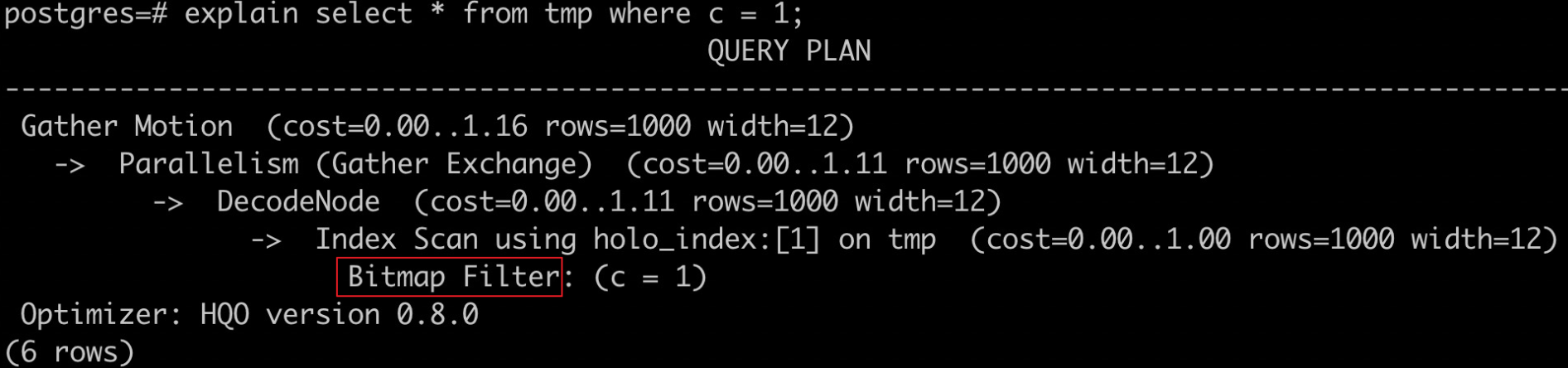

explain select * from tmp where c = 1;

To check whether the segment key is used, run the following statement.

explain select * from tmp where b > 1;