DataWorks is an all-in-one big data development and governance platform. It covers data integration, development, modeling, analysis, quality control, services, mapping, and open capabilities. The platform supports end-to-end data processing and helps you build an enterprise-level data middle platform. This topic describes the core features of DataWorks.

Data Integration: Aggregate data from all sources

The Data Integration module in DataWorks is a stable, efficient, and elastic data synchronization platform. It reliably synchronizes data at high speed between a wide range of heterogeneous data sources, even in complex network environments.

Overview

DataWorks Data Integration supports full and incremental data synchronization in offline, real-time, or combined offline and real-time modes. It offers the following features:

Configure scheduling cycles for offline synchronization tasks.

Synchronize data between more than 50 heterogeneous data sources, including relational databases, data warehouses, non-relational databases, file storage, and message queues.

Connect to data sources across complex network environments, including the public internet, IDCs, or VPCs.

Keep data synchronization secure and controllable through robust security controls and operational monitoring.
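The difference between full and incremental synchronization can be sketched as follows. This is a minimal illustration of one common incremental technique, a timestamp watermark, and it assumes that each source row carries a modification timestamp (the `modified_at` field is hypothetical). It does not describe how DataWorks implements incremental synchronization internally.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Row:
    id: int
    value: str
    modified_at: datetime  # hypothetical change-tracking column


def full_sync(source: list[Row]) -> list[Row]:
    """Full synchronization: copy every row, whether or not it changed."""
    return list(source)


def incremental_sync(source: list[Row], watermark: datetime) -> tuple[list[Row], datetime]:
    """Incremental synchronization: copy only rows changed after the watermark,
    then advance the watermark to the newest change that was copied."""
    changed = [row for row in source if row.modified_at > watermark]
    new_watermark = max((row.modified_at for row in changed), default=watermark)
    return changed, new_watermark
```

Each incremental run stores the returned watermark and passes it to the next run, so a run that finds no changes leaves the watermark where it was.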

Core technology and architecture

Engine architecture: Data Integration uses a star-shaped engine architecture, allowing any connected data source to form synchronization links with any other supported source. For a list of supported data sources, see Supported data sources and synchronization solutions.
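The benefit of a star-shaped architecture can be illustrated with a toy reader/writer model. In this sketch, every data source contributes one reader and one writer that exchange records in a shared intermediate format, so any registered source can feed any registered destination. The registry, the `sync` function, and the two toy sources are hypothetical and are not the Data Integration API.

```python
from typing import Callable, Iterable

# Hypothetical shared intermediate format that every reader emits and every writer accepts.
Record = dict[str, object]

READERS: dict[str, Callable[[object], Iterable[Record]]] = {}
WRITERS: dict[str, Callable[[object, Iterable[Record]], None]] = {}


def register(kind: str, reader, writer) -> None:
    """Connect a data source to the hub by registering its reader and writer."""
    READERS[kind] = reader
    WRITERS[kind] = writer


def sync(src_kind: str, src, dst_kind: str, dst) -> None:
    """Any registered source can feed any registered destination through the hub."""
    WRITERS[dst_kind](dst, READERS[src_kind](src))


# Toy source 1: a list of CSV lines (naive split, no quoting support).
def csv_reader(lines):
    header, *rows = lines
    cols = header.split(",")
    for row in rows:
        yield dict(zip(cols, row.split(",")))


def csv_writer(lines, records):
    records = list(records)
    if records:
        cols = list(records[0])
        lines.append(",".join(cols))
        lines.extend(",".join(str(r[c]) for c in cols) for r in records)


# Toy source 2: an in-memory list of dicts.
def mem_reader(rows):
    yield from rows


def mem_writer(rows, records):
    rows.extend(records)


register("csv", csv_reader, csv_writer)
register("memory", mem_reader, mem_writer)


def connector_counts(n_sources: int) -> tuple[int, int]:
    """Star model: one reader plus one writer per source (2N), versus
    N * (N - 1) dedicated connectors for every directed source pair."""
    return 2 * n_sources, n_sources * (n_sources - 1)
```

With 50 data sources, `connector_counts(50)` gives 100 plugins for the star model versus 2,450 point-to-point connectors, which is why adding a new source only requires one new reader and one new writer.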

Data integration resource groups and network connectivity: Before synchronizing data, you must establish network connectivity between your data source and a resource group. Data Integration tasks can run on serverless resource groups (recommended) or exclusive resource groups for data integration (legacy). For more information about network solutions, see Network connectivity solutions.

Use cases

Data Integration is ideal for data transfer scenarios such as ingesting data into data lakes and warehouses, sharding databases and tables, archiving real-time data, and moving data between clouds.

Data Studio and Operation Center: Process your data

DataWorks Data Studio and Operation Center are, respectively, a development platform for data processing and an intelligent operations and maintenance (O&M) platform. Together, they provide an efficient, standardized way to build and manage data development workflows.

Overview

Key features of Data Studio include:

Support for multiple compute engines, including MaxCompute, E-MapReduce, CDH, Hologres, AnalyticDB, and ClickHouse. You can develop, test, deploy, and manage tasks for these engines on a unified platform.

An intelligent editor and visual dependency orchestration, backed by scheduling capabilities proven on Alibaba Group's complex internal tasks and business dependencies.

Isolated development and production environments, combined with features like version control, code review, smoke testing, deployment control, and operational auditing that standardize your data development lifecycle.
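Dependency orchestration comes down to running each task only after all of its upstream tasks have finished. The sketch below uses Python's standard `graphlib.TopologicalSorter` to group a hypothetical workflow into waves of tasks that could run in parallel. The task names are illustrative, and this is not the DataWorks scheduler itself.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical workflow: each task maps to the set of upstream tasks it depends on.
WORKFLOW = {
    "ods_orders": set(),
    "ods_users": set(),
    "dwd_orders": {"ods_orders"},
    "dws_user_orders": {"dwd_orders", "ods_users"},
    "ads_report": {"dws_user_orders"},
}


def schedule_waves(deps):
    """Group tasks into waves. Every task in a wave depends only on tasks in
    earlier waves, so tasks within one wave could run in parallel.
    Raises CycleError if the dependencies contain a cycle."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave as finished
    return waves
```

Detecting cycles up front matters because a circular dependency would otherwise leave tasks waiting on each other indefinitely.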

The Operation Center supports features such as data timeliness assurance, task diagnostics, impact analysis, automated O&M, and mobile-based O&M.
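Impact analysis answers the question "if this task fails or changes, which downstream tasks are affected?" A minimal sketch, assuming a hypothetical map from each task to its upstream tasks, is a breadth-first search over the reversed dependency edges.

```python
from collections import defaultdict, deque

# Hypothetical workflow: each task maps to the set of upstream tasks it depends on.
WORKFLOW = {
    "ods_orders": set(),
    "ods_users": set(),
    "dwd_orders": {"ods_orders"},
    "dws_user_orders": {"dwd_orders", "ods_users"},
    "ads_report": {"dws_user_orders"},
}


def downstream_impact(deps, start):
    """Return every task that depends on `start` directly or transitively."""
    # Reverse the edges: parent -> tasks that consume its output.
    children = defaultdict(set)
    for task, upstream in deps.items():
        for parent in upstream:
            children[parent].add(task)

    affected = set()
    queue = deque([start])
    while queue:
        for child in children[queue.popleft()]:
            if child not in affected:
                affected.add(child)
                queue.append(child)
    return affected
```

For example, a failure in `ods_orders` affects `dwd_orders`, `dws_user_orders`, and `ads_report`, while a failure in `ads_report` affects nothing downstream.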

Core technology and architecture

Efficient and standardized development workflow

Note: DataWorks provides workspaces in standard mode to isolate development and production environments. For more information about the standard mode, see Differences between workspace modes.

Visual development interface: Build task workflows using a drag-and-drop interface. Develop data and configure scheduling in a unified console.

Task monitoring, troubleshooting, and resolution

Data modeling: Intelligent data modeling

The intelligent data modeling feature in DataWorks draws on more than a decade of Alibaba's best practices in data warehouse modeling. It improves both modeling and reverse modeling for data marts and data middle platforms, helping you build enterprise data assets.

Overview

This feature includes four modules: Data Warehouse Planning, Data Standard, Dimension Modeling, and Data Metrics.

Data Warehouse Planning: Plan your data warehouse layers, data domains, and data marts. You can also configure model design spaces, allowing different departments to share a common set of data standards and data models.

Data Standard: Define field standards, standard codes, units of measurement, and naming dictionaries. You can also automatically generate data quality rules from standard codes to simplify compliance checks.

Dimension Modeling: Supports reverse modeling to address the cold-start problem for existing data warehouses. It provides visual dimensional modeling, allows you to import models from Excel files or build them quickly using FML (an SQL-like domain-specific language), and integrates seamlessly with Data Studio to automatically generate ETL code.

Data Metrics: Define and build atomic metrics and derived metrics. This module integrates seamlessly with dimensional modeling, allowing you to batch-create derived metrics based on atomic metrics and various dimensions.
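The batch creation of derived metrics can be pictured as a Cartesian product of atomic metrics and dimensions. In this simplified sketch, an atomic metric is a named aggregation and a derived metric is that aggregation grouped by one dimension. The metric names, the `amount` field, and the naming scheme are hypothetical, and the sketch omits other qualifiers a real metric definition may carry, such as statistical time periods.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import product
from typing import Callable

# Hypothetical atomic metrics: a business measure plus its aggregation.
ATOMIC_METRICS: dict[str, Callable[[list[dict]], float]] = {
    "order_count": lambda rows: len(rows),
    "pay_amount": lambda rows: sum(r["amount"] for r in rows),
}


@dataclass(frozen=True)
class DerivedMetric:
    atomic: str     # which atomic metric to aggregate
    dimension: str  # which dimension to group by

    @property
    def name(self) -> str:
        return f"{self.atomic}_by_{self.dimension}"

    def evaluate(self, rows: list[dict]) -> dict:
        """Apply the atomic aggregation separately to each dimension value."""
        groups = defaultdict(list)
        for row in rows:
            groups[row[self.dimension]].append(row)
        aggregate = ATOMIC_METRICS[self.atomic]
        return {key: aggregate(group) for key, group in groups.items()}


def batch_create(atomics: list[str], dimensions: list[str]) -> list[DerivedMetric]:
    """Create one derived metric for every (atomic metric, dimension) pair."""
    return [DerivedMetric(a, d) for a, d in product(atomics, dimensions)]
```

Two atomic metrics and two dimensions yield four derived metrics, such as `pay_amount_by_city`, without defining each one by hand.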

Core technology and architecture

Use cases

DataWorks intelligent data modeling can help you build in-house modeling capabilities and unlock the value of your data assets. Examples include:

Standardize management of massive data

The larger an enterprise, the more complex its data structures. Managing and storing data in a structured, orderly way is a challenge that every large enterprise faces.

Break information barriers by interconnecting business data

When each business unit or department keeps its data isolated from the others, decision-makers cannot get a clear or complete picture. Breaking down data silos between departments and business domains is a major challenge in business data management.

Integrate data standards to achieve unified and flexible data interconnection

Inconsistent descriptions of the same data lead to duplicate data, incorrect calculation results, and difficulties in business data management. A core goal of standardized management is to establish a unified data standard without changing the existing system architecture, while keeping upstream and downstream business flexibly interconnected.

Maximize data value to maximize profit

Make full use of all types of enterprise data to maximize its value and deliver more efficient data services to the business.

DataAnalysis: Instant and rapid analysis

With the goal of "making everyone a data analyst," DataAnalysis provides simple and efficient tools for users who are not professional data developers, such as data analysts, product managers, and operations staff, to help them retrieve and analyze data efficiently in their daily work.

Overview

DataAnalysis supports features such as personal data uploads, public datasets, table search and bookmarks, online SQL queries, SQL file sharing, downloading SQL query results, and viewing data on large screens using spreadsheets.

Use cases

This module is designed for users who are not professional data developers, such as data analysts, product managers, and operations staff, and lets them analyze data in a way that is efficient, scalable, fluid, and secure.

Scalable: Leverage the power of the compute engine to efficiently analyze massive, full-scale datasets.

Fluid: Analyze data from databases across different business systems. DataAnalysis allows you to export data to MaxCompute tables or share result sets with specified users and grant them permissions. This enables data to flow between different systems and personnel.

Secure: All operations, including SQL queries and the downloading of SQL results, can be integrated with security auditing.

Data Quality: Monitor quality end-to-end

DataWorks provides end-to-end data quality monitoring with more than 30 preset table-level and field-level monitoring templates, and it also supports custom templates. The Data Quality module promptly detects changes in source data and identifies dirty data generated during the ETL process. It automatically blocks problematic tasks to prevent dirty data from propagating to downstream systems.

ETL is the process of extracting, transforming, and loading data from a source to a destination.
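As a minimal illustration of the three ETL stages, the following sketch extracts rows from a source table, transforms them by dropping dirty rows and normalizing a text column, and loads the result into a target table. It uses an in-memory SQLite database, and the table and column names are hypothetical.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create a hypothetical source table (with one dirty row) and an empty target table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE src_orders (id INTEGER, amount REAL, city TEXT)")
    conn.execute("CREATE TABLE dw_orders (id INTEGER, amount REAL, city TEXT)")
    conn.executemany(
        "INSERT INTO src_orders VALUES (?, ?, ?)",
        [(1, 10.0, " hangzhou"), (2, None, "shanghai"), (3, 7.5, "beijing ")],
    )
    return conn


def extract(conn):
    """Extract: read raw rows from the source."""
    return conn.execute("SELECT id, amount, city FROM src_orders").fetchall()


def transform(rows):
    """Transform: drop rows missing an amount or city, and normalize city names."""
    return [(i, a, c.strip().upper()) for i, a, c in rows if a is not None and c]


def load(conn, rows):
    """Load: write the cleaned rows into the target table."""
    conn.executemany("INSERT INTO dw_orders VALUES (?, ?, ?)", rows)
    conn.commit()
```

Running `load(conn, transform(extract(conn)))` on the demo database writes two cleaned rows and drops the row whose amount is missing.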

Data Quality monitors datasets and supports data tables from various engines, including MaxCompute. When offline data changes, Data Quality verifies the data and blocks the production pipeline to prevent data pollution. It also manages historical verification results, allowing you to analyze and classify data quality levels. For more information, see Data Quality.
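The blocking behavior described above can be sketched as a quality gate that runs after a table is produced and raises an exception to stop downstream tasks when a rule fails. The two rule types (row-count fluctuation against a historical baseline and a null-ratio threshold), the statistics format, and the `blocking` flag that lets some rules only warn are assumptions for illustration, not the Data Quality rule configuration.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


class QualityCheckFailed(Exception):
    """Raised to stop the pipeline so downstream tasks do not consume bad data."""


@dataclass
class Rule:
    name: str
    check: Callable[[dict], bool]  # receives table statistics, returns True if OK
    blocking: bool = True          # assumption: non-blocking rules only warn


def row_count_fluctuation(max_ratio: float) -> Callable[[dict], bool]:
    """Pass if today's row count is within max_ratio of the historical average."""
    def check(stats: dict) -> bool:
        baseline = mean(stats["history_row_counts"])
        return abs(stats["row_count"] - baseline) / baseline <= max_ratio
    return check


def null_ratio_at_most(column: str, max_ratio: float) -> Callable[[dict], bool]:
    """Pass if the share of NULLs in `column` does not exceed max_ratio."""
    def check(stats: dict) -> bool:
        return stats["null_counts"][column] / stats["row_count"] <= max_ratio
    return check


def run_gate(stats: dict, rules: list[Rule]) -> list[str]:
    """Raise on the first failed blocking rule; return names of failed warning rules."""
    warnings = []
    for rule in rules:
        if rule.check(stats):
            continue
        if rule.blocking:
            raise QualityCheckFailed(rule.name)
        warnings.append(rule.name)
    return warnings
```

Raising an exception, rather than returning a flag, makes the failure impossible for the calling task to ignore, which is what keeps dirty data from moving downstream.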

Data Quality helps you address the following issues:

Frequent database changes.

Frequent business changes.

Data definition issues.

Dirty data from business systems.

Quality issues caused by system interactions.

Issues caused by data correction.

Quality issues originating from the data warehouse itself.

Data Map: Unified management and lineage tracking

Built on data search capabilities, Data Map provides tools such as table usage instructions, data categories, data lineage, and field lineage. It helps data consumers and owners better manage data and collaborate on development.
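Field lineage records which source fields each field is computed from. A minimal sketch, assuming a hypothetical lineage map keyed by `table.field`, traces a field back to all of its upstream fields and rolls field-level edges up to table-level lineage.

```python
# Hypothetical field-level lineage: target field -> source fields it is computed from.
FIELD_LINEAGE = {
    "ads_report.gmv": {"dws_user_orders.pay_amount"},
    "dws_user_orders.pay_amount": {"dwd_orders.amount"},
    "dws_user_orders.user_id": {"dwd_orders.user_id", "ods_users.id"},
    "dwd_orders.amount": {"ods_orders.amount"},
    "dwd_orders.user_id": {"ods_orders.user_id"},
}


def upstream_fields(field: str, lineage: dict = FIELD_LINEAGE) -> set[str]:
    """Return every source field that `field` derives from, directly or transitively."""
    result: set[str] = set()
    stack = [field]
    while stack:
        for parent in lineage.get(stack.pop(), ()):
            if parent not in result:
                result.add(parent)
                stack.append(parent)
    return result


def table_lineage(lineage: dict = FIELD_LINEAGE) -> set[tuple[str, str]]:
    """Roll field-level edges up to (source_table, target_table) edges."""
    edges = set()
    for target, sources in lineage.items():
        for source in sources:
            edges.add((source.split(".")[0], target.split(".")[0]))
    return edges
```

Tracing `ads_report.gmv` upstream, for example, leads back to `ods_orders.amount`, which tells the owner of that source field which reports depend on it.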

DataService Studio: Publish APIs quickly and cost-effectively

The DataService Studio module in DataWorks is a flexible, lightweight, secure, and stable platform for building data APIs. It provides comprehensive data sharing capabilities and helps you unlock and share the value of your data through features like publication approval, access control, usage metering, and resource isolation.

Overview

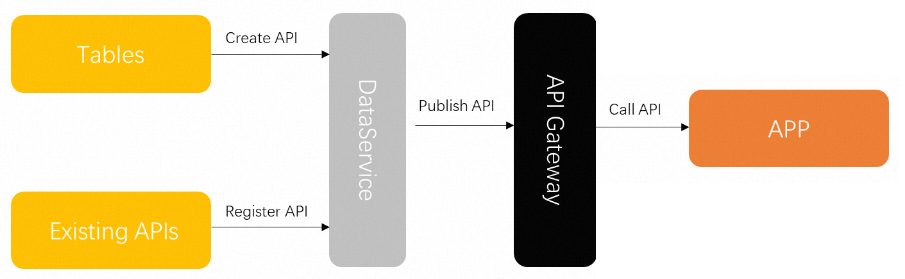

As a bridge between the data warehouse and the upper-layer applications that consume its data, DataService Studio helps you build a unified service bus for your enterprise. It unifies the creation and management of internal and external API services, closing the "last mile" gap between the data warehouse, databases, and data applications to accelerate data flow and sharing.

Generate data APIs from tables in various data sources using either a no-code or a self-service SQL mode. You can also use Function Compute to help process API request parameters and returned results.
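A SQL-mode data API can be thought of as a parameterized query plus optional processing of the request and the response. The sketch below binds request parameters as named SQL parameters, which avoids SQL injection, and accepts optional pre- and post-processing functions that stand in for the role Function Compute plays here. The query, table, and function names are hypothetical, and SQLite is used only to keep the example self-contained.

```python
import sqlite3
from typing import Callable, Optional

# Hypothetical SQL-mode API definition: a query template with a named parameter.
API_SQL = (
    "SELECT city, SUM(amount) AS gmv FROM orders "
    "WHERE dt = :dt GROUP BY city ORDER BY city"
)


def handle_request(
    conn: sqlite3.Connection,
    params: dict,
    pre: Optional[Callable[[dict], dict]] = None,
    post: Optional[Callable[[list], object]] = None,
):
    """Serve one API call: preprocess params, run the bound query, postprocess rows."""
    if pre:
        params = pre(params)  # e.g. normalize or validate request parameters
    cursor = conn.execute(API_SQL, params)  # values are bound, never concatenated
    columns = [d[0] for d in cursor.description]
    rows = [dict(zip(columns, r)) for r in cursor.fetchall()]
    return post(rows) if post else rows
```

A pre-processing function could, for instance, turn a request date of `2024-01-01` into the `20240101` format stored in the table, while a post-processing function could wrap the rows in a response envelope.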

DataService Studio uses a serverless architecture, eliminating the need to manage the underlying infrastructure. You can publish your API services to an API gateway with a single click.

Core technology and architecture

DataService Studio uses a serverless architecture. This allows you to focus on API query logic instead of managing the underlying infrastructure like the runtime environment. DataService Studio automatically provisions computing resources with elastic scaling, resulting in zero O&M costs.

Open Platform: Comprehensive open capabilities

The DataWorks Open Platform is the gateway for exposing DataWorks data and capabilities to external systems. To help you quickly integrate various application systems with DataWorks, the platform provides OpenAPI, OpenEvent, and Extensions. This enables easy data workflow management, data governance, and O&M, and allows integrated applications to respond promptly to business status changes.

Overview

The DataWorks Open Platform provides capabilities such as OpenAPI, OpenEvent, and Extensions.

OpenAPI: You can use OpenAPI to deeply integrate your own applications with DataWorks. For example, you can batch create, publish, and manage tasks to improve your big data processing efficiency and reduce manual operations.

For more information about OpenAPI, see OpenAPI.

OpenEvent: You can subscribe to system events in DataWorks to get real-time notifications and respond to changes. For example, subscribe to table change events to monitor core tables in real time, or subscribe to task change events to create a custom, real-time task monitoring dashboard.

For more information about OpenEvent, see OpenEvent.

Extensions: Extensions are service-level plug-ins that combine OpenAPI and OpenEvent. They allow you to customize workflow controls in DataWorks. For example, you can create a custom deployment control plug-in to block tasks that do not comply with your standards and requirements.

For more information about Extensions, see Extensions.
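Because an extension combines event subscription with a decision that is sent back to DataWorks, a deployment control plug-in can be sketched as an event handler that runs a set of checks on the submitted task. Everything here is hypothetical: the `pre-deploy` event type, the message fields, and the example standards (no `SELECT *`, a layer prefix in the task name, a required owner). In a real extension, the PASS or BLOCK decision would be reported back through OpenAPI; that call is left out of this sketch.

```python
import json
import re
from dataclasses import dataclass


@dataclass
class DeployRequest:
    """Hypothetical view of a task that has been submitted for deployment."""
    task_name: str
    owner: str
    code: str


def check_no_select_star(req: DeployRequest):
    if re.search(r"\bselect\s+\*", req.code, re.IGNORECASE):
        return "SELECT * is not allowed"
    return None


def check_layer_prefix(req: DeployRequest):
    if not re.match(r"(ods|dwd|dws|ads)_", req.task_name):
        return "task name must start with a layer prefix (ods_, dwd_, dws_, ads_)"
    return None


def check_owner(req: DeployRequest):
    return None if req.owner else "an owner is required"


CHECKS = [check_no_select_star, check_layer_prefix, check_owner]


def review(req: DeployRequest):
    """Run every check; any failure blocks the deployment."""
    errors = []
    for check in CHECKS:
        message = check(req)
        if message:
            errors.append(message)
    return ("BLOCK", errors) if errors else ("PASS", [])


def on_event(raw_message: str):
    """Entry point for a hypothetical pre-deployment event delivered as JSON."""
    event = json.loads(raw_message)
    if event.get("type") != "pre-deploy":
        return ("IGNORE", [])
    return review(DeployRequest(**event["task"]))
```

Collecting every failed check, instead of stopping at the first one, lets the developer fix all violations before resubmitting.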

Use cases

The DataWorks Open Platform provides comprehensive open capabilities for deep system integration, automated operations, workflow definition, and business monitoring. We welcome users and partners to build industry-specific and scenario-based data applications and plug-ins on the DataWorks Open Platform.

Migration Assistant and cloud migration services

The DataWorks Migration Assistant helps you migrate jobs from open-source scheduling engines to DataWorks. It supports cross-cloud, cross-region, and cross-account job migration, allowing you to quickly clone and deploy DataWorks jobs. In addition, the DataWorks team, in collaboration with big data expert service teams, offers cloud migration services to help you quickly move your data and tasks to the cloud.

Overview

The main functions of Migration Assistant and cloud migration services include:

Task migration to the cloud: Migrate jobs from open-source scheduling engines to DataWorks.

DataWorks migration: Migrate development assets within the DataWorks ecosystem.

Use cases

These services are ideal for the following scenarios:

Task migration to the cloud: Migrate jobs from open-source scheduling engines to DataWorks.

Task backup: Use Migration Assistant to regularly back up task code to minimize losses from accidental project deletion.

Rapid business replication: Abstract common business logic and use the export/import feature of Migration Assistant to quickly replicate it.

Quick creation of test environments: Fully replicate your business code with Migration Assistant and change the data input from production to test data to quickly set up a test environment.

Cross-cloud development: Import and export between DataWorks on the public cloud and DataWorks in a private cloud to enable collaborative development.