Offline database migration lets you synchronize data from a data center or a self-built database on an ECS instance to a big data compute service. Supported services include MaxCompute, Hive, and TDH Inceptor. This topic describes how to create and configure a database migration task.

Prerequisites

You must create the data sources required for the migration. Database migration supports data from various source databases, such as MySQL, Microsoft SQL Server, Oracle, and OceanBase. For more information, see Supported data sources for database migration.

Function introduction

Offline database migration improves efficiency and reduces configuration costs. Instead of creating offline pipelines one at a time, you can configure multiple offline pipelines in a single batch operation, which lets you synchronize multiple database tables at once.

Procedure

On the Dataphin home page, choose Develop > Data Integration from the top menu bar.

In the top menu bar, select the desired project.

In the navigation pane on the left, choose Database Migration > Offline Database Migration.

On the Offline Database Migration page, configure the parameters as described in the following steps.

Configure basic information.

Database Migration Folder Name: The name can be up to 256 characters long. It cannot contain vertical bars (|), forward slashes (/), backslashes (\), colons (:), question marks (?), angle brackets (<>), asterisks (*), or double quotation marks (").
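The folder naming rules above can be checked before you enter a name in the console. The following Python sketch is illustrative only: `is_valid_folder_name` is a hypothetical helper, not a Dataphin API, and it assumes that the 256-character limit counts characters rather than bytes and that an empty name is rejected.

```python
# Characters that the Database Migration Folder Name field rejects.
FORBIDDEN_CHARS = set('|/\\:?<>*"')
MAX_LENGTH = 256  # assumption: the limit counts characters, not bytes


def is_valid_folder_name(name: str) -> bool:
    """Return True if the name respects the documented length and character rules."""
    if not name or len(name) > MAX_LENGTH:  # assumption: an empty name is invalid
        return False
    return not any(ch in FORBIDDEN_CHARS for ch in name)


print(is_valid_folder_name("mysql_to_maxcompute_2023"))  # True
print(is_valid_folder_name("sales/orders"))              # False
```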

Configure data source information.

Synchronization Source

Parameter

Description

Data Source Type

Select the type of the source data source. For more information about supported data sources and how to create them, see Supported data sources for database migration.

Oracle data source

Schema: Select the schema where the table resides. You can select tables across different schemas. If you do not specify a schema, the schema configured in the data source is used by default.

File encoding: If you select an Oracle data source, you must select an Encoding. Supported options are UTF-8, GBK, and ISO-8859-1.

Microsoft SQL Server, PostgreSQL, Amazon Redshift, Amazon RDS for PostgreSQL, Amazon RDS for MySQL, Amazon RDS for SQL Server, Amazon RDS for Oracle, Amazon RDS for DB2, PolarDB-X 2.0, and GBase 8C data sources

Schema: Select the schema where the table resides. You can select tables across different schemas. If you do not specify a schema, the schema configured in the data source is used by default.

DolphinDB data source

Database: Select the database where the table resides. If you leave this blank, the database specified during data source registration is used.

Hive data source

If you select a Hive data source, configure the following parameters.

File encoding: Supported values are UTF-8 and GBK.

ORC table compression format: Supported values are zlib, hadoop-snappy, lz4, and none.

Text table compression format: Supported values are gzip, bzip2, lzo, lzo_deflate, hadoop_snappy, framing-snappy, zip, and zlib.

Parquet table compression format: Supported values are hadoop_snappy, gzip, and lzo.

Field delimiter: The delimiter used to write data to the destination table. If unspecified, the default is \u0001.

Time Zone

Select the time zone that matches your database configuration. The default time zone for data integration in China is GMT+8. This time zone does not observe daylight saving time (DST). If your database uses a time zone that observes DST, such as Asia/Shanghai, and the synchronized data falls within a DST period, select that same time zone, such as Asia/Shanghai. Otherwise, the synchronized data differs from the database data by one hour.

Supported time zones include GMT+1, GMT+2, GMT+3, GMT+5:30, GMT+8, GMT+9, GMT+10, GMT-5, GMT-6, GMT-8, Africa/Cairo, America/Chicago, America/Denver, America/Los_Angeles, America/New_York, America/Sao_Paulo, Asia/Bangkok, Asia/Dubai, Asia/Kolkata, Asia/Shanghai, Asia/Tokyo, Atlantic/Azores, Australia/Sydney, Europe/Berlin, Europe/London, Europe/Moscow, Europe/Paris, Pacific/Auckland, and Pacific/Honolulu.
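To show where the one-hour difference comes from, the following Python sketch interprets the same stored wall-clock value with two offsets. It uses fixed offsets from the standard `datetime` module instead of a time zone database. The UTC+9 offset is an assumption that stands in for a DST-observing zone whose standard offset is UTC+8, during its DST period.

```python
from datetime import datetime, timedelta, timezone

# Assumption: while DST is in effect, the database's zone runs at UTC+9
# (standard offset UTC+8 plus one hour). GMT+8 is a fixed offset with no DST.
DB_ZONE_DURING_DST = timezone(timedelta(hours=9))
FIXED_GMT_PLUS_8 = timezone(timedelta(hours=8))


def to_utc(wall_clock: datetime, tz: timezone) -> datetime:
    """Interpret a naive wall-clock value in the given zone and convert it to UTC."""
    return wall_clock.replace(tzinfo=tz).astimezone(timezone.utc)


# A timestamp that the database stored during the DST period.
stored = datetime(1990, 7, 1, 12, 0)

correct = to_utc(stored, DB_ZONE_DURING_DST)  # interpreted with the DST-aware offset
shifted = to_utc(stored, FIXED_GMT_PLUS_8)    # interpreted with fixed GMT+8

print(correct.isoformat())  # 1990-07-01T03:00:00+00:00
print(shifted - correct)    # 1:00:00
```

The two results describe instants one hour apart, which is the discrepancy the time zone setting is meant to prevent.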

Data Source

Select the source data source. If the required data source is not available, click New Data Source to create one.

Batch Read Count

If the source data source is Oracle, Microsoft SQL Server, OceanBase, IBM DB2, PostgreSQL, Amazon Redshift, Amazon RDS for PostgreSQL, Amazon RDS for MySQL, Amazon RDS for SQL Server, Amazon RDS for Oracle, Amazon RDS for DB2, DolphinDB, or GBase 8C, you can configure the number of records to read in a batch. The default is 1024.
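Batch Read Count works much like a fetch size: the reader pulls records from the source in groups instead of one at a time. The following Python sketch uses `sqlite3` and `Cursor.fetchmany` as a stand-in source database. The `orders` table is hypothetical, and the sketch illustrates the batching idea only, not how Dataphin reads data internally.

```python
import sqlite3

BATCH_READ_COUNT = 1024  # the documented default for Batch Read Count

# Hypothetical source table held in an in-memory SQLite database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
conn.executemany("INSERT INTO orders (id, amount) VALUES (?, ?)",
                 [(i, i * 1.5) for i in range(1, 2501)])

cursor = conn.execute("SELECT id, amount FROM orders ORDER BY id")
batch_sizes = []
while True:
    rows = cursor.fetchmany(BATCH_READ_COUNT)  # read at most 1024 records per round trip
    if not rows:
        break
    batch_sizes.append(len(rows))

print(batch_sizes)  # [1024, 1024, 452]
conn.close()
```

A larger batch means fewer round trips to the source but more records held in memory at once.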

Synchronization Target

Parameter

Description

Data Source Type

Select the type of the destination data source. For more information about supported data sources and how to create them, see Supported data sources for database migration.

Note: When you synchronize data to an AnalyticDB for PostgreSQL data source, the system creates a daily partition for the destination table. If you need other partitions, open a single pipeline after the pipelines are generated and modify its partition preparation statements.

Data Source

Select the destination data source. If the required data source is not available, click New Data Source to create one. For more information about supported data sources and how to create them, see Supported data sources for database migration.

TDH Inceptor and ArgoDB destination data source types.

Configure the storage format.

TDH Inceptor: Supported storage formats are PARQUET, ORC, and TEXTFILE.

ArgoDB: Supported storage formats are PARQUET, ORC, TEXTFILE, and HOLODESK.

Hive destination data source type.

The required configuration items depend on the selected data lake table format: none, Hudi, Iceberg, or Paimon.

Note: You can select Iceberg or Hudi as the data lake table format only if the selected data source or the compute engine of the current project has the data lake table format feature enabled and Iceberg or Hudi is selected.

Data lake table format: none (default)

Is foreign table: Turn on this switch to specify the table as a foreign table. This switch is turned off by default.

Storage format: Supported storage formats are PARQUET, ORC, and TEXTFILE.

File encoding: If the storage format for Hive is ORC, you can configure the file encoding. Supported values are UTF-8 and GBK.

Compression format:

ORC storage format: Supported values are zlib, hadoop-snappy, and none.

PARQUET storage format: Supported values are gzip and hadoop-snappy.

TEXTFILE storage format: Supported values are gzip, bzip2, lzo, lzo_deflate, hadoop-snappy, and zlib.

Performance configuration: If the Hive storage format is ORC, you can configure performance settings. If the output table is in ORC format and has many fields, you can increase this setting to improve write performance when sufficient memory is available. If memory is insufficient, you can decrease it to reduce garbage collection (GC) time and improve write performance. The default value is {"hive.exec.orc.default.buffer.size":16384}, in bytes. Do not set this value to more than 262144 bytes (256 KB).

Field delimiter: For the TEXTFILE storage format, you can configure a field delimiter. The system uses the specified delimiter when writing to the destination table. If not specified, the default is \u0001.

Field delimiter handling: For the TEXTFILE storage format, you can configure how to handle field delimiters. If the data contains the default or a custom field delimiter, select a handling policy to prevent write errors. Valid values are Keep, Remove, and Replace with.

Row delimiter handling: For the TEXTFILE storage format, you can configure row delimiter handling. If your data contains line feeds, such as \r\n or \n, you can select a handling policy to prevent data write errors. The policies include Keep, Remove, and Replace with.

Development Data Source Location: For projects in Dev-Prod mode, you can specify the Location for table storage in the CREATE TABLE statement of the development data source. For example, hdfs://path_to_your_external_table.

Production Data Source Location: For projects in Dev-Prod mode, you can specify the Location for table storage in the CREATE TABLE statement of the production data source. For example, hdfs://path_to_your_external_table.

Note: For projects in Basic mode, you only need to specify one data source Location.
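The ORC performance configuration is a small JSON object. As a rough pre-check before you paste a value into the console, the following Python sketch parses the setting and enforces the documented 262144-byte ceiling. The function name `orc_buffer_size` is hypothetical, and falling back to 16384 when the key is absent is an assumption.

```python
import json

MAX_BUFFER_BYTES = 262144  # documented upper bound: 256 KB
DEFAULT_CONFIG = '{"hive.exec.orc.default.buffer.size":16384}'


def orc_buffer_size(config_text: str) -> int:
    """Parse the performance configuration and return the ORC buffer size in bytes."""
    config = json.loads(config_text)
    # Assumption: use the documented default when the key is missing.
    size = config.get("hive.exec.orc.default.buffer.size", 16384)
    if not isinstance(size, int) or size <= 0:
        raise ValueError("buffer size must be a positive integer number of bytes")
    if size > MAX_BUFFER_BYTES:
        raise ValueError(f"buffer size {size} exceeds {MAX_BUFFER_BYTES} bytes (256 KB)")
    return size


print(orc_buffer_size(DEFAULT_CONFIG))                                 # 16384
print(orc_buffer_size('{"hive.exec.orc.default.buffer.size":65536}'))  # 65536
```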

Data lake table format: Hudi

Execution engine: If Spark is enabled for the selected data source, you can select Spark or Hive. If Spark is not enabled, you can only select Hive.

Hudi table type: You can select MOR (merge on read) or COW (copy on write). The default is MOR (merge on read).

Extended properties: Enter the configuration properties supported by Hudi in the k=v format.
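The k=v format can be parsed with a few lines of code, which is handy for checking a property list before you paste it. The following Python sketch assumes one property per line and splits on the first equals sign only; `parse_extended_properties` is a hypothetical helper. The sample keys are standard Hudi write options used purely for illustration; whether a given key is honored depends on Dataphin and the Hudi version.

```python
def parse_extended_properties(text: str) -> dict[str, str]:
    """Parse one k=v pair per line; blank lines are skipped."""
    props: dict[str, str] = {}
    for line_no, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line:
            continue
        key, sep, value = line.partition("=")  # split on the first '=' only
        if not sep or not key.strip():
            raise ValueError(f"line {line_no} is not in k=v format: {raw!r}")
        props[key.strip()] = value.strip()
    return props


example = """
hoodie.datasource.write.recordkey.field=id
hoodie.datasource.write.precombine.field=update_time
"""
print(parse_extended_properties(example))
```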

Data lake table format: Iceberg

Execution engine: If the selected data source is configured with Spark, Spark is displayed and selected by default. Otherwise, only Hive is displayed and selected.

Is foreign table: Turn on this switch to specify the table as a foreign table. This switch is turned off by default.

Storage format: Supported storage formats are PARQUET, ORC, and TEXTFILE.

File encoding: If the storage format for Hive is ORC, you can configure the file encoding. Supported values are UTF-8 and GBK.

Compression format:

ORC storage format: Supported values are zlib, hadoop-snappy, and none.

PARQUET storage format: Supported values are gzip and hadoop-snappy.

TEXTFILE storage format: Supported values are gzip, bzip2, lzo, lzo_deflate, hadoop-snappy, and zlib.

Performance configuration: If the Hive storage format is ORC, you can configure this performance setting. If the output table uses the ORC format and has many fields, you can increase this setting to improve write performance when sufficient memory is available. If memory is insufficient, you can decrease the setting to reduce GC time and improve write performance. The default value is {"hive.exec.orc.default.buffer.size":16384}, in bytes. Do not set a value greater than 262144 bytes (256 KB).

Field delimiter: For the TEXTFILE storage format, you can configure the field delimiter. The system uses the specified delimiter when writing to the destination table. If you do not specify a delimiter, the default is \u0001.

Field delimiter handling: For the TEXTFILE storage format, you can configure how to handle field delimiters. If the data contains the default or a custom field delimiter, select a handling policy to prevent write errors. Valid values are Keep, Remove, and Replace with.

Row delimiter handling: For the TEXTFILE storage format, you can configure row delimiter handling. If the data contains line feeds (\r\n or \n), you can select a handling policy to prevent data write errors. Options include Keep, Remove, and Replace with.

Development Data Source Location: For projects in Dev-Prod mode, you can specify the Location for table storage in the CREATE TABLE statement of the development data source. For example, hdfs://path_to_your_external_table.

Production Data Source Location: For projects in Dev-Prod mode, you can specify the Location for table storage in the CREATE TABLE statement of the production data source. For example, hdfs://path_to_your_external_table.

Note: For projects in Basic mode, you only need to specify one data source Location.
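The Keep, Remove, and Replace with policies for field and row delimiters can be pictured as string substitutions applied to each TEXTFILE value before it is written. The following Python sketch is an assumption-based model, not Dataphin's implementation: the lowercase policy names, the single replacement string shared by both policies, and handling \r\n before a bare \n are illustrative choices.

```python
ROW_DELIMITERS = ("\r\n", "\n")  # handle \r\n before a bare \n (assumed order)


def apply_policy(value: str, targets: tuple, policy: str, replacement: str) -> str:
    """Apply a keep / remove / replace policy to every target substring."""
    if policy == "keep":
        return value
    if policy not in ("remove", "replace"):
        raise ValueError(f"unknown policy: {policy}")
    substitute = "" if policy == "remove" else replacement
    for target in targets:
        value = value.replace(target, substitute)
    return value


def clean_text_field(value: str, field_delimiter: str = "\u0001",
                     field_policy: str = "keep", row_policy: str = "keep",
                     replacement: str = " ") -> str:
    """Hypothetical helper: handle field delimiters first, then row delimiters."""
    value = apply_policy(value, (field_delimiter,), field_policy, replacement)
    return apply_policy(value, ROW_DELIMITERS, row_policy, replacement)


raw = "line one\r\nline two\u0001tail"
print(repr(clean_text_field(raw, field_policy="remove", row_policy="replace")))
# 'line one line twotail'
```

Without such handling, an embedded \u0001 would split one value into two columns, and an embedded line feed would split one record into two rows.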

Data lake table format: Paimon

Execution engine: Currently, only Spark is supported.

Paimon table type: You can select MOR (merge on read), COW (copy on write), or MOW (merge on write). The default is MOR (merge on read).

Extension Properties: Enter Paimon-supported configuration properties in the k=v format.

AnalyticDB for PostgreSQL and GaussDB (DWS) destination data source types.

Configure the following parameters.

Important: The conflict resolution policy is effective only in Copy mode when the AnalyticDB for PostgreSQL kernel version is later than 4.3. If the kernel version is 4.3 or earlier, or if the version is unknown, select a policy with caution to prevent task failures.

Conflict resolution policy: The copy loading policy supports a conflict resolution policy. Valid values are Report error on conflict and Overwrite on conflict.

Schema: Select the schema where the table resides. You can select tables across different schemas. If you do not specify a schema, the schema configured in the data source is used by default.

Lindorm destination data source type.

Configure the following parameters.

Storage format: Supported storage formats are PARQUET, ORC, TEXTFILE, and ICEBERG.

Compression format: Different storage formats support different compression formats.

ORC storage format: Supported values are zlib, hadoop-snappy, lz4, and none.

PARQUET storage format: Supported values are gzip and hadoop-snappy.

TEXTFILE storage format: Supported values are gzip, bzip2, lzo, lzo_deflate, hadoop-snappy, and zlib.

Development Data Source Location: For projects in Dev-Prod mode, you can specify the root path Location for table storage in the CREATE TABLE statements of the development environment, such as /user/hive/warehouse/xxx.db.

Production Data Source Location: For projects in Dev-Prod mode, you can specify the root path Location for table storage in the CREATE TABLE statement for the production environment, such as /user/hive/warehouse/xxx.db.

MaxCompute data source

MaxCompute Table Type: You can select Standard Table or Delta Table.

Databricks data source

Schema: Select the schema where the table resides. You can select tables across different schemas. If you do not specify a schema, the schema configured in the data source is used by default.

Loading Policy

For Hive (when the data lake table format is Hudi or Paimon), TDH Inceptor, ArgoDB, StarRocks, Oracle, MaxCompute, and Lindorm (compute engine) destination data sources, the following loading policies are supported: Overwrite Data, Append Data, and Update Data.

Overwrite Data: If the data to be synchronized exists, the existing data is deleted before the new data is written.

Append Data: If the data to be synchronized exists, the existing data is not overwritten. The new data is appended.

Update Data: Data is updated based on the primary key. If the primary key does not exist, the new data is inserted.

Note: If you set the MaxCompute table type to Standard table, you can select Append Data or Overwrite Data as the loading policy. If you set the MaxCompute table type to Delta table, you can select Update Data or Overwrite Data.
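The three loading policies differ only in what happens to rows that already exist. The following Python sketch models a destination table as a list of row dictionaries. It is a conceptual illustration, not Dataphin code: the `load` function and the `id` primary key are hypothetical, and it assumes Overwrite Data replaces the whole target being written.

```python
def load(existing: list[dict], incoming: list[dict], policy: str, key: str = "id") -> list[dict]:
    """Apply a loading policy to a destination table modeled as a list of rows."""
    if policy == "overwrite":
        # Overwrite Data: existing data is deleted before the new data is written.
        return list(incoming)
    if policy == "append":
        # Append Data: existing data is kept and the new data is appended.
        return existing + incoming
    if policy == "update":
        # Update Data: match on the primary key; update if present, insert otherwise.
        merged = {row[key]: row for row in existing}
        for row in incoming:
            merged[row[key]] = row
        return list(merged.values())
    raise ValueError(f"unknown policy: {policy}")


existing = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}]
incoming = [{"id": 2, "v": "B"}, {"id": 3, "v": "c"}]
print(load(existing, incoming, "overwrite"))  # 2 rows: ids 2 and 3
print(load(existing, incoming, "append"))     # 4 rows, id 2 appears twice
print(load(existing, incoming, "update"))     # 3 rows, id 2 updated to "B"
```

The append result shows why Append Data can create duplicate keys, while Update Data needs a primary key to decide between updating and inserting.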

For Hive destination data sources (when the data lake table format is not selected), the supported loading policies are Overwrite Only Data Written By The Integration Task, Append Data, and Overwrite All Data.

For AnalyticDB for PostgreSQL and GaussDB (DWS) destination data sources, the following loading policies are supported: insert and copy.

insert: Data is synchronized record by record. This policy is suitable for small data volumes and can improve data accuracy and integrity.

copy: Data is synchronized using files. This policy is suitable for large data volumes and can improve synchronization speed.

Batch Write Data Volume

For Hive (when the data lake table format is Hudi), AnalyticDB for PostgreSQL, and StarRocks destination data sources, you can configure the batch write data volume. This is the amount of data written at one time. You can also set the batch write count. Data is written when either of the two thresholds is reached.

Batch Write Count

For Hive (when the data lake table format is Hudi), AnalyticDB for PostgreSQL, and StarRocks destination data sources, you can configure the batch write count. This is the number of records written at one time.
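Because data is written as soon as either the batch write data volume or the batch write count is reached, the two settings act as a combined flush rule. The following Python sketch models that rule with a hypothetical `BatchWriter` class. The threshold values and the use of bytes as the volume unit are assumptions for illustration.

```python
class BatchWriter:
    """Buffer records and flush when either the volume or the count threshold is hit."""

    def __init__(self, max_bytes: int, max_count: int):
        self.max_bytes = max_bytes    # assumed unit: bytes
        self.max_count = max_count
        self.buffer: list[bytes] = []
        self.buffered_bytes = 0
        self.flushed_batches: list[int] = []  # record count of each flush, for inspection

    def write(self, record: bytes) -> None:
        self.buffer.append(record)
        self.buffered_bytes += len(record)
        if self.buffered_bytes >= self.max_bytes or len(self.buffer) >= self.max_count:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            # A real writer would send the batch to the destination here.
            self.flushed_batches.append(len(self.buffer))
            self.buffer = []
            self.buffered_bytes = 0


writer = BatchWriter(max_bytes=1000, max_count=4)
for size in (100, 100, 100, 100, 600, 500, 50):
    writer.write(b"x" * size)
writer.flush()  # write whatever is left at the end of the task
print(writer.flushed_batches)  # [4, 2, 1]
```

The first batch is flushed by the count threshold (4 records), the second by the volume threshold (1100 bytes), and the last by the final flush.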

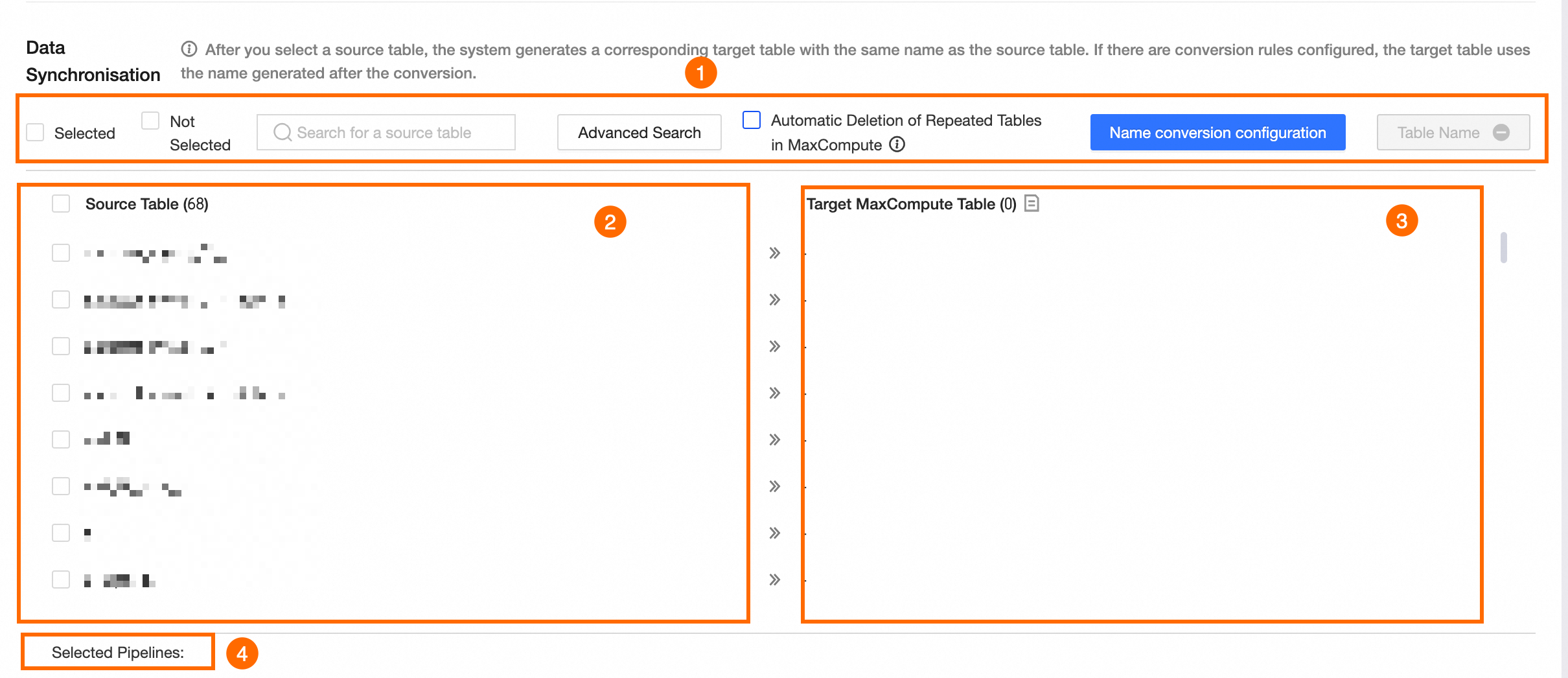

Configure data synchronization.

If the synchronization source is not FTP.

After you select the source tables, the corresponding destination tables are generated. By default, the names of the destination tables are the same as the names of the source tables. If you have configured name conversion rules, the converted names are used.

Note: If the source is an external data source for which a collection task has not been configured, you cannot retrieve its metadata. In this case, the metadata information for data synchronization is empty. You can go to the Metadata Center to configure a collection task.

Section

Description

① Operation area

Selected, Not selected: Filter source tables by their selection status.

Search source table: Search for source tables by name. The search is case-sensitive.

Advanced search: The page can display up to 10,000 tables. The advanced search feature lets you search for tables by name across all tables in the database. Click Advanced Search. In the Advanced Search dialog box, configure the parameters.

Configure the search method.

You can search for source tables using Exact table name input or Fuzzy search.

Exact table name input: Enter table names in batches in the Search Content field. Table names are separated by the configured separator, which is \n (a line feed) by default. You can define your own separator.

Fuzzy search: In the Search Content field, enter keywords from the table names. The system performs a fuzzy search based on the keywords.

Enter search content.

The search content varies based on the search method.

Exact table name input: Enter table names in batches to find tables. Use the configured separator to separate the table names.

Fuzzy search: Enter keywords from the table names.

Search results.

After you configure the Search method and Search content, click Search to view the results. In the results table, select the tables that you want to manage and select an operation type. Valid values are Batch select and Batch deselect. Click OK. The source tables are selected or deselected based on the operation type.
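The two search methods can be modeled as an exact set lookup and a substring match. The following Python sketch is a hypothetical model, not the console's implementation. It assumes that exact input is split on the configured separator with surrounding whitespace ignored, and that fuzzy search is a case-sensitive substring match, like the basic source table search.

```python
def exact_search(all_tables: list[str], search_content: str, separator: str = "\n") -> list[str]:
    """Return the tables whose names exactly match one of the separated names."""
    wanted = {name.strip() for name in search_content.split(separator) if name.strip()}
    return [table for table in all_tables if table in wanted]


def fuzzy_search(all_tables: list[str], keyword: str) -> list[str]:
    """Return the tables whose names contain the keyword (assumed case-sensitive)."""
    return [table for table in all_tables if keyword in table]


tables = ["orders", "order_items", "customers", "ORDERS_archive"]
print(exact_search(tables, "orders\ncustomers"))                # ['orders', 'customers']
print(exact_search(tables, "orders,customers", separator=","))  # ['orders', 'customers']
print(fuzzy_search(tables, "order"))                            # ['orders', 'order_items']
```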

Automatically delete tables with the same name in the data source: If you select this option, Dataphin first deletes existing tables in the data source that have the same names as the tables generated by the database migration. Then, Dataphin automatically re-creates the tables.

Important: If the data source is a project data source, tables with the same names in both the production and development environments are deleted. Use this option with caution.

Name conversion configuration: Optional. The name conversion configuration lets you replace or filter source table names and field names during synchronization.

Click Name Conversion Configuration.

On the Name Conversion Configuration page, configure the conversion rules.

Table Name Conversion Rules: Click Create Rule. In the rule item, enter the Source table string to replace and Destination table replacement string. For example, to replace the table name datawork with dataphin, set the string to replace to work and the replacement string to phin.

Table Name Prefix: In the Table Name Prefix text box, enter the prefix for the destination table name. During synchronization, the prefix is automatically added to the destination table name. For example, if the table name prefix is pre_ and the table name is dataphin, the generated destination table name is pre_dataphin.

Table Name Suffix: In the Table Name Suffix text box, enter the suffix for the destination table name. During synchronization, the suffix is automatically added to the destination table name. For example, if the table name suffix is _prod, the table name is dataphin, and the prefix pre_ from the previous example is also configured, the generated destination table name is pre_dataphin_prod.

Field Name Rules: Click Add Rule. In the rule item, enter the Source field replacement string and Destination field replacement string. For example, to replace the field name datawork with dataphin, set the string to replace to work and the replacement string to phin.

After you complete the configuration, click OK. The Corresponding destination database table column displays the converted destination table names.

Note: English letters in replacement strings and table name prefixes and suffixes are automatically converted to lowercase.
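The table name conversion rules can be reproduced with plain string operations, which helps when you want to predict destination names before you open the console. The following Python sketch is a hypothetical model. It assumes that replacement rules are applied in order before the prefix and suffix are added, and that only the replacement strings, prefix, and suffix are lowercased, not the letters of the source table name itself.

```python
def convert_table_name(source_name: str, rules=(), prefix: str = "", suffix: str = "") -> str:
    """Apply replacement rules in order, then add the prefix and suffix."""
    name = source_name
    for to_replace, replacement in rules:
        # Assumption: letters in the replacement string are lowercased, per the Note.
        name = name.replace(to_replace, replacement.lower())
    return prefix.lower() + name + suffix.lower()


print(convert_table_name("datawork", rules=[("work", "phin")]))                # dataphin
print(convert_table_name("dataphin", prefix="pre_", suffix="_prod"))           # pre_dataphin_prod
print(convert_table_name("datawork", rules=[("work", "PHIN")], prefix="PRE_"))  # pre_dataphin
```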

Validate table name: Check whether the current destination table names exist in the destination database.

② Source Table

In the Source Table list, select the source tables to synchronize.

③ Corresponding Destination Database Table

After you select a Source Table, the corresponding destination database table is generated. By default, the name of the destination table is the same as the name of the source table. If you have configured name conversion rules, the converted name is used.

Note: Destination table names can contain only letters, digits, and underscores (_). If a source table name contains other characters, configure a table name conversion rule.
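Before you start a large migration, you can list the source tables that will need a conversion rule. The following Python sketch flags names that contain anything other than letters, digits, and underscores. It assumes "letters" means ASCII letters, and `invalid_table_names` is a hypothetical helper.

```python
import re

# Assumption: "letters" means ASCII letters A-Z and a-z.
VALID_TABLE_NAME = re.compile(r"[A-Za-z0-9_]+")


def invalid_table_names(names: list[str]) -> list[str]:
    """Return the names that need a table name conversion rule."""
    return [name for name in names if not VALID_TABLE_NAME.fullmatch(name)]


source_tables = ["orders", "order-items", "t_2023", "sales.2023"]
print(invalid_table_names(source_tables))  # ['order-items', 'sales.2023']
```

For example, a rule that replaces - with _ would turn order-items into a valid destination name.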

④ Pipeline statistics

The number of selected pipelines.

If the synchronization source is FTP.

Click Download Excel Template. Fill out the template according to the instructions and upload the file. To prevent parsing failures, you must follow the specified template format.

Note: You can upload a single .xlsx file or a compressed ZIP package that contains one or more .xlsx files. The file size must be less than 50 MB.
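To avoid a rejected upload, you can check the file locally against the documented rules. The following Python sketch uses the standard `zipfile` module to confirm that a ZIP package contains only .xlsx files. It is hypothetical: `check_upload` is not a Dataphin API, it checks file names only (not whether the workbooks follow the template), and it assumes 1 MB = 1024 × 1024 bytes.

```python
import io
import zipfile

MAX_UPLOAD_BYTES = 50 * 1024 * 1024  # "less than 50 MB"; assumes 1 MB = 1024 * 1024 bytes


def check_upload(filename: str, data: bytes) -> list[str]:
    """Return a list of problems; an empty list means the upload looks acceptable."""
    problems = []
    if len(data) >= MAX_UPLOAD_BYTES:
        problems.append("file must be smaller than 50 MB")
    lower = filename.lower()
    if lower.endswith(".xlsx"):
        return problems
    if not lower.endswith(".zip"):
        return problems + ["only .xlsx files or .zip packages are accepted"]
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        members = [n for n in archive.namelist() if not n.endswith("/")]  # skip directories
    if not members:
        problems.append("the .zip package contains no files")
    problems += [f"{n} is not an .xlsx file" for n in members if not n.lower().endswith(".xlsx")]
    return problems


# Build a small ZIP package in memory to exercise the check.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as archive:
    archive.writestr("tables_part1.xlsx", b"placeholder")
    archive.writestr("readme.txt", b"notes")
print(check_upload("templates.zip", buf.getvalue()))  # ['readme.txt is not an .xlsx file']
```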

After the file is uploaded, click Parse File.

Parameter

Description

① Operation area

Search source file: Search for source files by name.

View only failed parsing tasks: The list displays only the tasks that failed to be parsed.

Automatically delete tables with the same name in the data source: If you select this option, existing tables in the data source that have the same names as the destination tables generated by the database migration are automatically deleted. Then, the tables are automatically re-created.

Important: If the data source is a project data source, tables with the same names in both the production and development environments are deleted. Use this option with caution.

Validate table name: Check whether the current destination table names exist in the destination database.

② Source File and Corresponding Destination Table

Source file: In the Source File list, select the source files to synchronize.

Corresponding Destination Table: After the file is parsed, the corresponding destination database tables are generated based on the template file.

③ Pipeline statistics

The number of selected pipelines.

Configure task names

For Generation Method, select a method to generate names for the offline database migration tasks. You can select System Default or Custom Rule.

Parameter

Description

Generation Method

System Default

Task names are generated using the default naming convention of the system.

Custom Rule

Important: Before you configure a custom task naming rule, you must select the synchronization source and destination data sources. Otherwise, you cannot configure a custom task naming rule.

Default rule: After you select the source and destination data sources and choose Custom Rule as the generation method for the task name, the system generates a default rule in the task name rule text box. The default rule for the task name is ${source_data_source_type}2${target_data_source_type}_${source_table_name}.

For example, if the source data source type for the current database migration task is MySQL, the destination data source type is Oracle, and the name of the first table in the source tables is source_table_name1, the default node naming convention is MySQL2Oracle_${source_table_name} and the node name is previewed as MySQL2Oracle_source_table_name1.

Note: This default rule is different from the system default generation method.

Custom rule: In the Task Naming Rule text box on the left, enter a naming rule. You can delete the existing default rule or modify it.

The name cannot be more than 256 characters long. It cannot contain vertical bars (|), forward slashes (/), backslashes (\), colons (:), question marks (?), angle brackets (<>), asterisks (*), or double quotation marks ("). You can click a valid metadata name in the Available Metadata list on the right to copy it.

Note: After you add metadata to the naming rule, the metadata values in the task name preview are all taken from the information of the first table in the source table list.
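The ${name} placeholders in a task naming rule happen to use the same syntax as Python's `string.Template`, so the preview behavior can be reproduced in a few lines. The following sketch is an illustration under that assumption, not Dataphin's renderer; `preview_task_name` is hypothetical. Like the console preview, it fills the placeholders from the first table in the source table list.

```python
from string import Template

DEFAULT_RULE = "${source_data_source_type}2${target_data_source_type}_${source_table_name}"


def preview_task_name(rule: str, source_tables: list[str], source_type: str, target_type: str) -> str:
    """Render a naming rule using the first source table, as the preview does."""
    first_table = source_tables[0]
    # Template.substitute raises KeyError if the rule uses a placeholder not supplied here.
    return Template(rule).substitute(
        source_data_source_type=source_type,
        target_data_source_type=target_type,
        source_table_name=first_table,
    )


tables = ["source_table_name1", "source_table_name2"]
print(preview_task_name(DEFAULT_RULE, tables, "MySQL", "Oracle"))
# MySQL2Oracle_source_table_name1
```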

Configure the synchronization method and data filtering.

Parameter

Description

Synchronization Method

Select a synchronization method. Valid values are Daily Sync, One-time Sync, and Daily Sync + One-time Sync.

Daily Sync: The system generates auto triggered tasks for the integration pipeline that are scheduled to run daily. This method is typically used to synchronize daily incremental or full data.

One-time Sync: The system generates one-time tasks for the integration pipeline. This method is typically used to synchronize historical full data.

Daily Sync + One-time Sync: The system generates both auto triggered tasks that are scheduled to run daily and one-time tasks. This method is typically used for scenarios where a one-time full data synchronization is followed by daily incremental or full data synchronization.

Note: If the source database is FTP, Daily Sync + One-time Sync is not supported.

Create destination table as

Select the type of destination table to create. Valid values are partitioned table and non-partitioned table. The rules for creating destination tables vary based on the synchronization method.

Daily Sync: If you select partitioned table, the destination table is created as a partitioned table, and data is written to the ds=${bizdate} partition by default. If you select non-partitioned table, the destination table is created as a non-partitioned table.

One-time Sync: If you select partitioned table, the destination table is created as a partitioned table. You must configure the One-time sync write partition parameter, which supports constants or partition parameters. For example, you can use a constant such as 20230330 or a partition parameter such as ds=${bizdate}. If you select non-partitioned table, the destination table is created as a non-partitioned table.

Daily Sync + One-time Sync: The default is partitioned table, which cannot be changed. You must configure the One-time sync write partition parameter, which supports constants or partition parameters, for example, a constant such as 20230330 or a partition parameter such as ds=${bizdate}.

Note: Currently, you can write data from a one-time synchronization task to only one specified partition of the destination table. To write full historical data to different partitions, you can use an SQL node to process the data and write it to the corresponding partitions after the one-time synchronization. Alternatively, you can select daily synchronization for incremental data and then perform data backfill to complete historical partitions.
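The difference between a partition parameter and a constant is easiest to see by resolving both for a few run dates. This topic does not define ${bizdate}. The following Python sketch assumes the common convention that it is the business date one day before the run date, formatted as yyyymmdd, and uses `string.Template` only because it shares the ${name} syntax; both helpers are hypothetical.

```python
from datetime import date, timedelta
from string import Template


def resolve_bizdate(run_date: date) -> str:
    """Assumption: ${bizdate} is the day before the run date, formatted yyyymmdd."""
    return (run_date - timedelta(days=1)).strftime("%Y%m%d")


def resolve_partition(spec: str, run_date: date) -> str:
    """Substitute ${bizdate} in a partition spec; constants pass through unchanged."""
    return Template(spec).substitute(bizdate=resolve_bizdate(run_date))


print(resolve_partition("ds=${bizdate}", date(2023, 3, 31)))  # ds=20230330
print(resolve_partition("20230330", date(2023, 4, 15)))        # 20230330
```

Under this assumption, a daily task writes to a new partition every day, while a constant always points the one-time task at the same partition.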

Data Filtering

The source database is not Hive or MaxCompute

Daily Sync Filter Condition: If the synchronization method includes Daily Sync, you can configure the filter condition for daily synchronization. For example, if you configure ds=${bizdate}, the task extracts all data where ds=${bizdate} from the source database and writes it to the specified destination table partition.

One-time Sync Filter Condition: If the synchronization method includes One-time Sync, you can configure the one-time synchronization filter condition. For example, if you configure ds<=${bizdate}, the task extracts all data where ds<=${bizdate} from the source database and writes it to the specified destination table or partition.
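The following Python sketch runs the two example filter conditions against a small in-memory `sqlite3` table to show which rows each one selects. The table, its rows, the resolved value 20230330 for ${bizdate}, and the idea that the condition is appended as a WHERE clause are all assumptions for illustration.

```python
import sqlite3

BIZDATE = "20230330"  # assumed resolved value of ${bizdate}

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src (id INTEGER, ds INTEGER)")
conn.executemany("INSERT INTO src VALUES (?, ?)",
                 [(1, 20230328), (2, 20230329), (3, 20230330), (4, 20230331)])


def extract(filter_condition: str) -> list[int]:
    # Hypothetical: the filter condition becomes the WHERE clause of the extraction query.
    # String formatting is acceptable here only because the conditions are hard-coded.
    query = f"SELECT id FROM src WHERE {filter_condition} ORDER BY id"
    return [row[0] for row in conn.execute(query)]


daily = extract(f"ds={BIZDATE}")      # daily sync: only the business date
one_time = extract(f"ds<={BIZDATE}")  # one-time sync: all history up to the business date
print(daily, one_time)  # [3] [1, 2, 3]
```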

The source database is Hive or MaxCompute

Daily Sync Partition: If the source database is Hive or MaxCompute, you must specify the partition to read daily from the partitioned table. You can read a single partition, such as ds=${bizdate}, or multiple partitions, such as /*query*/ds>=20230101 and ds<=20230107.

One-time Synchronization Partition: If the source database is Hive or MaxCompute and the Synchronization Method includes One-time Synchronization, you must specify the partition to read from the partitioned table. You can specify a single partition, such as ds=${bizdate}, or multiple partitions, such as /*query*/ds>=20230101 and ds<=20230107.

If partition does not exist: Select a policy to handle the scenario where the specified partition does not exist.

Fail the task: The task is stopped and its status is set to failed.

Succeed the task: The task runs successfully, but no data is written.

Use the latest non-empty partition: If the source database is MaxCompute, you can use the latest non-empty partition (max_pt) of the table as the partition to synchronize. If the table does not have any partitions that contain data, the task fails and an error is reported. This option is not supported for Hive source databases.

Note: Data filtering is not supported if the source is FTP.
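The three "If partition does not exist" policies can be summarized as a small decision function. The following Python sketch is a hypothetical model: partitions are represented as a mapping from partition spec to row count, and picking the greatest spec as "latest" assumes yyyymmdd values that sort lexicographically.

```python
def choose_partition(partitions: dict[str, int], requested: str, policy: str,
                     source: str = "maxcompute"):
    """Return the partition to read, or None when the task succeeds without writing data."""
    if requested in partitions:
        return requested
    if policy == "fail":
        raise RuntimeError(f"partition {requested} does not exist; the task fails")
    if policy == "succeed":
        return None  # the task succeeds, but no data is written
    if policy == "latest_non_empty":
        if source != "maxcompute":
            raise ValueError("this option is supported only for MaxCompute sources")
        non_empty = [spec for spec, rows in partitions.items() if rows > 0]
        if not non_empty:
            raise RuntimeError("no partition contains data; the task fails")
        return max(non_empty)  # assumption: yyyymmdd specs sort chronologically
    raise ValueError(f"unknown policy: {policy}")


parts = {"ds=20230328": 120, "ds=20230329": 95, "ds=20230330": 0}
print(choose_partition(parts, "ds=20230331", "latest_non_empty"))  # ds=20230329
print(choose_partition(parts, "ds=20230331", "succeed"))           # None
```

The empty ds=20230330 partition is skipped, which is the difference between the latest partition and the latest non-empty partition.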

Parameter Settings

If the source is FTP, you can use parameters in the source file path.

Scheduling and runtime configuration

Parameter

Description

Scan Configuration

Select a scheduling configuration. Valid values are Concurrent Scheduling and Batch Scheduling.

Concurrent Scheduling: The synchronization tasks for the selected tables in the source database are run concurrently at 00:00 every day in the specified scheduling time zone.

Batch Scheduling: The synchronization tasks for the selected tables in the source database are run in batches. You can set a period from 0 to 23 hours and a maximum of 142 synchronization tasks. For example, to synchronize 100 tables in batches of 10 tables every 2 hours, the tables are split into 10 batches that start 2 hours apart: the last batch starts 18 hours after the first, and the batches occupy 20 hours of the daily cycle. A synchronization interval cannot exceed 24 hours.
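The batch arithmetic in the example can be checked with a few lines of Python. The following sketch computes the start offset of each batch within a cycle. `batch_start_offsets` is hypothetical, and requiring every batch to start within one 24-hour cycle is an assumption based on the daily schedule.

```python
import math


def batch_start_offsets(table_count: int, tables_per_batch: int, interval_hours: int) -> list[int]:
    """Hours after the cycle start at which each batch of synchronization tasks starts."""
    batches = math.ceil(table_count / tables_per_batch)
    offsets = [i * interval_hours for i in range(batches)]
    # Assumption: every batch must start within one 24-hour daily cycle.
    if offsets and offsets[-1] >= 24:
        raise ValueError("the batches do not all start within one 24-hour cycle")
    return offsets


offsets = batch_start_offsets(100, 10, 2)
print(offsets)  # [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
```

With 10 batches at 2-hour intervals, the last batch starts at hour 18, and 10 × 2 = 20 hours of the cycle are used.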

Run Timeout

If a synchronization task runs longer than the specified threshold, it is automatically stopped and its status is set to failed. You can select System Configuration or Custom.

System Configuration: Use the default timeout period of the system. For more information, see Runtime configurations.

Custom: Specify a custom timeout period. Enter a number greater than 0 and less than or equal to 168, with up to two decimal places.

Auto-rerun On Failure

If a task instance or data backfill instance fails, the system determines whether to automatically rerun the instance based on this configuration. You can set the number of reruns to an integer from 0 to 10 and the rerun interval to an integer from 1 to 60.
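The ranges for Run Timeout and Auto-rerun On Failure can be expressed as simple checks. The following Python sketch is hypothetical (the function names are not Dataphin APIs). It uses `decimal.Decimal` so that the two-decimal-place rule is checked exactly rather than through floating point, and it does not assume a unit for the rerun interval.

```python
from decimal import Decimal, InvalidOperation


def validate_run_timeout(value: str) -> Decimal:
    """Check a custom timeout: greater than 0, at most 168, up to two decimal places."""
    try:
        timeout = Decimal(value)
    except InvalidOperation:
        raise ValueError(f"not a number: {value!r}") from None
    if not timeout.is_finite() or not (Decimal(0) < timeout <= Decimal(168)):
        raise ValueError("timeout must be greater than 0 and at most 168")
    if timeout.as_tuple().exponent < -2:
        raise ValueError("timeout allows at most two decimal places")
    return timeout


def validate_auto_rerun(times: int, interval: int) -> None:
    """Check the rerun count (0 to 10) and rerun interval (1 to 60), both integers."""
    if not isinstance(times, int) or not 0 <= times <= 10:
        raise ValueError("number of reruns must be an integer from 0 to 10")
    if not isinstance(interval, int) or not 1 <= interval <= 60:
        raise ValueError("rerun interval must be an integer from 1 to 60")


print(validate_run_timeout("2.5"))  # 2.5
validate_auto_rerun(3, 10)          # passes silently
```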

Upstream Dependency

Click Add Dependency to add a Physical Node or a Logical Table Node as an upstream dependency for this node. If no dependency is configured, the virtual root node of the tenant is the default upstream dependency. You can also manually add a virtual node as a dependent object, which is useful in scenarios such as unified data backfill.

Resource configuration

Resource Group: Database migration integration tasks are dedicated tasks that consume scheduling resources. You can specify the resource group for the instances that are generated by each database migration integration task. When an instance is scheduled, it uses the resource quota of the specified resource group. If the available resources in the specified resource group are insufficient, the instance enters the Waiting for scheduling resources state. Resources are isolated between different resource groups to ensure scheduling stability.

You can select only a resource group that is associated with the current project and used for the Daily task scheduling scenario. For more information, see Resource group configuration.

Note: In Basic projects, you can configure the Resource Group. In Dev-Prod projects, you can configure the Development Task Scheduling Resource Group and Production Task Scheduling Resource Group.

If you select Project default resource group, the configuration is automatically updated based on the project's default resource group settings.

By default, task scheduling in both production and development environments uses the project's default scheduling resource group. You can change this to another resource group that is associated with the current project, including resource groups from registered scheduling clusters.

After you configure the parameters, click Generate Pipeline to create the offline database migration pipelines.

In the Run Results area, you can view the execution results of the pipeline tasks, including the source tables, destination tables, synchronization method, task status, and details.

After the pipelines are generated, a folder for this offline database migration task is created in the offline integration directory. This folder contains the corresponding offline pipeline tasks. You can configure and publish the generated offline pipeline tasks. For more information, see Configure properties for an offline pipeline task.

If some tables fail to be created, or if you want to add tables later, you can manually create offline pipeline or script tasks for those tables and place them in the offline database migration folder. Perform the following steps:

Click the button next to the target offline database migration folder and select New Offline Pipeline or Offline Script.

In the Create Offline Pipeline or Create Offline Script dialog box, configure the parameters and click OK. For more information, see Create an integration task using a single pipeline and Create an integration task in code editor mode.

Note: The created offline pipeline and script tasks are placed in the current offline database migration folder.

You cannot move folders into or out of the offline database migration folder.

To move offline pipeline and script tasks in the offline integration directory, click the icon next to their names, select Move, and then select the destination folder in the Move file dialog box. You can move tasks to the offline database migration folder.

If you delete the database migration folder, all tasks within that folder are also deleted, including offline pipeline and script tasks.

What to do next

After you create and publish the offline database migration task, go to the Operation Center to view and manage the integration tasks to ensure that they run as expected. For more information, see Operation Center.