After you create an offline script pattern, you develop the data synchronization task by writing a JSON script. Writing the script directly gives you more flexibility and finer-grained control over the synchronization configuration. This topic explains how to create an offline script pattern.

Prerequisites

Configure the source and target data sources before you set up the integration task, so that the offline integration script can read from and write to them during development. For details on the data sources supported by offline pipelines, see Supported data sources.

Procedure

Step 1: Create an offline script

On the Dataphin home page, click Development > Data Integration in the top menu bar.

To access the Create Offline Script dialog box, follow these steps:

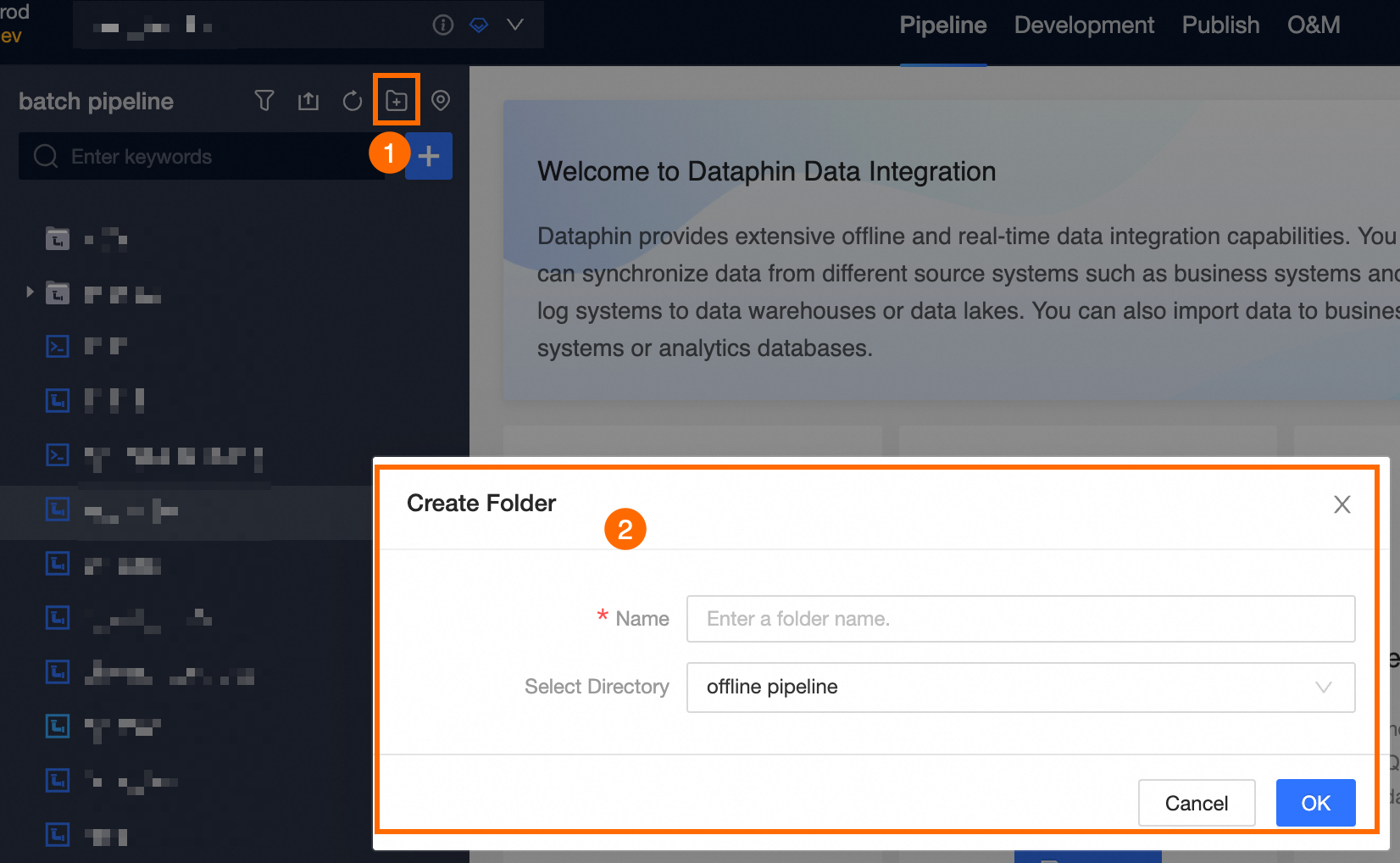

Select the project (in Dev-Prod mode, also select the environment) > click Batch Pipeline > click the New icon > click Batch Script.

In the Create Offline Script dialog box, enter the required parameters.

| Area | Parameter | Description |
| --- | --- | --- |
| Basic Information | Task Name | Enter the name of the offline script. The name can contain any characters except the vertical line (\|), colon (:), question mark (?), angle brackets (<>), asterisk (*), quotation mark ("), forward slash (/), and backslash (\\). The name must not exceed 64 characters. |
| Basic Information | Schedule Type | Choose the scheduling type for the offline script. Recurring Task Node: for tasks that are executed periodically. Manual Node: for tasks that are triggered manually and have no dependencies. |
| Basic Information | Description | Provide a brief description of the offline script, within 1,000 characters. |
| Basic Information | Select Directory | The default directory is the offline pipeline. Alternatively, create a target folder on the offline pipeline page and select it as the directory for the task. |
| Datasource Config | Source Type | Select the type of the source data source. |
| Datasource Config | Datasource | Choose the source data source. If the required data source is not listed, click Create. For more information, see Supported data sources. Note: Selection is limited to data sources with read-through permissions. For information on obtaining permissions, see Request data source permission. |
| Datasource Config | Target Type | Select the type of the target data source for data synchronization. |
| Datasource Config | Datasource | Choose the target data source for data synchronization. If the required data source is not listed, click Create. For more information, see Supported data sources. Note: Selection is limited to data sources with write-through permissions. For information on obtaining permissions, see Request data source permission. |

Click OK.
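The Task Name rules above can be checked before you open the dialog box, for example when task names are generated by a tool. The following is a minimal sketch of those two rules only. The function name `task_name_problems` is hypothetical, and the sketch assumes the 64-character limit counts Unicode characters the way Python's `len` does, which the rules above do not specify.

```python
# Characters that the Task Name rule forbids: | : ? < > * " / \
FORBIDDEN_CHARS = set('|:?<>*"/\\')
MAX_NAME_LENGTH = 64  # limit taken from the Task Name rule


def task_name_problems(name: str) -> list[str]:
    """Return the rule violations for a task name; an empty list means it passes."""
    problems = []
    # Assumption: the limit is measured in characters, as len() counts them.
    if len(name) > MAX_NAME_LENGTH:
        problems.append(
            f"name has {len(name)} characters; the limit is {MAX_NAME_LENGTH}"
        )
    bad = sorted(set(name) & FORBIDDEN_CHARS)
    if bad:
        problems.append("name contains forbidden characters: " + " ".join(bad))
    return problems


if __name__ == "__main__":
    for candidate in ("orders_to_warehouse", "orders/2024", "x" * 65):
        print(candidate[:20], task_name_problems(candidate))
```

An empty list means the name satisfies both rules; it does not guarantee that Dataphin accepts the name, because the product may apply further checks (for example, uniqueness) that this topic does not describe.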

Step 2: Develop the offline script

The offline script is developed using a code editor. Writing JSON scripts for data synchronization allows for more flexible capabilities and fine-grained configuration. The following figure illustrates the components:

A script can contain at most 500,000 characters.
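Because the script must be JSON and is subject to the 500,000-character limit stated above, it can be useful to check a draft locally before pasting it into the editor. The sketch below checks only those two properties. The function name `script_problems` and every key in `SAMPLE` are hypothetical placeholders, not Dataphin's actual script schema, which this topic does not define.

```python
import json

MAX_SCRIPT_CHARS = 500_000  # character limit stated in this step


def script_problems(text: str) -> list[str]:
    """Return problems found in a draft script; an empty list means none found."""
    problems = []
    if len(text) > MAX_SCRIPT_CHARS:
        problems.append(
            f"script has {len(text)} characters; the limit is {MAX_SCRIPT_CHARS}"
        )
    try:
        json.loads(text)
    except json.JSONDecodeError as exc:
        problems.append(
            f"not valid JSON: {exc.msg} at line {exc.lineno}, column {exc.colno}"
        )
    return problems


# Hypothetical draft: the key names are placeholders for illustration only
# and do not reflect the real offline script schema.
SAMPLE = """
{
  "source": {"datasource": "example_source", "table": "orders"},
  "target": {"datasource": "example_target", "table": "orders_copy"}
}
"""

if __name__ == "__main__":
    print(script_problems(SAMPLE))
    print(script_problems('{"source": {"table": "orders",}}'))
```

The check confirms syntax and size only. Whether the script describes a valid synchronization is still decided by Dataphin when the task is submitted.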

Step 3: Configure the pipeline schedule

Click the schedule configuration button on the development canvas menu bar.

On the schedule configuration page, set up the Basic Information, Schedule Configuration, Schedule Dependency, Schedule Parameters, Run Configuration, and Resource Configuration for the integration pipeline. The configuration details are as follows:

Basic Information: Configure the development and operation owners, and provide a description for the integration pipeline task. For instructions, see Configure basic information of offline integration pipeline.

Schedule Configuration: Define the scheduling method for the integration pipeline task in the production environment. Configure the scheduling type, cycle, logic, and execution properties. For instructions, see Offline integration pipeline schedule configuration.

Schedule Dependency: Define the dependency nodes for the integration pipeline task within the scheduling framework. Dataphin ensures timely and effective data production by executing nodes in sequence based on the configured dependencies. For instructions, see Offline integration pipeline schedule dependency configuration.

Run Configuration: Configure task-level run timeout and rerun policies for failed tasks to prevent resource waste and enhance task reliability. For instructions, see Offline integration pipeline run configuration.

Resource Configuration: Assign the integration task to a resource group, which will provide the resources needed for task scheduling. For instructions, see Configure offline integration pipeline task resources.

Click OK.
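To see what "executing nodes in sequence based on the configured dependencies" means in practice, the sketch below orders a small set of nodes so that each one comes after the nodes it depends on. It illustrates the general idea with Python's standard `graphlib` module and is not a description of how Dataphin's scheduler is implemented. The node names and the function `run_order` are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def run_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return one valid execution order.

    `dependencies` maps each node to the set of upstream nodes that must
    finish before it can start.
    """
    return list(TopologicalSorter(dependencies).static_order())


# Hypothetical nodes: two sync tasks feed a downstream report node.
DEPENDENCIES = {
    "sync_orders": {"extract_orders"},
    "sync_customers": {"extract_customers"},
    "build_report": {"sync_orders", "sync_customers"},
}

if __name__ == "__main__":
    print(run_order(DEPENDENCIES))
    try:
        # A circular dependency has no valid order and is rejected.
        run_order({"a": {"b"}, "b": {"a"}})
    except CycleError as exc:
        print("circular dependency:", exc.args[1])
```

Several orders can be valid for the same dependencies, so the exact output may vary. What holds in every valid order is that an upstream node appears before the nodes that depend on it.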

Step 4: Save and submit the offline integration task

Click the save icon at the top of the canvas to save the pipeline task.

Click the submit icon to submit the task. In the Submit Remarks dialog box, enter the remarks and click OK And Submit.

Dataphin performs lineage analysis and submission checks upon submission. For more information, see Integration task submission instructions.

What to do next

If you are using Dev-Prod mode, publish the task. For details, see Manage publishing tasks.

If you are using Basic mode, the task will be scheduled in the production environment upon successful submission. Visit the Operation Center to view published tasks. For details, see Operation Center.