Container Compute Service (ACS) provides GPU computing power without requiring you to manage the underlying hardware or node configurations. ACS is easy to deploy and supports pay-as-you-go billing, which makes it well suited to large language model (LLM) inference tasks and helps reduce inference costs. This topic describes how to use the GPU computing power of ACS with the deepgpu-comfyui plugin to accelerate Wan2.1 video generation.

Background information

ComfyUI

ComfyUI is a node-based graphical user interface (GUI) for running and customizing Stable Diffusion, a popular text-to-image model. It uses a visual flowchart, or workflow, that allows users to build complex image generation pipelines by dragging and dropping nodes instead of writing code.

Wan model

Tongyi Wanxiang, also known as Wan, is a large AI-generated content (AIGC) model for AI art and text-to-image generation from Alibaba's Tongyi Lab. It is the visual generation branch of the Tongyi Qianwen large model series. Wan is the world's first AI art model to support Chinese prompts. It has multimodal capabilities and can generate high-quality artwork from text descriptions, hand-drawn sketches, or image style transfers.

Prerequisites

The first time you use Container Compute Service (ACS), you must assign the default role to your account. Only after you complete this authorization can ACS call other services (such as ECS, OSS, NAS, CPFS, and SLB), create clusters, and save logs. For more information, see Quick start for first-time ACS users.

Supported GPU card types: L20 (GN8IS) and G49E.

Procedure

Step 1: Prepare the model data

Create a NAS or OSS volume to store model files persistently. This topic uses a NAS volume as an example. Run the following commands in the directory where the NAS volume is mounted.

For more information about how to create a persistent volume, see Create a NAS file system as a volume or Use a statically provisioned OSS volume.

Run the following command to download ComfyUI.

Make sure that Git is installed in your environment.

    git clone https://github.com/comfyanonymous/ComfyUI.git

Run the following commands to download the following three model files to their corresponding directories in ComfyUI. For more information about the models, see the Wan_2.1_ComfyUI_repackaged project.

To ensure a smooth download, you may need to increase the peak public bandwidth. The download is expected to take about 30 minutes.

Each command block below starts from the directory that contains ComfyUI (the directory where the NAS volume is mounted), so return to that directory before you run the next block.

The wan2.1_t2v_14B_fp16.safetensors file

    cd ComfyUI/models/diffusion_models
    wget https://modelscope.cn/models/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/master/split_files/diffusion_models/wan2.1_t2v_14B_fp16.safetensors

The wan_2.1_vae.safetensors file

    cd ComfyUI/models/vae
    wget https://modelscope.cn/models/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/master/split_files/vae/wan_2.1_vae.safetensors

The umt5_xxl_fp8_e4m3fn_scaled.safetensors file

    cd ComfyUI/models/text_encoders
    wget https://modelscope.cn/models/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/master/split_files/text_encoders/umt5_xxl_fp8_e4m3fn_scaled.safetensors
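Because the downloads are large, it can be worth confirming that each file landed in the directory ComfyUI expects before you continue. The following is a minimal sketch and not part of the official procedure: `check_wan_models` is a hypothetical helper name, and it only checks that each file exists and is non-empty, not that the download is complete or uncorrupted.

```shell
# Report which of the three Wan2.1 model files are present under a ComfyUI
# checkout. The helper name is hypothetical; the file paths come from the
# download commands in this step.
# Usage: check_wan_models /path/to/ComfyUI
check_wan_models() {
    comfy_root=${1:-ComfyUI}
    missing=0
    for rel in \
        models/diffusion_models/wan2.1_t2v_14B_fp16.safetensors \
        models/vae/wan_2.1_vae.safetensors \
        models/text_encoders/umt5_xxl_fp8_e4m3fn_scaled.safetensors
    do
        # -s is true only if the file exists and has a size greater than zero.
        if [ -s "$comfy_root/$rel" ]; then
            echo "ok       $rel"
        else
            echo "missing  $rel"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, run `check_wan_models ./ComfyUI` from the directory where the NAS volume is mounted. A non-zero exit status means that at least one file is missing or empty.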

Download and decompress ComfyUI-deepgpu.

    cd ComfyUI/custom_nodes
    wget https://aiacc-inference-public-v2.oss-cn-hangzhou.aliyuncs.com/deepgpu/comfyui/nodes/20250513/ComfyUI-deepgpu.tar.gz
    tar zxf ComfyUI-deepgpu.tar.gz
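This topic does not state which directory the archive unpacks into. If you want to check before extracting, one option is to list the archive's top-level entries. This is a small sketch under that assumption; `tar_top_level` is a hypothetical helper name.

```shell
# Print the distinct top-level entries of a .tar.gz archive without
# extracting it. A leading "./" on entry names is stripped first.
# Usage: tar_top_level ComfyUI-deepgpu.tar.gz
tar_top_level() {
    tar tzf "$1" | sed -e 's|^\./||' -e '/^$/d' | cut -d/ -f1 | sort -u
}
```

A single directory name in the output indicates that the plugin unpacks into one folder under `custom_nodes`, which is the layout ComfyUI custom nodes typically use.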

Step 2: Deploy the ComfyUI service

Log on to the ACS console. In the navigation pane on the left, choose Clusters. Click the name of the target cluster. In the navigation pane on the left, choose . In the upper-left corner, click Create from YAML.

This example mounts a NAS volume. Use the following YAML template and click Create.

Modify the persistentVolumeClaim.claimName value to match the name of your persistent volume claim (PVC).

This example uses the inference-nv-pytorch 25.07 image from the cn-beijing region to minimize image pull times. To use the private image for other regions, see Usage method and update the image path in the YAML manifest.

The test container image used in this example has the deepgpu-torch and deepgpu-comfyui plugins pre-installed. To use these plugins in other container environments, contact a solution architect (SA) to obtain the installation packages.
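Before you create the workload, it can help to confirm that the PVC named in the template exists and is bound. The sketch below assumes that kubectl is configured to reach the ACS cluster, which this topic does not cover; `pvc_phase` is a hypothetical helper name, and `wanx-nas` is the claim name used in the template.

```shell
# Print the phase of a PVC, for example "Bound" or "Pending".
# Assumes kubectl is already configured for the target cluster.
# Usage: pvc_phase <claim-name> [namespace]
pvc_phase() {
    kubectl get pvc "$1" -n "${2:-default}" -o jsonpath='{.status.phase}'
}
```

For example, `pvc_phase wanx-nas default` should print `Bound` when the claim is ready to be mounted.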

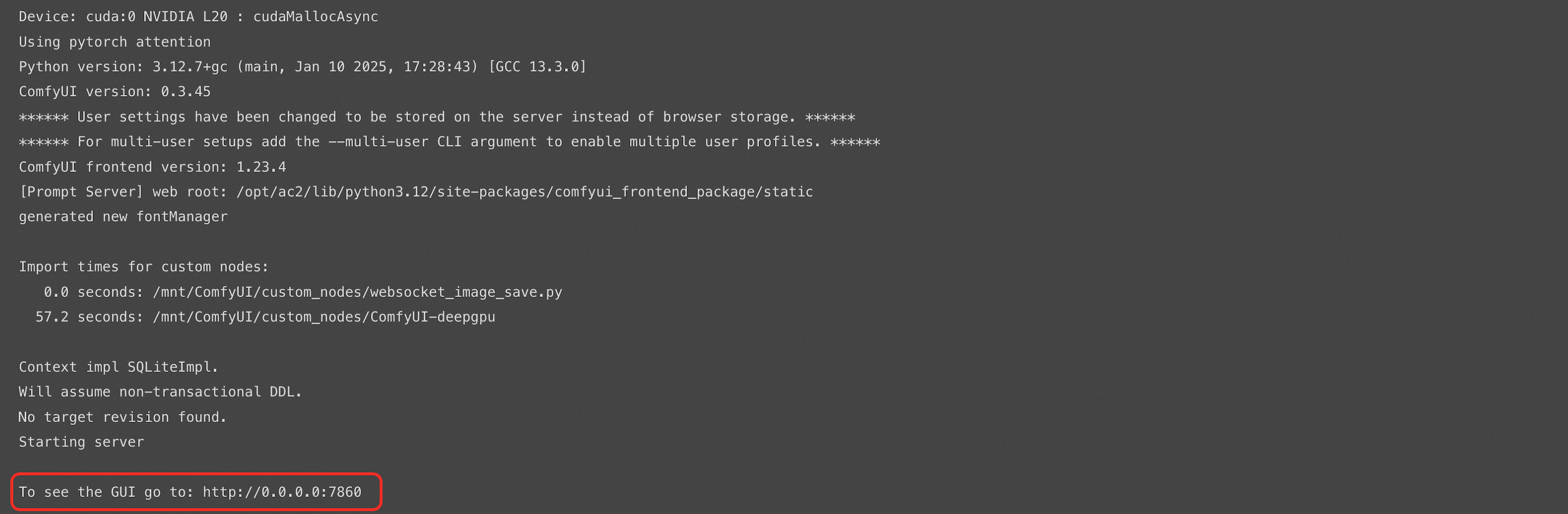

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: wanx-deployment
      name: wanx-deployment-test
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: wanx-deployment
      template:
        metadata:
          labels:
            alibabacloud.com/compute-class: gpu
            alibabacloud.com/compute-qos: default
            alibabacloud.com/gpu-model-series: L20 # Supported GPU card types: L20 (GN8IS), G49E
            app: wanx-deployment
        spec:
          containers:
          - command:
            - sh
            - -c
            - DEEPGPU_PUB_LS=true python3 /mnt/ComfyUI/main.py --listen 0.0.0.0 --port 7860
            image: acs-registry-vpc.cn-beijing.cr.aliyuncs.com/egslingjun/inference-nv-pytorch:25.07-vllm0.9.2-pytorch2.7-cu128-20250714-serverless
            imagePullPolicy: Always
            name: main
            resources:
              limits:
                nvidia.com/gpu: "1"
                cpu: "16"
                memory: 64Gi
              requests:
                nvidia.com/gpu: "1"
                cpu: "16"
                memory: 64Gi
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
            volumeMounts:
            - mountPath: /dev/shm
              name: cache-volume
            - mountPath: /mnt # /mnt is the path in the pod where the NAS volume claim is mapped
              name: data
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
          volumes:
          - emptyDir:
              medium: Memory
              sizeLimit: 500G
            name: cache-volume
          - name: data
            persistentVolumeClaim:
              claimName: wanx-nas # wanx-nas is the volume claim created from the NAS volume
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: wanx-test
    spec:
      type: LoadBalancer
      ports:
      - port: 7860
        protocol: TCP
        targetPort: 7860
      selector:
        app: wanx-deployment

In the dialog box that appears, click View to go to the workload details page. Click the Logs tab. If the following output is displayed, the service has started successfully.

Step 3: Learn how to use the plugin

Click the Access Method tab to obtain the External Endpoint of the service, such as 8.xxx.xxx.114:7860.
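As an alternative to the console, the rollout state and the external endpoint can also be read from the command line. The sketch below assumes that kubectl is configured for the ACS cluster and that the Service was created in the `default` namespace (the template does not set one for it). The helper names and the 600-second default timeout are illustrative choices, not values from this topic.

```shell
# Block until the Deployment from Step 2 has finished rolling out.
# Usage: wanx_wait_rollout [namespace] [timeout]
wanx_wait_rollout() {
    kubectl rollout status deployment/wanx-deployment-test \
        -n "${1:-default}" --timeout="${2:-600s}"
}

# Print the ComfyUI URL built from the external IP of the wanx-test Service.
# Fails if the load balancer has not been assigned an IP address yet.
# Usage: wanx_endpoint [namespace]
wanx_endpoint() {
    svc_ip=$(kubectl get svc wanx-test -n "${1:-default}" \
        -o jsonpath='{.status.loadBalancer.ingress[0].ip}') || return 1
    if [ -z "$svc_ip" ]; then
        echo "no external IP assigned yet" >&2
        return 1
    fi
    echo "http://$svc_ip:7860/"
}
```

Running `wanx_wait_rollout && wanx_endpoint` prints the URL to open in the next step once the pod is ready.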

Access the ComfyUI URL http://8.xxx.xxx.114:7860/ in a browser. In the ComfyUI interface, right-click and then click Add Node to view the DeepGPU nodes included in the plugin.

The first time you access the URL, it may take about 5 minutes to load.
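Since the first load can take several minutes, you may prefer to poll the service from a terminal and open the browser only once it responds. The following sketch assumes that curl is installed and uses ComfyUI's `/system_stats` HTTP route as a lightweight readiness probe. `wait_for_comfyui` and its default timeout and interval are hypothetical choices.

```shell
# Poll a ComfyUI base URL until it answers or the timeout expires.
# Usage: wait_for_comfyui <base-url> [timeout-seconds] [interval-seconds]
wait_for_comfyui() {
    base_url=${1%/}        # drop a trailing slash, if any
    deadline=${2:-600}
    interval=${3:-10}
    waited=0
    # curl -f makes HTTP error responses count as failures; -s silences output.
    while ! curl -fs --max-time 5 -o /dev/null "$base_url/system_stats"; do
        waited=$((waited + interval))
        if [ "$waited" -ge "$deadline" ]; then
            echo "ComfyUI did not respond within ${deadline}s" >&2
            return 1
        fi
        sleep "$interval"
    done
    echo "ComfyUI is responding at $base_url"
}
```

Replace the URL argument with the External Endpoint of your own service, for example `wait_for_comfyui http://8.xxx.xxx.114:7860/` with the masked octets filled in.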

Step 4: Test the sample workflow

Download the Wan2.1 DeepyTorch-accelerated workflows to your computer from a browser.

Image-to-video workflow

https://aiacc-inference-public-v2.oss-cn-hangzhou.aliyuncs.com/deepgpu/comfyui/wan/workflows/workflow_image_to_video_wan_1.3b_deepytorch.json

Text-to-video workflow

https://aiacc-inference-public-v2.oss-cn-hangzhou.aliyuncs.com/deepgpu/comfyui/wan/workflows/workflow_text_to_video_wan_deepytorch.json

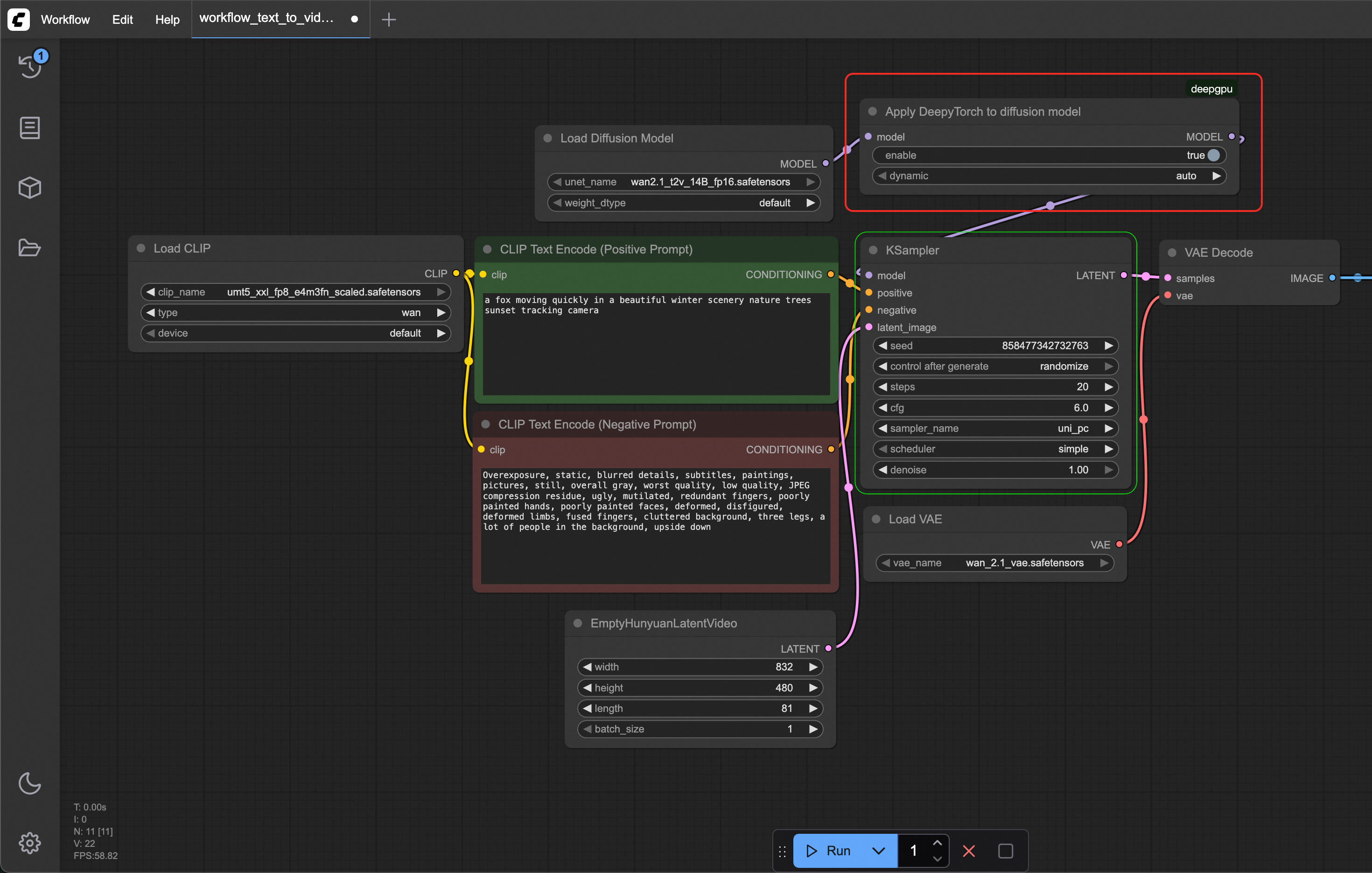

The following steps use the accelerated text-to-video workflow as an example. In ComfyUI, choose , and then select the downloaded workflow_text_to_video_wan_deepytorch.json file.

After you open the workflow file, find the Apply DeepyTorch to diffusion model node and set its enable parameter to true to enable acceleration. Then, click Run and wait for the video to be generated.

The DeepyTorch accelerated workflow inserts an ApplyDeepyTorch node after the Load Diffusion Model node.

Click the Queue button on the left to view the video generation time and preview the video.

The first test run may take longer. Run the workflow two or three more times to get the best performance.
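When you compare the accelerated and non-accelerated workflows, it is reasonable to leave the slower first run out of the average. The sketch below does only that arithmetic: `mean_after_warmup` is a hypothetical helper, and the run times are values you read from the Queue panel yourself.

```shell
# Average the given run times (in seconds), ignoring the first (warm-up) run.
# Usage: mean_after_warmup <warmup-run> <run-2> [<run-3> ...]
mean_after_warmup() {
    if [ "$#" -lt 2 ]; then
        echo "need a warm-up run plus at least one more run" >&2
        return 1
    fi
    shift    # discard the warm-up measurement
    printf '%s\n' "$@" | awk '{ sum += $1 } END { printf "%.1f\n", sum / NR }'
}
```

Call it once with the accelerated timings and once with the baseline timings, then divide the baseline average by the accelerated average to estimate the speedup.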

(Optional) To test the non-accelerated scenario, restart the ComfyUI service and select the following workflow to generate the video.

https://aiacc-inference-public-v2.oss-cn-hangzhou.aliyuncs.com/deepgpu/comfyui/wan/workflows/workflow_text_to_video_wan.json