If your domain name is attacked or its resources are abused, you may see traffic spikes or high bandwidth usage. The resulting charges cannot be waived or refunded, but you can take measures to prevent traffic abuse.

Loss prevention

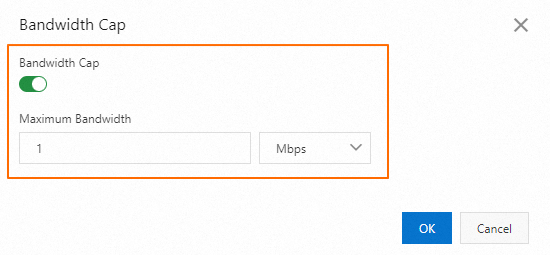

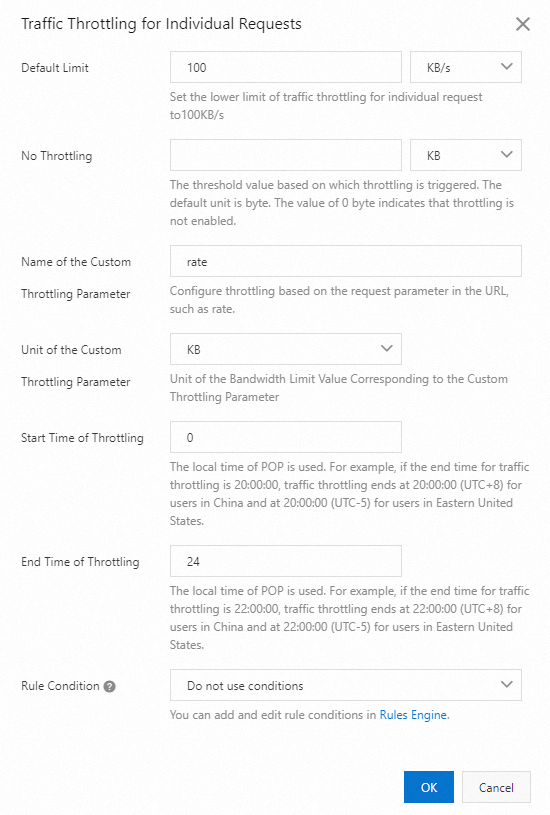

If you have received bills that are higher than expected, specify a bandwidth cap and configure traffic throttling for individual requests to reduce further losses.

Limit bandwidth usage

Limit the downstream speed

Analyze the cause

Analyze the logs and configure security settings to identify the cause of traffic abuse.

Identify the time period of abnormal traffic from your bills

You can view the billing details of cloud services on the Billing Details tab. Select a statistical dimension and a statistical period to view reports based on different dimensions. For more information, see Billing details.

Set Statistical Period to Details and Product to CDN. Review the bills and pay attention to abnormal increases in traffic and bandwidth, along with the time periods of abnormal peaks.

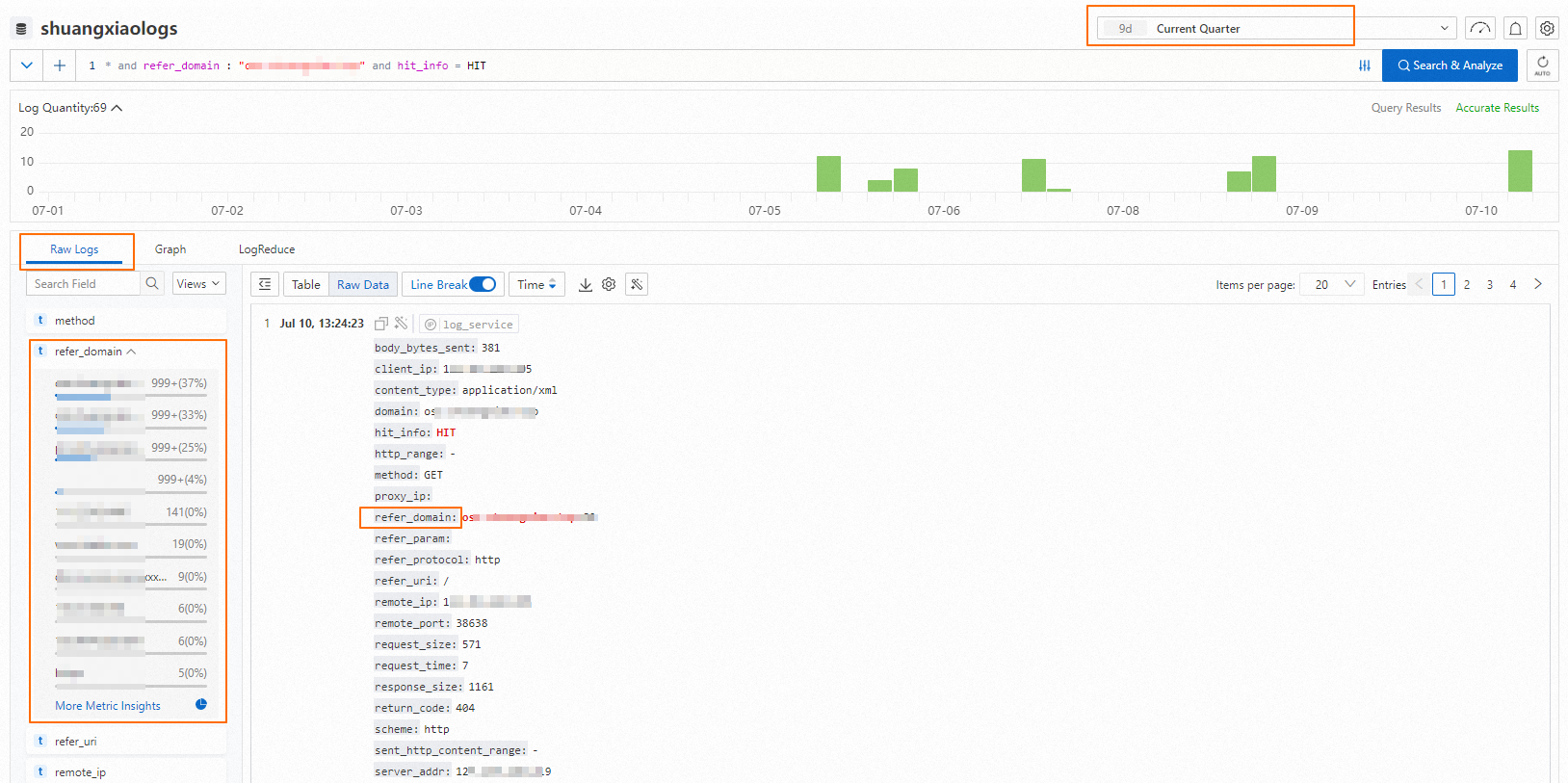
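If you export per-hour traffic figures, one way to narrow down the abnormal period is to flag hours that far exceed the typical level. The following is a minimal sketch, not a console feature: the `find_spikes` name, the sample data, and the "3 times the median" threshold are all hypothetical choices for illustration.

```python
from statistics import median


def find_spikes(hourly, factor=3.0):
    """Return (label, value) pairs whose value exceeds factor x the median value."""
    baseline = median(value for _, value in hourly)
    return [(label, value) for label, value in hourly if value > factor * baseline]


# Hypothetical hourly traffic in GB, for illustration only
sample = [
    ("00:00", 2.0), ("01:00", 1.8), ("02:00", 2.2),
    ("03:00", 15.0), ("04:00", 14.2), ("05:00", 2.1),
]
print(find_spikes(sample))
```

The median is used as the baseline because a few extreme hours distort it far less than they would distort the mean.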

Check log files to identify abnormal traffic

Basic: offline logs

You can download offline logs to view the access logs of a selected time period, analyze the details of HTTP requests, and identify suspicious IP addresses and User-Agent headers.

Offline logs contain a small number of fields. If you want to view more details, use the real-time logs feature.

After you obtain offline log files, you can use command-line tools to quickly parse log files and extract information such as the top 10 IP addresses or User-Agent headers by access volume. For more information, see Analysis method of Alibaba Cloud Content Delivery Network access log.

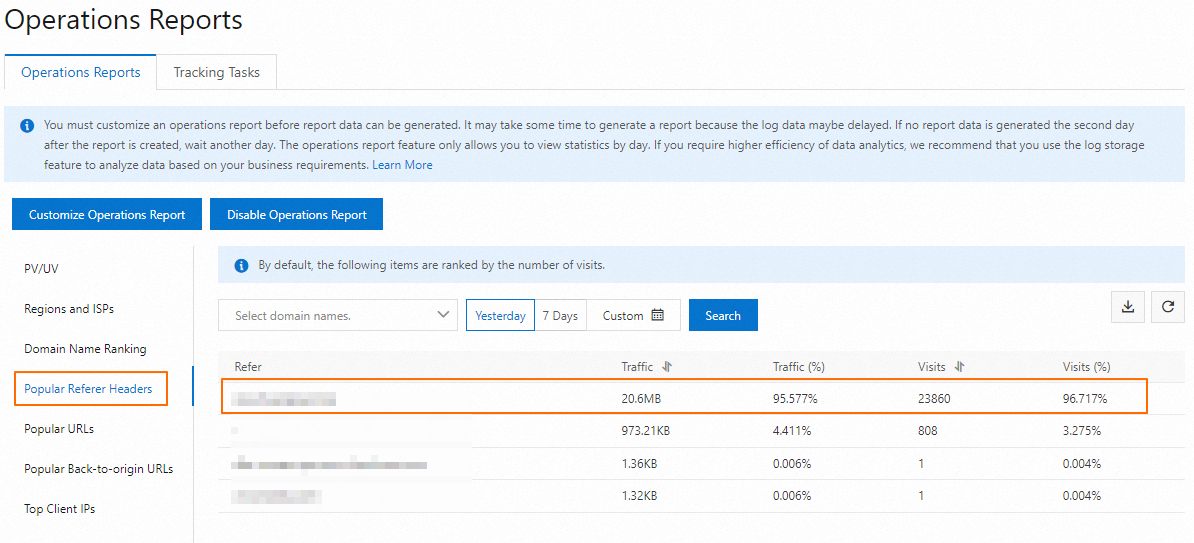

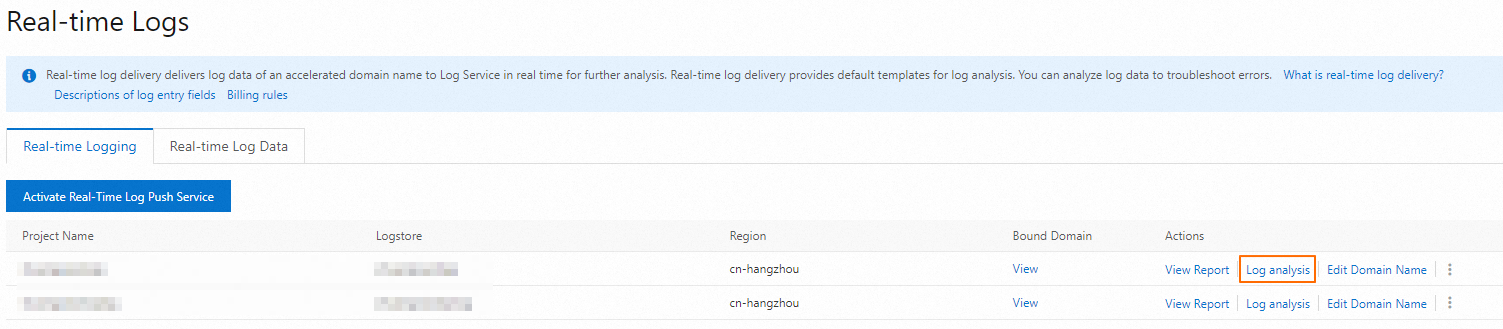
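As an alternative to command-line tools, the same top 10 extraction can be sketched in Python. The line layout in the comment below is an assumption about the offline log format, and the sample lines are hypothetical. Check a few lines of your own log files and adjust the patterns before relying on the results.

```python
import re
from collections import Counter

# Assumed line layout (adjust the patterns if your files differ):
# [time] client_ip proxy_ip response_time "referer" "method url" status
# request_size response_size cache_status "user-agent" "content-type"
IP_RE = re.compile(r'^\[[^\]]+\]\s+(\S+)')
QUOTED_RE = re.compile(r'"([^"]*)"')


def top_talkers(lines, n=10):
    """Count requests per client IP and per User-Agent; return the top n of each."""
    ips, agents = Counter(), Counter()
    for line in lines:
        match = IP_RE.match(line)
        if not match:
            continue  # skip lines that do not fit the assumed layout
        ips[match.group(1)] += 1
        quoted = QUOTED_RE.findall(line)
        if len(quoted) >= 2:
            agents[quoted[-2]] += 1  # User-Agent: second-to-last quoted field
    return ips.most_common(n), agents.most_common(n)


# Hypothetical sample lines; for a real file, pass an open file object instead.
sample = [
    '[01/Jan/2024:01:58:09 +0800] 192.0.2.10 - 15 "-" "GET http://example.com/a.jpg" 200 191 2830 MISS "BadBot/1.0" "image/jpeg"',
    '[01/Jan/2024:01:58:10 +0800] 192.0.2.10 - 12 "-" "GET http://example.com/a.jpg" 200 191 2830 HIT "BadBot/1.0" "image/jpeg"',
    '[01/Jan/2024:01:58:11 +0800] 198.51.100.7 - 20 "-" "GET http://example.com/" 200 180 900 HIT "Mozilla/5.0" "text/html"',
]
top_ips, top_agents = top_talkers(sample)
print(top_ips)
print(top_agents)
```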

Advanced: operations reports and real-time logs

Configure operations reports and real-time logs before troubleshooting:

Operations reports need to be customized before statistical analysis is performed. If you've previously set up real-time log delivery or subscribed to reports, you can view historical log data. The operations report feature is free.

Real-time logs are generated only after you activate Simple Log Service (SLS) and logs are delivered successfully. The real-time logs feature is a paid feature.

Solve the issue

Using logs or reports, you can analyze attack types based on request characteristics. In most cases, you can examine top data such as IP addresses, User-Agent headers, and Referer headers to identify patterns and extract features.

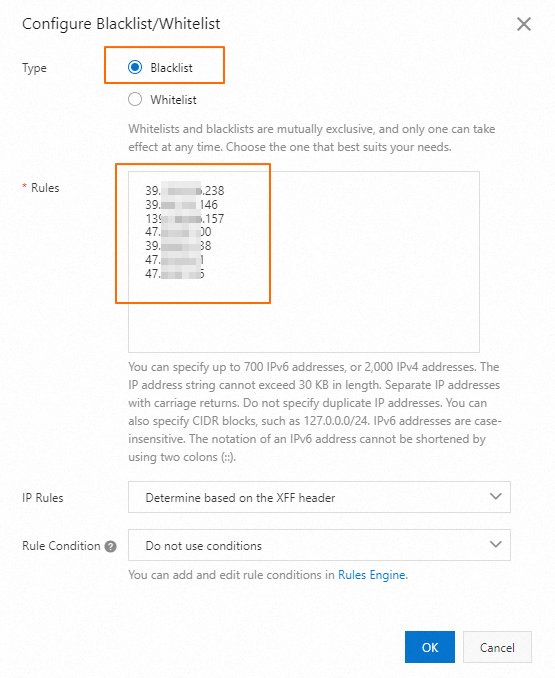

Restrict access from suspicious IP addresses

Configure an IP address blacklist or whitelist to restrict access from specific IP addresses. After you analyze the logs and identify suspicious attack IP addresses, add the IP addresses to the blacklist.

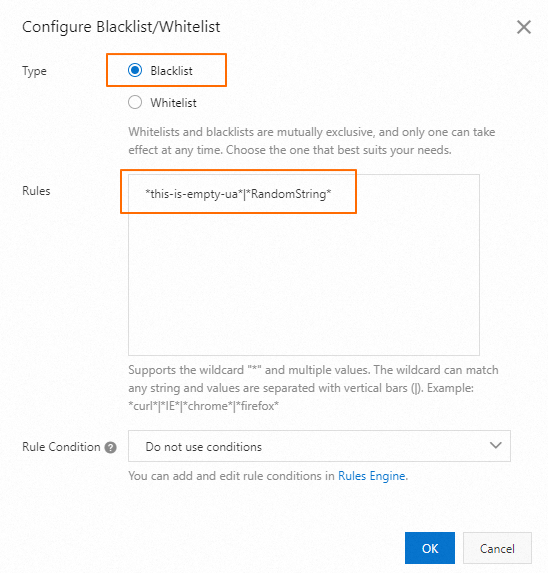
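Before you add entries to the blacklist, you may want to check which of the suspicious IP addresses found in the logs a candidate list would cover. The sketch below uses Python's standard `ipaddress` module. The addresses come from documentation-reserved ranges and the `is_blocked` helper is hypothetical. It only illustrates IP and CIDR matching and does not call any CDN API.

```python
import ipaddress


def is_blocked(client_ip, blacklist):
    """Return True if client_ip falls inside any blacklisted address or CIDR block."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in blacklist)


# Hypothetical blacklist entries: one single address and one CIDR block
blacklist = [ipaddress.ip_network(entry) for entry in ["192.0.2.15", "198.51.100.0/24"]]

for ip in ["192.0.2.15", "198.51.100.7", "203.0.113.1"]:
    print(ip, is_blocked(ip, blacklist))
```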

Filter suspicious User-Agent headers

Attackers attempt to bypass security checks by using forged User-Agent headers to send many requests. A forged User-Agent header may be a null value, a random string, or a forged string for common browsers.

Configure a User-Agent blacklist or whitelist to reject requests that contain an abnormal User-Agent header. For example, to reject empty User-Agent headers or non-standard random strings, you can use the parameters this-is-empty-ua and RandomString to represent empty User-Agent headers and random strings, respectively.

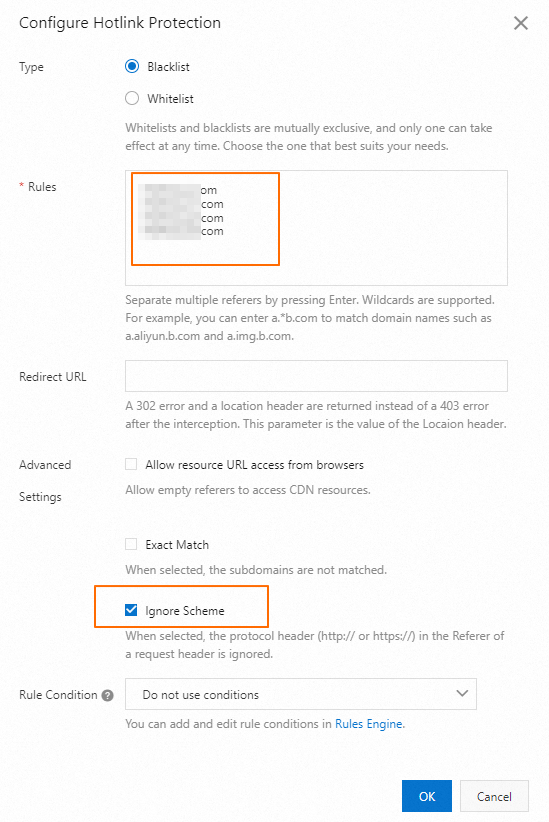
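When you review the User-Agent values extracted from logs, a rough triage can help you decide which ones to list. The heuristic below is an assumption made for illustration, not the matching logic that CDN applies: it treats a value as non-standard when it contains no `product/version` token such as `Mozilla/5.0`.

```python
import re

# A "product/version" token such as Mozilla/5.0 or curl/8.4.0
PRODUCT_TOKEN = re.compile(r"[A-Za-z][\w.-]*/\d")


def classify_user_agent(ua):
    """Roughly classify a User-Agent value as 'empty', 'nonstandard', or 'ok'."""
    if ua is None or ua.strip() in ("", "-"):
        return "empty"
    if not PRODUCT_TOKEN.search(ua):
        return "nonstandard"
    return "ok"


for value in ["", "xK9qLz02mP", "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"]:
    print(repr(value), classify_user_agent(value))
```

A User-Agent forged to look like a common browser passes this check, so combine the result with request volume per IP address before you draw conclusions.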

Add suspicious Referer headers to the blacklist

Attackers forge Referer headers in requests to impersonate legitimate reference sources and initiate malicious requests.

Configure a Referer blacklist or whitelist to allow requests that contain legitimate Referer headers, prevent links to resources from unauthorized third-party websites, and reject requests that contain malicious Referer headers. In the Rules field, enter the abnormal Referer headers found in the logs. We recommend that you select Ignore Scheme.
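To preview which logged Referer values a candidate rule would catch, you can compare hosts with the scheme ignored. The sketch below is an illustration under two assumptions: that a rule matches the listed domain and its subdomains, and that values without `http://` or `https://` should be compared in the same way. The function names and domains are hypothetical.

```python
from urllib.parse import urlsplit


def referer_host(referer):
    """Extract the lowercase host from a Referer value, with or without a scheme."""
    value = referer.strip()
    if "://" not in value:
        value = "//" + value  # let urlsplit treat the text as a network location
    return (urlsplit(value).hostname or "").lower()


def is_blacklisted(referer, rules):
    """Return True if the Referer host equals a rule or is a subdomain of it."""
    host = referer_host(referer)
    return any(host == rule or host.endswith("." + rule) for rule in rules)


rules = ["example.net"]  # hypothetical abnormal Referer domain found in the logs
for value in ["http://evil.example.net/a", "evil.example.net/a", "https://good.example.org/"]:
    print(value, is_blacklisted(value, rules))
```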

Upgrade to ESA and enable WAF and bot management

We recommend that you migrate your domain name to ESA. ESA provides a wide range of protection features that not only protect data security but also improve access speed and user experience. You can quickly migrate your domain name by following the instructions in Upgrade from CDN or DCDN to ESA. Then, follow the instructions to enable the security protection features of ESA.

WAF

The WAF feature of ESA provides a variety of rule matching and blocking capabilities, such as IP access rules, whitelist rules, and custom rules, to prevent malicious intrusions into website servers and protect core business data.

Create an IP access rule

In the ESA console, choose Websites and click the name of the website you want to manage.

In the left-side navigation pane, choose .

In the IP Access Rules section, select IP/CIDR Block, Region, or ASN from the Value drop-down list, specify the value, select an action from the Action drop-down list, and then click Create Rule. For information about the supported actions, see Actions.

Optional. By default, IP access rules take effect only for HTTP (Layer 7) requests within your website. To make the IP access rule that you created also take effect for TCP/UDP (Layer 4) requests, in the left-side navigation pane, choose . On the page that appears, click Create Application. On the Create Application page, turn on the IP Access Rules switch.

Create a whitelist rule

In the ESA console, choose Websites and click the name of the website you want to manage.

In the left-side navigation pane of your website details page, choose . On the WAF page, click the Whitelist Rules tab.

On the Whitelist Rules tab, click Create Rule.

On the page that appears, specify Rule Name.

Specify the conditions for matching incoming requests in the If requests match... section. For more information, see Work with rules.

Specify the rules that you want to skip in the Then skip... section.

All Rules: All Web Application Firewall (WAF) and bot management rules are skipped.

Certain Rules: You can select specific rules that you want to skip. If you select Managed Rules from the drop-down list, you can specify the rule type, such as SQL injection, or the ID of the rule that you want to skip.

Click OK.

Create a custom rule

In the ESA console, choose Websites and click the name of the website you want to manage.

In the left-side navigation pane, choose .

On the Custom Rules tab, click Create Rule.

On the page that appears, specify Rule Name.

Specify the conditions for matching incoming requests in the If requests match... section. For more information about custom rules, see Work with rules.

Specify the actions you want to perform in the Then execute... section.

Click OK.

Bot management

The Bot Management feature of ESA supports two modes: Smart Mode and Professional Mode. In Smart Mode, you can configure crawler management for your website. In Professional Mode, you can configure more precise crawler rules to suit your website or application.

What is Smart Mode

Designed for entry-level users, Smart Mode helps you quickly manage automated traffic and crawlers. In contrast to Professional Mode, which is available in the Enterprise plan, Smart Mode lets you simply select an action for each type of crawler.

Set up Smart Mode

Options

ESA categorizes traffic and crawlers based on their characteristics:

Definite Bots

Requests classified as Definite Bots include a large number of malicious crawler requests. We recommend that you block these requests or initiate a slider challenge.

Likely Bots

Requests classified as Likely Bots pose a relatively low risk and may include malicious crawlers mixed with other traffic. We recommend that you observe these requests, or initiate a slider challenge if you detect risks.

Verified Bots

Verified Bots are usually search engine crawlers, which benefit the SEO of your website. We recommend that you block this traffic only if you do not want any search engine crawlers to access your website.

Likely Human (Actions are not supported)

The requests are likely from real users. We recommend that you do not take special actions.

Static Resource Protection

By default, the actions configured for Definite Bots, Likely Bots, and Verified Bots take effect only for dynamic resource requests, which ESA accelerates and forwards to your origin server. After you enable Static Resource Protection, the configurations also take effect for requests that hit the ESA cache, which are usually requests for static files such as images and videos.

JavaScript Detection

ESA runs lightweight JavaScript detection in the background to improve bot management.

Note: Only browser traffic can pass JavaScript detection challenges. If your business involves non-browser traffic, such as requests from data centers, disable this feature to avoid false positives.

What is Professional Mode

In Professional Mode, you can implement anti-crawler features for web pages in browsers or apps developed on iOS or Android. You can create tailored anti-crawler rules based on request characteristics. Additionally, you can utilize built-in libraries, including the search engine crawler library, AI protection, bot threat intelligence, data center blacklist, and fake crawler list, which eliminate the need for manual updates and analysis of crawler characteristics.

Access Professional Mode

Anti-crawler rules settings for browser

If your web pages, HTML5 pages, or HTML5 apps are accessible from browsers, you can configure anti-crawler rules for the websites to protect your services from malicious crawlers.

Global settings

Rule Set Name: The name of the rule set. The name can contain letters, digits, and underscores (_).

Service Type: Select Browsers. This way, the rule set applies to web pages, HTML5 pages, and HTML5 apps.

SDK Integration:

Automatic Integration (Recommended):

WAF automatically references the SDK in the HTML pages of the website and embeds JavaScript code. Then, the SDK collects information such as browser information, probe signatures, and malicious behaviors. Sensitive information is not collected. WAF detects and blocks malicious crawlers based on the collected information.

Manual Integration

If automatic integration is not supported, you can use manual integration. Copy the JavaScript code displayed on the web page to your HTML code.

Cross-origin Request: If you select Automatic Integration (Recommended) for multiple websites that can access each other, you must select a different domain name for this parameter to prevent duplicate JavaScript code. For example, if you log on to Website A from Website B, specify the domain name of Website B for this parameter.

If requests match...

Specify the conditions for matching incoming requests. For more information, see WAF.

Then execute...

Legitimate Bot Management

The search engine crawler whitelist contains the crawler IP addresses of major search engines, including Google, Baidu, Sogou, 360, Bing, and Yandex. The whitelist is dynamically updated.

After you select a search engine crawler whitelist, requests sent from the crawler IP addresses of the selected search engines are allowed. The bot management module no longer checks these requests.

Bot Characteristic Detection

Script-based Bot Block (JavaScript): If you enable this, WAF performs JavaScript validation on clients. To prevent simple script-based attacks, traffic from non-browser tools that cannot run JavaScript is blocked.

Advanced Bot Defense (Dynamic Token-based Authentication): If you enable this, the signature of each request is verified. Requests that fail the verification are blocked. SDK Signature Verification is selected by default and cannot be cleared. Requests that do not contain signatures or requests that contain invalid signatures are detected. You can also select Signature Timestamp Exception and WebDriver Attack.

Bot Behavior Detection

After you enable AI protection, the intelligent protection engine analyzes access traffic and performs machine learning. Then, a blacklist or a protection rule is generated based on the analysis results and learned patterns.

Monitor: The anti-crawler rule allows traffic that matches the rule and records the traffic in security reports.

Slider CAPTCHA: Clients must pass slider CAPTCHA verification before the clients can access the website.

Custom Throttling

IP Address Throttling (Default): You can configure throttling conditions for IP addresses. If the number of requests from the same IP address within the value specified by Statistical Interval (Seconds) exceeds the value of Threshold (Times), the system performs the specified action on subsequent requests. The action can be specified by selecting Slider CAPTCHA, Block, or Monitor from the Action drop-down list. You can add up to three conditions. The conditions are in an OR relation.

Custom Session Throttling: You can configure throttling conditions for sessions. You can configure the Session Type parameter to specify the session type. If the number of requests from the same session within the value specified by Statistical Interval (Seconds) exceeds the value of Threshold (Times), WAF performs the specified action on subsequent requests. The action can be specified by selecting Slider CAPTCHA, Block, or Monitor from the Action drop-down list. You can add up to three conditions. The conditions are in an OR relation.
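The interval and threshold semantics described above can be sketched as a fixed-window counter. This is an illustration only: how the service actually counts requests (for example, fixed or sliding windows) is not stated here, and the `IpThrottle` class and the condition values are hypothetical. Each condition is an (interval, threshold) pair, and exceeding any one of them triggers the action, which mirrors the OR relation.

```python
from collections import defaultdict


class IpThrottle:
    """Fixed-window request counter with OR-combined (interval, threshold) conditions."""

    def __init__(self, conditions):
        self.conditions = conditions    # list of (interval_seconds, threshold)
        self.counts = defaultdict(int)  # (ip, interval, window_index) -> requests seen

    def should_act(self, ip, now):
        """Record one request at time `now` (seconds); True if any condition is exceeded."""
        triggered = False
        for interval, threshold in self.conditions:
            key = (ip, interval, int(now // interval))
            self.counts[key] += 1
            if self.counts[key] > threshold:
                triggered = True
        return triggered


# Hypothetical conditions: more than 3 requests in 10 s OR more than 100 in 60 s
throttle = IpThrottle([(10, 3), (60, 100)])
decisions = [throttle.should_act("192.0.2.10", second) for second in range(5)]
print(decisions)
```

The sketch never discards old windows, so a long-running version would need to expire stale counters.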

Bot Threat Intelligence Library

The library contains the IP addresses of attackers that have sent multiple requests to crawl content from Alibaba Cloud users over a specific period of time.

You can set Action to Monitor or Slider CAPTCHA.

Data Center Blacklist

After you enable this feature, the IP addresses in the selected IP address libraries of data centers are blocked. If you use the source IP addresses of public clouds or data centers to access the website that you want to protect, you must add the IP addresses to the whitelist. For example, you must add the callback IP addresses of Alipay or WeChat and the IP addresses of monitoring applications to the whitelist.

The data center blacklist supports the following IP address libraries: IP Address Library of Data Center-Alibaba Cloud, IP Address Library of Data Center-21Vianet, IP Address Library of Data Center-Meituan Open Services, IP Address Library of Data Center-Tencent Cloud, and IP Address Library of Data Center-Other.

You can set the Action parameter to Monitor, Slider CAPTCHA, or Block.

Fake Spider Blocking

After you enable this feature, WAF blocks requests whose User-Agent headers match those of the search engines specified in the Legitimate Bot Management section, unless the client IP addresses are verified as valid IP addresses of those search engines. Requests from verified search engine IP addresses are allowed.
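The idea behind fake spider blocking can be sketched as a two-step check: does the User-Agent claim to be a search engine crawler, and if so, does the client IP address belong to that search engine? The ranges below are documentation-reserved placeholders, not real crawler addresses, and `is_fake_spider` is a hypothetical helper rather than the WAF implementation.

```python
import ipaddress

# Hypothetical verified crawler ranges (documentation addresses, not real crawler IPs)
VERIFIED_RANGES = {
    "googlebot": [ipaddress.ip_network("192.0.2.0/24")],
    "bingbot": [ipaddress.ip_network("198.51.100.0/24")],
}


def is_fake_spider(user_agent, client_ip):
    """True if the User-Agent claims a known crawler but the IP is not in its verified ranges."""
    ua = user_agent.lower()
    addr = ipaddress.ip_address(client_ip)
    for name, networks in VERIFIED_RANGES.items():
        if name in ua:
            return not any(addr in net for net in networks)
    return False  # the User-Agent does not claim to be a listed crawler


print(is_fake_spider("Mozilla/5.0 (compatible; Googlebot/2.1)", "192.0.2.5"))
print(is_fake_spider("Mozilla/5.0 (compatible; Googlebot/2.1)", "203.0.113.9"))
```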

Effective Time

By default, rules take effect immediately after they are created and remain in effect permanently. You can specify time ranges or cycles in which rules take effect.

Anti-crawler rules settings for apps

You can configure anti-crawler rules for your native iOS or Android apps (excluding HTML5 apps) to protect your services from crawlers.

Global settings

Rule Set Name: The name of the rule set. The name can contain letters, digits, and underscores (_).

Service Type: Select Apps to configure anti-crawler rules for native iOS and Android apps.

SDK Integration: To obtain the SDK package, click Obtain and Copy AppKey and then submit a ticket. For more information, see Integrate the Anti-Bot SDK into Android apps and Integrate the Anti-Bot SDK into iOS apps. After the Anti-Bot SDK is integrated, the Anti-Bot SDK collects the risk characteristics of clients and generates security signatures in requests. WAF identifies and blocks requests that are identified as unsafe based on the signatures.

If requests match...

Specify the conditions for matching incoming requests. For more information, see WAF.

Then execute...

Bot Characteristic Detection

Abnormal Device Behavior: After you enable this feature, the anti-crawler rule detects and controls the requests from the devices that have abnormal characteristics. The following behaviors are considered abnormal:

Expired Signature: The signature expires. This behavior is selected by default.

Using Simulator: A simulator is used.

Using Proxy: A proxy is used.

Rooted Device: A rooted device is used.

Debugging Mode: The debugging mode is used.

Hooking: Hooking techniques are used.

Multiboxing: Multiple protected app processes run on the device at the same time.

Simulated Execution: User behavior simulation techniques are used.

Using Script Tool: An automatic script is used.

Custom Signature Field: Turn on this switch and select header, parameter, or cookie from the Field Name drop-down list.

If the custom signature is empty, contains special characters, or exceeds the length limit, you can hash the signature or process it by using another method, and then enter the result in the Value field.

Action: You can set this parameter to Monitor or Block.

Monitor: triggers alerts and does not block requests.

Block: blocks attack requests.

Secondary Packaging Detection: Requests that are sent from apps whose package names or signatures are not in the whitelists are considered secondary packaging requests. You can specify valid application packages.

Valid Package Name: Enter the valid application package name. Example: example.aliyundoc.com.

Signature: Contact Alibaba Cloud technical support to obtain the package signature. This parameter is optional if the package signature does not need to be verified. In this case, the system verifies only the package name.

Note: The value of Signature is not the signature of the application certificate.

Bot Throttling

IP Address Throttling (Default): You can configure throttling conditions for IP addresses. If the number of requests from the same IP address within the value specified by Statistical Interval (Seconds) exceeds the value of Threshold (Times), the system performs the specified action on subsequent requests. The action can be specified by selecting Block or Monitor from the Action drop-down list.

Device Throttling: You can configure throttling conditions for devices. If the number of requests from the same device within the value specified by Statistical Interval (Seconds) exceeds the value of Threshold (Times), the system performs the specified action on subsequent requests. The action can be specified by selecting Block or Monitor from the Action drop-down list.

Custom Session Throttling: You can configure throttling conditions for sessions. You can configure the Session Type parameter to specify the session type. If the number of requests from the same session within the value specified by Statistical Interval (Seconds) exceeds the value of Threshold (Times), the system performs the specified action on subsequent requests. The action can be specified by selecting Block or Monitor from the Action drop-down list. You can also configure the Throttling Interval (Seconds) parameter, which specifies the period during which the specified action is performed.
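The Throttling Interval (Seconds) parameter adds a penalty period: after the threshold is exceeded, the action keeps applying for that long. The sketch below illustrates this under assumed semantics (fixed counting windows, and a penalty that starts when the threshold is first exceeded). The `SessionThrottle` class and its values are hypothetical.

```python
class SessionThrottle:
    """Per-session fixed-window counter with a penalty period after the threshold is exceeded."""

    def __init__(self, interval, threshold, throttle_interval):
        self.interval = interval                    # Statistical Interval (Seconds)
        self.threshold = threshold                  # Threshold (Times)
        self.throttle_interval = throttle_interval  # Throttling Interval (Seconds)
        self.windows = {}        # session -> (window_index, request_count)
        self.blocked_until = {}  # session -> time at which the action stops applying

    def should_act(self, session, now):
        """Record one request at time `now` (seconds); True if the action applies."""
        if now < self.blocked_until.get(session, 0):
            return True  # still inside the penalty period
        index = int(now // self.interval)
        prev_index, count = self.windows.get(session, (index, 0))
        count = count + 1 if prev_index == index else 1
        self.windows[session] = (index, count)
        if count > self.threshold:
            self.blocked_until[session] = now + self.throttle_interval
            return True
        return False


# Hypothetical settings: more than 2 requests in 10 s triggers the action for 60 s
throttle = SessionThrottle(interval=10, threshold=2, throttle_interval=60)
results = [throttle.should_act("session-a", t) for t in (0, 1, 2, 30, 62)]
print(results)
```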

Bot Threat Intelligence Library

The library contains the IP addresses of attackers that have sent multiple requests to crawl content from Alibaba Cloud users over a specific period of time.

Data Center Blacklist

After you enable this feature, the IP addresses in the selected IP address libraries of data centers are blocked. If you use the source IP addresses of public clouds or data centers to access the website that you want to protect, you must add the IP addresses to the whitelist. For example, you must add the callback IP addresses of Alipay or WeChat and the IP addresses of monitoring applications to the whitelist. The data center blacklist supports the following IP address libraries: IP Address Library of Data Center-Alibaba Cloud, IP Address Library of Data Center-21Vianet, IP Address Library of Data Center-Meituan Open Services, IP Address Library of Data Center-Tencent Cloud, and IP Address Library of Data Center-Other.

Effective Time

By default, rules take effect immediately after they are created and remain in effect permanently. You can specify time ranges or cycles in which rules take effect.

Security analytics

Security analytics displays data such as the number of blocked requests, observed requests, and total requests for WAF and Bot Management, so that you can adjust your protection rules based on the data.

Security analytics panels

You can see a top data panel and an overview panel.

Top data panels

You can filter the data to view the top five or all results in an available dimension:

The available dimensions are:

Overview panel

On the Overview panel:

The Request Analytics tab is an interactive chart that displays the same dimensions as the top data panel.

The Bot Analytics tab is an interactive chart that displays incoming requests categorized into Likely Human, Definite Bots, Likely Bots, or Verified Bots.

In both charts, you can move your mouse pointer over a dimension name and click Filter to show only that data, or click Exclude to remove that data from your analytics report.

More protection features

Set up real-time monitoring

Set up bandwidth peak monitoring for specific domain names in CDN. When the bandwidth reaches the specified peak, alerts are sent to administrators by text message, email, and DingTalk so that potential threats can be detected sooner. For more information, see Set alerts.
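When you choose an alert threshold, it can help to convert past traffic figures into average bandwidth and see which intervals would have triggered the alert. The sketch below assumes traffic samples in bytes at a 5-minute granularity and decimal units (1 Mbps = 1,000,000 bits per second). The sample data, the function names, and the 100 Mbps threshold are hypothetical.

```python
def bandwidth_mbps(bytes_transferred, seconds=300):
    """Average bandwidth in Mbps for a number of bytes transferred over `seconds`."""
    return bytes_transferred * 8 / seconds / 1_000_000


def peaks_over(samples, threshold_mbps, seconds=300):
    """Return (timestamp, Mbps) pairs whose average bandwidth exceeds the threshold."""
    result = []
    for timestamp, transferred in samples:
        mbps = bandwidth_mbps(transferred, seconds)
        if mbps > threshold_mbps:
            result.append((timestamp, mbps))
    return result


# Hypothetical 5-minute traffic samples in bytes
samples = [("12:00", 1_500_000_000), ("12:05", 7_500_000_000), ("12:10", 1_200_000_000)]
print(peaks_over(samples, threshold_mbps=100))
```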

Set cost alerts

You can use the following features to monitor and limit the expenses. To configure the features, move your pointer over Expenses in the top navigation bar of the console and select Expenses and Costs.

Low balance alerts: If you enable this feature, the system sends an alert by text message when your balance is lower than the threshold that you specified.

Service suspension protection: If you disable this feature, the service immediately stops running after a payment becomes overdue to prevent high overdue payments.

High bill alert: After this feature is enabled, notifications are sent to you by text message if a daily bill reaches a specified amount.

To ensure the integrity of the statistics and the accuracy of bills, Alibaba Cloud CDN issues the bill approximately 3 hours after a billing cycle ends. The point in time at which the relevant fees are deducted from your account balance may be later than the point in time at which the resources are consumed within the billing cycle. Alibaba Cloud CDN is a distributed service. Therefore, Alibaba Cloud does not provide the consumption details of resources in bills. Other CDN providers use a similar approach.