The Prefill/Decode (PD) disaggregated architecture is a mainstream optimization technique for large language model (LLM) inference. It decouples the two core stages of LLM inference, prefill (processing the input prompt) and decode (generating output tokens one at a time), and deploys them on different GPUs. Separating the stages avoids resource contention between them, which significantly reduces Time Per Output Token (TPOT) and improves system throughput. This topic uses the Qwen3-32B model as an example to demonstrate how to use Gateway with Inference Extension to route requests to an SGLang PD-disaggregated inference service deployed in an ACK cluster.
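The following sketch makes the contention argument concrete with a deliberately simplified model. In a colocated deployment, each prefill that runs on a GPU stalls that GPU's in-flight decode loop for the full prefill duration. In a disaggregated deployment, prefill runs on other GPUs, so the decode loop never stalls. The step and prefill durations are hypothetical numbers chosen for illustration. They are not measurements of Qwen3-32B or SGLang.

```python
# Toy model of prefill/decode interference. All timings are hypothetical
# and chosen only to illustrate the effect; they are not benchmark results.

def avg_tpot_ms(decode_step_ms: float, prefill_ms: float,
                prefills_in_window: int, window_steps: int,
                disaggregated: bool) -> float:
    """Average time per output token over a window of decode steps.

    Colocated: each prefill scheduled on the same GPU pauses the decode
    loop for prefill_ms. Disaggregated: prefills run on other GPUs, so
    the decode loop only pays decode_step_ms per token.
    """
    total_ms = window_steps * decode_step_ms
    if not disaggregated:
        total_ms += prefills_in_window * prefill_ms
    return total_ms / window_steps


if __name__ == "__main__":
    # Hypothetical: 30 ms per decode step, 200 ms per prefill, and one new
    # request's prefill arriving every 10 decode steps.
    colocated = avg_tpot_ms(30, 200, 1, 10, disaggregated=False)
    split = avg_tpot_ms(30, 200, 1, 10, disaggregated=True)
    print(f"colocated TPOT: {colocated:.1f} ms")      # prints 50.0 ms
    print(f"disaggregated TPOT: {split:.1f} ms")      # prints 30.0 ms
```

This model ignores one cost that disaggregation introduces: the KV cache produced by prefill must be transferred to the decode GPUs. That transfer is why the prerequisites call for GPUDirect RDMA over eRDMA.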

Before you proceed, make sure that you understand the concepts of InferencePool and InferenceModel.

For more background information about PD disaggregation, see Deploy an SGLang PD-disaggregated inference service.

This topic requires version 1.4.0 or later of Gateway with Inference Extension. When you install the component, make sure that you select Enable Gateway API Inference Extension.

Prerequisites

Create an ACK cluster of version 1.22 or later and add GPU nodes to the cluster. For more information, see Create an ACK managed cluster and Add GPU nodes to a cluster.

This topic requires a cluster with six or more GPUs, each with at least 32 GB of GPU memory. The SGLang PD disaggregation framework relies on GPUDirect RDMA (GDR) to transfer KVCache data between instances, so the selected instance type must support Elastic RDMA (eRDMA). We recommend the ecs.ebmgn8is.32xlarge instance type. For more information about instance types, see ECS Bare Metal Instance specifications.
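Before you create the node pool, you can check a planned set of nodes against the two stated requirements: at least six GPUs in total, each with at least 32 GB of memory. The sketch below uses a hypothetical inventory format (`PlannedNode`) that you fill in by hand from the ECS instance specification page. It does not query any Alibaba Cloud API.

```python
from typing import NamedTuple

# Thresholds taken from the requirements above.
MIN_TOTAL_GPUS = 6
MIN_GPU_MEMORY_GB = 32


class PlannedNode(NamedTuple):
    # Hypothetical inventory record; fill in values from the ECS
    # instance specification documentation.
    instance_type: str
    gpu_count: int
    gpu_memory_gb: int  # memory per GPU


def check_gpu_plan(nodes: list[PlannedNode]) -> list[str]:
    """Return a list of problems; an empty list means the plan qualifies."""
    problems = []
    for node in nodes:
        if node.gpu_memory_gb < MIN_GPU_MEMORY_GB:
            problems.append(
                f"{node.instance_type}: {node.gpu_memory_gb} GB per GPU "
                f"is below {MIN_GPU_MEMORY_GB} GB")
    # Only GPUs that meet the memory requirement count toward the total.
    usable = sum(n.gpu_count for n in nodes
                 if n.gpu_memory_gb >= MIN_GPU_MEMORY_GB)
    if usable < MIN_TOTAL_GPUS:
        problems.append(
            f"only {usable} qualifying GPUs; at least {MIN_TOTAL_GPUS} required")
    return problems
```

For example, `check_gpu_plan([PlannedNode("example-type", 8, 48)])` returns an empty list. The instance name and figures in that call are placeholders, not the specifications of a real instance type.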

Node operating system image: eRDMA requires a supporting software stack on the node. When you create a node pool, select the Alibaba Cloud Linux 3 64-bit (pre-installed with eRDMA software stack) image from the Alibaba Cloud Marketplace images. For more information, see Add eRDMA nodes in an ACK cluster.

Install the ack-eRDMA-controller component. For more information, see Use eRDMA to accelerate container networks and Install and configure the ACK eRDMA Controller component.

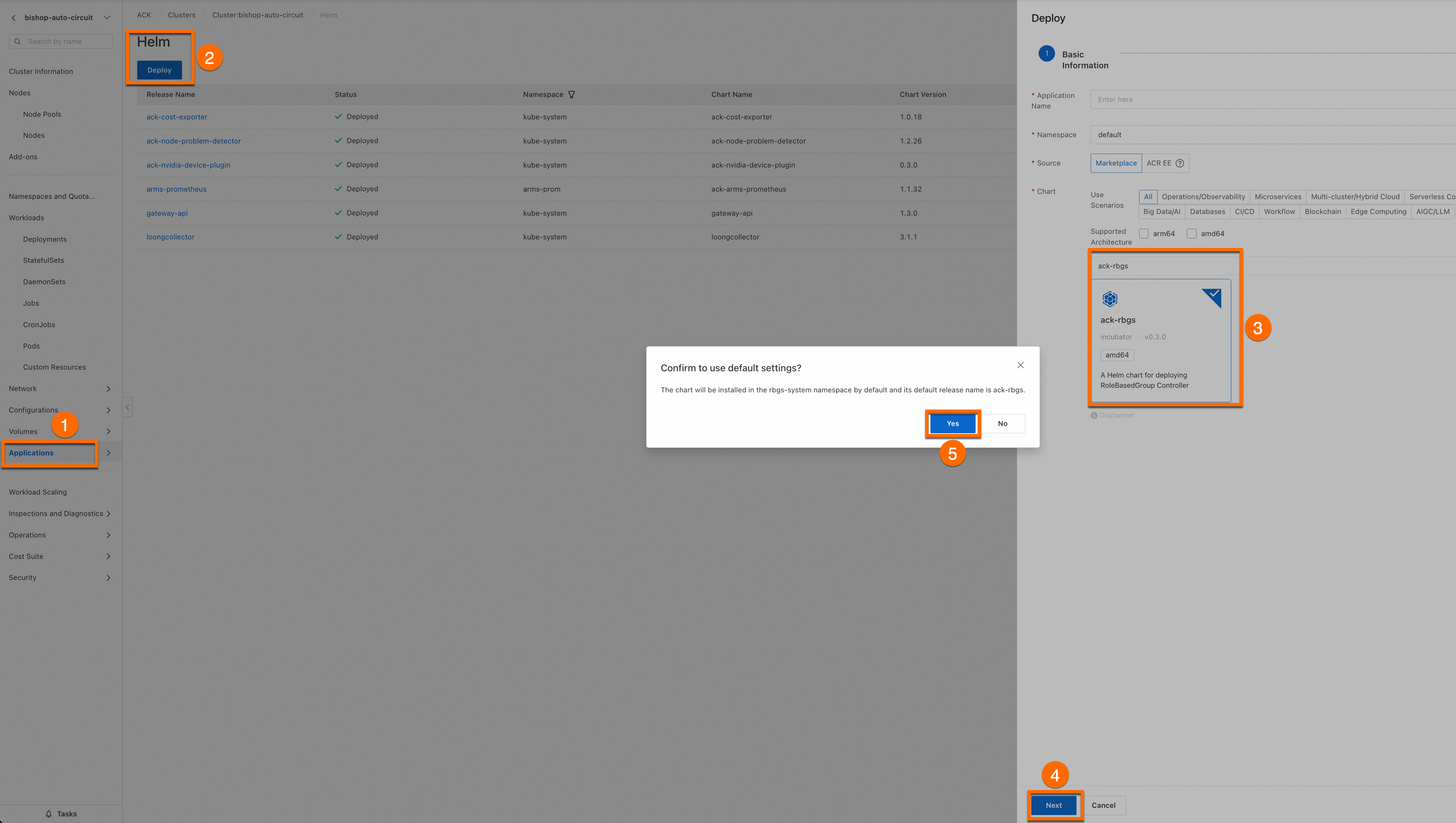

Install the ack-rbgs component as follows.

Log on to the Container Service Management Console. In the navigation pane on the left, select Cluster List. Click the name of the target cluster. On the cluster details page, use Helm to install the ack-rbgs component. You do not need to configure the Application Name or Namespace for the component. Click Next. In the Confirm dialog box that appears, click Yes to use the default application name (ack-rbgs) and namespace (rbgs-system). Then, select the latest Chart version and click OK to complete the installation.

Model deployment

Step 1: Prepare the Qwen3-32B model files

Download the Qwen3-32B model from ModelScope.

Make sure that the git-lfs plugin is installed. If it is not, run yum install git-lfs or apt-get install git-lfs to install it. For more information about installation methods, see Installing Git Large File Storage.

    git lfs install
    GIT_LFS_SKIP_SMUDGE=1 git clone https://www.modelscope.cn/Qwen/Qwen3-32B.git
    cd Qwen3-32B/
    git lfs pull

Create a folder in OSS and upload the model files to it.
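Because the repository is cloned with GIT_LFS_SKIP_SMUDGE=1, the large weight files start out as small Git LFS pointer files. They become real content only after git lfs pull succeeds. Before you upload, you can confirm that no pointers remain. The sketch below relies on the fact that every LFS pointer file begins with the line `version https://git-lfs.github.com/spec/v1`. The 1024-byte size cutoff is an assumption used to skip real weight files quickly.

```python
import os

# First line of every Git LFS pointer file (pointer spec v1).
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"
# Pointer files are only a few hundred bytes; anything larger is assumed
# to be real content and is skipped without being opened.
MAX_POINTER_SIZE = 1024


def find_lfs_pointers(model_dir: str) -> list[str]:
    """Return paths of files that are still LFS pointers, not real content."""
    leftovers = []
    for root, dirs, files in os.walk(model_dir):
        # Do not descend into Git's own metadata directory.
        dirs[:] = [d for d in dirs if d != ".git"]
        for name in files:
            path = os.path.join(root, name)
            if os.path.getsize(path) > MAX_POINTER_SIZE:
                continue
            with open(path, "rb") as f:
                if f.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX:
                    leftovers.append(path)
    return sorted(leftovers)
```

An empty result from `find_lfs_pointers("./Qwen3-32B")` means that every file has been fully downloaded.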

For information about how to install and use ossutil, see Install ossutil.

    # Run from the directory that contains the cloned Qwen3-32B folder.
    ossutil mkdir oss://<YOUR-BUCKET-NAME>/Qwen3-32B
    ossutil cp -r ./Qwen3-32B oss://<YOUR-BUCKET-NAME>/Qwen3-32B

Create a persistent volume (PV) named llm-model and a persistent volume claim (PVC) for the target cluster. For more information, see Use ossfs 1.0 to create a statically provisioned volume.

Create an llm-model.yaml file. This file contains the configurations for a Secret, a statically provisioned PV, and a statically provisioned PVC.

    apiVersion: v1
    kind: Secret
    metadata:
      name: oss-secret
    stringData:
      akId: <YOUR-OSS-AK> # The AccessKey ID used to access OSS.
      akSecret: <YOUR-OSS-SK> # The AccessKey secret used to access OSS.
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: llm-model
      labels:
        alicloud-pvname: llm-model
    spec:
      capacity:
        storage: 30Gi
      accessModes:
        - ReadOnlyMany
      persistentVolumeReclaimPolicy: Retain
      csi:
        driver: ossplugin.csi.alibabacloud.com
        volumeHandle: llm-model
        nodePublishSecretRef:
          name: oss-secret
          namespace: default
        volumeAttributes:
          bucket: <YOUR-BUCKET-NAME> # The name of the bucket.
          url: <YOUR-BUCKET-ENDPOINT> # The endpoint, such as oss-cn-hangzhou-internal.aliyuncs.com.
          otherOpts: "-o umask=022 -o max_stat_cache_size=0 -o allow_other"
          path: <YOUR-MODEL-PATH> # In this example, the path is /Qwen3-32B/.
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: llm-model
    spec:
      accessModes:
        - ReadOnlyMany
      resources:
        requests:
          storage: 30Gi
      selector:
        matchLabels:
          alicloud-pvname: llm-model

Create the Secret, the statically provisioned PV, and the statically provisioned PVC.

kubectl create -f llm-model.yaml
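The PVC binds to the statically provisioned PV only if three conditions hold: the PV's labels satisfy the PVC's selector, the PV offers the requested access modes, and the PV's capacity covers the storage request. The sketch below checks those three conditions on plain dictionaries that mirror llm-model.yaml. It is a simplified model of Kubernetes binding that ignores storage classes, volume modes, and existing bindings. Its quantity parser handles only plain integers and the binary suffixes Ki through Ti. Kubernetes quantities allow no whitespace between the number and the suffix, so a value such as "30 Gi" is rejected.

```python
# Binary suffixes used by Kubernetes resource quantities (e.g. "30Gi").
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(q: str) -> int:
    """Parse a quantity such as '30Gi' into bytes (binary suffixes only)."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if q.endswith(suffix):
            number = q[: -len(suffix)]
            # No whitespace or decimals are accepted in this simplified parser.
            if not number.isdigit():
                raise ValueError(f"invalid quantity: {q!r}")
            return int(number) * factor
    if q.isdigit():
        return int(q)
    raise ValueError(f"unsupported quantity: {q!r}")


def pvc_can_bind(pv: dict, pvc: dict) -> bool:
    """Simplified static-binding check between one PV and one PVC."""
    pv_labels = pv["metadata"].get("labels", {})
    selector = pvc["spec"].get("selector", {}).get("matchLabels", {})
    labels_ok = all(pv_labels.get(k) == v for k, v in selector.items())
    # Every access mode the PVC asks for must be offered by the PV.
    modes_ok = set(pvc["spec"]["accessModes"]) <= set(pv["spec"]["accessModes"])
    requested = parse_quantity(pvc["spec"]["resources"]["requests"]["storage"])
    capacity = parse_quantity(pv["spec"]["capacity"]["storage"])
    return labels_ok and modes_ok and capacity >= requested


# The relevant fields of llm-model.yaml, as dictionaries.
EXAMPLE_PV = {
    "metadata": {"name": "llm-model", "labels": {"alicloud-pvname": "llm-model"}},
    "spec": {"capacity": {"storage": "30Gi"}, "accessModes": ["ReadOnlyMany"]},
}
EXAMPLE_PVC = {
    "metadata": {"name": "llm-model"},
    "spec": {
        "accessModes": ["ReadOnlyMany"],
        "resources": {"requests": {"storage": "30Gi"}},
        "selector": {"matchLabels": {"alicloud-pvname": "llm-model"}},
    },
}
```

With these values, `pvc_can_bind(EXAMPLE_PV, EXAMPLE_PVC)` is `True`. After you create the resources, `kubectl get pvc llm-model` shows whether the real claim reached the Bound status.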

Step 2: Deploy the SGLang PD-disaggregated inference service

Create an sglang_pd.yaml file.

Deploy the SGLang PD-disaggregated inference service.

kubectl create -f sglang_pd.yaml

Inference routing deployment

Step 1: Deploy the inference routing policy

Create an inference-policy.yaml file.

    # InferencePool declares that inference routing is enabled for the workload.
    apiVersion: inference.networking.x-k8s.io/v1alpha2
    kind: InferencePool
    metadata:
      name: qwen-inference-pool
    spec:
      targetPortNumber: 8000
      selector:
        alibabacloud.com/inference_backend: sglang # Selects both the prefill and decode workloads.
    ---
    # InferenceTrafficPolicy specifies the traffic policy applied to the InferencePool.
    apiVersion: inferenceextension.alibabacloud.com/v1alpha1
    kind: InferenceTrafficPolicy
    metadata:
      name: inference-policy
    spec:
      poolRef:
        name: qwen-inference-pool
      modelServerRuntime: sglang # Specifies that the backend service runtime framework is SGLang.
      profile:
        pd: # Specifies that the backend service is deployed in PD-disaggregated mode.
          pdRoleLabelName: rolebasedgroup.workloads.x-k8s.io/role # The pod label that distinguishes the prefill and decode roles in the InferencePool.
          kvTransfer:
            bootstrapPort: 34000 # The bootstrap port that the SGLang PD-disaggregated service uses for KVCache transmission. It must match the disaggregation-bootstrap-port parameter specified in the RoleBasedGroup deployment.

Deploy the inference routing policy.

kubectl apply -f inference-policy.yaml
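Conceptually, this policy tells the gateway to do three things. First, take the pods selected by the InferencePool. Second, split them into prefill and decode groups by the value of the rolebasedgroup.workloads.x-k8s.io/role label. Third, pair one pod from each group for every request, and use the bootstrap port (34000) on the prefill pod for the KVCache handoff. The sketch below models only this grouping and pairing step. It is not the scheduling algorithm of Gateway with Inference Extension. The role values `prefill` and `decode`, the `Pod` record, and the least-in-flight selection rule are all assumptions for illustration.

```python
from dataclasses import dataclass

POOL_SELECTOR = {"alibabacloud.com/inference_backend": "sglang"}
ROLE_LABEL = "rolebasedgroup.workloads.x-k8s.io/role"
TARGET_PORT = 8000      # InferencePool targetPortNumber
BOOTSTRAP_PORT = 34000  # kvTransfer.bootstrapPort
# Assumed role label values; use the role names defined in sglang_pd.yaml.
PREFILL_ROLE, DECODE_ROLE = "prefill", "decode"


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict
    in_flight: int = 0  # hypothetical load signal


def in_pool(pod: Pod) -> bool:
    """True if the pod matches the InferencePool label selector."""
    return all(pod.labels.get(k) == v for k, v in POOL_SELECTOR.items())


def pick_pd_pair(pods: list[Pod]) -> dict:
    """Pick the least-loaded prefill pod and decode pod (illustrative only)."""
    pool = [p for p in pods if in_pool(p)]
    prefill = [p for p in pool if p.labels.get(ROLE_LABEL) == PREFILL_ROLE]
    decode = [p for p in pool if p.labels.get(ROLE_LABEL) == DECODE_ROLE]
    if not prefill or not decode:
        raise RuntimeError("need at least one prefill and one decode pod")
    p = min(prefill, key=lambda pod: pod.in_flight)
    d = min(decode, key=lambda pod: pod.in_flight)
    return {
        "prefill": f"{p.ip}:{TARGET_PORT}",
        "decode": f"{d.ip}:{TARGET_PORT}",
        # Simplified: the decode side reaches the prefill pod's bootstrap
        # server at this address to set up the KVCache transfer.
        "bootstrap": f"{p.ip}:{BOOTSTRAP_PORT}",
    }
```

Pods that carry only the role label, without the alibabacloud.com/inference_backend label, are never considered. This is why both the prefill and decode workloads must carry that label.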

Step 2: Deploy the gateway and gateway routing rules

Create an inference-gateway.yaml file. This file includes the gateway, gateway routing rules, and backend timeout rules.

    apiVersion: gateway.networking.k8s.io/v1
    kind: Gateway
    metadata:
      name: inference-gateway
    spec:
      gatewayClassName: ack-gateway
      listeners:
        - name: http-llm
          protocol: HTTP
          port: 8080
    ---
    apiVersion: gateway.networking.k8s.io/v1
    kind: HTTPRoute
    metadata:
      name: inference-route
    spec:
      parentRefs:
        - name: inference-gateway
      rules:
        - matches:
            - path:
                type: PathPrefix
                value: /v1
          backendRefs:
            - name: qwen-inference-pool
              kind: InferencePool
              group: inference.networking.x-k8s.io
    ---
    apiVersion: gateway.envoyproxy.io/v1alpha1
    kind: BackendTrafficPolicy
    metadata:
      name: backend-timeout
    spec:
      timeout:
        http:
          requestTimeout: 24h
      targetRef:
        group: gateway.networking.k8s.io
        kind: Gateway
        name: inference-gateway

Deploy the gateway and routing rules.

kubectl apply -f inference-gateway.yaml
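The HTTPRoute forwards every request whose path matches the PathPrefix /v1 to the InferencePool. In the Gateway API, PathPrefix matching is case-sensitive and works element by element on /-separated path segments, and a trailing / on the prefix is ignored. As a result, /v1/chat/completions matches, but /v10/models does not. The following minimal sketch of that rule is for illustration only. It is not Envoy Gateway's implementation and does not handle percent-encoding or other path normalization.

```python
def path_prefix_matches(prefix: str, path: str) -> bool:
    """Gateway API PathPrefix semantics: element-wise, case-sensitive,
    with any trailing '/' on the prefix ignored."""
    # Only the path takes part in matching; drop any query string.
    path = path.split("?", 1)[0]
    prefix_elems = [e for e in prefix.rstrip("/").split("/") if e]
    path_elems = [e for e in path.split("/") if e]
    # The path matches if its leading elements equal the prefix elements.
    return path_elems[: len(prefix_elems)] == prefix_elems
```

With the rule above, both /v1/chat/completions and /v1/models reach the SGLang service. Requests outside /v1 are not matched by this route.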

Step 3: Verify inference routing for the SGLang PD-disaggregated service

Obtain the IP address of the gateway.

    export GATEWAY_IP=$(kubectl get gateway/inference-gateway -o jsonpath='{.status.addresses[0].value}')

Verify that the gateway routes traffic to the inference service over HTTP on port 8080.

    curl http://$GATEWAY_IP:8080/v1/chat/completions \
      -H "Content-Type: application/json" \
      -d '{
        "model": "/models/Qwen3-32B",
        "messages": [
          {"role": "user", "content": "Hello, this is a test"}
        ],
        "max_tokens": 50
      }'

Expected output:

    {"id":"02ceade4e6f34aeb98c2819b8a2545d6","object":"chat.completion","created":1755589644,"model":"/models/Qwen3-32B","choices":[{"index":0,"message":{"role":"assistant","content":"<think>\nOkay, the user sent \"Hello, this is a test\". It seems they are testing my response. First, I need to confirm what the user's request is. It's possible they want to see if my reply meets their expectations or to check for errors. I should remain friendly and","reasoning_content":null,"tool_calls":null},"logprobs":null,"finish_reason":"length","matched_stop":null}],"usage":{"prompt_tokens":12,"total_tokens":62,"completion_tokens":50,"prompt_tokens_details":null}}

The output indicates that Gateway with Inference Extension correctly routed the request to the SGLang PD-disaggregated inference service, which generated a response to the input. The response stops at 50 completion tokens (finish_reason is length) because max_tokens is set to 50.
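You can also script the same check. The sketch below uses only the Python standard library. It sends the request from the curl example to the OpenAI-compatible /v1/chat/completions endpoint, then extracts the model name, generated text, finish reason, and completion token count from the response. The function names and the 300-second client timeout are illustrative choices, not part of the product.

```python
import json
import urllib.request


def build_request(gateway_ip: str, prompt: str,
                  max_tokens: int = 50) -> urllib.request.Request:
    """Build the same POST request that the curl example sends."""
    body = {
        "model": "/models/Qwen3-32B",
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"http://{gateway_ip}:8080/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarize(response_body: str) -> dict:
    """Extract the fields worth checking from a chat.completion response."""
    resp = json.loads(response_body)
    choice = resp["choices"][0]
    return {
        "model": resp["model"],
        "content": choice["message"]["content"],
        "finish_reason": choice["finish_reason"],
        "completion_tokens": resp["usage"]["completion_tokens"],
    }


def check_gateway(gateway_ip: str, prompt: str = "Hello, this is a test") -> dict:
    """Send one request through the gateway and summarize the reply."""
    req = build_request(gateway_ip, prompt)
    # Generous client-side timeout, chosen arbitrarily for this sketch.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return summarize(resp.read().decode("utf-8"))
```

For example, after `import os`, `check_gateway(os.environ["GATEWAY_IP"])` should return a dictionary whose finish_reason is length and whose completion_tokens is 50, matching the expected output above.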