GPU sharing lets you schedule multiple pods to the same GPU so that they share its computing resources. This improves GPU utilization and reduces costs. Containers that share a GPU can be isolated from each other, with each container limited to the resources that its application requested. This prevents one container from exceeding its limit and affecting the normal operation of other containers. This topic describes how to use GPU sharing in an ACK Edge cluster.

Prerequisites

An ACK Edge cluster that runs Kubernetes 1.18 or later is created. For more information, see Create an ACK Edge cluster.

The cloud-native AI suite is activated. For more information about the cloud-native AI suite, see Cloud-native AI suite and Billing of the cloud-native AI suite.

Limits

The cloud nodes of the ACK Edge cluster support the GPU sharing, GPU memory isolation, and computing power isolation features.

The edge node pools of the ACK Edge cluster support only GPU sharing. The GPU memory isolation and computing power isolation features are not supported.

Usage notes

For GPU nodes that are managed in Container Service for Kubernetes (ACK) clusters, you need to pay attention to the following items when you request GPU resources for applications and use GPU resources.

Do not run GPU-heavy applications directly on nodes.

Do not use tools such as Docker, Podman, or nerdctl to create containers and request GPU resources for the containers. For example, do not run the docker run --gpus all or docker run -e NVIDIA_VISIBLE_DEVICES=all command and run GPU-heavy applications.

Do not add the NVIDIA_VISIBLE_DEVICES=all or NVIDIA_VISIBLE_DEVICES=<GPU ID> environment variable to the env section in the pod YAML file. Do not use the NVIDIA_VISIBLE_DEVICES environment variable to request GPU resources for pods and run GPU-heavy applications.

Do not set NVIDIA_VISIBLE_DEVICES=all and run GPU-heavy applications when you build container images if the NVIDIA_VISIBLE_DEVICES environment variable is not specified in the pod YAML file.

Do not add privileged: true to the securityContext section in the pod YAML file and run GPU-heavy applications.

The following potential risks may exist when you use the preceding methods to request GPU resources for your application:

If you use one of the preceding methods to request GPU resources on a node and the allocation is not recorded in the device resource ledger of the scheduler, the actual GPU resource allocation on the node may differ from the information in the ledger. In this scenario, the scheduler can still schedule other pods that request GPU resources to the node. As a result, your applications may compete for the resources of the same GPU, and some applications may fail to start due to insufficient GPU resources.

Using the preceding methods may also cause other unknown issues, such as the issues reported by the NVIDIA community.
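The pod-level items in the preceding list can be checked before a manifest is applied. The following is a minimal sketch, not an official ACK tool: the function name find_gpu_request_risks and the sample dictionaries are hypothetical, and the check covers only the env and securityContext settings named above. It cannot detect variables baked into a container image or containers started directly with Docker, Podman, or nerdctl.

```python
def find_gpu_request_risks(pod_spec):
    """Return warnings for pod settings that bypass the scheduler's GPU ledger.

    pod_spec is a plain dict shaped like the `spec` section of a pod manifest.
    This helper is illustrative only and is not part of any ACK component.
    """
    warnings = []
    containers = pod_spec.get("containers", []) + pod_spec.get("initContainers", [])
    for container in containers:
        name = container.get("name", "<unnamed>")
        # NVIDIA_VISIBLE_DEVICES in env requests GPUs outside the scheduler.
        for env in container.get("env", []):
            if env.get("name") == "NVIDIA_VISIBLE_DEVICES":
                warnings.append(f"{name}: sets NVIDIA_VISIBLE_DEVICES in env")
        # privileged: true exposes the node's devices to the container.
        security = container.get("securityContext") or {}
        if security.get("privileged") is True:
            warnings.append(f"{name}: runs with privileged: true")
    return warnings


# Hypothetical specs used only to exercise the check.
risky = {
    "containers": [
        {
            "name": "trainer",
            "env": [{"name": "NVIDIA_VISIBLE_DEVICES", "value": "all"}],
            "securityContext": {"privileged": True},
        }
    ]
}
safe = {
    "containers": [
        {"name": "trainer", "resources": {"limits": {"aliyun.com/gpu-mem": 3}}}
    ]
}

print(find_gpu_request_risks(risky))
print(find_gpu_request_risks(safe))
```

A check like this could run in a CI step or a pre-apply script. The safe spec requests GPU memory through the aliyun.com/gpu-mem resource limit, which is the method used by the sample applications in this topic.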

Step 1: Install the GPU sharing component

The cloud-native AI suite is not deployed

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane, choose .

On the Cloud-native AI Suite page, click Deploy.

On the Deploy Cloud-native AI Suite page, select Scheduling Policy Extension (Batch Task Scheduling, GPU Sharing, Topology-aware GPU Scheduling).

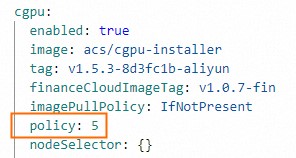

(Optional) Click Advanced to the right of Scheduling Policy Extension (Batch Task Scheduling, GPU Sharing, Topology-aware GPU Scheduling). In the Parameters panel, modify the policy parameter of cGPU. Click OK.

If you do not have requirements on the computing power sharing feature provided by cGPU, we recommend that you use the default setting policy: 5. For more information about the policies supported by cGPU, see Install and use cGPU on a Docker container.

In the lower part of the Cloud-native AI Suite page, click Deploy Cloud-native AI Suite.

After the cloud-native AI suite is installed, you can find that ack-ai-installer is in the Deployed state on the Cloud-native AI Suite page.

The cloud-native AI suite is deployed

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane, choose .

Find ack-ai-installer and click Deploy in the Actions column.

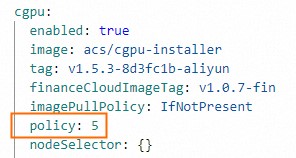

(Optional) In the Parameters panel, modify the policy parameter of cGPU.

If you do not have requirements on the computing power sharing feature provided by cGPU, we recommend that you use the default setting policy: 5. For more information about the policies supported by cGPU, see Install and use cGPU on a Docker container.

After you complete the configuration, click OK.

After ack-ai-installer is installed, the state of the component changes to Deployed.

Step 2: Create a GPU node pool

Create a cloud GPU node pool to enable the GPU sharing, GPU memory isolation, and computing power sharing features.

Create an edge GPU node pool to enable GPU sharing.

Cloud node pools

On the Clusters page, find the cluster to manage and click its name. In the left-side navigation pane, choose .

In the upper-right corner of the Node Pools page, click Create Node Pool.

In the Create Node Pool dialog box, configure the parameters to create a node pool and click Confirm Order.

The key parameters are described below. For more information about other parameters, see Create and manage a node pool.

Expected Nodes: The initial number of nodes in the node pool. If you do not want to create nodes in the node pool, set this parameter to 0.

Node Label: The labels that you want to add to the node pool based on your business requirements. For more information about node labels, see Labels for enabling GPU scheduling policies and methods for changing label values.

In this example, the value of the label is set to cgpu, which indicates that GPU sharing is enabled for the node. The pods on the node need to request only GPU memory. Multiple pods can share the same GPU to implement GPU memory isolation and computing power sharing.

Click the icon next to the Node Label parameter, set the Key field to ack.node.gpu.schedule, and then set the Value field to cgpu. For more information about some common issues when you use the memory isolation capability provided by cGPU, see Usage notes for the memory isolation capability of cGPU.

Important: After you add the label for enabling GPU sharing to a node, do not run the kubectl label nodes command to change the label value or use the label management feature to change the node label on the Nodes page in the ACK console. This prevents potential issues. For more information about these potential issues, see Issues that may occur if you use the kubectl label nodes command or use the label management feature to change label values in the ACK console. We recommend that you configure GPU sharing based on node pools. For more information, see Configure GPU scheduling policies for node pools.

Edge node pools

On the Clusters page, find the cluster to manage and click its name. In the left-side navigation pane, choose .

On the Node Pools page, click Create Node Pool.

In the Create Node Pool dialog box, configure the parameters and click Confirm Order. The following table describes the key parameters. For more information about the other parameters, see Edge node pool management.

Node Labels: Click the icon in the Node Labels section, set Key to ack.node.gpu.schedule and Value to share. This label value enables GPU sharing. For more information about node labels, see Labels for enabling GPU scheduling policies.

Step 3: Add GPU-accelerated nodes

Add GPU-accelerated nodes to the cloud node pool and edge node pool, respectively.

Cloud nodes

If you already added GPU-accelerated nodes to the node pool when you created the node pool, skip this step.

After the node pool is created, you can add GPU-accelerated nodes to the node pool. To add GPU-accelerated nodes, you need to select ECS instances that use the GPU-accelerated architecture. For more information, see Add existing ECS instances or Create and manage a node pool.

Edge nodes

For more information about how to add GPU-accelerated nodes to an edge node pool, see Add a GPU-accelerated node.

Step 4: Install and use the GPU inspection tool on cloud nodes

Download kubectl-inspect-cgpu. The executable file must be downloaded to a directory included in the PATH environment variable. This section uses /usr/local/bin/ as an example.

If you use Linux, run the following command to download kubectl-inspect-cgpu:

    wget http://aliacs-k8s-cn-beijing.oss-cn-beijing.aliyuncs.com/gpushare/kubectl-inspect-cgpu-linux -O /usr/local/bin/kubectl-inspect-cgpu

If you use macOS, run the following command to download kubectl-inspect-cgpu:

    wget http://aliacs-k8s-cn-beijing.oss-cn-beijing.aliyuncs.com/gpushare/kubectl-inspect-cgpu-darwin -O /usr/local/bin/kubectl-inspect-cgpu

Run the following command to grant the execute permissions to kubectl-inspect-cgpu:

    chmod +x /usr/local/bin/kubectl-inspect-cgpu

Run the following command to query the GPU usage of the cluster:

    kubectl inspect cgpu

Expected output:

    NAME                       IPADDRESS      GPU0(Allocated/Total)  GPU Memory(GiB)
    cn-shanghai.192.168.6.104  192.168.6.104  0/15                   0/15
    ----------------------------------------------------------------------
    Allocated/Total GPU Memory In Cluster: 0/15 (0%)
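If you want to use the cluster-wide summary in a script, the last line of the report can be parsed. The following is a minimal sketch that assumes the summary has the form shown in the expected output above. The function name parse_cluster_summary and the returned key names are hypothetical, and the report text is embedded as a sample string instead of being read from a live cluster.

```python
import re

# Matches the summary shown in the expected output, for example
# "Allocated/Total GPU Memory In Cluster: 0/15 (0%)". The \s* also
# tolerates a line break between the label and the numbers.
SUMMARY = re.compile(
    r"Allocated/Total GPU Memory In Cluster:\s*(\d+)/(\d+)\s*\((\d+)%\)"
)


def parse_cluster_summary(report):
    """Extract allocated GiB, total GiB, and percentage from the report text."""
    match = SUMMARY.search(report)
    if match is None:
        raise ValueError("summary line not found in report")
    allocated, total, percent = (int(group) for group in match.groups())
    return {"allocated_gib": allocated, "total_gib": total, "percent": percent}


# Sample text copied from the expected output in this step.
sample = """\
NAME                       IPADDRESS      GPU0(Allocated/Total)  GPU Memory(GiB)
cn-shanghai.192.168.6.104  192.168.6.104  0/15                   0/15
----------------------------------------------------------------------
Allocated/Total GPU Memory In Cluster: 0/15 (0%)
"""

summary = parse_cluster_summary(sample)
free_gib = summary["total_gib"] - summary["allocated_gib"]
print(summary, free_gib)
```

In practice, the report text would come from the output of the kubectl inspect cgpu command. If a newer version of the tool changes the output format, the regular expression needs to be adjusted.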

Step 5: Example of GPU sharing

Cloud node pools

Run the following command to query information about GPU sharing in your cluster:

    kubectl inspect cgpu

Expected output:

    NAME                     IPADDRESS    GPU0(Allocated/Total)  GPU1(Allocated/Total)  GPU Memory(GiB)
    cn-shanghai.192.168.0.4  192.168.0.4  0/7                    0/7                    0/14
    ---------------------------------------------------------------------
    Allocated/Total GPU Memory In Cluster: 0/14 (0%)

Note: To query detailed information about GPU sharing, run the kubectl inspect cgpu -d command.

Deploy a sample application that has GPU sharing enabled and request 3 GiB of GPU memory for the application.

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: gpu-share-sample
    spec:
      parallelism: 1
      template:
        metadata:
          labels:
            app: gpu-share-sample
        spec:
          nodeSelector:
            alibabacloud.com/nodepool-id: npxxxxxxxxxxxxxx # Replace this parameter with the ID of the node pool you created.
          containers:
          - name: gpu-share-sample
            image: registry.cn-hangzhou.aliyuncs.com/ai-samples/gpushare-sample:tensorflow-1.5
            command:
            - python
            - tensorflow-sample-code/tfjob/docker/mnist/main.py
            - --max_steps=100000
            - --data_dir=tensorflow-sample-code/data
            resources:
              limits:
                # The pod requests a total of 3 GiB of GPU memory.
                aliyun.com/gpu-mem: 3 # Specify the amount of GPU memory that is requested by the pod.
            workingDir: /root
          restartPolicy: Never
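If you deploy several jobs that differ only in name, node pool, and GPU memory request, the manifest can be generated instead of copied. The following is a minimal sketch under stated assumptions: the function name build_gpu_share_job is hypothetical, the image and command are taken from the sample above, and the output is JSON, which kubectl accepts in place of YAML. The integer check reflects the Kubernetes rule that extended resources are requested in whole units.

```python
import json


def build_gpu_share_job(name, nodepool_id, gpu_mem_gib):
    """Build a Job manifest (as a dict) that requests shared GPU memory in GiB."""
    # Extended resources such as aliyun.com/gpu-mem take whole-number quantities.
    if not isinstance(gpu_mem_gib, int) or gpu_mem_gib <= 0:
        raise ValueError("gpu_mem_gib must be a positive integer (GiB)")
    container = {
        "name": name,
        "image": "registry.cn-hangzhou.aliyuncs.com/ai-samples/gpushare-sample:tensorflow-1.5",
        "command": [
            "python",
            "tensorflow-sample-code/tfjob/docker/mnist/main.py",
            "--max_steps=100000",
            "--data_dir=tensorflow-sample-code/data",
        ],
        "resources": {"limits": {"aliyun.com/gpu-mem": gpu_mem_gib}},
        "workingDir": "/root",
    }
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "parallelism": 1,
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "nodeSelector": {"alibabacloud.com/nodepool-id": nodepool_id},
                    "containers": [container],
                    "restartPolicy": "Never",
                },
            },
        },
    }


# npxxxxxxxxxxxxxx is the placeholder from the sample; replace it with your node pool ID.
job = build_gpu_share_job("gpu-share-sample", "npxxxxxxxxxxxxxx", 3)
print(json.dumps(job, indent=2))
```

The printed JSON can be saved to a file and passed to kubectl apply -f in the same way as the YAML manifest.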

Edge node pools

Deploy a sample application for which cGPU is enabled and request 4 GiB of GPU memory for the application.

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: tensorflow-mnist-share
    spec:
      parallelism: 1
      template:
        metadata:
          labels:
            app: tensorflow-mnist-share
        spec:
          nodeSelector:
            alibabacloud.com/nodepool-id: npxxxxxxxxxxxxxx # Replace this parameter with the ID of the edge node pool that you created.
          containers:
          - name: tensorflow-mnist-share
            image: registry.cn-beijing.aliyuncs.com/ai-samples/gpushare-sample:tensorflow-1.5
            command:
            - python
            - tensorflow-sample-code/tfjob/docker/mnist/main.py
            - --max_steps=100000
            - --data_dir=tensorflow-sample-code/data
            resources:
              limits:
                aliyun.com/gpu-mem: 4 # Request 4 GiB of memory.
            workingDir: /root
          restartPolicy: Never

Step 6: Verify the results

Cloud node pools

Log on to the control plane.

Run the following command to print the log of the deployed application to check whether GPU memory isolation is enabled:

    kubectl logs gpu-share-sample --tail=1

Expected output:

    2023-08-07 09:08:13.931003: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1326] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 2832 MB memory) -> physical GPU (device: 0, name: Tesla T4, pci bus id: 0000:00:07.0, compute capability: 7.5)

The output indicates that 2,832 MiB of GPU memory is requested by the container.

Run the following command to log on to the container and view the amount of GPU memory that is allocated to the container:

    kubectl exec -it gpu-share-sample -- nvidia-smi

Expected output:

    Mon Aug  7 08:52:18 2023
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 418.87.01    Driver Version: 418.87.01    CUDA Version: 10.1     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |===============================+======================+======================|
    |   0  Tesla T4            On   | 00000000:00:07.0 Off |                    0 |
    | N/A   41C    P0    26W /  70W |   3043MiB /  3231MiB |      0%      Default |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                       GPU Memory |
    |  GPU       PID   Type   Process name                             Usage      |
    |=============================================================================|
    +-----------------------------------------------------------------------------+

The output indicates that the amount of GPU memory allocated to the container is 3,231 MiB.

Run the following command to query the total GPU memory of the GPU-accelerated node where the application is deployed:

    nvidia-smi

Expected output:

    Mon Aug  7 09:18:26 2023
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 418.87.01    Driver Version: 418.87.01    CUDA Version: 10.1     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |===============================+======================+======================|
    |   0  Tesla T4            On   | 00000000:00:07.0 Off |                    0 |
    | N/A   40C    P0    26W /  70W |   3053MiB / 15079MiB |      0%      Default |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                       GPU Memory |
    |  GPU       PID   Type   Process name                             Usage      |
    |=============================================================================|
    |    0      8796      C   python3                                     3043MiB |
    +-----------------------------------------------------------------------------+

The output indicates that the total GPU memory of the node is 15,079 MiB and 3,053 MiB of GPU memory is allocated to the container.
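The comparison between the two nvidia-smi outputs can also be scripted. The following is a minimal sketch that assumes the Memory-Usage column has the used/total form shown in the expected outputs above. The function name memory_usage_mib is hypothetical, and the two rows are copied from those outputs instead of being captured from a running pod and node.

```python
import re

# Matches the Memory-Usage column, for example "3043MiB /  3231MiB".
# Rows in the Processes table contain no "/" and are therefore skipped.
MEMORY = re.compile(r"(\d+)MiB\s*/\s*(\d+)MiB")


def memory_usage_mib(nvidia_smi_output):
    """Return one (used_mib, total_mib) tuple per GPU row in the output."""
    return [(int(used), int(total)) for used, total in MEMORY.findall(nvidia_smi_output)]


# Rows copied from the expected outputs in this step.
container_row = "| N/A   41C    P0    26W /  70W |   3043MiB /  3231MiB |      0%      Default |"
node_row = "| N/A   40C    P0    26W /  70W |   3053MiB / 15079MiB |      0%      Default |"

container_total = memory_usage_mib(container_row)[0][1]
node_total = memory_usage_mib(node_row)[0][1]

# With GPU memory isolation, the container sees less memory than the node has.
isolated = container_total < node_total
print(container_total, node_total, isolated)
```

In this example the container sees 3,231 MiB while the node reports 15,079 MiB, which is consistent with GPU memory isolation being in effect for the 3 GiB request.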

Edge node pools

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side pane, choose .

Click Terminal in the Actions column of the pod that you created, such as tensorflow-mnist-share-***. Select the name of the pod that you want to manage, and run the following command:

    nvidia-smi

Expected output:

    Wed Jun 14 06:45:56 2023
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 515.105.01   Driver Version: 515.105.01   CUDA Version: 11.7     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  Tesla V100-SXM2...  On   | 00000000:00:09.0 Off |                    0 |
    | N/A   35C    P0    59W / 300W |    334MiB / 16384MiB |      0%      Default |
    |                               |                      |                  N/A |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                                  |
    |  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
    |        ID   ID                                                   Usage      |
    |=============================================================================|
    +-----------------------------------------------------------------------------+

In this example, a V100 GPU is used. The output indicates that the pod can use all memory provided by the GPU, which is 16,384 MiB in size. This means that GPU sharing is implemented without GPU memory isolation. If GPU memory isolation were enabled, the memory size displayed in the output would equal the amount of memory requested by the pod, which is 4 GiB in this example.

The pod determines the amount of GPU memory that it can use based on the following environment variables:

    ALIYUN_COM_GPU_MEM_CONTAINER=4 # The amount of GPU memory that the pod can use.
    ALIYUN_COM_GPU_MEM_DEV=16 # The memory size of each GPU.

To calculate the ratio of the GPU memory required by the application, use the following formula:

    percentage = ALIYUN_COM_GPU_MEM_CONTAINER / ALIYUN_COM_GPU_MEM_DEV = 4 / 16 = 0.25
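Because edge node pools do not enforce GPU memory isolation, the application itself has to stay within its share. The following is a minimal sketch of how an application might compute the ratio from the two environment variables at startup. The function name gpu_memory_fraction is hypothetical, and the values are passed in as a dictionary here so that the example runs outside a pod.

```python
import os


def gpu_memory_fraction(environ=os.environ):
    """Return the share of one GPU's memory that this pod is allowed to use."""
    container_gib = float(environ["ALIYUN_COM_GPU_MEM_CONTAINER"])
    device_gib = float(environ["ALIYUN_COM_GPU_MEM_DEV"])
    if device_gib <= 0:
        raise ValueError("ALIYUN_COM_GPU_MEM_DEV must be greater than 0")
    # Cap at 1.0 in case the request equals or exceeds the size of the device.
    return min(container_gib / device_gib, 1.0)


# Values from the example above, supplied explicitly instead of read from the pod.
fraction = gpu_memory_fraction(
    {"ALIYUN_COM_GPU_MEM_CONTAINER": "4", "ALIYUN_COM_GPU_MEM_DEV": "16"}
)
print(fraction)  # 0.25
```

Inside a pod, calling gpu_memory_fraction() with no arguments reads the variables from the environment. The result can then be passed to a framework-level memory cap, for example the per_process_gpu_memory_fraction GPU option in TensorFlow 1.x, if your application uses a framework that offers one.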

References

For more information about GPU sharing, see GPU sharing.

For more information about how to update the GPU sharing component, see Install the GPU sharing component.

For more information about how to disable the GPU memory isolation feature for an application, see Disable the memory isolation feature of cGPU.

For more information about the advanced capabilities of GPU sharing, see Advanced capabilities.