By Yang QiuDi

Hello everyone, I am Yang Qiudi, a senior product expert at Alibaba Cloud Intelligence Group. I am honored to be here at the Yunqi Conference today to share some of our practices and reflections from the past year of helping enterprises build AI applications.

Whether you are a researcher, an enterprise adopting AI, or a vendor supplying AI technologies and products, I believe everyone working in this field shares the same feeling: the development of AI applications is unstoppable, and it is reshaping the software industry. Let's take a look at some data:

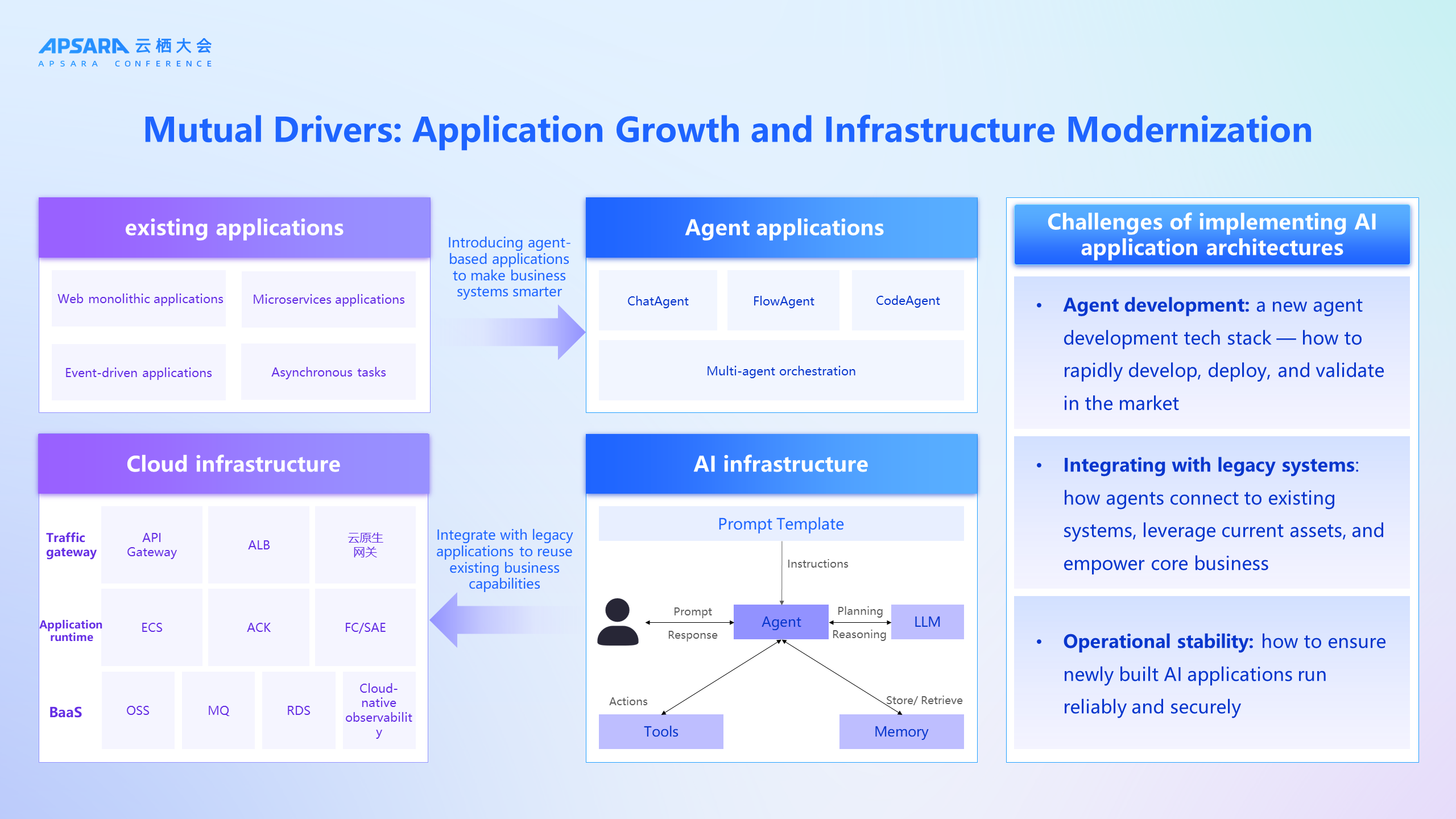

As the data shows, intelligent applications have gradually become an important part of customers' application architectures. This evolution shows that application development and infrastructure upgrades drive each other.

However, this evolution is not without challenges. In serving customers on the cloud, we have found that the obstacles to putting an AI application architecture into production center on the following three aspects:

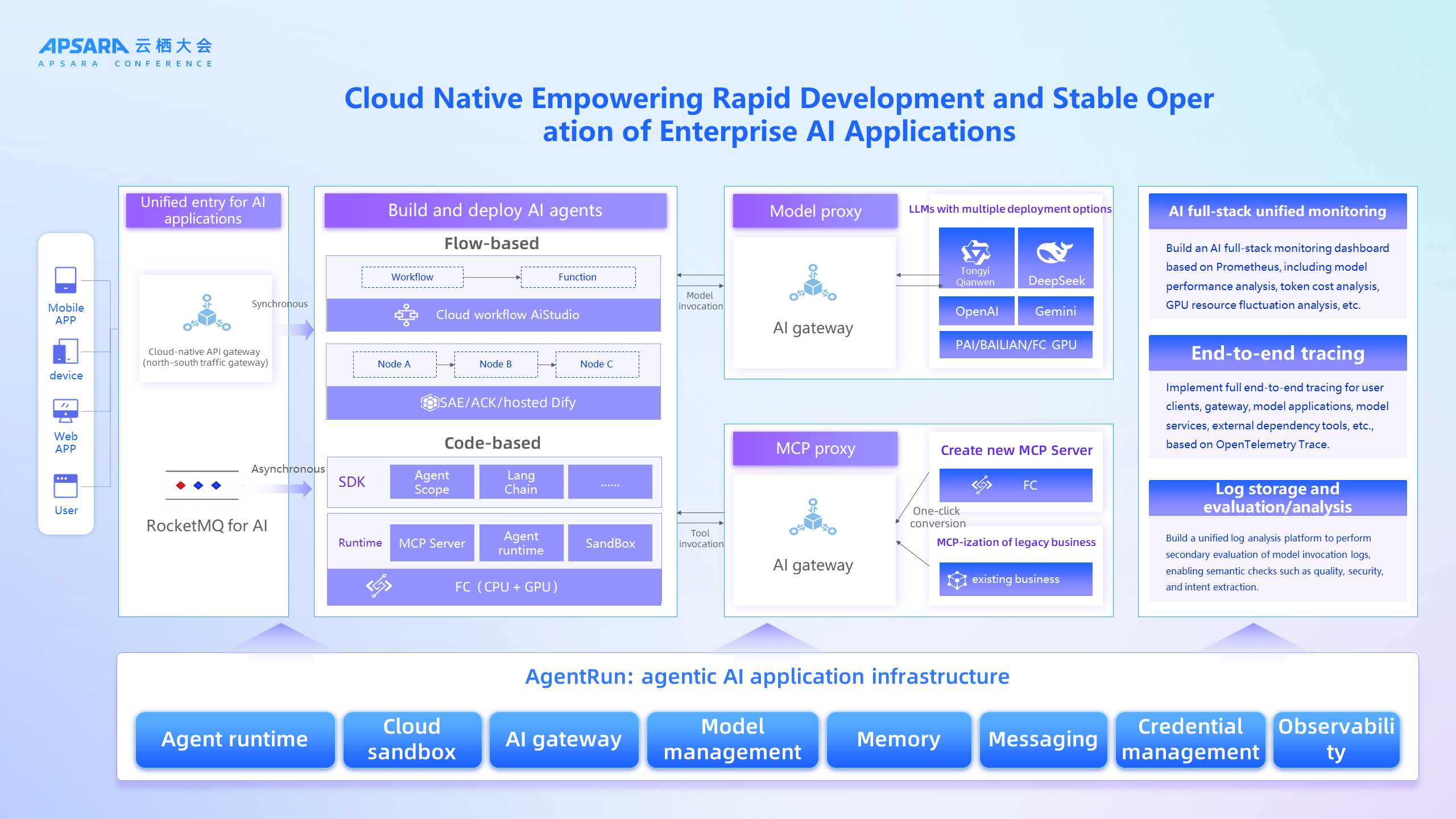

Solving these issues requires upgrading cloud infrastructure from traditional forms to an AI-native architecture. Key elements of AI infrastructure include: a function computing runtime with millisecond-level elasticity, an AI gateway that unifies traffic governance and protocol adaptation, messaging middleware that supports asynchronous high-throughput communication, and a full-stack observability system covering model invocation, agent orchestration, and system interaction. Only with the support of this new type of infrastructure can intelligent applications truly become the 'new infrastructure' of enterprise application architecture, driving continuous intelligent upgrades of the business.

We have therefore distilled the AI-native application architecture shown in the figure above. It links eight key components, including AI runtime, AI gateway, AI MQ, AI memory, and AI observability, into a complete AI-native technology stack that we call AgentRun. Enterprises do not need to start from scratch; building on AgentRun can significantly shorten the time from proof of concept to production launch.

Next, we will analyze how the eight core components of AgentRun provide support for AI-native architecture, focusing on the three challenges mentioned earlier.

With the overall architectural blueprint in place, we first need to solve the most fundamental question: as a 'new member' of enterprise IT systems, what kind of base should intelligent agents run on? This leads us to the core requirements for the runtime.

We have found that Agent applications have several typical characteristics: unpredictable traffic, multi-tenant data isolation, and vulnerability to injection attacks. These characteristics require the runtime to have three core capabilities: millisecond-level elasticity, session affinity management, and security isolation.

Traditional monolithic or microservice development is organized around services: developers strive to build functionally cohesive monoliths or microservices, but this often leads to deep coupling and complex code logic. AI agents overturn this model. The core task is no longer building rigid services, but using large language models (LLMs) to understand user intent and then dynamically orchestrating a series of atomic tools or agents. This development model aligns well with the design philosophy of function computing (FaaS). Function computing lets developers package each atomic capability of an agent as an independent function in the lightest, most native way, so any agent or tool a developer envisions can be mapped to a ready-to-use function that is lightweight, flexible, securely isolated, and highly elastic. This not only improves the development experience and lowers costs but, more importantly, makes agents far more usable in production and faster to bring to market, which makes large-scale adoption of AI innovation possible.

To meet the needs of AI agents and put the Function-to-AI concept into practice, function computing breaks the traditional stateless boundary of FaaS. With native support for serverless session affinity, a dedicated persistent function instance is dynamically allocated to each user session. The instance can last up to 8 hours or even longer, which solves the context retention problem in multi-turn agent dialogues. Lightweight management of hundreds of thousands of functions and millions of sessions, together with request-aware scheduling, supports elastic scaling from zero to millions of QPS. This matches the sparse or bursty traffic patterns common in AI agent applications and keeps the service stable.
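To make the idea of session affinity concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how function computing is actually implemented: the `SessionAffinityRouter` and `Instance` names are hypothetical, the instance is just an in-memory object, and the sliding expiry (each request renews the lifetime) is our assumption rather than documented behavior.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict

SESSION_TTL_SECONDS = 8 * 60 * 60  # the 8-hour session lifetime mentioned in the talk


@dataclass
class Instance:
    instance_id: str
    expires_at: float
    context: list = field(default_factory=list)  # per-session conversation state


class SessionAffinityRouter:
    """Toy router (hypothetical): one dedicated instance per session, reclaimed after a TTL."""

    def __init__(self, ttl: float = SESSION_TTL_SECONDS,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl
        self._clock = clock
        self._instances: Dict[str, Instance] = {}
        self._created = 0

    def route(self, session_id: str) -> Instance:
        now = self._clock()
        inst = self._instances.get(session_id)
        if inst is None or inst.expires_at <= now:
            # Scale from zero: allocate a fresh instance for a new or expired session.
            self._created += 1
            inst = Instance(f"inst-{self._created}", now + self._ttl)
            self._instances[session_id] = inst
        else:
            # Assumed sliding expiry: an active session keeps its instance alive.
            inst.expires_at = now + self._ttl
        return inst

    def reap(self) -> int:
        """Release instances whose sessions have expired; returns how many were freed."""
        now = self._clock()
        expired = [s for s, i in self._instances.items() if i.expires_at <= now]
        for s in expired:
            del self._instances[s]
        return len(expired)
```

Every request carrying the same session ID lands on the same `Instance`, so the multi-turn context stored there is still available on the next turn, while idle sessions are reclaimed by `reap()` and cost nothing.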

For tool runtime, function computing has built-in execution engines for multiple languages, including Python, Node.js, Shell, and Java, with code execution latency under 100 ms. Cloud sandbox tools such as Code Sandbox, Browser Sandbox, Computer Sandbox, and RL Sandbox are built in and ready to use. For security isolation, function computing uses secure container technology to provide isolation at the request, session, and function levels, giving each task strong, virtual-machine-level isolation. Combined with session-level dynamic mounting, this isolates both the compute layer and the storage layer, meeting the strictest security and data security requirements for sandboxed code execution across scenarios.

For model runtime, function computing focuses on vertical (domain-specific) models and small-parameter large language models. It provides serverless GPU based on memory snapshot technology, switching automatically between busy and idle states within milliseconds and significantly reducing the cost of adopting AI. Request-aware scheduling better handles idle or contended GPU resources and keeps the response time (RT) of business requests stable. GPU and CPU compute are decoupled and can be combined freely, and virtualization that slices capacity down to a single card or even 1/N of a card gives customers finer-grained model resource configurations, making model hosting more economical and efficient.

As the most representative serverless product, function computing already serves many important customers such as Bailian, Modao, and Tongyi Qianwen, and has become an ideal choice for enterprises building AI applications.

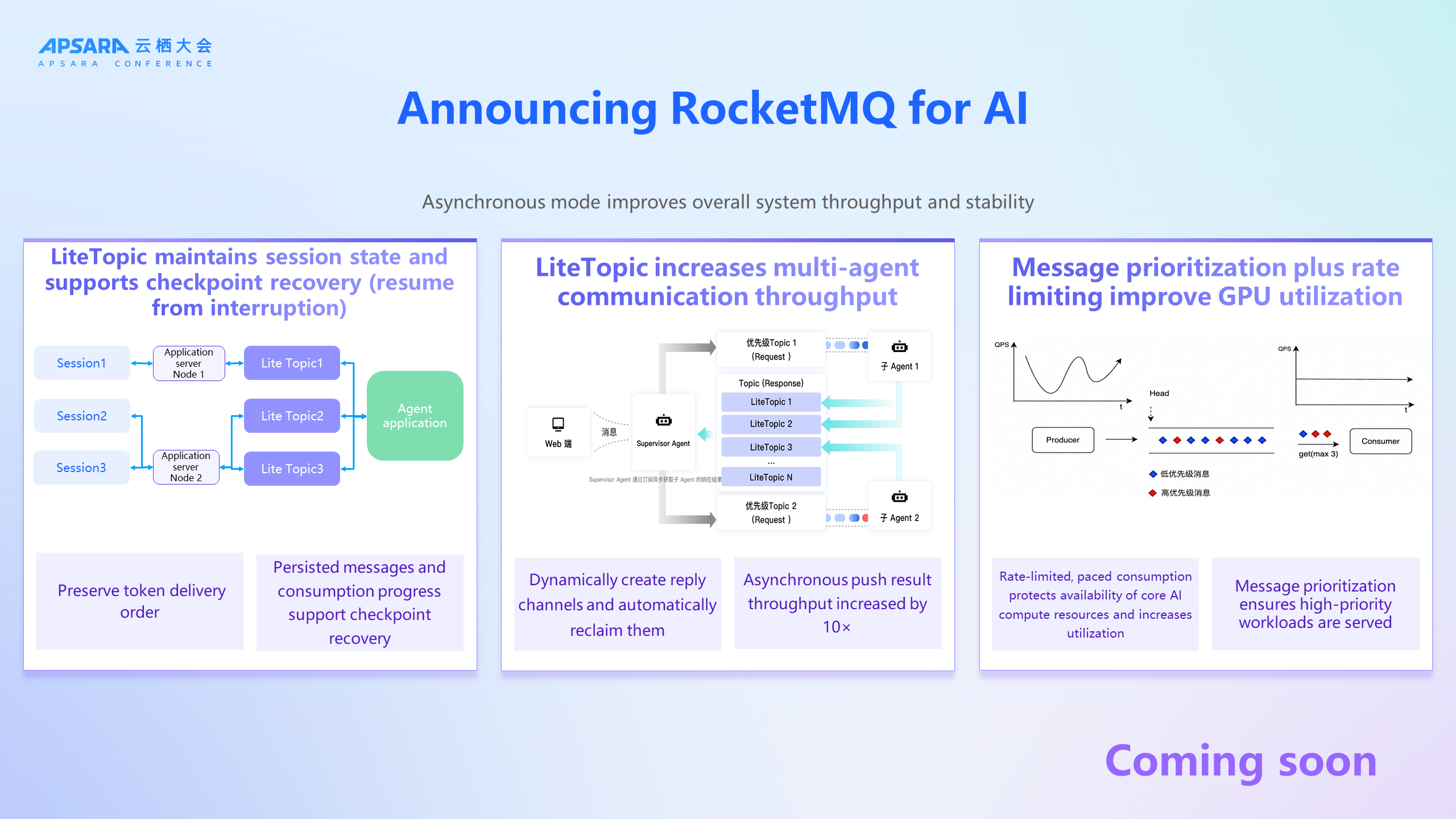

With an efficient runtime in place, as the number of intelligent agents grows and interaction patterns diversify, we need asynchronous communication to ensure system throughput and stability. To this end, we have released RocketMQ for AI. Its core innovation is LiteTopic: a lightweight topic is created dynamically for each session to persistently store the session context, intermediate results, and more. LiteTopic supports resuming agents from a breakpoint and improves the throughput of multi-agent communication by more than ten times.
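The per-session topic idea can be sketched in a few lines of Python. This is an in-memory stand-in meant only to show the shape of the pattern; it does not use the RocketMQ client API, and the `LiteTopicStore` class and its method names are hypothetical. Real LiteTopics are persisted by the broker, whereas this sketch keeps everything in process memory.

```python
from typing import Dict, List, Tuple


class LiteTopicStore:
    """In-memory stand-in for per-session lightweight topics (not the RocketMQ API)."""

    def __init__(self) -> None:
        self._logs: Dict[str, List[str]] = {}           # session -> ordered messages
        self._offsets: Dict[Tuple[str, str], int] = {}   # (session, consumer) -> next offset

    def publish(self, session_id: str, message: str) -> int:
        # The topic for a session is created on first use.
        log = self._logs.setdefault(session_id, [])
        log.append(message)
        return len(log) - 1  # offset of the stored message

    def poll(self, session_id: str, consumer: str, max_messages: int = 10) -> List[str]:
        # Read from the last committed offset, so a restarted consumer resumes
        # where it left off instead of starting over.
        start = self._offsets.get((session_id, consumer), 0)
        return self._logs.get(session_id, [])[start:start + max_messages]

    def commit(self, session_id: str, consumer: str, count: int) -> None:
        # Advance the consumer's offset after it has processed `count` messages.
        key = (session_id, consumer)
        self._offsets[key] = self._offsets.get(key, 0) + count
```

If an agent or a client connection drops after committing two of three intermediate results, the next `poll` for that session returns only the third, which is the breakpoint-resume behavior described above.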

The implementation of this innovative architecture relies on RocketMQ's four core capabilities deeply optimized for AI scenarios:

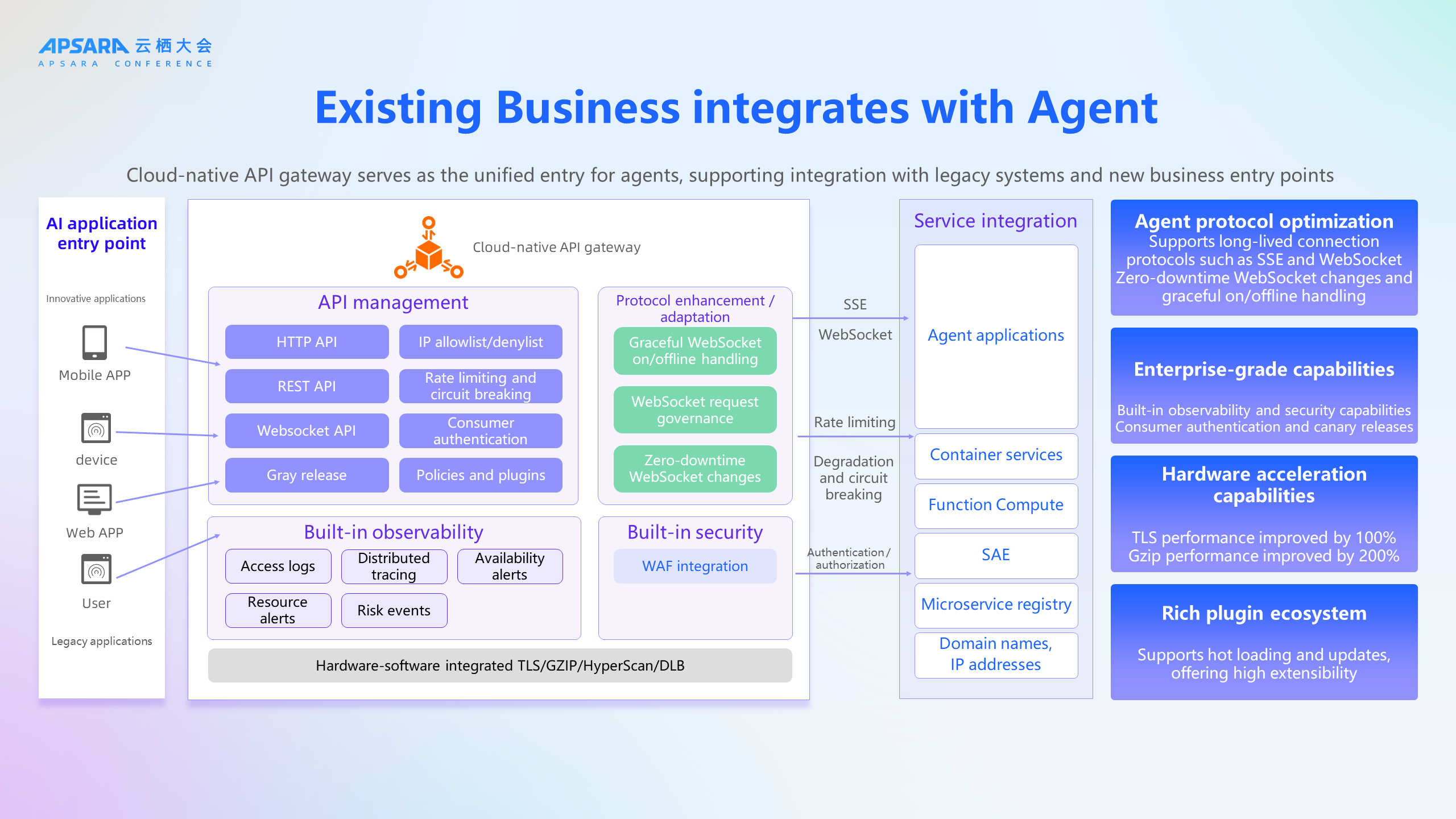

Compared to developing an intelligent agent from scratch, integrating legacy systems with intelligent agents is a less costly path to intelligence. However, it often runs into the following two challenges:

Most enterprises have large legacy systems and long-established service interfaces. These systems are core business assets, yet they are usually built on traditional HTTP/REST protocols and cannot interact with intelligent agents directly. The challenge is to let intelligent agents access and invoke these legacy capabilities without overturning the existing architecture. Forcing a rewrite of the legacy system would be costly and would put the stability of existing operations at risk. What is needed is a unified middleware layer that can both connect to legacy services and give intelligent agents a standardized, governable entry point for calls.

The Alibaba Cloud Native API Gateway is designed for this scenario: it seamlessly converts traditional APIs into services consumable by intelligent agents through protocol adaptation, traffic governance, built-in security, and observability capabilities, helping enterprises achieve intelligent upgrades at low costs. It features:

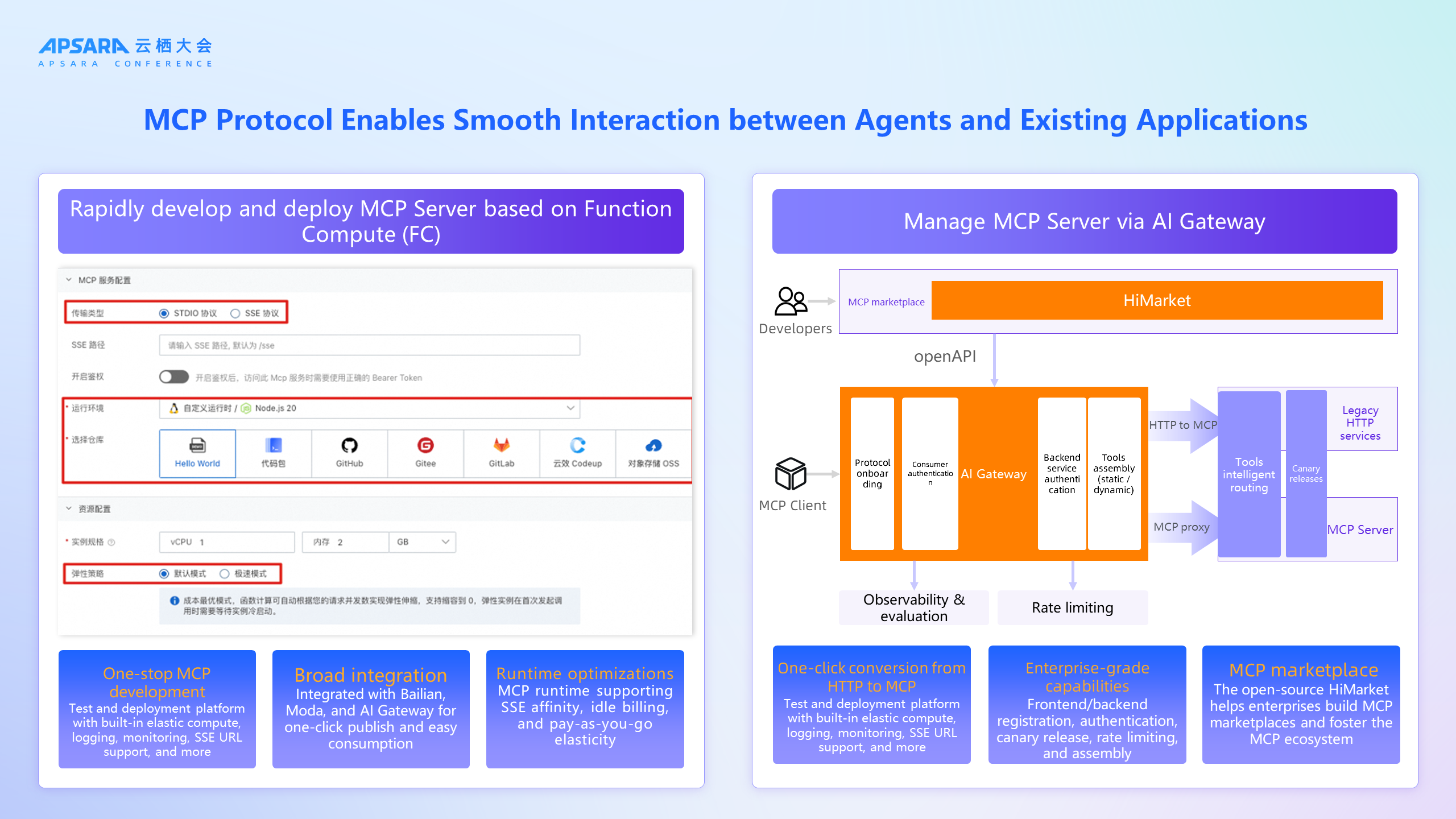

In addition to connecting legacy systems, enterprises need to continuously build new agent tools, especially based on the emerging standard protocol MCP (Model Context Protocol). The challenge is how to rapidly develop, deploy, and manage MCP Servers to ensure seamless integration with intelligent agents. Without an efficient development and runtime environment, enterprises often face complex resource preparation, long deployment cycles, and difficulty in ensuring elasticity and security when creating MCP Servers.

In response, Alibaba Cloud offers Function Computing as a runtime for developing and operating MCP Servers. Its lightweight design, millisecond-level elasticity, zero maintenance, and built-in multi-language runtime environments make it well suited to hosting MCP Servers, and its one-stop development experience and broad integration capabilities make MCP development more efficient.

At the same time, the AI Gateway gives enterprises a single entry point for registering, authenticating, gray-releasing, throttling, and observing MCP Servers. It supports zero-code conversion from HTTP to MCP, so enterprises can build and launch MCP Servers quickly and integrate intelligent agents with business scenarios sooner. The AI Gateway also provides MCP marketplace capabilities, which suit enterprises building private AI open platforms.
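To give a feel for what an HTTP-to-MCP conversion involves, the sketch below maps a description of a REST endpoint to an MCP-style tool definition (a `name`, a `description`, and a JSON Schema `inputSchema`) and turns the agent's tool arguments back into an HTTP request. It is a simplified illustration, not the AI Gateway's implementation: the endpoint dictionary format and both function names are assumptions made for this example.

```python
from typing import Any, Dict
from urllib.parse import quote


def rest_endpoint_to_mcp_tool(endpoint: Dict[str, Any]) -> Dict[str, Any]:
    """Describe a REST endpoint as an MCP-style tool (hypothetical input format)."""
    properties: Dict[str, Any] = {}
    required = []
    for param in endpoint.get("params", []):
        properties[param["name"]] = {
            "type": param.get("type", "string"),
            "description": param.get("description", ""),
        }
        if param.get("required", False):
            required.append(param["name"])
    return {
        "name": endpoint["operation_id"],
        "description": endpoint.get("summary", ""),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def build_http_request(endpoint: Dict[str, Any], arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Translate tool-call arguments into the HTTP request the legacy service expects."""
    path = endpoint["path"]
    query: Dict[str, Any] = {}
    for name, value in arguments.items():
        placeholder = "{" + name + "}"
        if placeholder in path:
            # Arguments named in the path template fill the template.
            path = path.replace(placeholder, quote(str(value), safe=""))
        else:
            # Everything else is passed as a query parameter.
            query[name] = value
    return {"method": endpoint["method"], "path": path, "query": query}
```

The legacy service itself is untouched: only a declarative description of its interface is needed, which is what makes a zero-code conversion at the gateway plausible.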

Whether you build an intelligent agent from scratch or integrate intelligent agents with legacy systems, this is only the first step towards intelligent applications. When enterprises push intelligence into production, they will also face challenges such as inference delays, stability fluctuations, difficulty in problem resolution, prominent security risks, unreliable outputs, and high costs. These are systemic challenges faced by enterprise-level AI applications in stability, performance, security, and cost control. Below we will share some of our responses from the perspectives of the AI Gateway and AI Observability.

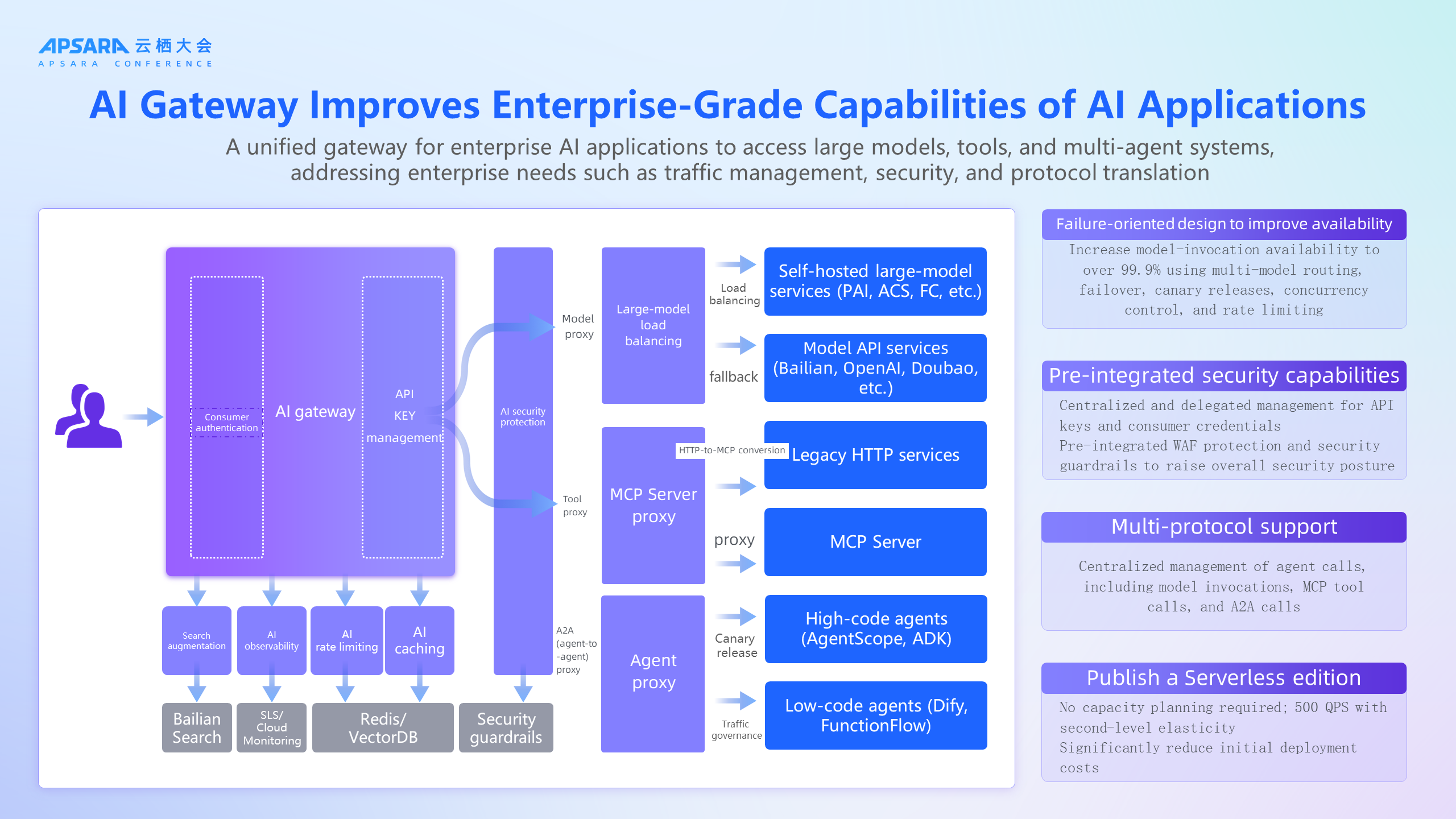

The gateway is the traffic entry point of the application architecture. Compared with traditional web applications, however, AI application traffic is very different: it is characterized by high latency, large bandwidth, streaming transmission, long-lived connections, and API-driven access. This has led to a new form of gateway, the AI Gateway.

In summary, the AI Gateway is the next-generation gateway that provides multi-model traffic scheduling, MCP and Agent management, intelligent routing, and AI governance. Alibaba Cloud offers both open source (Higress) and commercial (API Gateway) forms of AI Gateways. It plays a significant role in accelerating the stable operation of intelligent agents:

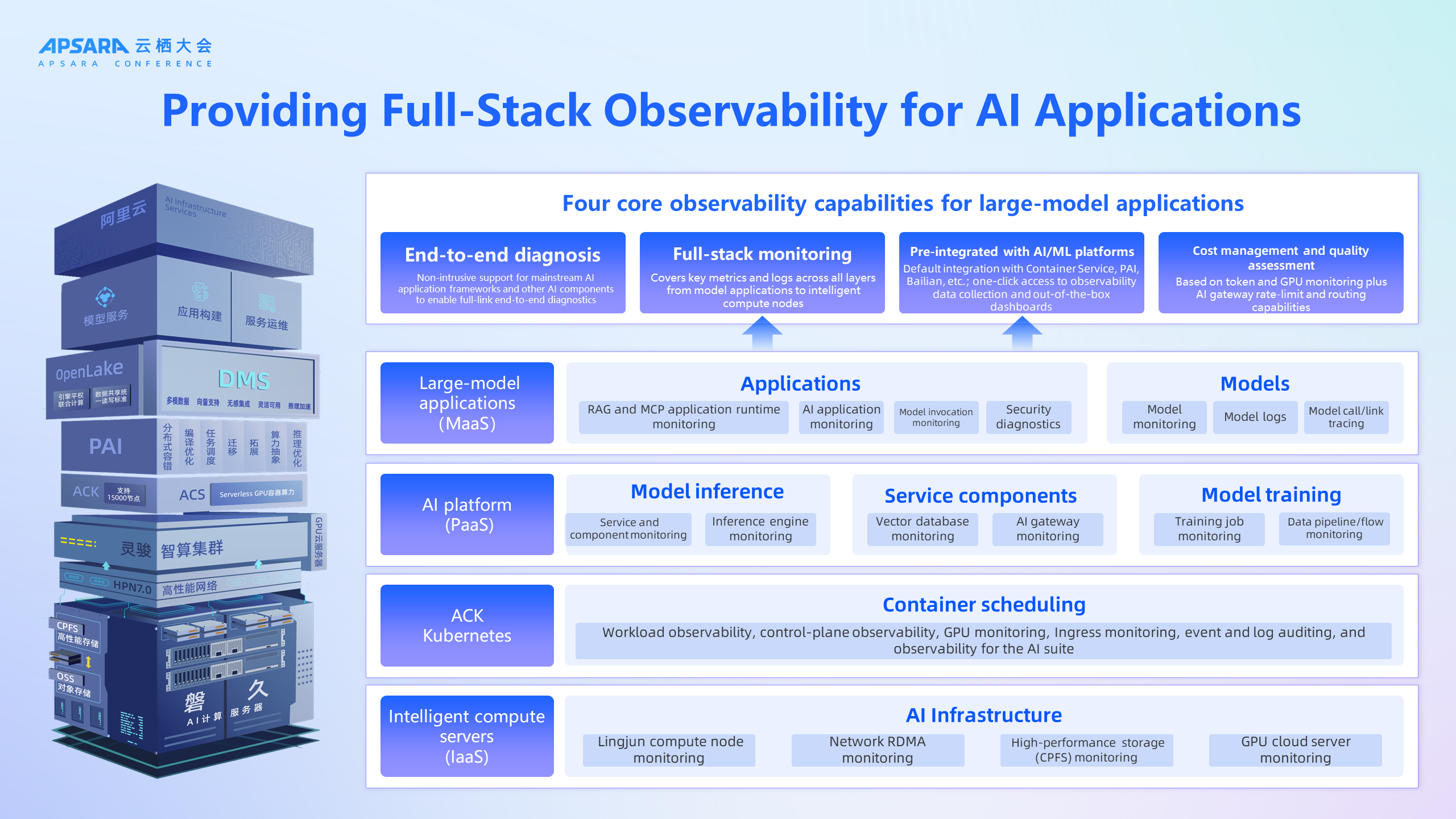
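One simple policy that falls under multi-model traffic scheduling is ordered fallback: try the preferred model first and move to the next one when a call fails. The Python sketch below illustrates that single policy under our own assumptions. The `call_with_fallback` function and `AllBackendsFailed` exception are hypothetical names, the backends are plain callables rather than real model clients, and this is not a description of how Higress or API Gateway implement routing.

```python
from typing import Callable, List, Sequence, Tuple


class AllBackendsFailed(Exception):
    """Raised when every configured model backend has failed."""


def call_with_fallback(
    prompt: str,
    backends: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each (name, callable) backend in order; return (backend name, response)."""
    errors: List[str] = []
    for name, call in backends:
        try:
            return name, call(prompt)
        except Exception as exc:  # a timeout, a rate limit, or any other backend error
            errors.append(f"{name}: {exc}")
    raise AllBackendsFailed("; ".join(errors))
```

Because the caller only sees the gateway, a failing or overloaded model can be swapped out without any change to the agent's code.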

AI Observability encompasses a set of practices and tools that allow engineers to comprehensively gain insight into applications built on large language models.

Unlike traditional applications, AI applications face a series of unique challenges that have not been observed before, which can be summarized in three categories:

To tackle the above challenges, Alibaba Cloud's AI Observability solution provides:

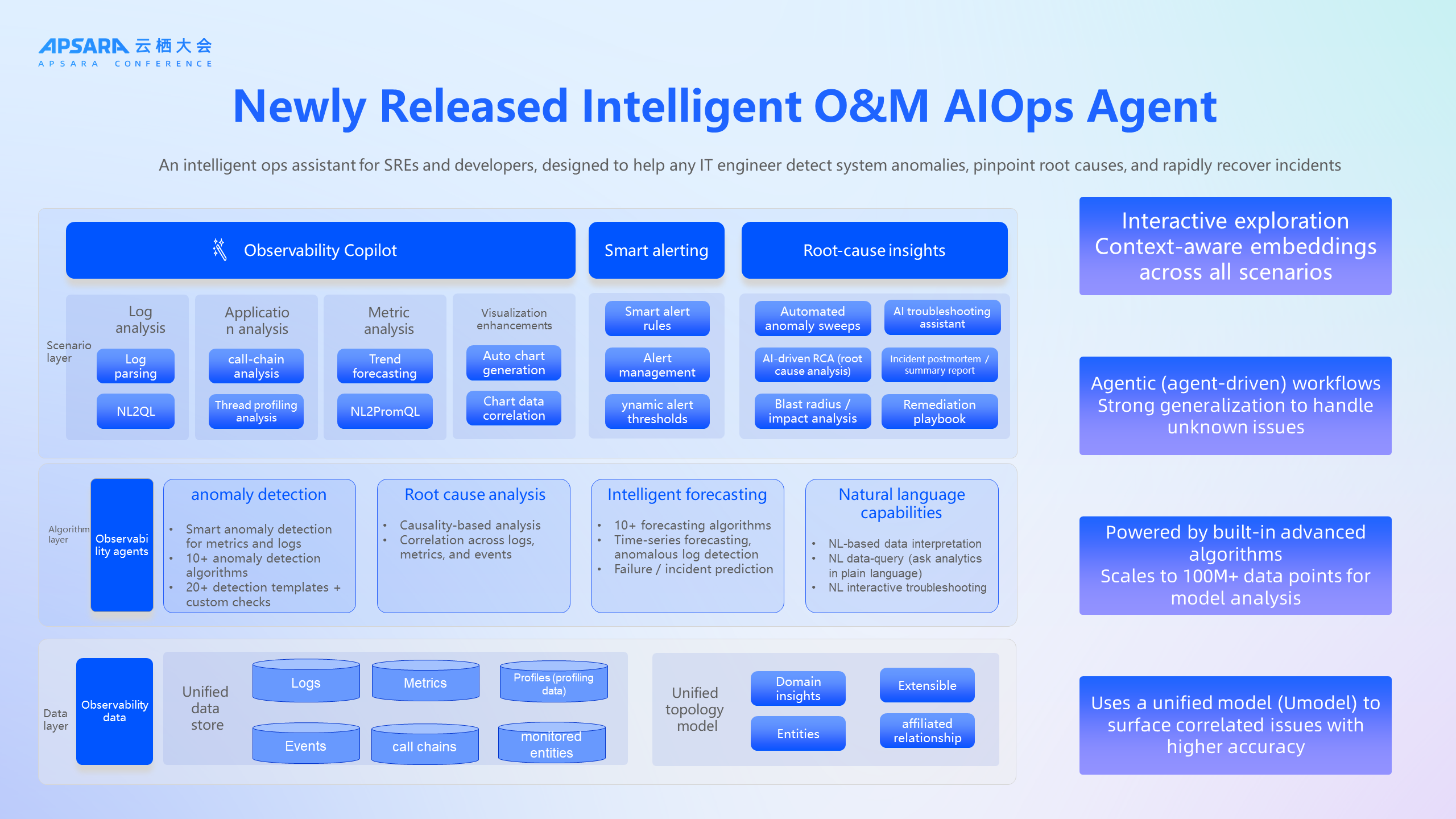
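At its simplest, observing a model invocation means recording what was called, how long it took, how many tokens it used, and whether it failed. The following Python sketch shows that idea with the standard library only. It is an assumption-laden illustration, not Alibaba Cloud's observability SDK: the `Span` class, the `traced` helper, and the `RECORDED` list are hypothetical, and a real system would export spans to a tracing backend instead of keeping them in a list.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Dict, Iterator, List


@dataclass
class Span:
    name: str
    attributes: Dict[str, object] = field(default_factory=dict)
    duration_ms: float = 0.0
    error: str = ""


RECORDED: List[Span] = []  # stand-in for an exporter to a tracing backend


@contextmanager
def traced(name: str, **attributes: object) -> Iterator[Span]:
    """Record the duration, attributes, and any error of the wrapped call."""
    span = Span(name, dict(attributes))
    start = time.perf_counter()
    try:
        yield span
    except Exception as exc:
        span.error = repr(exc)
        raise  # the failure is recorded, then propagates to the caller unchanged
    finally:
        span.duration_ms = (time.perf_counter() - start) * 1000.0
        RECORDED.append(span)
```

Wrapping each model call and tool call in `with traced("llm.call", model=...) as span:` and setting attributes such as `span.attributes["completion_tokens"]` inside the block yields one record per step, which is the raw material for latency, cost, and error analysis.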

In addition to a complete solution, we also provide an intelligent operations assistant aimed at operation and development personnel, helping every IT engineer improve efficiency in discovering system anomalies, locating root causes of problems, and recovering from failures.

Unlike traditional rule-based AIOps, our AIOps intelligent agents are built on a multi-agent architecture and can act autonomously to solve previously unknown problems. After receiving an issue, an agent plans, executes, and reflects on its own, improving its ability to resolve the problem. At the algorithm level, we have accumulated many atomic capabilities, including preprocessing of massive datasets, anomaly detection, and intelligent forecasting, all of which agents can use as tools. We welcome everyone to try it in our console and send us feedback.
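As an example of what an atomic capability exposed as a tool might look like, here is a minimal anomaly detector in Python. It uses a plain z-score test, which is our choice for illustration and not necessarily the algorithm used in the product; the `detect_anomalies` name and its default threshold are assumptions.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def detect_anomalies(values: Sequence[float], threshold: float = 3.0) -> List[int]:
    """Return the indices of points more than `threshold` standard deviations from the mean."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation of the series
    if sigma == 0:
        return []  # a perfectly flat series has no outliers
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]
```

A function with a clear input and output like this is easy to register as a tool, so an agent investigating a latency alert can call it on a metric series and reason over the returned indices.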

So far, the AI-native architecture covers the rapid development and deployment of intelligent agents, their integration with legacy systems, and their stable operation. The last mile remains: how to incorporate this new agent development paradigm into existing enterprise DevOps processes.

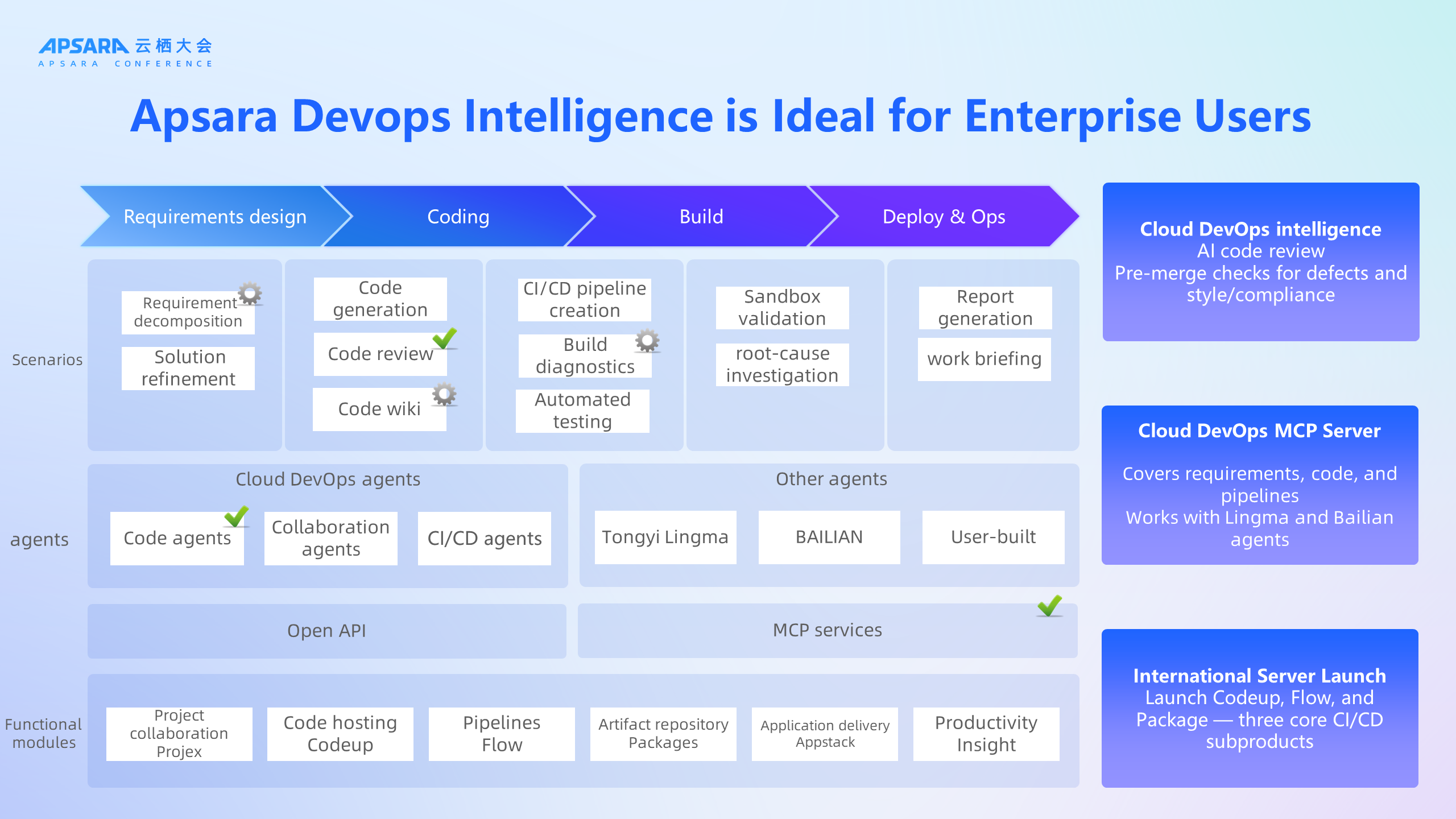

Alibaba Cloud's AI DevOps connects the entire pipeline from coding and building to operations and maintenance, and integrates with Lingma, AIOps, and other tools to inject AI capabilities throughout. Once code is submitted for release, the system automatically captures and correlates online issues, and an AI agent generates intelligent diagnostic reports.

Although today's talk focuses more on commercial products, open source and openness have always been the foundation and core philosophy of cloud native. For almost every commercial product, we have either open-sourced its basic capabilities directly or contributed continuously to external open source projects, helping the community evolve. For example:

We believe that it is precisely this parallel evolution model of commercial and open source that allows cloud-native products to better meet the demands of enterprise customers while maintaining ongoing technological leadership and community vitality through collaboration in open source and openness.

If you want to learn more about Alibaba Cloud API Gateway (Higress), please click: https://higress.ai/en/

