By Jack Cai, Chief Architect of Elastic Compute, Alibaba Cloud Intelligence

Cloud computing is still in its infancy. While it is reshaping the business and technology world every day, it is also reshaping itself constantly to meet the needs of different customers. From deployment model to location coverage and operation model, cloud computing has never stopped evolving since its inception.

But no matter how far and wide the cloud extends itself, or how flexible and diversified its deployment and operation models become, there is one principle that should be followed: CONSISTENCY. A consistent experience, backed by a consistent technology stack. This is exactly how Alibaba Cloud, as one of the most active innovators in the space, has evolved its cloud offerings over the last ten years.

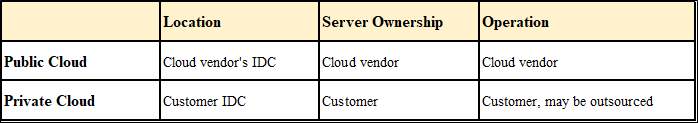

From a deployment model perspective, public cloud and private cloud used to be two separate worlds. Public cloud is typically owned and operated by a cloud provider, offering customers an "on-demand" IT service based on a shared infrastructure. Private cloud, on the other hand, is owned and often operated by the customer. Sometimes the operation of a private cloud is outsourced to the cloud provider or a third party. The table below provides a summary of the differences between the two.

Many Internet startups today take an "ALL-IN-CLOUD" approach, or more specifically, all in public cloud. In contrast, existing enterprises, especially large businesses, usually already have a dedicated on-premises infrastructure and need a more sophisticated approach to "cloudify" their IT.

Generally speaking, there are three common strategies that enterprises would adopt when developing their infrastructure:

Based on the above analysis, even though some enterprises may prefer to stick with private cloud for some time, in the long run, the reasons for adopting public cloud will become more and more compelling. This is especially true for businesses that are rapidly expanding and are looking for a more flexible deployment and operation option. Most enterprises will eventually employ a hybrid model to enjoy the advantages of both worlds.

The ideal hybrid cloud offers a consistent, unified and connected experience across the public and private cloud.

A consistent experience means the same development, deployment, security, scaling and operation interfaces, APIs and tools. In this scenario, users don't have to learn different ways to use a public cloud and their own private cloud. Applications don't have to adapt to different APIs. Operators don't have to use different tools. All of this improves efficiency and reduces investment.

A unified experience means being able to manage resources and applications across public and private cloud in one interface. In short, one interface, multiple clouds. Users don't have to log into different consoles to manage their cloud resources. This requires the public and private cloud to share some common capabilities, like identity management. In this regard, a private cloud effectively becomes a special "region" of the public cloud. This region is special because it's only visible to and accessible by one particular customer, which is what makes it "private".
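To make the "private cloud as a special region" idea concrete, here is a minimal sketch in Python. It is only an illustration of the visibility rule described above, not Alibaba Cloud's API: the `Region` class, the `visible_regions` function and all region and customer names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Region:
    """A region shown in the single management console (hypothetical model)."""
    name: str
    # None means a public region; otherwise the one customer that owns it.
    owner: Optional[str] = None


def visible_regions(regions: List[Region], customer: str) -> List[str]:
    """Return the region names a customer sees: all public ones plus their own."""
    return [r.name for r in regions if r.owner is None or r.owner == customer]


# Hypothetical catalog: two public regions and one private region for "acme".
REGIONS = [
    Region("public-region-a"),
    Region("public-region-b"),
    Region("private-acme", owner="acme"),
]

if __name__ == "__main__":
    print(visible_regions(REGIONS, "acme"))
    print(visible_regions(REGIONS, "another-customer"))
```

In this sketch the customer "acme" sees its private region listed next to the public ones in the same catalog, while any other customer sees only the public regions. A shared identity service is what would let one console apply such a rule across clouds.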

A connected experience means the public and private cloud are connected as needed, based on the customer's requirements. Connections can take place at different layers:

The best foundation for building a consistent, unified and connected hybrid cloud is one common technology implementation for both public and private cloud. But this is a challenging job. The OS that runs a public cloud needs to manage hundreds of thousands of servers. It is built for extreme scale and ultimate availability. As a result, the kernel itself may need hundreds of servers.

The requirements of a private cloud differ in many areas, the most obvious being scale: a private cloud is much smaller, sometimes only tens of servers. So there is a strong requirement for a minimized cloud OS. This is exactly the same story as the Linux operating system, which supports both large servers that have hundreds of powerful CPU cores and small devices that have very limited computing resources, such as a mobile phone.

Alibaba Cloud is the first major cloud vendor to take on this challenge. Back in 2014, Alibaba Cloud started to build a private cloud offering using the same Apsara Cloud OS that runs its public cloud, and delivered it to several early customers. On April 20th, 2016, Alibaba officially announced Apsara Stack Enterprise, which is a fully-fledged private cloud offering that includes most of our major public cloud services like ECS and OSS.

Then Microsoft announced Azure Stack in 2017. In 2018, AWS announced Outpost, taking a different approach to tackle the hybrid cloud market. Outpost is not a private cloud offering. Instead, it's an extension of AWS's public cloud, owned and run by AWS instead of the customer. While it does bring a very consistent, unified and connected experience, it needs to be connected with a public cloud region's management system in order to be fully functional, and can tolerate only a few hours of network disconnection. In 2019, Google announced Anthos to manage applications in a hybrid environment. It is based on Google Kubernetes Engine, which does bring a consistent application management experience. However, it relies on existing virtualization infrastructure being in place. In this regard, it's not a self-contained private cloud stack.

Going forward, we will continue to see more consistency across the public and private cloud, more unified management, and better connectivity. Hybrid will become more converged.

Hybrid cloud is not the only solution for cloud computing. From a location coverage perspective, it only covers major customer sites and public cloud regions. There are things in between and beyond them – the edges.

The key motivations for putting cloud on the edge are lower cost and lower latency. There are two driving scenarios: closer to the data and closer to the user. By moving computing power to the edge where it is closer to the source of the data, the time and cost for moving a potentially huge amount of data are decreased.

As the world becomes increasingly digitalized and artificial intelligence becomes ubiquitous, the volume of data that needs to be analyzed is exploding. So it makes perfect sense to shift from a computation-centered world to a data-centered world. Similarly, by moving applications closer to their end "users" (which could be a device such as a vehicle), access latency can be dramatically reduced. This not only improves the user experience of those applications, but is also critical in some scenarios such as autonomous driving.

The edge computing market is expected to grow tremendously in the coming years. According to a report from Grand View Research, Inc., the global edge computing market size will reach USD 43.4 billion by 2027, exhibiting a CAGR of 37.4% over the forecast period. Gartner also predicts that through 2025, the edge hardware infrastructure opportunity will grow to USD 17 billion.

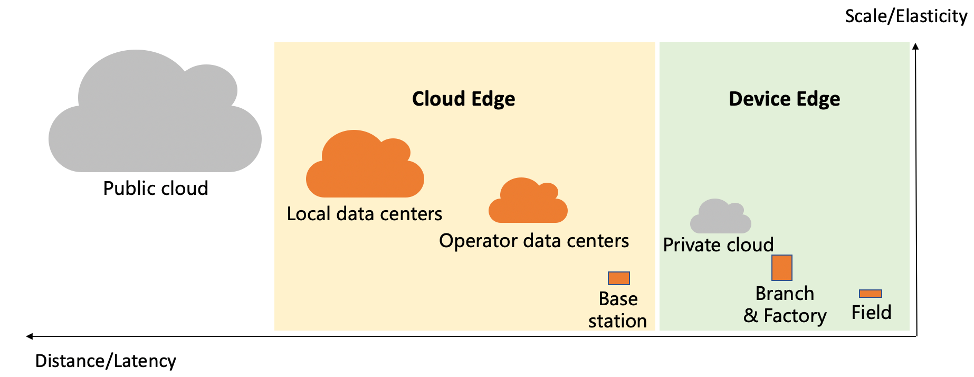

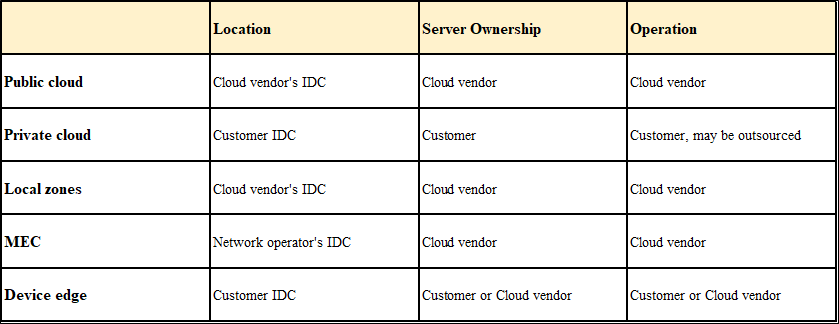

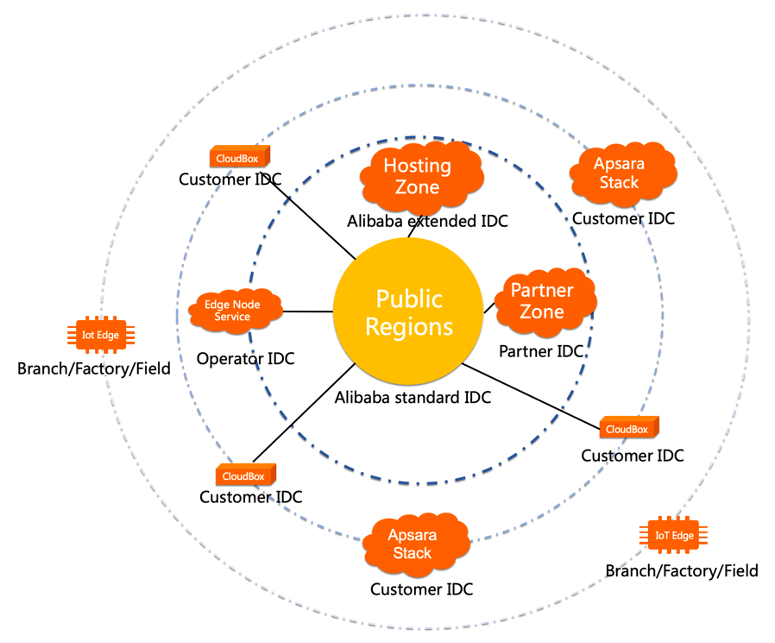

There are multiple tiers of edges, as illustrated in the above picture. The edges that reside at the public cloud side, which I labeled "cloud edge", include data centers local to certain cities or areas, operator data centers run by telecom and network operators, and base stations serving as network access points such as 5G cellular base stations. The edge at base stations is also referred to as "Multi-access Edge Computing", or MEC for short. Cloud edges are typically owned and run by cloud providers. From left to right, these cloud edges offer descending latency when accessed by customer applications or end users. And from top to bottom, they have decreasing scale and provide less resource elasticity.

On the other side, the edges that reside at customers' sites, which I labeled "device edge", include private cloud, branch offices or factories, and fields where IoT devices are deployed. Device edges are typically owned and run by customers, but as innovations continue, they may be owned and run by cloud providers too, AWS's Outpost being one example. Again, from left to right, these device edges offer descending latency when accessed by devices, and from top to bottom, they are smaller in scale and less elastic.

All major cloud providers are aggressively investing in the edge cloud space. Alibaba Cloud addresses the cloud edge with the Edge Node Service (ENS) offering. With over 150 deployments covering 50+ cities, ENS was the first and by far the largest "edge cloud".

For the device edge, in addition to the Apsara Stack, Alibaba Cloud also has the Link IoT Edge offering, which is purpose-built for IoT scenarios. Last month Alibaba Cloud announced CloudBox, which is similar to AWS Outpost and completes Alibaba's edge portfolio. AWS uses Local Zone and Wavelength to cover the cloud edge, but today they support only a limited set of locations. At the device edge, AWS has Outpost, Snowball Edge and IoT Greengrass for various scenarios. Azure is following quickly in the edge space too. In July 2020 Azure announced Edge Zone, which is still in preview right now.

The above table summarizes the various cloud deployments I have talked about so far. With the cloud now distributed farther and wider beyond the central region data centers, we are obviously witnessing the shaping of a new era – the distributed cloud era. Customers will have more options when choosing where to deploy their applications in order to reduce data movement costs, satisfy extreme latency requirements and improve the end-user experience. Yet again, a consistent experience will be vital across the distributed deployments of the cloud.

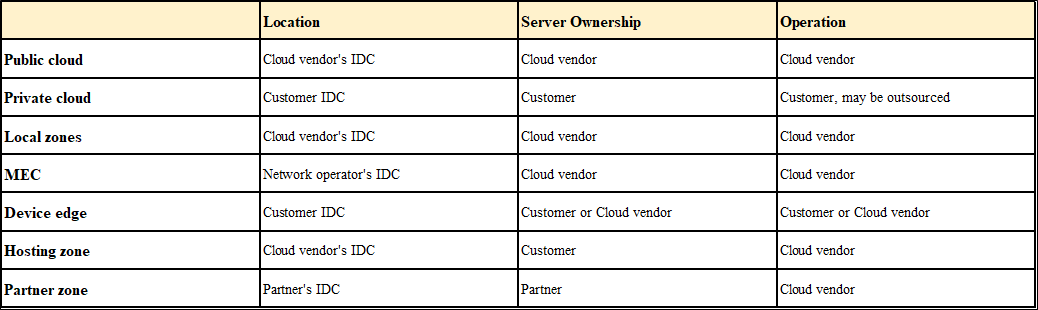

Distributed cloud is not the end of the cloud evolution story. While private cloud and device edge give full ownership and control to the customer, and public cloud and cloud edge take away the ownership and control but also the complexities in operations, there are other interesting combinations missing especially when locations are considered. AWS Outpost, for example, obviously implements one of those new combinations – it's owned and operated by the cloud vendor but deployed in a customer's data center.

Now let's open up more possibilities. What if customers want ownership of the servers but not the operation and the data center? What if they want to reuse their existing server investment? What if a partner wants to take advantage of their existing data center infrastructure or their established customer base without building their own cloud?

Alibaba Cloud has always been a pioneer in cloud innovation, not only technology-wise, but also business-wise. We heard our customers and partners asking for new possibilities. So at Alibaba's Apsara Conference 2020, we announced two new offerings, namely Hosting Zone and Partner Zone.

Hosting Zone offers three key benefits. First, Hosting Zone allows customers to retain ownership of the servers. Customers can even bring in their existing servers. Second, Alibaba Cloud is responsible for operating the Hosting Zone and makes a selected set of cloud services available based on the customer's requirements. Last but not least, Hosting Zone gives customers very flexible choices of data center locations. It could be in existing Alibaba public cloud data centers, or smaller local data centers close to the customer or their end users. Alibaba is even willing to work with customers to open new data centers to satisfy their needs.

Partner Zone, as its name implies, is for partners to collaborate with Alibaba Cloud in new ways. Partner Zone can be deployed in the partner's data center, running on the partner's servers, but operated by Alibaba Cloud. Partners can choose the cloud services that they want in their Partner Zone. They can build their own branding on top of Alibaba Cloud. With Partner Zone, partners are backed by one of the best cloud technology stacks and operational support teams, and can play a whole new role in the cloud era.

With the addition of Hosting Zone and Partner Zone, the cloud has never been so flexible, as shown in the above table. Obviously Alibaba has again led the way to reinvent cloud. Despite all the flexibility in deployment and operation models, there is one principle that should not be sacrificed – consistency.

Reusing the same Apsara OS to support all these different shapes of the cloud has always been Alibaba's way to ensure consistency. It's never been an easy job though. Technology-wise, this requires:

Business-wise, this requires:

Nevertheless, with a strong belief in and commitment to our cloud strategy, we made all of this possible for you.

Putting everything together, Alibaba Cloud's elastic computing offering looks like the diagram above. We believe that there are more possibilities to explore in the future, and we welcome you to continue exploring and innovating together with us.

The evolution of the cloud is never ending.

Jack Cai is the Chief Architect of ECS products at Alibaba Cloud Intelligence. His role covers PaaS, SaaS and IaaS. He was the lead architect of Huawei's SaaS platform and IBM's Bluemix PaaS application runtimes. Currently he is responsible for the architecture of Alibaba Cloud's Elastic Compute portfolio, building a reliable, elastic, high-performing, inclusive and intelligent cloud computing infrastructure.
