
This article is written by Zhou Xinyu, Shi Mingwei, Wang Chuan, Xia Zuojie, Tao Yutian, and Xu Xiaobin from Alibaba Cloud's Middleware Technologies Department.

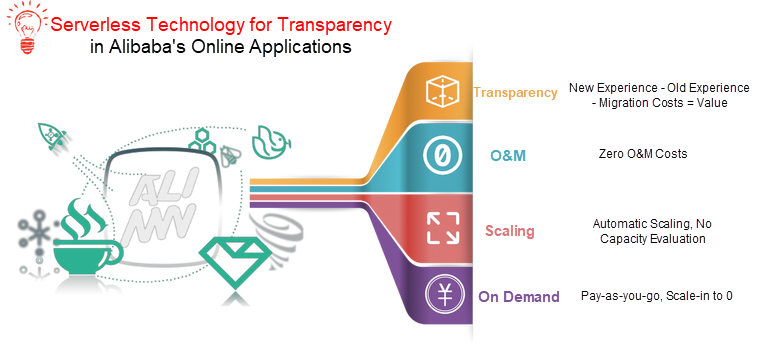

In recent years, serverless has become the backbone of the cloud native revolution in cloud computing, and the industry has assigned it several important missions. Serverless is expected both to deliver existing applications and to meet complex and intricate service requirements, which, at least at Alibaba, include serverless operations and maintenance management, high-speed auto scaling, and various complex billing solutions.

However, the full potential of a new technology cannot be realized overnight, especially to such an extent. It takes time to iron out all the kinks. That is exactly what happened at Alibaba when we started to work serverless technology into our systems. From the beginning, we faced two types of users who had very different requirements.

In addition, because of our own rigid and complex e-commerce scenarios and our experience with Double 11, we could not loosen our requirements for stability, performance, and observability in the slightest. As a serverless product incubated by Alibaba, Cloud Service Engine had to meet all the requirements of these two types of users as well as Alibaba's own high demands.

In this article, we take a deep dive into how we overcame these challenges to build a system that works well for both us and our demanding customers, and we highlight some of the specific technologies employed in the Cloud Service Engine solution.

Alibaba's internal applications are generally divided into online and offline applications. Online applications have a large resource pool, and the average resource utilization rate is not high, especially when hybrid deployment is not considered. The Book mode used for business deployment is the key factor that prevents us from further improving utilization and reducing costs.

In the Book mode, resources are deployed based on the peak values of an application. In addition, the application owner will often reserve extra capacity, which results in low resource pool utilization. Cloud Service Engine is deployed in a serverless on-demand mode to significantly reduce costs through fast auto scaling, time-based reuse, and intensive deployment. This is quite challenging in reality.

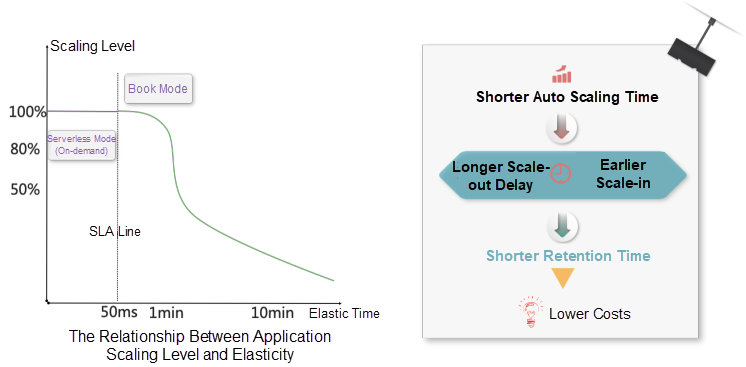

The difference between the serverless on-demand mode and the Book mode has to do with the application startup time:

Assume that the SLA for a single service request is 50 milliseconds. If the application startup time is less than 50 milliseconds, scale-out can be carried out as needed when requests arrive. Otherwise, Book can be performed only based on the current level of demand. The shorter the startup time, the longer the scale-out time can be delayed and the earlier scale-in can be performed. Consequently, a shorter startup time results in lower costs.
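The rule above can be written as a small decision function. This is a minimal sketch for illustration: the function name and its parameters are hypothetical, and only the 50 ms SLA comes from the text.

```python
def provisioning_mode(startup_ms: float, sla_ms: float = 50.0) -> str:
    """Pick a provisioning mode from the application startup time.

    If an instance can start within the request SLA, capacity can be added
    when requests arrive ("on-demand"). Otherwise capacity has to be
    reserved ahead of time ("book").
    """
    if startup_ms < sla_ms:
        return "on-demand"
    return "book"


print(provisioning_mode(30))        # on-demand
print(provisioning_mode(180_000))   # book (a three-minute startup)
```

The closer the startup time gets to the SLA, the less capacity has to be held in reserve, which is why the rest of the article concentrates on startup time.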

However, most online applications take several minutes to start, and some can even take half an hour. Online applications are generally developed based on the high-speed service framework and the Pandora framework. At the same time, they rely on a large number of middleware-rich clients and second-party packages provided by other departments. The startup process involves lots of initialization work, such as network connection, cache loading, and configuration loading.

The startup bottleneck of online applications lies in their code. The elastic optimization methods that target the IaaS layer or the runtime framework in function scenarios, such as AWS Lambda and Function Compute, are therefore almost useless in online business scenarios. In the Cloud Service Engine R&D phase, we focused on ways to reduce the application startup time from minutes to seconds or even milliseconds without having to interfere with the corresponding code.

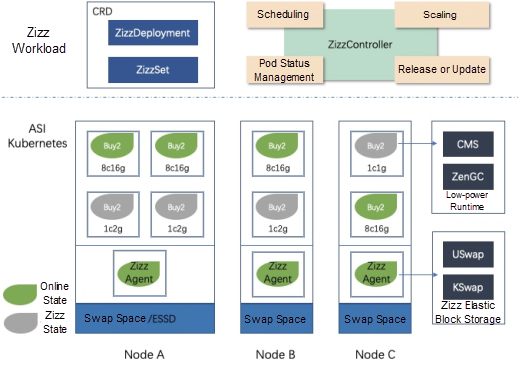

The Alibaba Cloud Service Engine team was looking for a universal and low-cost elastic solution to support the large-scale transparent evolution of Alibaba's online applications to the serverless architecture. This year, the team proposed a new elastic solution named Cloud Service Engine Zizz, which was verified on core applications during Double 11. This solution can maintain low-power instances with less than 10% of their original resources and then restore them to the online state in seconds when necessary. It implements startup two orders of magnitude faster than cold startup.

The Zizz solution is based on one core approach and one assumption:

The core approach is hot standby. The cold startup time of an application is too long and difficult to reduce, and therefore a batch of instances are started in advance.

With hot standby, instances receive online traffic during peak traffic periods and are taken offline at the service discovery level during off-peak hours. The assumption is that the power consumption of an offline instance is very low. If a hot standby instance consumed all the physical resources of an online instance, hot standby would be essentially the same as the Book mode. Theoretically, an instance in the offline state is not driven by front-end traffic, so only a small number of back-end tasks are running. It should therefore be possible to switch a standby instance into a low-power state. Doing so significantly reduces its CPU and memory specifications and keeps standby instances running at a very low cost. As a result, this solution offers greater flexibility for time-based reuse and intensive deployment.
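To make the cost argument concrete, the following sketch compares the cores held by the Book mode with those held by hot standby in the low-power state. The instance counts and the function names are illustrative assumptions. The 10% ratio is the upper bound quoted earlier for low-power instances.

```python
def book_mode_cores(peak_instances: int, cores_per_instance: int) -> int:
    """Book mode: every instance needed at peak is reserved at full size."""
    return peak_instances * cores_per_instance


def hot_standby_cores(online: int, standby: int, cores_per_instance: int,
                      low_power_ratio: float = 0.10) -> float:
    """Hot standby: online instances run at full size, while standby
    instances keep only a fraction of their original resources."""
    full = online * cores_per_instance
    low_power = standby * cores_per_instance * low_power_ratio
    return full + low_power


# Hypothetical off-peak situation: 100 instances needed at peak,
# 20 of them online and 80 kept as low-power standby, 4 cores each.
print(book_mode_cores(100, 4))                   # 400
print(f"{hot_standby_cores(20, 80, 4):.0f}")     # 112
```

Under these assumed numbers the off-peak footprint drops by roughly 70%, and the freed cores become available for time-based reuse.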

Based on the preceding factors, Cloud Service Engine Zizz incorporates several core technologies, including low-power technology based on the elastic heap, memory elasticity based on kernel-state and user-state swap capabilities, and dynamic configuration upgrade or downgrade of instance types based on in-place updates.

At the same time, these core technologies are packaged and exposed as capabilities in the Kubernetes workload mode through Kubernetes custom resource definitions (CRDs). The following is the overall architecture:

In the embedded systems field, the low-power running mode is already widely used in both operating system design and application design. An operating system accounts for low-power operation in its design phase, and an application accounts for it in its design and development phases. Generally, applications register for low-power system events. After an application receives such an event, it runs a series of logical steps to bring itself into the low-power state. Inspired by this, we believe that application operating modes in future cloud computing environments also need to support similar configurations. This is the idea behind the low-power operation of applications introduced in the Cloud Service Engine Zizz solution, and it allows the solution to better adapt to the requirements of serverless scenarios. Three core technologies make the Zizz low-power runtime possible.

According to the Zizz architecture design, we can downgrade the resource configuration of an instance when this instance enters the low-power mode. For CPU resources, we maintain a low-power CPU pool (or CPU Set). The initial value of a CPU pool is 1C, and the number of CPU cores increases with the number of low-power instances added to the pool. This means that multiple low-power instances on the same node compete for resources in the CPU pool. From the perspective of CPU resource utilization, multiple low-power instances constitute a low-power serverless scenario where CPU resources are reused according to time.
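The pooling idea can be illustrated with a toy sizing function. This is only a sketch: the text states that the pool starts at 1C and grows with the number of low-power instances, but the growth rule used here (one core per `instances_per_core` instances), the default ratio of 8, and all names are assumptions made for illustration.

```python
import math


def low_power_pool_cores(n_instances: int,
                         instances_per_core: int = 8,  # assumed ratio, not from the article
                         initial_cores: int = 1) -> int:
    """Size the shared CPU pool for `n_instances` low-power instances."""
    needed = math.ceil(n_instances / instances_per_core)
    return max(initial_cores, needed)


def dedicated_cores(n_instances: int, cores_per_instance: int = 4) -> int:
    """Cores the same instances would hold at their full specification."""
    return n_instances * cores_per_instance


for n in (1, 8, 20):
    print(n, low_power_pool_cores(n), dedicated_cores(n))
```

Because low-power instances are mostly idle, they can share a few cores and compete for them only when a background task briefly wakes up.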

The Zizz solution is designed to provide a general-purpose low-power solution for all application instances without being bound to the runtime of a specific process. This low-power solution allows an instance to elastically scale its memory based on the request traffic during runtime, and provides an extremely low-cost runtime when no request traffic exists.

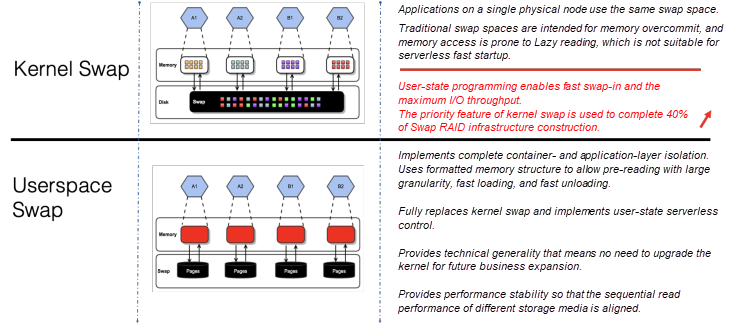

After exploration and demonstration, we believe that the Linux Swap technology can fulfill this mission. Linux Swap provides the system with a transparent memory scaling capability independent of process runtime. According to the current memory demand on the system, the anonymous memory of the system is swapped, in the order of low to high according to the LRU access activity, to low-speed external storage media. This expands the system's available memory. During the specific swap process, the system will switch the inactive anonymous memory page to an external low-speed storage device based on other settings of the swap subsystem and the proportion of the current memory consumed by Anonymous and Filecache. From the perspective of system memory, a swap space is an extended low-speed memory pool. In terms of cost, swap spaces provide a low-cost process runtime context. In the future, we hope to provide a multi-level storage architecture that goes beyond memory and disks and supports the context required for low-power instance running.
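The LRU-ordered swap-out described above can be modeled in a few lines. This is a deliberately simplified sketch, not how the kernel is implemented: Linux approximates LRU with active and inactive page lists, and the class and method names here are hypothetical.

```python
from collections import OrderedDict


class AnonymousMemory:
    """Toy model: at most `resident_limit` pages (at least 1) stay in RAM,
    and the least recently used pages are moved to swap."""

    def __init__(self, resident_limit: int):
        self.resident_limit = resident_limit
        self.resident = OrderedDict()  # page id -> contents, least recently used first
        self.swap = {}                 # stand-in for the slow external swap device

    def access(self, page_id, contents=None):
        if page_id in self.resident:
            self.resident.move_to_end(page_id)               # mark as most recently used
        elif page_id in self.swap:
            self.resident[page_id] = self.swap.pop(page_id)  # swap in on demand
        else:
            self.resident[page_id] = contents                # first touch allocates the page
        self._swap_out_cold_pages()
        return self.resident[page_id]

    def _swap_out_cold_pages(self):
        while len(self.resident) > self.resident_limit:
            page_id, contents = self.resident.popitem(last=False)  # coldest page first
            self.swap[page_id] = contents


mem = AnonymousMemory(resident_limit=2)
for page in ("a", "b", "a", "c"):
    mem.access(page, page.upper())
print(list(mem.resident), list(mem.swap))  # ['a', 'c'] ['b']
```

Page `b` is the coldest page when `c` arrives, so it is the one that moves to the low-speed pool.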

In serverless elastic scenarios, running instances must be able to quickly enter and exit the low-power mode, and the runtime states of the instances must be fully controlled based on traffic. Currently, the design and implementation of Linux Swap is more concerned with system memory overcommit, and its internal swap-in works lazily, at page granularity, when memory is accessed. This kind of memory swap causes response time jitter in real business systems, which is unacceptable in commercial systems. As a result, we must build on the current Linux Swap technology to provide swap-in and swap-out implementations that can be fully controlled from user space.

The following figure shows some system customizations and innovative implementations based on Linux Swap. For traditional Linux Swap, we use user-state programming and concurrent fast swap-in implementation, which can achieve maximum I/O throughput on different storage media.

Considering future large-scale application scenarios and some existing problems in Linux kernel swap, we have developed User-space Swap to implement isolated, per-process swap storage. This supports fast swap-in with large granularity and matches the sequential read performance of different storage media.
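The idea of concurrent, large-granularity swap-in can be sketched in user space as follows. This is not the Zizz implementation: it assumes a per-process swap image stored as a regular file on a POSIX system, and the function names, the 4 MiB chunk size, and the worker count are hypothetical.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def _read_chunk(fd: int, offset: int, length: int) -> bytes:
    """Read up to `length` bytes at `offset`, retrying on short reads."""
    buf = bytearray()
    while len(buf) < length:
        part = os.pread(fd, length - len(buf), offset + len(buf))
        if not part:          # end of file
            break
        buf += part
    return bytes(buf)


def swap_in(path: str, chunk_size: int = 4 * 1024 * 1024, workers: int = 8) -> bytes:
    """Load a whole swap image eagerly, in large chunks read concurrently,
    instead of faulting it in lazily one page at a time."""
    size = os.path.getsize(path)
    fd = os.open(path, os.O_RDONLY)
    try:
        with ThreadPoolExecutor(max_workers=workers) as pool:
            chunks = pool.map(lambda off: _read_chunk(fd, off, chunk_size),
                              range(0, size, chunk_size))
            return b"".join(chunks)   # map() preserves chunk order
    finally:
        os.close(fd)
```

Issuing several large reads at once keeps the storage device's queue full, which is what lets the read rate approach the sequential throughput of the medium.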

Alibaba mainly uses Java technology stacks as the basis for runtime, and this is particularly true of inventory applications. For Java applications, the Cloud Service Engine Zizz solution uses the AJDK Elastic Heap technology as the basis for the low-power running of Java application instances. This technology effectively avoids the large-scale access to the JVM heap region and the associated memory regions that a full garbage collection causes. Instead, it achieves low-power operation through fast memory reclamation within a local part of the heap. In addition, only memory with a relatively small working set size is used, which avoids the pressure on the swap subsystem caused by the rapid expansion of heap memory in a traditional Java runtime and effectively reduces the system I/O load.

The Cloud Service Engine Zizz solution uses the Elastic Heap feature and related commands provided by AJDK to convert a running instance into a state of low power consumption when no request traffic exists. Instances in the low power consumption state have low memory footprints.

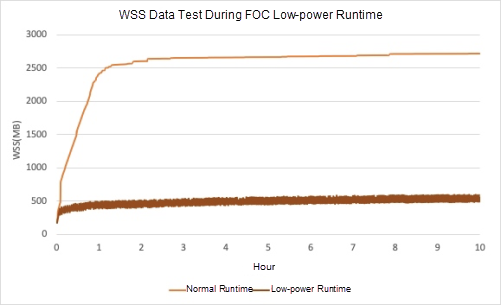

The following is an evaluation of the runtime working set size of a typical Alibaba application with a 4-core, 8 GB memory specification. With a traditional fixed heap, where memory is reclaimed by the Concurrent Mark Sweep (CMS) garbage collector, the working set size of this application instance increases to about 2.7 GB and then stabilizes. The runtime working set size after Elastic Heap parameter tuning is as follows:

AJDK Elastic Heap ensures that the working set size stays stable at 500 MB to 600 MB for up to 32 hours of application instance testing. Using both the Elastic Heap technology and swap spaces can provide Java application instances with very stable and low-power runtime and maintain a low memory footprint and working set size in the low-power state. This effectively reduces the memory and I/O costs associated with the operation of inventory applications in the low-power mode.

During the development of Zizz, we decided to challenge ourselves by considering future cloud computing scenarios. Therefore, the swap feature of the Zizz solution uses Alibaba Cloud Enhanced SSDs (ESSDs) instead of traditional local data disks.

Currently, the ESSD type used by Cloud Service Engine Zizz is PL1, with a maximum throughput of 350 MB/s. By using fast concurrent swap-in, the swap-in I/O throughput reaches the upper limit for this ESSD type, which is 300 to 350 MB/s. For more information, see Alibaba Cloud Enhanced SSD specifications.
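A rough back-of-the-envelope estimate shows why this throughput matters for recovery time. The sketch below assumes that the throughput figure is in megabytes per second and that the entire working set (500 MB to 600 MB in the Elastic Heap test above) must be read back before the instance can serve traffic. Both are simplifying assumptions, and the function name is hypothetical.

```python
def swap_in_seconds(working_set_mb: float, throughput_mb_per_s: float) -> float:
    """Time to read a working set back from swap at a sustained throughput."""
    return working_set_mb / throughput_mb_per_s


best = swap_in_seconds(500, 350)    # small working set, peak throughput
worst = swap_in_seconds(600, 300)   # large working set, lower throughput
print(f"{best:.1f} s to {worst:.1f} s")   # 1.4 s to 2.0 s
```

Under these assumptions, restoring a low-power instance is a matter of seconds rather than the minutes a cold start takes.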

At the I/O level, our current plan is the same as that for CPUs. We hope to use Cgroups to build a preemptible dynamic I/O reuse pool for multiple low-power running instances. This will not only control the impact of I/O requests from low-power instances on system I/O, but will also maximize the dynamic utilization of the I/O allocated to each low-power instance. Currently, Zizz has no dedicated low-power implementation for I/O, because this feature is planned for the next phase of work.

Up to now, we have introduced an innovative solution to reduce the application startup time. Next, we will introduce the FaaS scenario in greater detail and discuss the RSocket Broker architecture.

To understand this architecture, consider that star entertainers usually pour their valuable time into activities and performances, while business discussions and trivial matters are handed over to professional agents. These agents build a bridge between stars and the outside world, and both the stars and the agents perform their respective duties. Likewise, the core value of an application usually lies in its business field, while general basic services such as service discovery, load balancing, encryption, observability, traceability, security, and circuit breaking are necessary but not the most valuable features of the application. If we compare stars to the business field, agents play the role of a broker. The broker removes general infrastructure services from applications so that applications only need to focus on their business fields. This architecture is currently the one best suited to function systems.

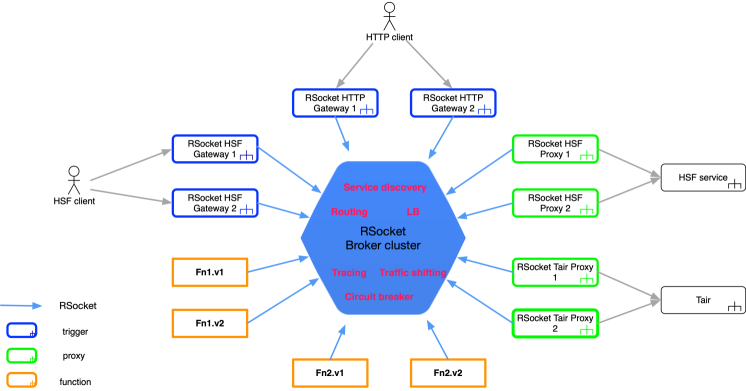

The below graphic shows this architecture:

The network communications between all the components including triggers, functions, and proxies in the Cloud Service Engine Function architecture go through the backbone broker.

As a network hub, the broker is extremely performance-demanding. Therefore, all components in the Cloud Service Engine Function architecture use the RSocket protocol for network communication. This is a next-generation, cross-language, and open-source communication framework based on the Reactive programming model. Alibaba, as a member of the Reactive Foundation, is one of its major contributors. Stress testing has shown that a broker configured with a common specification can support tens of thousands of connections (one connection per function) and tens of thousands of queries per second.

- Full asynchronization without blocking: The RSocket protocol is based on the Reactive programming model and is completely asynchronous and free from blocking. In Cloud Service Engine, protocols like high-speed service gateway, HTTP, Tair, and MetaQ can be adapted to non-blocking implementations.

- Efficient payload forwarding: The network payloads of RSocket are divided into two parts, metadata and data, which are similar to the header and the body of an HTTP request, respectively. When routing payloads, the broker parses only the smaller metadata part and does not parse the data part. In addition, the broker makes no memory copy of a payload when forwarding it.

- Single-connection multiplexing: All components establish one persistent connection with each broker, with two-way communication and multiplexing in each connection. These persistent connections eliminate the overhead of establishing a new TCP connection for each call, and the single-connection mode optimizes the network by increasing the utilization and throughput of maximum transmission units (MTUs).

- Lightweight use of middleware: For Java, middleware originally required a heavy-duty client, which resulted in slow startup, a stateful architecture, dependency conflicts, and the need for upgrades. For Node.js and Python, some middleware products do not have clients or official versions so that their capabilities cannot be fully utilized. With the broker architecture, Cloud Service Engine Function allows functions in all programming languages to use Alibaba Group's main middleware in a lightweight way.

- Transparent service routing and load balancing: The broker is responsible for service routing and load balancing, and therefore function processes do not need to take these into consideration.

- Tracing: All function requests provide the distributed tracing pass-through function, which allows engineers to quickly integrate with the existing Alibaba ecosystem without any additional concerns and simplifies troubleshooting.

- Traffic shifting: The Cloud Service Engine Function provides support for alias-based traffic shifting rules. Users can use deployment policies, such as multi-version deployment, blue-green deployment, and canary release. In this way, rollback can be performed in seconds without the need for redeployment, and traffic is distributed in a fine-grained manner among multiple function versions by percentage.

- Circuit breaking: Functions come with Alibaba's open-source Sentinel, and therefore users only need to configure circuit breaking rules for Sentinel.

- Auto scaling: Auto scaling based on concurrent metrics helps users automatically apply for and reclaim resources based on the actual load requirements of the system, efficiently respond to user requests, and achieve a convenient serverless O&M experience.
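Two of the points above, metadata-only routing and alias-based traffic shifting, can be illustrated together with a toy in-process broker. This is a sketch under stated assumptions rather than the Cloud Service Engine implementation: the `Payload` and `Broker` classes, the JSON encoding of the metadata, and the field names `function` and `alias` are all hypothetical, and no real RSocket library is used.

```python
import json
import random


class Payload:
    """An RSocket-style payload: small routing metadata plus an opaque body."""

    def __init__(self, metadata: bytes, data: memoryview):
        self.metadata = metadata
        self.data = data


class Broker:
    def __init__(self, seed=None):
        self._handlers = {}   # (function, version) -> callable
        self._aliases = {}    # (function, alias) -> {version: weight}
        self._rng = random.Random(seed)

    def register(self, function, version, handler):
        self._handlers[(function, version)] = handler

    def set_alias(self, function, alias, weights):
        """Point an alias at one or more versions with percentage weights."""
        self._aliases[(function, alias)] = dict(weights)

    def route(self, payload):
        meta = json.loads(payload.metadata)   # only the metadata is parsed
        weights = self._aliases[(meta["function"], meta["alias"])]
        versions = list(weights)
        version = self._rng.choices(
            versions, weights=[weights[v] for v in versions], k=1)[0]
        handler = self._handlers[(meta["function"], version)]
        return handler(payload.data)          # body is passed through untouched


broker = Broker(seed=42)
broker.register("funA", "v1", lambda body: ("v1", body))
broker.register("funA", "v2", lambda body: ("v2", body))
broker.set_alias("funA", "prod", {"v1": 90, "v2": 10})   # canary: about 10% to v2

body = memoryview(b"order payload")
request = Payload(json.dumps({"function": "funA", "alias": "prod"}).encode(), body)
version, forwarded = broker.route(request)
print(forwarded is body)   # True: the body was neither parsed nor copied

broker.set_alias("funA", "prod", {"v1": 100, "v2": 0})   # rollback without redeploying
```

Because the weights live in the broker, a rollback is only a change to the alias table, which is consistent with the claim that rollback takes seconds and needs no redeployment.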

After more than a year of hard work and continuous testing and feedback from partners and early users, the Cloud Service Engine Function successfully supported the 2019 Double 11. It has been serving dozens of businesses such as Taobao Shopping Guide, Fliggy Shopping Guide, ICBU, CBU, and UC, and involves a large number of functions.

At first, it was enough for serverless automatic elasticity to ensure a good user experience (traffic-based adaptive capability). If the underlying infrastructure could ensure the resources needed by users along with sufficient stability, most users did not care how many servers were deployed. As the underlying infrastructure, a user instance was retained during low traffic periods in case it was occasionally needed.

With the development of technology and engineers' pursuit of ever greater performance and simplicity, existing infrastructure was improved, and more advanced and rational infrastructure was created based on existing capabilities. In addition, with more users requiring pay-as-you-go billing, the ability to fully reclaim idle resources is becoming more important.

In fact, under an ideal O&M scheduling model, reasonable use of the capability of scaling in to zero in a limited resource pool allows for the highly efficient use of resources. The following section describes how to use zero instances in pre-release scenarios.

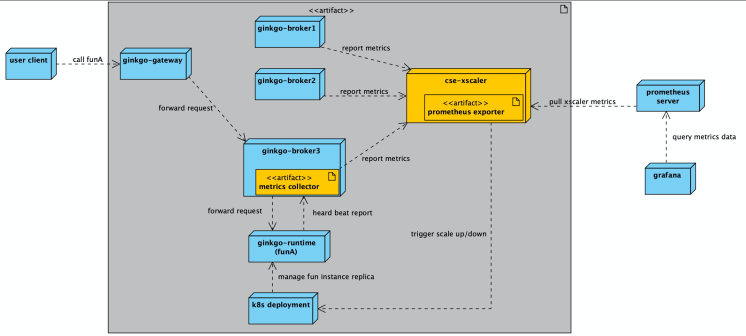

Based on the wide range of scenarios in which the Cloud Service Engine Function is used within Alibaba and its large number of users, the Cloud Service Engine team quickly escalated the priority of zero-instance capability in the Cloud Service Engine Function to a high level. The following is a zero-instance architecture combined with the Cloud Service Engine Function architecture:

Note: In the preceding figure, ginkgo is the internal development code of the Cloud Service Engine Function.

As shown in the preceding diagram, the process of scaling in to 0 and the process of scaling out from 0 to 1 for the user Fn (funA in the diagram) are both complete and closed data loops. The following describes relevant details:

The process of scaling in to 0 (1 -> 0)

The process of scaling out from 0 to 1 (0 -> 1)

From the preceding process, we can see that the following components are combined with the ginkgo architecture:

As a platform product, the Cloud Service Engine has a lot of users who need to experience, verify, and use serverless functions in the pre-release environment. However, due to its design, Cloud Service Engine has only limited physical resources in the pre-release environment. If all Fn instances run on it, its CPU, memory, ENI, and other resources will soon create a bottleneck, causing the scheduling of new pods to become stuck in the pending state.

An earlier solution was for Cloud Service Engine to maintain a brute-force script and run it once a day to clean up the Fns created in the pre-release environment more than a given number of days (N) earlier. This was convenient but crude. It was effective because one cleanup immediately released a large number of resources, but it had two obvious defects. First, it could not avoid false positives. Because the cleanup condition was so simple, no matter how N was adjusted, the script would still kill Fns that users needed. If N was too large, barely any resources were released, and if N was too small, false positives became much more likely. Second, as the number of trial users in the pre-release environment increased, a daily cleanup became insufficient.

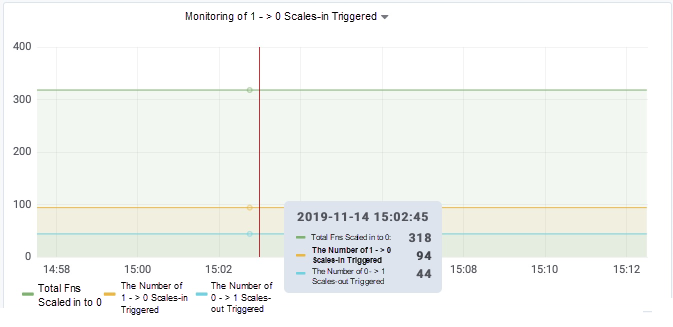

To provide a better solution for the utilization of pre-release resources and take advantage of the staggered utilization of pre-release public resource pools, providing a zero-instance capability for Cloud Service Engine was a great choice. To reduce the jitter and fluctuations that the scale-in to 0 causes for pre-release users, the Cloud Service Engine's pre-release environment currently implements a default six-hour waiting period before the scale-in to 0. In this way, when a user does not use the Fn for six hours, the system automatically reclaims all the physical resources occupied by the Fn to free up space for other operations by other users.
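The idle-based policy can be sketched as follows. Unlike the earlier age-based cleanup script, it looks at when each Fn was last used rather than when it was created. The function name, the data layout, and the sample Fn names are hypothetical. Only the six-hour default comes from the text.

```python
from datetime import datetime, timedelta

IDLE_TIMEOUT = timedelta(hours=6)   # default waiting period described above


def functions_to_scale_to_zero(last_invoked, now, idle_timeout=IDLE_TIMEOUT):
    """Return the names of Fns that have been idle for at least `idle_timeout`."""
    return sorted(name for name, seen in last_invoked.items()
                  if now - seen >= idle_timeout)


now = datetime(2019, 11, 11, 12, 0)
last_invoked = {
    "funA": datetime(2019, 11, 11, 11, 30),   # idle for 30 minutes
    "funB": datetime(2019, 11, 11, 5, 0),     # idle for 7 hours
    "funC": datetime(2019, 11, 10, 12, 0),    # idle for 24 hours
}
print(functions_to_scale_to_zero(last_invoked, now))   # ['funB', 'funC']
```

An Fn that is reclaimed this way is not deleted. Its instances are scaled in to zero and can be scaled out from 0 to 1 again when the next request arrives.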

In a cluster, we use three nodes to support more than 400 functions. The zero-instance feature is what makes this possible.

The construction of the zero-instance feature is also a system project. Here, the Cloud Service Engine team is constantly polishing and optimizing the zero-instance user experience as it develops new technologies and approaches. Generally, we will continue to improve the following aspects:

Docker uses container images as a de facto standard for software and runtime environment delivery. Therefore, Docker containers have become standardized, lightweight, and resource-limited sandboxes. As a lightweight sandbox, a Docker container is naturally suitable for a small number of processes. In a container that exclusively occupies a PID namespace, process 1 is naturally the process specified in the Dockerfile or the command that starts the container. However, the first users who tried Docker soon discovered that Docker containers without an init system were plagued by two annoying problems:

Both problems can be solved in several ways. One is to leave only one process in the container. Another is to make process 1 in the container responsible for waiting on all sub-processes, which avoids zombie processes, and for forwarding the SIGTERM signal so that the sub-processes can exit gracefully. A third is to have Docker support an init system.
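The second approach, a process 1 that reaps children and forwards SIGTERM, can be sketched in a few lines. This is a minimal illustration for POSIX systems, not a production init: the function name is hypothetical, it must be called from the main thread, and orphaned processes are only re-parented to it when it really runs as process 1 of the PID namespace.

```python
import os
import signal
import subprocess
import sys


def run_as_init(cmd):
    """Run `cmd` as the main child, forward SIGTERM to it, reap every
    child that exits, and return the main child's exit code."""
    main = subprocess.Popen(cmd)

    def forward(signum, _frame):
        if main.returncode is None:
            os.kill(main.pid, signum)     # let the child shut down gracefully

    previous = signal.signal(signal.SIGTERM, forward)
    try:
        while True:
            pid, status = os.wait()       # reaps any exited child, including adopted orphans
            if pid != main.pid:
                continue                  # a zombie was reaped, keep waiting
            if os.WIFEXITED(status):
                main.returncode = os.WEXITSTATUS(status)
            else:
                main.returncode = -os.WTERMSIG(status)
            return main.returncode
    finally:
        if previous is not None:
            signal.signal(signal.SIGTERM, previous)


code = run_as_init([sys.executable, "-c", "raise SystemExit(3)"])
print(code)   # 3
```

Small init programs built for containers follow the same pattern: wait on every child so that none stays a zombie, and pass termination signals on to the main process.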

In the containerization process, Alibaba provides a user experience very similar to a virtual machine by using the rich containers of Pouch. In this way, developers can smoothly migrate and maintain their applications based on past experience. As a result, Alibaba's applications are migrated from virtual machines to containers, in which case the applications are actually migrated to the rich containers of Pouch. However, the Docker community has always considered containers to be lightweight and a container to be responsible for only one specific task.

Each container should have only one concern. Decoupling applications into multiple containers makes it easier to scale horizontally and reuse containers. For instance, a web application stack might consist of three separate containers, each with its own unique image, to manage the web application, database, and an in-memory cache in a decoupled manner.

Kubernetes provides standards for using lightweight containers to orchestrate multiple processes and services. However, the images of Alibaba applications are products of the rich container model. As you can see, a large number of processes, including a business process, log collection process, monitoring collection process, and staragent process, are running in the running instances. A common practice is to use an image that includes a series of basic O&M components such as staragent as the basic image, add your program, and then package it into the final image for release. This method allows us to quickly enjoy the convenience of image release when migrating from a virtual machine to a container. It can ensure the consistency of the operating environment while allowing the continued use of the virtual machine O&M experience.

However, the implementation of Cloud Service Engine quickly encountered a side effect of the rich container model. We received feedback from Cloud Service Engine Function users that the CPU usage of a newly scaled instance skyrocketed within 30 to 60 seconds after instance startup, and that timeout errors significantly increased. After troubleshooting, we confirmed that the staragent component performed a plug-in update after it started, which seized CPU resources and caused the business process to fail to respond to requests in a timely manner.

Generally, the version of the O&M component packaged in a basic image is outdated and must be updated upon startup. If the instance specification is high and the application startup is slow, this is not a major problem. However, in Cloud Service Engine Function scenarios, this problem is amplified to an unacceptable level when applications start fast and instance specifications are low, such as with 1 core.

After figuring out the cause of the problem, we came up with solutions and naturally chose the most cloud-native one: to strip the O&M components, place them in a sidecar container, and use the resource isolation capability of this container to ensure that O&M processes do not compete for business resources.
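The shape of such a pod can be sketched as a manifest with two containers, each with its own CPU limit. The code below builds the manifest as a plain Python dictionary. The pod name, container names, image names, and CPU values are hypothetical, and only standard Kubernetes Pod fields are used.

```python
import json


def build_pod(app_image: str, ops_image: str,
              app_cpu: str = "1", ops_cpu: str = "250m") -> dict:
    """Build a Pod manifest in which O&M processes run in a sidecar
    container with its own CPU limit, separate from the business container."""

    def container(name, image, cpu):
        return {
            "name": name,
            "image": image,
            "resources": {"requests": {"cpu": cpu}, "limits": {"cpu": cpu}},
        }

    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "fn-with-ops-sidecar"},   # hypothetical name
        "spec": {
            "containers": [
                container("business", app_image, app_cpu),
                container("ops-sidecar", ops_image, ops_cpu),
            ]
        },
    }


pod = build_pod("example.com/fun-a:1.0", "example.com/ops-agent:1.0")
print(json.dumps(pod, indent=2))
```

With separate limits, a plug-in update in the sidecar can at most saturate the sidecar's own CPU share, so the business container keeps the core it was promised.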

During the process of creating the O&M sidecar, we solved the following problems:

In a serverless scenario, to enable instances to automatically scale up and down based on load changes, applications must be started as quickly as possible. The factors that affect the startup speed of applications include the following:

Clearly, making containers lightweight is an important step in the cloud-native process. In addition to accelerating the startup of applications, this also provides the following benefits:

Containers can be made lightweight only when a lightweight image is provided by application developers. No change is allowed on the application side when the infrastructure is not ready. However, if the application does not change, the infrastructure must be continuously supported and adapted through existing methods. This seems to be an endless loop. Cloud Service Engine strives to promote the development of lightweight images on the application side and the evolution of the underlying infrastructure, allowing users to enjoy the value of lightweight containers.

Serverless implementation is a very challenging system project, which involves a wide range of technologies. In terms of infrastructure, we have received a great deal of valuable help from Alibaba's Container and Scheduling Team. In terms of systems and JVM, we have explored many new innovations with the help of the relevant teams to address the challenges of rapid application startup. In terms of middleware and O&M control, we are constantly reappraising current practices.

Alibaba's demanding requirements for performance, quality, and stability have pushed us to strive for excellence in all aspects. Cloud Service Engine successfully passed its first major test in the 2019 Double 11. However, we know that this is just the beginning. We have yet to tap the full potential of serverless technology, and the technological innovations made possible by serverless go far beyond what we have discussed in this article. We are fortunate that we can witness and be a part of this massive force driving unprecedented technological innovation today.
