By Qunar Basic Platform Team

Qunar has run a microservice architecture for many years, and the number of microservice applications has reached the thousands. As the call chains between services grow more complex, failures occur more frequently and cause significant economic losses, so building stability has become an important task. Netflix proposed improving system stability through chaos engineering in 2010. Since then, chaos engineering has proven to be an effective way to discover system weaknesses and to build confidence in a system's ability to withstand out-of-control conditions in production. At the end of 2019, Qunar began exploring chaos engineering in the context of its own technical stack. This article describes the practical experience we gained along the way.

At the start of the project, we surveyed open-source chaos engineering tools to avoid duplicating existing work, and evaluated them against the characteristics of our technical stack.

We also considered how active each project's community was. In the end, we chose ChaosBlade as the fault injection tool, combined with an in-house chaos engineering console. (chaosblade-box did not exist at that time.)

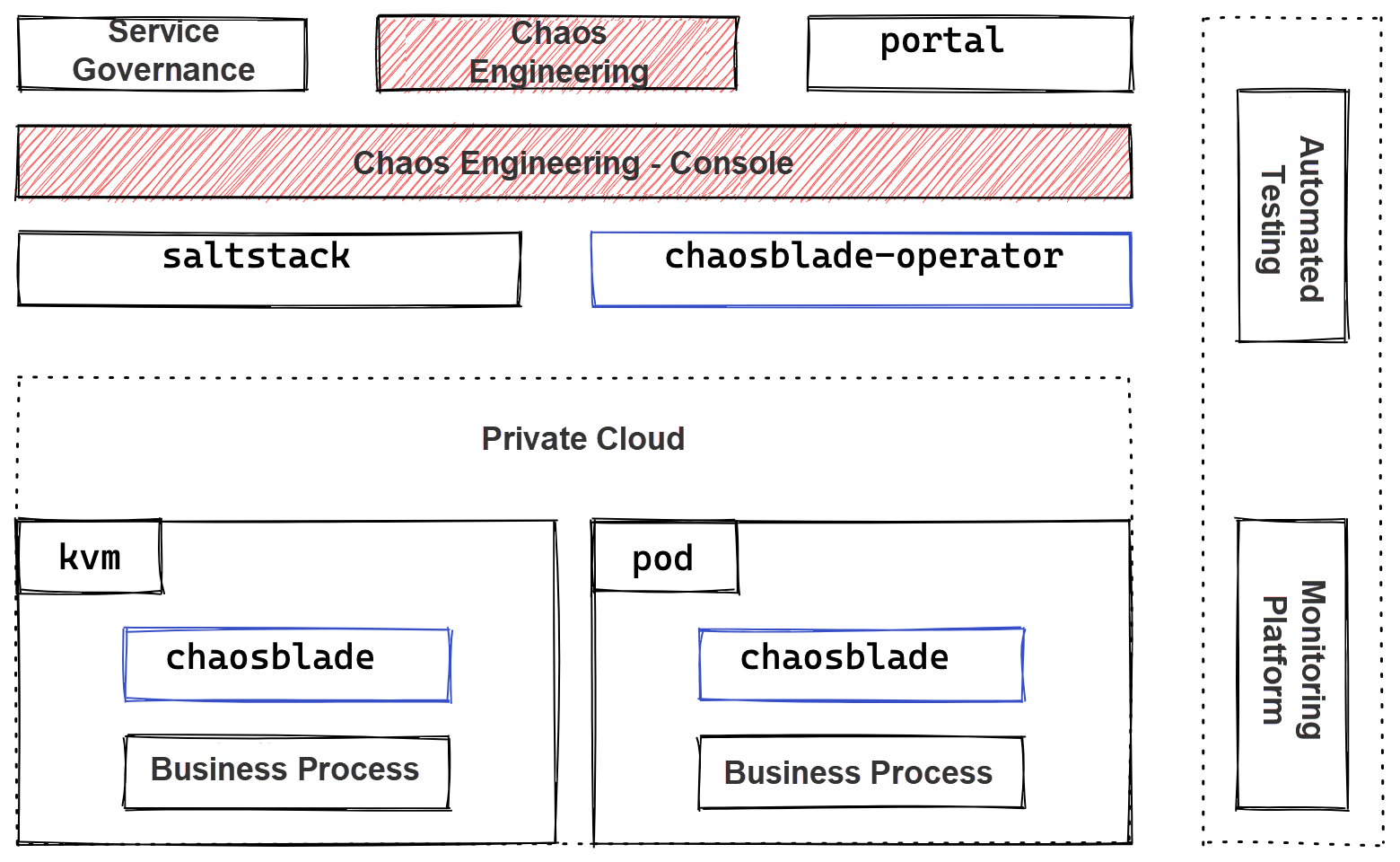

Based on Qunar's internal system, the overall architecture is listed below:

Its vertical structure from top to bottom is listed below:

Its horizontal structure is listed below:

The application of chaos engineering at Qunar has gone through two main stages:

1) Build the Capability of Fault Injection: The main goal at this stage is to let users manually create fault drills and, with appropriate fault strategies, verify whether specific aspects of the system behave as expected.

2) Strong and Weak Dependency Scenarios: Provide dependency labeling, strong/weak dependency verification, and an automated closed loop for maintaining strong and weak dependencies, using chaos engineering to improve the efficiency of microservice governance.

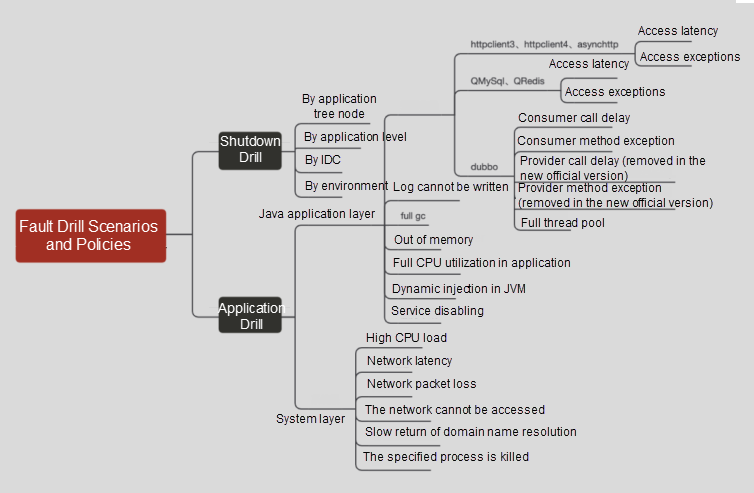

Simulating faults through fault injection is a basic capability of chaos engineering. At this stage, we mainly provide fault injection for three scenarios: machine shutdown, OS-layer failures, and Java application faults. On top of these, we provide scenario-based functions.

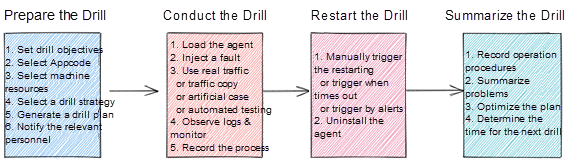

A typical drill process is listed below:

chaosblade-exec-jvm provides the basic capabilities for Java fault injection along with plug-ins for some open-source components, but these do not cover the company's internal components. The middleware team therefore did custom development, adding fault injection plug-ins for AsyncHttpClient, QRedis, and other components. Call-point-based fault injection for HTTP and Dubbo was also added.

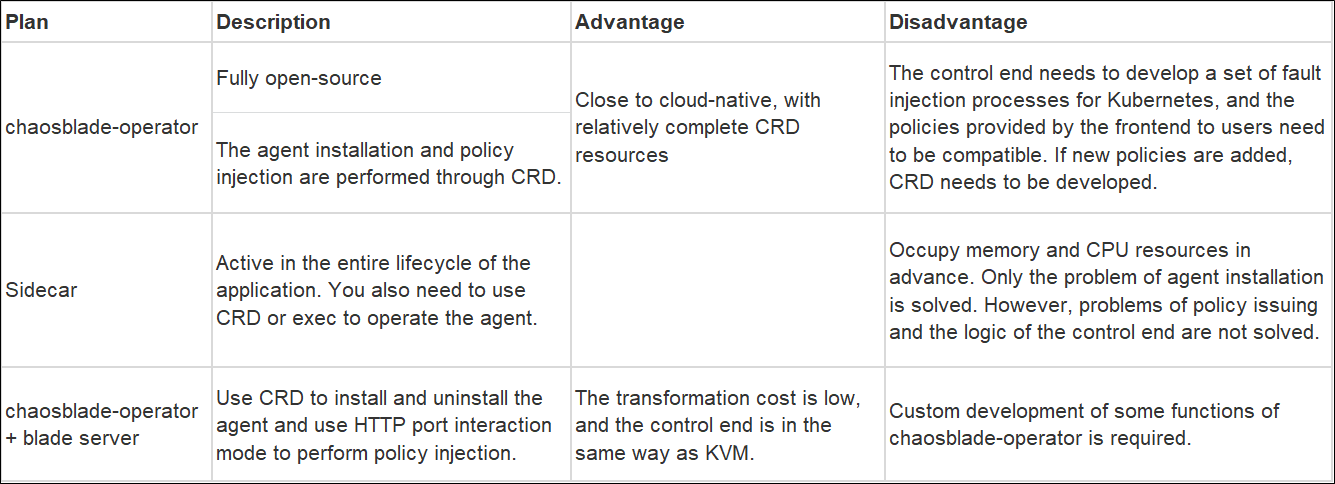

In mid-2021, Qunar started migrating its applications to containers, so fault drills also needed to support containerized environments. Based on chaosblade-operator, we compared the following plans:

The three main concerns in the plan:

Based on the comparison of the plans above, we selected the third implementation plan.

Based on the fault drill platform, we provide the fault drill functions in scenarios with strong and weak dependencies:

However, verifying strong and weak dependencies still required manual work. We therefore integrated automated testing tools to label strong and weak dependencies automatically, so that their maintenance can be completed through an automated process, forming a closed loop.

The chaos console periodically obtains application dependencies from the service governance platform. For each downstream interface, it generates a fault drill based on an exception-returning policy and injects the fault into the application's test environment. The automated test platform then runs test cases, compares the results in real time, and produces an assertion result. Finally, the chaos console combines the test assertion result with the logs showing whether the fault policy was hit to determine whether the current downstream interface is a strong or weak dependency.
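The final decision step described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not Qunar's actual implementation: the `DrillResult` shape, the `classify` function, and the "undetermined" outcome for drills where the fault policy was never hit are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Dependency(Enum):
    STRONG = "strong"
    WEAK = "weak"
    UNDETERMINED = "undetermined"


@dataclass
class DrillResult:
    """Outcome of one automated drill against a downstream interface (hypothetical shape)."""
    downstream: str          # identifier of the downstream interface
    fault_hit: bool          # did the logs show that the fault policy was triggered?
    assertions_passed: bool  # did the automated test cases still pass?


def classify(result: DrillResult) -> Dependency:
    """Combine the test assertion with the fault-hit log to label a dependency."""
    if not result.fault_hit:
        # Assumption: if the fault never fired, the test outcome says nothing
        # about this dependency, so no label is assigned.
        return Dependency.UNDETERMINED
    # The fault fired: passing tests suggest a weak dependency,
    # failing tests suggest a strong one.
    return Dependency.WEAK if result.assertions_passed else Dependency.STRONG


results = [
    DrillResult("recommendation.list", fault_hit=True, assertions_passed=True),
    DrillResult("payment.create", fault_hit=True, assertions_passed=False),
    DrillResult("coupon.query", fault_hit=False, assertions_passed=True),
]
labels = {r.downstream: classify(r) for r in results}
```

Checking the fault hit before the assertion matters here: a passing test on a drill whose fault never fired would otherwise be mislabeled as a weak dependency.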

1) Compatibility of Java Agent

The automated testing platform supports a record-and-playback mode: during regression testing, pre-recorded traffic can be used to mock certain interfaces. This relies on a record-and-playback agent based on JVM-SandBox. chaosblade-exec-jvm is also an agent based on JVM-SandBox, so compatibility issues can arise when the two agents are used together.

2) Test assertions are different from ordinary tests.

When the automated test platform is used for regression testing, the focus is on the integrity and accuracy of the data. During fault drills, however, weak dependencies are usually the ones failing, so complete data cannot be expected. Beyond the conventional status-code check, judging the returned result is therefore more about whether the core data nodes are correct. For this reason, a separate set of assertion configurations was added to the automated test platform to accommodate fault drills.
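As a rough illustration of a drill-specific assertion, the Python sketch below checks only the status code and a configured list of core data nodes, and ignores everything else in the response. The function names, the dotted-path notation, and the "present and non-empty" rule are assumptions made for this example. The article does not describe the platform's actual configuration format.

```python
from typing import Any

_MISSING = object()  # sentinel for "path not found in the response"


def get_path(payload: Any, path: str) -> Any:
    """Walk a dotted path such as 'order.id' through nested dicts."""
    node = payload
    for key in path.split("."):
        if isinstance(node, dict) and key in node:
            node = node[key]
        else:
            return _MISSING
    return node


def check_core_nodes(status_code: int, body: dict, expected_status: int,
                     core_paths: list[str]) -> list[str]:
    """Return a list of failures; an empty list means the drill assertion passed."""
    failures = []
    if status_code != expected_status:
        failures.append(f"unexpected status code: {status_code}")
    for path in core_paths:
        value = get_path(body, path)
        # Assumption: a core node is "correct" if it is present and non-empty.
        if value is _MISSING or value in (None, "", [], {}):
            failures.append(f"core node missing or empty: {path}")
    return failures


# The recommendations list is empty because its (weak) dependency was faulted,
# but the core order data is intact, so the drill assertion passes.
body = {"order": {"id": "A1", "price": 100}, "recommendations": []}
drill_failures = check_core_nodes(200, body, 200, ["order.id", "order.price"])
```

A regular regression assertion would compare the full response, including `recommendations`, and would fail here even though the degradation is the expected behavior for a weak dependency.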

The main open-source project used in Qunar's chaos engineering practice is ChaosBlade. We have done a variety of custom development and bug fixes for chaosblade, chaosblade-exec-jvm, and chaosblade-operator, and some of these changes have been submitted to the official repositories for merging. We have also been in contact with the ChaosBlade community and plan to take part in community building and contribute to the ChaosBlade open-source community.

Our fault drill platform has performed over 80 simulated data center power-off drills and over 500 daily drills, involving over 50 core applications and over 4,000 machines. Business lines have also developed a healthy habit of quarterly drills and pre-launch verification.

Our main goal for the next step is to automate random online drills, determine the minimum blast radius from service dependency chains, and establish steady-state assertions for online drills. Ultimately, we plan to run regular random drills across all call chains of the company's core pages. We will also explore how chaos engineering can be used in service governance and stability building, providing a technical guarantee for the stable growth of the company's business.
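One possible way to estimate a blast radius from dependency data is to walk the call graph backwards from the drill target and collect every service that transitively calls it. The Python sketch below shows this idea only; the `blast_radius` function and the `caller -> callees` graph shape are assumptions for the example, and the article does not say how Qunar plans to compute it.

```python
from collections import deque


def blast_radius(calls: dict[str, set[str]], target: str) -> set[str]:
    """Return every service that directly or transitively calls `target`.

    `calls` maps each caller to the set of services it calls (hypothetical shape).
    """
    # Invert the graph so each service points to its direct callers.
    callers: dict[str, set[str]] = {}
    for caller, callees in calls.items():
        for callee in callees:
            callers.setdefault(callee, set()).add(caller)

    # Breadth-first search upstream from the target.
    affected: set[str] = set()
    queue = deque([target])
    while queue:
        service = queue.popleft()
        for caller in callers.get(service, ()):
            if caller != target and caller not in affected:
                affected.add(caller)
                queue.append(caller)
    return affected


calls = {
    "page": {"order", "search"},
    "order": {"payment"},
    "search": {"inventory"},
}
upstream_of_payment = blast_radius(calls, "payment")
```

This gives an upper bound on what a fault in `target` could reach. Combining it with strong/weak dependency labels, for example by stopping the walk at weak edges, would narrow the estimate.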
