By Qin Long, Alibaba Cloud Technical Expert

Contributed by Alibaba Cloud ECS

In the traditional O&M model, we usually focus only on the main system flow and the business processes and overlook bypass systems and the underlying architecture. When an alarm fires, the cause often lies in the part we pay too little attention to, so O&M personnel struggle to handle it. A large-scale failure may require personnel from several business domains to work together. However, because they have different responsibilities and expertise, they cannot collaborate well or handle the failure efficiently.

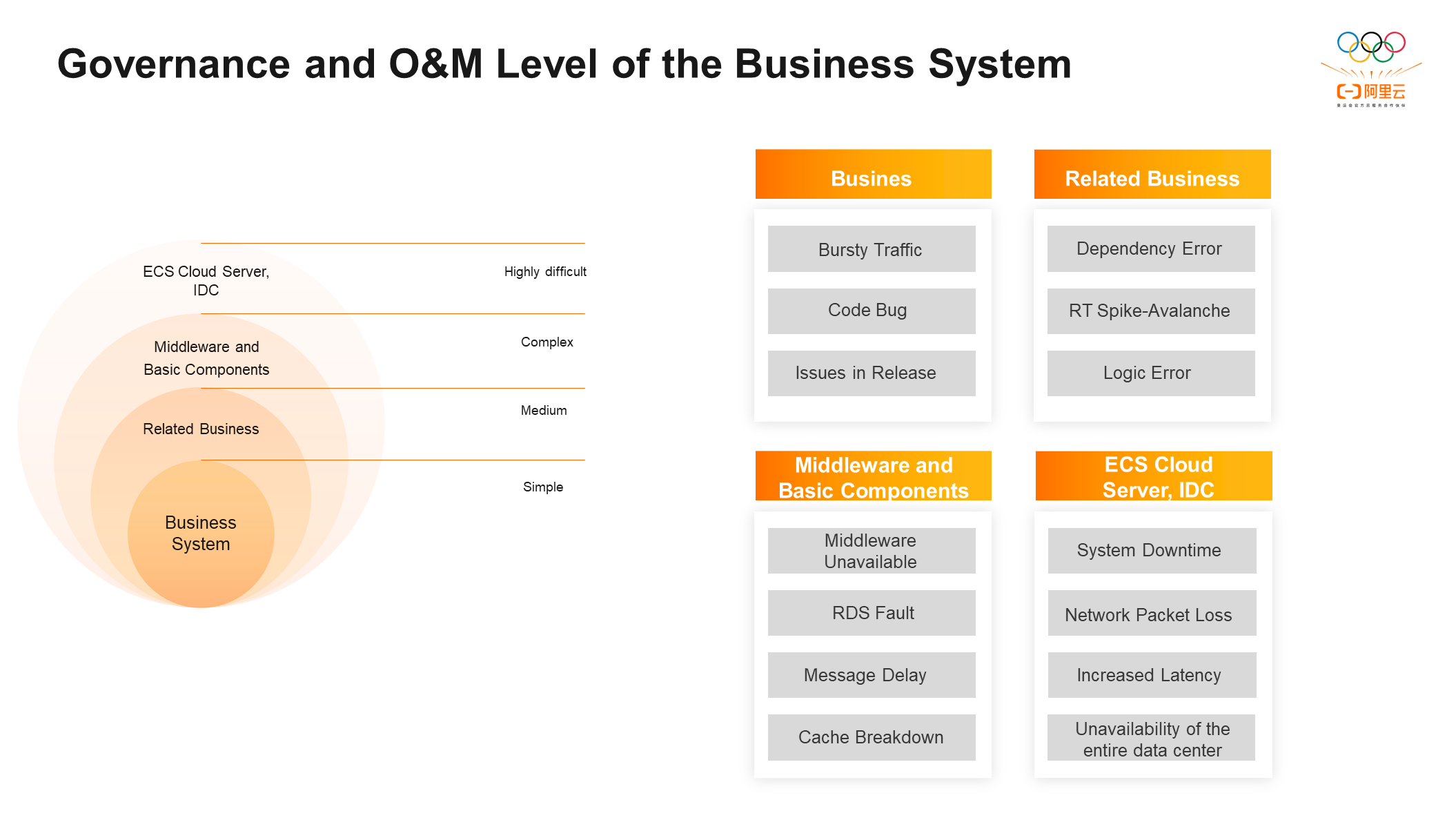

The governance and O&M of a business system can be divided into four layers:

① Business Systems: The systems that business developers know best and work on most often (for example, the code they develop).

② Related Business: Second-party dependencies developed by the same company or department.

③ Middleware and Basic Components: Developers generally do not pay attention to this layer.

④ Infrastructure Layer: O&M personnel are familiar with it, and developers are hardly involved in O&M at this layer.

Each technical layer will face different problems:

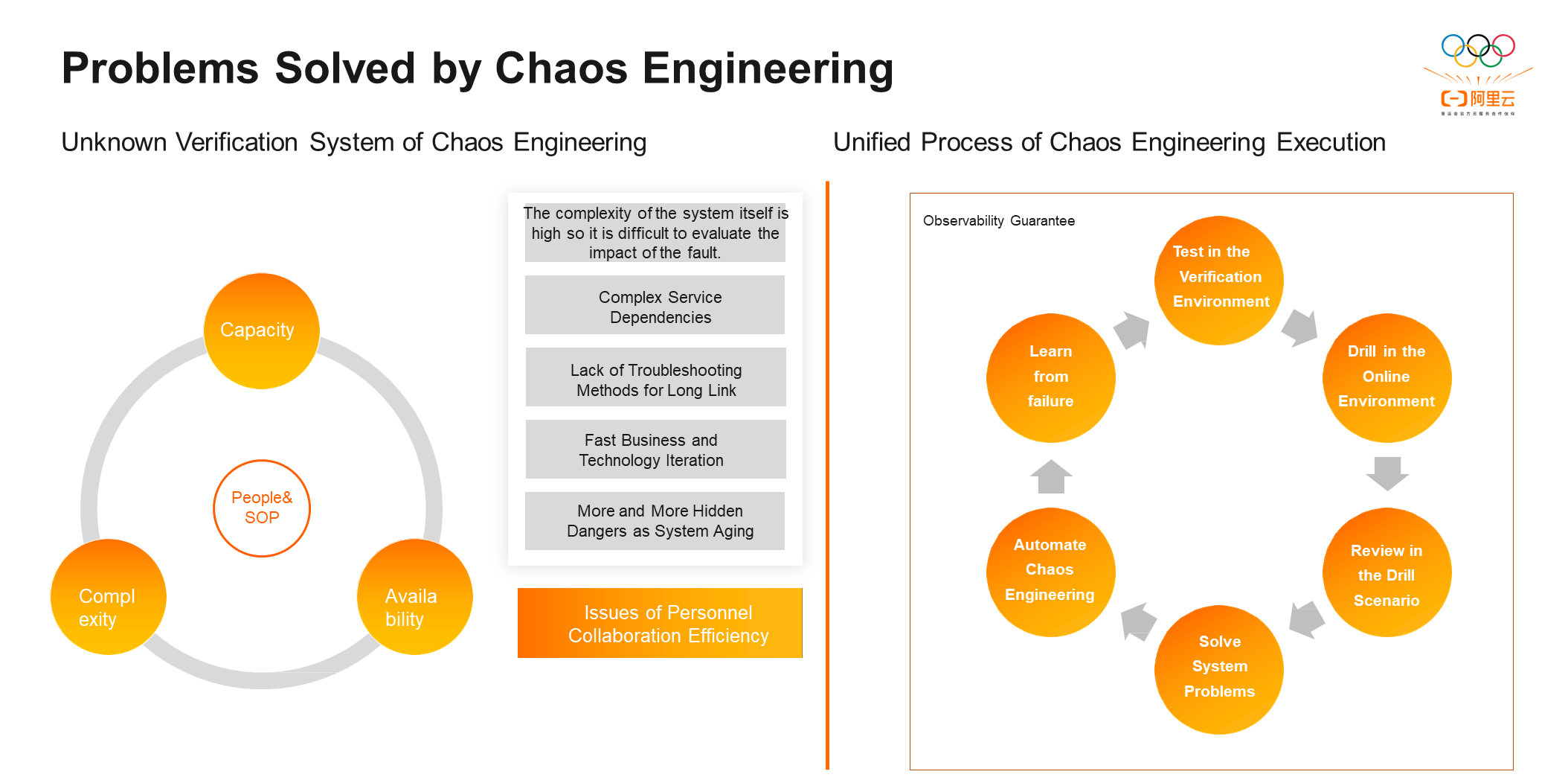

We introduced chaos engineering to solve the unknown problems of the different technical layers above and to verify the system from four aspects:

① Capacity: Only after the system capacity is determined can we specify how many users or calls the system can withstand.

② System Complexity: As the system ages, it will have more hidden dangers. The updating and iteration of businesses and technologies will also lead to more technical debts, longer links, and fewer troubleshooting methods.

③ Availability: There is no 100% available system in the world, and any system may go wrong. Availability is how the system will behave when it goes wrong.

④ Efficiency of People and Process Collaboration: This is the most unstable factor in system fault handling.

All drill scenarios in chaos engineering are derived from faults. After a fault has been troubleshot, first test it in the verification environment. Mature cases can then be tested in online drills. After a drill is completed, review the drill process and summarize the problems that need to be solved. Finding what can be improved from the drill, and improving it, is the final goal of chaos engineering. Finally, all stable cases are gathered into an automated case set for chaos engineering. The case set runs stably on the online system and is used for performance and complexity regression, to prevent changes from affecting system stability or availability.

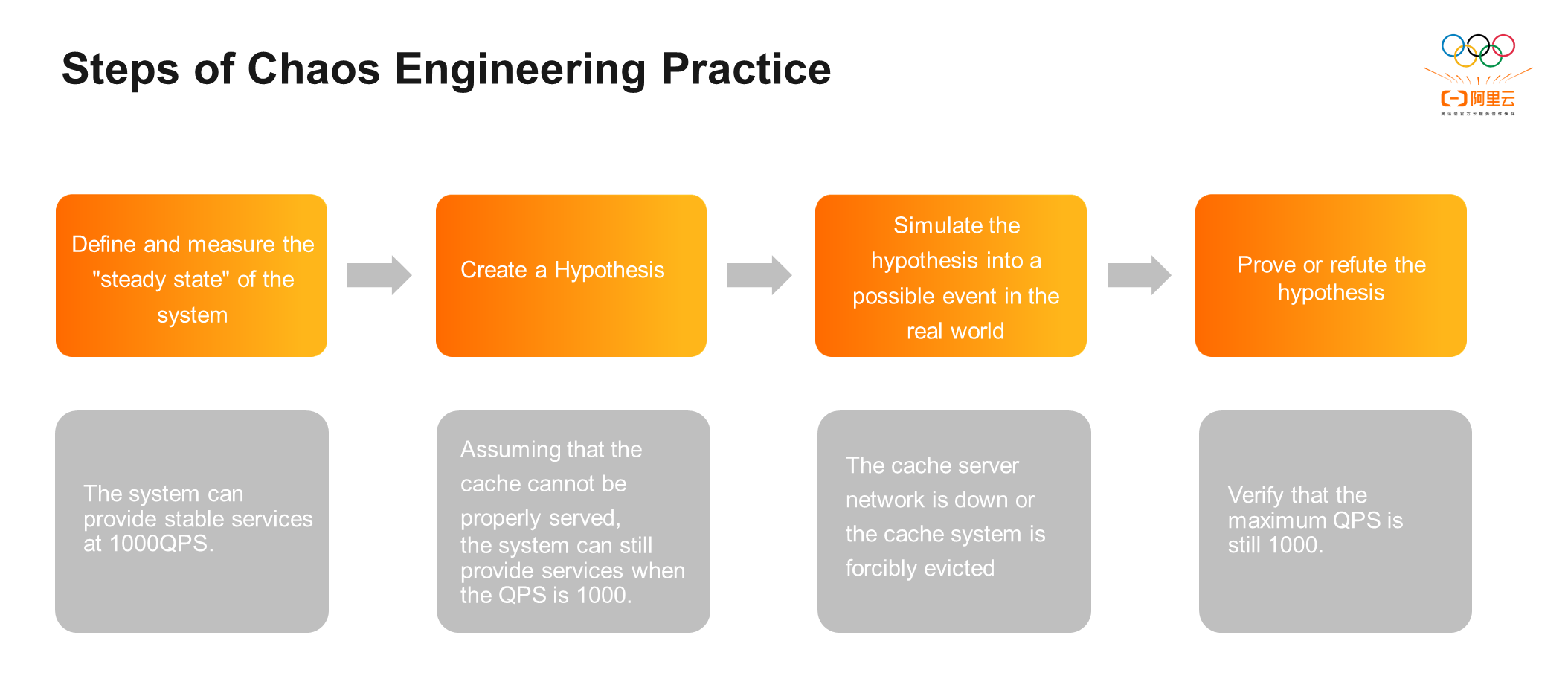

The practice of chaos engineering is divided into four classic steps:

Step 1: Define and Measure the Steady State of the System: Make clear what kind of requests the system can support under what conditions, or how the system performs when it runs stably. For example, the system can provide stable services at 1000 QPS.

Step 2: Create a Hypothesis: Find variables that may affect the stability of the system in its steady state. For example, hypothesize that the system can still provide services at 1000 QPS even when the cache cannot serve requests properly.

Step 3: Simulate the Hypothesis as a Possible Event in the Real World: For example, if the cache fails to serve properly, the following events may be the cause in real life: the cache server network is down, or the cache is forcibly evicted.

Step 4: Prove or Refute the Hypothesis: For example, after the cache fails to serve properly, check whether the system can still reach 1000 QPS. If it can, the system is stable in this scenario. If it cannot (for example, the system becomes unstable at 200 QPS), you have found a system bottleneck and can address it.
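The four steps above can be sketched as a small experiment harness. This is a minimal illustration, not the team's actual tooling: `SteadyState`, `run_experiment`, and the toy cache model (1000 QPS with the cache, 200 QPS without it) are hypothetical names and numbers chosen to mirror the example in the text.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SteadyState:
    """Step 1: the measured steady state, e.g. 1000 QPS served stably."""
    target_qps: float


def run_experiment(
    steady: SteadyState,
    inject_fault: Callable[[], None],
    recover: Callable[[], None],
    measure_max_qps: Callable[[], float],
) -> dict:
    """Steps 2-4: inject the fault, measure, then prove or refute the hypothesis."""
    inject_fault()  # Step 3: turn the hypothesis into a real-world event
    try:
        observed = measure_max_qps()
    finally:
        recover()  # always undo the fault, even if the measurement fails
    return {
        "observed_qps": observed,
        "hypothesis_holds": observed >= steady.target_qps,  # Step 4
    }


# Hypothetical stand-ins for a real fault injector and load generator.
state = {"cache_up": True}


def cache_down() -> None:
    state["cache_up"] = False


def cache_restore() -> None:
    state["cache_up"] = True


def fake_measure() -> float:
    # Toy model (assumption): without the cache the system tops out at 200 QPS.
    return 1000.0 if state["cache_up"] else 200.0


result = run_experiment(SteadyState(1000), cache_down, cache_restore, fake_measure)
print(result)
```

In this toy run the hypothesis is refuted, which is the useful outcome: the cache is a bottleneck worth managing. A real harness would replace the three callables with an actual fault injector and load generator.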

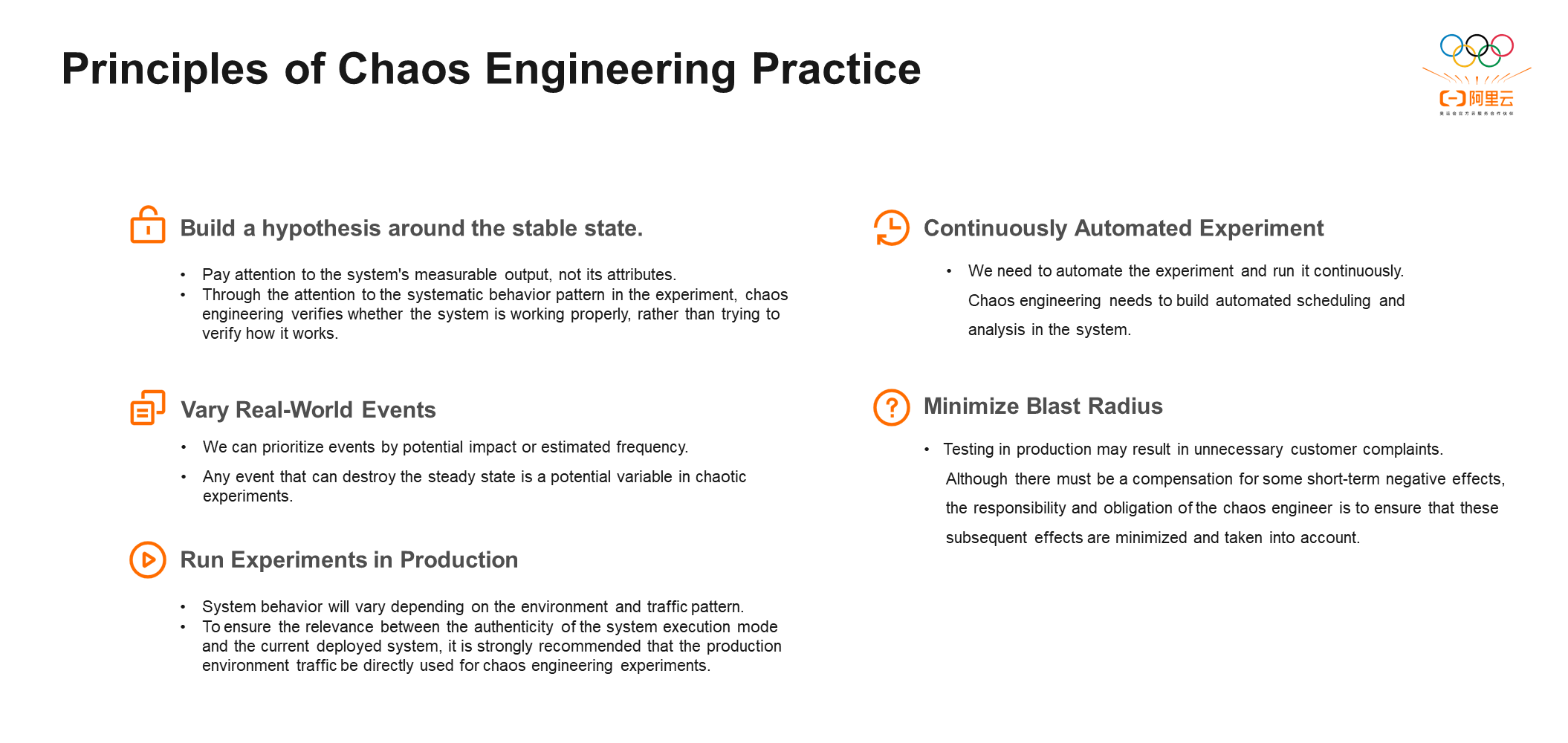

There are five principles in the practice of chaos engineering:

① Build a Hypothesis around Stable State Behavior: Chaos engineering should focus on whether the system can normally work when unstable events occur rather than trying to verify how the system works.

② Vary Real-World Events: First, experiment with events that can really happen and do not spend effort on impossible ones. Second, enumerate as many potential problems in the system as possible and prioritize the events that are highly probable or have already occurred.

③ Run Experiments in the Production Environment: In the early days of the elastic computing system, experiments could not be conducted in production because system stability was low and observability was poor, so the impact range of a fault injected online could not be observed. However, experiments outside production have limits. For example, in an environment where code is isolated but data is not, it is impossible to detect the real bottleneck of the system, because any small change and any element that differs from the online environment affects the accuracy of the final result. Therefore, we advocate conducting experiments in the production environment to verify as fully as possible how the system performs when problems occur.

④ Automate Experiments to Run Continuously: Treat performance as part of regression. This requires automated performance regression in addition to functional regression.

⑤ Minimize Blast Radius: When observability is strong enough, control the possible impact of the drill on the system. The purpose of the drill should be to verify the weak points of the system, not to completely crush the system. Therefore, it is necessary to control the range of the drill, minimize the impact, and try not to cause an excessive impact on online users.
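One common way to keep the blast radius small is an automatic stop condition that watches an observability signal during the drill. The sketch below is an assumption, not something described in the original: it stops the drill once the error rate stays above a threshold for several consecutive samples. The function name, the 5% threshold, and the three-sample window are all illustrative.

```python
from typing import Optional, Sequence


def abort_index(
    error_rates: Sequence[float],
    threshold: float = 0.05,
    consecutive: int = 3,
) -> Optional[int]:
    """Return the sample index at which the drill should stop, or None.

    The drill stops once `consecutive` samples in a row exceed `threshold`,
    so a single noisy sample does not end the experiment early.
    """
    streak = 0
    for i, rate in enumerate(error_rates):
        streak = streak + 1 if rate > threshold else 0
        if streak >= consecutive:
            return i
    return None


# Illustrative error-rate samples collected while a fault is injected.
samples = [0.01, 0.06, 0.02, 0.07, 0.08, 0.09, 0.10]
print(abort_index(samples))
```

Requiring a streak rather than a single breach is a design choice: it trades a slightly later stop for fewer false aborts.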

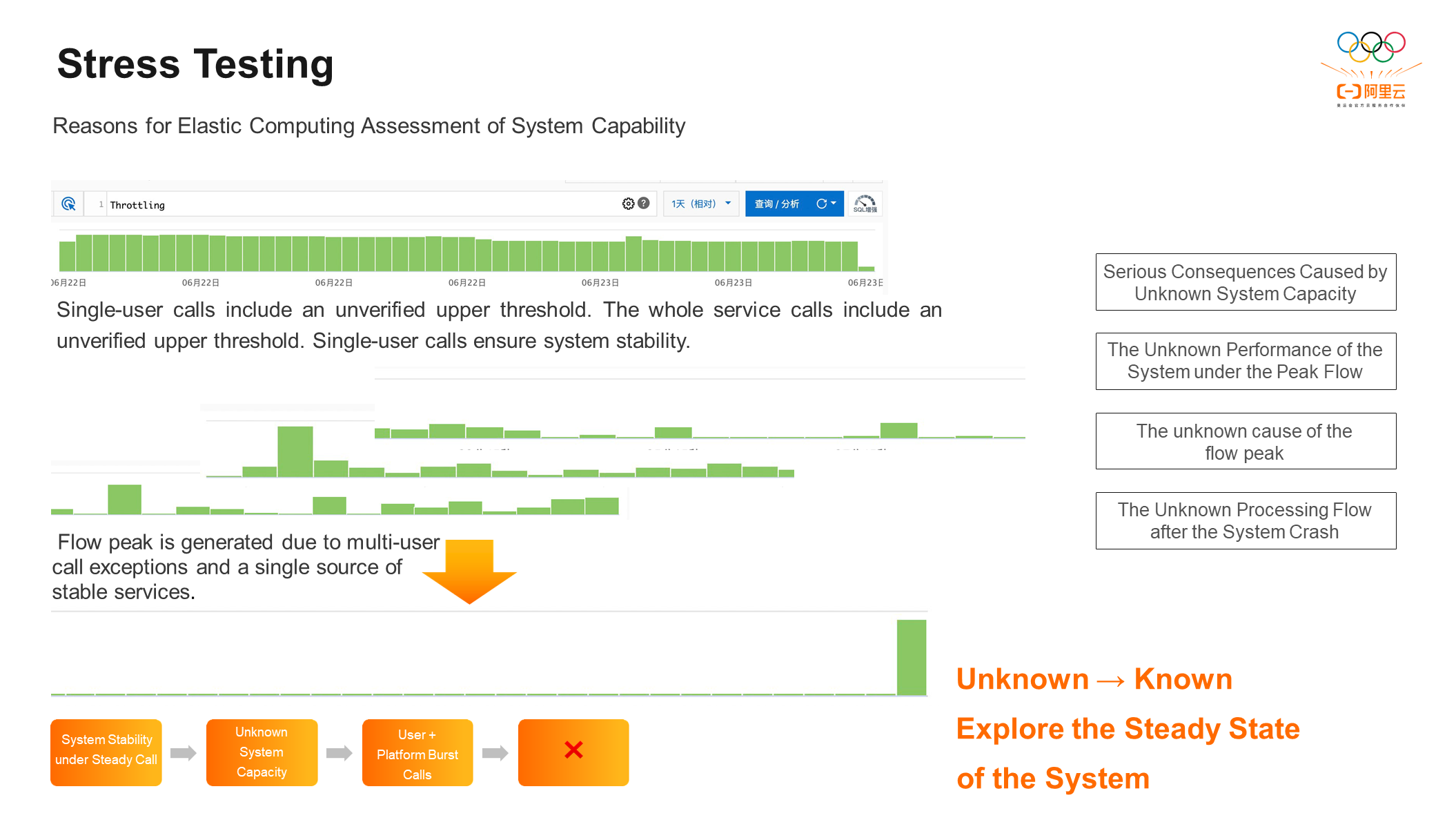

When performing stress testing, we set a per-user QPS upper limit for each API, that is, flow control. The call stack of all users is relatively stable in stress testing scenarios, but there are still unstable elements in the system. First, flow control exists for users, but it has not been verified. Only when users call within the threshold does the function run normally without putting too much pressure on the system. Second, the capacity of a single interface or of the whole application is unknown. We only know that the system stays stable under calls from a single user.

When call peaks from different users arriving through the same call source overlap, the backend can come under excessive stress and fail at peak.

The process above can be summarized into four steps:

Step 1: Establish a relatively stable system.

Step 2: Identify the potential problem: the system capacity is unknown.

Step 3: Users trigger an event that can happen in the real world (burst calls from different users on the same platform).

Step 4: The conclusion is that the system cannot withstand the pressure, which eventually leads to failure.
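The overlap of per-user peaks can be illustrated with simple arithmetic. The numbers below are assumptions for illustration only: a per-user limit of 100 QPS and a backend capacity of 1000 QPS. Neither figure comes from the original system.

```python
def aggregate_peak_qps(per_user_limit_qps: int, users_at_peak: int) -> int:
    """Worst-case backend load when every user hits their own limit at once."""
    return per_user_limit_qps * users_at_peak


def max_users_at_peak(backend_capacity_qps: int, per_user_limit_qps: int) -> int:
    """How many users can peak simultaneously before the backend is exceeded."""
    return backend_capacity_qps // per_user_limit_qps


PER_USER_LIMIT = 100     # assumed per-user flow-control threshold (QPS)
BACKEND_CAPACITY = 1000  # assumed total backend capacity (QPS)

for users in (1, 10, 15):
    peak = aggregate_peak_qps(PER_USER_LIMIT, users)
    status = "ok" if peak <= BACKEND_CAPACITY else "overloaded"
    print(f"{users:>2} users at peak -> {peak} QPS ({status})")
```

Each user stays within their own limit in all three cases, yet the third case exceeds the backend. Per-user flow control alone therefore says nothing about total capacity.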

Four problems need to be solved in the preceding process: the serious consequences of unknown system capacity, the unknown performance of the system under peak traffic, the unknown cause of the traffic peak, and the unknown handling process after a system crash. To explore the stability of the system, the unknown must be turned into the known. Therefore, we introduced stress testing, which is mainly divided into three steps.

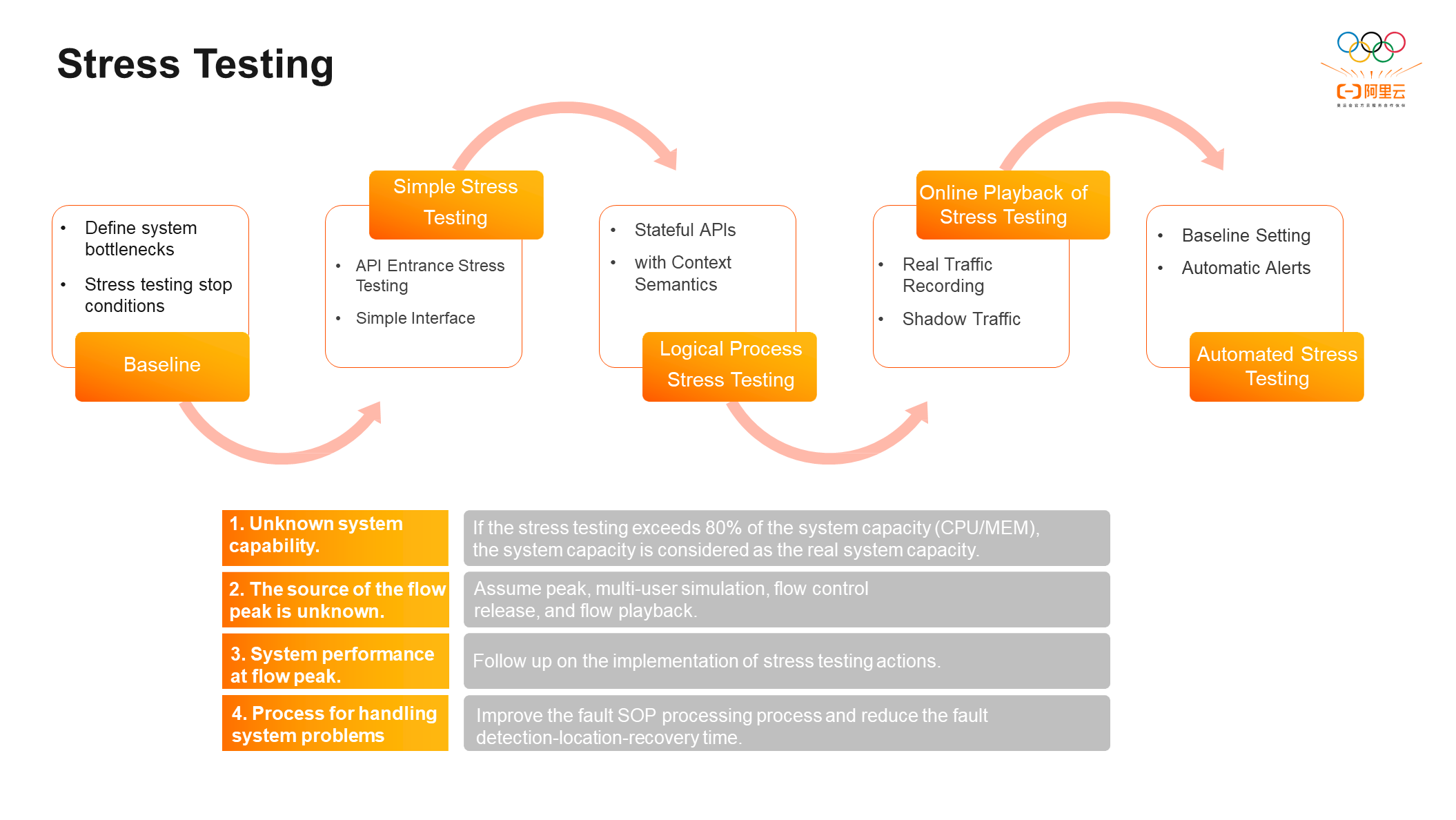

Step 1: Baseline: Define the system bottlenecks and the stop conditions for stress testing.

Step 2: Actual Stress Testing: APIs are generally used as the entry point for stress testing, which may be external APIs, internal APIs, or internal calls. There are three types of stress testing methods:

Step 3: Automate Stress Testing: Combine stable cases and cases that do little harm to the system into an automated case set, and set up automatic alerts based on the baselines. During automated stress testing regression, if an interface does not meet its predicted baseline, the system automatically raises an alert. This achieves system performance regression and finally solves the four problems mentioned above:

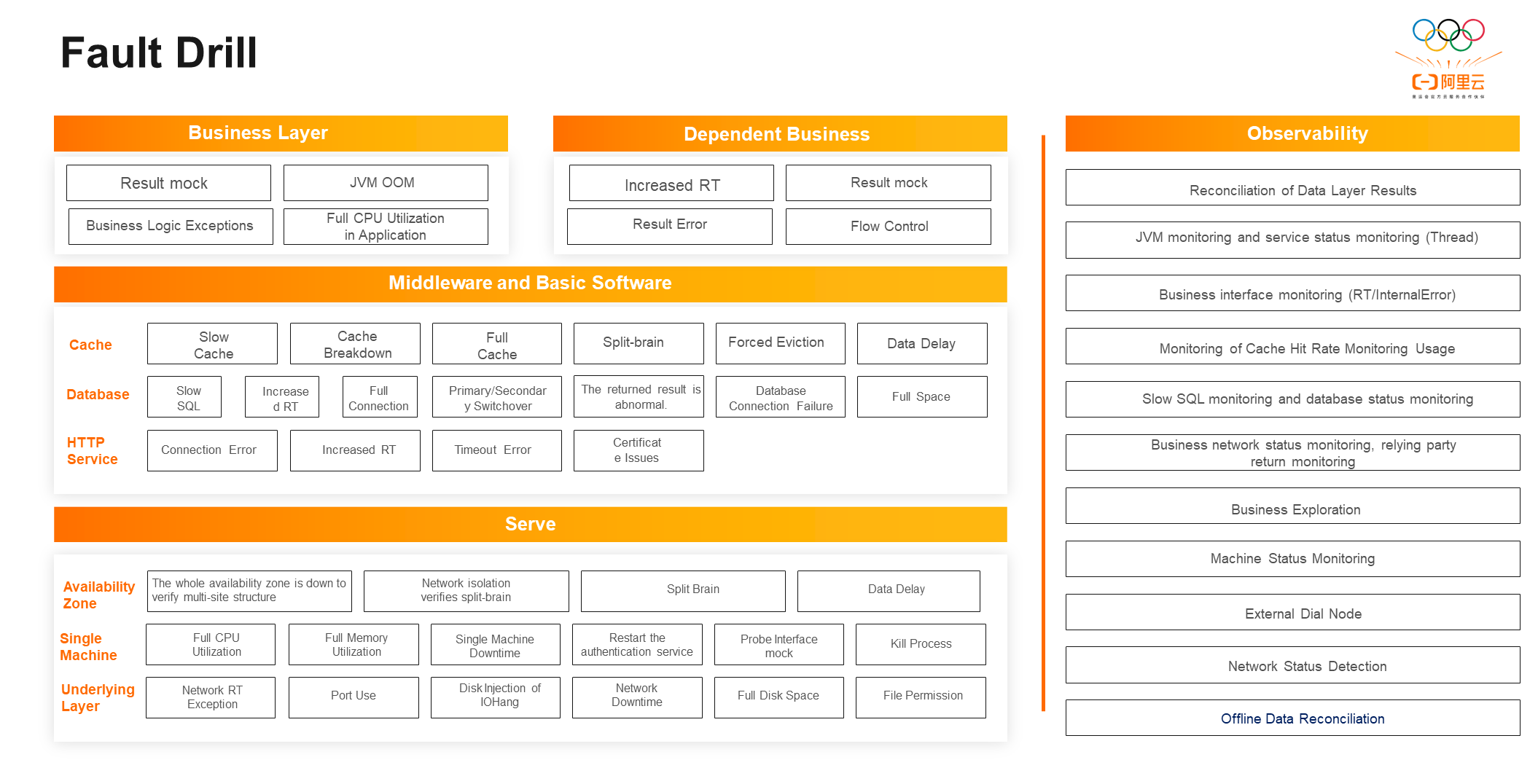
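The baseline-driven alerting in Step 3 can be sketched as follows. This is a minimal illustration under stated assumptions: the `Baseline` fields (minimum QPS and maximum P99 latency), the result format, and the API names `api_a` and `api_b` are hypothetical and are not the schema of PTS or any other tool.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Baseline:
    """Assumed baseline for one API: a throughput floor and a latency ceiling."""
    min_qps: float
    max_p99_ms: float


def check_regression(
    baselines: Dict[str, Baseline],
    results: Dict[str, Dict[str, float]],
) -> List[str]:
    """Compare stress test results with baselines and return alert messages."""
    alerts = []
    for api, base in baselines.items():
        got = results.get(api)
        if got is None:
            alerts.append(f"{api}: no stress test result recorded")
            continue
        if got["qps"] < base.min_qps:
            alerts.append(f"{api}: qps {got['qps']} below baseline {base.min_qps}")
        if got["p99_ms"] > base.max_p99_ms:
            alerts.append(f"{api}: p99 {got['p99_ms']} ms above baseline {base.max_p99_ms} ms")
    return alerts


# Hypothetical baselines and one nightly regression result.
baselines = {"api_a": Baseline(500, 200), "api_b": Baseline(300, 150)}
results = {
    "api_a": {"qps": 520, "p99_ms": 180},
    "api_b": {"qps": 250, "p99_ms": 160},
}
alerts = check_regression(baselines, results)
for line in alerts:
    print(line)
```

Treating a missing result as an alert is deliberate: a case that silently stops running would otherwise look like a pass.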

Fault drills and observability are inseparable. Only when observability is relatively complete can faults be injected into the online system with the blast radius under control.

Observability is divided into four layers:

Different fault drill methods are available for different layers. For example, mocks in the business layer require the participation of personnel from all business domains, and monitoring the business layer also requires attention to what happens inside the VM. For dependent businesses, pay more attention to increased RT, mocked results, error results, and so on. Problems in the middleware and basic software layer include slow cache, breakdown, slow MySQL, connection failures, and so on. As an infrastructure provider, elastic computing pays more attention to the server layer. Whenever the control system goes online in a region, it must go through multi-AZ and downtime drills to verify its multi-active availability. As a result, we have a wealth of drill experience at the server layer.

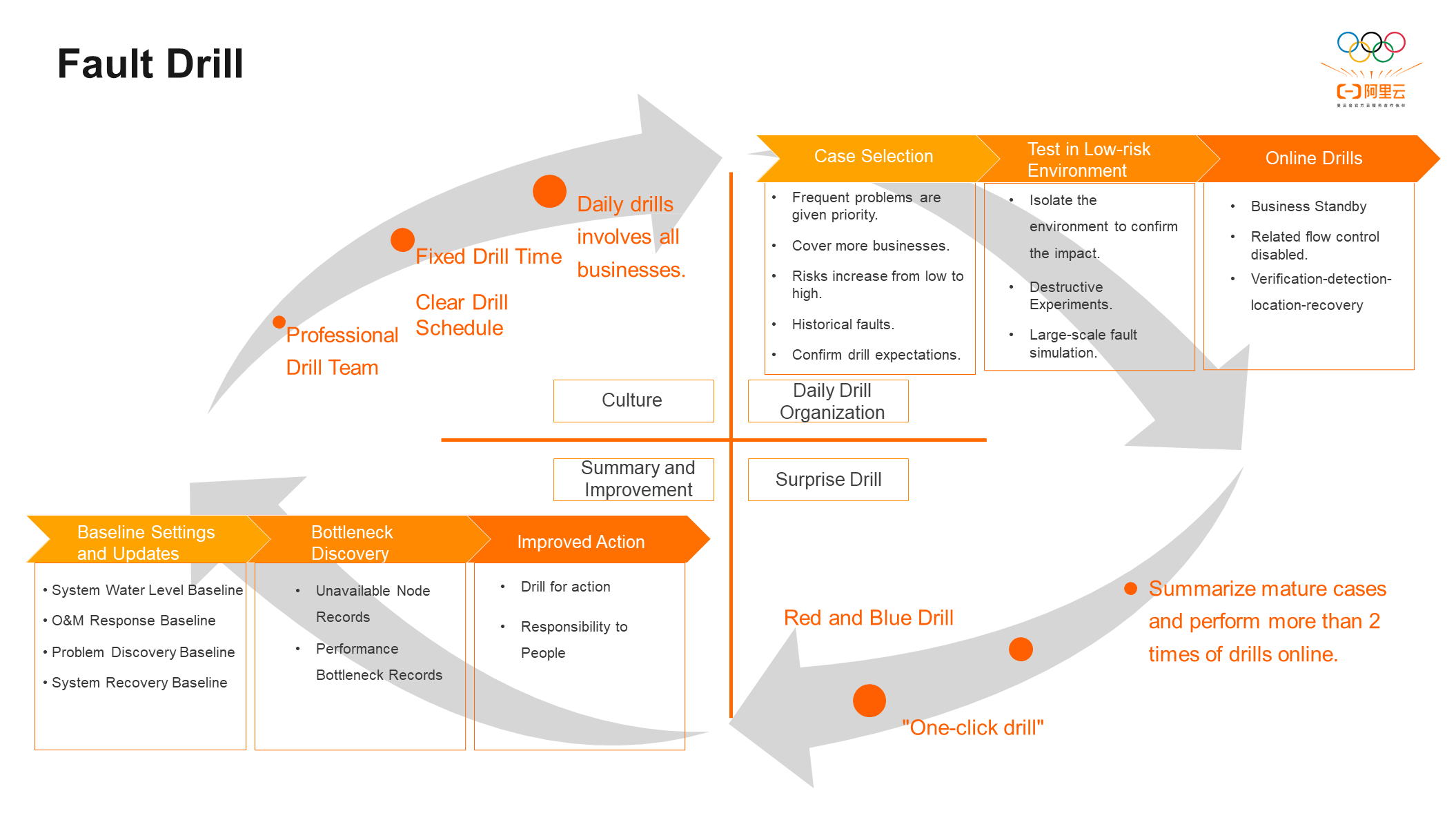

The procedure for a fault drill is listed below:

Step 1: Get Everyone to Accept Fault Drills: Fault drills deliberately provoke system avalanches and instability events, which conflicts with developers' day-to-day philosophy, so the first task is to get everyone to accept them. A professional drill team arranges a fixed drill time and a clear drill schedule, and all businesses take part in the drill.

Step 2: Organize Daily Drills: When selecting events for daily drills, prioritize frequent problems and proceed from low risk to high risk. Try the drill in a low-risk environment, confirm the impact in an isolated environment, and carry out destructive experiments and large-scale fault simulation in a low-risk environment. For example, fault drills whose impact is completely uncontrollable must be carried out in a low-risk environment, and only relatively stable cases or cases with confirmed impact can be drilled online. Online drills should generally follow the detection-location-recovery process.

Step 3: Conduct Unannounced Inspections: Unannounced inspections are divided into the red and blue team drill and the one-click drill. The red and blue team drill is relatively conservative. Personnel familiar with the drill cases are selected from the drill team to act as the Red team and inject problems into the system from time to time. All other business personnel form the Blue team, and the drill measures how long they take to detect, locate, and recover from the problems. The one-click drill is more radical. Generally, the business leader injects faults directly and tests the fault handling process of all business personnel. Only systems with very high maturity can achieve the goal of one-click drills.

Step 4: Summarize and Improve: Summary and improvement are the ultimate goals of fault drill and stress testing in chaos engineering. Determine the system limit through fault drills and stress testing (including the system water level limit, operation and maintenance response limit, problem discovery limit, and system recovery limit), clarify the system performance and problem handling process, record unavailable nodes and performance bottlenecks, extract unavailable nodes as improvement targets, and assign responsibilities to specific personnel for system stability improvement.
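The detection-location-recovery times measured in drills can be computed from four timestamps. This is a minimal sketch, assuming the drill records when the fault was injected, detected, located, and recovered. The function name and the example times are illustrative.

```python
from datetime import datetime
from typing import Dict


def drill_timings(
    injected: datetime,
    detected: datetime,
    located: datetime,
    recovered: datetime,
) -> Dict[str, float]:
    """Split one drill into detection, location, and recovery durations (seconds)."""
    return {
        "detection_s": (detected - injected).total_seconds(),
        "location_s": (located - detected).total_seconds(),
        "recovery_s": (recovered - located).total_seconds(),
        "total_s": (recovered - injected).total_seconds(),
    }


# Illustrative timeline of a single drill.
timings = drill_timings(
    injected=datetime(2022, 1, 1, 10, 0, 0),
    detected=datetime(2022, 1, 1, 10, 2, 0),
    located=datetime(2022, 1, 1, 10, 10, 0),
    recovered=datetime(2022, 1, 1, 10, 15, 30),
)
print(timings)
```

Tracking the three phases separately shows where the limit lies: a long detection phase points at observability, a long location phase at troubleshooting tools, and a long recovery phase at the recovery process itself.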

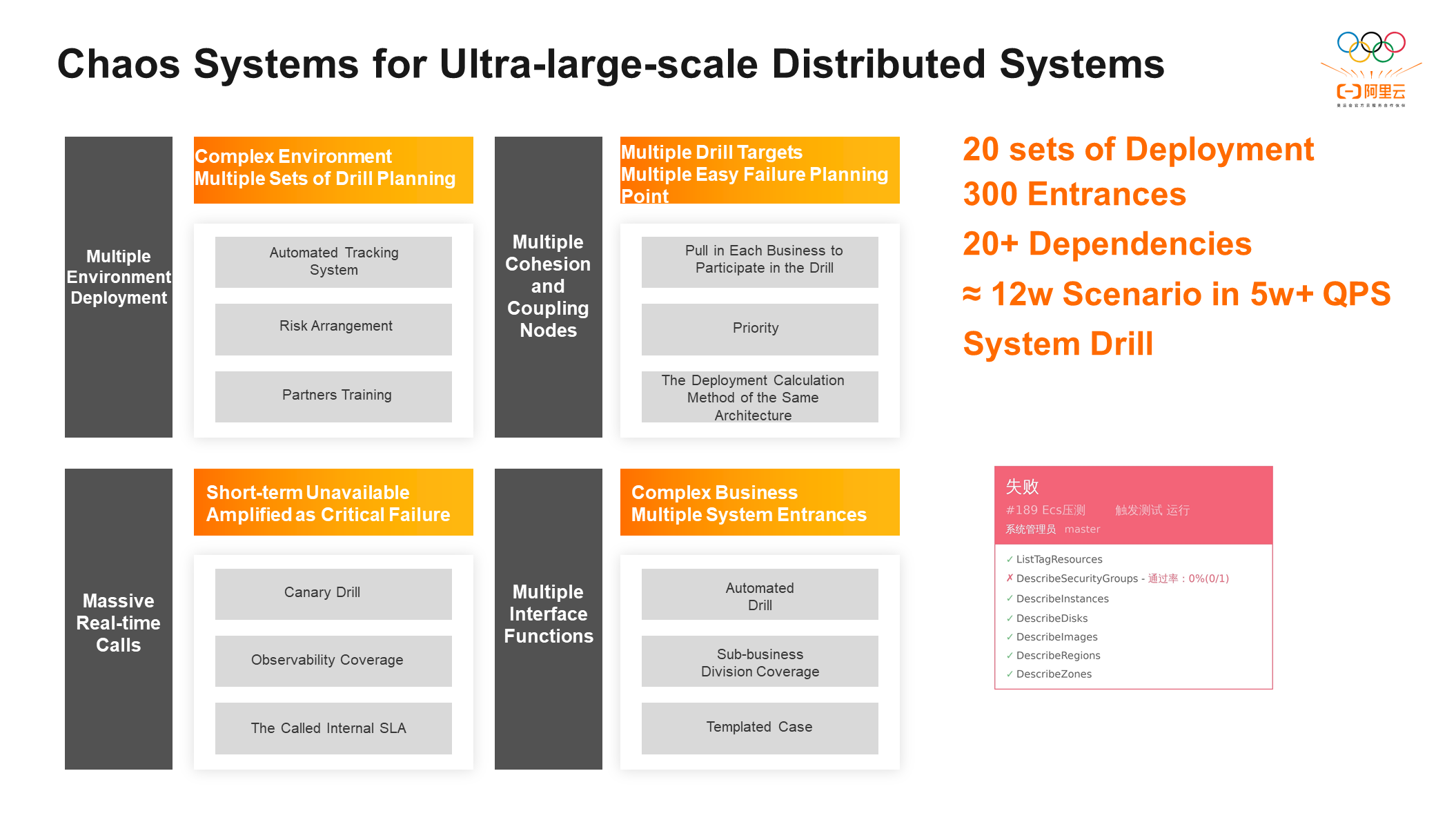

How does chaos engineering in the elastic computing system, a super-large-scale distributed system, differ from chaos engineering in a simple system?

① Multiple Environment Deployment: The elastic computing system includes 20 sets of highly available deployments and multiple sets of planned environments, so we want an automated tracking system that can track case coverage and region coverage automatically.

② Many Dependencies: There are many cohesive and coupled nodes, many dependencies that need to be planned, and many business parties that need to be brought in. The solution is to involve more business domains and schedule the drills according to priority.

③ Massive Real-Time Calls: The system carries very heavy traffic during the drill, and the traffic cannot be paused even briefly. Therefore, if part of the system becomes unstable during the drill, the instability is amplified into a very serious fault online. You can use canary drills, SLA, and degradation rules to avoid the problem.

④ Multiple API Functions: We want to implement case coverage through automated drills. Automated regression cases can run against online systems every day to catch changes in system performance.

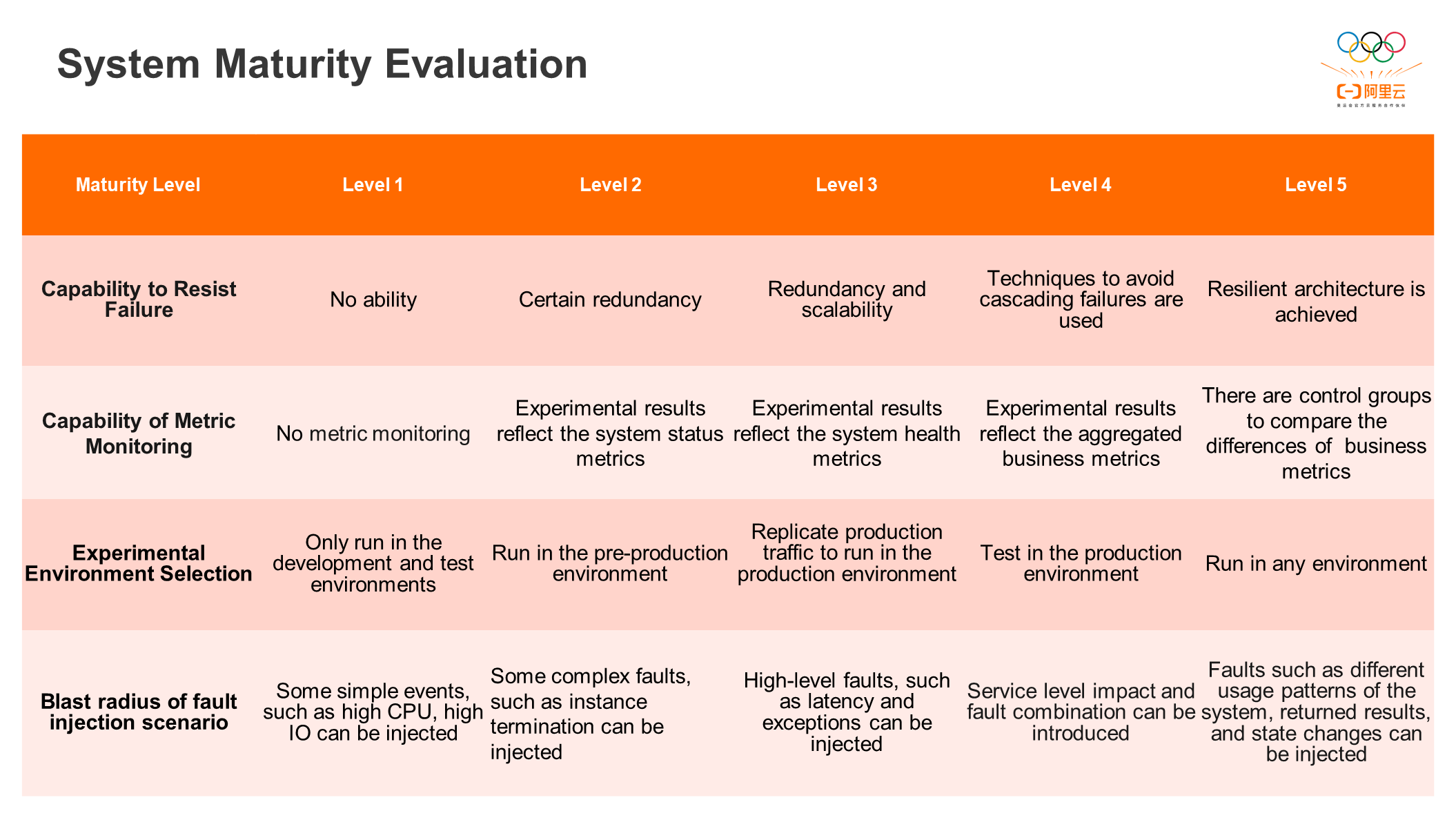

The maturity of a chaos engineering system can be divided into five levels:

Level 1: A start-up system deployed in a single environment and a single region. Tests can only be conducted in development and test environments.

Level 2: It provides a preliminary multi-AZ deployment and can inject slightly complex faults.

Level 3: Drills in both the production environment and the canary environment can be conducted.

Level 4: A relatively mature system that can run experiments in a production environment and perform relatively mature fault injection or related business fault injection.

Level 5: System faults and data plane faults can be injected into various environments. This is also the target level for a system.

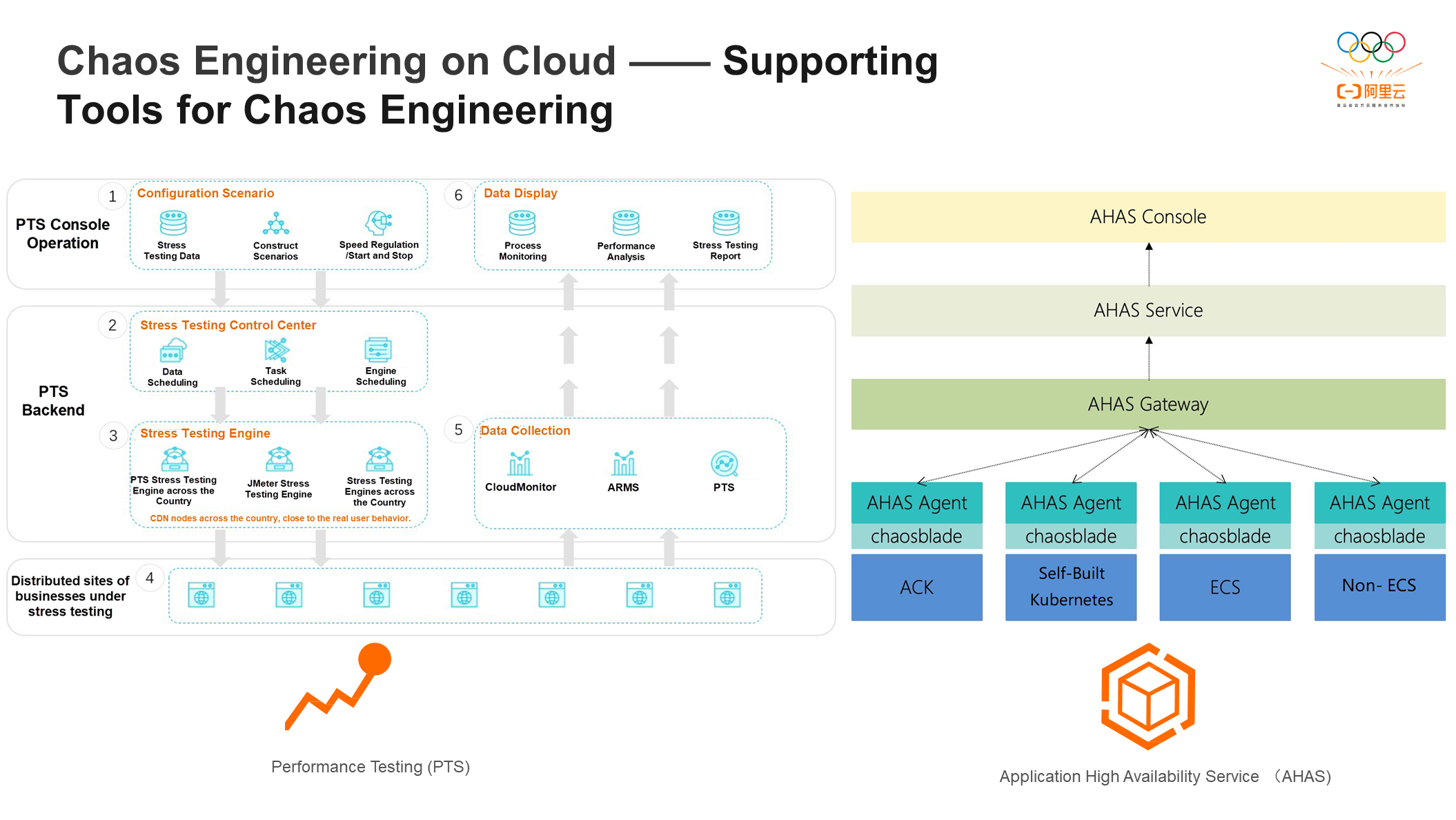

Many supporting tools are available on the cloud.

For stress testing, PTS can help configure scenarios, control the progress of stress testing, and simulate user requests through qualified nodes deployed nationwide to get closer to real user behavior. Observability during stress testing can be provided by CloudMonitor, ARMS, and PTS monitoring, so the system stress can be observed while the test is running.

The fault injection platform AHAS can inject problems at various levels, including the application layer, the underlying layer, and the physical machine layer.

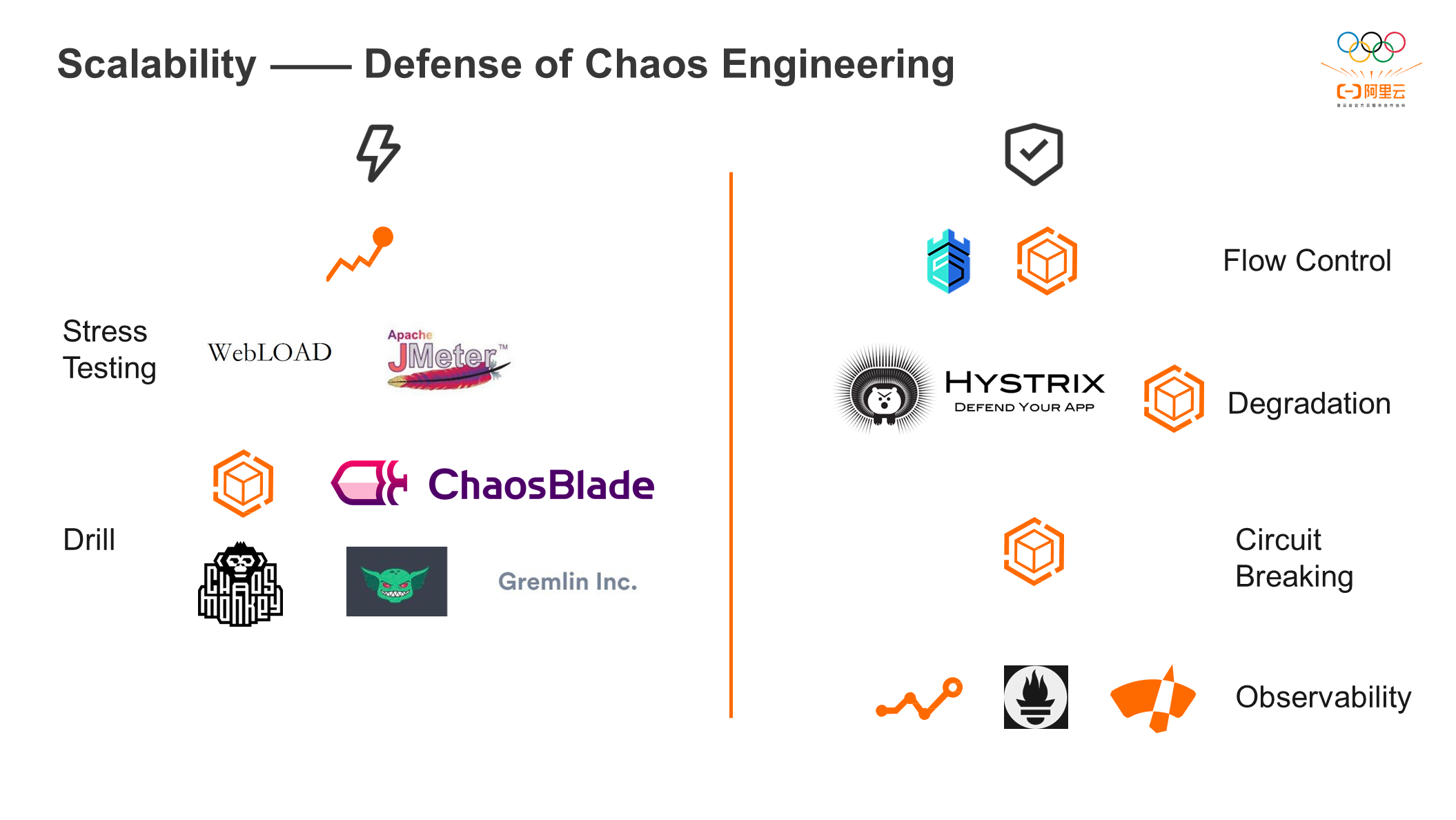

Stress testing and drills must be accompanied by defenses. The main tools for stress testing include PTS and JMeter. Prevention and control tools for stress testing, such as Sentinel and AHAS, are available with both internal and external documentation. If a system problem occurs, you can use Hystrix and AHAS to degrade services. AHAS, ChaosBlade, and ChaosMonkey are available for drills. You can use AHAS for circuit breaking in the fault scenarios of drills. In addition, some observability components are provided on the cloud, including ARMS and CloudMonitor. Prometheus can be used for observability both on and off the cloud.

Q1: Is chaos engineering only useful for enterprises with complex distributed systems? Is it necessary for small enterprises? Is there a framework or tool that actually works?

A: First, list the risks that your system may encounter. For example, a website application carries fewer risks: it has few related businesses or only shallow coupling, it uses little middleware, and its technical components are covered by ECS or the cloud service vendor. In that case, chaos engineering is needed only for scenarios such as traffic bursts or code bugs, and few drills are required.

Chaos engineering requires few changes at the code layer, but it does take a lot of time and effort. AHAS can be used in most scenarios. It provides a complete solution for fault injection and for how the system performs flow control, degradation, and circuit breaking after fault injection, which reduces the time and effort required.

Whether it is necessary for small enterprises to do chaos engineering needs to be evaluated according to the specific situation. If the system may have hidden dangers in this respect, it is recommended to do chaos engineering. At the same time, we recommend that most cloud companies do chaos engineering to identify the weak points of the system.
