The best way to reduce faults is to make them happen more often. By repeatedly reproducing faults within a controlled scope or environment, we can continuously improve the fault tolerance and resilience of a system.

How many steps does it take to run an effective chaos engineering experiment? Just two.

Step 1: Go to the ChaosBlade project page.

Step 2: Download the release package to set up your fault drill tool.

A highly available architecture is the core of service stability. After years of running large-scale Internet services and the Double 11 Global Shopping Festival, Alibaba has accumulated many core high-availability technologies. These technologies, including end-to-end stress testing, online traffic management, and fault drills, have been made available to the public through open source releases and cloud-based services. Enterprises and developers can build on this experience to improve development efficiency and shorten their business development cycles.

For example, Alibaba Cloud Performance Testing Service (PTS) enables you to build an end-to-end stress testing system, and the open source component Sentinel allows you to implement throttling and degradation. After six years of refinement and practice, including tens of thousands of online drills, Alibaba has condensed its ideas and practices in the fault drill field into a chaos engineering tool and released it as an open source project: ChaosBlade.

To access the project and experience the demo, click here.

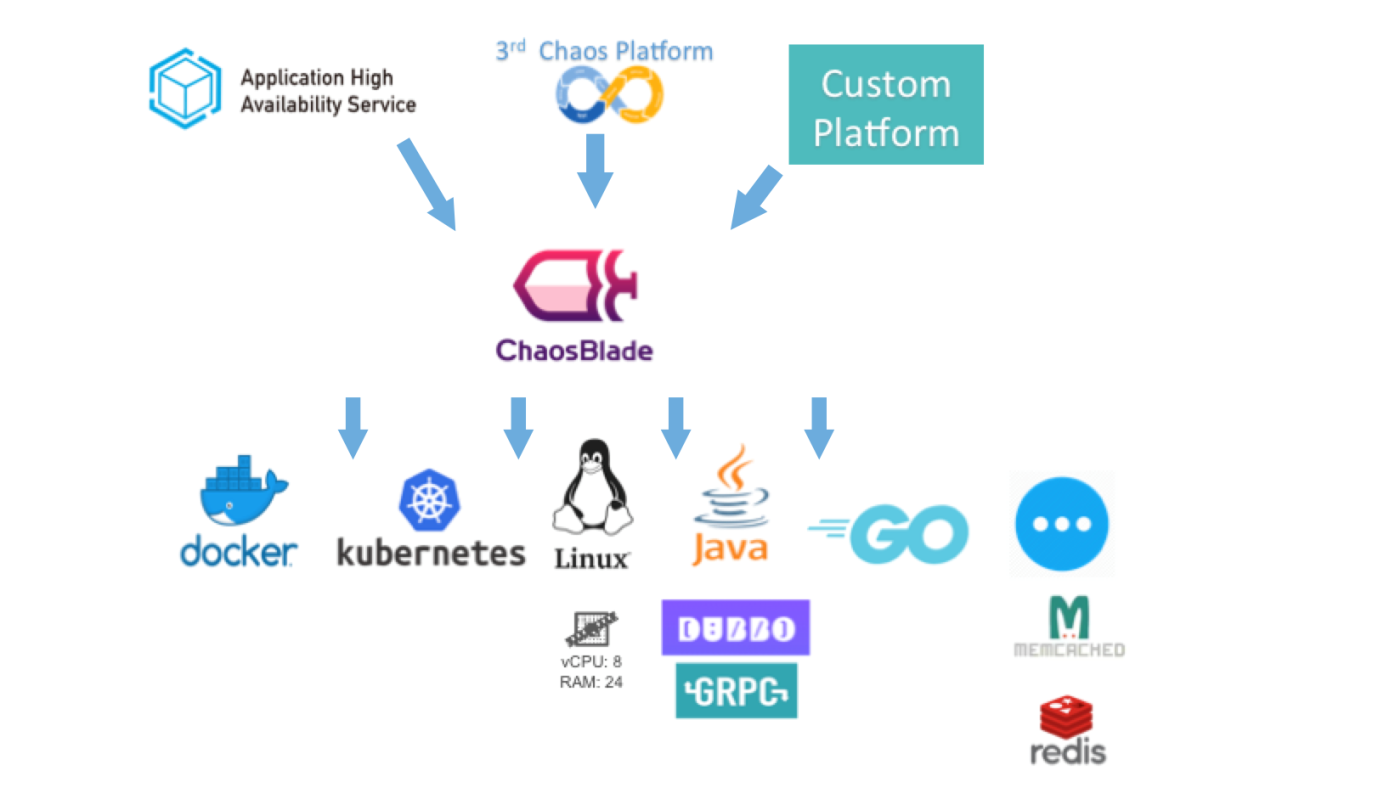

ChaosBlade is a chaos engineering tool that follows the principles of chaos engineering experiments and provides a rich set of fault scenarios to help you improve the fault tolerance and recoverability of distributed systems. It can inject faults at the underlying layers, and it is simple to use, non-intrusive, and highly extensible.

ChaosBlade is developed based on the Apache License 2.0 protocol and it currently has two repositories: chaosblade and chaosblade-exe-jvm.

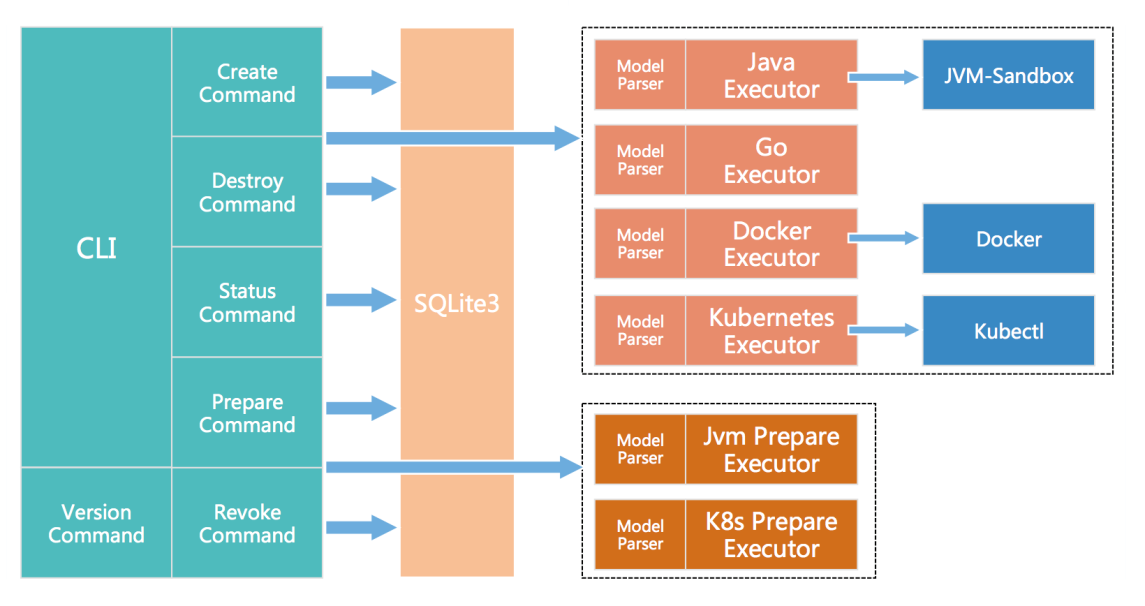

The chaosblade repository contains command line interfaces (CLIs), basic resources implemented by using Golang, and container-related chaos experiment executors. The chaosblade-exe-jvm repository is a ChaosBlade executor for chaos experiments on applications that are running on Java virtual machines (JVMs).

Later on, the ChaosBlade community will add chaos experiment executors for other languages such as C++ and Node.js.

As chaos engineering attracts more attention, it has gradually become an essential means of exploring system vulnerabilities and ensuring high system availability. However, the industry as a whole is still evolving rapidly and has no uniform standards for best practices or frameworks. Chaos experiments can introduce business risks, and a lack of experience and tools further discourages DevOps personnel from adopting chaos engineering.

Even before ChaosBlade was released, there were already many outstanding open source chaos engineering tools, each of which addresses the problems of a particular field effectively. However, many of them are unfriendly to beginners, and some support only a few scenarios. These are the reasons why people find chaos engineering difficult to implement.

Drawing on years of chaos engineering practice, Alibaba Group has released the chaos engineering experiment tool ChaosBlade as an open source project to serve the following purposes:

ChaosBlade simulates faults such as delayed calls, unavailable services, and fully occupied resources. This allows you to check whether faulty nodes or instances are automatically isolated or stopped, whether traffic scheduling works, whether preventive measures are effective, and whether the overall QPS or RT is affected. On this basis, you can gradually widen the range of faulty nodes to verify whether throttling, degradation, and circuit breaking work. By increasing the number of faulty nodes until a service request times out, you can estimate the bottom line of the system's fault tolerance.
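The last step, increasing the number of faulty nodes until a request times out, can be pictured as a simple search loop. The following is a minimal Python sketch under stated assumptions: `probe` is a hypothetical callback that would inject faults into the given number of nodes, send a test request, and return the observed response time. Here it is replaced by a toy queueing model so that the example runs on its own. None of these names come from ChaosBlade.

```python
from typing import Callable


def fault_tolerance_floor(
    total_nodes: int,
    timeout_s: float,
    probe: Callable[[int], float],
) -> int:
    """Return the largest number of faulty nodes the system survives.

    `probe(faulty)` is a hypothetical hook: it should inject faults into
    `faulty` nodes, issue a request, and return the response time in seconds.
    """
    tolerated = 0
    for faulty in range(1, total_nodes + 1):
        if probe(faulty) > timeout_s:
            break  # first timeout: the previous count is the bottom line
        tolerated = faulty
    return tolerated


def toy_probe(total_nodes: int, qps: float, per_node_capacity: float,
              base_rt_s: float) -> Callable[[int], float]:
    """Toy stand-in for a real drill: response time grows as the
    remaining healthy nodes approach saturation."""
    def probe(faulty: int) -> float:
        healthy = total_nodes - faulty
        if healthy == 0:
            return float("inf")  # no node is left to serve the request
        utilization = qps / (healthy * per_node_capacity)
        if utilization >= 1.0:
            return float("inf")  # overloaded: the request never completes
        return base_rt_s / (1.0 - utilization)
    return probe


if __name__ == "__main__":
    probe = toy_probe(total_nodes=10, qps=900, per_node_capacity=200,
                      base_rt_s=0.05)
    print(fault_tolerance_floor(10, timeout_s=0.4, probe=probe))
```

In a real drill, the toy model would be replaced by actual fault injection plus a measured request, and the loop would normally stop at the first timeout to limit the impact on the business.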

ChaosBlade simulates the termination of service pods and nodes and an increased pod resource load. This allows you to check whether the system stays available when faults occur, and to verify that the replica configuration, the resource limits, and the containers deployed in pods are reasonable.

ChaosBlade allows you to verify the effectiveness of a scheduling system by simulating a high and unbalanced load on upper-layer resources. It allows you to verify the fault tolerance of an application system by making the underlying distributed storage system unavailable, to verify whether scheduled jobs automatically migrate to an available node by making scheduling nodes unavailable, and to verify whether primary-backup failover works properly by simulating faulty primary or backup nodes.

To improve the accuracy and timeliness of monitoring alarms, ChaosBlade supports injecting faults into the system. This allows you to verify the accuracy of monitoring metrics, the completeness of monitoring dimensions, the reasonableness of alarm thresholds, the promptness of alarms, the correctness of alarm recipients, and the availability of notification channels.

You can randomly inject faults into the system to evaluate how well the responsible personnel handle emergencies, and to verify whether the fault reporting and handling procedures are reasonable. In this way, your team improves its ability to locate and solve problems through practice.

The chaos engineering experiment scenarios supported by ChaosBlade cover not only basic resources, such as full CPU usage, high disk I/O, and network latency, but also applications running on JVMs. Scenarios for applications running on JVMs include Dubbo call timeouts and exceptions, and cases where a specified method is delayed, throws an exception, or returns specific values. ChaosBlade also supports container-related experiments, such as terminating containers and pods. We will continue to add new experiment scenarios.

You run ChaosBlade through CLI commands. A user-friendly auto-suggest function helps beginners get started quickly. The command pattern follows a fault injection model that Alibaba Group has distilled from years of fault testing and drills. The commands are clear and easy to read, which lowers the barrier for beginners to run chaos engineering experiments.
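As a rough illustration of driving the CLI from a script, the sketch below wraps one experiment in a Python context manager so that the fault is always rolled back. It assumes that the `blade` binary is on `PATH`, that `blade create` prints a JSON object whose `result` field holds the experiment UID, and that `blade destroy <UID>` reverts the experiment. Check these assumptions against the release you downloaded. The `run` parameter is a hypothetical hook that lets you substitute a fake runner for dry runs.

```python
import json
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, List

Runner = Callable[[List[str]], str]


def run_blade(args: List[str]) -> str:
    """Run the blade CLI (assumed to be on PATH) and return its stdout."""
    done = subprocess.run(["blade", *args], capture_output=True,
                          text=True, check=True)
    return done.stdout


@contextmanager
def experiment(create_args: List[str],
               run: Runner = run_blade) -> Iterator[str]:
    """Create an experiment, yield its UID, and always destroy it afterwards."""
    reply = json.loads(run(["create", *create_args]))
    if not reply.get("success"):
        raise RuntimeError(f"fault injection failed: {reply}")
    uid = reply["result"]
    try:
        yield uid
    finally:
        run(["destroy", uid])  # roll back even if the observation code raises


if __name__ == "__main__":
    # Dry run with a fake runner; use the default runner on a real host.
    def fake(args: List[str]) -> str:
        print("blade", " ".join(args))
        return json.dumps({"code": 200, "success": True, "result": "abc123"})

    with experiment(["cpu", "fullload"], run=fake) as uid:
        print("observing the system under experiment", uid)
```

Putting the rollback in a `finally` clause keeps the blast radius bounded: the injected fault is removed even when the verification step itself fails.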

All ChaosBlade experiment executors follow the fault injection model mentioned previously. Because every experiment scenario conforms to one unified model, scenarios are easier to develop and maintain. The model itself is easy to understand, so beginners can get started quickly, and you can add more chaos engineering experiment scenarios simply by following it.
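To make the idea of a unified model more concrete, the sketch below describes an experiment with three fields and renders it into command-line arguments. The field names (`target`, `action`, `matchers`) and the rendering rule mirror the general shape of commands such as `blade create cpu fullload`. They are assumptions made for illustration, not the project's actual data structures.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Experiment:
    """Hypothetical, simplified view of a fault injection model."""
    target: str   # what is attacked, for example "cpu" or "network"
    action: str   # what is done to it, for example "fullload" or "delay"
    matchers: Dict[str, str] = field(default_factory=dict)  # narrowing rules

    def to_args(self) -> List[str]:
        """Render the experiment as: create <target> <action> --name value ..."""
        args = ["create", self.target, self.action]
        for name, value in sorted(self.matchers.items()):
            args += [f"--{name}", value]
        return args


if __name__ == "__main__":
    cpu = Experiment("cpu", "fullload")
    delay = Experiment("network", "delay",
                       {"time": "3000", "interface": "eth0"})
    for exp in (cpu, delay):
        print("blade " + " ".join(exp.to_args()))
```

Because every scenario is expressed with the same few fields, a new scenario only needs a new target, action, and set of matchers rather than a new command syntax.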

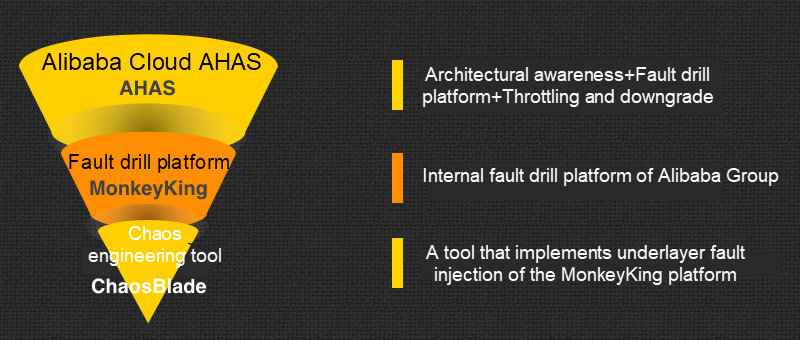

EOS (2012-2015):

EOS is an earlier version of the fault drill platform. Faults are injected into the system through bytecode enhancement. EOS supports simulating common remote procedure call (RPC) faults, and managing strong and weak dependencies of microservices.

MonkeyKing (2016-2018):

MonkeyKing is an upgraded version of the fault drill platform, with more extensive fault scenarios, such as resources and container-layer scenarios. MonkeyKing supports performing large scale drills in the production environment.

AHAS (2018.9-Present):

Alibaba Cloud Application High Availability Service (AHAS) integrates all functions of the fault drill platform and supports orchestrated drills and drill plug-ins. AHAS also integrates the architecture awareness and throttling and degradation features.

ChaosBlade (2019.3):

ChaosBlade implements the underlying fault injection for the MonkeyKing platform. It defines a set of fault models by abstracting the underlying fault injection capabilities of the fault drill platform. ChaosBlade was released as an open source project together with a user-friendly CLI tool to help cloud-native users perform chaos engineering tests.

Zhou Yang (Zhongting)

Zhou Yang is a senior technical expert on the Alibaba Cloud AHAS team, with years of experience in developing stability products, evolving architectures, and supporting both daily operations and large-scale promotion campaigns. He is also the founder of the fault drill platform MonkeyKing, the technical owner of the AHAS product, and a proponent of chaos engineering.

Xiao Changjun (Qionggu)

Xiao Changjun is a senior development engineer at Alibaba Cloud, with years of experience in application performance monitoring and chaos engineering. He is a core developer of Alibaba Cloud AHAS and the owner of the ChaosBlade open source project.
