By Muaaz Sarfaraz, Alibaba Cloud Community Blog author.

This tutorial provides a step-by-step guide to setting up PySpark on an Alibaba Cloud ECS instance running the CentOS 7.x operating system. For this article, we use centos_7_06_64_20G_alibase_20181212.vhd as the ECS instance OS image.

This guide should be helpful for users who want to set up a PySpark cluster on the cloud for training purposes or for working on big data. However, before we jump right in, note that the setup has a few dependencies and prerequisites.

Before you begin, the following resources are needed for setting up a PySpark node on Alibaba Cloud: an ECS instance and an Elastic IP address (EIP).

You may also need additional resources based on your needs, such as extra storage, an SLB instance, or other components. For this tutorial, we use only the minimal resources required: one ECS instance, acting as both the master and the slave node, and an EIP. The technique explained here can easily be extended to a configuration with multiple master and slave nodes across several ECS instances.

Apart from these resources, we also have to install the following items: Python, Java, and Spark.

Those who have already installed Python and acquired the cloud resources can move to Section 3.

Alibaba Cloud Elastic Compute Service (ECS) is a virtual computing environment with elastic capabilities provided by Alibaba Cloud. It includes CPU, memory, and other fundamental computing components. You can choose an ECS instance type that suits your needs (for example, compute optimized or I/O optimized) with adequate CPU cores, memory, a system disk, optional additional data disks, and network capacity, and choose a relevant OS for the instance.

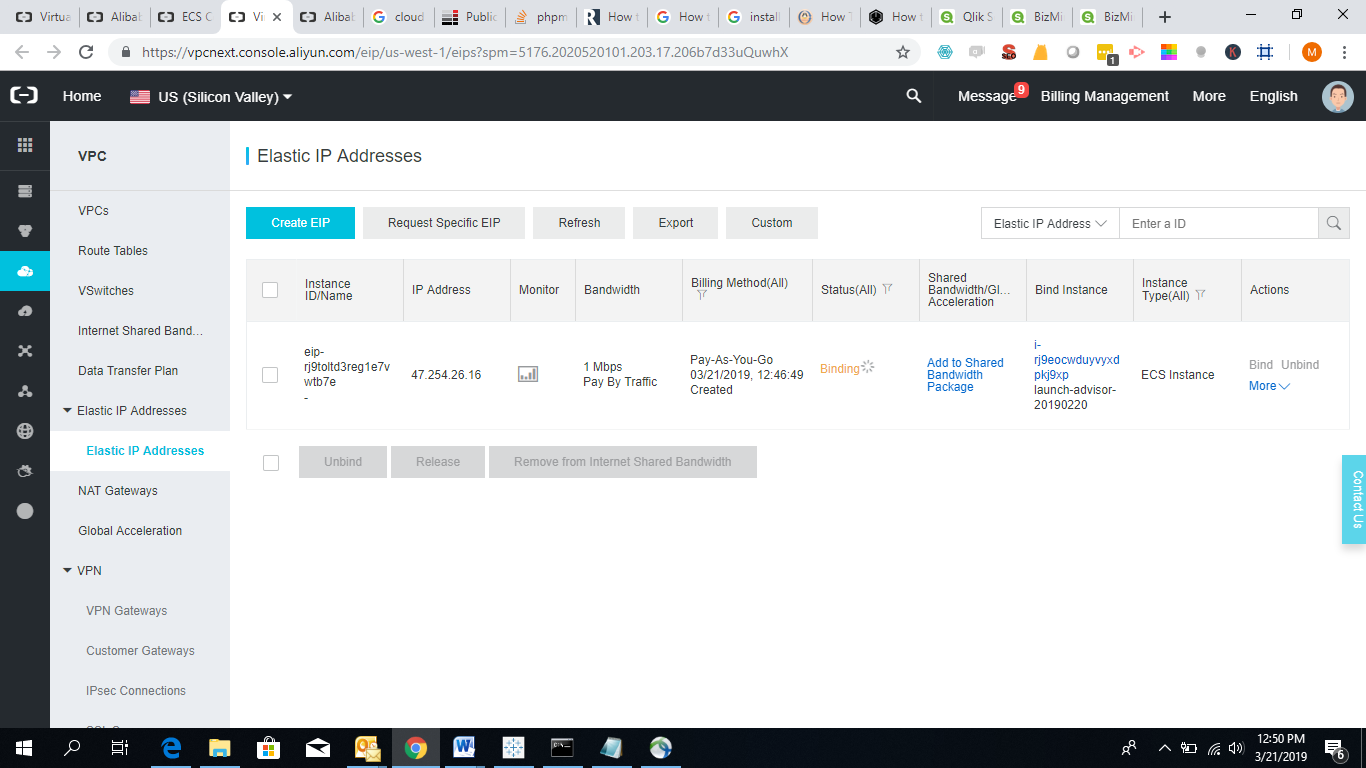

Alibaba Cloud Elastic IP address (EIP) is a public IP address provided by Alibaba Cloud that is independent of any instance. It can be purchased independently and associated with the relevant ECS instance or another cloud resource (such as an SLB instance or a NAT gateway).

If your ECS instance does not have a public IP address, you can select an EIP and bind it to the instance. Otherwise, for this tutorial, the instance's existing public IP address can be used to download the relevant packages from the internet.

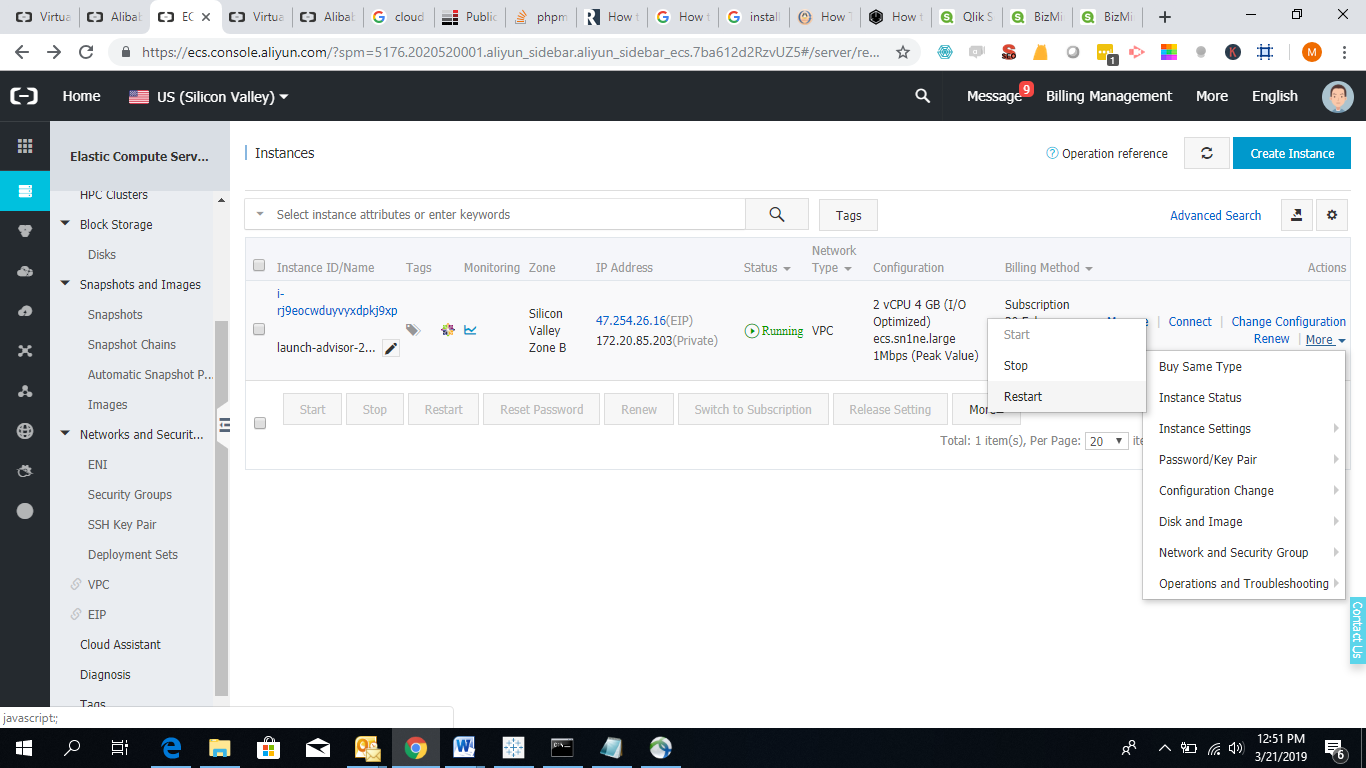

Before you can set up Python on Alibaba Cloud, you have to buy an ECS instance. Based on your needs, you can opt for pay-as-you-go (PAYG) mode with no long-term commitment, or save by committing to a period of usage up front with subscription mode.

Choose an ECS instance with the required memory and CPU and sufficient system storage. We recommend at least 2 vCPUs, 4 GB of memory, and a 30 GB ultra cloud system disk, scaling up as the need grows.

By default you get a private IP address, but to connect your ECS instance to the internet you need an Elastic IP address, which is charged for traffic. This is needed so that the relevant packages can be downloaded to your ECS instance. If you did not acquire network bandwidth with the ECS instance, you need to buy an EIP and bind it to your instance. By default, the ECS security group allows internet traffic. To secure your ECS instance, either unbind the EIP after downloading the required packages or allow only the relevant traffic using a security group.

Python is a powerful, general-purpose, high-level programming language known for its code readability and for being easy to understand. It has become a popular choice among data scientists, big data developers, and machine learning practitioners because of its large, supportive community and vast number of libraries. Python libraries exist for everything from statistics to deep learning.

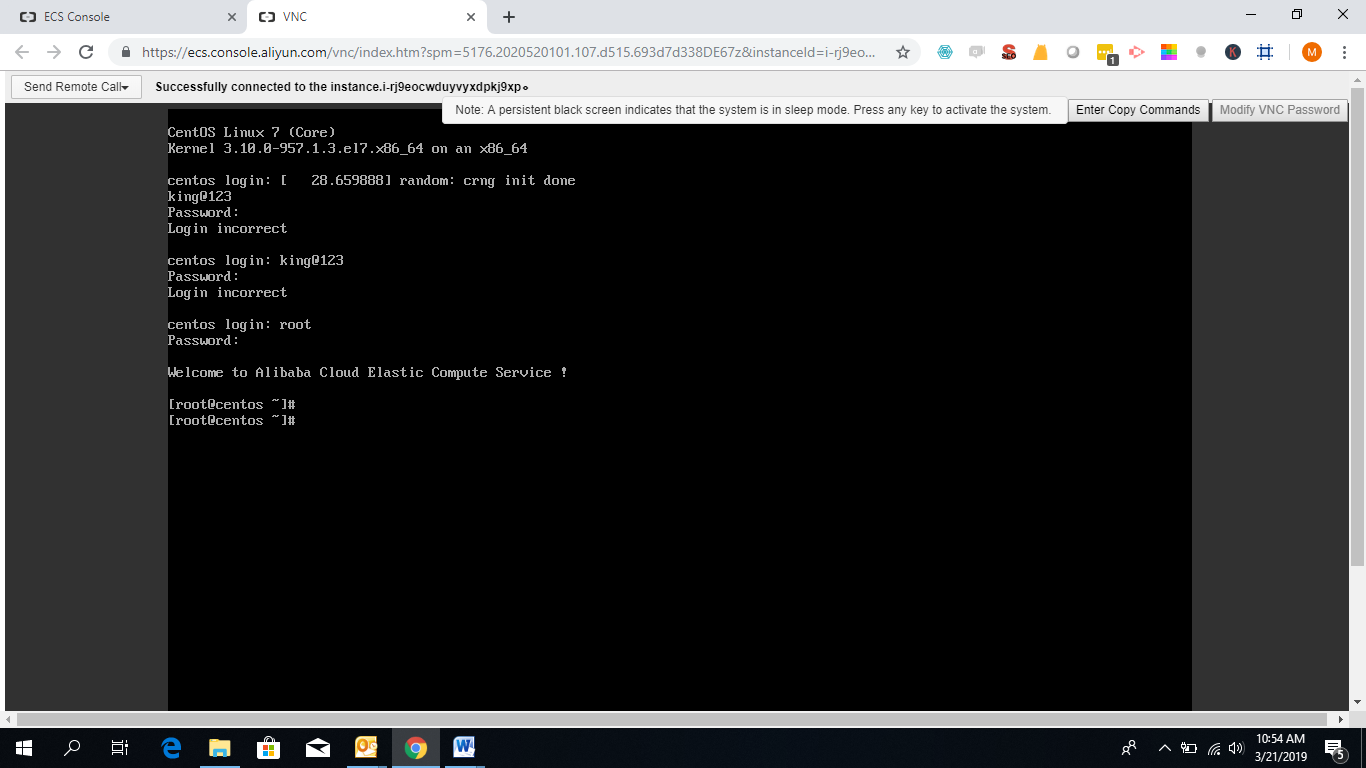

1. Connect to your ECS instance using the Alibaba Cloud console.

2. Enter the VNC password.

Once you have logged in successfully, you will see a screen similar to the one shown below.

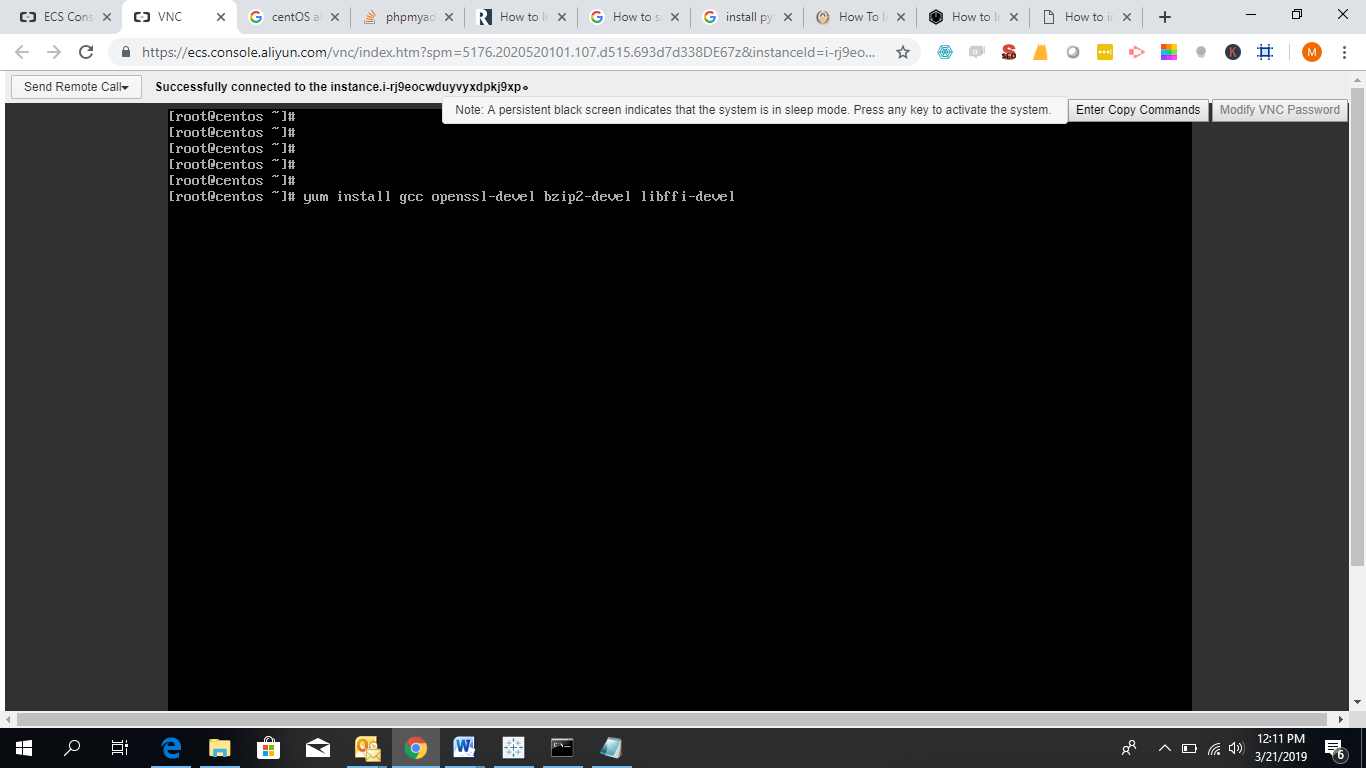

3. After logging in, run the following command on your machine. It installs the gcc compiler and the development libraries that are needed to build Python on your Linux machine.

yum install gcc openssl-devel bzip2-devel libffi-devel

Note: To avoid typing such a long statement at the prompt, you can copy the command above and paste it into your ECS instance using the Alibaba Cloud console. The Enter copy Commands button at the top right pastes any text copied on your machine into your ECS instance.

4. To download Python into a directory of your choice, move to that directory and run the wget command. For this guide we change to /usr/src, where we will download the Python package.

Specifically, run the following commands:

cd /usr/src

wget https://www.python.org/ftp/python/3.7.2/Python-3.7.2.tgz

Next, extract the files by running the tar xzf Python-3.7.2.tgz command.

5. To install the extracted Python package, move to the Python directory, then configure and install it using the following commands:

cd Python-3.7.2

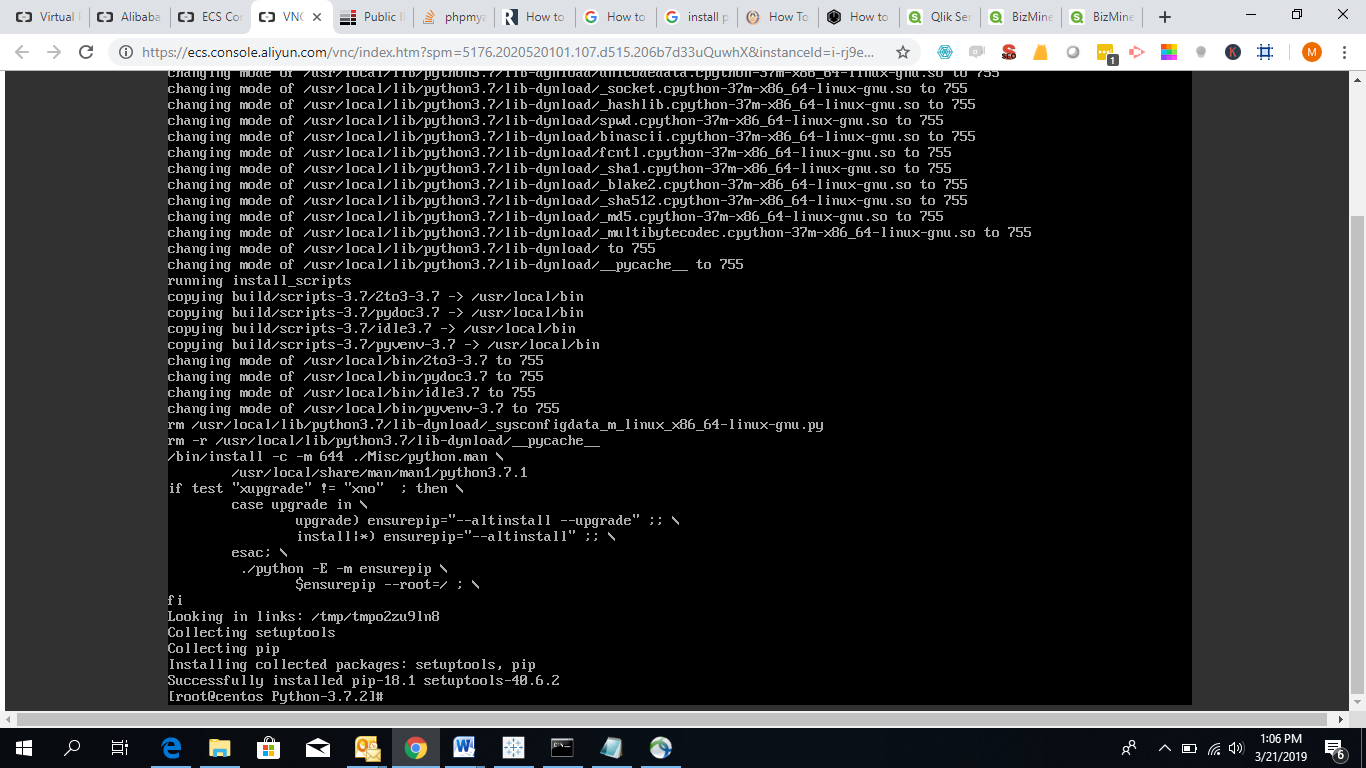

./configure --enable-optimizations

Once configured, run the make altinstall command. This command installs Python and its dependencies on the system. If the command runs successfully, you will see the same message as shown in the screenshot. The final message in the output is "Successfully installed".

6. (Optional) You may remove the downloaded python package now to free some space, by running the rm /usr/src/Python-3.7.2.tgz command.

7. Check that Python was installed successfully by requesting its version. Do so by running the python3.7 -V command.

In the instance above, both Python 2.x and Python 3.x are installed and can be invoked by different commands. Running just "python" executes Python 2.x, and running "python3.7" executes Python 3.x.
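Beyond the version string, it can be worth confirming that the optional modules backed by the libraries from step 3 were actually built. The sketch below assumes the usual mapping (openssl-devel to `ssl`, bzip2-devel to `bz2`, libffi-devel to `ctypes`); the helper name `check_modules` is made up for illustration. Run it with python3.7.

```python
import importlib
import sys


def check_modules(names):
    """Return {module_name: True/False} depending on whether each module imports."""
    results = {}
    for name in names:
        try:
            importlib.import_module(name)
            results[name] = True
        except ImportError:
            results[name] = False
    return results


if __name__ == "__main__":
    print("Running Python %d.%d.%d" % sys.version_info[:3])
    # Assumed mapping: ssl <- openssl-devel, bz2 <- bzip2-devel, ctypes <- libffi-devel
    for module, ok in check_modules(["ssl", "bz2", "ctypes"]).items():
        print("%-7s %s" % (module, "ok" if ok else "MISSING"))
```

If a module is reported as missing, installing the corresponding -devel package and repeating the configure and make altinstall steps is the likely fix.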

Spark is an open-source cluster computing framework. In other words, it is a resilient distributed data processing engine. It was introduced as an improvement over Hadoop, with additional features such as in-memory processing, stream processing, and lower latency. Spark is written in Scala but also supports other languages such as Java, Python, and R. The main use cases of Spark are ETL and SQL on large datasets, streaming analysis, and machine learning on big data. The main components of Spark are Spark SQL, Spark Streaming, MLlib, and GraphX, all built on top of Spark Core.

PySpark is a combination of Apache Spark and Python. Thanks to this combination, one can use the simplicity of the Python language to interact with the powerful Spark components (mentioned in Section 2) to process big data.

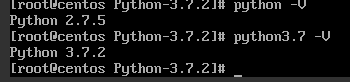

1. We have to ensure that Java is installed.

Let's check whether Java is installed by running the java -version command.

If Java is not already installed, install it by following Step 2; otherwise, move to Step 4.

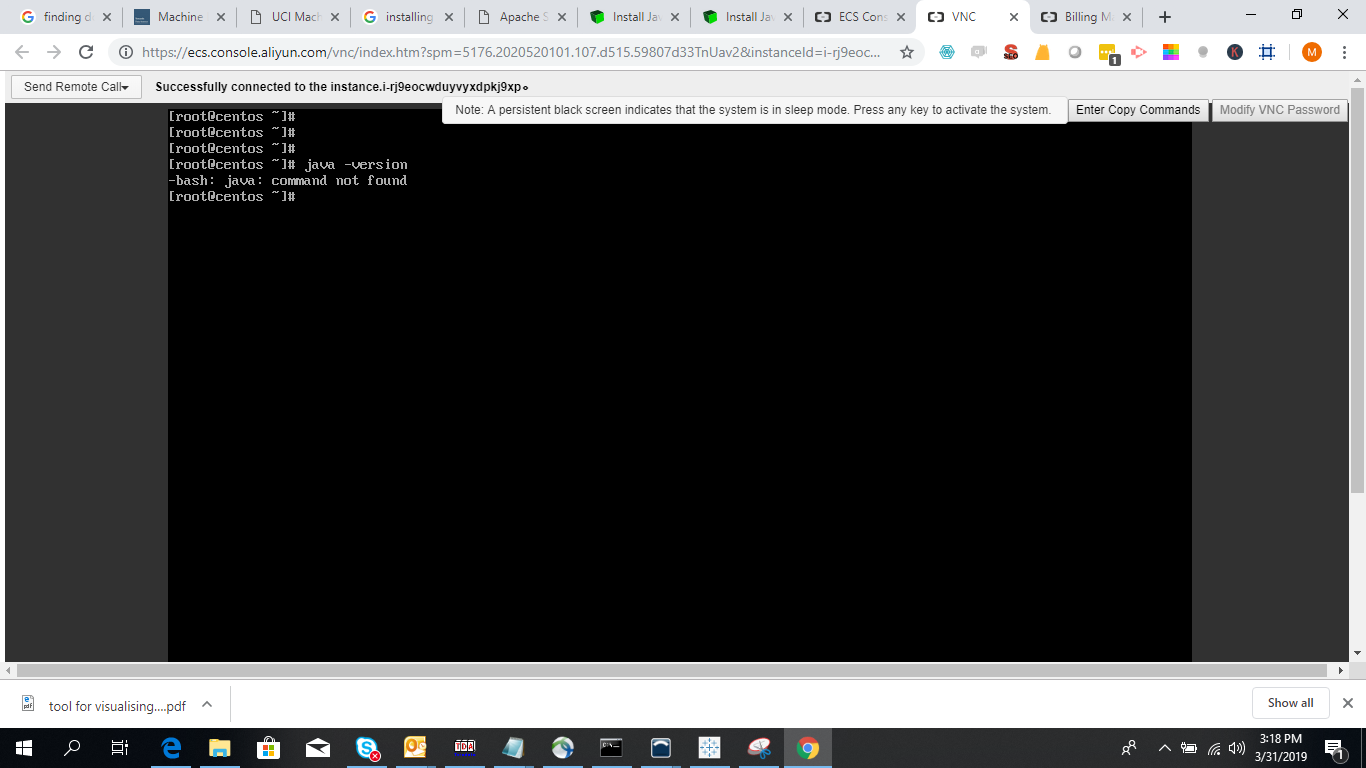

2. Update your system by running the following commands:

sudo yum update

sudo yum install java-1.8.0-openjdk-headless

Enter y and press Enter to install.

3. Run the java -version command to ensure successful installation.
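The same check can be scripted, which is handy when preparing several nodes. This is a minimal sketch, assuming the version banner contains a quoted version string (for example `openjdk version "1.8.0_191"`); the function names are illustrative, not part of any library.

```python
import re
import subprocess


def parse_java_version(output):
    """Extract the quoted version string from `java -version` output, or None."""
    match = re.search(r'version "([^"]+)"', output)
    return match.group(1) if match else None


def installed_java_version():
    """Run `java -version`; return the version string, or None if java is absent."""
    try:
        proc = subprocess.run(
            ["java", "-version"],
            stdout=subprocess.PIPE,
            stderr=subprocess.STDOUT,  # java prints its version banner to stderr
            universal_newlines=True,
        )
    except OSError:
        return None
    return parse_java_version(proc.stdout)


if __name__ == "__main__":
    version = installed_java_version()
    print("Java version:", version if version else "not found")
```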

4. Change directory by running the cd /opt command, and download the Spark binaries by running the following command:

wget https://www-eu.apache.org/dist/spark/spark-2.4.0/spark-2.4.0-bin-hadoop2.7.tgz

If the link is broken, check for an updated link on the Apache website.

5. Untar the binary by running the tar -xzf spark-2.4.0-bin-hadoop2.7.tgz command.

6. Enter the cd spark-2.4.0-bin-hadoop2.7 command.
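Steps 4 to 6 can also be scripted with the Python standard library alone. The sketch below is one possible way to do it, not the only one; the names `SPARK_URL`, `download`, and `extract` are illustrative, and the URL is simply the one used in step 4.

```python
import tarfile
import urllib.request
from pathlib import Path

# URL copied from step 4; adjust it if the mirror has moved.
SPARK_URL = "https://www-eu.apache.org/dist/spark/spark-2.4.0/spark-2.4.0-bin-hadoop2.7.tgz"


def download(url, dest_dir):
    """Download url into dest_dir and return the local archive path."""
    dest = Path(dest_dir) / url.rsplit("/", 1)[-1]
    urllib.request.urlretrieve(url, str(dest))
    return dest


def extract(archive, dest_dir):
    """Unpack a gzip-compressed tarball (same effect as tar -xzf) into dest_dir.

    Returns the sorted top-level entry names found in the archive.
    """
    with tarfile.open(str(archive), "r:gz") as tar:
        tar.extractall(str(dest_dir))
        return sorted({member.name.split("/")[0] for member in tar.getmembers()})


# Example (needs network access and write permission on /opt):
# archive = download(SPARK_URL, "/opt")
# print(extract(archive, "/opt"))
```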

7. Consider this basic configuration guide about Spark.

Now the Spark cluster is ready to be configured using the shell scripts, based on Hadoop's deploy scripts, that are available in Spark's sbin directory:

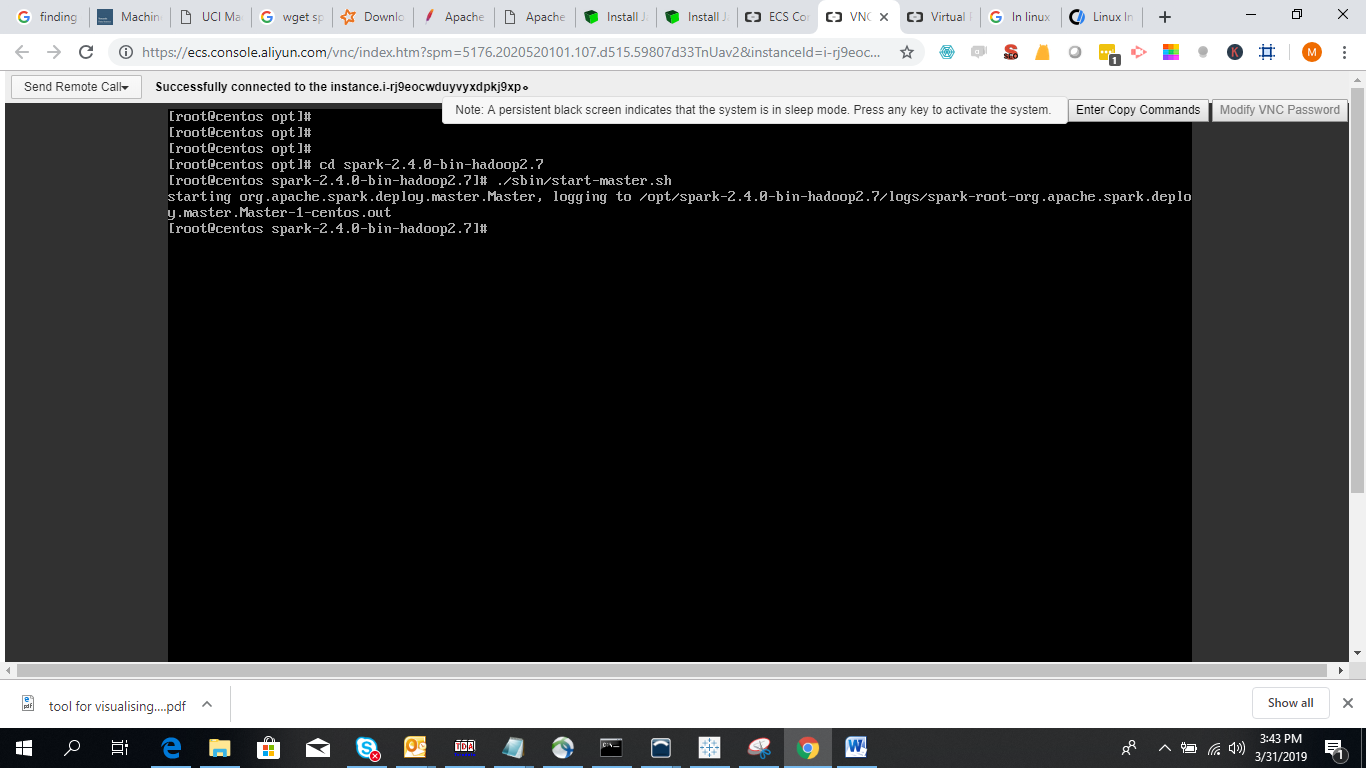

- sbin/start-master.sh: Starts a master instance on the machine the script is executed on.
- sbin/start-slaves.sh: Starts a slave instance on each machine specified in the conf/slaves file.
- sbin/start-slave.sh: Starts a slave instance on the machine the script is executed on.
- sbin/start-all.sh: Starts both a master and a number of slaves as described above.
- sbin/stop-master.sh: Stops the master that was started via the sbin/start-master.sh script.
- sbin/stop-slaves.sh: Stops all slave instances on the machines specified in the conf/slaves file.
- sbin/stop-all.sh: Stops both the master and the slaves as described above.

To configure the ECS node as master we would run the sbin/start-master.sh command, or one of the scripts shown below:

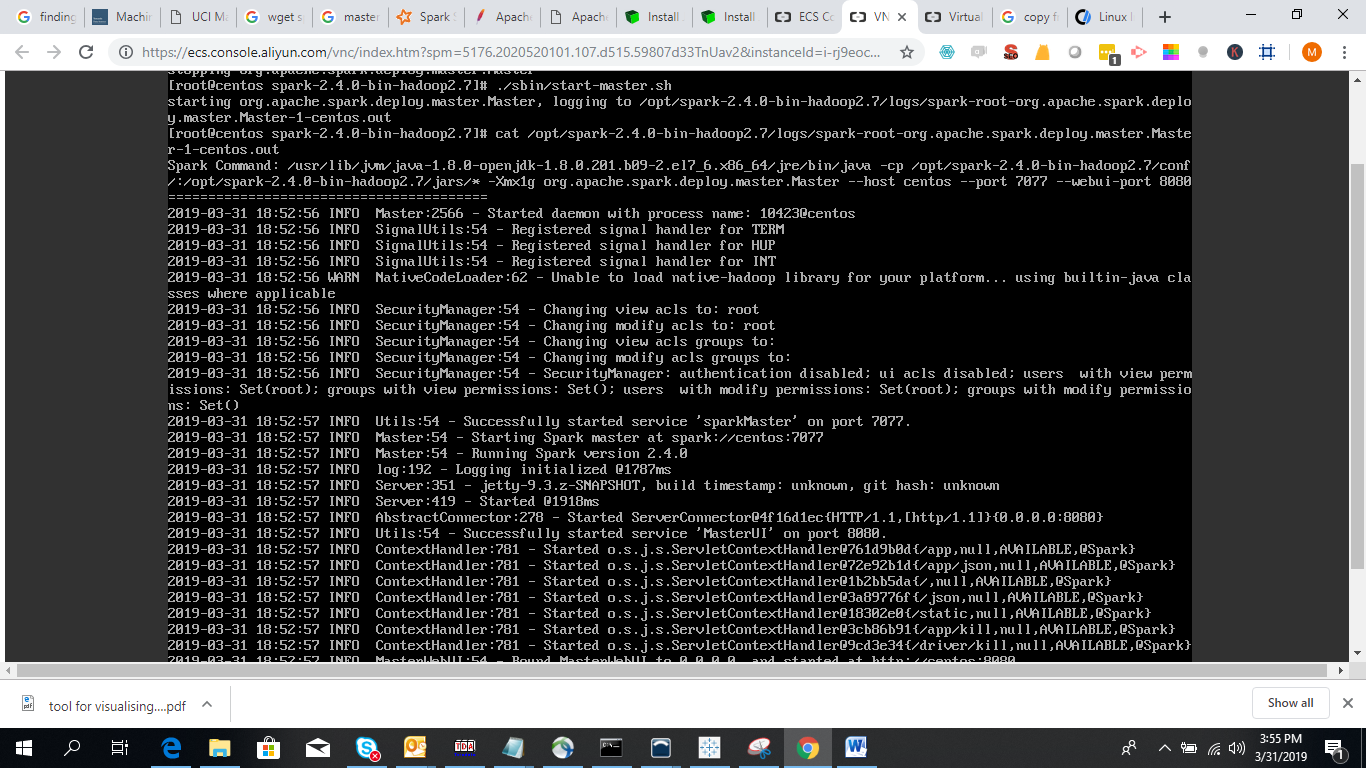
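For repeatable setups, the launch scripts can be driven from Python. The sketch below only wraps the scripts listed above with `subprocess`; the helper names `script_command` and `start_single_node` are hypothetical, and the master URL passed in is whatever your own master reports in its log.

```python
import os
import subprocess


def script_command(spark_home, script, *args):
    """Build the argument list for one of the scripts under <spark_home>/sbin."""
    return [os.path.join(spark_home, "sbin", script)] + list(args)


def start_single_node(spark_home, master_url):
    """Start a master and then one slave on this machine, in that order."""
    subprocess.run(script_command(spark_home, "start-master.sh"), check=True)
    subprocess.run(script_command(spark_home, "start-slave.sh", master_url), check=True)


# Example, using the paths and URL from this tutorial:
# start_single_node("/opt/spark-2.4.0-bin-hadoop2.7", "spark://centos:7077")
```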

8. You can open the log file to see the port on which the master is running:

cat /opt/spark-2.4.0-bin-hadoop2.7/logs/spark-root-org.apache.spark.deploy.master.Master-1-centos.out

Master URL is spark://centos:7077.
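Rather than reading the log by eye, the master URL can be pulled out with a regular expression. This is a small sketch that assumes the URL appears somewhere in the log in the `spark://host:port` form shown above; `find_master_url` is an illustrative name.

```python
import re

# Matches URLs such as spark://centos:7077
MASTER_URL_PATTERN = re.compile(r"spark://[\w.\-]+:\d+")


def find_master_url(log_text):
    """Return the first spark://host:port URL found in the log text, or None."""
    match = MASTER_URL_PATTERN.search(log_text)
    return match.group(0) if match else None


# Example, using the log path from step 8:
# path = "/opt/spark-2.4.0-bin-hadoop2.7/logs/spark-root-org.apache.spark.deploy.master.Master-1-centos.out"
# with open(path) as log:
#     print(find_master_url(log.read()))
```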

9. Now set up the slave node (you may run as many slave nodes as you like and connect them to the master node).

To start a slave process on the second node, run the following from inside the Spark directory:

./sbin/start-slave.sh <master-spark-URL>

In my case it is:

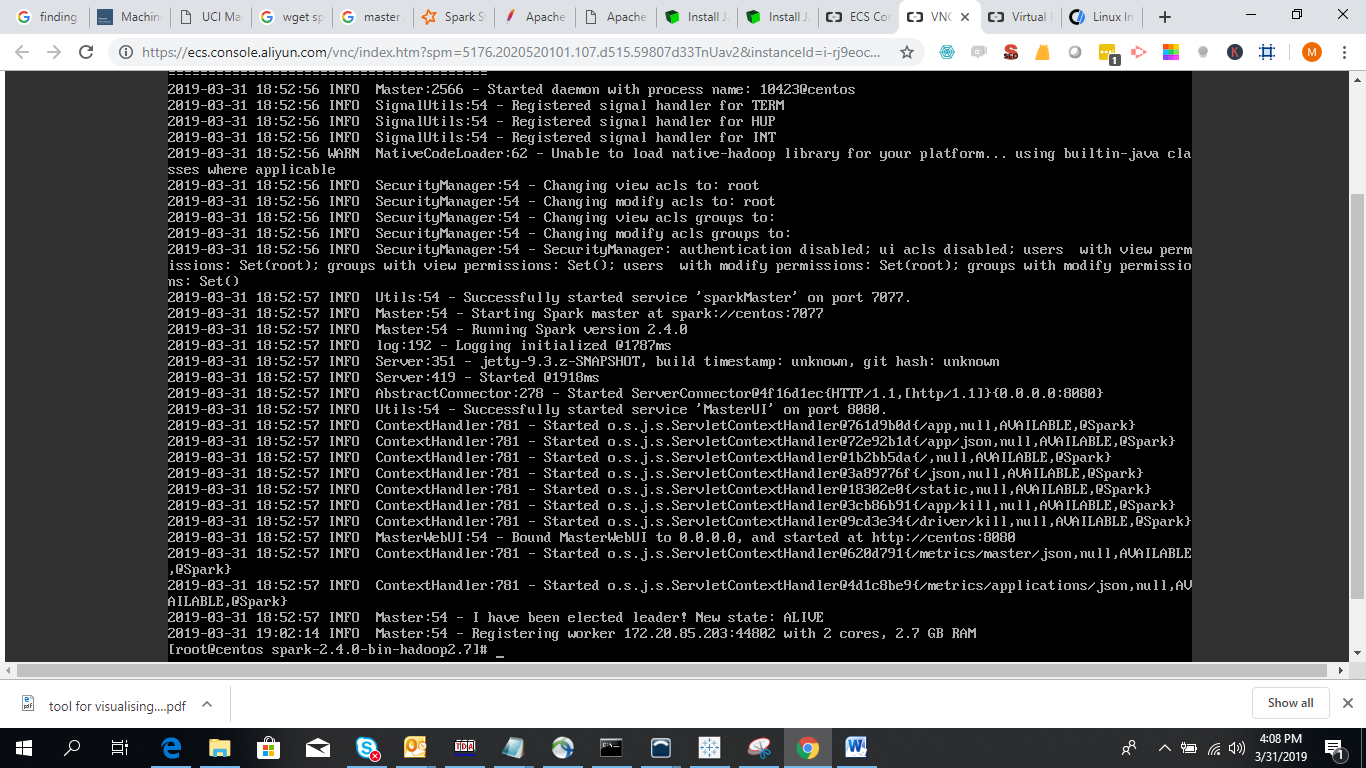

./sbin/start-slave.sh spark://centos:7077

You can now reopen the master log to see if it has connected.
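A quick way to look for the registration in the master log is to filter its lines. The sketch assumes the master writes a line containing the phrase "Registering worker" when a slave connects; verify that wording against your own log, since it is an assumption here. `registered_workers` is an illustrative helper name.

```python
def registered_workers(log_text, marker="Registering worker"):
    """Return the log lines that mention a worker registration.

    The default marker is an assumption about the log wording; pass a
    different one if your Spark version phrases it differently.
    """
    return [line.strip() for line in log_text.splitlines() if marker in line]


# Example, using the master log path from step 8:
# path = "/opt/spark-2.4.0-bin-hadoop2.7/logs/spark-root-org.apache.spark.deploy.master.Master-1-centos.out"
# with open(path) as log:
#     for line in registered_workers(log.read()):
#         print(line)
```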

10. The worker has been registered.

Now it is time to update the PATH environment variable:

export SPARK_HOME=/opt/spark-2.4.0-bin-hadoop2.7

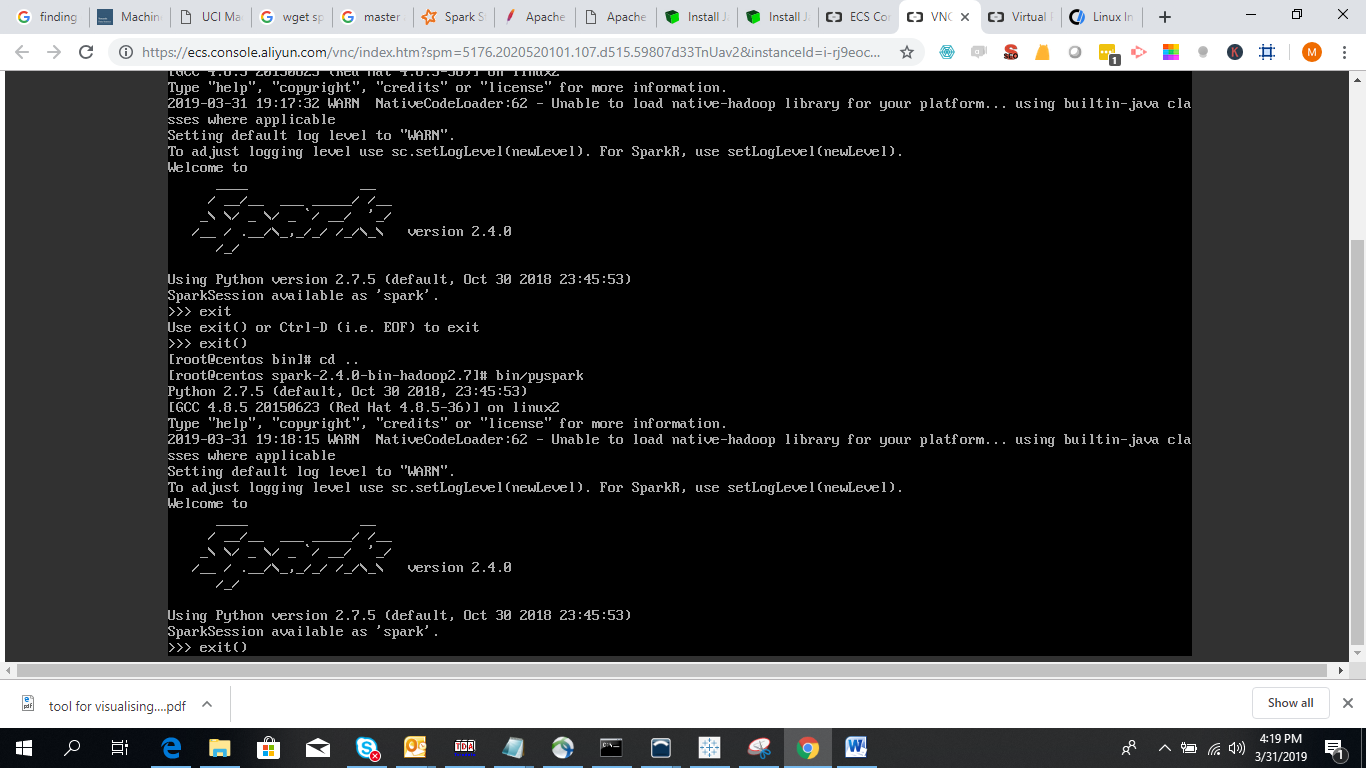

export PATH=$SPARK_HOME/bin:$PATH

11. Now let's run Spark to check that it is installed correctly:

bin/pyspark

You can enter the exit() command to leave Spark.

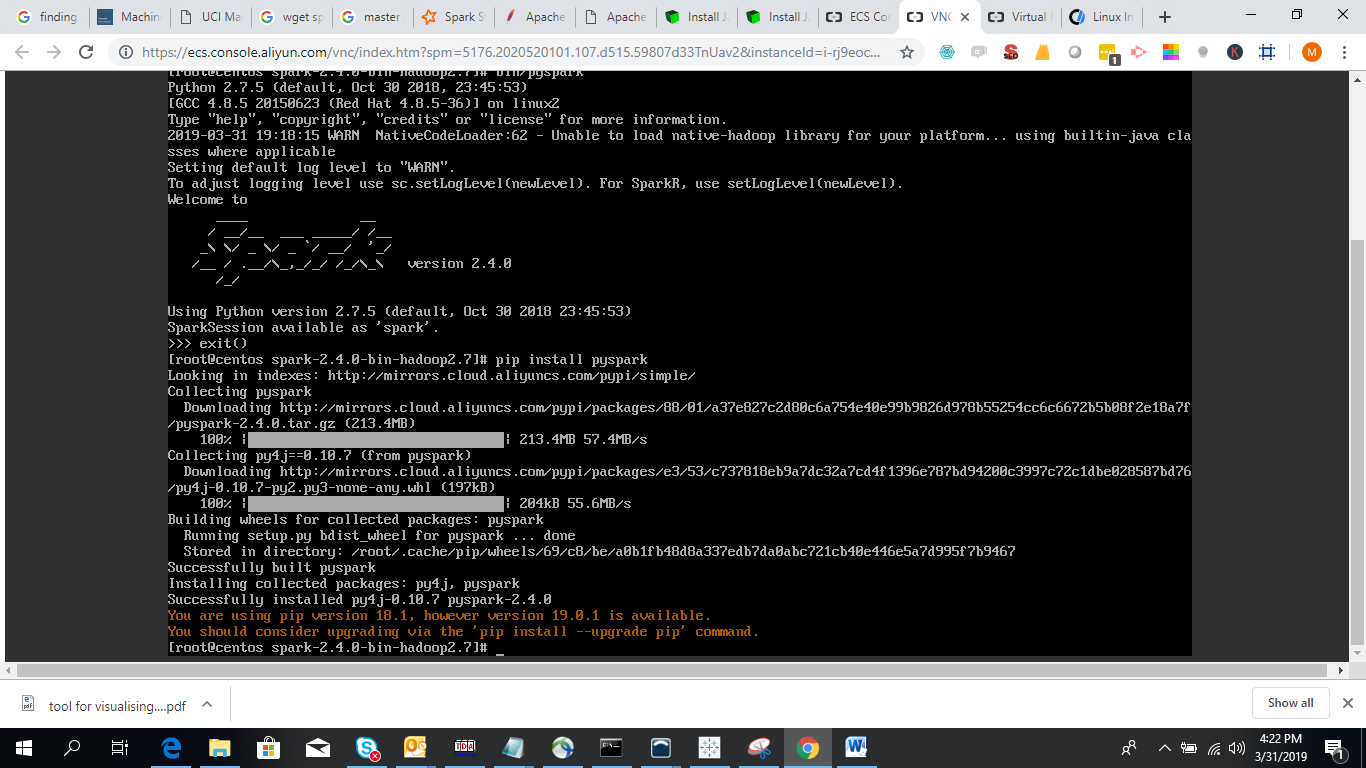

12. You have now set up both Python and Spark. To leverage the Python APIs on Spark, all you need is pyspark. PySpark can be downloaded and installed from the PyPI repository.

Run the pip install pyspark command.
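If plain pip turns out to belong to the system Python 2.x, running `python3.7 -m pip install pyspark` targets the interpreter built earlier instead. Either way, a short check like the sketch below can confirm that the interpreter you intend to use can find the package; `is_installed` is an illustrative helper, not part of pyspark.

```python
import importlib.util


def is_installed(package):
    """True if `package` can be found by the current interpreter's import system."""
    return importlib.util.find_spec(package) is not None


if __name__ == "__main__":
    print("pyspark installed:", is_installed("pyspark"))
```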

To get started, here is a basic example that uses Spark through the Python API provided by the pyspark library.

Enter the python command:

Run the following commands line by line to see how the Python API leverages Spark:

from pyspark import SparkContext

outFile = "file:///opt/spark-2.4.0-bin-hadoop2.7/logs/spark-root-org.apache.spark.deploy.master.Master-1-centos.out"

sc = SparkContext("local", "example app")

outData = sc.textFile(outFile).cache()

numAs = outData.filter(lambda s: 'a' in s).count()

print("Lines with a: %i " % (numAs))
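The example above runs Spark in local mode. As a hedged sketch, the same count can be wrapped in a function that takes the master as a parameter, so it can be pointed at the standalone master started earlier (spark://centos:7077 in this tutorial). The function names are illustrative; `count_lines_containing` is a plain-Python reference for what the Spark job computes, useful for sanity-checking results on small inputs.

```python
def count_lines_containing(lines, needle):
    """Plain-Python equivalent of rdd.filter(lambda s: needle in s).count()."""
    return sum(1 for line in lines if needle in line)


def spark_count(path, needle, master="local"):
    """Run the same count as a Spark job.

    Pass master="spark://centos:7077" to use the standalone cluster.
    """
    # Imported lazily so the helper above works even without pyspark installed.
    from pyspark import SparkContext

    sc = SparkContext(master, "example app")
    try:
        return sc.textFile(path).filter(lambda s: needle in s).count()
    finally:
        sc.stop()  # release the context so another one can be created later


# Example, using the log file from the walkthrough above:
# path = "file:///opt/spark-2.4.0-bin-hadoop2.7/logs/spark-root-org.apache.spark.deploy.master.Master-1-centos.out"
# print("Lines with a: %i" % spark_count(path, "a", master="spark://centos:7077"))
```

Stopping the context in a finally block matters because only one SparkContext can be active per Python process at a time.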
