Support for Apache Arrow was introduced in Spark 2.3. It significantly improves the efficiency of data transfer between the JVM and Python through columnar storage (Column Store) and zero-copy techniques.

This article looks at how Apache Arrow helps PySpark with data processing. It introduces Apache Arrow, explains how Spark uses it, and shows how Column Store and Zero Copy improve the efficiency of data transfer.

Before looking at how Apache Arrow is used in Spark, let's briefly look at Column Store first, and then at Apache Arrow itself.

To understand Column Store, it helps to know that before the big data era, most storage engines used Row Store. Many early systems, such as trading systems and ERP systems, add, delete, modify, or query all the information of a single entity in each operation. With Row Store, a single entity can be located and processed quickly. With Column Store, by contrast, operating on the different attributes of one entity requires multiple random reads and writes, which can be very inefficient.

With the arrival of the big data era, and especially with the growth of data analysis, a task usually does not need to read all the attributes of an entity at once. Instead, it focuses on a few specific attributes and performs complex operations, such as aggregation, on them. In this case, Row Store has to read a lot of unneeded data, which becomes a bottleneck for the system. Column Store greatly reduces this extra reading, and because values of the same attribute are stored together, they can also be compressed effectively, which further speeds up processing.
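To make the compression point concrete, here is a minimal sketch of run-length encoding, one simple scheme that works well when values of a single attribute are stored next to each other. It is an illustration only, not the specific codec used by any particular columnar format, and the `country` column is made-up sample data.

```python
from itertools import groupby


def run_length_encode(column):
    """Encode a column as (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(column)]


def run_length_decode(pairs):
    """Expand (value, run_length) pairs back into the original column."""
    out = []
    for value, count in pairs:
        out.extend([value] * count)
    return out


# Hypothetical sample column: repeated values sit next to each other
country = ["CN", "CN", "CN", "US", "US", "CN"]
encoded = run_length_encode(country)
# encoded == [('CN', 3), ('US', 2), ('CN', 1)]
```

In a row layout, the same values would be interleaved with other attributes, so such runs would rarely form.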

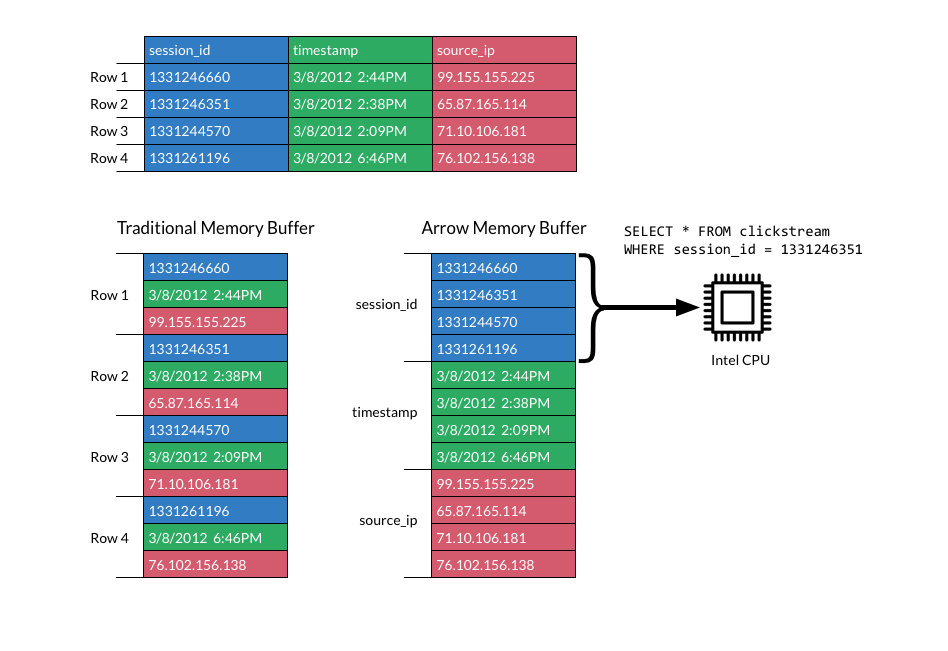

The figure above, taken from the Apache Arrow official website, compares Row Store and Column Store. At the top is a two-dimensional table with three attributes: session_id, timestamp, and source_ip. On the left is the in-memory representation of Row Store: data is stored row by row, and within each row, column by column. On the right is the in-memory representation of Column Store: each column is stored separately, and the number of rows written in one chunk is controlled by parameters such as the batch size. As a result, when a query involves only a few columns (such as the SQL query in the figure), only the session_id column needs to be scanned for filtering instead of all data columns, which greatly reduces I/O. In addition, because the values of a column are contiguous, the query can make better use of the CPU pipeline and SIMD instructions, which further improves query speed.
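The sketch below shows the same three attributes laid out row by row and column by column, and counts how many values a filter on session_id has to touch in each layout. The records and the threshold are hypothetical, and the "values read" counter is only a rough stand-in for I/O.

```python
# Hypothetical sample records: (session_id, timestamp, source_ip)
RECORDS = [
    (1, 1000, "10.0.0.1"),
    (2, 1005, "10.0.0.2"),
    (3, 1010, "10.0.0.3"),
]
FIELDS = ("session_id", "timestamp", "source_ip")


def to_row_store(records):
    """Row layout: all fields of record 0, then all fields of record 1, ..."""
    return [value for record in records for value in record]


def to_column_store(records):
    """Column layout: one contiguous list per attribute."""
    return {name: [record[i] for record in records] for i, name in enumerate(FIELDS)}


def query_row_store(row_store, threshold):
    """Filter on session_id in a row-major buffer; every field of every row is read."""
    width = len(FIELDS)
    result, values_read = [], 0
    for start in range(0, len(row_store), width):
        record = row_store[start:start + width]  # the whole record is loaded
        values_read += len(record)
        if record[0] > threshold:
            result.append(record[0])
    return result, values_read


def query_column_store(column_store, threshold):
    """Filter on session_id in a columnar layout; only that column is read."""
    column = column_store["session_id"]
    result = [v for v in column if v > threshold]
    return result, len(column)
```

For these three records, both functions return the session IDs 2 and 3, but the row-store scan reads 9 values while the column-store scan reads only 3.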

In the big data field, Column Store was largely inspired by the Dremel paper published by Google in 2010. The paper describes a storage format that supports nested structures and uses columnar storage to improve query performance. It also describes how Google uses this format to run parallel queries. The paper influenced the development of the Hadoop ecosystem: Apache Parquet and Apache ORC are used as Column Store formats by Hadoop-ecosystem engines such as Spark, Hive, and Impala.

But what exactly is Apache Arrow? According to the official website, Apache Arrow is a cross-language, cross-platform in-memory data format. This definition shows how Arrow differs from Apache Parquet and Apache ORC. Parquet and ORC are designed for data on disk and use Column Store together with efficient compression algorithms, such as Snappy, Gzip, and Zlib, to compress column data. As a result, in most cases data must be decompressed when it is read, which costs some CPU. Arrow, by contrast, does not compress data in memory (compression is supported only when data is written to disk). Instead, it provides dictionary encoding, which stores each distinct value once and refers to it by index.
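The idea behind dictionary encoding can be sketched in a few lines of plain Python: a column becomes a list of integer indices plus a dictionary of distinct values. This is a simplified illustration under that assumption, not Arrow's implementation; in pyarrow, `pa.array(...).dictionary_encode()` returns a `DictionaryArray` with the same two parts (`indices` and `dictionary`).

```python
def dictionary_encode(values):
    """Return (indices, dictionary): each distinct value is stored once."""
    dictionary = []
    index_of = {}
    indices = []
    for v in values:
        if v not in index_of:
            index_of[v] = len(dictionary)
            dictionary.append(v)
        indices.append(index_of[v])
    return indices, dictionary


def dictionary_decode(indices, dictionary):
    """Rebuild the original column by looking each index up in the dictionary."""
    return [dictionary[i] for i in indices]


# Hypothetical column with many repeated strings
cities = ["beijing", "hangzhou", "beijing", "beijing"]
indices, dictionary = dictionary_encode(cities)
# indices == [0, 1, 0, 0], dictionary == ["beijing", "hangzhou"]
```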

Beyond Column Store, Arrow is also very powerful for transferring data across languages. This cross-language capability comes from the Arrow specification, which defines the memory layout of each data type: the number of bits each primitive type occupies in memory, how array data is composed, and how null values are represented. Because every implementation follows these definitions, Arrow data has exactly the same memory layout on every platform and in every language.
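As an illustration of what such a layout definition looks like, the sketch below builds the two buffers of a nullable 32-bit integer array the way the Arrow columnar format describes them: a validity bitmap (one bit per slot, least-significant-bit numbering, 1 meaning "not null") and a little-endian values buffer. It deliberately omits the alignment and padding that real Arrow buffers use, and the function names are hypothetical.

```python
import struct


def build_int32_array(values):
    """Return (validity_bitmap, values_buffer, null_count) for a list of optional ints."""
    n = len(values)
    bitmap = bytearray((n + 7) // 8)
    data = bytearray()
    null_count = 0
    for i, v in enumerate(values):
        if v is None:
            null_count += 1
            data += struct.pack("<i", 0)  # content of a null slot is unspecified; 0 here
        else:
            bitmap[i // 8] |= 1 << (i % 8)  # set bit i, counting from the least-significant bit
            data += struct.pack("<i", v)
    return bytes(bitmap), bytes(data), null_count


def read_int32(bitmap, data, i):
    """Read slot i, returning None when its validity bit is 0."""
    if not (bitmap[i // 8] >> (i % 8)) & 1:
        return None
    return struct.unpack_from("<i", data, 4 * i)[0]


bitmap, data, nulls = build_int32_array([1, None, 2, 4, 8])
# bitmap == bytes([0b00011101]), len(data) == 20, nulls == 1
```

Because any implementation that follows the same rules produces byte-for-byte identical buffers, a reader in another language can interpret them without conversion.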

This makes it possible to transfer data efficiently between different platforms and languages. Before Arrow, data exchanged between languages had to be serialized and deserialized, which consumes a lot of CPU time. Because Arrow data has the same in-memory layout everywhere, as the specification requires, it can be shared through mechanisms such as shared memory and memory-mapped files, eliminating serialization and deserialization.

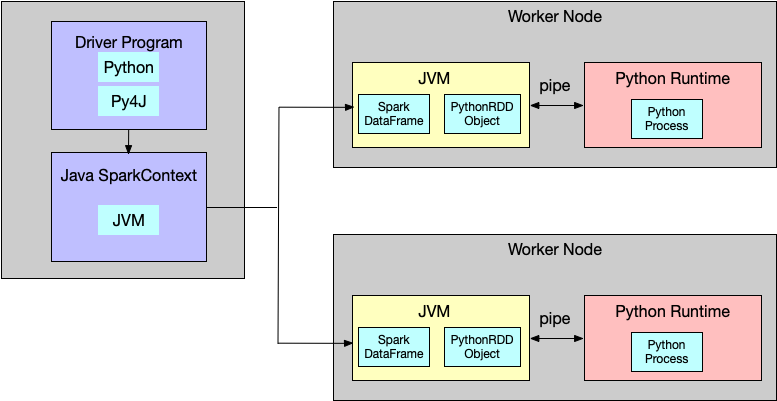
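The memory-mapped-file idea can be sketched with Python's standard `mmap` module: one side writes a column as raw fixed-width integers, and the reader maps the file and views those bytes as integers in place through a `memoryview`, without a per-value deserialization step. This is only an analogy for the zero-copy principle; it does not use the Arrow format or any Arrow API, and the helper names are hypothetical.

```python
import mmap
import os
import tempfile
from array import array


def write_int_column(path, values):
    """Write a column as raw native-endian C ints, with no per-value framing."""
    with open(path, "wb") as f:
        f.write(array("i", values).tobytes())


def sum_int_column(path):
    """Map the file and view its bytes as ints in place, then sum them."""
    if os.path.getsize(path) == 0:
        return 0  # mmap cannot map an empty file
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            # The views must be released before the map is closed,
            # which the nested context managers guarantee.
            with memoryview(mm) as raw, raw.cast("i") as ints:
                return sum(ints)


def roundtrip_sum(values):
    """Write values to a temporary file, then sum them through the memory map."""
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        write_int_column(path, values)
        return sum_int_column(path)
    finally:
        os.remove(path)
```

For example, `roundtrip_sum([1, 2, 3, 4])` returns 10.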

Now that we've covered Arrow, let's look at how Apache Spark uses it to accelerate PySpark. For a long time, PySpark users have complained about the poor performance of Python, and as a result many of them turned to Scala for development. Spark is written in Scala and runs on the JVM, while PySpark's Python worker processes are started from the JVM by the PythonRDD object. Py4J handles communication between Python and the JVM: it lets Python dynamically access Java objects in the JVM over local sockets. RDD data must be serialized in the JVM and then deserialized in Python, so with large data volumes this is far less efficient than using Scala directly. The process is shown in the following figure.

Many data scientists and analysts are used to processing data in Python, especially with the pandas and NumPy libraries. Since Spark 2.3, Arrow can greatly improve the efficiency of handing data over to these libraries. For example, in Spark 2.4, the toPandas implementation in dataframe.py contains the following Arrow code path:

```python
# Excerpt from DataFrame.toPandas() in Spark 2.4 (use_arrow, timezone, pd, warnings
# and _exception_message are defined elsewhere in dataframe.py)
if use_arrow:
    try:
        from pyspark.sql.types import _check_dataframe_convert_date, \
            _check_dataframe_localize_timestamps
        import pyarrow
        batches = self._collectAsArrow()
        if len(batches) > 0:
            table = pyarrow.Table.from_batches(batches)
            pdf = table.to_pandas()
            pdf = _check_dataframe_convert_date(pdf, self.schema)
            return _check_dataframe_localize_timestamps(pdf, timezone)
        else:
            return pd.DataFrame.from_records([], columns=self.columns)
    except Exception as e:
        # We might have to allow fallback here as well but multiple Spark jobs can
        # be executed. So, simply fail in this case for now.
        msg = (
            "toPandas attempted Arrow optimization because "
            "'spark.sql.execution.arrow.enabled' is set to true, but has reached "
            "the error below and can not continue. Note that "
            "'spark.sql.execution.arrow.fallback.enabled' does not have an effect "
            "on failures in the middle of computation.\n  %s" % _exception_message(e))
        warnings.warn(msg)
        raise
```

When Arrow is enabled (through spark.sql.execution.arrow.enabled, which is false by default in Spark 2.4), the key call is _collectAsArrow(), which is implemented as:

```python
def _collectAsArrow(self):
    """
    Returns all records as a list of ArrowRecordBatches, pyarrow must be installed
    and available on driver and worker Python environments.

    .. note:: Experimental.
    """
    with SCCallSiteSync(self._sc) as css:
        sock_info = self._jdf.collectAsArrowToPython()
    return list(_load_from_socket(sock_info, ArrowStreamSerializer()))
```

The data read from the socket is deserialized with ArrowStreamSerializer, which is defined as:

```python
class ArrowStreamSerializer(Serializer):
    """
    Serializes Arrow record batches as a stream.
    """

    def dump_stream(self, iterator, stream):
        import pyarrow as pa
        writer = None
        try:
            for batch in iterator:
                if writer is None:
                    # The schema is written once, taken from the first batch
                    writer = pa.RecordBatchStreamWriter(stream, batch.schema)
                writer.write_batch(batch)
        finally:
            if writer is not None:
                writer.close()

    def load_stream(self, stream):
        import pyarrow as pa
        reader = pa.open_stream(stream)
        for batch in reader:
            yield batch

    def __repr__(self):
        return "ArrowStreamSerializer"
```

Because the JVM already writes the data as columnar record batches that follow the Arrow specification, the Python side does not need to deserialize the data row by row; pyarrow reads the record batches directly. This is one of the main reasons Arrow is efficient.
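To illustrate the stream pattern behind dump_stream and load_stream without requiring pyarrow, here is a standard-library sketch. The framing (a 4-byte row count followed by raw int64 values in native byte order, with a zero count as the end-of-stream marker) is a hypothetical simplification, not Arrow's actual IPC stream format, which also carries a schema and per-buffer metadata.

```python
import io
import struct
from array import array


def dump_stream(batches, stream):
    """Write each batch (a list of ints) as a length-prefixed block of int64 values."""
    for batch in batches:
        if not batch:
            continue  # a zero count is reserved for the end-of-stream marker
        stream.write(struct.pack("<I", len(batch)))
        stream.write(array("q", batch).tobytes())
    stream.write(struct.pack("<I", 0))  # end-of-stream marker


def load_stream(stream):
    """Yield batches lazily; each batch is rebuilt with one bulk call, not per value."""
    while True:
        (n,) = struct.unpack("<I", stream.read(4))
        if n == 0:
            return
        column = array("q")
        column.frombytes(stream.read(8 * n))
        yield column
```

Usage: after `dump_stream([[1, 2, 3], [4, 5]], buf)` on an `io.BytesIO` buffer and `buf.seek(0)`, `load_stream(buf)` yields the two batches in order.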

For comparison, here is collect(), which does not use Arrow:

```python
def collect(self):
    """Returns all the records as a list of :class:`Row`.

    >>> df.collect()
    [Row(age=2, name=u'Alice'), Row(age=5, name=u'Bob')]
    """
    with SCCallSiteSync(self._sc) as css:
        sock_info = self._jdf.collectToPython()
    return list(_load_from_socket(sock_info, BatchedSerializer(PickleSerializer())))
```

Here the serializer is PickleSerializer (wrapped in BatchedSerializer), so every record must be unpickled into a Python object on the Python side.
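The difference between the two paths can be sketched with the standard library: the row-oriented path pickles a list of row tuples, so every row is rebuilt as a separate Python object, while a column-oriented path ships each column as one contiguous buffer that is rebuilt with a single bulk call. This is a simplified illustration of the two strategies under that assumption, not PySpark code, and it makes no performance claim on its own.

```python
import pickle
from array import array


def rowwise_roundtrip(rows):
    """Pickle all rows; unpickling recreates one Python tuple per row."""
    payload = pickle.dumps(rows)
    return pickle.loads(payload)


def columnar_roundtrip(rows):
    """Ship each int64 column as one contiguous buffer and rebuild it in bulk."""
    col_a = array("q", (r[0] for r in rows)).tobytes()
    col_b = array("q", (r[1] for r in rows)).tobytes()
    a, b = array("q"), array("q")
    a.frombytes(col_a)
    b.frombytes(col_b)
    return a, b


# Hypothetical two-column data set
rows = [(i, i * 2) for i in range(1000)]
```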

So how can we use this feature in development? Spark provides Pandas UDFs, also known as vectorized UDFs. A Pandas UDF uses Arrow to transfer data from the JVM to Python, where it is converted into pandas data, and then runs the user-defined function on it. Spark 2.3 provides two types: Scalar and Grouped Map (Spark 2.4 adds Grouped Aggregate).

Here is a brief introduction to Scalar Pandas UDFs and where they can be used. Scalar Pandas UDFs can be used in the select and withColumn methods. Their inputs are of type pandas.Series, and the output is a pandas.Series of the same length. Internally, Spark uses Arrow to fetch column data in batches of the configured batch size, converts each batch into pandas.Series, and runs the UDF on each batch. Finally, the results of all batches are combined into the final result.
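The batching behavior described above can be sketched in plain Python: split a column into chunks of at most `max_records_per_batch` rows (mirroring spark.sql.execution.arrow.maxRecordsPerBatch, which defaults to 10,000), call the function once per chunk, check that each call returns one value per input row, and concatenate the results. This is a simplified model under those assumptions; in Spark the batching happens per partition and the batches are pandas.Series, not lists.

```python
def apply_in_batches(column, func, max_records_per_batch):
    """Call func once per batch and concatenate the per-batch results."""
    results = []
    calls = 0
    for start in range(0, len(column), max_records_per_batch):
        batch = column[start:start + max_records_per_batch]
        out = func(batch)
        if len(out) != len(batch):
            raise ValueError("a scalar UDF must return one value per input row")
        results.extend(out)
        calls += 1
    return results, calls


def square(batch):
    """Hypothetical vectorized function: operates on a whole batch at once."""
    return [v * v for v in batch]
```

With ten input rows and a batch size of 4, `apply_in_batches(list(range(10)), square, 4)` calls `square` three times (4 + 4 + 2 rows).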

The following example is taken from the official Spark documentation:

```python
import pandas as pd

from pyspark.sql.functions import col, pandas_udf
from pyspark.sql.types import LongType

# Declare the function and create the UDF
def multiply_func(a, b):
    return a * b

multiply = pandas_udf(multiply_func, returnType=LongType())

# The function for a pandas_udf should be able to execute with local Pandas data
x = pd.Series([1, 2, 3])
print(multiply_func(x, x))
# 0    1
# 1    4
# 2    9
# dtype: int64

# Create a Spark DataFrame, 'spark' is an existing SparkSession
df = spark.createDataFrame(pd.DataFrame(x, columns=["x"]))

# Execute function as a Spark vectorized UDF
df.select(multiply(col("x"), col("x"))).show()
# +-------------------+
# |multiply_func(x, x)|
# +-------------------+
# |                  1|
# |                  4|
# |                  9|
# +-------------------+
```

First, the function multiply_func is defined; it multiplies the values of its two input columns row by row. Then a Pandas UDF is created from it with pandas_udf. Finally, the df.select method calls the Pandas UDF to obtain the result. Note that the inputs and outputs of a Pandas UDF are vectorized: each call processes multiple rows, and the maximum number of rows per batch can be set with spark.sql.execution.arrow.maxRecordsPerBatch.

As you can see, a Pandas UDF is very simple to use: you only need to define the function. Pandas UDFs also make it easy to integrate machine learning libraries with Spark. For example, by calling a library such as scikit-learn inside a UDF, we can train on each batch or group of data separately. The following is a simple example that uses a Grouped Map Pandas UDF to fit a curve for each player:

```python
# Load necessary libraries
import numpy as np
import pandas as pd
from pyspark.sql.functions import pandas_udf, PandasUDFType
from pyspark.sql.types import StructType, StructField, LongType, DoubleType
from scipy.optimize import leastsq

# Create the schema for the resulting data frame
schema = StructType([StructField('ID', LongType(), True),
                     StructField('p0', DoubleType(), True),
                     StructField('p1', DoubleType(), True)])

# Residuals of a linear model hits = p0 + p1 * shots.
# Assumption: the original snippet calls `fit` without defining it.
def fit(params, x, y):
    return y - (params[0] + params[1] * x)

# Define the UDF, input and outputs are Pandas DFs
@pandas_udf(schema, PandasUDFType.GROUPED_MAP)
def analyze_player(sample_pd):
    # Return empty params if there is not enough data
    if len(sample_pd.shots) <= 1:
        return pd.DataFrame({'ID': [sample_pd.player_id.iloc[0]],
                             'p0': [0.0], 'p1': [0.0]})
    # Perform curve fitting
    result = leastsq(fit, [1, 0], args=(np.asarray(sample_pd.shots, dtype=float),
                                        np.asarray(sample_pd.hits, dtype=float)))
    # Return the parameters as a Pandas DF
    return pd.DataFrame({'ID': [sample_pd.player_id.iloc[0]],
                         'p0': [result[0][0]], 'p1': [result[0][1]]})

# Perform the UDF and show the results
# (df is assumed to be an existing Spark DataFrame with player_id, shots, and hits columns)
player_df = df.groupby('player_id').apply(analyze_player)
player_df.show()
```

In addition, TensorFlow and MXNet can also be integrated with Spark. Alibaba Cloud EMR Data Science clusters will soon launch corresponding features that integrate scheduling and data exchange between EMR Spark and deep learning frameworks.
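The Grouped Map semantics used in the curve-fitting example follow a split-apply-combine pattern: rows are split by key, the function runs once per group on all of that group's rows, and the returned rows are concatenated. The plain-Python sketch below models that pattern with lists of dicts instead of pandas DataFrames; `summarize_player` computes a simple hit rate as a hypothetical stand-in for the curve fit, and output order is not meant to match Spark's (Spark does not guarantee group order).

```python
from collections import defaultdict


def grouped_map(rows, key, func):
    """Split rows by key, call func once per group, and concatenate the returned rows."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    output = []
    for group in groups.values():
        output.extend(func(group))
    return output


def summarize_player(group):
    """Hypothetical per-group function: overall hit rate of one player."""
    shots = sum(r["shots"] for r in group)
    hits = sum(r["hits"] for r in group)
    return [{"ID": group[0]["player_id"], "hit_rate": hits / shots if shots else 0.0}]


# Hypothetical input rows
rows = [
    {"player_id": 1, "shots": 10, "hits": 5},
    {"player_id": 2, "shots": 4, "hits": 1},
    {"player_id": 1, "shots": 10, "hits": 3},
]
```

Here `grouped_map(rows, "player_id", summarize_player)` returns one summary row per player: a hit rate of 0.4 for player 1 and 0.25 for player 2.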
