By Yibin Xu (Senior R&D Engineer of Alibaba Cloud Intelligence)

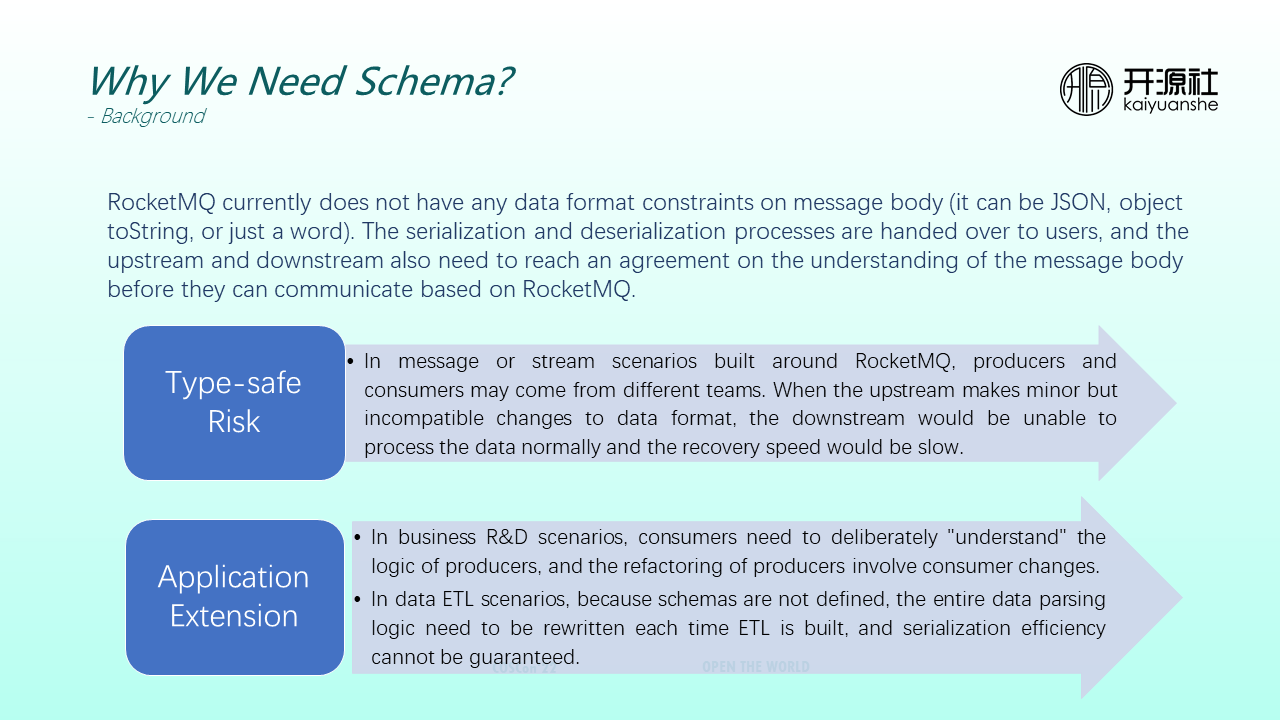

Currently, RocketMQ does not impose any constraints on the message body. It can be JSON, the output of an object's toString(), a single word, or a log line. Serialization and deserialization are left to users, so upstream and downstream businesses must agree on the format of the message body before they can communicate over RocketMQ. This situation leads to the following two problems.

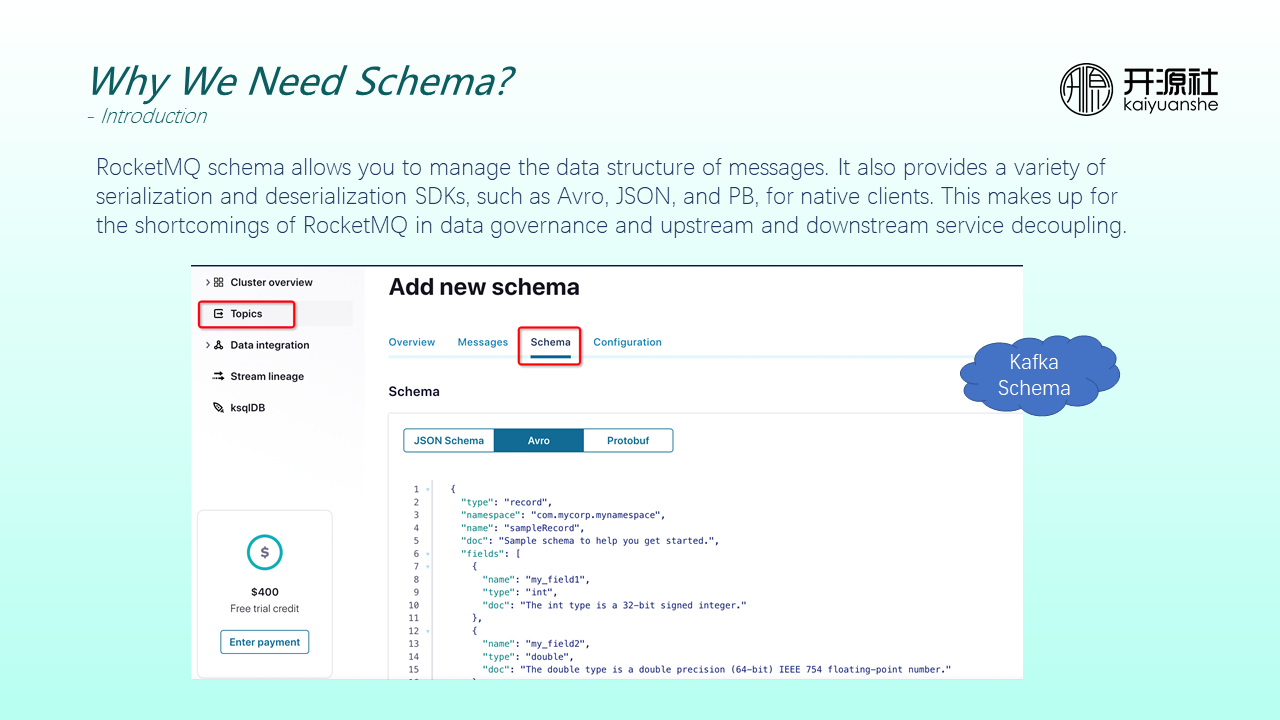

RocketMQ Schema lets you manage the data structure of messages. It also provides a variety of serialization and deserialization SDKs (such as Avro, JSON, and Protobuf) for native clients. This addresses RocketMQ's shortcomings in data governance and in decoupling upstream and downstream services.

As shown in the preceding figure, when you create a topic on the Kafka commercial edition, you are prompted to maintain the schemas related to that topic. If the schemas are maintained, upstream and downstream teams can tell what data a topic expects just by looking at it, which improves R&D efficiency.

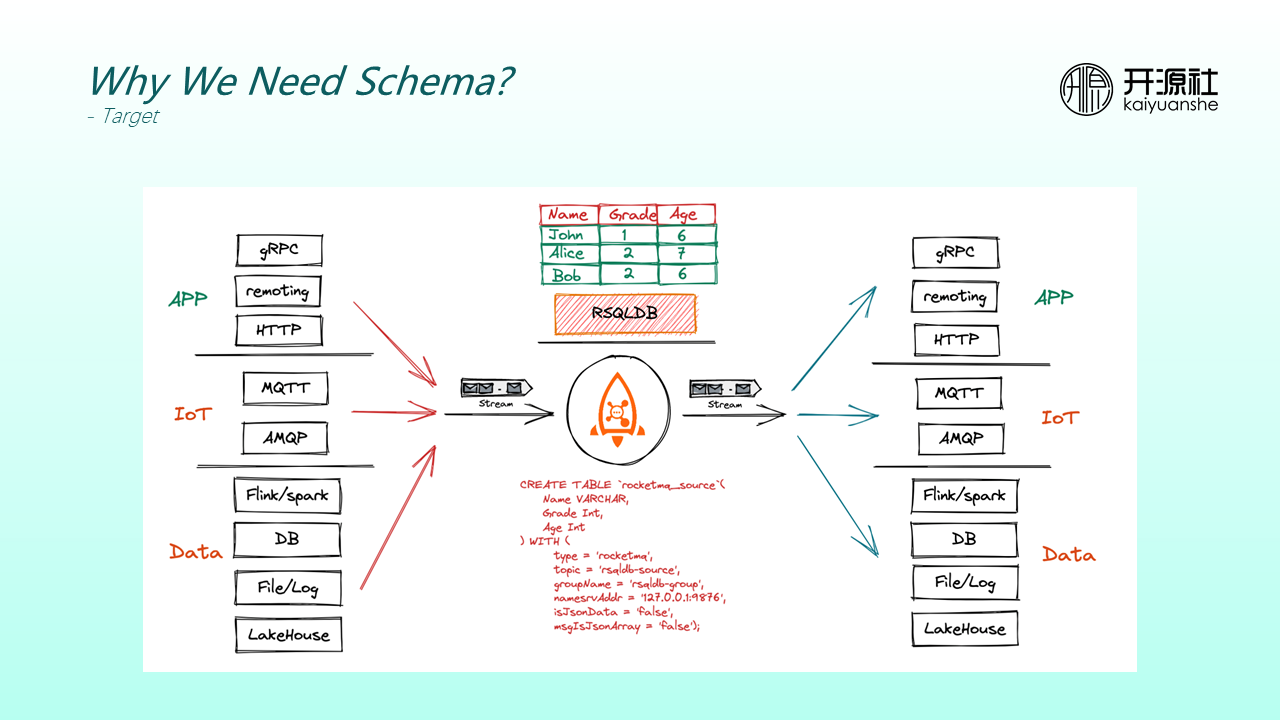

We hope RocketMQ can serve app business scenarios, IoT messaging scenarios, and big data scenarios, and become the business hub of the entire enterprise.

With RSQLDB, users can analyze RocketMQ data with SQL. RocketMQ can then serve both as a communication pipeline with streaming characteristics and as a data store, much like a database. As RocketMQ moves closer to both stream engines and database engines, data definition, standardization, and governance become extremely important.

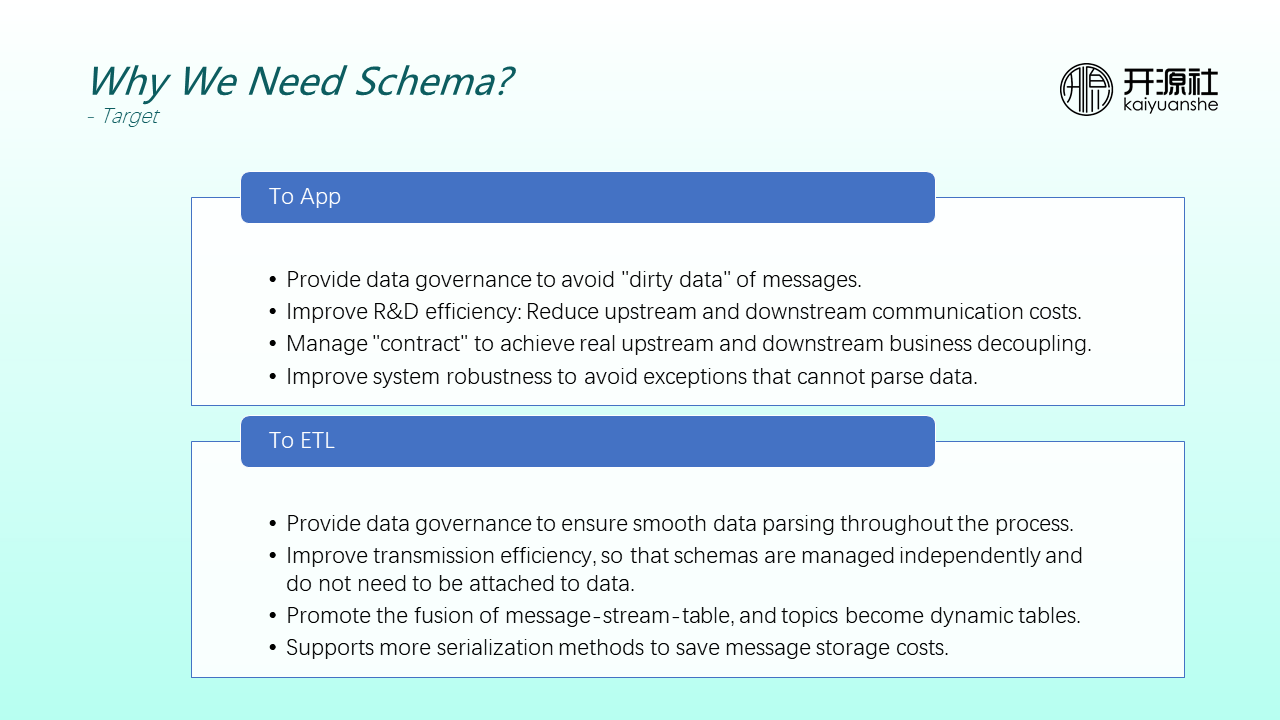

After schemas are added, we expect RocketMQ to deliver the following benefits in business messaging scenarios:

① Govern Data: Prevent dirty data by stopping producers from sending messages in irregular formats

② Improve R&D Efficiency: Reduce communication costs between upstream and downstream teams during development and joint debugging

③ Host Contracts: Truly decouple upstream and downstream businesses once the contracts between them are managed centrally

④ Improve the Robustness of the Entire System: Avoid data exceptions (such as downstream consumers suddenly failing to parse data)

We expect RocketMQ to have the following benefits in streaming scenarios:

① Govern Data: Ensure that data can be parsed smoothly along the entire link

② Improve Transfer Efficiency: Because schemas are managed independently and do not need to be attached to the data, the transmission efficiency of the entire link improves

③ Integrate Messages, Streams, and Tables: Topics can become dynamic tables

④ Support More Serialization Methods to Save Message Storage Costs: Most business scenarios use JSON, while Avro, which is common in big data scenarios, can reduce message storage costs

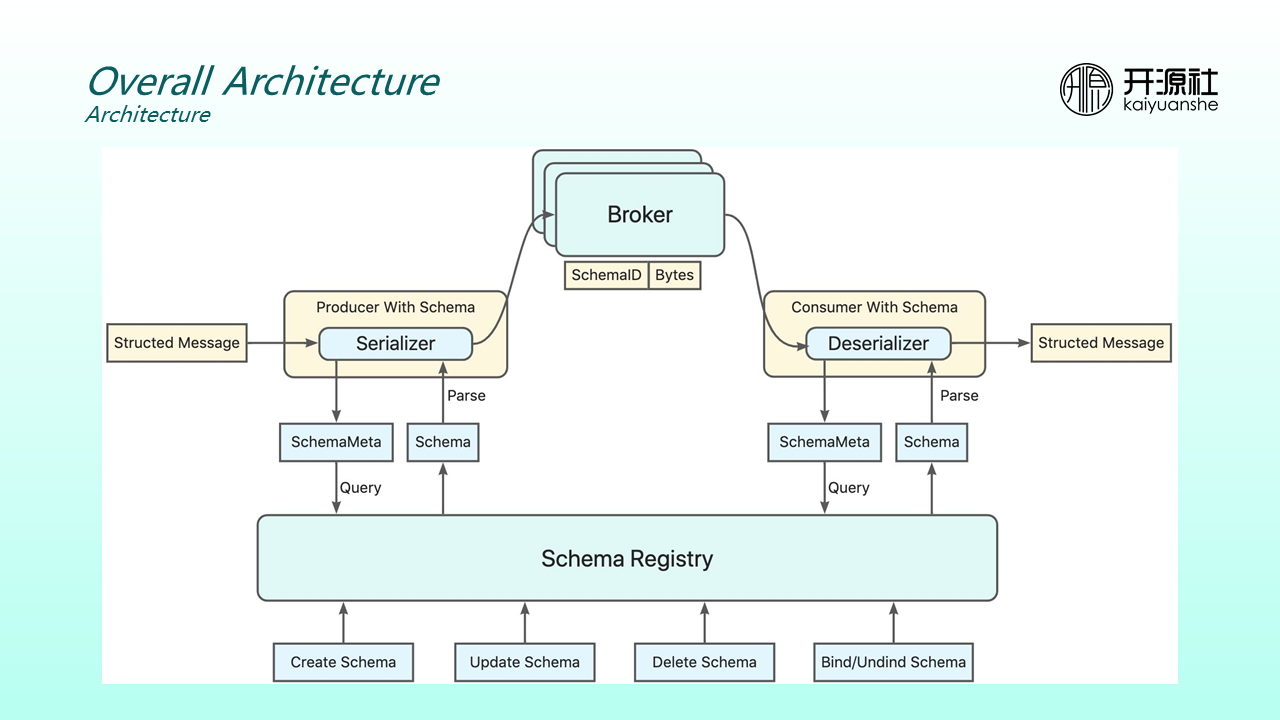

The preceding figure shows the overall architecture after Schema Registry is introduced. Schema Registry is added to the original core architecture of producers, brokers, and consumers to host the data structures of message bodies.

At the bottom layer are the schema management APIs, which create, update, delete, and bind schemas. When producers and consumers interact with these APIs, producers serialize messages according to their schemas before sending them to brokers: during serialization, a producer queries schema metadata from the registry and then parses the schema. Consumers can query a schema by ID or by topic and then deserialize the message. As a result, when sending and receiving messages, you only need to care about your structs, not about how data is serialized or deserialized.
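The lookup flow just described can be sketched with an in-memory stand-in for the registry. This is a minimal sketch, not the Schema Registry client API: `InMemoryRegistry`, `SchemaInfo`, `latestBySubject`, and `byRecordId` are hypothetical names, and the record ID is modeled as a plain `long`. The producer resolves the latest schema by topic (subject), and the consumer resolves the exact version through the record ID carried with the message.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class RegistryFlowSketch {
    // Hypothetical schema metadata: one record ID identifies exactly one schema version.
    record SchemaInfo(long recordId, String subject, String type, String idl) {}

    // Hypothetical in-memory stand-in for Schema Registry (not the real client API).
    static class InMemoryRegistry {
        private final Map<String, SchemaInfo> latest = new HashMap<>();
        private final Map<Long, SchemaInfo> versions = new HashMap<>();

        void register(SchemaInfo info) {
            latest.put(info.subject(), info);    // the most recently registered version wins
            versions.put(info.recordId(), info); // every version stays addressable by record ID
        }

        // Producer side: resolve the latest schema bound to a topic (subject).
        Optional<SchemaInfo> latestBySubject(String subject) {
            return Optional.ofNullable(latest.get(subject));
        }

        // Consumer side: resolve the exact version the producer used.
        Optional<SchemaInfo> byRecordId(long recordId) {
            return Optional.ofNullable(versions.get(recordId));
        }
    }

    public static void main(String[] args) {
        InMemoryRegistry registry = new InMemoryRegistry();
        registry.register(new SchemaInfo(1001L, "orders", "AVRO", "v1 IDL"));
        registry.register(new SchemaInfo(1002L, "orders", "AVRO", "v2 IDL"));

        // Producer: serialize with the latest version and attach its record ID to the message.
        long attached = registry.latestBySubject("orders").orElseThrow().recordId();
        // Consumer: look up that exact version before deserializing.
        SchemaInfo writerSchema = registry.byRecordId(attached).orElseThrow();
        System.out.println(attached + " -> " + writerSchema.idl());
    }
}
```

Running `main` prints `1002 -> v2 IDL`. Looking up by record ID on the consumer side, rather than by topic, means a consumer still finds the right schema even if a newer version was registered after the message was sent.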

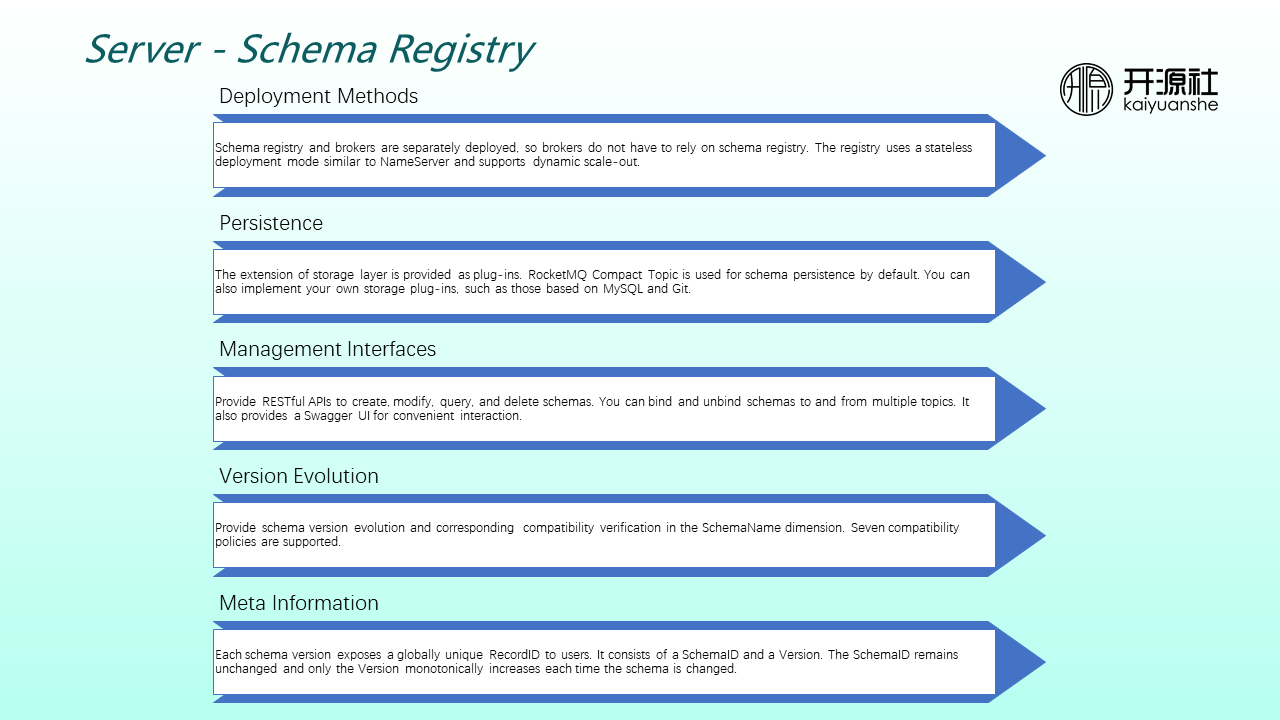

Schema Registry is deployed separately from brokers, similar to NameServer, so brokers do not depend heavily on it. It is stateless and can be scaled dynamically. For persistence, it uses the compact topic feature of RocketMQ 5.0 by default, and you can implement storage plug-ins based on MySQL or Git. The management interface is a RESTful API for creating, reading, updating, and deleting schemas, and a schema can be bound to or unbound from multiple topics.

Once started, the application provides a built-in Swagger UI for interactive use. Schemas evolve by version under each SchemaName, and each evolution goes through a compatibility check governed by one of seven compatibility policies. For metadata, each schema version exposes a globally unique RecordID; with a RecordID, you can look up exactly one schema version in the registry.

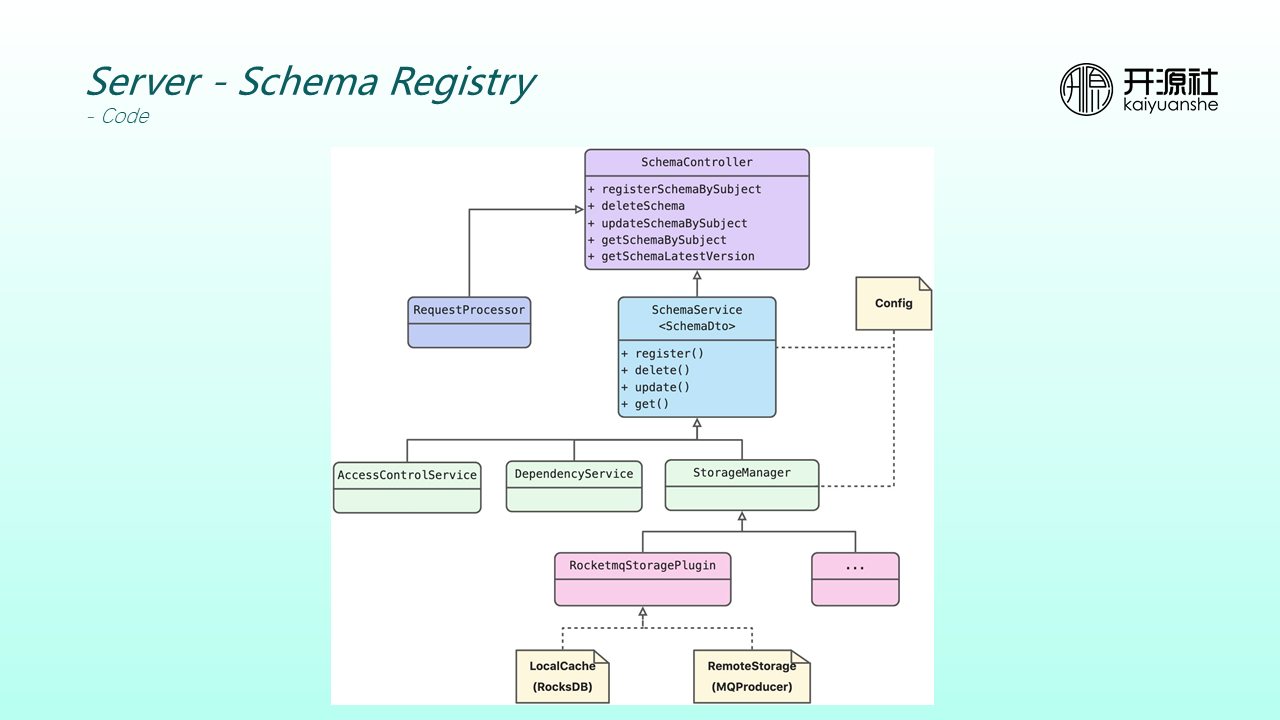

The code design is shown in the preceding figure. The registry is a Spring Boot application that exposes a RESTful interface. Below the Controller is the Service layer, which handles permission verification, JAR package management, and the StoreManager. The StoreManager includes a local cache and remote persistence.

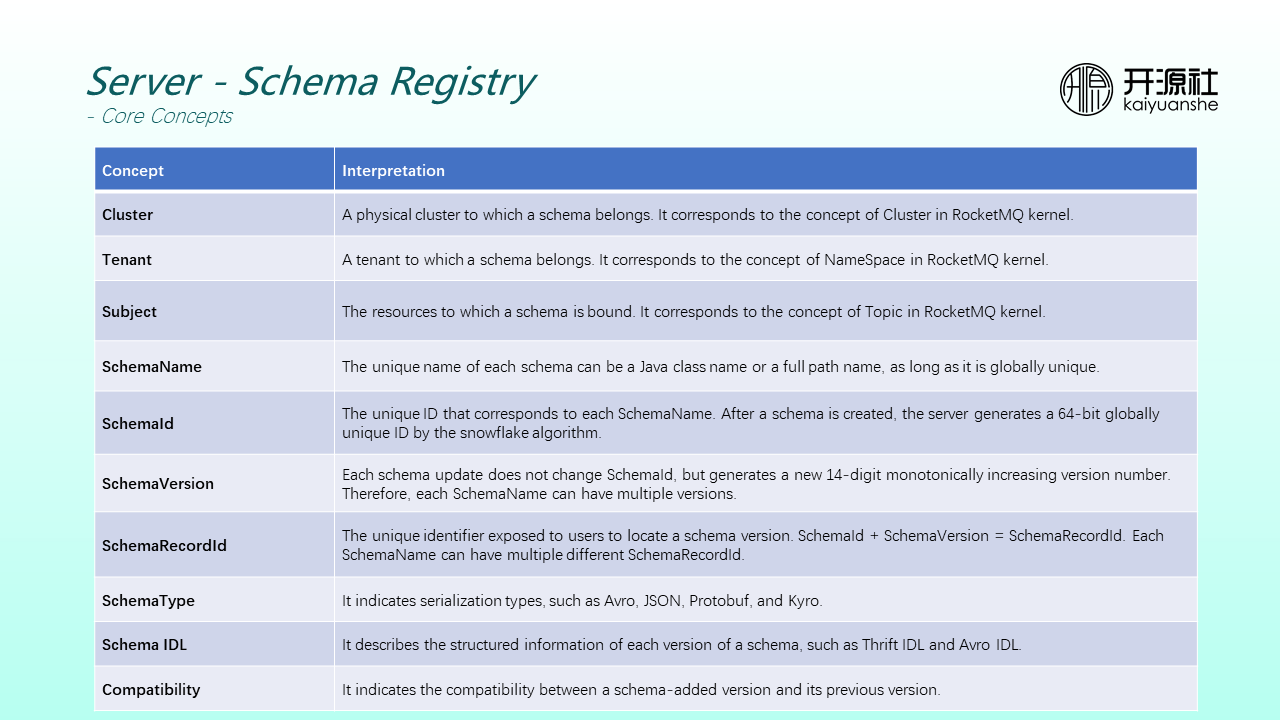

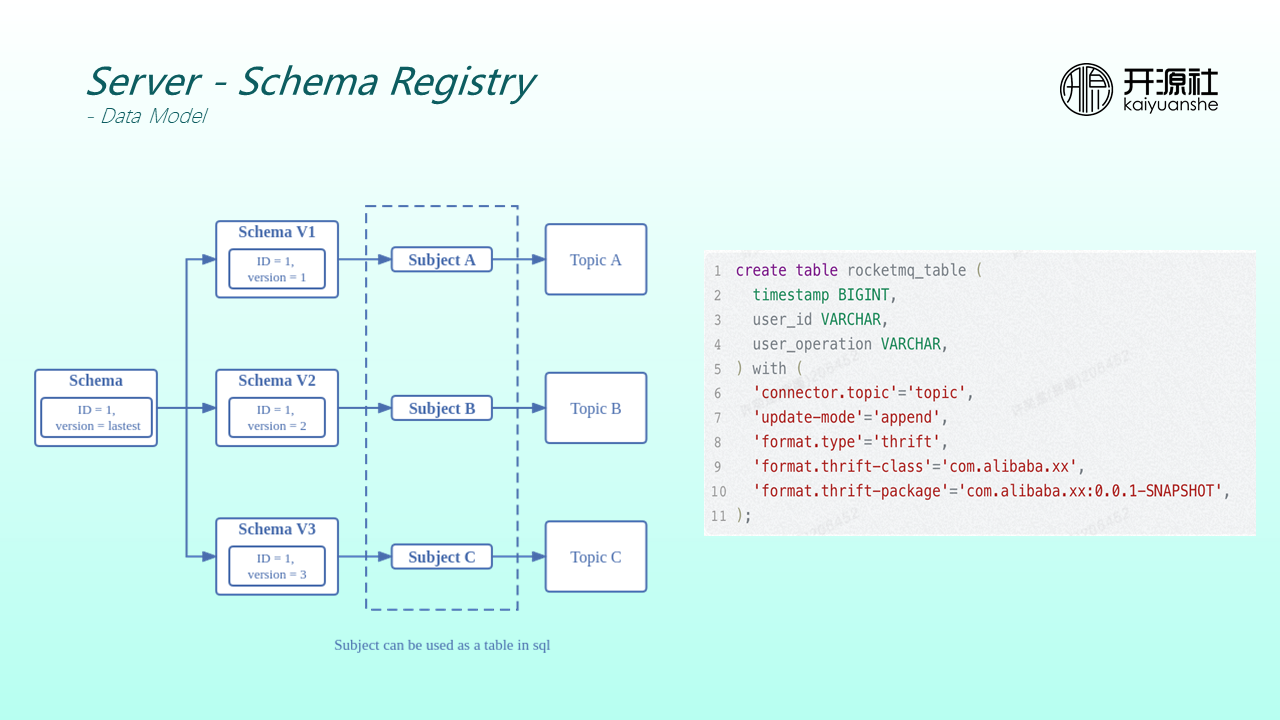

The core concepts of Schema Registry are aligned with the RocketMQ kernel: a cluster in the registry corresponds to a cluster in the kernel, a Tenant corresponds to a namespace, and a subject corresponds to a topic. Each schema has a unique SchemaName, which can be bound to subjects; to keep it globally unique, you can use the Java class name or fully qualified class name from your application. Each schema also has a unique ID generated on the server by the snowflake algorithm. Updating a schema does not change its ID but produces a new, monotonically increasing version number, so one schema can have multiple versions.
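The article only states that schema IDs come from the snowflake algorithm. The sketch below uses the widely known Twitter-style layout (41-bit millisecond timestamp, 10-bit worker ID, 12-bit per-millisecond sequence); the custom epoch and the class name `SnowflakeIdGenerator` are assumptions for illustration, not necessarily what Schema Registry uses.

```java
public class SnowflakeIdGenerator {
    private static final long EPOCH = 1_640_995_200_000L; // assumed custom epoch: 2022-01-01T00:00:00Z
    private static final long WORKER_BITS = 10L;
    private static final long SEQUENCE_BITS = 12L;
    private static final long MAX_WORKER_ID = (1L << WORKER_BITS) - 1;   // 1023
    private static final long SEQUENCE_MASK = (1L << SEQUENCE_BITS) - 1; // 4095

    private final long workerId;
    private long lastTimestamp = -1L;
    private long sequence = 0L;

    public SnowflakeIdGenerator(long workerId) {
        if (workerId < 0 || workerId > MAX_WORKER_ID) {
            throw new IllegalArgumentException("workerId out of range: " + workerId);
        }
        this.workerId = workerId;
    }

    /** Returns a unique, monotonically increasing ID for this worker. */
    public synchronized long nextId() {
        long now = System.currentTimeMillis();
        if (now < lastTimestamp) {
            throw new IllegalStateException("clock moved backwards");
        }
        if (now == lastTimestamp) {
            sequence = (sequence + 1) & SEQUENCE_MASK;
            if (sequence == 0) {
                // Sequence exhausted within this millisecond: wait for the next one.
                while ((now = System.currentTimeMillis()) <= lastTimestamp) {
                    Thread.onSpinWait();
                }
            }
        } else {
            sequence = 0L;
        }
        lastTimestamp = now;
        return ((now - EPOCH) << (WORKER_BITS + SEQUENCE_BITS))
                | (workerId << SEQUENCE_BITS)
                | sequence;
    }

    /** Extracts the worker ID bits from a generated ID. */
    public static long workerOf(long id) {
        return (id >> SEQUENCE_BITS) & MAX_WORKER_ID;
    }
}
```

Because the timestamp occupies the high bits, IDs from one server grow over time, and the worker bits keep IDs from different registry nodes apart without coordination.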

The ID and version are combined into a new concept, the record ID, which is exposed to users to uniquely locate a schema version. SchemaType covers common serialization types (such as Avro, JSON, and Protobuf), and the IDL describes the structure of the schema.
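The article does not specify how the ID and version are combined. One hypothetical encoding packs both into a single `long`, assuming schema IDs fit in 47 bits and versions in 16 bits (the sign bit is kept clear). A full 63-bit snowflake ID would not fit in this layout, so a real implementation might instead carry the two fields separately; `RecordIds` and its methods are illustrative names only.

```java
public final class RecordIds {
    // Hypothetical layout: schema ID in the upper bits, version in the lower 16 bits.
    private static final int VERSION_BITS = 16;
    private static final long VERSION_MASK = (1L << VERSION_BITS) - 1;         // 65535
    private static final long MAX_SCHEMA_ID = (1L << (63 - VERSION_BITS)) - 1; // keeps the sign bit clear

    private RecordIds() {}

    /** Combines a schema ID and a version into one record ID. */
    public static long recordId(long schemaId, int version) {
        if (schemaId < 0 || schemaId > MAX_SCHEMA_ID) {
            throw new IllegalArgumentException("schemaId does not fit in " + (63 - VERSION_BITS) + " bits");
        }
        if (version < 0 || version > VERSION_MASK) {
            throw new IllegalArgumentException("version does not fit in " + VERSION_BITS + " bits");
        }
        return (schemaId << VERSION_BITS) | version;
    }

    /** Recovers the schema ID, which stays the same across versions. */
    public static long schemaIdOf(long recordId) {
        return recordId >>> VERSION_BITS;
    }

    /** Recovers the version number. */
    public static int versionOf(long recordId) {
        return (int) (recordId & VERSION_MASK);
    }
}
```

With the version in the low bits, the schema ID can be recovered from any record ID, and record IDs of the same schema sort by version.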

Each schema has a fixed ID, while its versions iterate, for example from version 1 to version 2 to version 3, and each version can be bound to different subjects. A subject can be understood as a Flink table. For example, the figure on the right uses Flink SQL to create a table. First, a RocketMQ topic is created and registered with NameServer; then, because the table needs a structure, a schema must be created and registered with the corresponding subject. Once schemas are introduced, RocketMQ can therefore integrate seamlessly with data engines such as Flink.

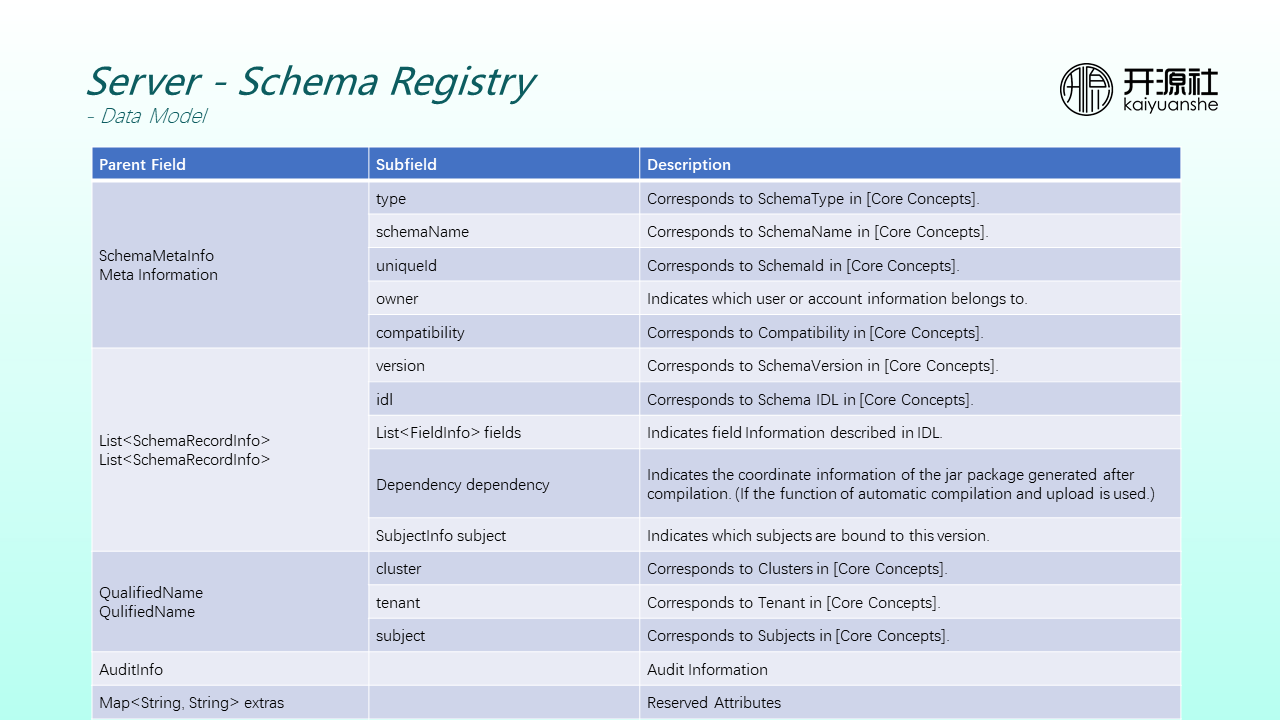

Schemas store the following types of information:

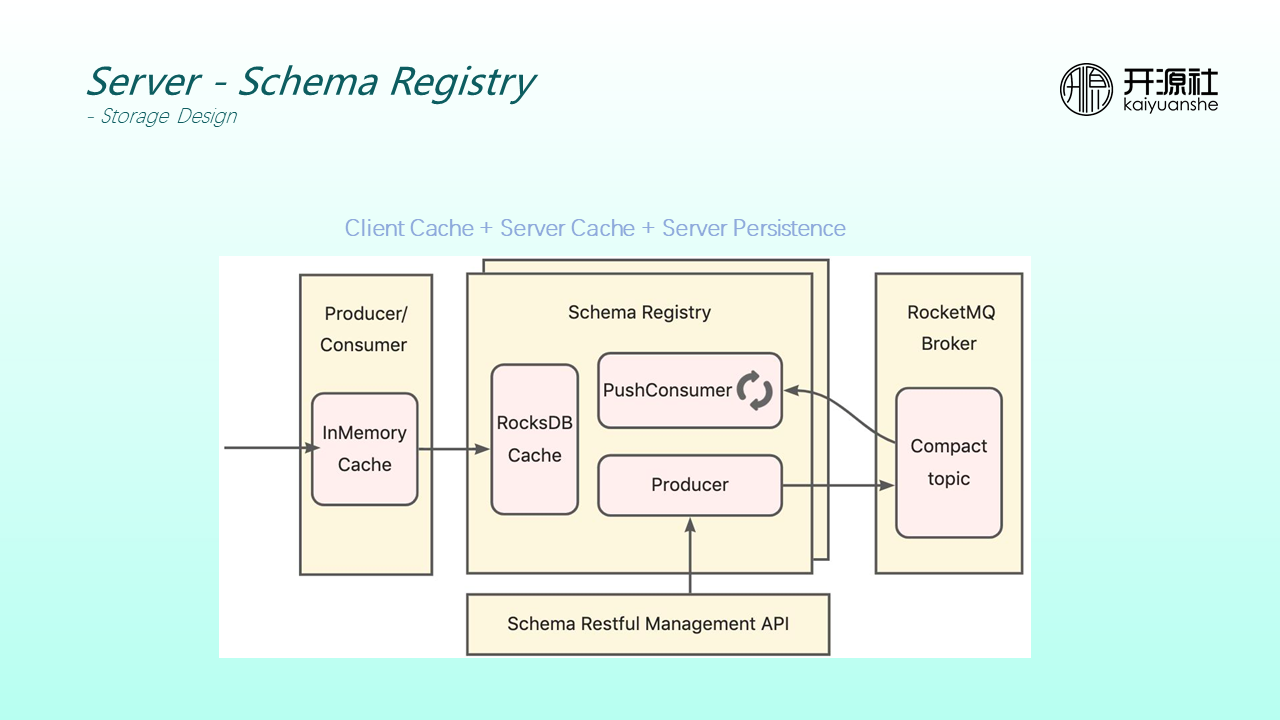

The specific storage design is divided into the following three layers:

Client Cache: If producers and consumers had to query the registry every time they sent or received a message, performance and stability would suffer. Therefore, RocketMQ adds a cache layer on the client. Because schemas are updated relatively infrequently, this cache can serve most send and receive requests.

Server Cache: The server uses RocksDB as a cache layer. Because RocksDB persists data locally, service restarts and upgrades do not lose the cached data.

Server-Side Persistence: Remote storage is implemented as a plug-in. By default, the compact topic feature of RocketMQ 5.0 provides KV storage.

The remote persistence layer and the local cache are kept in sync by a PushConsumer in the registry that listens for changes.
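The three layers can be sketched as stacked read-through caches. This is a simplified in-memory model: both cache layers are `ConcurrentHashMap` instances (the real server cache is RocksDB), the remote store is a plain map, and `CacheLayer` and `onUpdate` are hypothetical names. `onUpdate` stands in for changes delivered by the registry's PushConsumer.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

public class LayeredSchemaLookup {
    /**
     * One cache layer: answer from a local map, otherwise ask the next layer
     * and remember the result. Misses (empty results) are not cached.
     */
    static final class CacheLayer<K, V> implements Function<K, Optional<V>> {
        private final Map<K, V> cache = new ConcurrentHashMap<>();
        private final Function<K, Optional<V>> next;

        CacheLayer(Function<K, Optional<V>> next) {
            this.next = next;
        }

        @Override
        public Optional<V> apply(K key) {
            V hit = cache.get(key);
            if (hit != null) {
                return Optional.of(hit);
            }
            Optional<V> loaded = next.apply(key);
            loaded.ifPresent(value -> cache.put(key, value));
            return loaded;
        }

        /** Applies a change pushed from the persistence layer (stand-in for the PushConsumer). */
        void onUpdate(K key, V value) {
            cache.put(key, value);
        }
    }

    public static void main(String[] args) {
        Map<String, String> remoteStore = new ConcurrentHashMap<>(Map.of("orders", "v1 IDL"));
        int[] remoteCalls = {0};

        // Server cache in front of remote persistence.
        CacheLayer<String, String> serverCache = new CacheLayer<String, String>(key -> {
            remoteCalls[0]++;
            return Optional.ofNullable(remoteStore.get(key));
        });
        // Client cache in front of the server.
        CacheLayer<String, String> clientCache = new CacheLayer<String, String>(serverCache);

        clientCache.apply("orders");
        clientCache.apply("orders"); // served by the client cache
        System.out.println("remote calls: " + remoteCalls[0]);
    }
}
```

Running `main` prints `remote calls: 1`, since the second lookup never leaves the client. Because schemas change rarely, most reads stop at the first layer, which is the point the article makes about the client cache.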

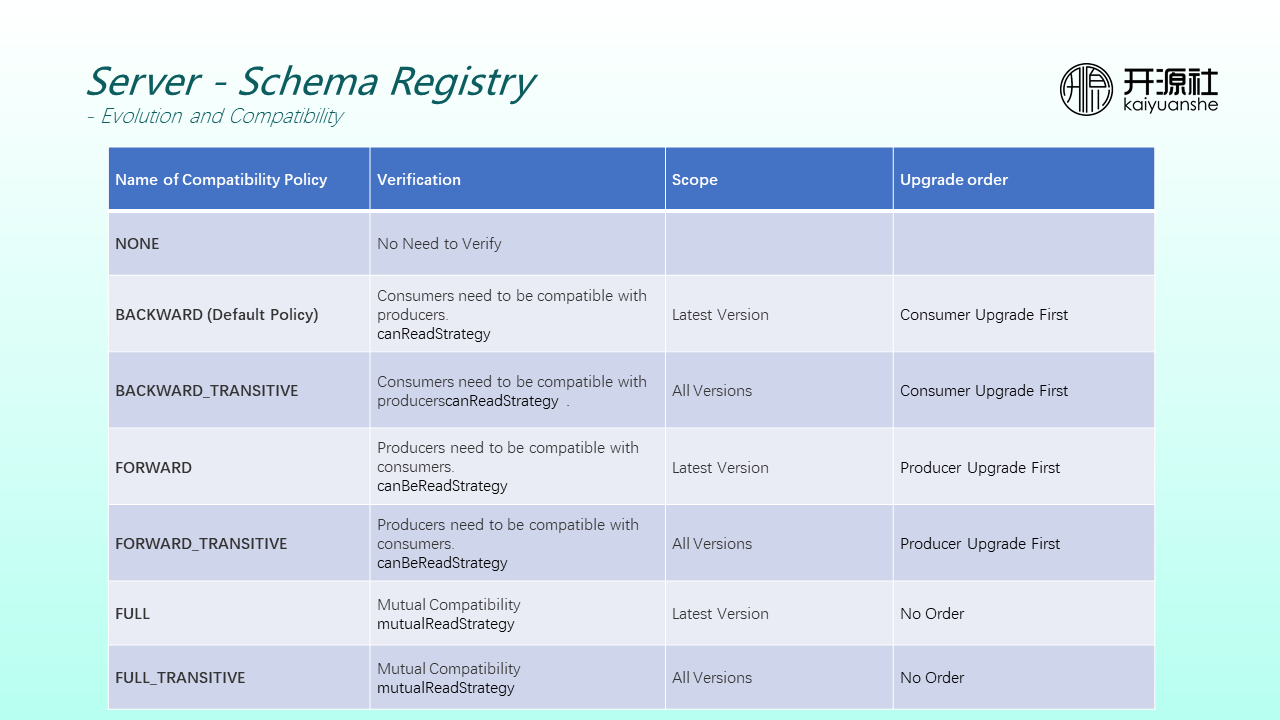

Schema Registry currently supports seven compatibility policies. The check verifies that consumers are compatible with producers: after a schema evolves, consumers must be upgraded first, so that newer consumers can read data from older producers.

If the compatibility policy is BACKWARD_TRANSITIVE, consumers using the new schema must be able to read data produced with every earlier version, not just the previous one.
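The policies differ in which earlier versions a new schema is checked against, and in which direction. The article names only BACKWARD_TRANSITIVE; the seven names below assume the set commonly used by schema registries (NONE plus BACKWARD, FORWARD, FULL, and their transitive variants). The sketch only computes which reader/writer pairs must be checked; the actual schema comparison (for example, Avro schema resolution) is out of scope, and `CompatibilityScope`, `Check`, and `checksFor` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

public class CompatibilityScope {
    enum Policy { NONE, BACKWARD, BACKWARD_TRANSITIVE, FORWARD, FORWARD_TRANSITIVE, FULL, FULL_TRANSITIVE }

    /** One check: can a reader using readerVersion decode data written with writerVersion? */
    record Check(int readerVersion, int writerVersion) {}

    /** existing: previously registered versions, in ascending order. */
    static List<Check> checksFor(Policy policy, int newVersion, List<Integer> existing) {
        List<Check> checks = new ArrayList<>();
        if (policy == Policy.NONE || existing.isEmpty()) {
            return checks;
        }
        boolean transitive = switch (policy) {
            case BACKWARD_TRANSITIVE, FORWARD_TRANSITIVE, FULL_TRANSITIVE -> true;
            default -> false;
        };
        boolean backward = switch (policy) {
            case BACKWARD, BACKWARD_TRANSITIVE, FULL, FULL_TRANSITIVE -> true;
            default -> false;
        };
        boolean forward = switch (policy) {
            case FORWARD, FORWARD_TRANSITIVE, FULL, FULL_TRANSITIVE -> true;
            default -> false;
        };
        // Non-transitive policies compare only against the latest existing version.
        List<Integer> targets = transitive ? existing : List.of(existing.get(existing.size() - 1));
        for (int old : targets) {
            if (backward) checks.add(new Check(newVersion, old)); // new consumers read old producers' data
            if (forward) checks.add(new Check(old, newVersion));  // old consumers read new producers' data
        }
        return checks;
    }
}
```

For example, registering version 4 under BACKWARD yields a single check, reader 4 against writer 3, while BACKWARD_TRANSITIVE also checks writers 1 and 2, which is what "all versions of producers are compatible" requires.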

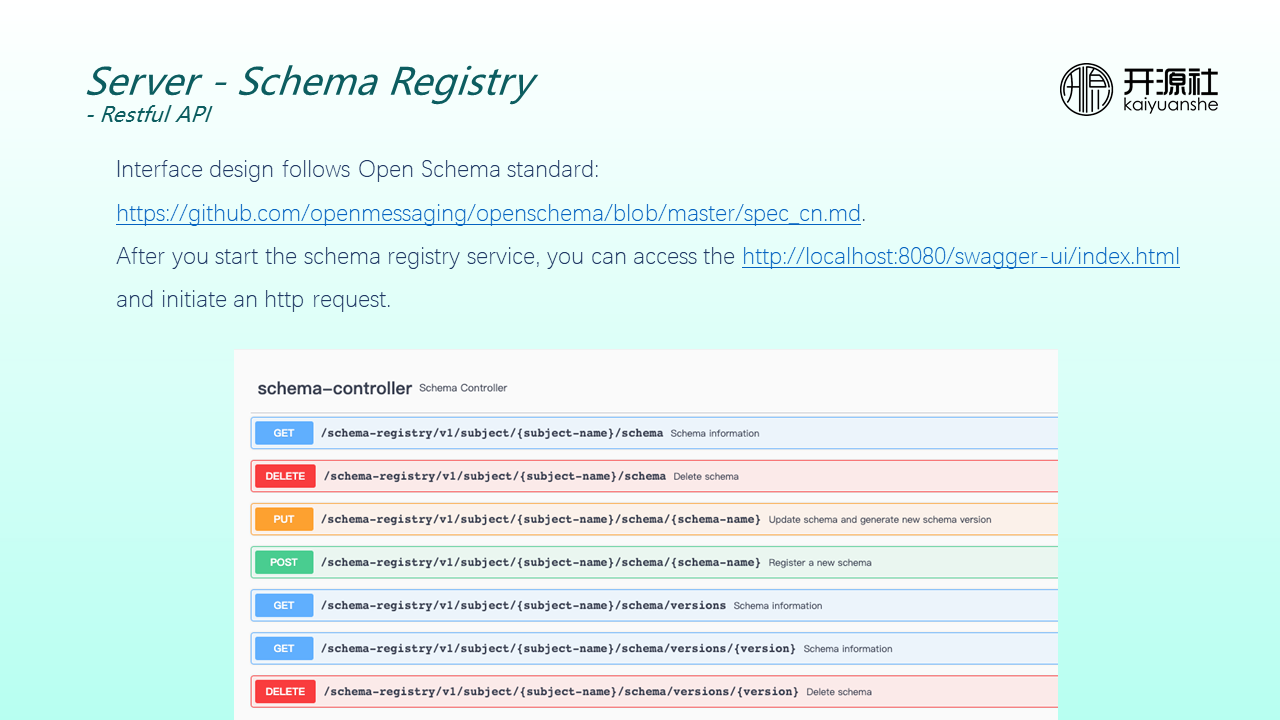

The interface design complies with the Open Schema standard. After the registry service starts, you can open the Swagger UI page on localhost to send HTTP requests and manage schemas yourself.
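Besides the Swagger UI, the same RESTful API can be called from code. The sketch below builds, but does not send, a registration request with the JDK's `java.net.http.HttpRequest`. The base URL, the `/subjects/{subject}/schemas` path, and the `schemaIdl` JSON field are hypothetical placeholders; check the Swagger UI of your deployment for the real endpoints and payload.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

public class RegisterSchemaRequest {
    /**
     * Builds a POST that registers a schema for a subject.
     * Path layout and JSON field names are hypothetical placeholders.
     * The subject is not URL-encoded, so keep it to simple names in this sketch.
     */
    static HttpRequest build(String baseUrl, String subject, String schemaIdl) {
        String body = "{\"schemaIdl\":" + jsonString(schemaIdl) + "}";
        return HttpRequest.newBuilder(URI.create(baseUrl + "/subjects/" + subject + "/schemas"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body, StandardCharsets.UTF_8))
                .build();
    }

    /** Minimal JSON string escaping for backslashes, quotes, and newlines (enough for this sketch). */
    static String jsonString(String s) {
        String escaped = s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
        return "\"" + escaped + "\"";
    }
}
```

To actually send it, pass the request to `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())` against a running registry, for example at an assumed `http://localhost:8080`.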

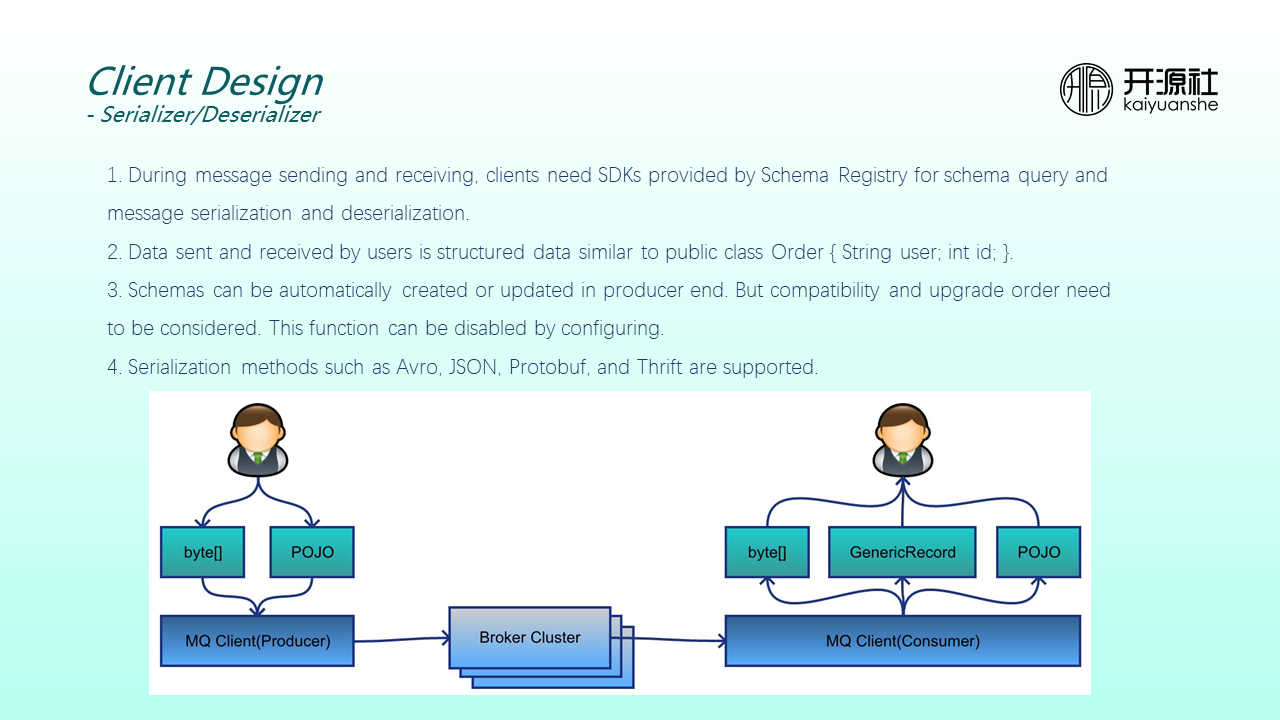

For sending and receiving messages, MQ clients need SDKs for schema queries and for message serialization and deserialization.

As shown in the figure above, clients previously passed byte arrays when sending and receiving messages. Now, we want both producers and consumers to work with objects. If a consumer does not know the class of an object, it can read the message as a generic type (such as a GenericRecord). In either case, users send and receive structured data, for example instances of a class like public class Order.

Schemas can be created or updated automatically on the producer side, and mainstream serialization methods (such as Avro and JSON) are supported.

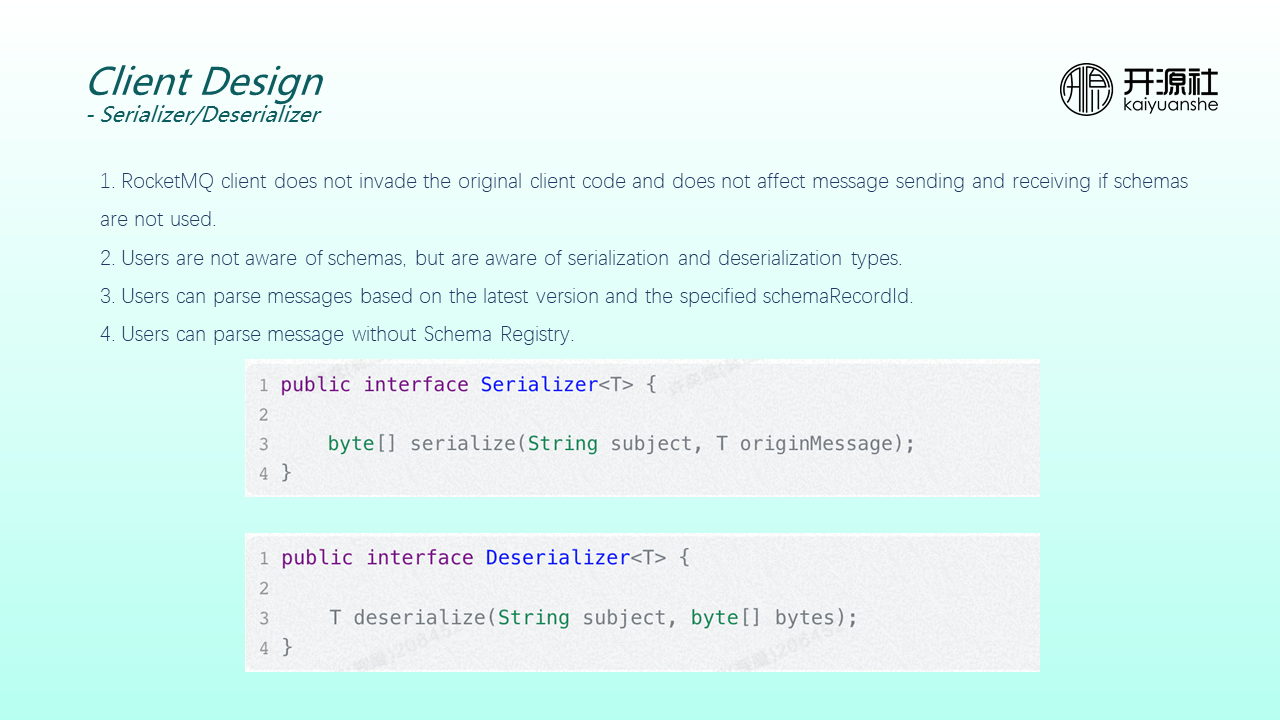

The design principle is not to intrude on the original client code. If schemas are not used, sending and receiving messages are unaffected, because clients are aware only of the serialization and deserialization types, not of the schemas themselves. In addition, the client can parse data either with the latest schema version or with a specified ID. Message parsing without Schema Registry is also supported, to meet the needs of lightweight scenarios such as streams.

The preceding code shows the core schema serialization and deserialization APIs. Their parameters are simple: pass in a topic and the original message object, and the object is serialized into the message body format. Deserialization works the same way: pass in a subject and the original byte array, and the parsed object is returned to the client.

The preceding figure shows a sample producer that integrates schemas. To create the producer, you specify the registry URL and the serialization type, and you then send original objects instead of byte arrays.
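The serialize/deserialize API shape described above (serialize with a topic, deserialize with a subject) can be sketched as follows. The framing, an 8-byte record ID followed by the payload, is an assumption chosen to illustrate why schemas need not travel with each message; the hand-rolled binary payload stands in for a real Avro or JSON serializer, and `RecordIdFramedSerde`, `Order`, and `Decoded` are hypothetical names, not the RocketMQ schema SDK.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Map;

public class RecordIdFramedSerde {
    record Order(String orderId, long amountCents) {}

    record Decoded(long recordId, Order order) {}

    // Hypothetical mapping from topic (subject) to the record ID of its latest schema version.
    private final Map<String, Long> latestRecordIdByTopic;

    RecordIdFramedSerde(Map<String, Long> latestRecordIdByTopic) {
        this.latestRecordIdByTopic = latestRecordIdByTopic;
    }

    /** serialize(topic, object): 8-byte record ID header, then the encoded fields. */
    byte[] serialize(String topic, Order order) {
        Long recordId = latestRecordIdByTopic.get(topic);
        if (recordId == null) {
            throw new IllegalStateException("no schema bound to topic " + topic);
        }
        byte[] id = order.orderId().getBytes(StandardCharsets.UTF_8);
        ByteBuffer buf = ByteBuffer.allocate(Long.BYTES + Integer.BYTES + id.length + Long.BYTES);
        buf.putLong(recordId).putInt(id.length).put(id).putLong(order.amountCents());
        return buf.array();
    }

    /**
     * deserialize(subject, bytes): read the record ID, then decode the fields.
     * The subject is unused here; a real deserializer would use it with the record ID
     * to fetch the writer schema from the registry.
     */
    Decoded deserialize(String subject, byte[] bytes) {
        ByteBuffer buf = ByteBuffer.wrap(bytes);
        long recordId = buf.getLong();
        byte[] id = new byte[buf.getInt()];
        buf.get(id);
        return new Decoded(recordId, new Order(new String(id, StandardCharsets.UTF_8), buf.getLong()));
    }
}
```

The header costs 8 bytes per message regardless of how large the schema IDL is, which is the transmission-efficiency argument for managing schemas in the registry instead of attaching them to data.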

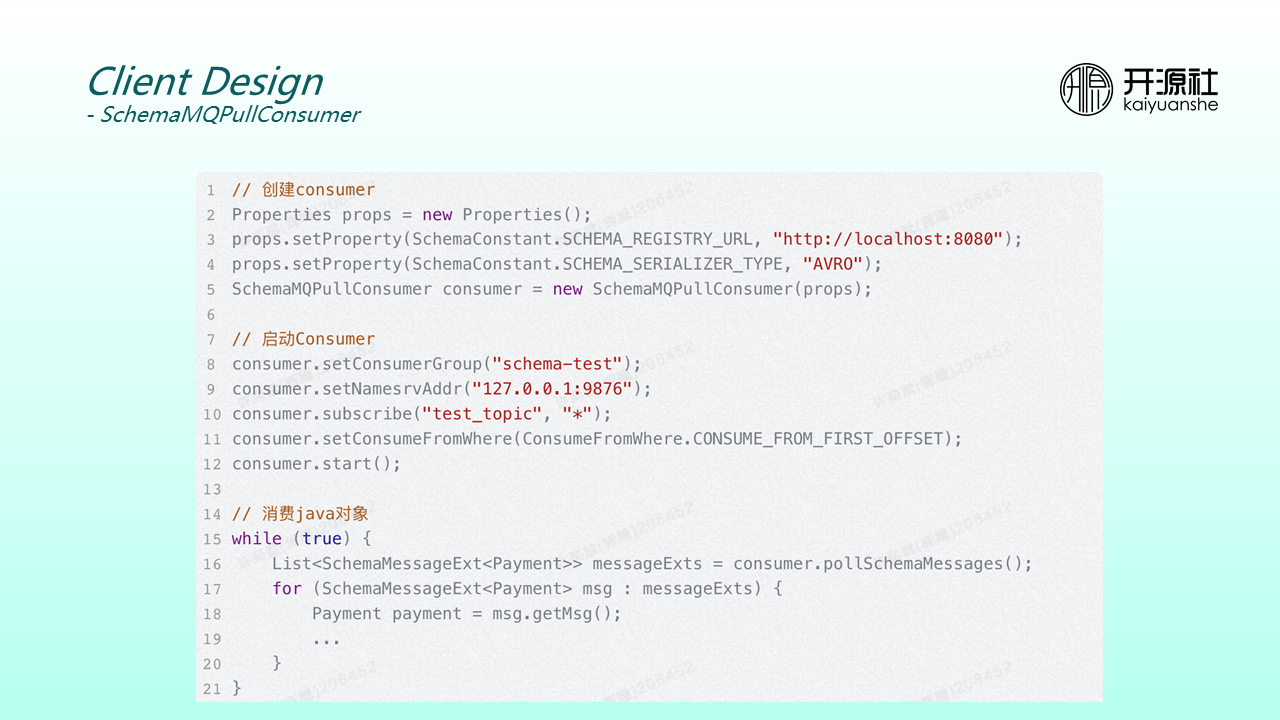

When creating consumers, you must specify the registry URL and serialization types and then use the getMessage method to obtain generic or actual objects.

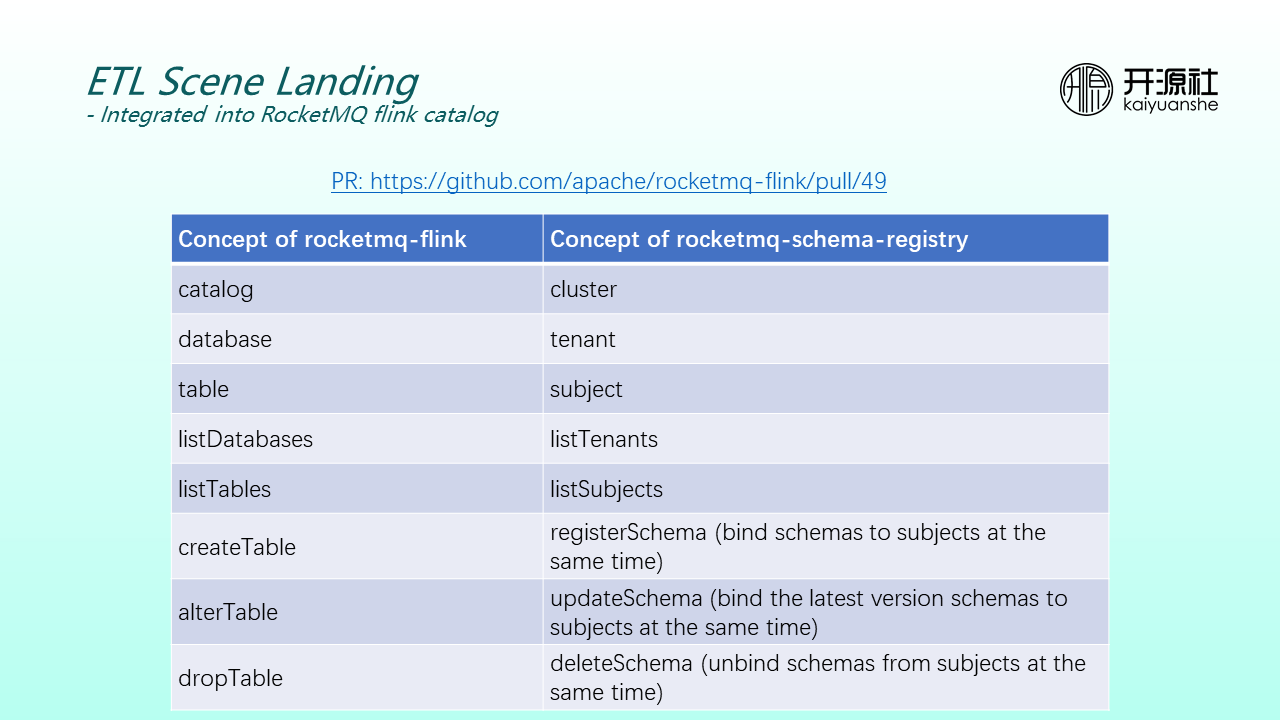

The RocketMQ Flink catalog describes metadata such as the tables and databases used by RocketMQ Flink. Therefore, when the catalog is implemented on top of Schema Registry, some concepts align naturally: catalogs correspond to clusters, databases correspond to tenants, and tables correspond to subjects.

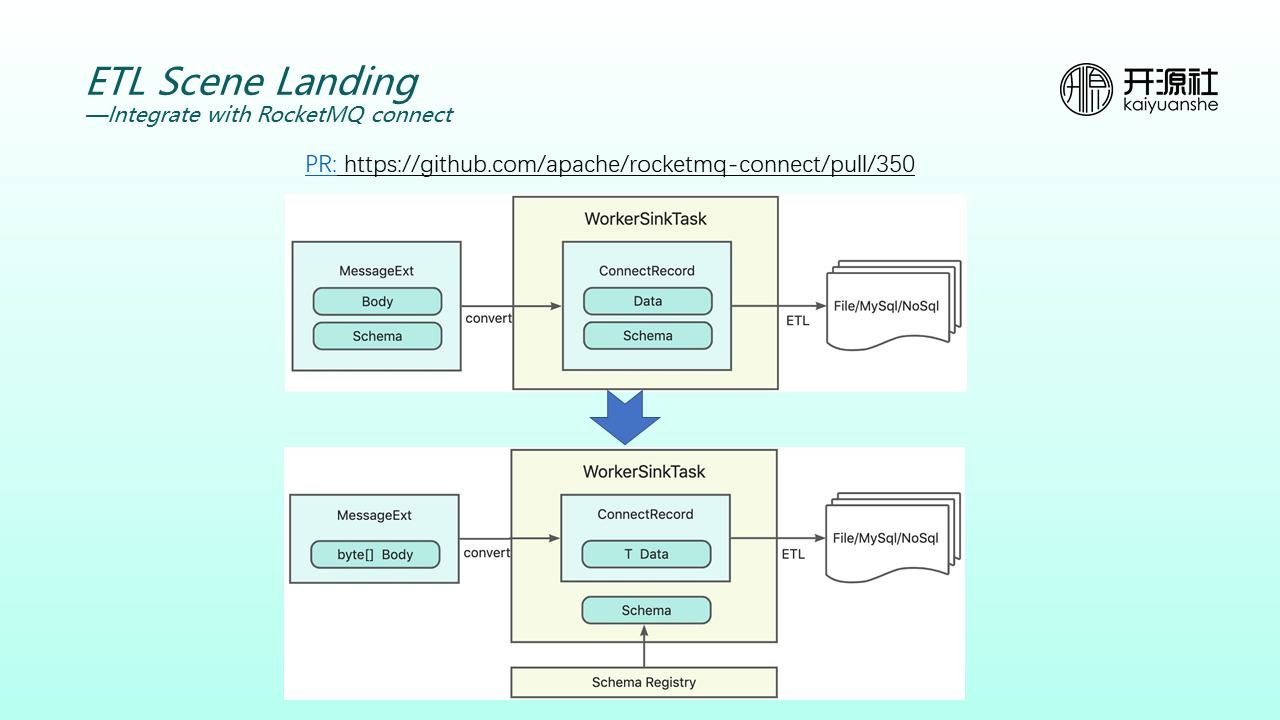

When converting data between heterogeneous data sources, an important step is converting their schemas, which is handled by converters. A ConnectRecord carries data and schema together. If converters instead rely on the registry as a third party to manage schemas, a ConnectRecord no longer needs to carry the original data and schema together, which improves transmission efficiency. This is the motivation for integrating RocketMQ Connect with Schema Registry.

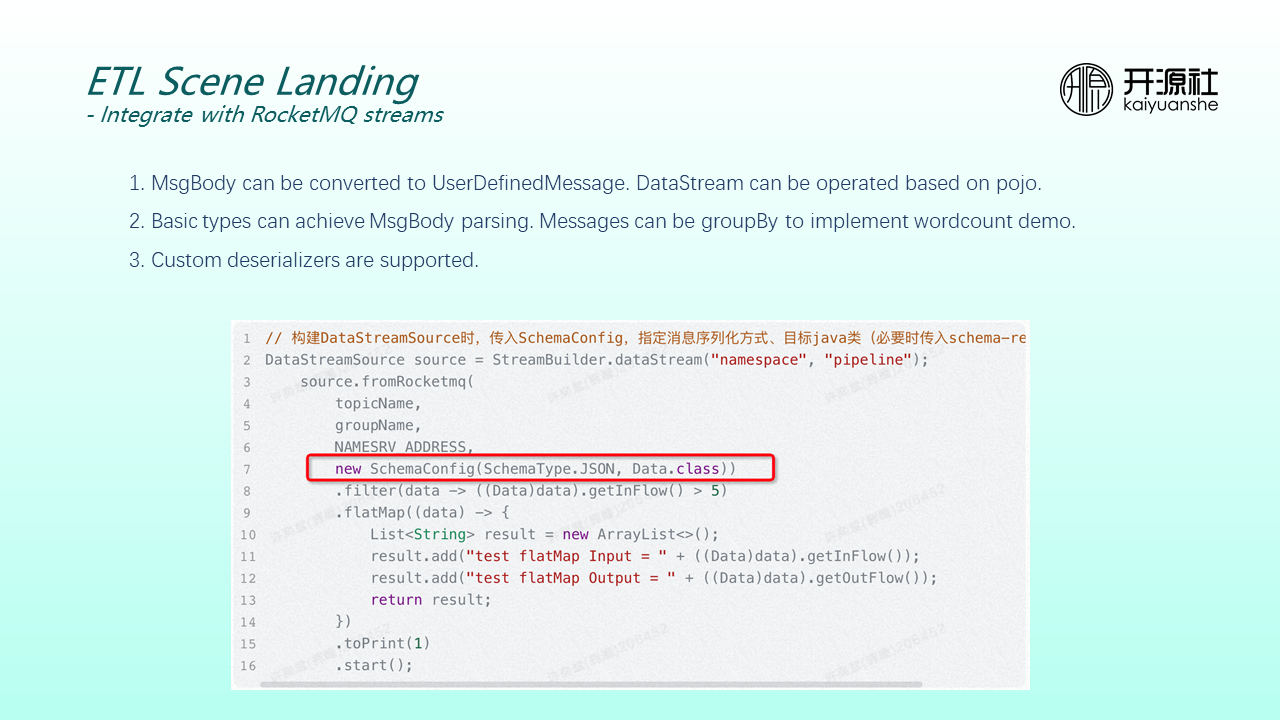

The integration with RocketMQ Streams aims to make the RocketMQ Streams APIs more user-friendly. Without schema integration, you need to convert data into JSON. With it, you can work with objects directly during stream analysis, which is closer to how Flink and similar stream processing APIs are used.

In the preceding code, a schemaConfig parameter is added to configure schemas, including the serialization type and the target Java class. Subsequent filter, map, and window operators can then operate directly on objects.

In addition, the integrated Streams supports basic type parsing, group-by operations based on message content, and custom deserialization optimizers.

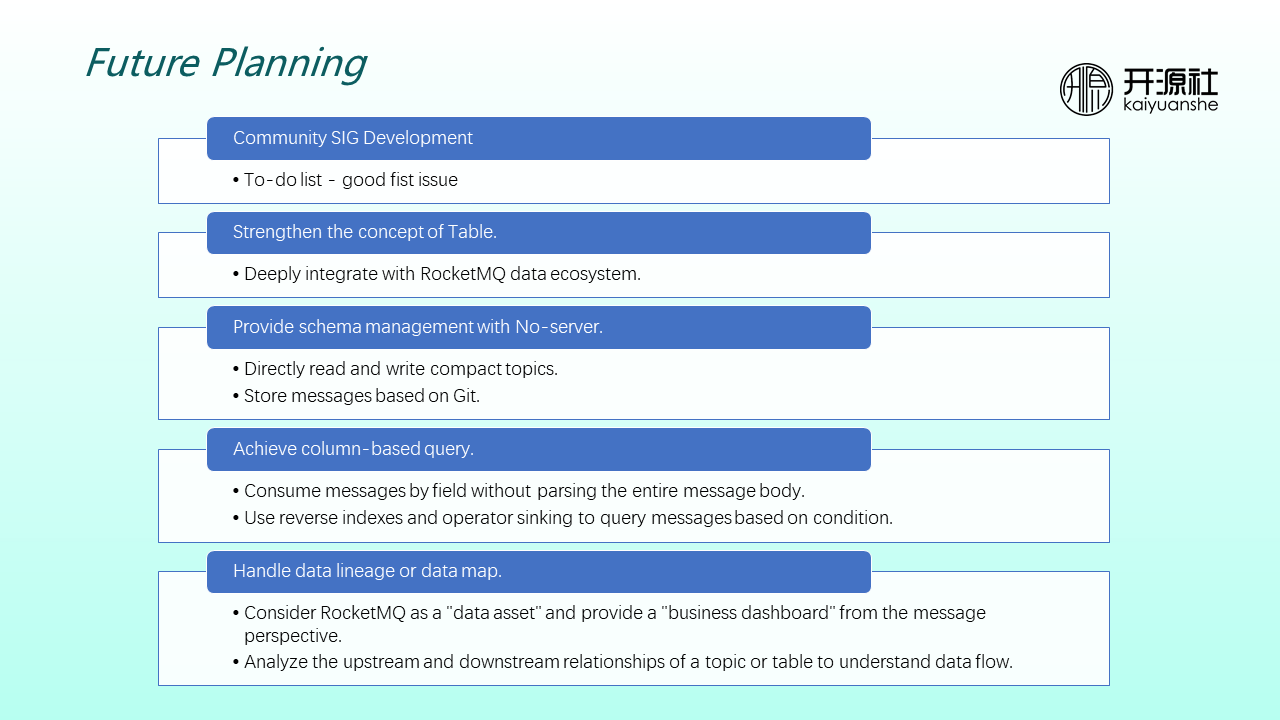

In the future, we will continue to make improvements in the areas shown in the preceding figure.
