By Shawn Jiang, CIO of Alibaba Cloud Intelligence Group and Head of aliyun.com

Large language models, or LLMs, are developing rapidly. However, effectively implementing them in an enterprise setting comes with many challenges and pitfalls. This article is adapted from a talk by Shawn Jiang, CIO of Alibaba Cloud Intelligence Group and Head of aliyun.com, at AICon Shenzhen 2025, titled "Implementing AI Applications at Alibaba Cloud." In his talk, he shares a systematic approach to AI adoption, based on his team's experience with AI staff projects across various use cases like documentation development, translation, customer service, telemarketing, contract review, BI, employee services, and R&D. This approach covers everything from organizational challenges and identifying business opportunities, to defining AI applications, designing operational metrics, and finally, to the engineering and implementation of these AI applications.

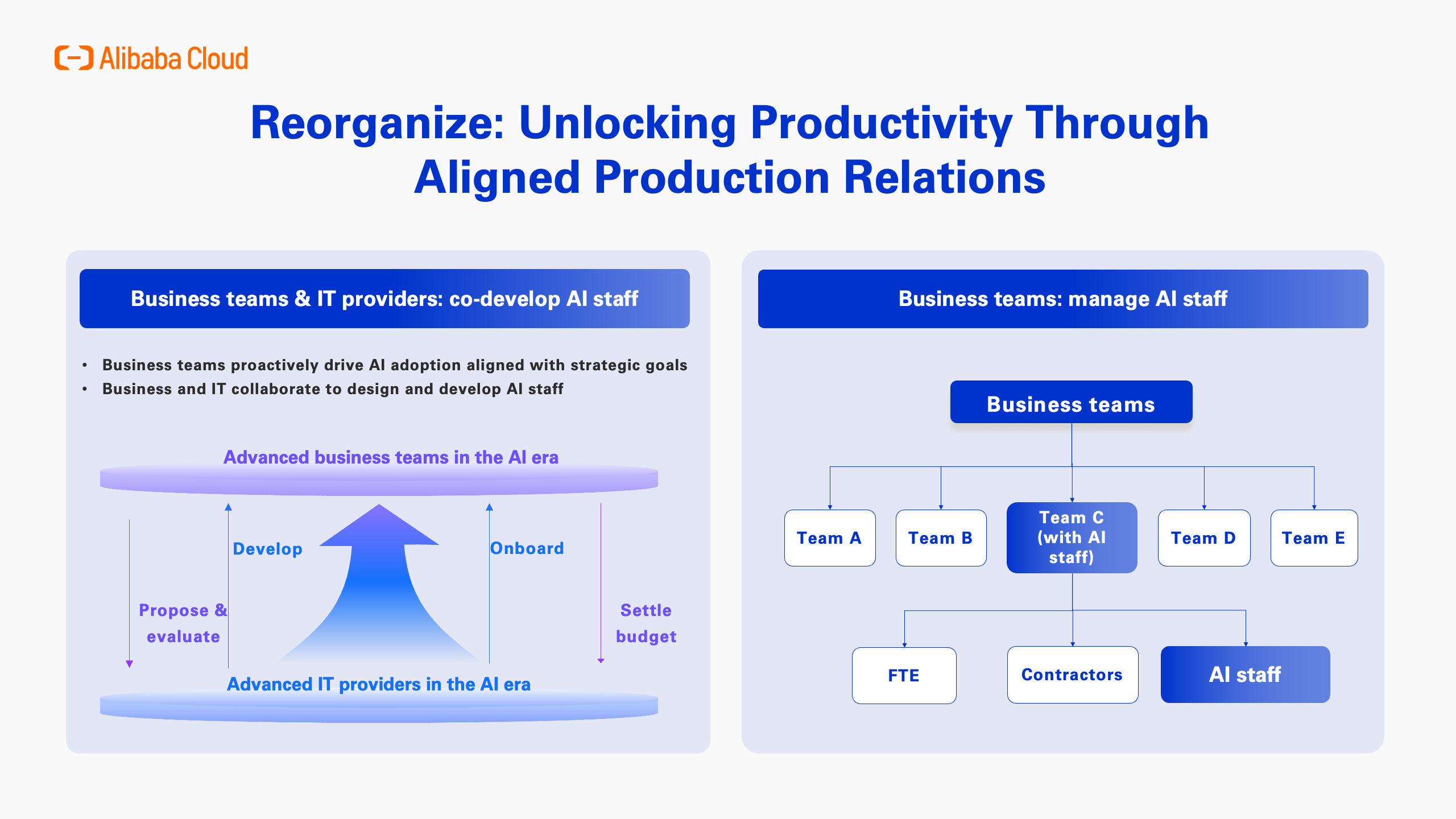

The talk addresses the challenge of varying levels of AI knowledge and skills within a company. It explores how to quickly transform the organization, adjust production relations, and foster a shared culture that supports AI adoption. It also delves deep into the primary contradiction today: the expectation gap between business and IT departments regarding AI projects. Shawn shares practical solutions for coordinating with business units, motivating subject matter experts to help with data preparation and performance evaluation, and aligning expectations for AI initiatives across the enterprise.

The following is a transcript of the talk, edited by InfoQ for clarity while preserving the original meaning.

This is my first public speech in the three years since becoming the CIO of Alibaba Cloud. In this presentation, I'll be sharing a condensed look at the key lessons and experiences my team and I have gained while driving digitalization and intelligent transformation at Alibaba Cloud over these past three years.

Before becoming CIO, I was in charge of R&D for the core systems of Alibaba Cloud's Apsara OS. My talks back then focused on Apsara and Alibaba Cloud's products, and I was speaking from the perspective of someone delivering a service. But today, in my first speech as CIO, I'm taking a different angle—that of a project leader. I'll be sharing our hands-on experience and what we've learned about driving digitalization and intelligent transformation within our company.

For the past two or three years, my team and I have been dedicated to implementing LLMs in various enterprise scenarios, and I've had a lot of thoughts throughout this journey. I'd like to start with some of my observations and reflections from this time.

We often wonder: When a person or a company becomes highly successful, is it because of the times we live in, or is it a result of their own efforts? I believe it's mainly the times. I often say that we've gotten to where we are today largely because we've been riding in a great "lift." We caught the "China" lift, the "Chinese Internet" lift, and the lift of Alibaba, where I work. The lifts were going up so fast that I naturally reached new levels along with them.

To put it another way: Are you doing push-ups inside an ascending lift, or on solid ground? The heights you reach will vastly differ. Personal effort is certainly important, but the platform you're on matters more. In this era, I believe AI is the greatest lift. For both organizations and individuals, whether or not you get on this AI lift will determine how high you'll make it in the future.

A research report from ARK Invest predicts that by 2030, computing performance will have increased a thousand times over today's levels. Jensen Huang once made an even bolder prediction: that AI computing power will increase a million-fold in the next 10 years. A million times—what does that even mean? Before the age of AI, we used to talk about Moore's Law, where computing performance roughly doubled every 18 months. But in the AI era, the pace of technological development has accelerated dramatically. If we don't get on this high-speed AI lift soon, we'll almost certainly be left behind by the times. That's why we must act quickly. We need to get on that lift and do our push-ups while it's ascending, not while we're still on the ground.
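A rough back-of-the-envelope comparison makes the gap concrete. It assumes smooth exponential growth and takes the figures above at face value (doubling every 18 months for Moore's Law, a million-fold gain over 10 years, or 120 months, for AI computing power):

$$
2^{120/18} \approx 2^{6.67} \approx 100
\qquad \text{versus} \qquad
10^{6} \approx 2^{19.9}
$$

In other words, ten years of Moore's Law yields roughly a hundred-fold gain, while a million-fold gain over the same ten years would require doubling about every $120 / 19.9 \approx 6$ months.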

We're seeing that both companies and individuals are beginning to grasp the importance of AI—even if my lift analogy is a bit blunt. This realization has made many companies, including their CEOs and business departments, quite anxious. From my perspective, the biggest difference between this tech revolution and previous ones is this: Throughout the history of IT, from the PC Internet to the mobile Internet era, technology adoption in enterprises was always a gradual, step-by-step process. Back then, CEOs could see the industry hype and vendor marketing, but they remained quite calm. They felt they could take their time.

This time around, however, it's completely different. I've found that, for the first time, CEOs and business departments are getting even more hyped up than the IT teams and vendors. So, you could say the biggest contradiction in companies today is the gap between the "mind-blowing" and "magical" AI that business departments see on social media and in PR, and the uneven and insufficient progress being made by the IT department, or the CIO. This disconnect is becoming more pronounced than ever.

Based on my observations within Alibaba Group and my conversations with over 30 CIOs, I've noticed a common trend in many companies: There's a flood of new ideas, countless demos, and a rush to create various tools. You might even see a single team eager to set up multiple agentic systems on Dify. However, these efforts are often made by R&D teams. They put their ideas into demos, without properly involving business teams in the process. This has been common practice among some, if not all, companies.

At the same time, we've also seen that the crucial work is critically lacking, like delving deep into the business to identify real value, properly defining products, implementing knowledge engineering (not just traditional software engineering), and mobilizing experts. This is another widespread phenomenon I've observed.

This leads us to believe that there is tremendous work to be done if companies want to truly leverage AI and generate real business results. And this is an area where we have done a great deal of exploration and practical work.

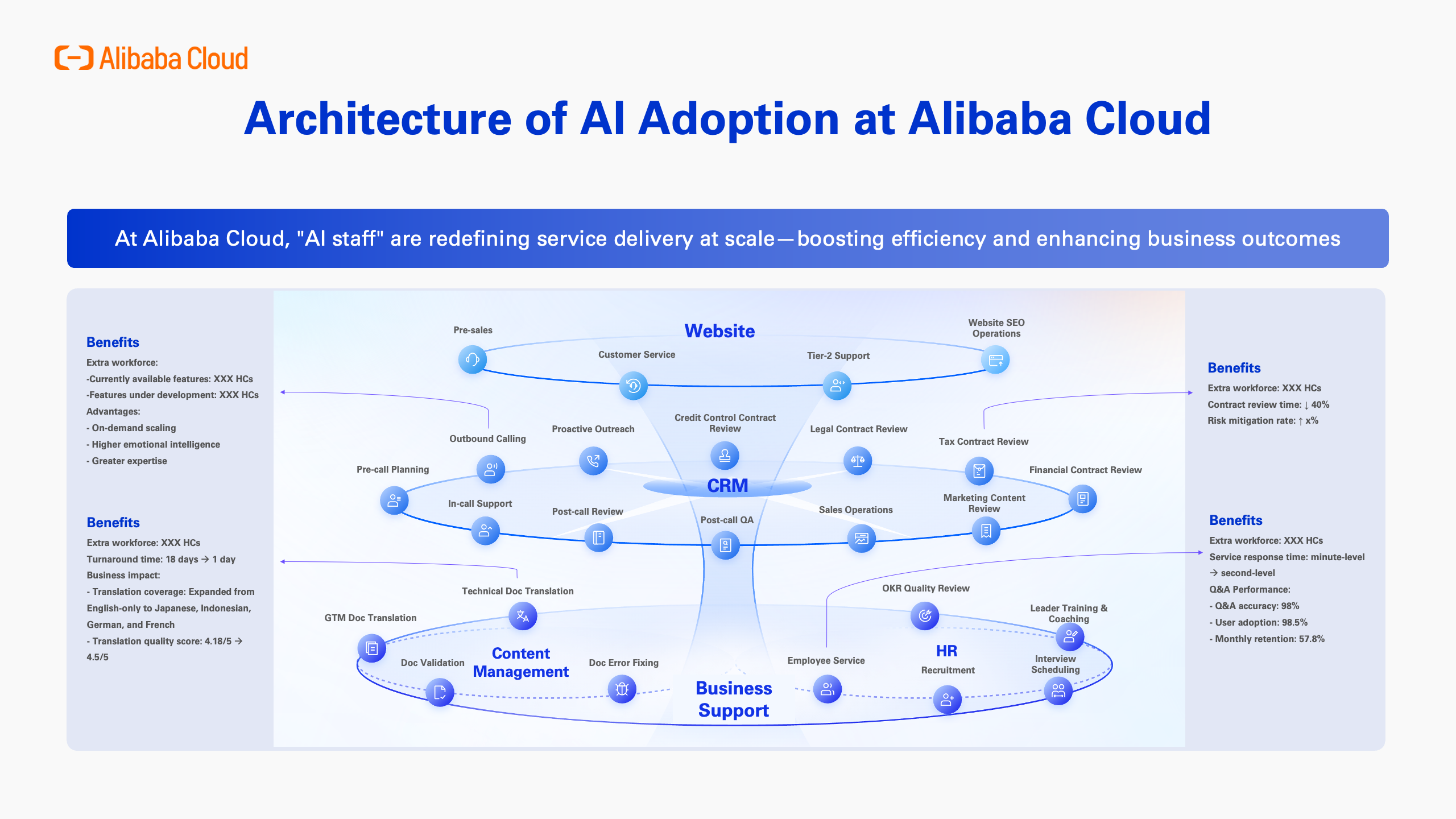

Next, I'd like to show you a full picture of how we've adopted AI at Alibaba Cloud. As you can see in this diagram, we've deployed AI staff across our organization. These AI staff are now widely used throughout our official website, CRM, business support systems, content management systems, and even our HR systems, delivering tangible results within our current operations.

In total, we've launched 28 of these AI staff projects. I'll share a few examples to give you a more concrete idea of what this looks like in practice.

The first example is our AI translators. Translation is one of the tasks that LLMs excel at. But at Alibaba Cloud, we've faced a huge challenge in this area. As a public cloud service provider, our documentation is absolutely critical for our customers—we all know how much B2B services depend on good documentation. We have over 300 products and more than 100,000 documents, totaling hundreds of millions of words. Also, we've been working hard to expand our business globally, to markets like Japan, the US, Europe, Indonesia, and Türkiye. Our developers in those regions rely heavily on our documentation to use our services.

The problem was finding talent who were fluent in the local language and experts in cloud computing. Technical translation demands both, and even with a high budget, finding and hiring such experts was nearly impossible. In the past, we had to make do by translating our documentation into English only, with some documents in Japanese. Work on other languages came to a standstill, which resulted in poor feedback from our developers outside China.

Before the recent breakthroughs in AI, we tried using traditional NLP for translation, but the results were nowhere near good enough. Even with ChatGPT 3.5, the technology still fell short of our requirements. It wasn't until ChatGPT 4 that we found the translation quality was finally on par with professional human translators who were experts in both the technology and the local language.

What's more, when we calculated the cost—and this was over a year ago—we found that the cost per document was just 0.5% of what we had been paying our professional human translation teams. From that point on, we began using LLMs for translation on a massive scale. Today, we've translated all our documents into Indonesian. This means we've solved an organizational problem that even a large budget couldn't fix.

To put some numbers on it, our professional human translation teams used to get a quality score of about 4.12 out of 5. Now, with AI, our translations are scoring 4.6. In our international markets, we've seen a significant improvement in user experience and Net Promoter Score (NPS). So, this wasn't just about cutting costs; it was about using AI to solve a problem that was previously unsolvable.

The second example is our outbound calling agents. Alibaba Cloud serves millions of business customers. Given the B2B nature of our company, providing sales and support is essential. However, we can't assign dedicated sales and service staff to every single customer. As a result, a large portion of our sales and service is conducted over the phone by a team of several thousand customer service agents.

But this presented a major pain point. Cloud computing itself is quite complex. Finding enough outbound call agents who have the right skills, understand cloud technology, and have the patience to make calls all day is extremely difficult. Recruiting and training these teams is a huge challenge, and turnover is very high. This created a major bottleneck and compromised the quality of our sales and teleservices.

So, we decided to tackle this with AI, by introducing intelligent outbound calling. We were able to build on our existing foundational knowledge in areas like multimodal technologies. Today, we've deployed intelligent outbound calling for both sales and customer service. Its capacity is equivalent to the service bandwidth of several hundred human agents.

The third example is AI staff for contract risk review. A key characteristic of our B2B business is that we work with many large government and corporate clients, who typically don't use our standard contracts. These clients' contracts involve large sums of money and require strict risk reviews, covering areas like finance, tax, legal, risk control, and credit control. In the past, completing these reviews required elite professionals in fields like legal and finance, many of whom came from the Big Four international accounting firms. However, given the massive scale of our business, it's simply impossible to hire enough of these top-tier experts to handle the entire workload.

This created a huge bottleneck in our contract review process. Review times were excessively long, sometimes up to five months, with an average of two to four weeks. This significantly dragged down our business efficiency, especially when it came to serving our major clients. To solve this problem, we developed a team of AI staff, including those covering digital finance, credit control, and legal experts. We deployed these AI staff right at the contract drafting stage. While our sales team communicates with clients and drafts the contract, the AI staff can identify potential risks in real time, and suggest negotiation strategies. This is a huge improvement over discovering issues during the final review and having to start over. This approach has dramatically improved the efficiency of our contract risk management.

The final example is AI staff for employee services. In a company of our size, HR systems have one prominent feature: they are extremely fragmented. For example, processes and services like leave requests, health checks, benefits, and proof of employment are all scattered across different systems. At the same time, various information is also scattered, from internal company benefits to external recruitment policies. When employees need to find this information or use these systems, they face two major difficulties. First, these are services they don't use very often. Second, because they're so scattered, they're very hard to find. And because these are low-frequency services, we can't justify having a large support team for them. This puts a heavy burden on our HR team, and consequently the service experience for our employees suffers.

To address this, we consolidated all of these low-frequency, scattered services into a single intelligent agent. We created an AI staff member called "Cloud Bao," accessible through our DingTalk platform, to provide employees with a unified, intelligent service. We found that by introducing this agent, we effectively added the equivalent of about 10 employees to our service team. More importantly, employee experience has improved dramatically. For example, an employee can just type a request in natural language, like "I need to take leave next Monday," or "Book a health check for my father," and the system can quickly respond and help complete the task.

We've now implemented intelligent services across more than 20 scenarios, and I've just shared a few examples with you. Behind all these AI staff, there's a common logic we use for evaluation: First, how much have we extended our human workforce? Second, how much has business efficiency improved? And third, how much have business results improved? We pay close attention to this framework. For every AI staff member we deploy, we must measure whether it has truly expanded our service bandwidth. We also need to know if it's more efficient and effective than the previous manual process. This is a crucial point, and it's what truly sets our agents apart from many others you might see out there.

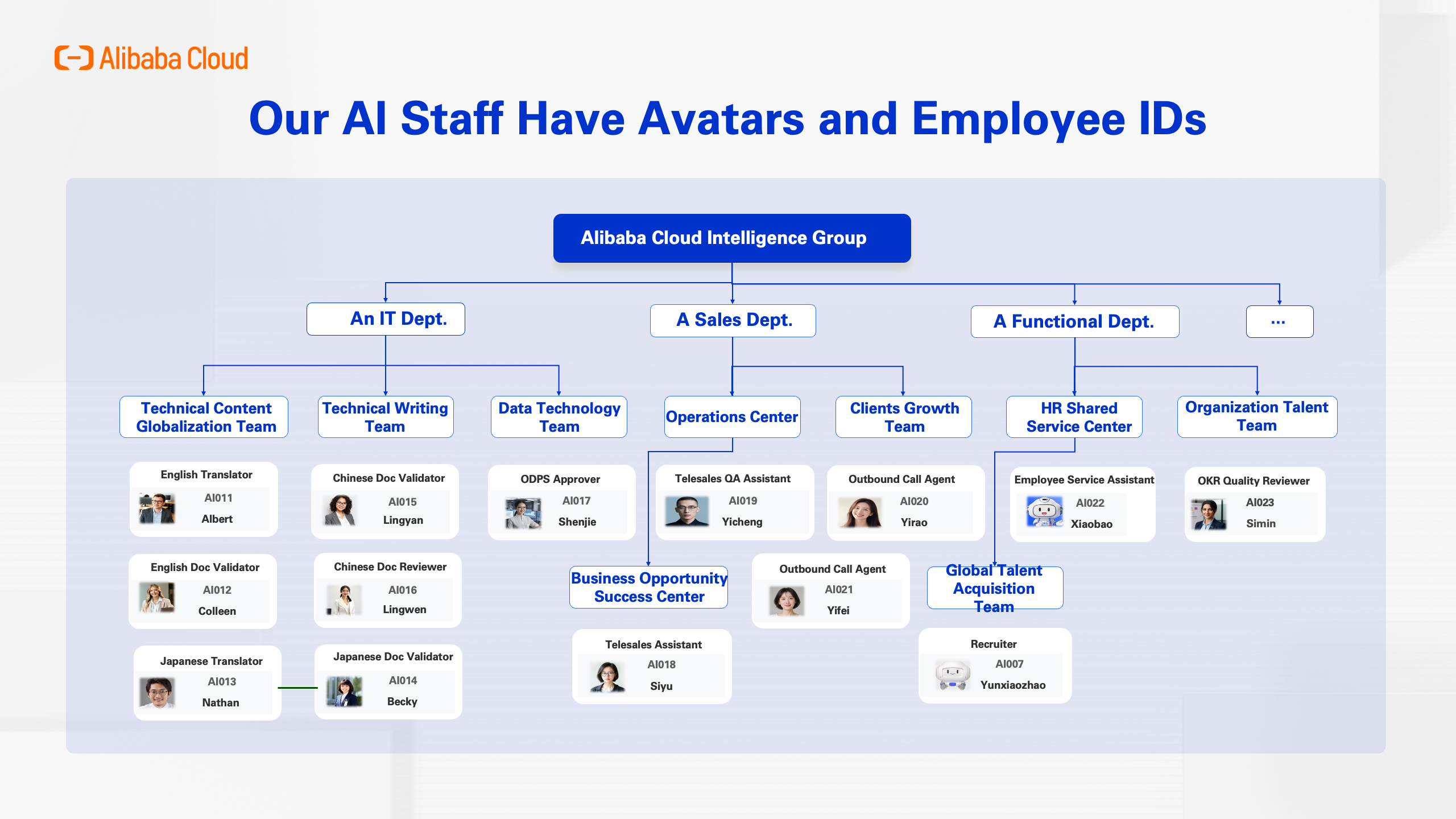

Ultimately, these intelligent agents are actually working in their designated roles. In our HR system, these AI staff are assigned to specific business departments and report to their respective teams, just like any regular employee. To be truly integrated into the team, they must perform tasks equivalent to the work of a certain number of human employees in their assigned roles. In our DingTalk and other internal work systems, these AI staff have avatars and employee IDs. The only difference is that their employee IDs start with "AI," like AI001 and AI002. We have 28 of these agents active right now, with many more in the pipeline waiting to be launched. They very closely resemble our human colleagues.

Over the past two years, as I've led my team in implementing these business solutions, I've come to realize that successfully applying technology to achieve real business results is not as simple as it sounds. There's a world of difference between creating a cool demo and delivering something that generates real business value. Next, I'd like to share some of the challenges we've faced along the way and the solutions we've developed. I hope you'll find them helpful.

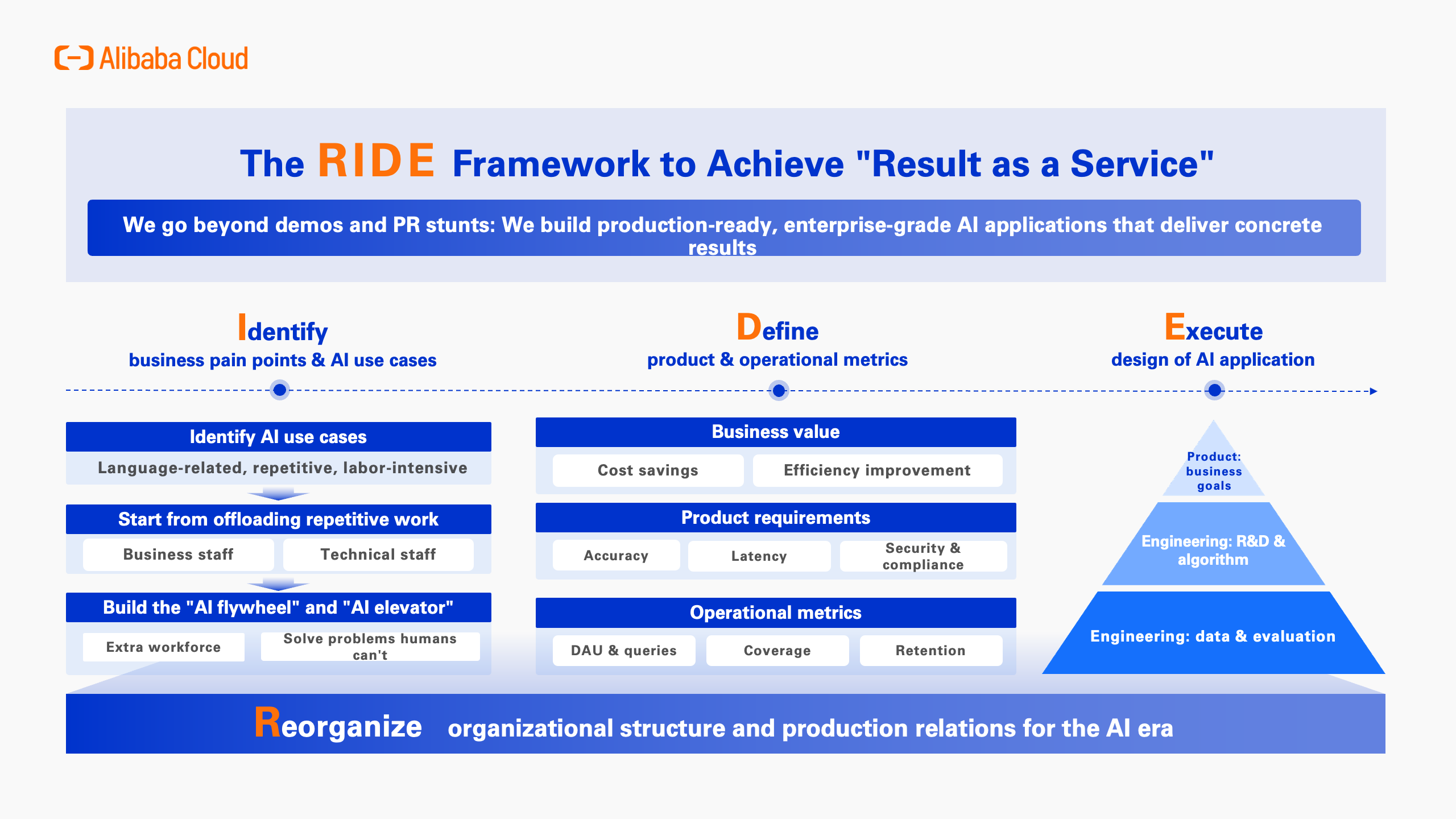

Recently, you may have heard of a concept coined by Sequoia Capital called RaaS, or "Result as a Service." The core idea is that it's not enough to simply provide tools and products, and expect companies to implement them on their own. That's why we place special emphasis on projects that have already been launched, and that produce tangible business results. As CIO, the service my team provides to our internal business departments is exactly this: a "result-oriented service." And in the process of advancing RaaS, we've developed our own methodology, which we call RIDE.

RIDE comprises four key steps:

● Reorganize the organizational structure and its production relations;

● Identify business pain points and AI use cases;

● Define product and operational metrics; and

● Execute the AI application design.

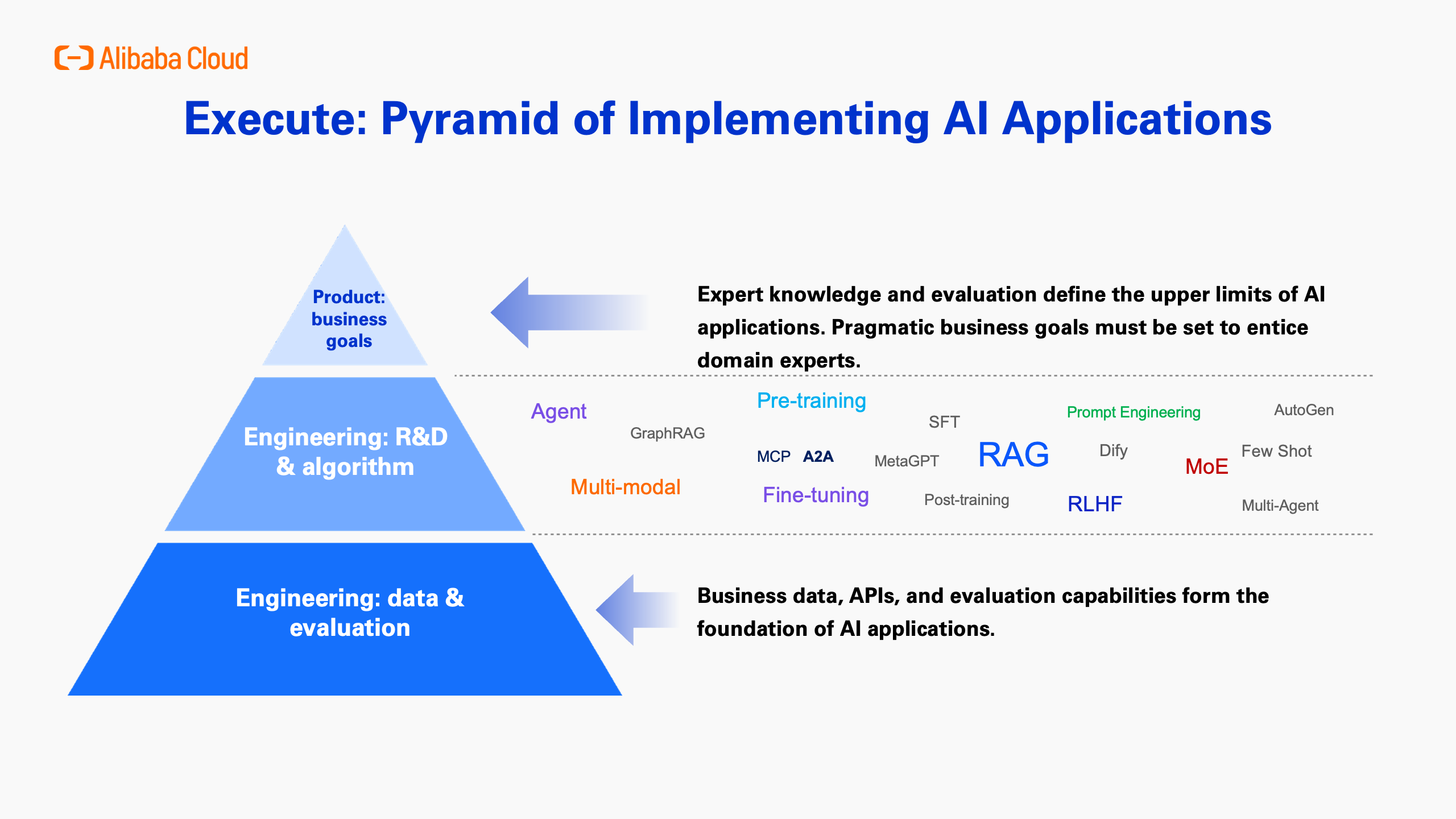

First is the foundation, Reorganize. In the AI era, existing organizational structures are ill-suited to the new productivity that AI brings. This mismatch manifests in every aspect of our work, hindering AI development and implementation. Therefore, we must redefine our production relations. Second is Identify. This means we need to pinpoint which business problems are best suited for AI solutions. To do this, we first need to clearly define the problem. Then, by combining our understanding of AI's capabilities with business needs, we can determine which issues can be effectively resolved by AI. Next is Define. Once we've clarified the problem and AI's capabilities, we need to precisely define the product and its operational metrics, then track these metrics accurately. Finally, we have Execute. The execution phase is structured like a pyramid. At the top are the business objectives. At the bottom are the data and evaluations. And in the middle is the R&D and algorithm work.

Of course, this RIDE methodology wasn't created on day one of our AI transformation. We developed it after successfully implementing 28 AI staff. During that process, we discovered that if we didn't follow these steps, projects were very likely to fail. Following these steps doesn't guarantee success, but it significantly increases the chance of it. This methodology is the result of two years of hard-won experience.

Let's start with Reorganize.

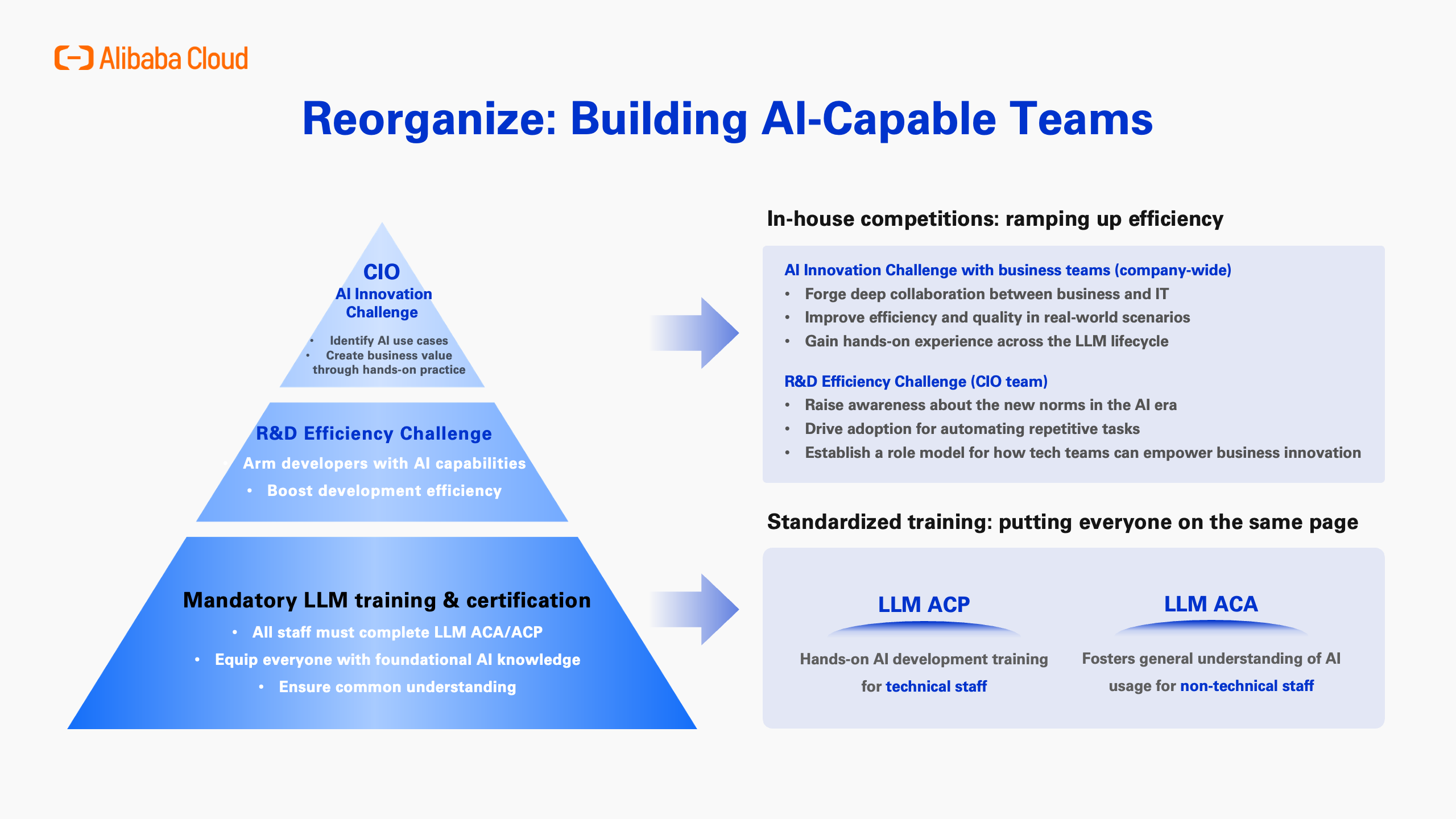

In the first year of implementation, I noticed a problem: There were significant gaps in understanding the basic concepts of LLMs—their capabilities, limitations, development stages, and underlying principles—not just between business teams and our team, but even within my own team. Product managers, algorithm engineers, and the engineering team were not on the same page.

To solve this, we launched an initiative to create a common language and understanding across all teams. We required everyone to participate in a certification training program about LLMs. The main reason for this requirement was to bridge the gaps in foundational knowledge. I call this "General Education for the AI Era"; it was basically like putting the entire team through a new "compulsory education" program. This training has two tracks: Alibaba Cloud Certified Associate (ACA) for non-technical staff, and Alibaba Cloud Certified Professional (ACP) for technical staff. We did this because it's not enough for technical staff to speak the same language; we also need non-technical and technical teams to be on the same page.

This general education is crucial for team collaboration. We started by having everyone in the CIO department get certified. Following that, our business partners in finance, HR, sales, and other support departments are now also undergoing certification. Currently, the entire Alibaba Group is using this method for foundational AI education to put everyone on the same page. Otherwise, you end up in a situation where everyone is talking about the same concepts, but their understanding is completely different from reality. It's hard to appreciate the feeling of helplessness this creates unless you've been deeply involved in the work. Once we align everyone's understanding through this general education, communication becomes much more efficient.

Building on this foundation, we held two competitions for our staff. One was focused on improving R&D efficiency, and the other on improving business efficiency. What sets these competitions apart is that they are centered around end-to-end (E2E) results. For example, in the R&D competition, we measured how many person-months it used to take to deliver a requirement of a given granularity end to end, and how many it takes now. We don't just look at code adoption rates, because those can be easily inflated. Plus, code completion tools tend to just handle the easy parts.

For the business E2E competition, our goal was to dive deep into real business scenarios, help the business expand, and deliver results that surpassed previous levels of effectiveness and efficiency. So, these two initiatives are critical. First is the general education to standardize our knowledge, because knowledge in the AI era is changing rapidly—every single month. There's a huge gap between current practical knowledge and older foundational knowledge. Second is "using competition to drive practice." By participating in competitions with the right goals, the entire organization can identify its weaknesses, discover opportunities for mutual learning, and be inspired to continuously innovate and improve efficiency.

Now, let me tell you a bit more about our AI staff.

Our AI staff all report to business departments, which is a crucial arrangement. This isn't just a formality; it's a psychological matter. We don't want business departments feeling that AI technology is a threat to their jobs. Instead, we need to make it clear that AI is here to help them. If this relationship isn't managed well, you'll run into countless obstacles.

Therefore, we've positioned ourselves as a supplier of AI staff. The business departments are the "AI-capable organizations" that can "hire" our AI staff and co-develop them with us. This way, business departments are more willing to adopt AI technology, which reduces resistance. So, that's the first point: positioning ourselves as an external supplier. Second, we've also found that AI staff can't bear responsibility on their own, nor can you give them a low performance rating. This raises the question: If an AI staff member makes a mistake while performing a task, who is held accountable? By having the AI staff report to the business department, they become part of that team, which helps put the team at ease. The business team also participates in the AI staff's development. Furthermore, each AI staff member is supervised by a human employee, who is ultimately responsible for their work in that specific business domain.

We often hear people say that while LLMs might work okay for B2C applications, they hallucinate too much for B2B and can't be 100% accurate or reliable. But what we've found in practice is that humans also "hallucinate," and more than you might think. If you observe carefully, you'll see that people aren't always reliable in many tasks. They make mistakes; people just don't always catch them.

So, one thing we emphasize is this: Once the AI project and business department are truly aligned and we've refined the AI through collaboration, we must re-evaluate the standards we originally set for it. If you demand 100% accuracy, you're essentially comparing AI to a "god." But if you compare it to the effectiveness and accuracy of a person, then you're comparing it to a "human." Therefore, the only meaningful benchmark is to have the AI perform better and more accurately than a person. So, how do we avoid comparing AI to a god? It goes back to what I mentioned earlier: by addressing the organizational dynamics and sorting out the internal business logic, goals, and relationships.

From an HR management perspective, AI staff need to be treated as full-time employees whose performance is measured based on real business outcomes. We discussed with our CPO: How can we assess if an AI staff member is truly performing like a full-time employee? We decided on a clear goal: It must take over a repetitive but valuable task within an existing business process. That is its one and only goal. And its impact must be measurable in terms of the equivalent human effort it provides.

For an AI staff member to go live and be officially deployed, it must meet two criteria. First, its efficiency in performing the task must be higher than a human's by at least a certain percentage. It has to be more efficient. Second, its effectiveness, or the quality of its results, must also show a certain degree of improvement. Only when the AI staff member is both more efficient and more effective can it be "officially onboarded" and start working in the business department.
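The two go-live criteria can be sketched as a simple gate. This is a minimal illustration, not our production check: the `TaskStats` fields, the function name, and the threshold values (10% and 5%) are all hypothetical, since the actual percentages vary by role and are not specified here.

```python
from dataclasses import dataclass


@dataclass
class TaskStats:
    tasks_per_hour: float  # efficiency: throughput on the task (hypothetical unit)
    quality_score: float   # effectiveness: e.g. an expert-rated score out of 5


def ready_to_onboard(ai: TaskStats, human: TaskStats,
                     min_efficiency_gain: float = 0.10,  # assumed threshold
                     min_quality_gain: float = 0.05      # assumed threshold
                     ) -> bool:
    """Return True only if the AI beats the human baseline on BOTH criteria."""
    efficiency_gain = ai.tasks_per_hour / human.tasks_per_hour - 1
    quality_gain = ai.quality_score / human.quality_score - 1
    return (efficiency_gain >= min_efficiency_gain
            and quality_gain >= min_quality_gain)


# Illustrative numbers only: quality scores echo the translation example,
# throughput values are made up.
human_baseline = TaskStats(tasks_per_hour=10, quality_score=4.12)
fast_and_better = TaskStats(tasks_per_hour=30, quality_score=4.6)
fast_but_worse = TaskStats(tasks_per_hour=30, quality_score=4.0)
```

With these sample values, `fast_and_better` passes the gate while `fast_but_worse` does not: being faster alone is not enough to be onboarded.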

So, that was the Reorganize phase. If you don't solve these organizational problems, you'll keep running into roadblocks and might even get stuck. Once the organizational issues are resolved, business departments will say, "Great, let's collaborate on developing an AI staff member." The next question is, how do we go about that?

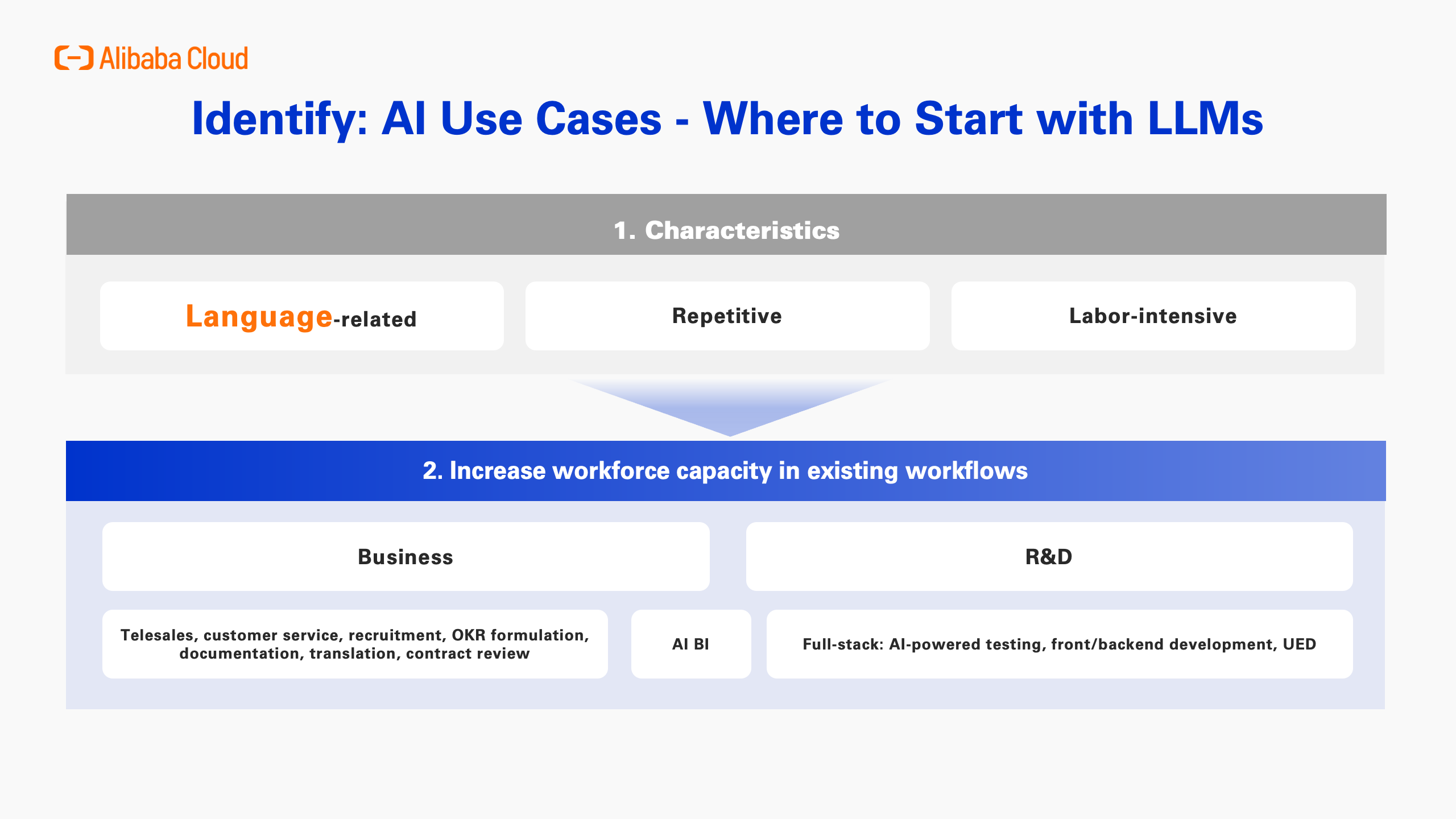

The first step is to Identify business opportunities.

The core of this AI revolution is the LLM. So, we operate on a simple belief: All work centered around language-related tasks will be deeply affected. This includes roles like telesales, customer service, recruiting, managing OKRs, documentation, translation, and contract review. Aside from human languages, this work also includes R&D-related tasks involving programming languages like C, Java, and SQL. These language-centric jobs are impacted the most. So, the first characteristic we look for is language-related work.

The second characteristic is tasks that are repetitive and done at scale. AI is all about automation, so the more repetitive and large-scale a task is, the better suited it is for AI. The third characteristic is an existing pain point, like being understaffed or getting complaints about inefficiency. We use these three characteristics to work with business departments to identify which business areas to start with. This is how we identify opportunities and define use cases. Defining the problem clearly is crucial for a smooth process. If you end up solving the wrong problem, all your effort and investment will go to waste.

We mentioned earlier that we need to quantify the AI's contribution in terms of human resources for a given role. But how exactly do we calculate that?

In our experience, we basically have two scenarios. The first is roles with focused responsibilities, like technical translators, whose work is measured by word count. So, we can calculate the cost of AI translation per word and make a direct, linear replacement. If a person's daily output is 20,000 words, then we can equate an AI that produces 20,000 words a day to one full-time person. The second is roles with mixed responsibilities, like a Product Manager, who might be writing a PRD one moment, analyzing tickets the next, then creating a demo, then interviewing customers. For these roles, we often find that some tasks are repetitive, tedious, and low-value. We can still create an AI staff member to help these critical, complex, and irreplaceable roles by offloading those tedious tasks, then calculate the equivalent human resources saved. We've seen this in finance and legal teams. When you offload their most tedious review work, they can focus on higher-value activities, and their job satisfaction soars.
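Both calculations can be sketched in a few lines. This is a minimal illustration under stated assumptions: the 20,000 words per day baseline comes from the example above, while the function names, the 40-hour working week, and the sample task names and hours are hypothetical.

```python
from typing import Mapping


def fte_equivalent(ai_words_per_day: float,
                   human_words_per_day: float = 20_000) -> float:
    """Focused role: linear replacement based on a single output measure."""
    return ai_words_per_day / human_words_per_day


def fte_from_offloaded_tasks(hours_saved_per_week: Mapping[str, float],
                             hours_per_fte_week: float = 40.0  # assumed
                             ) -> float:
    """Mixed role: sum the hours of tedious work offloaded, convert to FTEs."""
    return sum(hours_saved_per_week.values()) / hours_per_fte_week


# Focused role: an AI translator producing 100,000 words a day.
translator_fte = fte_equivalent(100_000)

# Mixed role: hypothetical tedious tasks taken off one product manager's plate.
pm_fte = fte_from_offloaded_tasks({"ticket triage": 6, "contract pre-review": 4})
```

The second function measures only the tedious portion that was offloaded, which matches the idea that the role itself remains irreplaceable.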

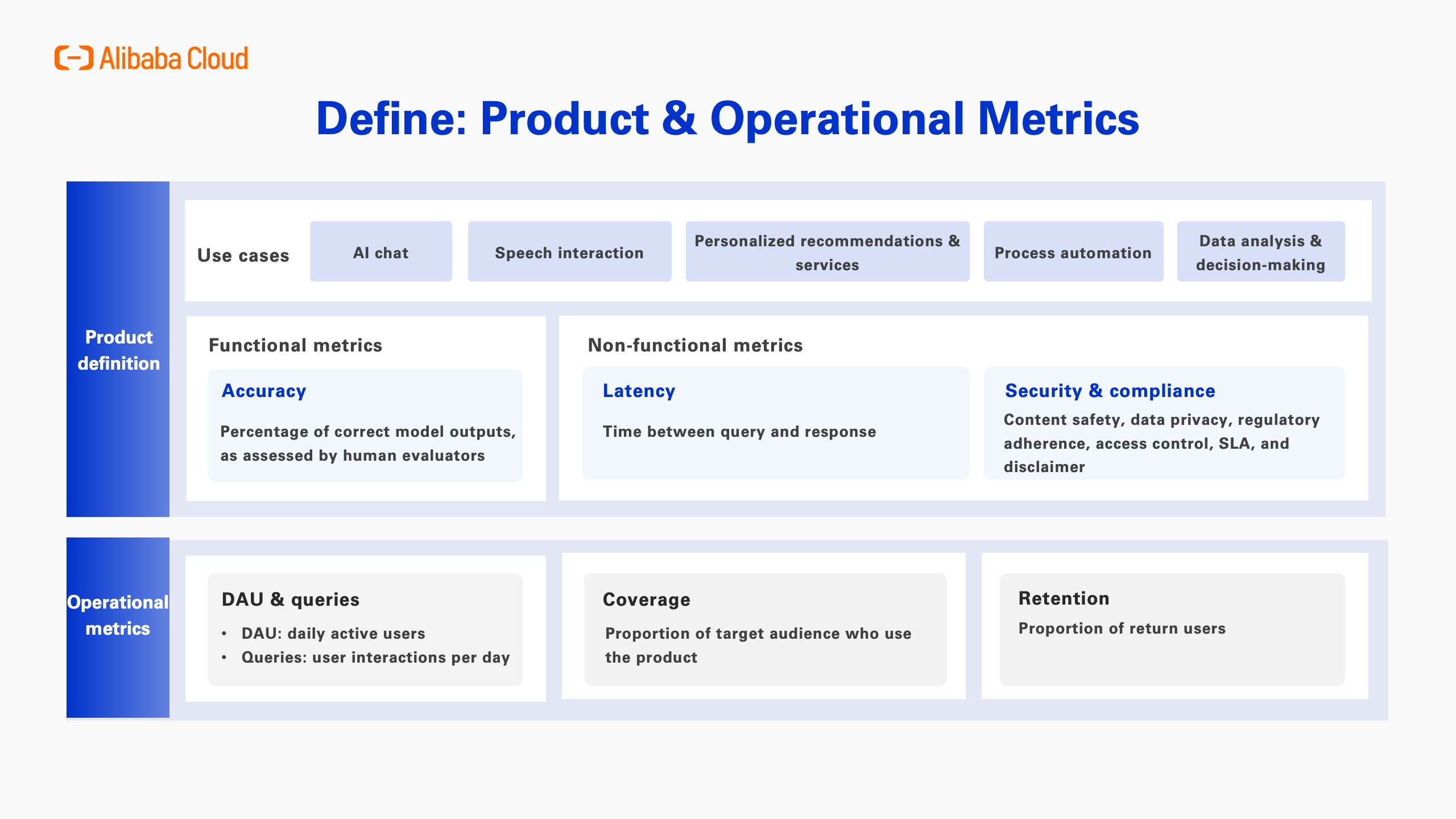

After the Identify step, the next phase is Define.

Building products in this era is very different from how we did it in the past. Many of the products we've discussed so far are similar in that they all involve aspects like interaction and user experience. In this regard, the process doesn't differ much from building products during the mobile Internet era. But for AI products, there's one particularly crucial factor: accuracy. Of course, besides accuracy, there are other non-functional metrics like response timeliness and security compliance. For example, in telesales, when you're having a real-time conversation with a customer, latency must be very low; if there's lag, the customer will feel the conversation is really choppy, like talking to a robot. So, real-time performance and accuracy are absolutely critical. If the accuracy isn't good enough, the product is simply unusable and can never be deployed for real-world tasks. That's why accuracy is the number one core metric for any AI project. The entire project team must prioritize it. It's the most central part of the product definition, something we must completely redefine.

Operational metrics are equally important. If you only focus on product metrics and accuracy, you're likely to run into pitfalls. Even for internal business projects, you can't forget the fundamentals we learned from the mobile Internet era, such as:

● DAU, or daily active users;

● The number of user queries;

● Coverage rate;

● And retention rate, which is the most critical of all.

If a customer uses your product today and is willing to use it again next week, it means your AI agent is genuinely solving their problems. But if they use it once and never come back, then it doesn't matter how impressive your product metrics are. It probably means you've defined the wrong problem to begin with. Operational metrics act as a reality check. If you don't keep a close eye on them, it's easy for your product, engineering, and algorithm teams to fall into a trap of self-congratulation. What do I mean by that? It's when they say, "Our metrics look great!" but in reality, customers aren't using the product at all.
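As a minimal sketch of how these operational metrics can be computed, assume a query log of `(user_id, date)` pairs, one entry per user query. The log format and function names are hypothetical, and "retention" is taken here to mean users active in one week who come back the following week.

```python
from collections import defaultdict
from datetime import date


def daily_active_users(events):
    """events: list of (user_id, date). Returns {date: distinct user count}."""
    users_by_day = defaultdict(set)
    for user_id, day in events:
        users_by_day[day].add(user_id)
    return {day: len(users) for day, users in users_by_day.items()}


def next_week_retention(events, week_start):
    """Share of users active in the week starting at week_start
    who are active again in the following week."""
    this_week, next_week = set(), set()
    for user_id, day in events:
        offset = (day - week_start).days
        if 0 <= offset < 7:
            this_week.add(user_id)
        elif 7 <= offset < 14:
            next_week.add(user_id)
    if not this_week:
        return 0.0
    return len(this_week & next_week) / len(this_week)


# Made-up sample log: users "a" and "b" ask questions in week one,
# only "a" returns in week two, and "c" is a new user.
events = [
    ("a", date(2025, 1, 6)), ("b", date(2025, 1, 6)),
    ("a", date(2025, 1, 7)),
    ("a", date(2025, 1, 14)), ("c", date(2025, 1, 15)),
]
dau = daily_active_users(events)
retention = next_week_retention(events, week_start=date(2025, 1, 6))
```

The number of user queries is simply `len(events)` under this log format. Tracking these alongside accuracy is what provides the reality check described above.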

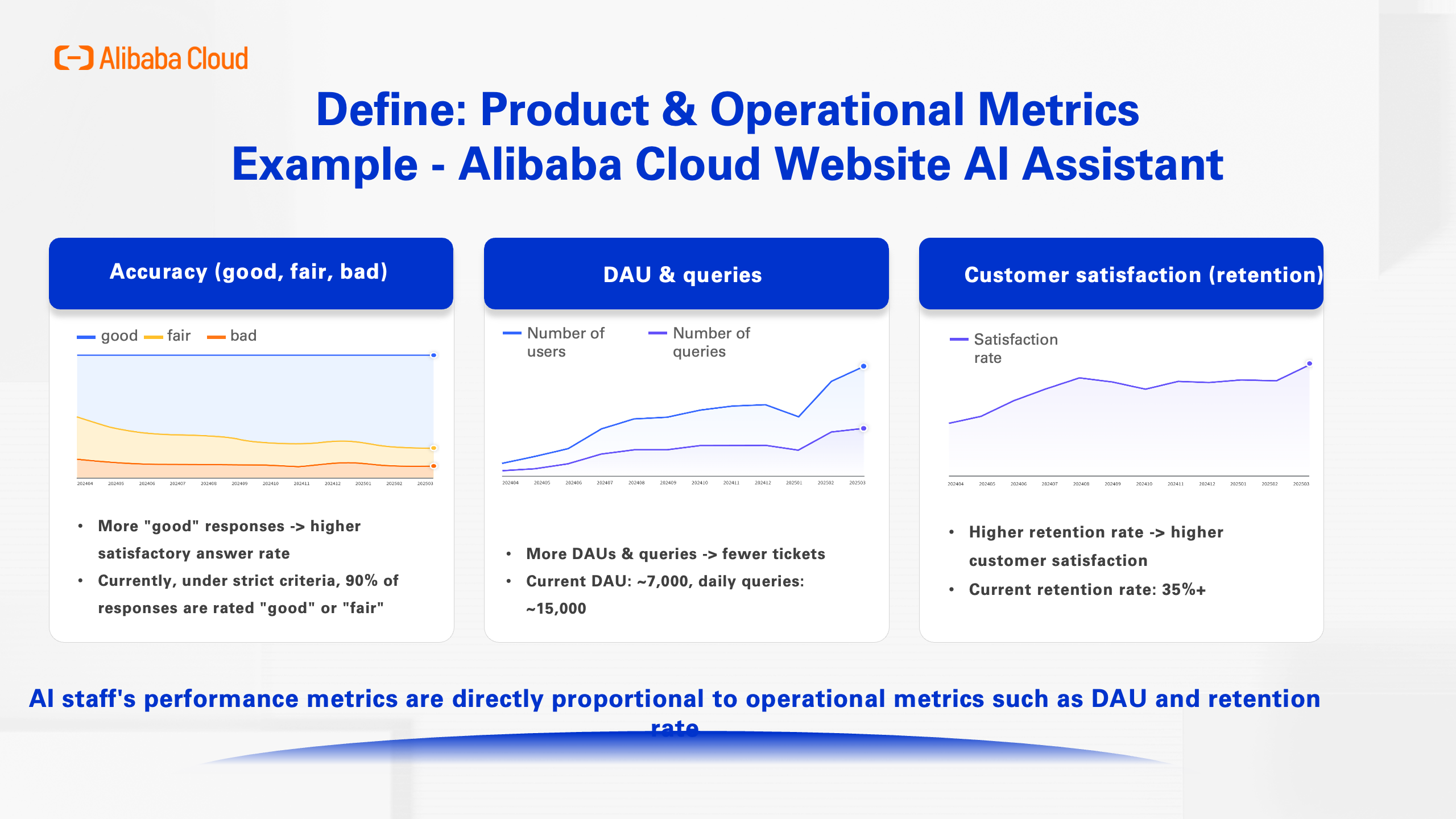

Let me give you an example. For the AI assistant on the Alibaba Cloud official website, this is how we set up our measurement system. The graph on the left shows our accuracy metrics over the past year or so. The blue area represents good performance, where we accurately resolved customer queries and tasks. The yellow area is for mediocre performance, where the task was completed but with irrelevant information. And the red area represents poor performance, where the answers were completely unrelated to the customers' queries. The middle graph shows DAU and the number of customer queries. And the one on the right shows the retention rate.
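The three-band accuracy view can be sketched as follows, assuming each evaluated answer has already been labeled by a reviewer. The label strings and function name are hypothetical and only mirror the good, mediocre, and poor bands described above.

```python
from collections import Counter

BANDS = ("good", "mediocre", "poor")


def rating_shares(ratings):
    """Return the share of answers in each band, e.g. {"good": 0.6, ...}."""
    counts = Counter(ratings)
    total = sum(counts[band] for band in BANDS)
    if total == 0:
        return {band: 0.0 for band in BANDS}
    return {band: counts[band] / total for band in BANDS}


# Made-up evaluation batch of ten labeled answers.
sample = ["good"] * 6 + ["mediocre"] * 3 + ["poor"] * 1
shares = rating_shares(sample)
```

Computing these shares per evaluation period gives the stacked view from the left graph, which can then be compared against DAU and retention for the same period.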

Currently, our retention rate has reached a fairly high level. You can clearly see from the graphs that as our accuracy improves, both DAU and retention rate also rise steadily. Conversely, if our DAU and retention rate stagnate or even decline, then even if our engineering and algorithm teams claim to have high accuracy, we're just deceiving ourselves.

In reality, many teams may not be aware of this. The reason I can point this out is that I've also been misled by accuracy metrics like the ones in the left graph on multiple occasions. It's not that the team does it intentionally. In today's information landscape, a quick search online will turn up tons of articles with titles like, "Boost your accuracy to 95% with this one simple trick." But these articles are often misleading. They all have a hidden prerequisite: that 95% accuracy is only achievable in a specific scenario. When faced with a massive volume of diverse queries, it's much harder to achieve that level of performance. I'll share more on this later.

Once you've defined your product and operational metrics—the "Define" phase—the next step is "Execute."

Here's the key to the "Execute" phase: It must be driven by product and business goals. That's because this goal-driven approach is what truly mobilizes domain experts. First, without the deep involvement of domain experts and strong evaluation capabilities, it's very difficult to raise the performance ceiling of your LLM application. Second, if your project's goal lacks value or doesn't address a real pain point, you'll find that you won't get the resources you need. In other words, you'll struggle to get cooperation from other teams, and your own team's sense of purpose will diminish, which will directly hinder the project's progress.

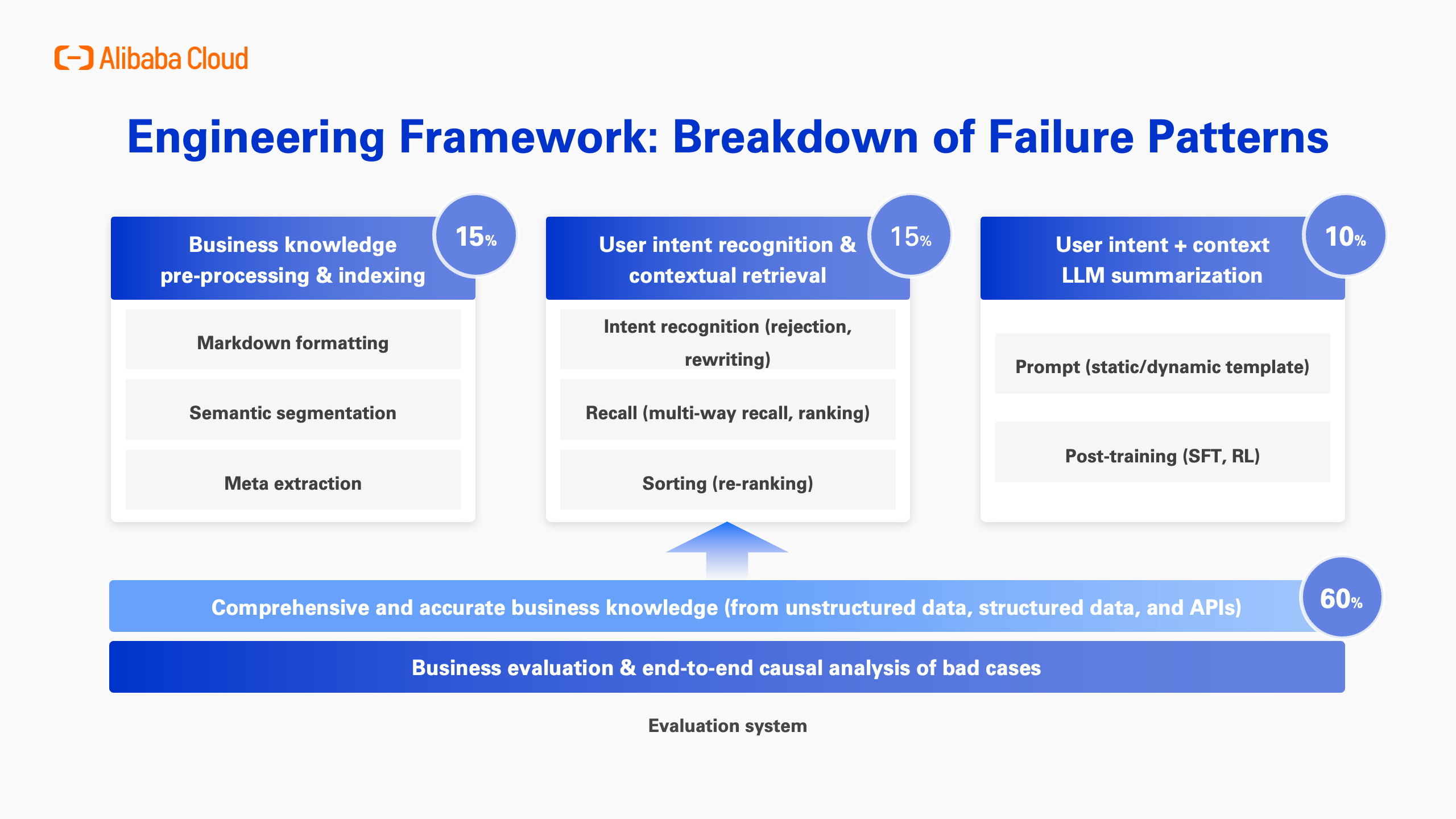

In our overall execution framework, the base of the pyramid is data and evaluation. I've made this the largest foundational block because it's the cornerstone. Business data, business APIs, and evaluation capabilities are the foundation for any LLM application, and you must invest sufficiently in this area. Built on this foundation are R&D and algorithms—things like pre-training, RAG, and fine-tuning. These are the technical buzzwords you see in the media. They aren't unimportant, but they are merely "necessary conditions." I've observed that most product and R&D teams spend 80 to 90% of their time on this part—the R&D and algorithms. What I want to emphasize is this: these are only "necessary conditions." By themselves, they cannot solve the problem of end-to-end AI implementation. Even if you invest ten times the effort into these "necessary conditions," you still won't achieve real results. You must find a way to fulfill the "sufficient conditions." If you can't do that, the project's chances of success are very slim.

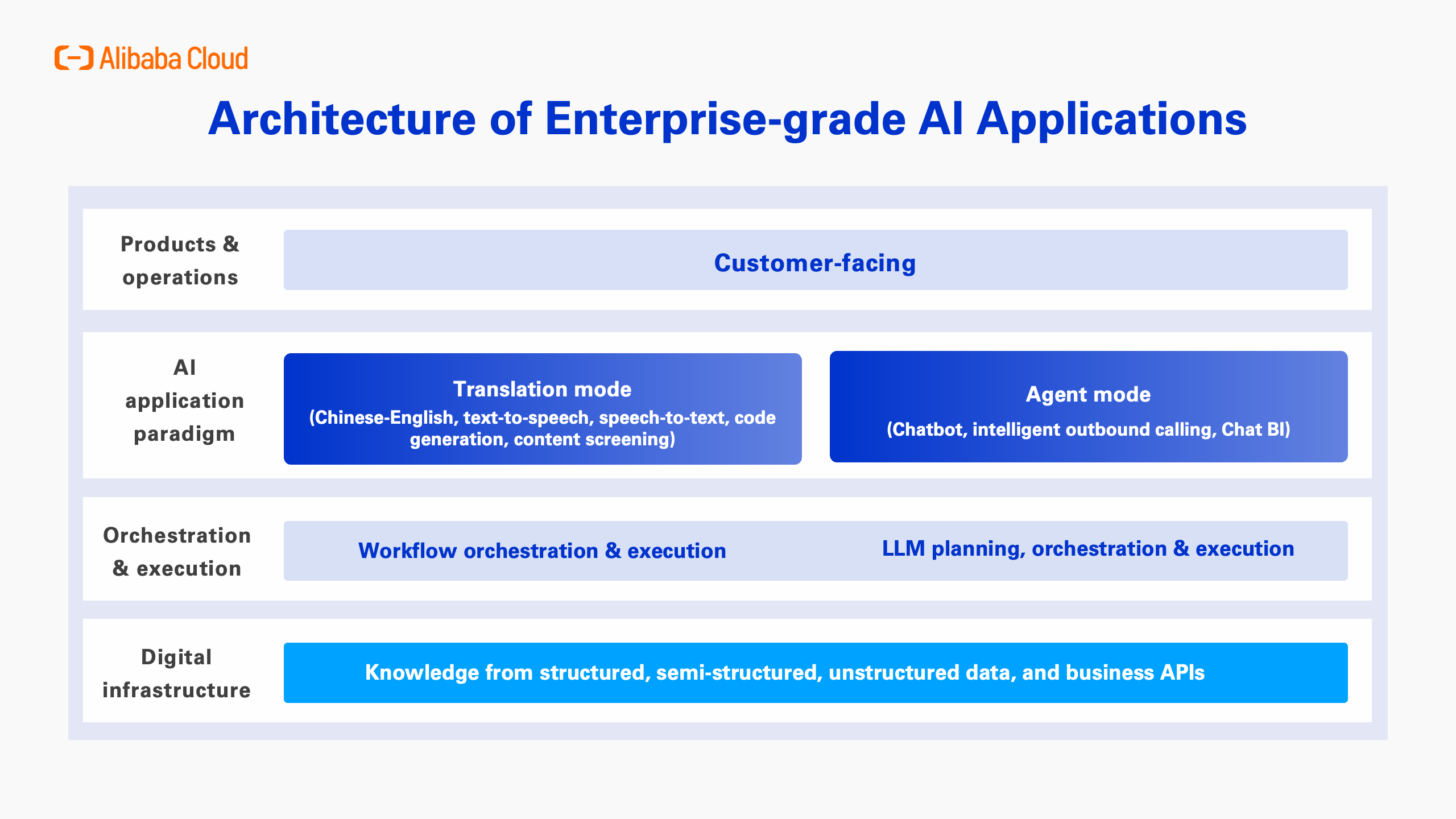

In our communications with business teams and while handling various complex problems, we've identified a few common patterns: First, at the infrastructure layer, you have the preparation of knowledge and data. In the middle, there's orchestration and scheduling. This could be the familiar workflow orchestration, autonomous agent planning and orchestration, or a combination of the two. And at the very top, you have the customer-facing product and operations.

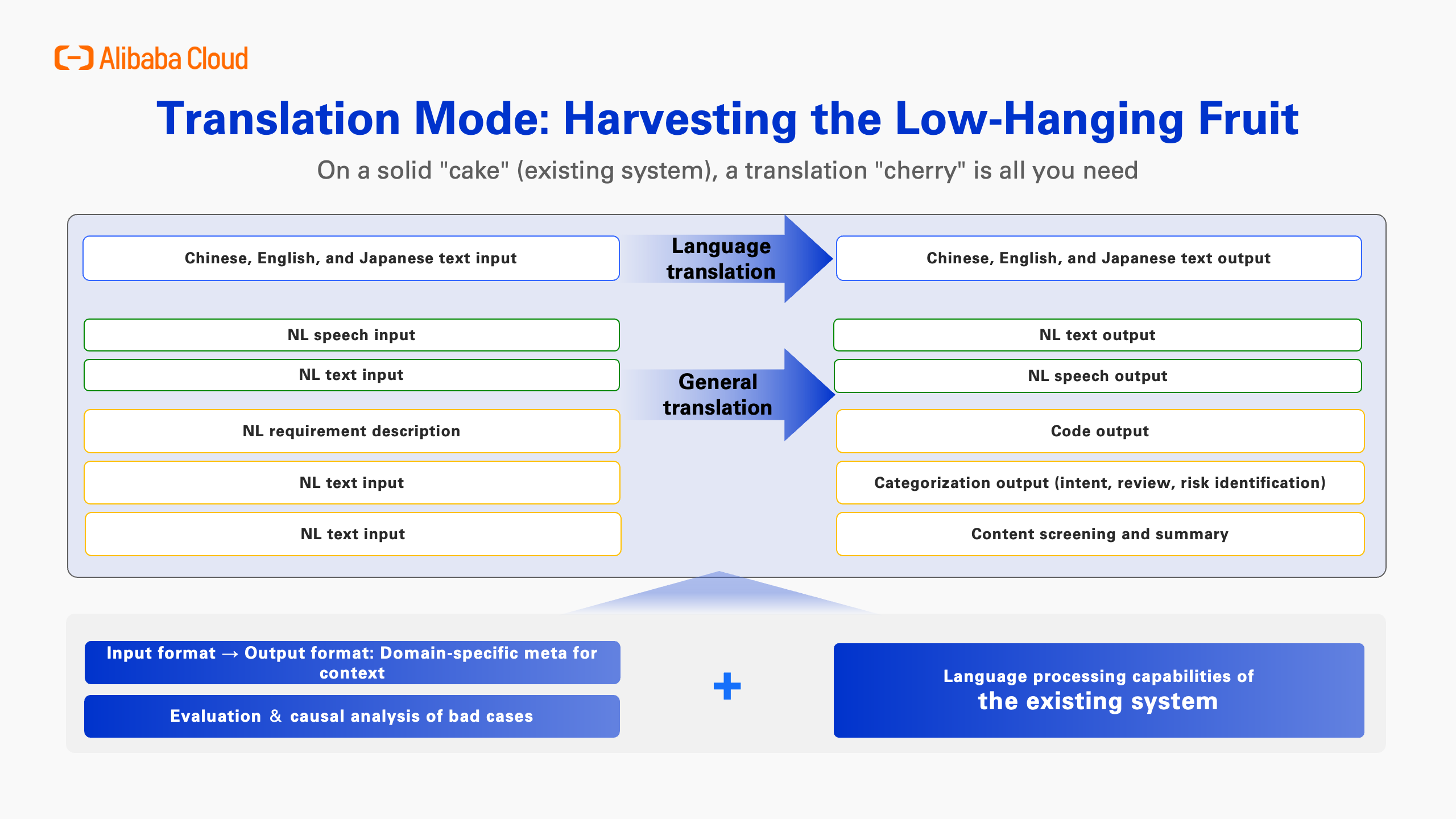

Here, I'll focus on the two modes highlighted in dark blue: Translation Mode and Agent Mode. I believe these are the two main types of application modes. Translation Mode is the easiest to get results from because it's quite straightforward, whereas Agent Mode is more complex.

First, let's talk about Translation Mode. Internally, we call all translation-type models the "low-hanging fruit" of AI, because they are relatively easy to implement. The algorithm behind this wave of LLMs is the Transformer architecture. The Transformer was originally developed by Google for translation tasks, and the algorithm evolved through this continuous work. Later, pre-trained models like BERT were also widely used in the translation field. Therefore, LLMs are exceptionally good at translation.

Translation can be divided into two types: narrow and general. Narrow translation refers to converting between languages, like from Chinese to English. General translation, on the other hand, covers a much wider range of forms. For example: converting natural speech to text and back to speech; converting natural language to SQL or Java; or even "translating" a research paper into a version that a middle school student can understand. All of these fall under the general category. The Transformer excels at both language and general translation, which is why this is the area where you can get results the fastest.

But there's a potential pitfall here: even if the translation capability on the left is ready, if the existing system on the right isn't, then you'll run into problems.

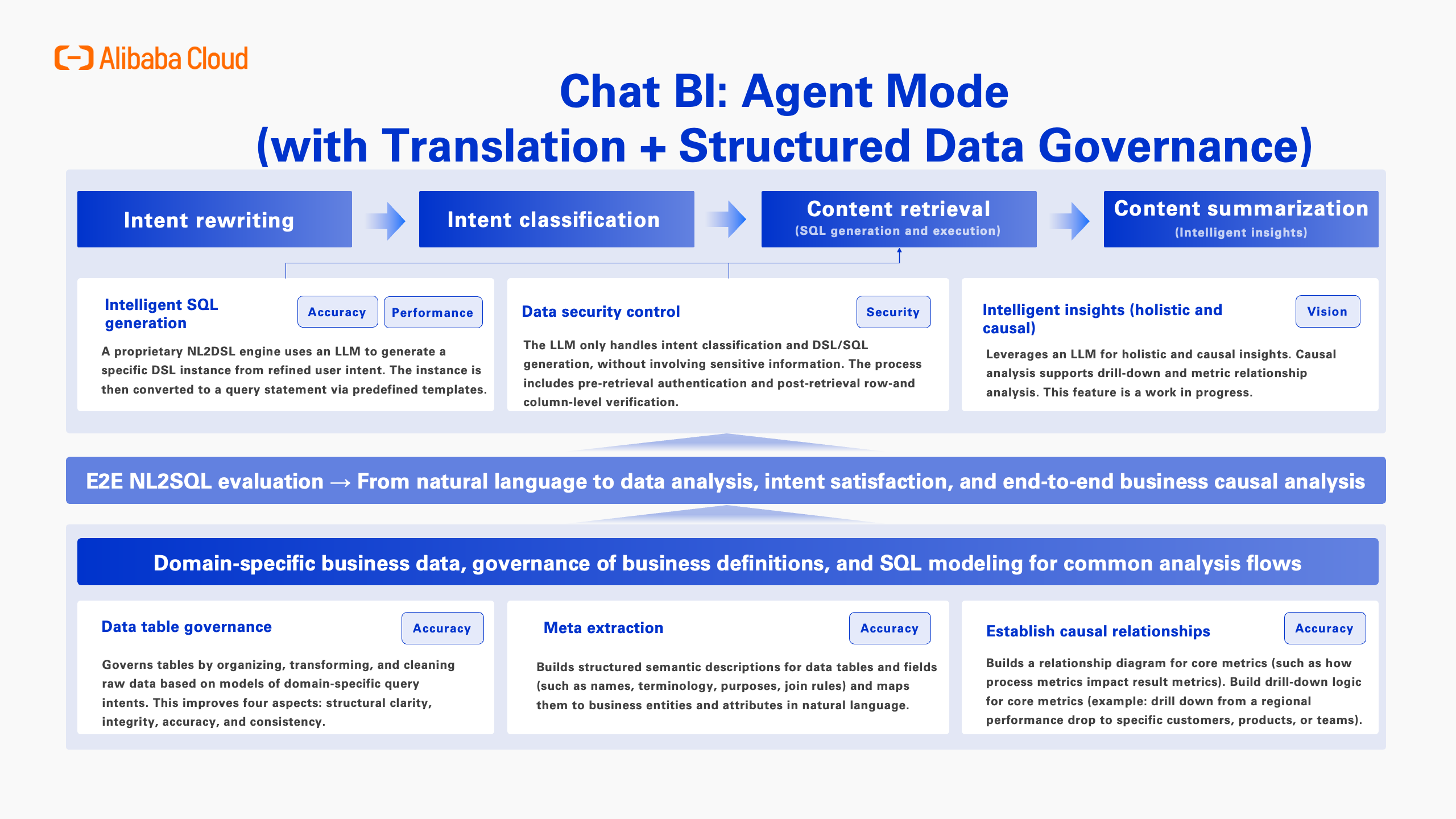

Take Chat BI, for example. Why are there so few successful Chat BI cases in enterprises? The logic of Chat BI itself is quite simple: it translates natural language into SQL, executes it in a backend database or big data system, retrieves the results, then translates the results back into natural language for the user. In essence, it's a flow of Natural Language → SQL → Execution Result → Natural Language, which is fundamentally a form of translation.

But here's an interesting problem we've noticed: Many companies want to implement Chat BI, but if their databases, business logic, and data definitions aren't well-established—to the point where even a human isn't capable of writing the required SQL—then the natural language can't be translated either. This is because the backend system lacks a solid foundation for execution. I believe most pitfalls with Chat BI implementation in enterprises come from trying to build something too "broad" from the get-go. If the existing system's APIs and data aren't ready, or if even your own team can't perform these operations manually, then the natural language translation simply won't work. This is the key problem.

So, this is how we do things: First, you must identify the capabilities of your existing system. For example, if your ODPS, database, and data mid-end already support BI and operations, then you can build functional Chat BI applications on top of those high-frequency SQL statements.
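The idea of building Chat BI on top of queries the existing system already supports can be sketched as follows. This is a minimal illustration, not the team's implementation: the `orders` table, the `SQL_TEMPLATES` catalog, and the keyword-based `translate_to_template` function (a stand-in for the LLM translation step) are all hypothetical. An in-memory SQLite database plays the role of the existing data platform.

```python
import sqlite3

# Hypothetical catalog of vetted, high-frequency queries that the
# existing system (the "cake base") is already known to support.
SQL_TEMPLATES = {
    "revenue_by_region": (
        "SELECT region, SUM(amount) FROM orders "
        "GROUP BY region ORDER BY region"
    ),
    "order_count": "SELECT COUNT(*) FROM orders",
}


def translate_to_template(question):
    """Stand-in for the LLM step: map a question to a template key, or None."""
    q = question.lower()
    if "revenue" in q and "region" in q:
        return "revenue_by_region"
    if "how many orders" in q:
        return "order_count"
    return None


def answer(conn, question):
    """Natural language -> SQL -> execution result -> natural language."""
    key = translate_to_template(question)
    if key is None:
        # No vetted query exists, so refuse instead of guessing at SQL.
        return "Sorry, this question is outside what the data platform supports today."
    rows = conn.execute(SQL_TEMPLATES[key]).fetchall()
    # Stand-in for the second translation step (result -> natural language).
    return f"{key}: {rows}"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("east", 100.0), ("west", 50.0), ("east", 25.0)],
)

print(answer(conn, "What is the revenue by region?"))
print(answer(conn, "Predict next year's churn"))
```

The design choice here is that the translation layer can only reach queries a human analyst could already write and run, so a question outside that set is declined rather than answered with unverified SQL.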

So, it's crucial to see this in two parts: One is the translation, and the other is the processing capability of the existing system. I like to use this analogy: The existing system is the "cake base," and the LLM translation is the "cherry" on top. If your cake base is ready, I can add a cherry, and you get a cherry cake. But if you don't even have the cake base, then don't bother asking me to make a cherry cake. It's impossible.

Therefore, the key takeaway is this: You need to determine if your "cake base" is ready before you add the "cherry." You can't just take a cherry and pretend it's a cherry cake. This is a common misconception.

Translation Mode is the low-hanging fruit. It's easy to get started, but it's full of potential pitfalls.

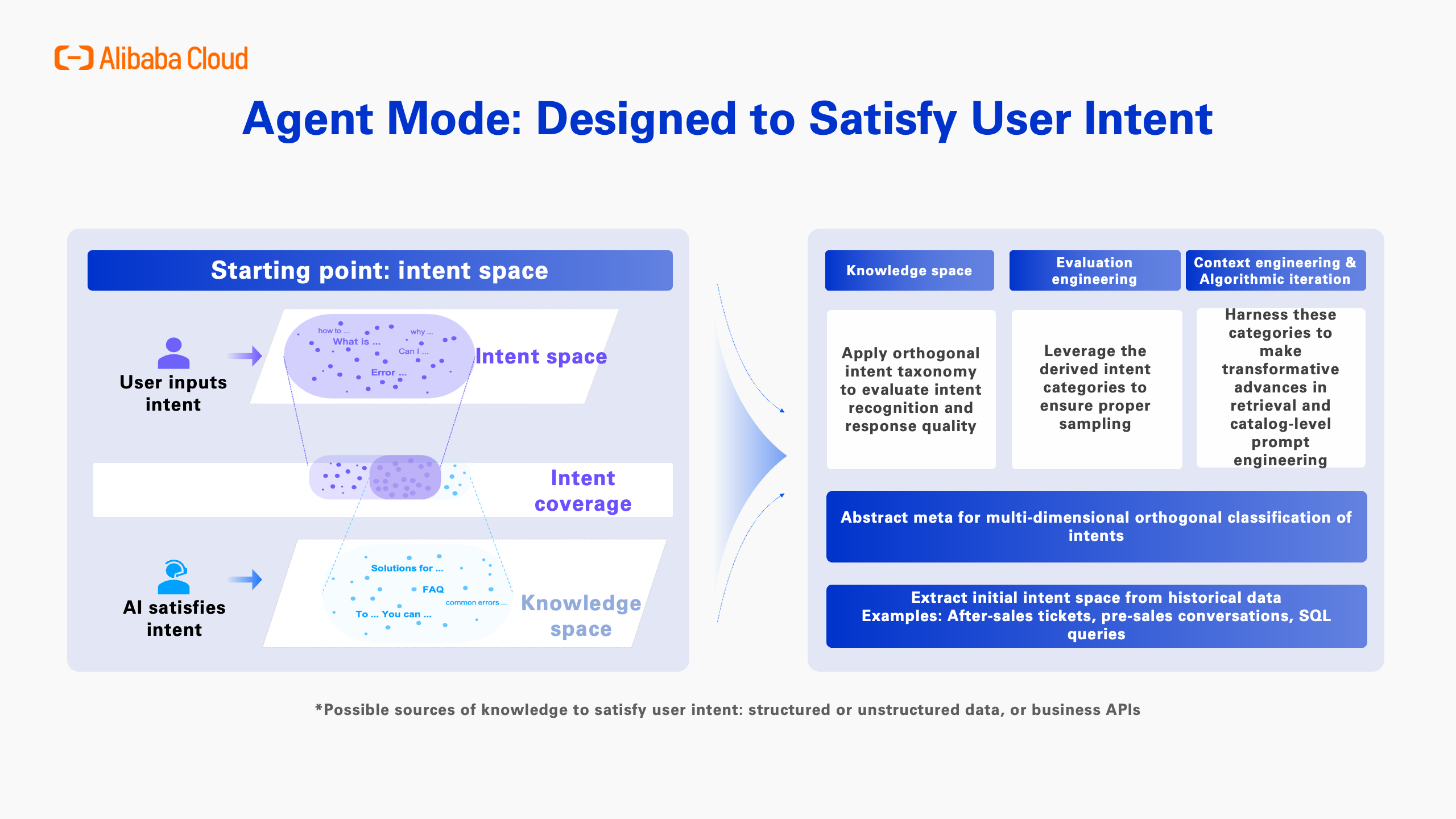

Next, let's discuss the Agent Mode. Please pay close attention to this statement: All Agent applications begin with user intent and end with satisfying user intent. If you don't start with user intent, and if you don't measure your agent's success by its ability to satisfy that intent, you are guaranteed to fail. This is the most common problem I've seen, not just within our teams but across the entire industry. That's why we've developed a method that I insist on following when we build agents internally.

The first step is to find the "intent space" for the domain. When a customer interacts with an agent, they always have an intent. So, what are all the possible intents? For example, in a customer service scenario, customers ask all sorts of questions. These questions essentially form a space, or a collection. So, the first step is to clearly define the boundaries and completeness of this collection. If you don't know its scope, you can't measure it. Only after establishing a complete intent space can you move forward.

So, the first thing is to establish the intent space. Then, you need to perform knowledge engineering based on it. I believe this is the most fundamental "necessary condition." This means asking: Are your knowledge bases and documents complete? Are the necessary APIs and structured data in place? Can you actually satisfy the customer's intents?

Once you have the knowledge and the intent space, you can then start evaluating based on intent. You can only do real work when you both understand the user's intent and possess the necessary knowledge. Without clear intent and the right knowledge, you'll just be spinning your wheels.

Based on our experience, when building an intent space for customer service scenarios, you should start with domains where user intents are being satisfied. We analyze tickets to build this intent space. Then, you can classify the intents, and check and supplement your knowledge base for each category; this is essentially knowledge engineering. Only when both the intent space and knowledge space are ready can you begin evaluation and figure out how to measure your agent's performance. And only when you can measure performance can you iterate on your engineering and algorithms. This is a fundamental principle and a mandatory lesson for anyone building agents internally.
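The sequence described above (build the intent space from tickets, then check the knowledge base per intent) can be sketched roughly as follows. The tickets, intent labels, and knowledge inventory are invented for illustration, and the labels are assumed to come from domain experts rather than from any particular classifier.

```python
from collections import Counter

# Hypothetical tickets: (ticket text, intent label assigned by a domain expert).
tickets = [
    ("How do I reset my password?", "reset_password"),
    ("Forgot password", "reset_password"),
    ("Where is my invoice?", "get_invoice"),
    ("Need an invoice copy", "get_invoice"),
    ("Can I get a refund?", "request_refund"),
]

# Hypothetical inventory: which intents have a document or API behind them.
knowledge_base = {
    "reset_password": ["doc:password-reset"],
    "get_invoice": ["api:billing.get_invoice"],
}


def build_intent_space(tickets):
    """Count how often each intent appears in the ticket history."""
    return Counter(intent for _, intent in tickets)


def knowledge_gaps(intent_space, knowledge_base):
    """Intents that appear in tickets but have no supporting knowledge."""
    return sorted(i for i in intent_space if not knowledge_base.get(i))


intent_space = build_intent_space(tickets)
gaps = knowledge_gaps(intent_space, knowledge_base)
covered = sum(n for i, n in intent_space.items() if i not in gaps)

print("intent space:", intent_space.most_common())
print("knowledge gaps:", gaps)
print("share of tickets with knowledge:", covered / sum(intent_space.values()))
```

Only once the gap list is empty, or deliberately scoped out, does it make sense to build an evaluation set per intent.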

So, let me summarize the two modes. The Translation Mode is like the cherry on top. You must first have the cake before you can add the cherry. If the cake isn't ready, just adding a cherry will lead to failure. The key to Agent Mode, however, is that it starts with user intent and ends with satisfying that intent. This is a complete, logical methodology.

Next, let's delve into the more complex Agent Mode, and look at some key points for achieving end-to-end implementation in a business system.

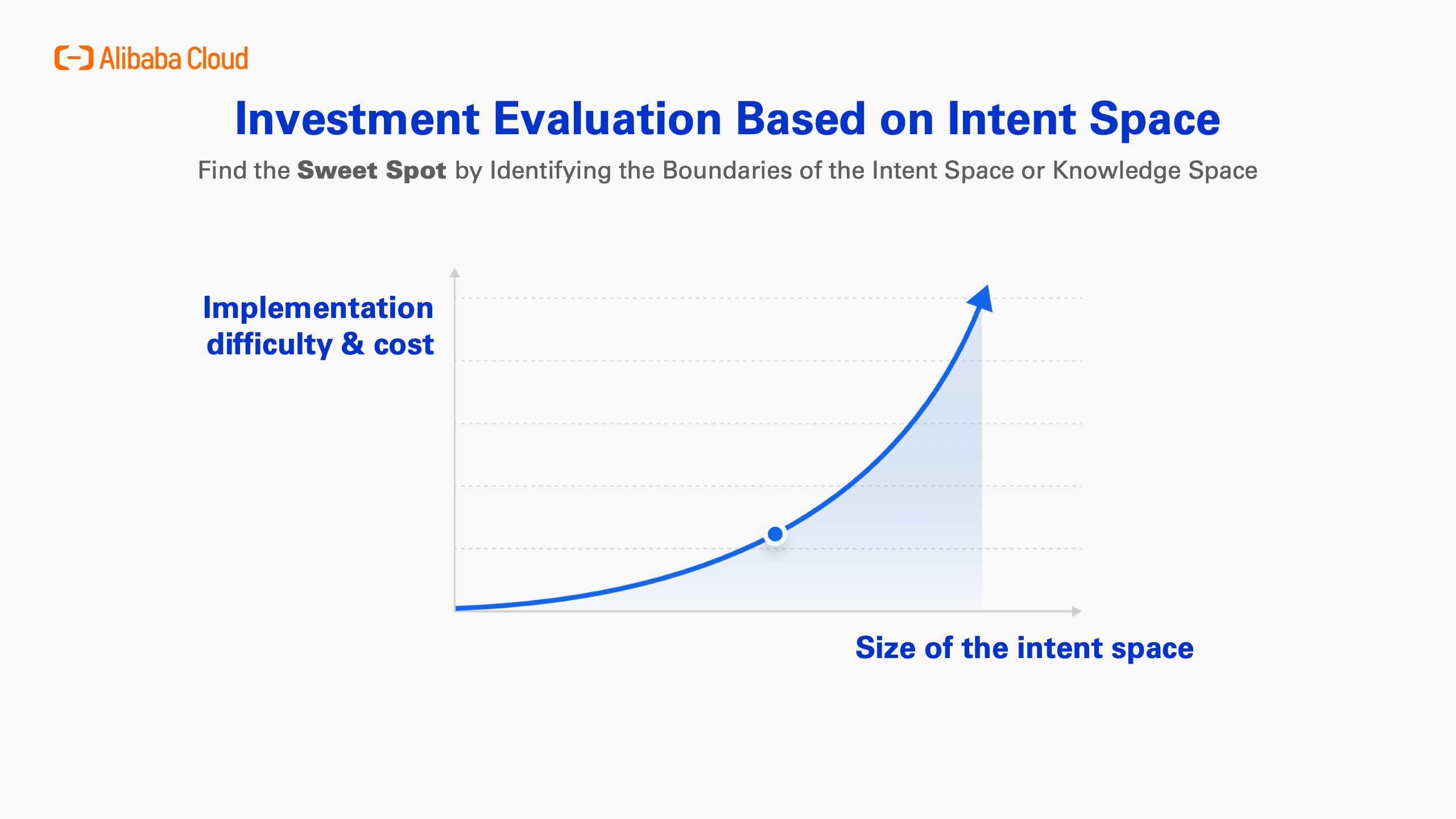

The first thing is to conduct an ROI assessment for your investment in the intent space. When you build an Agent, will it have a high ROI? That depends on the size of the intent space. If there's vast knowledge required and many broad intents, then the investment will be huge. A large intent space means you'll need to invest more in knowledge, engineering, and iteration to satisfy those intents.

So, the conclusion is clear: The first thing you must do is control the scale of the intent space. If you don't, you're setting yourself up for failure, because the subsequent investment will be unsustainable. Keep this question in mind: How do you control an Agent's intent space? If you don't manage this well, or if it's not clearly defined, you can't even calculate the ROI. And a project without a calculable ROI has a much lower chance of success.
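One possible way to control the scale of the intent space, offered here only as an assumption and not as the method used in the talk, is to rank intents by ticket volume and keep the smallest set that reaches a target share of traffic. The intent names, counts, and the 80% target below are made up.

```python
def select_intents(intent_counts, target_coverage):
    """Pick the highest-volume intents until the target traffic share is reached.

    Returns the selected intents and the share of traffic they cover.
    """
    total = sum(intent_counts.values())
    selected, covered = [], 0
    ranked = sorted(intent_counts.items(), key=lambda kv: kv[1], reverse=True)
    for intent, count in ranked:
        if covered / total >= target_coverage:
            break
        selected.append(intent)
        covered += count
    return selected, covered / total


# Hypothetical ticket volumes per intent.
counts = {
    "reset_password": 400,
    "get_invoice": 300,
    "request_refund": 150,
    "change_plan": 100,
    "other": 50,
}

selected, coverage = select_intents(counts, target_coverage=0.8)
print("in scope:", selected)
print("traffic covered:", coverage)
```

With these inputs, three of the five intents cover 85% of the volume, so the knowledge, engineering, and evaluation investment can be estimated for three intents instead of an open-ended space.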

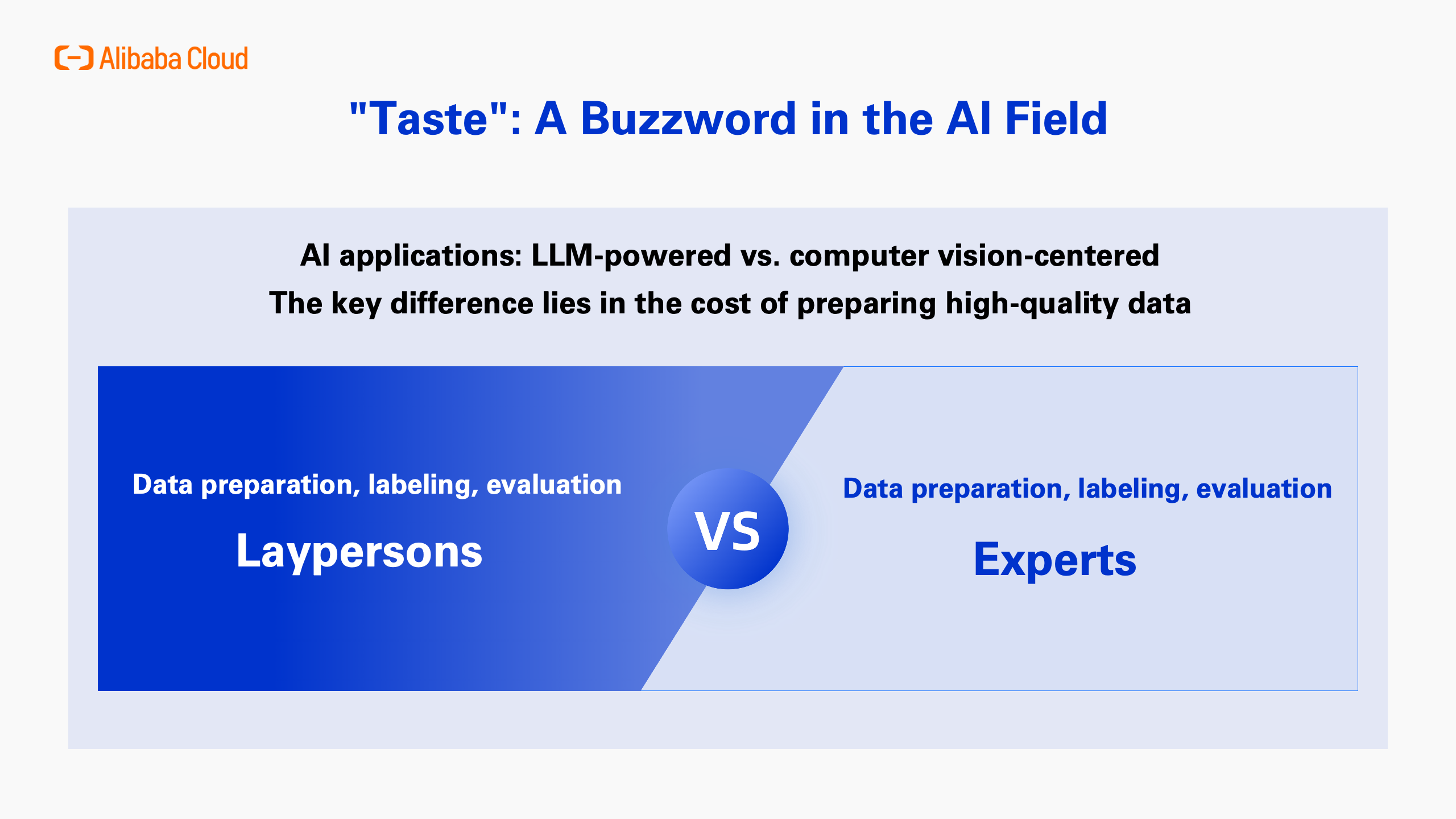

Second, you've probably heard this word being used a lot in the AI field recently: "taste." In the age of AI, taste is incredibly important. So where does this idea of taste come from? My guess is that it traces back to a 1995 interview with Steve Jobs. A reporter asked him how he knew his decisions were the right ones. Jobs thought briefly and replied, "Ultimately, it comes down to taste." This is highly relevant to the key challenge in this new wave of AI: evaluation.

So, what's the biggest difference between this AI revolution and the previous one? Well, the previous wave of deep learning mainly focused on computer vision. How did we evaluate data at that time? We would draw circles around cats, dogs, traffic lights, cars, and people in an image. That's how data labeling was done. For evaluation, you just had to check if the classification was correct—was the cat mistaken for a dog? If not, great. This is how ImageNet was created. Fei-Fei Li hired many outsourced teams to do the labeling. This kind of work is perfectly suited for outsourcing, because anyone can do it. The reason is simple: distinguishing a cat from a dog isn't hard. Even in specialized fields like fault or defect detection, labeling is quite straightforward.

But this time, it's a different story. With LLMs, the input is a short essay, and the output is also a short essay. This is especially true in professional domains, making direct measurement very challenging. This is why "taste" is so important: there are no standard answers. Many of us have taken college entrance exams. Is there a standard answer for the essay question? No. What about open-ended questions, like summarizing the main idea? None there, either. That's just what evaluating LLMs is like. So, the key difference with the current wave of LLMs is that for measurement and evaluation, there are no standard answers. And because of that, evaluation carries the highest cost, and it becomes the biggest bottleneck for implementation. How do you break through this bottleneck? The only way is through heavy investment.

So, when we talk about "taste," we're really talking about the challenge of evaluation. You can only truly start your evaluation engineering when you have the foundational elements we discussed earlier. Both manual and automated evaluation are highly dependent on those elements. This is because the output is non-standard; there are no right or wrong answers. Think about it—why is programming advancing so quickly? Because math and code have standard answers that can be validated by an editor. But for plain text, there are no standard answers.

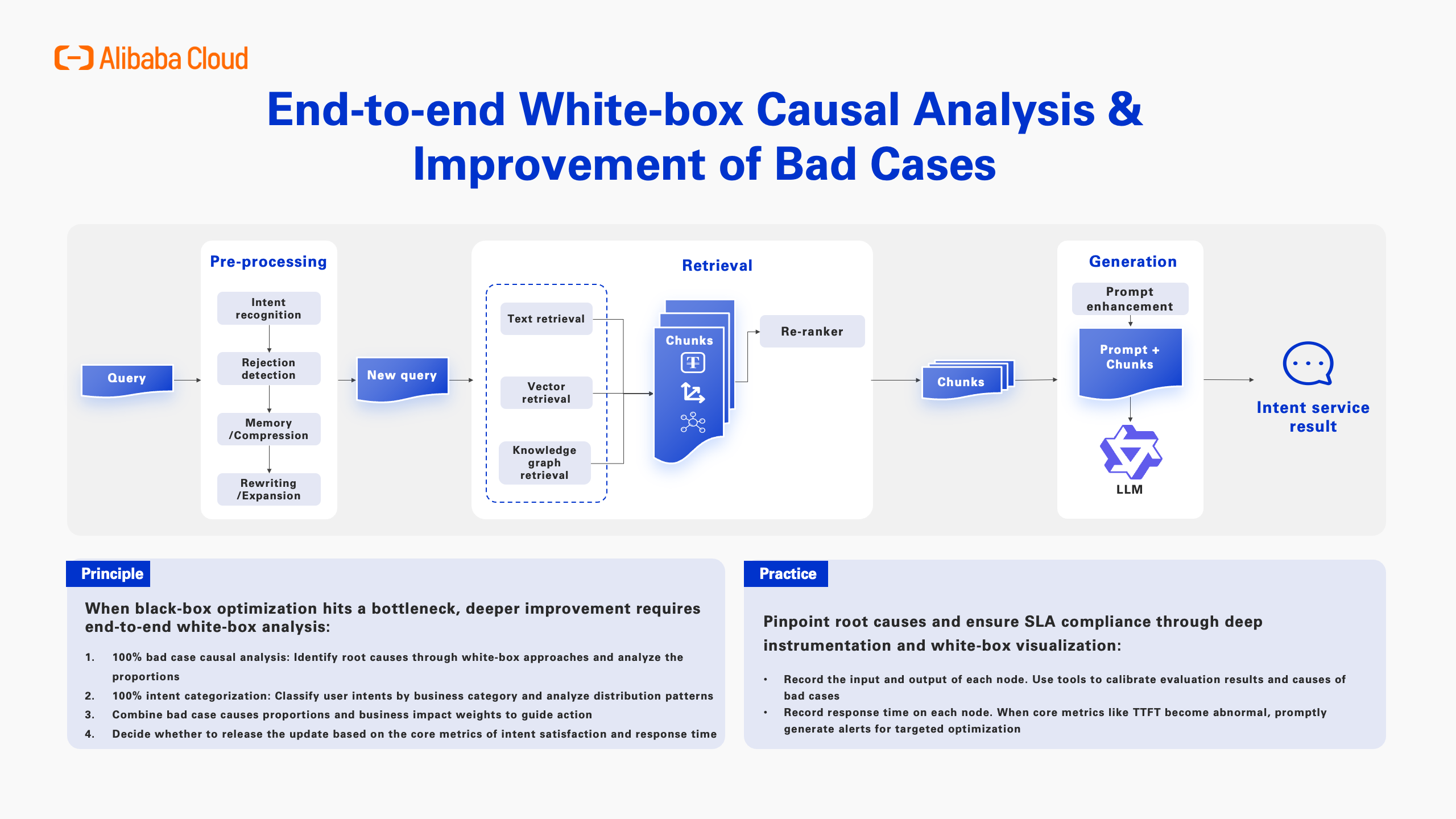

In this process, there's a very important concept called E2E causal analysis. An agent's process involves many steps. Within these complex workflows and orchestration logics, if an intent isn't satisfied, we must be able to pinpoint which step caused the problem in that bad case. Every bad case should be attributed to a specific step in the engineering process. Only then can we cluster the root causes and make improvements.

The diagram here summarizes a common finding from our experience. If you can measure performance, you'll discover that most problems occur at the data layer—specifically with unstructured and structured data APIs. If these fundamental capabilities are lacking, it becomes the main reason why Agents fail. Some problems might also arise in steps like knowledge pre-processing, intent recognition, context retrieval, or the final intent summary. Data is extremely important, but you can't even begin to talk about data without evaluation.
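The attribution and clustering of bad cases can be sketched as below. The step names follow the ones mentioned above, but their ordering, the example cases, and the rule of blaming the earliest failing step are assumptions made for illustration.

```python
from collections import Counter

# Assumed pipeline order, from the data layer up to the final answer.
STEPS = [
    "data_api",
    "knowledge_preprocessing",
    "intent_recognition",
    "context_retrieval",
    "final_summary",
]

# Hypothetical bad cases, each with the set of steps whose checks failed.
bad_cases = [
    {"id": "case-1", "failed": {"data_api"}},
    {"id": "case-2", "failed": {"data_api", "final_summary"}},
    {"id": "case-3", "failed": {"intent_recognition"}},
    {"id": "case-4", "failed": {"context_retrieval", "final_summary"}},
    {"id": "case-5", "failed": set()},
]


def root_cause(case):
    """Attribute a bad case to the earliest failing step in pipeline order."""
    for step in STEPS:
        if step in case["failed"]:
            return step
    return "unattributed"


# Cluster bad cases by root cause to see where to invest first.
root_causes = Counter(root_cause(c) for c in bad_cases)
for step, n in root_causes.most_common():
    print(f"{step}: {n}")
```

Blaming the earliest failing step is a simplification: a downstream failure is often a symptom of an upstream one, but cases left as "unattributed" still need manual review.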

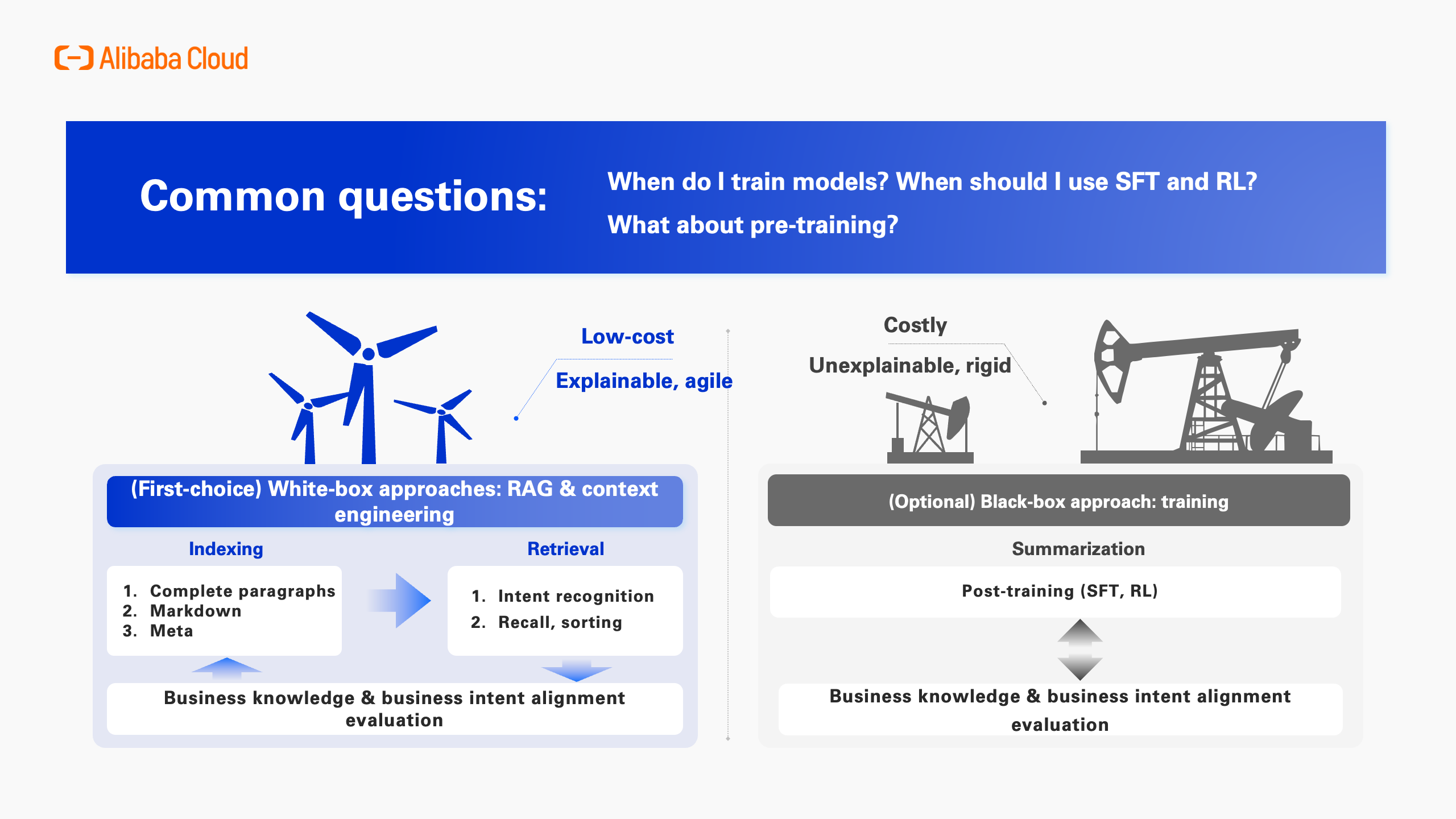

Another common question is, "Do we need to do model training?" Our answer is very clear: You should always start by using the base model's API in a transparent, white-box manner. Focus on evaluation, data, and causal analysis. Training should only be introduced when you have high-quality data and strong evaluation capabilities. The reason is simple: without them, any investment in training is a waste. Training is time-consuming and expensive. If you can't measure the outcome or don't have enough data, it's just not a smart move. So, we only bring in training when it's absolutely necessary, that is, when the base model alone isn't enough. For instance, if real-time performance is critical, we might train a smaller model to meet those needs. We call this a "large-and-small model" approach. The key takeaway is: only train when you have to.

In Agent Mode, we handle conversions from speech-to-text and text-to-speech, along with complex workflows and Agent scheduling. The end goal is simple: a customer expresses a need, and we satisfy their intent. This is a comprehensive process of understanding and satisfying user intent, and it requires integrating multiple systems to build an Agent that can genuinely serve customers and even handle sales.

This is similar to Chat BI, which is essentially another form of Agent Mode. It does more than just translate natural language into SQL; after the SQL is executed, the results must be translated back into natural language. But the key here is the underlying data mid-end, and whether the existing data governance is solid. If the existing system—the "cake base," so to speak—is flawed, you can't achieve good Chat BI results. Chat BI itself is just a technical tool. Ultimately, you must determine if the specific domain already has a mature data architecture and established data analysis practices. Only by adding AI on top of this solid foundation can you create that "magical" effect.

As we drive AI transformation within Alibaba Cloud, our core mission is to provide "Result as a Service" (RaaS) to our business departments. We are likely one of the few teams today that can truly implement end-to-end solutions at scale and deliver concrete business results. Our method for achieving this is called RIDE, which stands for Reorganize, Identify, Define, and Execute. Here's the key takeaway: No matter how hard you work on the "necessary conditions," you cannot succeed without investing in "sufficient conditions." The core purpose of the RIDE methodology is to shift the focus toward identifying and assembling the complete set of sufficient conditions, because only then can teams achieve successful and effective AI implementation.

To circle back to the "lift" analogy from the beginning, I'd like to follow up with the iceberg analogy. Above the water, my team and I are driving the digital transformation of businesses. We can achieve this because of what lies beneath the surface: the powerful foundation of Alibaba Cloud. Our powerful tools for enterprise applications are our MaaS platform, Model Studio, which includes Qwen and various model services; our PaaS offerings like PAI, ODPS, and databases; and our underlying IaaS like ECS, Lingjun, storage, and network services. The cost of these capabilities is constantly decreasing, while their features continue to expand. So, when a company chooses a strong technical foundation, it's like getting on a better "lift" for its digital transformation, one that's accelerated by technological growth and falling costs. I personally believe that Alibaba Cloud is this "great lift." Once a business adopts our cloud, the lift provides continuous upward momentum for their digital transformation.
