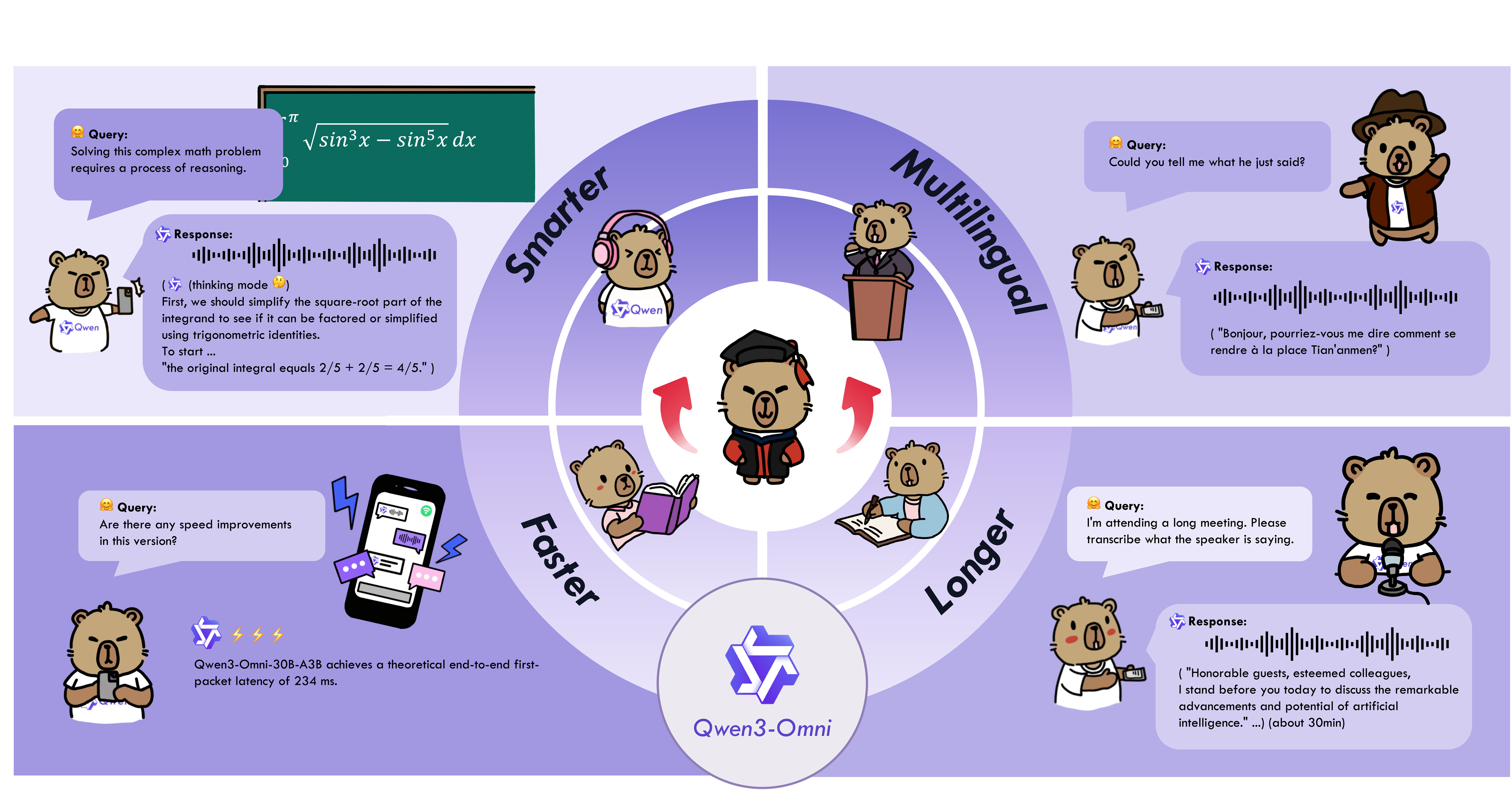

Qwen3-Omni is a natively end-to-end multilingual omni model. It processes text, images, audio, and video, and delivers real-time streaming responses in both text and natural speech. We introduce several upgrades to improve performance and efficiency.

Key Features:

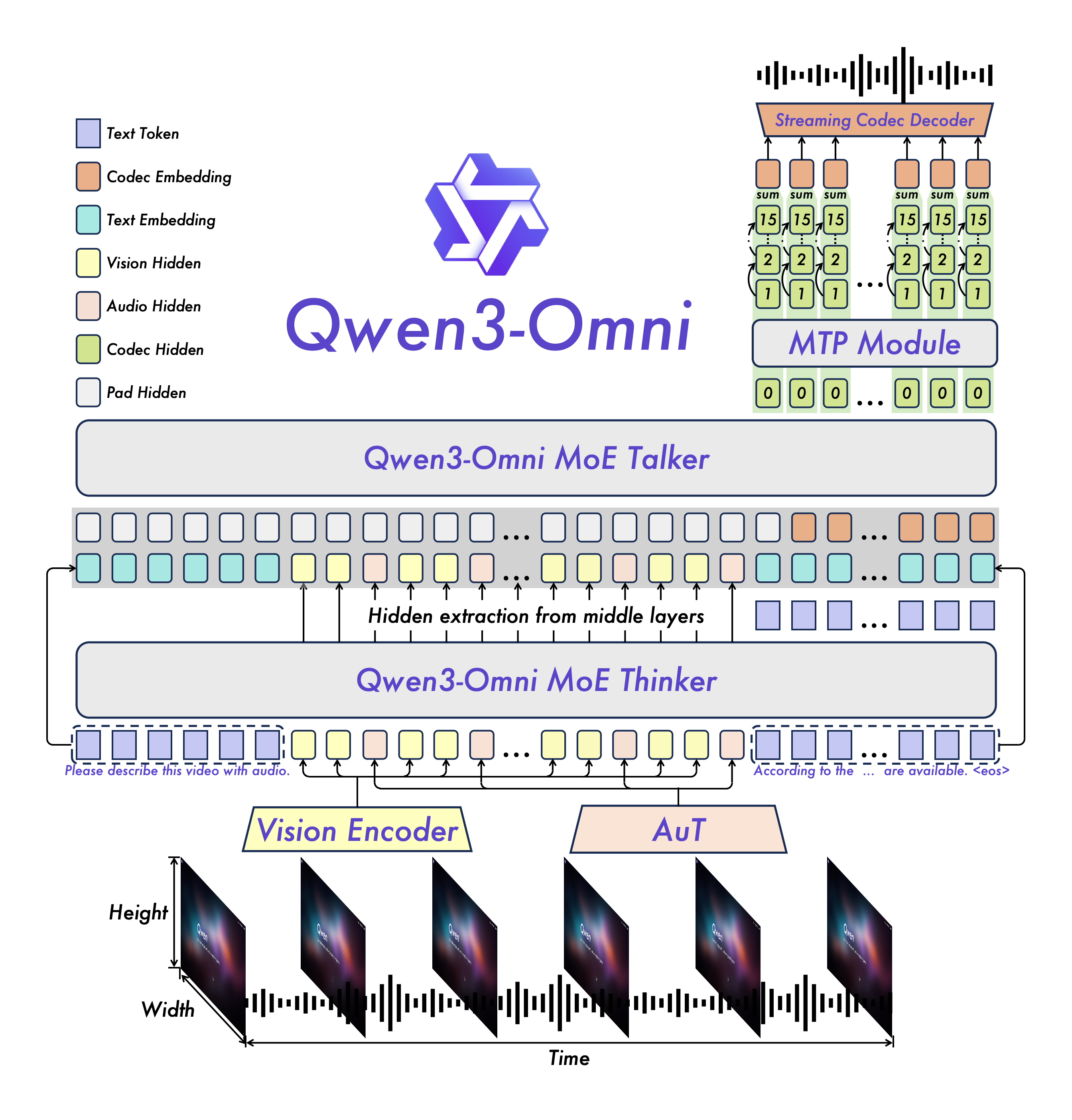

Qwen3-Omni adopts the Thinker-Talker architecture. Thinker is tasked with text generation, while Talker generates streaming speech tokens from high-level representations it receives directly from Thinker. To achieve ultra-low-latency streaming, Talker autoregressively predicts a multi-codebook sequence. At each decoding step, an MTP module outputs the residual codebooks for the current frame, after which the Code2Wav renderer incrementally synthesizes the corresponding waveform, enabling frame-by-frame streaming generation.
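The decoding loop described above can be pictured with a toy sketch. This is only an illustration of the control flow (one frame per step, residual codebooks filled in, waveform rendered immediately), not the model's actual implementation. The function names `talker_step`, `mtp_residuals`, `code2wav`, and `stream_speech`, the codebook count, the vocabulary size, and the frame size are all hypothetical stand-ins, and random numbers replace the real networks.

```python
import random
from typing import Iterator, List

NUM_CODEBOOKS = 4       # hypothetical; the post does not state the count
CODEBOOK_SIZE = 1024    # hypothetical vocabulary size per codebook
SAMPLES_PER_FRAME = 8   # hypothetical toy frame length in samples


def talker_step(rng: random.Random) -> int:
    """Stand-in for Talker: predict the first-codebook token of the next frame."""
    return rng.randrange(CODEBOOK_SIZE)


def mtp_residuals(first_code: int, rng: random.Random) -> List[int]:
    """Stand-in for the MTP module: produce the residual codebooks of this frame."""
    return [rng.randrange(CODEBOOK_SIZE) for _ in range(NUM_CODEBOOKS - 1)]


def code2wav(frame: List[int]) -> List[float]:
    """Stand-in for Code2Wav: render one codec frame into a chunk of samples."""
    level = sum(frame) / (len(frame) * CODEBOOK_SIZE)
    return [level] * SAMPLES_PER_FRAME


def stream_speech(num_frames: int, seed: int = 0) -> Iterator[List[float]]:
    """Yield one audio chunk per decoding step, without waiting for later frames."""
    rng = random.Random(seed)
    for _ in range(num_frames):
        first = talker_step(rng)
        frame = [first] + mtp_residuals(first, rng)
        yield code2wav(frame)  # this chunk can be played while decoding continues


chunks = list(stream_speech(num_frames=3))
```

Because `stream_speech` is a generator, a caller can start playback as soon as the first chunk arrives, which mirrors the frame-by-frame streaming the paragraph describes.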

AuT: The audio encoder adopts the AuT, trained on 20 million hours of audio data, providing extremely strong general audio representation capability.

MoE: Both the Thinker and Talker adopt MoE architectures to support high concurrency and fast inference.

Multi-Codebook: The Talker adopts a multi-codebook autoregressive scheme in which the Talker generates one codec frame per step, while the MTP module produces the remaining residual codebooks.

Mixing unimodal and cross-modal data during the early stage of text pretraining can achieve parity across all modalities—i.e., no modality-specific performance degradation—while markedly enhancing cross-modal capabilities.
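To make the MoE feature listed above more concrete, here is a generic sketch of sparse expert routing. It assumes top-k gating with renormalized weights, which is a common MoE design. The post does not say which routing scheme Qwen3-Omni uses, so the function `moe_forward`, the `top_k` value, and the toy experts are all hypothetical.

```python
import math
from typing import Callable, List, Sequence


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of router logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    router_logits: Sequence[float],
    experts: Sequence[Callable[[float], float]],
    top_k: int = 2,
) -> float:
    """Run only the top_k highest-scoring experts and mix their outputs."""
    probs = softmax(router_logits)
    # Pick the indices of the top_k experts by router probability.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    # Renormalize so the selected experts' weights sum to 1.
    norm = sum(probs[i] for i in top)
    return sum(probs[i] / norm * experts[i](x) for i in top)


# Four toy experts; only two are evaluated for this input.
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x, lambda x: x * x]
y = moe_forward(3.0, router_logits=[2.0, 2.0, -5.0, -5.0], experts=experts, top_k=2)
```

Only the selected experts run for a given input, so per-token compute stays well below the total parameter count. That is the general reason MoE layers help with concurrency and inference speed.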

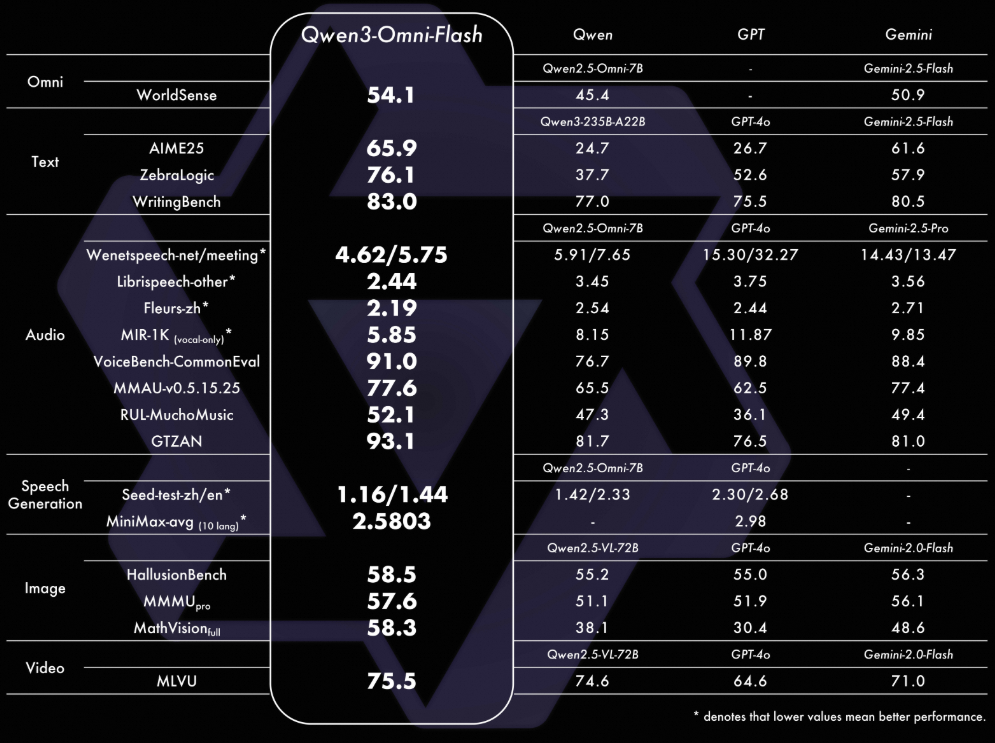

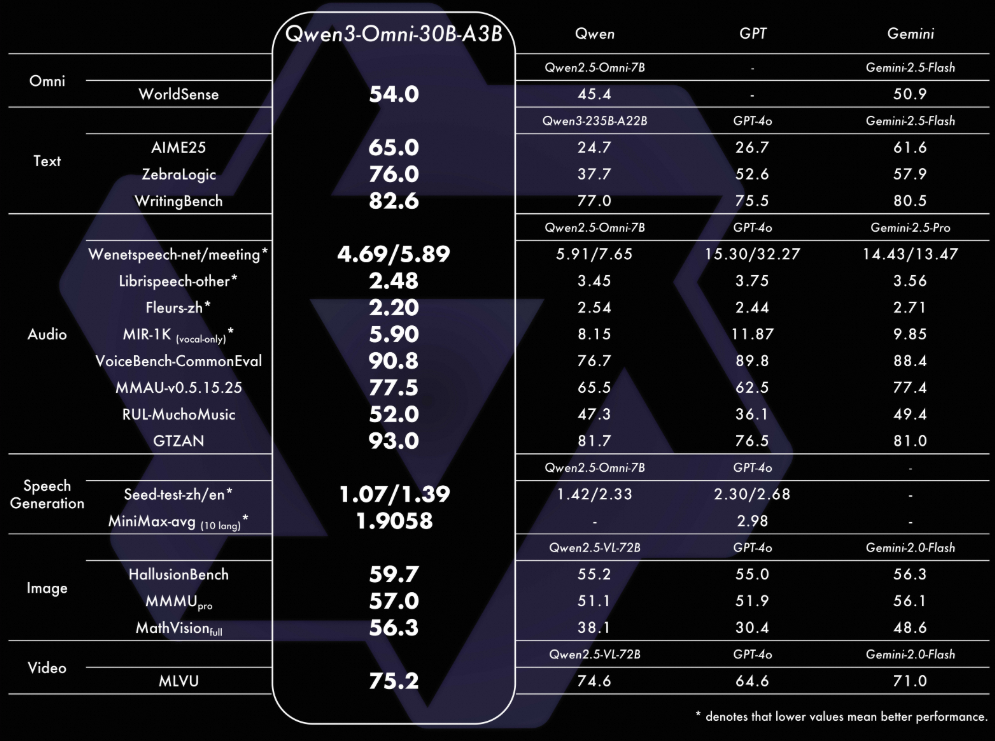

Qwen3-Omni achieves Gemini-2.5-Pro-level performance in speech recognition and instruction following tasks.

Substantial latency reduction across the entire pipeline, from the encoders to Thinker, Talker, and Code2Wav, enables fully streaming generation, with audio streaming from the first codec frame.

We conducted a comprehensive evaluation of Qwen3-Omni, which matches the performance of same-sized single-modal models within the Qwen series and excels particularly on audio tasks. Across 36 audio and audio-visual benchmarks, Qwen3-Omni achieves open-source SOTA on 32 benchmarks and overall SOTA on 22, outperforming strong closed-source models such as Gemini-2.5-Pro, Seed-ASR, and GPT-4o-Transcribe.

We are eager to hear your feedback and see the innovative applications you create with Qwen3-Omni. In the near future, we will further advance the model along multiple axes, including multi-speaker ASR, video OCR, and audio–video proactive learning, and will enhance support for agent-based workflows and function calling.

Original source: https://qwen.ai/blog?id=fdfbaf2907a36b7659a470c77fb135e381302028&from=research.research-list
