We introduce Qwen3-Coder-Next, an open-weight language model designed specifically for coding agents and local development. It is built on Qwen3-Next-80B-A3B-Base, which adopts a novel architecture combining hybrid attention with Mixture-of-Experts (MoE). Qwen3-Coder-Next has been agentically trained at scale, combining executable task synthesis, environment interaction, and reinforcement learning. This gives it strong coding and agentic capabilities at significantly lower inference cost.
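The lower inference cost comes largely from sparse MoE routing. Each token activates only a few experts, so per-token compute follows the activated parameters rather than the total. The "A3B" in the base model's name denotes roughly 3B activated out of 80B total. The sketch below is a generic top-k router written for illustration only. The expert count, `top_k`, gating details, and the toy scaling "experts" are assumptions and do not reflect Qwen3-Next's actual configuration. The hybrid-attention component is not shown.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over router logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router_logits: Sequence[float],
                experts: Sequence[Expert], top_k: int) -> Vector:
    """Generic top-k MoE layer (illustrative, not Qwen3-Next's configuration).

    Only the top_k selected experts are evaluated, so per-token compute
    tracks the activated parameters rather than the total parameter count.
    """
    probs = softmax(router_logits)
    # Pick the top_k experts by gate probability (ties keep index order).
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over selected experts
    out = [0.0] * len(x)
    for i in chosen:
        y = experts[i](x)  # unselected experts are never called
        w = probs[i] / norm
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


def scaling_expert(scale: float) -> Expert:
    """Toy expert that scales its input; stands in for a feed-forward block."""
    return lambda v: [scale * t for t in v]


if __name__ == "__main__":
    experts = [scaling_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
    # Router prefers experts 2 and 3 equally, so output = 0.5*3x + 0.5*4x.
    print(moe_forward([1.0, -1.0], [0.0, 0.0, 5.0, 5.0], experts, top_k=2))  # [3.5, -3.5]
    # "80B-A3B": about 3B of 80B parameters are activated per token.
    print(f"activated fraction ~ {3 / 80:.2%}")  # 3.75%
```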

Rather than relying solely on parameter scaling, Qwen3-Coder-Next focuses on scaling agentic training signals. We train the model using large collections of verifiable coding tasks paired with executable environments, enabling the model to learn directly from environment feedback. This includes:

This recipe emphasizes long-horizon reasoning, tool usage, and recovery from execution failures, which are essential for real-world coding agents.
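Learning from environment feedback on verifiable tasks can be sketched as two pieces: a reward function that executes candidate code, and a multi-turn rollout loop that retries after failures. This is a minimal illustration under stated assumptions, not Qwen's training infrastructure. The names `VerifiableTask`, `run_in_env`, and `rollout` are hypothetical, the reward is a simple binary pass/fail over unit tests, and scripted attempts stand in for the model policy.

```python
from dataclasses import dataclass
from typing import Iterator, List, Tuple


@dataclass
class VerifiableTask:
    """Hypothetical verifiable task: a prompt plus tests that define success."""
    prompt: str
    tests: List[Tuple[tuple, object]]  # (positional args, expected return value)


def run_in_env(task: VerifiableTask, source: str) -> Tuple[float, str]:
    """Execute candidate code against the task's tests; return (reward, feedback).

    The reward is binary (1.0 only if every test passes). The feedback string
    is the execution signal an agent can use to recover on its next turn.
    WARNING: exec() is not a sandbox; real environments isolate execution.
    """
    namespace: dict = {}
    try:
        exec(source, namespace)
        solve = namespace["solve"]
        for args, expected in task.tests:
            got = solve(*args)
            if got != expected:
                return 0.0, f"args={args!r}: got {got!r}, expected {expected!r}"
    except Exception as exc:  # crashes and syntax errors become feedback
        return 0.0, f"{type(exc).__name__}: {exc}"
    return 1.0, "all tests passed"


def rollout(
    task: VerifiableTask, attempts: Iterator[str], max_turns: int
) -> List[Tuple[float, str]]:
    """Multi-turn episode: act, observe feedback, retry until success or budget.

    `attempts` stands in for the policy. A real agent would condition each new
    attempt on the previous feedback; here the attempts are scripted.
    """
    trajectory: List[Tuple[float, str]] = []
    for _ in range(max_turns):
        source = next(attempts, None)
        if source is None:  # policy has nothing more to try
            break
        reward, feedback = run_in_env(task, source)
        trajectory.append((reward, feedback))
        if reward == 1.0:  # verified success ends the episode
            break
    return trajectory


if __name__ == "__main__":
    task = VerifiableTask("Return the sum of a list.", tests=[(([1, 2, 3],), 6), (([],), 0)])
    scripted = iter([
        "def solve(xs): return xs[0] + sum(xs)",  # runs, but wrong answer
        "def solve(xs): return sum(xs",           # syntax error
        "def solve(xs): return sum(xs)",          # correct
    ])
    for turn, (reward, feedback) in enumerate(rollout(task, scripted, max_turns=5), 1):
        print(turn, reward, feedback)
```

In an RL setup, the episode reward from a loop like this would drive policy updates. That optimization step is omitted here.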

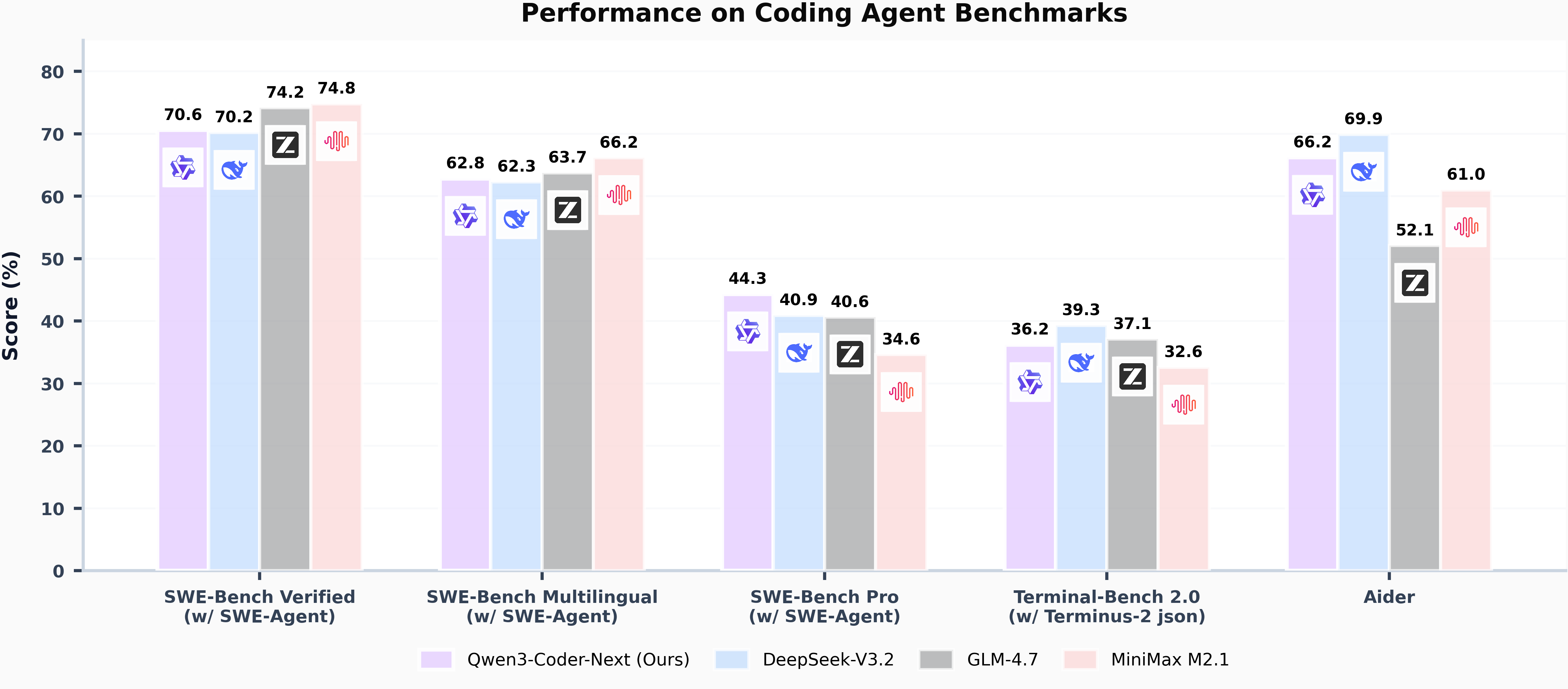

The figure below summarizes performance across several widely used coding agent benchmarks, including SWE-Bench (Verified, Multilingual, and Pro), TerminalBench 2.0, and Aider.

The figure demonstrates that:

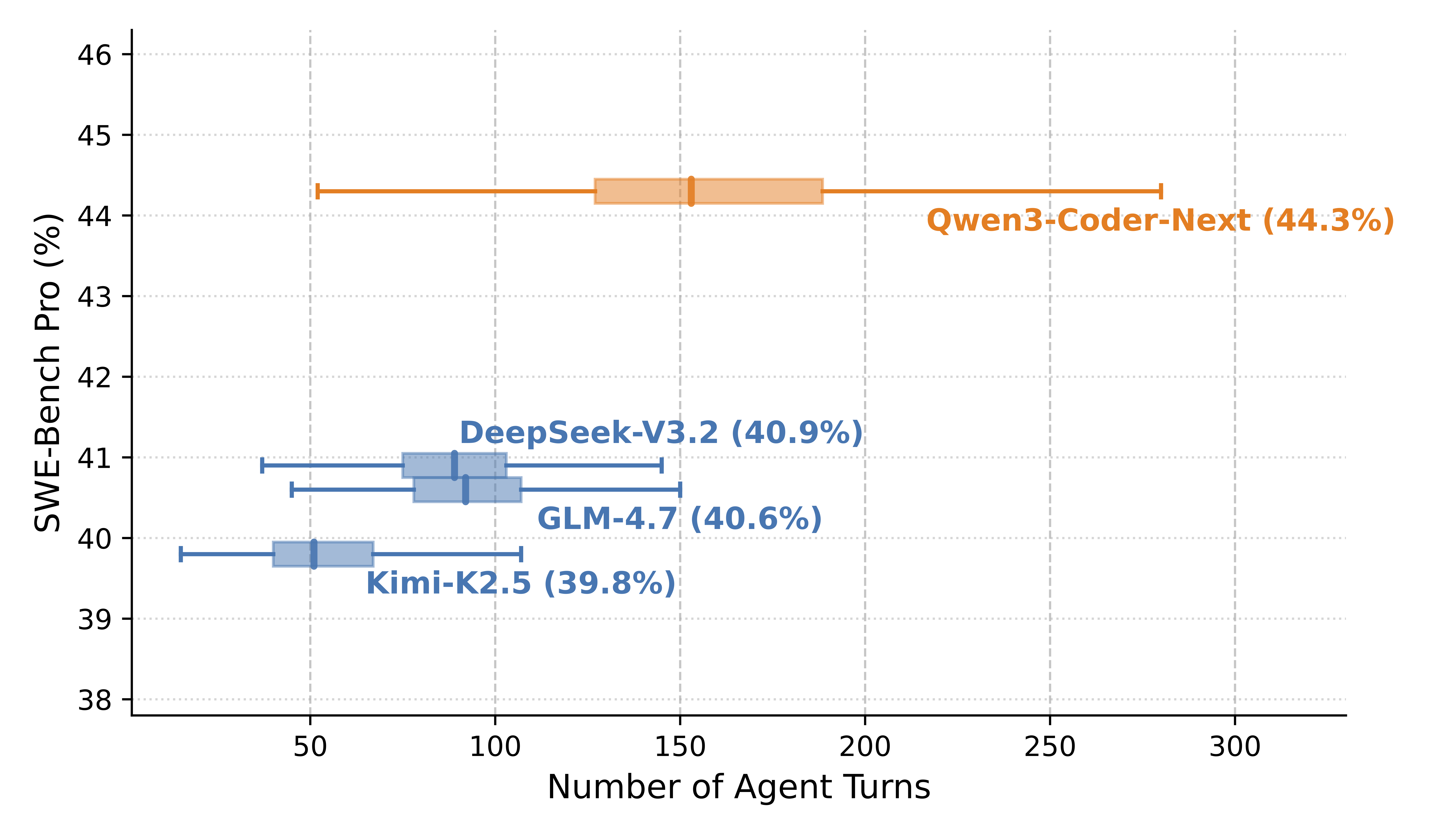

As shown in the figure below, our model achieves strong results on SWE-Bench Pro as the number of agent turns is scaled up, providing evidence that it excels at long-horizon reasoning in multi-turn agentic tasks.
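One way to read a turn-scaling result is as the fraction of tasks resolved within a given maximum number of agent turns. The sketch below computes such a curve from per-task "first solved at turn t" records. The function name and the sample outcomes are hypothetical. The sketch also simplifies by assuming that a run with a smaller budget behaves like the first turns of a longer run, which real agents, whose behavior may adapt to the budget, need not satisfy.

```python
from typing import Dict, List, Optional, Sequence


def resolve_rate_by_budget(
    solved_at_turn: Sequence[Optional[int]], budgets: Sequence[int]
) -> Dict[int, float]:
    """Fraction of tasks resolved within each maximum-turn budget.

    solved_at_turn[i] is the turn on which task i first passed verification,
    or None if it never did. Simplifying assumption: a run under budget B
    behaves like the first B turns of an unlimited run.
    """
    n = len(solved_at_turn)
    if n == 0:
        return {b: 0.0 for b in budgets}
    return {
        b: sum(1 for t in solved_at_turn if t is not None and t <= b) / n
        for b in budgets
    }


if __name__ == "__main__":
    # Hypothetical per-task outcomes for illustration only, not reported results.
    outcomes: List[Optional[int]] = [4, 18, None, 55, 9, 120, None, 30]
    for budget, rate in resolve_rate_by_budget(outcomes, [10, 50, 100, 200]).items():
        print(f"max_turns={budget:>3}: {rate:.1%} resolved")
```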

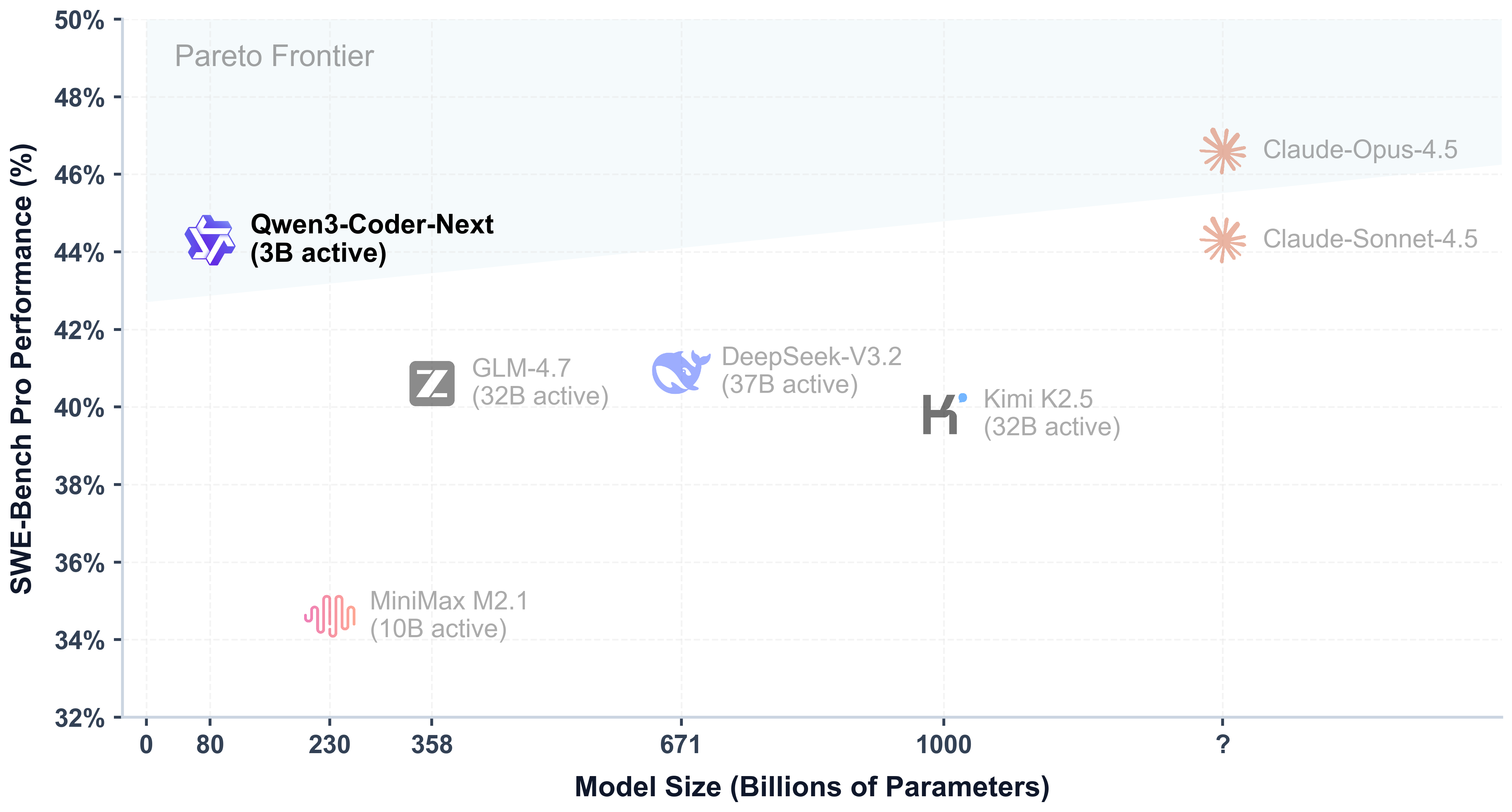

This figure highlights how Qwen3-Coder-Next achieves an improved Pareto tradeoff between efficiency and performance.
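"Improved Pareto tradeoff" has a precise meaning. A model sits on the efficiency/performance frontier when no other model is at least as cheap and at least as accurate while being strictly better on one of the two axes. The sketch below computes that frontier. The choice of cost axis (for example, activated parameters) and all model names and numbers are hypothetical placeholders, not values read from the figure.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelPoint:
    """One model on an efficiency/performance plot (values are hypothetical)."""
    name: str
    cost: float   # lower is better, e.g. activated parameters in billions
    score: float  # higher is better, e.g. benchmark resolve rate in %


def dominates(a: ModelPoint, b: ModelPoint) -> bool:
    """True if a is no worse than b on both axes and strictly better on one."""
    no_worse = a.cost <= b.cost and a.score >= b.score
    strictly_better = a.cost < b.cost or a.score > b.score
    return no_worse and strictly_better


def pareto_frontier(points: List[ModelPoint]) -> List[ModelPoint]:
    """Return the non-dominated points, ordered by increasing cost (O(n^2))."""
    frontier = [p for p in points if not any(dominates(q, p) for q in points)]
    return sorted(frontier, key=lambda p: p.cost)


if __name__ == "__main__":
    # Hypothetical placeholder models, not values from the figure.
    models = [
        ModelPoint("model_a", cost=3.0, score=60.0),
        ModelPoint("model_b", cost=30.0, score=65.0),
        ModelPoint("model_c", cost=35.0, score=55.0),
        ModelPoint("model_d", cost=10.0, score=50.0),
    ]
    print([m.name for m in pareto_frontier(models)])  # ['model_a', 'model_b']
```

Here `model_c` and `model_d` are dominated: some other model matches or beats each of them on both axes. Pushing the frontier toward lower cost at equal score is what an improved tradeoff means.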

This comparison makes the efficiency story clear:

Qwen3-Coder-Next shows promising results on coding agent benchmarks, offering good speed and reasoning ability for practical use. While it performs competitively, even against some larger open-source models, there is still much room for improvement.

Looking ahead, we believe strong agent skills (such as autonomous tool use, tackling hard problems, and managing complex tasks) are key to building better coding agents. Next, we plan to improve the model’s reasoning and decision-making, support more tasks, and iterate quickly based on how people use it.

If you find our work helpful, feel free to cite it:

@techreport{qwen_qwen3_coder_next_tech_report,
  title = {Qwen3-Coder-Next Technical Report},
  author = {{Qwen Team}},
  url = {https://github.com/QwenLM/Qwen3-Coder/blob/main/qwen3_coder_next_tech_report.pdf},
  note = {Accessed: 2026-02-03}
}
