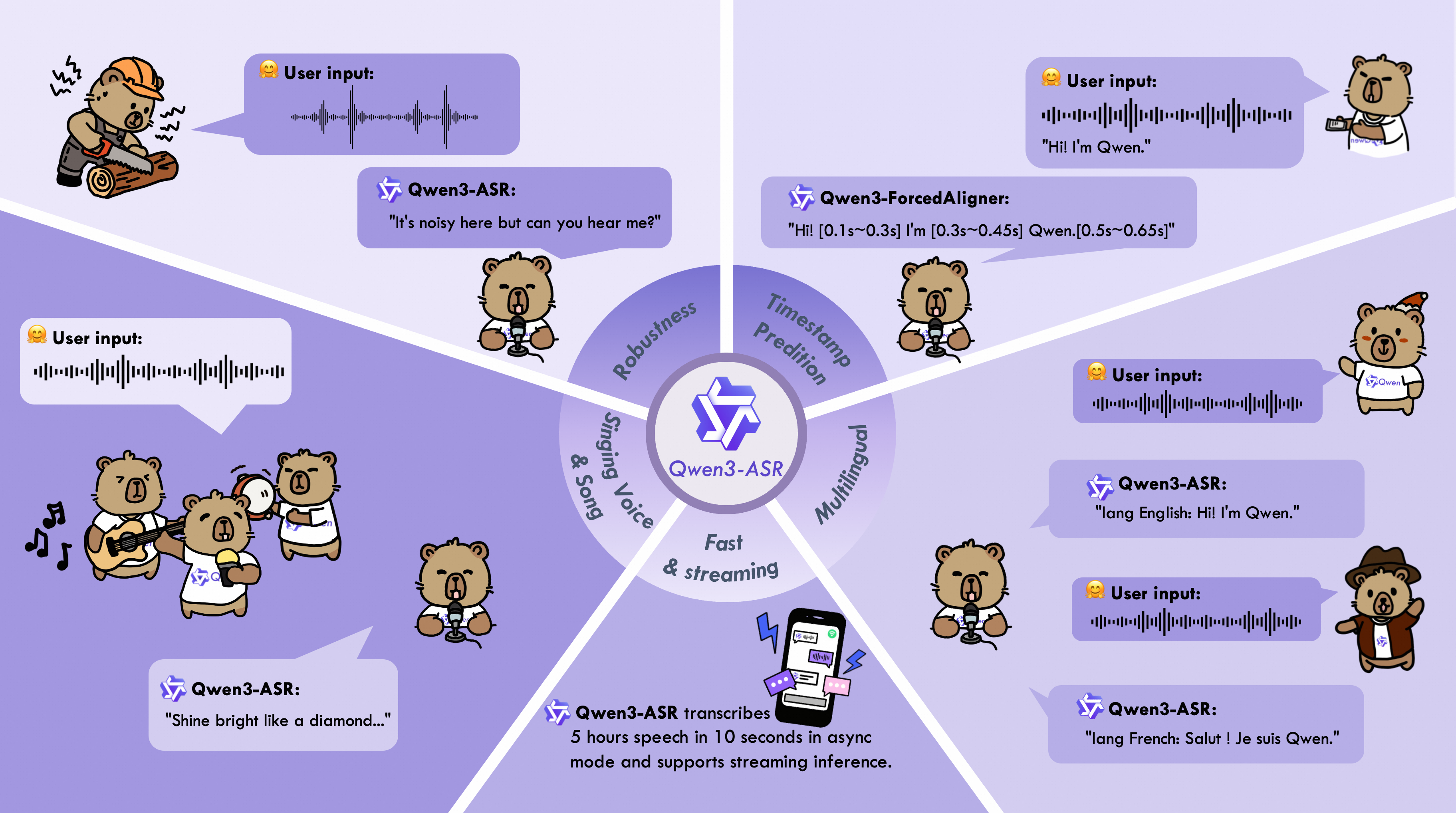

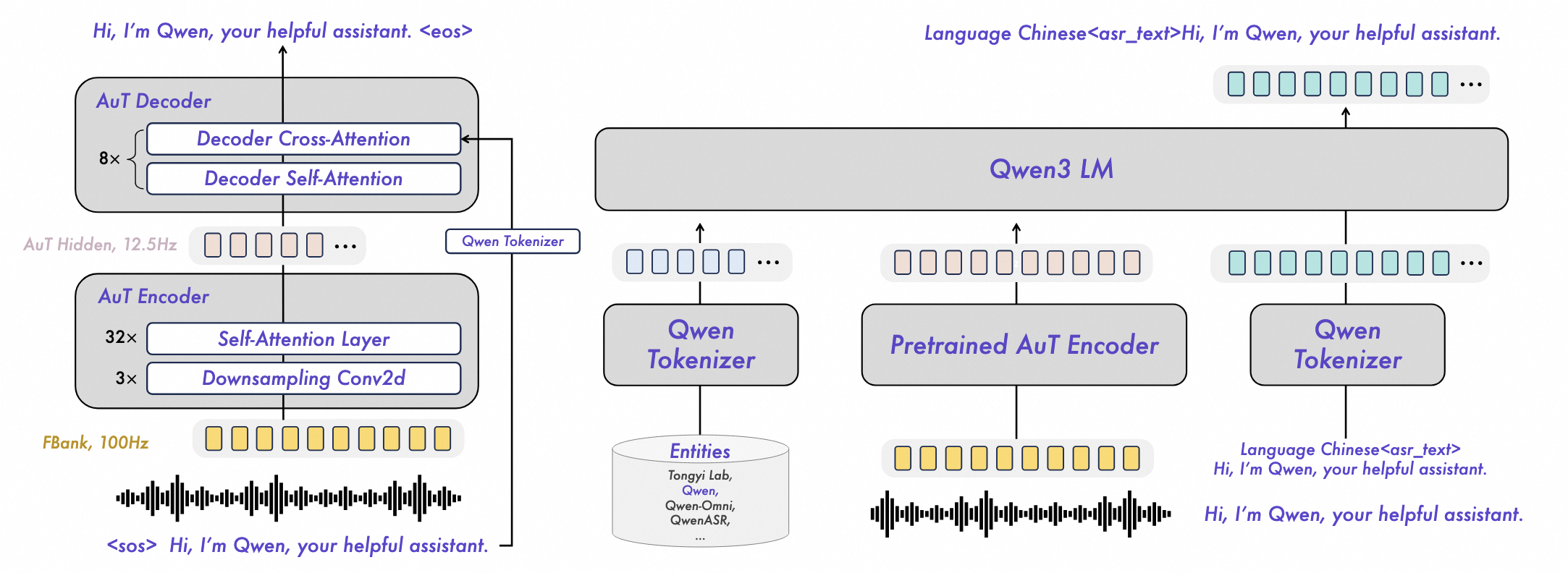

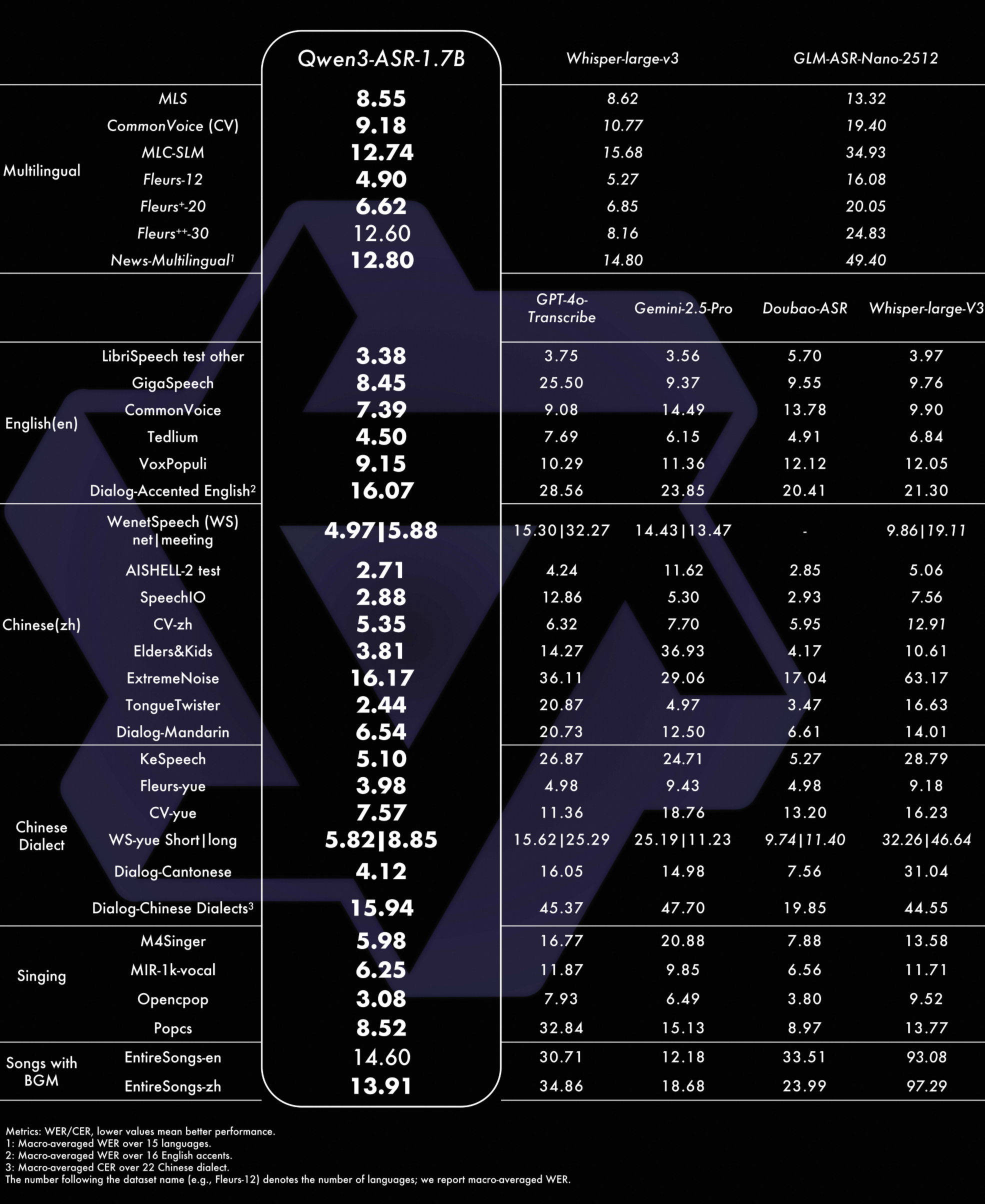

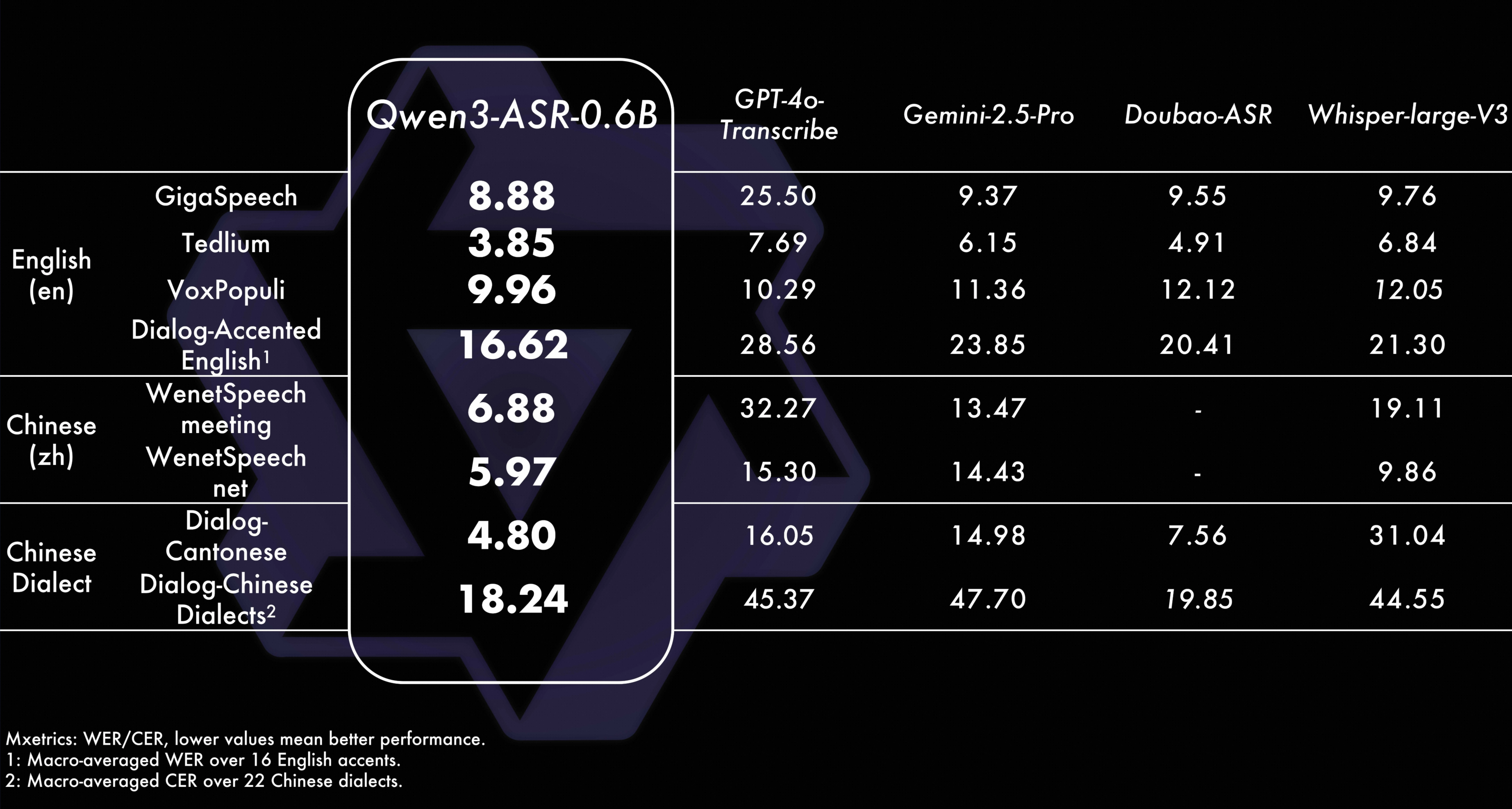

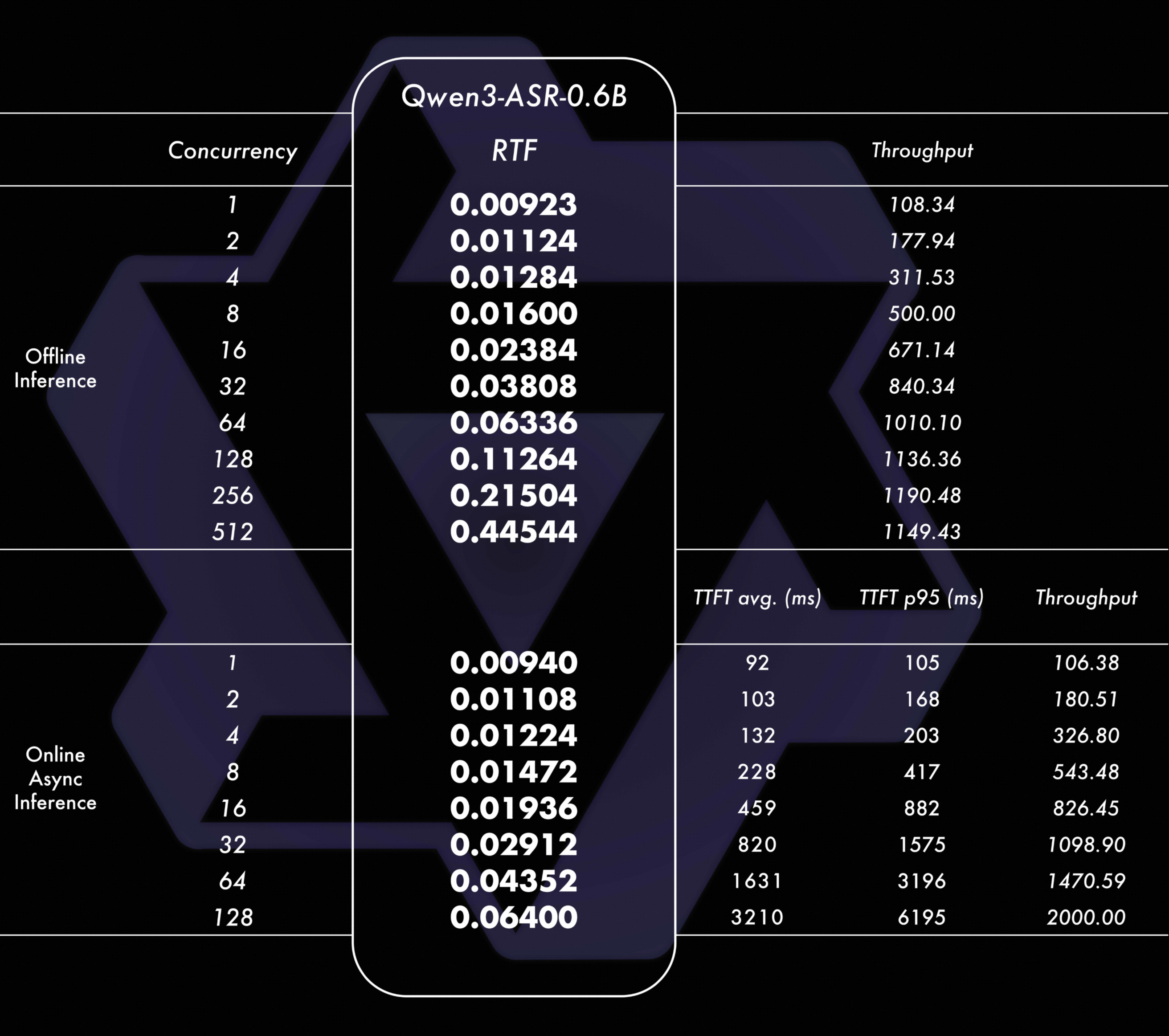

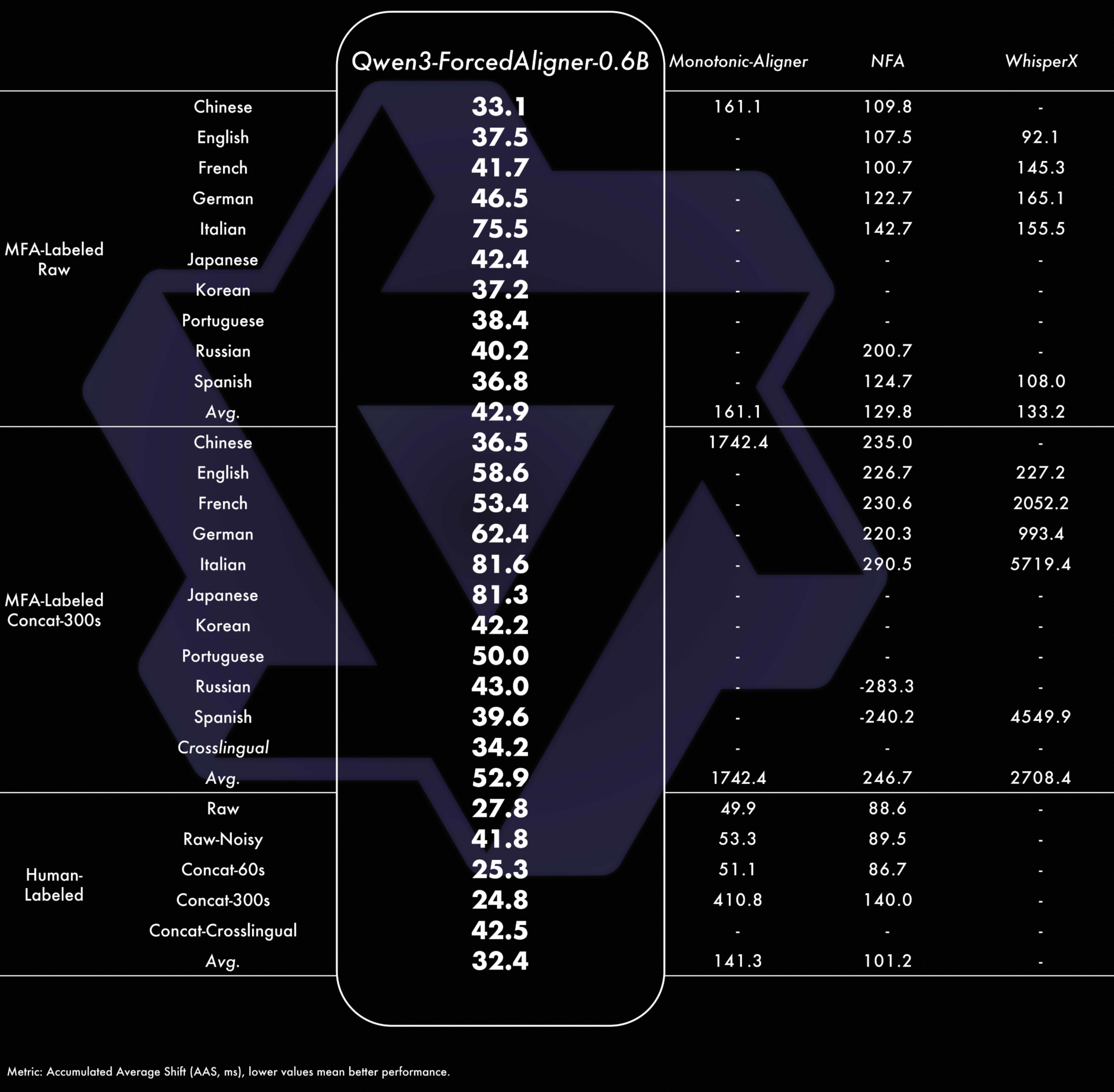

The Qwen3-ASR family includes two powerful all-in-one speech recognition models and a novel non-autoregressive speech forced-alignment model. Qwen3-ASR-1.7B and Qwen3-ASR-0.6B are ASR models that support language identification and ASR for 52 languages and accents. Both leverage large-scale speech training data and the strong audio understanding ability of their foundation model, Qwen3-Omni. In addition to open-source benchmarks, we conduct a comprehensive internal evaluation, because ASR models may differ little on open-source benchmark scores yet exhibit significant quality differences in real-world scenarios. The experiments show that the 1.7B version achieves state-of-the-art performance among open-source ASR models and is competitive with the strongest proprietary commercial APIs, while the 0.6B version offers the best accuracy–efficiency trade-off. Qwen3-ASR-0.6B can achieve an average time-to-first-token as low as 92 ms and transcribe 2,000 seconds of speech in 1 second in online async mode at a concurrency of 128. Qwen3-ForcedAligner-0.6B is an LLM-based non-autoregressive (NAR) timestamp predictor that can align text-speech pairs in 11 languages. Timestamp accuracy experiments show that the proposed model outperforms the three strongest forced-alignment models and has further advantages in efficiency and versatility. To further accelerate community research on ASR and audio understanding, we open-source the weights of the three models as well as a powerful, easy-to-use inference and fine-tuning framework under the Apache 2.0 license.
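To make the throughput figure concrete, the following minimal sketch converts it into a real-time factor (RTF) and a per-stream speed-up. It uses only the numbers quoted above for Qwen3-ASR-0.6B; the function and variable names are illustrative, and the even split across the 128 concurrent streams is a simplifying assumption rather than something the report states.

```python
def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """RTF = wall-clock processing time / audio duration (lower is faster)."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds


def wall_seconds_for(audio_hours: float, audio_seconds_per_second: float) -> float:
    """Wall-clock seconds needed to transcribe `audio_hours` at a given throughput."""
    return audio_hours * 3600.0 / audio_seconds_per_second


# Figures quoted in the text (online async mode, concurrency 128).
AUDIO_SECONDS_PER_WALL_SECOND = 2000.0
CONCURRENCY = 128

# Aggregate RTF across all concurrent requests.
aggregate_rtf = real_time_factor(1.0, AUDIO_SECONDS_PER_WALL_SECOND)

# Assumption: the aggregate throughput is shared evenly across streams.
per_stream_speedup = AUDIO_SECONDS_PER_WALL_SECOND / CONCURRENCY

# Hypothetical workload: a 5-hour batch of audio at the same throughput.
five_hours_wall_seconds = wall_seconds_for(5.0, AUDIO_SECONDS_PER_WALL_SECOND)

print(f"aggregate RTF: {aggregate_rtf}")
print(f"per-stream speed-up: {per_stream_speedup}x real time")
print(f"5 hours of audio: about {five_hours_wall_seconds} s of wall-clock time")
```

Under these assumptions the aggregate RTF is 0.0005, and each of the 128 streams still runs well above real time, which is what makes the low time-to-first-token usable in an online setting.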

| Model | Supported Languages | Supported Dialects | Inference Mode | Audio Types |
|---|---|---|---|---|
| Qwen3-ASR-1.7B & Qwen3-ASR-0.6B | Chinese (zh), English (en), Cantonese (yue), Arabic (ar), German (de), French (fr), Spanish (es), Portuguese (pt), Indonesian (id), Italian (it), Korean (ko), Russian (ru), Thai (th), Vietnamese (vi), Japanese (ja), Turkish (tr), Hindi (hi), Malay (ms), Dutch (nl), Swedish (sv), Danish (da), Finnish (fi), Polish (pl), Czech (cs), Filipino (fil), Persian (fa), Greek (el), Hungarian (hu), Macedonian (mk), Romanian (ro) | Anhui, Dongbei, Fujian, Gansu, Guizhou, Hebei, Henan, Hubei, Hunan, Jiangxi, Ningxia, Shandong, Shaanxi, Shanxi, Sichuan, Tianjin, Yunnan, Zhejiang, Cantonese (Hong Kong accent), Cantonese (Guangdong accent), Wu language, Minnan language | Offline / Streaming | Speech, Singing Voice, Songs with BGM |
| Qwen3-ForcedAligner-0.6B | Chinese, English, Cantonese, French, German, Italian, Japanese, Korean, Portuguese, Russian, Spanish | – | NAR (non-autoregressive) | Speech |
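When routing audio to one of these models, it can help to check language support up front. The sketch below encodes the table as plain Python sets. The dictionary layout and the helper name `supports` are hypothetical and not part of any Qwen3-ASR API; the aligner's language codes are taken from the codes the ASR row gives for the same languages.

```python
# Language codes copied from the ASR row of the table.
ASR_LANGUAGES = {
    "zh", "en", "yue", "ar", "de", "fr", "es", "pt", "id", "it",
    "ko", "ru", "th", "vi", "ja", "tr", "hi", "ms", "nl", "sv",
    "da", "fi", "pl", "cs", "fil", "fa", "el", "hu", "mk", "ro",
}

# The aligner row lists language names only; codes are mapped from the ASR row.
ALIGNER_LANGUAGES = {
    "zh", "en", "yue", "fr", "de", "it", "ja", "ko", "pt", "ru", "es",
}

# Hypothetical lookup keyed by model name as written in the table.
SUPPORTED = {
    "Qwen3-ASR-1.7B": ASR_LANGUAGES,
    "Qwen3-ASR-0.6B": ASR_LANGUAGES,
    "Qwen3-ForcedAligner-0.6B": ALIGNER_LANGUAGES,
}


def supports(model: str, language_code: str) -> bool:
    """Return True if the table lists `language_code` for `model`."""
    return language_code.lower() in SUPPORTED.get(model, set())


print(supports("Qwen3-ASR-0.6B", "th"))            # Thai is in the ASR row
print(supports("Qwen3-ForcedAligner-0.6B", "th"))  # Thai is not in the aligner row
```

The ASR row lists 30 languages and 22 dialects, which appears to account for the "52 languages and accents" figure; every aligner language is also covered by the ASR models.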

Main Features:

We conducted a systematic evaluation of the Qwen3-ASR series across Chinese/English, multilingual settings, Chinese dialects, singing voice recognition, and challenging acoustic and linguistic scenarios. The results show that Qwen3-ASR-1.7B achieves open-source SOTA on multiple public and internal benchmarks across several dimensions. Moreover, compared with the latest ASR APIs from multiple commercial providers, it also delivers the best performance on a number of benchmarks. Specifically:

Qwen3-ASR-0.6B strikes a strong balance between accuracy and efficiency: it delivers robust performance on multiple Chinese and English benchmarks, and maintains an extremely low real-time factor (RTF) and high throughput under high concurrency in both offline batch and online async inference. At a concurrency of 128 in online async mode, Qwen3-ASR-0.6B can transcribe 5 hours of speech; at the 2,000 seconds of speech per second reported for this setting, that takes on the order of ten seconds.

Qwen3-ForcedAligner-0.6B outperforms Nemo-Forced-Aligner, WhisperX, and Monotonic-Aligner, three strong end-to-end (E2E) forced-alignment models. It also shows clear advantages in language coverage, timestamp accuracy, and the range of speech and audio lengths it supports.
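Timestamp accuracy for forced alignment is commonly scored by comparing predicted word boundaries against reference boundaries. The sketch below computes a mean absolute boundary shift in milliseconds. It is a generic illustration with made-up timestamps, and it is not necessarily the metric or data used in the paper.

```python
from typing import Sequence, Tuple

Span = Tuple[float, float]  # (start_seconds, end_seconds) for one word


def mean_boundary_shift_ms(predicted: Sequence[Span], reference: Sequence[Span]) -> float:
    """Average absolute error over all start and end boundaries, in milliseconds."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must align word by word")
    if not reference:
        raise ValueError("at least one word span is required")
    total = 0.0
    for (pred_start, pred_end), (ref_start, ref_end) in zip(predicted, reference):
        total += abs(pred_start - ref_start) + abs(pred_end - ref_end)
    # Two boundaries (start and end) per word.
    return 1000.0 * total / (2 * len(reference))


# Made-up example: three words, two boundaries off by 250 ms each.
reference = [(0.00, 0.50), (0.50, 1.00), (1.00, 1.75)]
predicted = [(0.00, 0.50), (0.75, 1.00), (1.00, 1.50)]

shift_ms = mean_boundary_shift_ms(predicted, reference)
print(f"mean boundary shift: {shift_ms:.1f} ms")
```

A lower value means the predicted boundaries sit closer to the reference; averaging over both start and end boundaries keeps a single badly placed word from being counted only once.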

Find more detailed results in our paper.
