This week, Alibaba’s consumer-facing AI application, Qwen App, unveiled incentives worth 3 billion yuan ($431 million) for consumers to celebrate the upcoming Chinese Lunar New Year festival. To support global developers on coding tasks, Alibaba has also introduced Qwen3-Coder-Next, an open-weight language model specifically engineered for coding agents, as well as a customized model for the agentic coding platform Qoder.

To celebrate the upcoming Chinese New Year (CNY) festival, which begins on February 17, 2026, Alibaba’s Qwen App will launch a campaign on February 6 offering users incentives and digital red packets totaling RMB 3 billion in value.

To maximize the benefits available for consumers, Alibaba also plans to further upgrade Qwen App by integrating Freshippo (Alibaba’s new retail platform for groceries and fresh goods), Tmall Supermarket (its B2C online grocery arm), and the entertainment ticketing platform Damai. The App has already integrated core services from Alibaba’s ecosystem, including Taobao, Taobao Instant Commerce, Alipay, Fliggy, and Amap.

As a result, users can order food and beverages and enjoy entertainment and leisure activities, all through Qwen App, while benefiting from significant incentives during the CNY holiday.

Highlighting Qwen App’s strategic shift from “AI that responds” to “AI that acts,” the latest upgrade further transforms Alibaba’s foundational AI capabilities into real-world task execution. The app aims to automate everyday activities across commerce, travel, payments, and productivity, all within a single, unified interface. Since its public beta launch on November 17, 2025, Qwen App has seen rapid adoption, surpassing 100 million monthly active users within just two months.

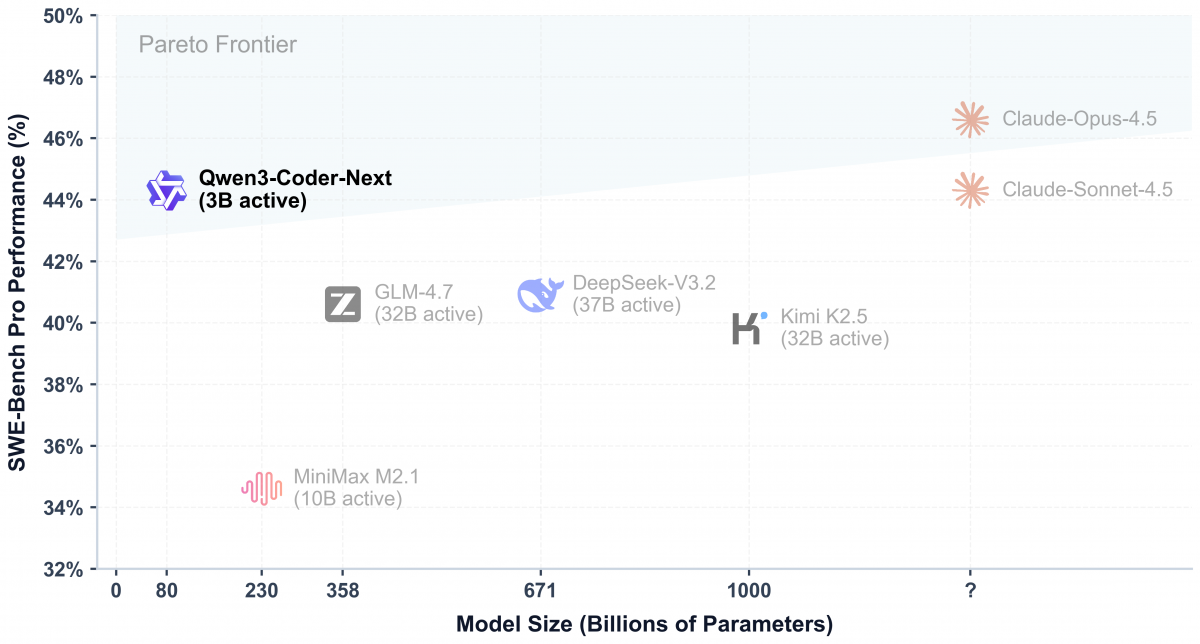

Alibaba has introduced Qwen3-Coder-Next, an open-weight language model specifically engineered for coding agents. Built on top of Qwen3-Next-80B-A3B-Base, which adopts a novel architecture with hybrid attention and mixture-of-experts (MoE), Qwen3-Coder-Next has been agentically trained at scale using executable task synthesis, environment interaction, and reinforcement learning, achieving strong coding and agentic capabilities at significantly lower inference cost.

Rather than relying primarily on parameter scaling, Qwen3-Coder-Next emphasizes scaling agentic training signals. It was trained on extensive collections of verifiable coding tasks paired with executable environments, allowing the model to learn directly from real-time environment feedback. This training paradigm prioritizes long-horizon reasoning, tool integration, and robust recovery from execution failures—capabilities critical for effective real-world coding agents.
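The idea of a verifiable task paired with an executable environment can be illustrated with a small sketch. This is not Alibaba's training pipeline, whose details the article does not give; it is a minimal illustration, assuming a task is a Python snippet plus assertion-based tests, and that a passing run earns a reward of 1.0. The function names `run_in_environment` and `attempt_until_verified` are hypothetical.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_in_environment(candidate_source, test_source, timeout_s=10.0):
    """Run a candidate solution against its tests in a fresh subprocess.

    Returns (reward, feedback). The reward is 1.0 if the script exits
    cleanly and 0.0 otherwise; the feedback is the captured output that
    an agent could read before trying again. (Hypothetical helper.)
    """
    with tempfile.TemporaryDirectory() as workdir:
        script = Path(workdir) / "task.py"
        script.write_text(candidate_source + "\n\n" + test_source, encoding="utf-8")
        try:
            proc = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return 0.0, "timeout"
        reward = 1.0 if proc.returncode == 0 else 0.0
        return reward, (proc.stdout + proc.stderr).strip()


def attempt_until_verified(attempts, test_source):
    """Try candidates in order and stop at the first one that passes.

    A real agent would generate each new attempt from the previous
    feedback; here the attempts are supplied up front for illustration.
    """
    history = []
    for candidate in attempts:
        reward, feedback = run_in_environment(candidate, test_source)
        history.append((reward, feedback))
        if reward == 1.0:
            break
    return history


if __name__ == "__main__":
    tests = "assert add(2, 3) == 5"
    buggy = "def add(a, b):\n    return a - b"
    fixed = "def add(a, b):\n    return a + b"
    for reward, feedback in attempt_until_verified([buggy, fixed], tests):
        print(reward, feedback.splitlines()[-1] if feedback else "ok")
```

The failed first attempt returns a traceback as feedback, which stands in for the "recovery from execution failures" signal described above; the second attempt passes and ends the loop.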

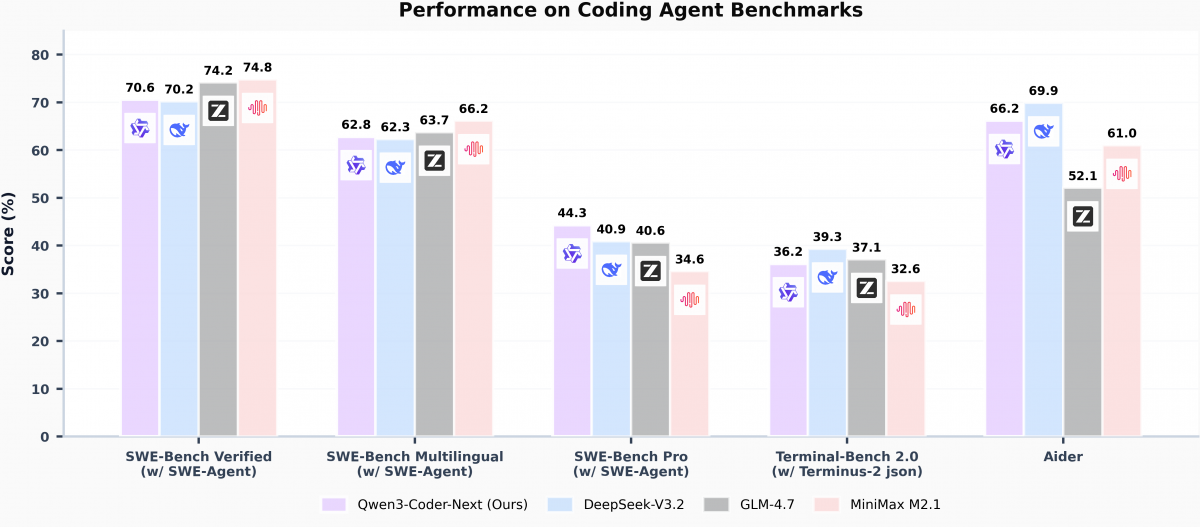

Thanks to this innovative approach, Qwen3-Coder-Next (3B active) achieves performance on SWE-Bench-Pro that rivals models with 10× to 20× more active parameters. Qwen3-Coder-Next distinguishes itself through significant cost efficiency for coding agent deployment. As a highly capable yet lightweight agentic model, it is designed to accelerate research, evaluation, and practical deployment of autonomous coding agents in a cost-effective way.

Qwen3-Coder-Next achieves an improved Pareto tradeoff between efficiency and performance
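To put the parameter comparison in concrete terms, the arithmetic can be sketched as follows. The 3B active figure and the 10× to 20× range come from the text. The 80B total is an assumption read off the base model name Qwen3-Next-80B-A3B-Base, and the helper names are hypothetical.

```python
ACTIVE_PARAMS_B = 3.0  # "3B active", as stated in the article
TOTAL_PARAMS_B = 80.0  # assumption: taken from the "80B-A3B" base model name


def active_fraction(active_b, total_b):
    """Share of the model's parameters used per token in a sparse MoE model."""
    return active_b / total_b


def comparable_active_range(active_b, low_multiple=10, high_multiple=20):
    """Active-parameter range of the models the article says it rivals."""
    return active_b * low_multiple, active_b * high_multiple


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    low, high = comparable_active_range(ACTIVE_PARAMS_B)
    print(f"Active share per token: {share:.2%}")
    print(f"Rivals models with roughly {low:.0f}B to {high:.0f}B active parameters")
```

Under these assumptions, the model activates under 4% of its weights per token, and the comparison set covers models with about 30B to 60B active parameters.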

The Base and Instruct variants of Qwen3-Coder-Next are now freely accessible on Hugging Face and ModelScope. The model’s technical report is also available on GitHub.

The number of open-source Qwen models has surpassed 400, spanning a wide range of sizes (from 0.5 billion to 480 billion parameters), multiple modalities (including text, image, audio, and video), and 119 languages and dialects. These models have garnered over 1 billion downloads and inspired developers worldwide to create more than 200,000 derivative models. In December, 53% of new derivatives on Hugging Face were based on Qwen.

Alibaba has unveiled a customized Qwen-Coder model for the agentic coding platform Qoder. The model has undergone extensive reinforcement learning (RL) training, integrating Qoder’s proprietary Agent framework and tools.

This custom model has several distinguishing features. It is a large-scale reinforcement learning model trained with real product environments, authentic software development tasks, and genuine software engineering reward signals. Core capabilities required by the Qoder Agent, such as compliance with engineering standards, efficient task planning, and comprehension of code graphs, are directly embedded into Qwen-Coder-Qoder through training, enabling the model itself to drive Agent execution. Additionally, Qoder extracts “best practices in software engineering” and translates them into reward signals to further refine model training.

Global developers can experience Qwen-Coder-Qoder for free on the Qoder platform from February 1 to 15. Thanks to the rapid iteration of models on Qoder, the online retention rate of code generated by Qoder has increased by 3.85%, the tool anomaly rate has dropped by 61.5%, and token consumption has been reduced by 14.5%.

This article was originally published on Alizila and was written by Crystal Liu.
