By Yang Che

This article series describes how to support and optimize hybrid cloud data access scenarios based on ACK Fluid.

In the previous article, I discussed how to accelerate read access to third-party storage to achieve better performance, lower costs, and reduce reliance on leased line stability.

In some scenarios, for historical reasons or because of the cost of developing and maintaining container storage interfaces, customers do not use standard CSI interfaces to connect with cloud services. Instead, they rely on non-containerized methods such as automated scripts. When migrating to the cloud, however, they need to consider how to connect with cloud services through standard interfaces.

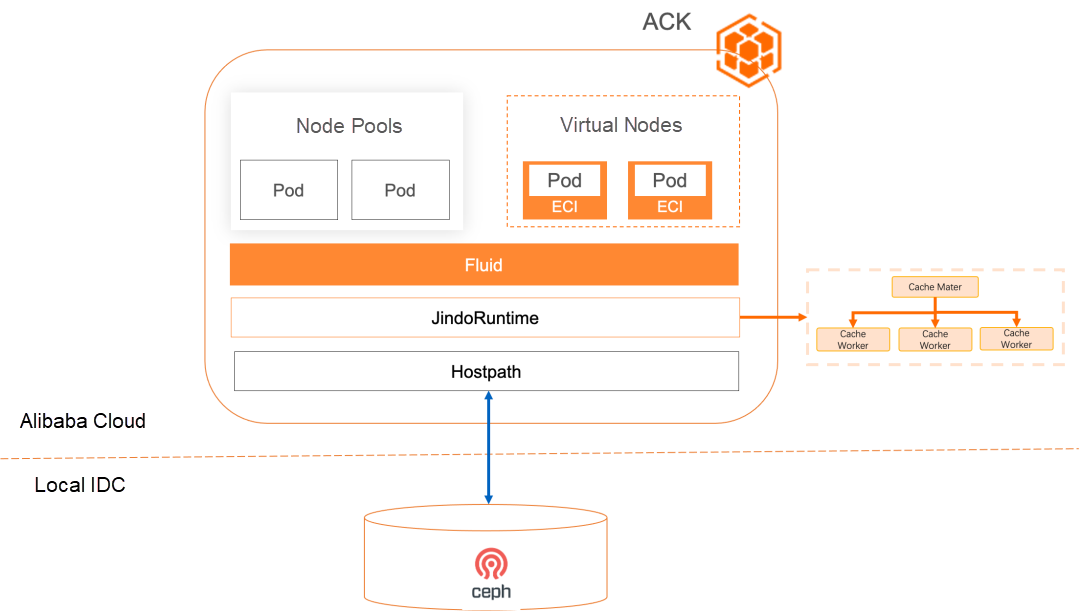

This article focuses on using ACK Fluid to implement Kubernetes-based mounting of third-party storage host directories. This approach is more standardized and can improve efficiency.

Many enterprises have on-premises storage that does not support the CSI protocol and can only be mounted as host directories using tools like Ansible. This makes it difficult to connect with the standardized Kubernetes platform. In addition, performance and cost issues similar to those described in the previous article need to be addressed:

• Lack of standards and difficulties in migrating to the cloud: Host directory mounting cannot be perceived and scheduled by Kubernetes, making it challenging to use and manage with containerized workloads.

• Lack of data isolation: When the entire directory is mounted on the host, data becomes globally visible and can be accessed by all workloads.

• Similar requirements for cost, performance, and availability as Scenario 2, which I won't repeat here.

ACK Fluid provides the capability to accelerate PV host directories based on JindoRuntime [1]. It supports direct host directory mounting, enabling data access acceleration through distributed caching in a native, simple, quick, and secure manner.

In summary, ACK Fluid offers out-of-the-box benefits for accessing host directories of third-party storage in a cloud computing environment: high performance, low cost, automation, and no data persistence.

• An ACK Pro cluster of version 1.18 or later is created. For more information, see Create an ACK Pro cluster [2].

• The cloud-native AI suite is installed, and the ack-fluid component is deployed. Note: If you have installed open source Fluid, uninstall it before you deploy the ack-fluid component.

    • If the cloud-native AI suite is not installed, enable Fluid Data Acceleration during installation. For more information, see Install the cloud-native AI suite [3].

    • If the cloud-native AI suite is already installed, deploy ack-fluid on the Cloud-native AI Suite page in the Container Service console.

• A kubectl client is connected to the cluster. For more information, see Use kubectl to connect to a cluster [4].

• The PVs and PVCs for the storage system that you want to access are created. Different storage systems use different methods to create volumes in a Kubernetes environment. To ensure a stable connection between the storage system and the Kubernetes cluster, follow the official documentation of the corresponding storage system.

In this example, sshfs is used to simulate converting third-party storage into a data volume claim through Fluid and accelerating access to the data volume claim.

2.1 Log on to the three machines 192.168.0.1, 192.168.0.2, and 192.168.0.3 and install sshfs on each of them. This example uses CentOS. Run the following command:

    $ sudo yum install sshfs -y

2.2 Log on to the sshfs server 192.168.0.1. Run the following commands to create a subdirectory under the /mnt directory as the mount point of the host directory, and create a test file in it.

    $ mkdir /mnt/demo-remote-fs
    $ cd /mnt/demo-remote-fs
    $ dd if=/dev/zero of=/mnt/demo-remote-fs/allzero-demo count=1024 bs=10M

2.3 On the sshfs client nodes 192.168.0.2 and 192.168.0.3, run the following commands to create the corresponding host directory and mount the remote directory to it.

    $ mkdir /mnt/demo-remote-fs
    $ sshfs 192.168.0.1:/mnt/demo-remote-fs /mnt/demo-remote-fs
    $ ls /mnt/demo-remote-fs

2.4 Run the following commands to add a label to the nodes 192.168.0.2 and 192.168.0.3. The label demo-remote-fs=true is used to configure node scheduling constraints for the Master and Worker components of JindoRuntime.

    $ kubectl label node 192.168.0.2 demo-remote-fs=true
    $ kubectl label node 192.168.0.3 demo-remote-fs=true

2.5 On node 192.168.0.2, run the following commands to access the data and evaluate the file access performance. Reading the 10 GB file takes 1 minute and 5.889 seconds.

    $ ls -lh /mnt/demo-remote-fs/
    total 10G
    -rwxrwxr-x 1 root root 10G Aug 13 10:07 allzero-demo
    $ time cat /mnt/demo-remote-fs/allzero-demo > /dev/null
    real 1m5.889s
    user 0m0.086s
    sys 0m3.281s

Use the following YAML to create a dataset.yaml file:

The dataset.yaml configuration file contains two Fluid resource objects to be created: Dataset and JindoRuntime.

• Dataset: describes the information about the host directory to be mounted.

• JindoRuntime: the configurations of the JindoFS distributed cache system to be started, including the number of worker component replicas and the maximum available cache capacity for each worker component.

    apiVersion: data.fluid.io/v1alpha1
    kind: Dataset
    metadata:
      name: hostpath-demo-dataset
    spec:
      mounts:
        - mountPoint: local:///mnt/demo-remote-fs
          name: data
          path: /
      accessModes:
        - ReadOnlyMany
    ---
    apiVersion: data.fluid.io/v1alpha1
    kind: JindoRuntime
    metadata:
      name: hostpath-demo-dataset
    spec:
      master:
        nodeSelector:
          demo-remote-fs: "true"
      worker:
        nodeSelector:
          demo-remote-fs: "true"
      fuse:
        nodeSelector:
          demo-remote-fs: "true"
      replicas: 2
      tieredstore:
        levels:
          - mediumtype: MEM
            path: /dev/shm
            quota: 10Gi
            high: "0.99"
            low: "0.99"

The following table describes the object parameters in the configuration file.

| Parameter | Description |
| --- | --- |
| Dataset.spec.mounts[*].mountPoint | The information about the data source to be mounted. To mount a host directory as a data source, use the local://<path> format, where path is the absolute path of the host directory to mount. |
| Dataset.spec.nodeAffinity | Constraints on node scheduling for the Master and Worker components of JindoRuntime. The fields are consistent with Pod.Spec.Affinity.NodeAffinity. |
| JindoRuntime.spec.replicas | The number of workers for the JindoFS cache system. It can be adjusted as needed. |
| JindoRuntime.spec.tieredstore.levels[*].mediumtype | The type of cache. Only HDD (hard disk drive), SSD (solid-state drive), and MEM (memory) are supported. In AI training scenarios, we recommend that you use MEM. When MEM is used, the cache directory specified by path must be on a memory file system. For example, you can create a temporary mount point and mount a tmpfs file system to it. |
| JindoRuntime.spec.tieredstore.levels[*].path | The directory used by JindoFS workers to cache data. For optimal data access performance, we recommend that you use /dev/shm or another path where a memory file system is mounted. |
| JindoRuntime.spec.tieredstore.levels[*].quota | The maximum cache size that each worker can use. You can modify the value based on your requirements. |
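As a rough illustration of how replicas and quota combine, the following Python sketch computes the total cache capacity for the values in dataset.yaml. It assumes that the capacity is simply the per-worker quota multiplied by the number of workers, which is consistent with the 20.00GiB cache capacity reported later in this example. The helper names parse_quantity and total_cache_capacity are hypothetical and not part of Fluid.

```python
import re

# Binary suffixes used by Kubernetes-style quantities (a subset, for illustration).
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(quantity: str) -> int:
    """Convert a quantity such as '10Gi' into bytes (binary suffixes only)."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti)", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(number) * _BINARY_SUFFIXES[suffix]


def total_cache_capacity(replicas: int, quota: str) -> int:
    """Assumed model: every worker replica contributes one full quota."""
    return replicas * parse_quantity(quota)


# Values taken from dataset.yaml in this example.
capacity = total_cache_capacity(replicas=2, quota="10Gi")
dataset_size = 10 * 2**30  # the 10 GiB test file created with dd

print(capacity / 2**30)          # total capacity in GiB
print(dataset_size <= capacity)  # True when the whole dataset fits in the cache
```

With two workers and a 10Gi quota each, the 10 GiB test file fits in the cache with room to spare, so the whole dataset can be preheated.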

3.1 Run the following command to create the Dataset and JindoRuntime resource objects:

    $ kubectl create -f dataset.yaml

3.2 Run the following command to view the deployment of the Dataset:

    $ kubectl get dataset hostpath-demo-dataset

Expected output:

    NAME                    UFS TOTAL SIZE   CACHED   CACHE CAPACITY   CACHED PERCENTAGE   PHASE   AGE
    hostpath-demo-dataset   10.00GiB         0.00B    20.00GiB         0.0%                Bound   47s

3.3 If the Dataset is in the Bound state, the JindoFS cache system is started in the cluster. Application pods can access the data defined in the Dataset.
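In automation, the check in step 3.3 is usually a polling loop. The following Python sketch is one possible way to wait for the Bound state. It assumes that kubectl is on the PATH and that the Dataset exposes its phase at .status.phase. The function names are hypothetical.

```python
import subprocess
import time
from typing import Callable


def kubectl_dataset_phase(name: str, namespace: str = "default") -> str:
    """Read the Dataset phase with kubectl (assumes it is exposed at .status.phase)."""
    result = subprocess.run(
        ["kubectl", "get", "dataset", name, "-n", namespace,
         "-o", "jsonpath={.status.phase}"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


def wait_for_phase(get_phase: Callable[[], str], wanted: str = "Bound",
                   timeout: float = 300.0, interval: float = 5.0) -> bool:
    """Poll get_phase() until it returns `wanted`; return False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        if get_phase() == wanted:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Example call against a real cluster (not executed here):
# wait_for_phase(lambda: kubectl_dataset_phase("hostpath-demo-dataset"))
```

Passing the phase lookup as a callable keeps the polling logic independent of kubectl, so it can be exercised without a cluster.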

The data access efficiency of application pods may be low because the first access cannot hit the data cache. Fluid provides the DataLoad cache preheating operation to improve the efficiency of the first data access.

4.1 Create a dataload.yaml file. Sample code:

    apiVersion: data.fluid.io/v1alpha1
    kind: DataLoad
    metadata:
      name: dataset-warmup
    spec:
      dataset:
        name: hostpath-demo-dataset
        namespace: default
      loadMetadata: true
      target:
        - path: /
          replicas: 1

The following table describes the object parameters.

| Parameter | Description |
| --- | --- |
| spec.dataset.name | The name of the Dataset object to be preheated. |
| spec.dataset.namespace | The namespace to which the Dataset object belongs. The namespace must be the same as the namespace of the DataLoad object. |
| spec.loadMetadata | Specifies whether to synchronize metadata before preheating. This parameter must be set to true for JindoRuntime. |
| spec.target[*].path | The path or file to be preheated. The path must be relative to the mount point specified in the Dataset object. For example, if the data source mounted to the Dataset is pvc://my-pvc/mydata, setting the path to /test preheats the /mydata/test directory in the storage system that corresponds to my-pvc. |
| spec.target[*].replicas | The number of worker pods created to cache the preheated path or file. |
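The relative-path rule for spec.target[*].path can be illustrated with a short Python sketch. It only mirrors the description in the table and is not Fluid's own implementation. The function name resolve_preload_path is hypothetical.

```python
import posixpath
from urllib.parse import urlsplit


def resolve_preload_path(mount_point: str, target_path: str) -> str:
    """Join a DataLoad target path onto the path part of a Dataset mountPoint."""
    base = urlsplit(mount_point).path or "/"
    # Treat the target as relative to the mount point, even if it starts with "/".
    joined = posixpath.join(base, target_path.lstrip("/"))
    return posixpath.normpath(joined)


# The example from the table above.
print(resolve_preload_path("pvc://my-pvc/mydata", "/test"))       # /mydata/test
# The mount point and target used in this article.
print(resolve_preload_path("local:///mnt/demo-remote-fs", "/"))   # /mnt/demo-remote-fs
```

In this article the target path is /, so the whole host directory /mnt/demo-remote-fs is preheated.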

4.2 Run the following command to create a DataLoad object:

    $ kubectl create -f dataload.yaml

4.3 Run the following command to check the DataLoad status:

    $ kubectl get dataload dataset-warmup

Expected output:

    NAME             DATASET                 PHASE      AGE   DURATION
    dataset-warmup   hostpath-demo-dataset   Complete   96s   1m2s

4.4 Run the following command to check the data cache status:

    $ kubectl get dataset

Expected output:

    NAME                    UFS TOTAL SIZE   CACHED     CACHE CAPACITY   CACHED PERCENTAGE   PHASE   AGE
    hostpath-demo-dataset   10.00GiB         10.00GiB   20.00GiB         100.0%              Bound   157m

After the DataLoad cache preheating is complete, the cached data volume equals the size of the entire dataset, which means that the entire dataset has been cached and the cached percentage is 100.0%.
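To check the cache status from a script, the data row of the output above can be parsed directly. The following Python sketch assumes the column layout shown in this article (seven whitespace-separated fields per data row) and sizes with binary suffixes such as GiB. The function names are hypothetical.

```python
_UNITS = {"B": 1, "KiB": 2**10, "MiB": 2**20, "GiB": 2**30, "TiB": 2**40}


def parse_size(text: str) -> float:
    """Convert a size such as '10.00GiB' or '0.00B' into bytes."""
    # Check longer suffixes first so that 'GiB' is not mistaken for 'B'.
    for unit in sorted(_UNITS, key=len, reverse=True):
        if text.endswith(unit):
            return float(text[: -len(unit)]) * _UNITS[unit]
    raise ValueError(f"unsupported size: {text!r}")


def cached_fraction(row: str) -> float:
    """Return CACHED / UFS TOTAL SIZE for one data row of `kubectl get dataset`."""
    name, ufs_total, cached, capacity, percentage, phase, age = row.split()
    total = parse_size(ufs_total)
    return parse_size(cached) / total if total else 0.0


before = "hostpath-demo-dataset 10.00GiB 0.00B 20.00GiB 0.0% Bound 47s"
after = "hostpath-demo-dataset 10.00GiB 10.00GiB 20.00GiB 100.0% Bound 157m"

print(cached_fraction(before))  # 0.0
print(cached_fraction(after))   # 1.0
```

A fraction of 1.0 corresponds to the 100.0% cached percentage reported after preheating.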

5.1 Use the following YAML to create a pod.yaml file. Set claimName in the YAML file to the name of the Dataset created in this example.

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          command:
            - "bash"
            - "-c"
            - "sleep inf"
          volumeMounts:
            - mountPath: /data
              name: data-vol
      volumes:
        - name: data-vol
          persistentVolumeClaim:
            claimName: hostpath-demo-dataset # The name must be the same as the Dataset.

5.2 Run the following command to create an application pod:

    $ kubectl create -f pod.yaml

5.3 Run the following command to log on to the pod and access the data:

    $ kubectl exec -it nginx -- bash

In the expected output, reading the 10 GB file takes 8.629 seconds, which is roughly one-eighth of the time required to read it directly over sshfs (1 minute 5.889 seconds):

    root@nginx:/# ls -lh /data
    total 10G
    -rwxrwxr-x 1 root root 10G Aug 13 10:07 allzero-demo
    root@nginx:/# time cat /data/allzero-demo > /dev/null
    real 0m8.629s
    user 0m0.031s
    sys 0m3.594s

5.4 Clean up the application pod:

    $ kubectl delete po nginx

[1] The general acceleration capability of the PV host directory

https://www.alibabacloud.com/help/en/ack/cloud-native-ai-suite/user-guide/accelerate-pv-storage-volume-data-access

[2] Create an ACK Pro cluster

https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/create-an-ack-managed-cluster-2#task-skz-qwk-qfb

[3] Install the cloud-native AI suite

https://www.alibabacloud.com/help/en/ack/cloud-native-ai-suite/user-guide/deploy-the-cloud-native-ai-suite#task-2038811

[4] Use kubectl to connect to a cluster

https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/obtain-the-kubeconfig-file-of-a-cluster-and-use-kubectl-to-connect-to-the-cluster#task-ubf-lhg-vdb
