By Yang Che

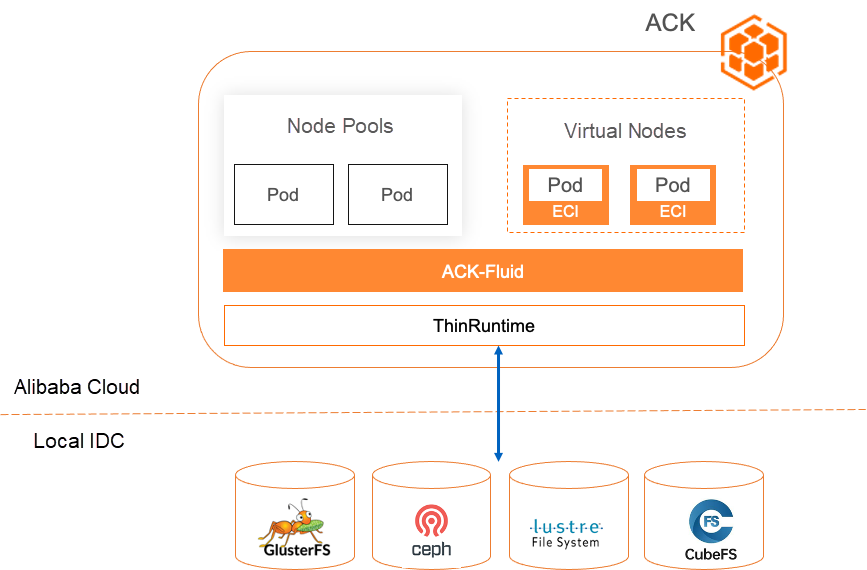

In the previous article, Scenarios and Architecture, I discussed the different scenarios and architectures supported by ACK Fluid for accessing data in hybrid cloud environments. In this article, I will focus on how to use ACK Fluid to enable elastic computing instances in the public cloud to access on-premises storage systems.

The out-of-the-box elasticity of Alibaba Cloud Container Service for Kubernetes (ACK) can effectively complement the elasticity of self-managed data centers. With the rise of AI and machine learning, the idea that computing power drives innovation has become widely accepted. Many customers who were initially hesitant about cloud computing are now considering public clouds for technical verification, and as a first step they often choose Elastic Container Instance (ECI). However, connecting self-built storage to elastic resources in the cloud, especially ECI resources, has become a challenge for hybrid cloud users who want to use Alibaba Cloud services. For example, to compare the cost of running training tasks on Alibaba Cloud with the cost of running them in an on-premises data center, the traditional approach is to migrate the data to the cloud, which raises data privacy concerns and costs both time and money. This prevents quick verification and can even delay innovation if the migration cannot pass internal security reviews in the short term.

Enterprises commonly store their data on-premises using various storage types, including open source solutions such as Ceph, Lustre, JuiceFS, and CubeFS, as well as self-built storage. However, using public cloud computing resources with this data raises several challenges:

• Time-consuming security and cost assessments: Migrating data to cloud storage requires lengthy evaluations by security and storage teams, which slows down the cloud migration process.

• Limited adaptability for data access: Public clouds only support a limited number of distributed storage types, such as NAS, OSS, and CPFS for ECIs, and do not provide support for third-party storage.

• Lengthy and difficult access to the cloud platform: Developing and maintaining cloud-native compatible CSI plugins requires expertise and development adaptation projects. It also involves version upgrades and limited support for certain scenarios. For example, self-built CSI cannot be adapted to ECIs.

• Lack of trusted and transparent data access methods: It is difficult to ensure data security, transparency, and reliability during data transmission and access in the black box system of serverless containers.

• Need to avoid business modifications: Business users should not notice any differences at the infrastructure level, and existing applications should not require modification.

ACK Fluid provides the ThinRuntime extension mechanism, which allows you to connect third-party storage clients based on FUSE to Kubernetes in containerized mode. ACK Fluid supports standard Kubernetes, edge Kubernetes, Serverless Kubernetes, and other forms on Alibaba Cloud.

Here are the key features:

Summary: ACK Fluid provides scalability, security, controllability, cost-effectiveness, and cloud platform independence for accessing on-premises data in cloud computing environments.

The open source MinIO is used as an example to show how to connect third-party storage to Alibaba Cloud ECI through Fluid.

• An ACK Pro cluster has been created with a cluster version of 1.18 or later. For detailed instructions, please refer to the documentation on creating an ACK Pro cluster [1].

• The cloud-native AI suite has been installed and ack-fluid components have been deployed. Note: If you have previously installed open source Fluid, please uninstall it before deploying the ack-fluid components.

• If the cloud-native AI suite is not installed, enable Fluid Data Acceleration during the installation process. For detailed instructions, please refer to the documentation on installing the cloud-native AI suite [2].

• If the cloud-native AI suite is installed, deploy the ack-fluid components on the Cloud-native AI Suite page in the Container Service console.

• Virtual nodes have been deployed in the ACK Pro cluster. For detailed instructions, please refer to the documentation on deploying the virtual node controller and using it to create Elastic Container Instance-based pods [3].

• A kubectl client is connected to the cluster. For detailed instructions, please refer to the documentation on using kubectl to connect to a cluster [4].

Deploy the MinIO storage system to the ACK cluster.

The YAML file minio.yaml is as follows:

apiVersion: v1
kind: Service
metadata:
  name: minio
spec:
  type: ClusterIP
  ports:
    - port: 9000
      targetPort: 9000
      protocol: TCP
  selector:
    app: minio
---
apiVersion: apps/v1 # for k8s versions before 1.9.0 use apps/v1beta2 and before 1.8.0 use extensions/v1beta1
kind: Deployment
metadata:
  # This name uniquely identifies the Deployment
  name: minio
spec:
  selector:
    matchLabels:
      app: minio
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        # Label is used as selector in the service.
        app: minio
    spec:
      containers:
      - name: minio
        # Pulls the default Minio image from Docker Hub
        image: bitnami/minio
        env:
        # Minio access key and secret key
        - name: MINIO_ROOT_USER
          value: "minioadmin"
        - name: MINIO_ROOT_PASSWORD
          value: "minioadmin"
        - name: MINIO_DEFAULT_BUCKETS
          value: "my-first-bucket:public"
        ports:
        - containerPort: 9000
          hostPort: 9000

Deploy the preceding resources to the ACK cluster:

$ kubectl create -f minio.yaml

After the deployment succeeds, other pods in the ACK cluster can access data in the MinIO storage system through the MinIO API endpoint at http://minio:9000. In the preceding YAML configuration, the MinIO username and password are both set to minioadmin, and a bucket named my-first-bucket is created by default when MinIO starts. The following example accesses the data in the my-first-bucket bucket. Before you proceed, run the following commands to store a sample file in my-first-bucket (replace minio-69c555f4cf-np59j with the name of your own MinIO pod):

$ kubectl exec -it minio-69c555f4cf-np59j -- bash -c "echo fluid-minio-test > testfile"
$ kubectl exec -it minio-69c555f4cf-np59j -- bash -c "mc cp ./testfile local/my-first-bucket/"
$ kubectl exec -it minio-69c555f4cf-np59j -- bash -c "mc cat local/my-first-bucket/testfile"
fluid-minio-test

As a MinIO storage administrator, connecting to Fluid mainly consists of three steps. The following debugging process can be completed in open source Kubernetes:

Fluid will pass the necessary operation parameters required by FUSE in ThinRuntime, as well as the mount point and other parameters describing the data path in Dataset, to the ThinRuntime FUSE Pod container. Inside the container, you need to execute the parameter parsing script and pass the parsed runtime parameters to the FUSE client program. The client program will then mount the FUSE file system in the container.

Therefore, when describing a storage system using a ThinRuntime CRD, you need to use a specially created container image. The image must include the following two programs:

• FUSE client program

• The runtime parameter parsing script required by the FUSE client program

For the FUSE client program, in this example, choose a goofys client compatible with the S3 protocol to connect to and mount the MinIO storage system.

For the parameter parsing script required by the runtime, define the following Python script fluid-config-parse.py:

import json

with open("/etc/fluid/config.json", "r") as f:
    lines = f.readlines()

rawStr = lines[0]
print(rawStr)

script = """
#!/bin/sh
set -ex
export AWS_ACCESS_KEY_ID=`cat $akId`
export AWS_SECRET_ACCESS_KEY=`cat $akSecret`

mkdir -p $targetPath

exec goofys -f --endpoint "$url" "$bucket" $targetPath
"""

obj = json.loads(rawStr)

with open("mount-minio.sh", "w") as f:
    f.write("targetPath=\"%s\"\n" % obj['targetPath'])
    f.write("url=\"%s\"\n" % obj['mounts'][0]['options']['minio-url'])
    if obj['mounts'][0]['mountPoint'].startswith("minio://"):
        f.write("bucket=\"%s\"\n" % obj['mounts'][0]['mountPoint'][len("minio://"):])
    else:
        f.write("bucket=\"%s\"\n" % obj['mounts'][0]['mountPoint'])
    f.write("akId=\"%s\"\n" % obj['mounts'][0]['options']['minio-access-key'])
    f.write("akSecret=\"%s\"\n" % obj['mounts'][0]['options']['minio-access-secret'])

    f.write(script)

The preceding Python script reads the JSON string in /etc/fluid/config.json, extracts the mount target path, the MinIO URL, the bucket name, and the paths of the credential files, and writes them to mount-minio.sh together with the shell commands that mount the bucket with goofys.

Note: For each encrypted option, Fluid writes only the path of the file that stores its value to /etc/fluid/config.json. The parameter parsing script must therefore read the value from that file, as export AWS_ACCESS_KEY_ID=`cat $akId` does in the preceding example.
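To make the note concrete, here is a minimal Python sketch of resolving an encrypted option. It assumes the config layout that fluid-config-parse.py reads (mounts[0].options). The helper names resolve_options and demo, the temporary file path, and the targetPath value are hypothetical and exist only for illustration.

```python
import json
import os
import tempfile


def resolve_options(config: dict, encrypted_keys: list) -> dict:
    """Return the first mount's options with encrypted entries read from files."""
    options = dict(config["mounts"][0]["options"])
    for key in encrypted_keys:
        # For an encrypted option, the config value is a file path, not the secret.
        with open(options[key], "r") as f:
            options[key] = f.read().strip()
    return options


def demo() -> dict:
    """Simulate a secret file and a config.json that uses made-up values."""
    with tempfile.TemporaryDirectory() as tmp:
        key_file = os.path.join(tmp, "minio-access-key")  # hypothetical path
        with open(key_file, "w") as f:
            f.write("minioadmin")
        raw = json.dumps({
            "targetPath": "/mnt/minio-demo",  # hypothetical value
            "mounts": [{
                "mountPoint": "minio://my-first-bucket",
                "options": {
                    "minio-url": "http://minio:9000",
                    "minio-access-key": key_file,
                },
            }],
        })
        return resolve_options(json.loads(raw), ["minio-access-key"])


if __name__ == "__main__":
    print(demo())
```

The shell form used in the article (`cat $akId`) does the same thing at mount time, which keeps the secret value out of the generated script.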

Then, use the following Dockerfile to create an image. Here, we directly select the image containing the goofys client program (i.e., cloudposse/goofys) as the base image of the Dockerfile:

FROM cloudposse/goofys
RUN apk add python3 bash
COPY ./fluid-config-parse.py /fluid-config-parse.py

Run the following command to build and push an image to the image repository:

$ IMG_REPO=<your image repo>
$ docker build -t $IMG_REPO/fluid-minio-goofys:demo .
$ docker push $IMG_REPO/fluid-minio-goofys:demo

Before creating a Fluid Dataset and ThinRuntime to mount the MinIO storage system, you need to define a ThinRuntimeProfile CR. A ThinRuntimeProfile is a cluster-scoped Fluid resource in Kubernetes. It describes the basic configuration of a storage system to be connected to Fluid, such as its container and computing resource settings. The cluster administrator defines the ThinRuntimeProfile CRs in the cluster in advance. Cluster users then explicitly reference a ThinRuntimeProfile CR when they create a ThinRuntime to mount the corresponding storage system.

The following is an example of ThinRuntimeProfile CR for the MinIO storage system (profile.yaml):

apiVersion: data.fluid.io/v1alpha1
kind: ThinRuntimeProfile
metadata:
  name: minio
spec:
  fileSystemType: fuse
  fuse:
    image: $IMG_REPO/fluid-minio-goofys
    imageTag: demo
    imagePullPolicy: IfNotPresent
    command:
    - sh
    - -c
    - "python3 /fluid-config-parse.py && chmod u+x ./mount-minio.sh && ./mount-minio.sh"

In the CR example above:

• fileSystemType describes the file system type (fsType) of the mount point created by ThinRuntime FUSE. Set this parameter according to the FUSE client program of the storage system in use. For example, the fsType of a goofys mount point is fuse, and the fsType of an s3fs mount point is fuse.s3fs.

• fuse describes the ThinRuntime FUSE container, including the image information (image, imageTag, and imagePullPolicy) and the container startup command.
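If you are unsure which fsType a FUSE client reports, one way to check is to inspect the mount table of a host or container where the client is already mounted. The following Python sketch parses text in the /proc/mounts format and returns the file system type of a given mount point. The function name fs_type_of and the sample mount table are hypothetical. The sample only illustrates the format and is not captured output.

```python
from typing import Optional


def fs_type_of(mounts_text: str, mount_point: str) -> Optional[str]:
    """Return the file system type recorded for mount_point, or None."""
    for line in mounts_text.splitlines():
        fields = line.split()
        # Each entry: <device> <mount point> <fs type> <options> <dump> <pass>
        if len(fields) >= 3 and fields[1] == mount_point:
            return fields[2]
    return None


# Hypothetical mount table, written by hand to illustrate the format.
SAMPLE_MOUNTS = """\
overlay / overlay rw,relatime 0 0
my-first-bucket /data fuse rw,nosuid,nodev,relatime,user_id=0,group_id=0 0 0
"""

if __name__ == "__main__":
    print(fs_type_of(SAMPLE_MOUNTS, "/data"))
```

On a real system, you would pass the contents of /proc/self/mounts instead of the sample string and use the returned value as fileSystemType.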

Create a ThinRuntimeProfile CR minio and deploy it to the ACK cluster.

Create the credential Secret that is required to access MinIO:

$ kubectl create secret generic minio-secret \
  --from-literal=minio-access-key=minioadmin \
  --from-literal=minio-access-secret=minioadmin

The following example Dataset and ThinRuntime CRs (dataset.yaml) generate the storage data volume available to users:

apiVersion: data.fluid.io/v1alpha1
kind: Dataset
metadata:
  name: minio-demo
spec:
  mounts:
  - mountPoint: minio://my-first-bucket # minio://<bucket name>
    name: minio
    options:
      minio-url: http://minio:9000 # minio service <url>:<port>
    encryptOptions:
      - name: minio-access-key
        valueFrom:
          secretKeyRef:
            name: minio-secret
            key: minio-access-key
      - name: minio-access-secret
        valueFrom:
          secretKeyRef:
            name: minio-secret
            key: minio-access-secret
---
apiVersion: data.fluid.io/v1alpha1
kind: ThinRuntime
metadata:
  name: minio-demo
spec:
  profileName: minio

• Dataset.spec.mounts[*].mountPoint: specifies the data bucket to be accessed (e.g., my-first-bucket).

• Dataset.spec.mounts[*].options.minio-url: specifies the URL at which MinIO can be accessed in the cluster (e.g., http://minio:9000).

• ThinRuntime.spec.profileName: specifies the ThinRuntimeProfile created earlier (e.g., minio).
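The way these fields reach the FUSE client can be sketched in Python. The snippet below mirrors the logic of fluid-config-parse.py from this article: it strips the minio:// prefix from mountPoint and assembles the goofys arguments. The function names and the target path /mnt/minio-demo are hypothetical. In a real deployment, Fluid supplies the target path through config.json.

```python
MINIO_SCHEME = "minio://"


def bucket_from_mount_point(mount_point: str) -> str:
    """Strip the minio:// prefix, if present, to get the bucket name."""
    if mount_point.startswith(MINIO_SCHEME):
        return mount_point[len(MINIO_SCHEME):]
    return mount_point


def goofys_command(mount: dict, target_path: str) -> list:
    """Build the goofys argument list that the generated mount script runs."""
    return [
        "goofys", "-f",
        "--endpoint", mount["options"]["minio-url"],
        bucket_from_mount_point(mount["mountPoint"]),
        target_path,
    ]


if __name__ == "__main__":
    mount = {
        "mountPoint": "minio://my-first-bucket",
        "options": {"minio-url": "http://minio:9000"},
    }
    # /mnt/minio-demo is a made-up target path for illustration.
    print(" ".join(goofys_command(mount, "/mnt/minio-demo")))
```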

Create a Dataset and a ThinRuntime CR:

$ kubectl create -f dataset.yaml

Check the Dataset status. After a period of time, the Dataset phase changes to Bound, and the Dataset can be mounted and used normally:

$ kubectl get dataset minio-demo
NAME         UFS TOTAL SIZE   CACHED   CACHE CAPACITY   CACHED PERCENTAGE   PHASE   AGE
minio-demo                    N/A      N/A              N/A                 Bound   2m18s

For end users, accessing MinIO is simple. The following is the YAML file (pod.yaml) of a sample pod. You only need to use the PVC with the same name as the Dataset:

apiVersion: v1
kind: Pod
metadata:
  name: test-minio
  labels:
    alibabacloud.com/fluid-sidecar-target: eci
    alibabacloud.com/eci: "true"
spec:
  restartPolicy: Never
  containers:
    - name: app
      image: nginx:latest
      command: ["bash"]
      args:
      - -c
      - ls -lh /data && cat /data/testfile && sleep 180
      volumeMounts:
        - mountPath: /data
          name: data-vol
  volumes:
    - name: data-vol
      persistentVolumeClaim:
        claimName: minio-demo

• alibabacloud.com/fluid-sidecar-target=eci: indicates that ACK Fluid must be enabled to provide specific support for ECI.

• alibabacloud.com/eci: indicates that the pod is scheduled to ECI through the virtual nodes in the ACK cluster.
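If you generate pod manifests programmatically, the two labels can be applied with a small helper. This is only a sketch: the function name with_eci_labels is hypothetical, and the manifest is handled as a plain Python dictionary rather than through any Kubernetes client library.

```python
import copy

# The two labels used by the sample pod in this article.
ECI_LABELS = {
    "alibabacloud.com/fluid-sidecar-target": "eci",
    "alibabacloud.com/eci": "true",
}


def with_eci_labels(pod: dict) -> dict:
    """Return a copy of the pod manifest with the ECI labels added."""
    labeled = copy.deepcopy(pod)
    labels = labeled.setdefault("metadata", {}).setdefault("labels", {})
    labels.update(ECI_LABELS)
    return labeled


if __name__ == "__main__":
    pod = {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "test-minio"}}
    print(with_eci_labels(pod)["metadata"]["labels"])
```

The helper copies the manifest instead of mutating it, so the same base manifest can still be used for pods that should not run on ECI.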

Create a data access pod:

$ kubectl create -f pod.yaml

View the results of the data access pod:

$ kubectl logs test-minio -c app
total 512
-rw-r--r-- 1 root root 6 Aug 15 12:32 testfile
fluid-minio-test

As you can see, the Pod test-minio can access the data in the MinIO storage system normally.

To clean up the environment, run the following commands:

$ kubectl delete -f pod.yaml
$ kubectl delete -f dataset.yaml
$ kubectl delete -f profile.yaml
$ kubectl delete -f minio.yaml

Note: This example is used to demonstrate the entire data access process. The MinIO environment configuration is for demonstration purposes only.

[1] Create an ACK Pro cluster

https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/create-an-ack-managed-cluster-2#task-skz-qwk-qfb

[2] Install the cloud-native AI suite

https://www.alibabacloud.com/help/en/ack/cloud-native-ai-suite/user-guide/deploy-the-cloud-native-ai-suite#task-2038811

[3] Deploy the virtual node controller and use it to create Elastic Container Instance-based pods

https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/deploy-the-virtual-node-controller-and-use-it-to-create-elastic-container-instance-based-pods#task-1443354

[4] Use kubectl to connect to a cluster

https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/obtain-the-kubeconfig-file-of-a-cluster-and-use-kubectl-to-connect-to-the-cluster#task-ubf-lhg-vdb
