By Chilin Huang, Luying and Cheyang

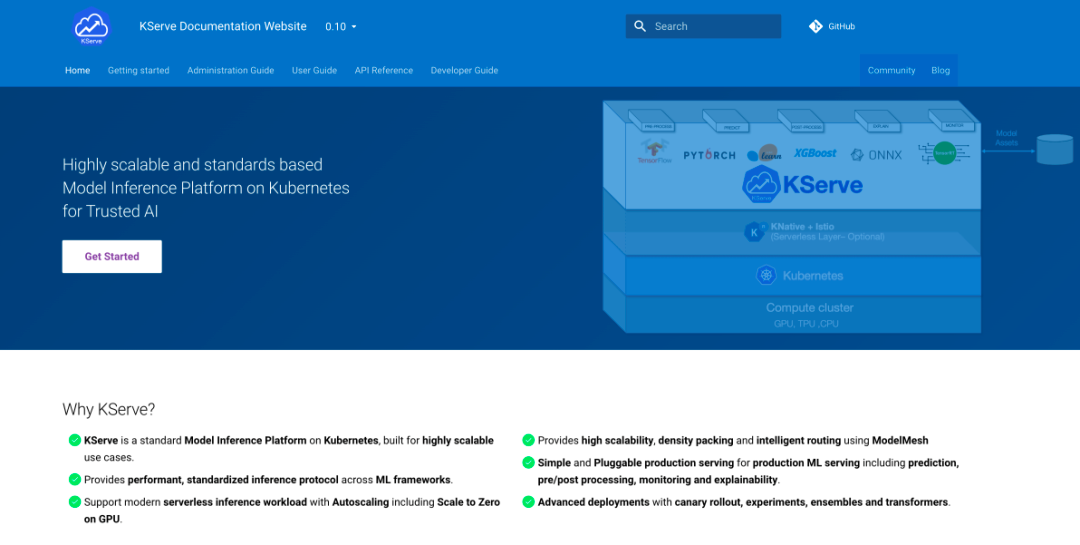

KServe is a standardized model inference platform designed for highly scalable scenarios on Kubernetes. It supports serverless inference workloads for machine learning (ML) models on any framework. KServe offers high-performance, high-abstraction interfaces that integrate with popular ML frameworks such as TensorFlow, XGBoost, scikit-learn, PyTorch, and ONNX, which makes it well suited to production model serving. It hides the complexity of autoscaling, networking, health checking, and service configuration, and supports GPU autoscaling, scale-to-zero, and canary releases. KServe provides a plug-and-play solution for production-grade machine learning services, covering prediction, pre-processing, post-processing, and explainability.

AI-generated content (AIGC) and large language models (LLMs) have gained significant prominence in the past year, further raising public expectations for AI. To create new business value, a growing number of companies are turning to KServe for the following reasons:

However, in real-world production scenarios, KServe encounters challenges in supporting LLMs. The main problems are as follows:

At KubeCon 2023, the KServe community mentioned that Fluid could potentially address its elasticity challenges. Fluid is an open-source, Kubernetes-native engine for orchestrating and accelerating distributed datasets. It primarily serves data-intensive applications in cloud-native scenarios, such as big data and AI applications. For more details, see Overview of Fluid Data Acceleration [1].

The Alibaba Cloud Container Service team is collaborating with the KServe and Fluid communities to explore simple, convenient, high-performance, production-grade support for LLMs on the Alibaba Cloud Serverless Kubernetes platform. The key capabilities include:

Everything is ready. Let's explore large-model inference with KServe in Alibaba Cloud Container Service for Kubernetes (ACK).

The ACK cluster used in this example contains three ecs.g7.xlarge ECS instances and one ecs.g7.2xlarge ECS instance. You can add the three ecs.g7.xlarge instances when you create the cluster. After the cluster is created, create a node pool to add the ecs.g7.2xlarge instance. For details, see Create a Node Pool [3].

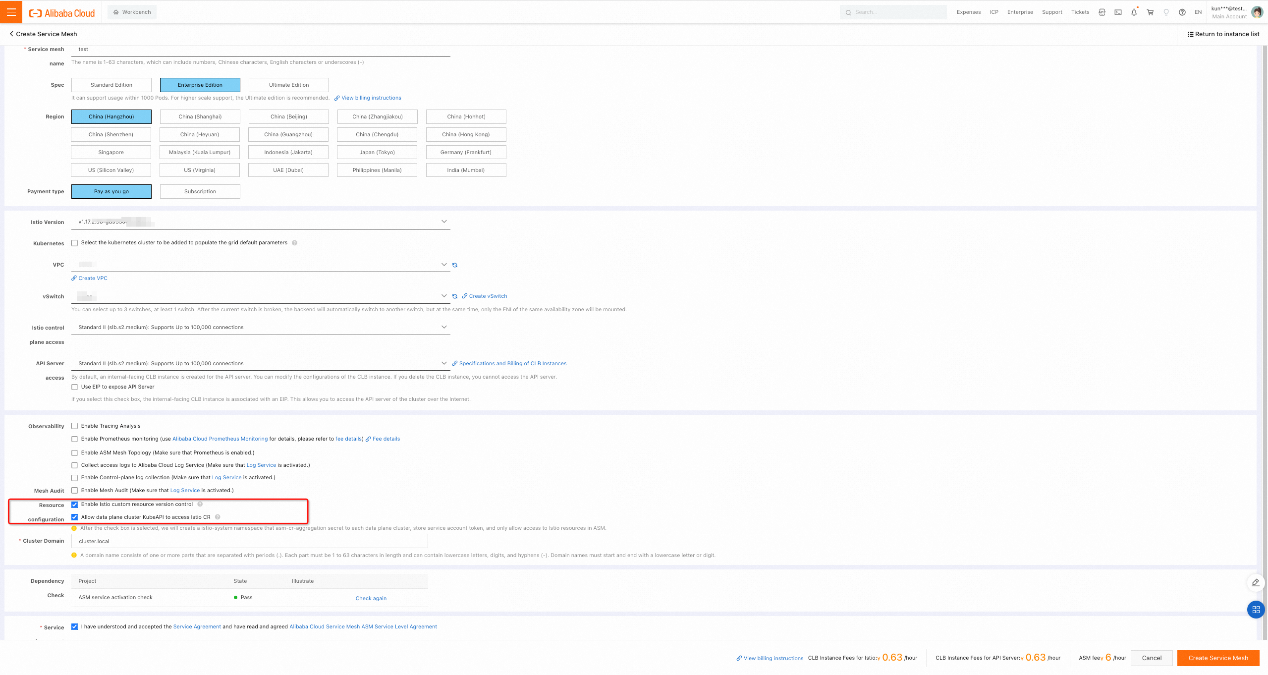

You can use the preceding configuration to create an ASM instance.

Note: KServe on ASM depends on the cert-manager component. cert-manager is a certificate lifecycle management system that facilitates certificate issuance and deployment. If cert-manager is not installed in the cluster, select Automatically Install CertManager in the Cluster on the KServe on ASM page so that cert-manager is installed automatically. If cert-manager is already installed, clear this option before you click Enable KServe on ASM.

1. Deploy ack-fluid in your ACK or ASK cluster and make sure that the version of ack-fluid is 0.9.10 or later.

Note: If the data-plane cluster is an ACK cluster, install the cloud-native AI suite and deploy ack-fluid in the ACK cluster. For more information, see Accelerate Online Application Data Access [10].

If the data-plane cluster is an ASK cluster, deploy ack-fluid in the ASK cluster. For more information, see Accelerate Job Application Data Access [11].
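Because the requirement is "0.9.10 or later", versions should be compared numerically rather than as strings (as plain strings, "0.9.9" sorts after "0.9.10"). The helper below is a hypothetical sketch that assumes you already have the installed ack-fluid version as a dotted string, for example from the ACK console; it does not query the cluster.

```python
# Hypothetical helper: check an ack-fluid version string against the minimum
# required by this walkthrough (0.9.10). It only compares dotted versions;
# obtaining the installed version is left to you.
MINIMUM_ACK_FLUID = (0, 9, 10)


def parse_version(version: str) -> tuple:
    """Turn '0.9.10' (optionally prefixed with 'v') into (0, 9, 10)."""
    core = version.strip().lstrip("v").split("-")[0]  # drop suffixes like '-abc123'
    return tuple(int(part) for part in core.split("."))


def meets_minimum(version: str, minimum: tuple = MINIMUM_ACK_FLUID) -> bool:
    """Return True if `version` equals or is later than `minimum`."""
    parsed = parse_version(version)
    width = max(len(parsed), len(minimum))

    def pad(t: tuple) -> tuple:
        # Pad with zeros so that '1.0' compares like '1.0.0'.
        return t + (0,) * (width - len(t))

    return pad(parsed) >= pad(minimum)


if __name__ == "__main__":
    for v in ["0.9.9", "0.9.10", "v1.0.0"]:
        print(v, meets_minimum(v))
```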

2. Prepare an AI model and upload it to an OSS bucket.

a) Prepare the trained AI model data. This article uses BLOOM, an open-source Transformer language model built on PyTorch, as an example. You can download the model data from the Hugging Face community: https://huggingface.co/bigscience/bloom-560m/tree/main

b) Upload the downloaded model files to an OSS bucket and record their storage location, which has the format oss://{bucket}/{path}. For example, if you create a bucket named fluid-demo and upload all model files to the models/bloom directory in the bucket, the storage location is oss://fluid-demo/models/bloom.

Note: You can use ossutil, a client tool provided by OSS, to upload data. For more information, see Install Ossutil [12].
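The storage location you record here is reused later as the mountPoint of the Fluid Dataset, so it helps to build it consistently. The two helpers below are hypothetical and only illustrate the oss://{bucket}/{path} format described above, using the fluid-demo bucket and models/bloom directory from the example.

```python
# Hypothetical helpers for the oss://{bucket}/{path} storage-location format.
def oss_uri(bucket: str, path: str) -> str:
    """Join a bucket name and an object prefix into oss://{bucket}/{path}."""
    return f"oss://{bucket}/{path.strip('/')}"


def parse_oss_uri(uri: str) -> tuple[str, str]:
    """Split oss://{bucket}/{path} back into (bucket, path)."""
    prefix = "oss://"
    if not uri.startswith(prefix):
        raise ValueError(f"not an OSS URI: {uri!r}")
    bucket, _, path = uri[len(prefix):].partition("/")
    if not bucket:
        raise ValueError(f"missing bucket in {uri!r}")
    return bucket, path


if __name__ == "__main__":
    uri = oss_uri("fluid-demo", "models/bloom")
    print(uri)
    print(parse_oss_uri(uri))
```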

3. Create a namespace for deploying Fluid and AI services, and configure OSS access permissions.

a) Use kubectl to connect to the ACK/ASK cluster on the data plane. For more information, see Connect to a Kubernetes Cluster Through kubectl [13].

b) Use kubectl to create a namespace to deploy the Fluid and KServe AI services. In this example, a kserve-fluid-demo namespace is used.

```shell
kubectl create ns kserve-fluid-demo
```

c) Use kubectl to add the ECI label to the namespace so that pods in the namespace are scheduled to virtual nodes.

```shell
kubectl label namespace kserve-fluid-demo alibabacloud.com/eci=true
```

d) Create an oss-secret.yaml file with the following content. fs.oss.accessKeyId and fs.oss.accessKeySecret are the AccessKey ID and AccessKey secret used to access OSS.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: access-key
stringData:
  fs.oss.accessKeyId: xxx # Replace the value with the Alibaba Cloud AccessKey ID that is used to access OSS.
  fs.oss.accessKeySecret: xxx # Replace the value with the Alibaba Cloud AccessKey secret that is used to access OSS.
```

e) Run the following command to deploy the Secret and configure the OSS AccessKey:

```shell
kubectl apply -f oss-secret.yaml -n kserve-fluid-demo
```

4. Declare the AI model data to be accessed in Fluid. You need to submit a Dataset CR and a Runtime CR. The Dataset CR describes the URL of the data in the external storage system, and the JindoRuntime CR describes the cache system and its configuration.

a) Save the following content as oss-jindo.yaml.

```yaml
apiVersion: data.fluid.io/v1alpha1
kind: Dataset
metadata:
  name: oss-data
spec:
  mounts:
    - mountPoint: "oss://{bucket}/{path}" # Replace it with the location where the model data file is saved.
      name: bloom-560m
      path: /bloom-560m
      options:
        fs.oss.endpoint: "{endpoint}" # Replace it with the actual OSS endpoint.
      encryptOptions:
        - name: fs.oss.accessKeyId
          valueFrom:
            secretKeyRef:
              name: access-key
              key: fs.oss.accessKeyId
        - name: fs.oss.accessKeySecret
          valueFrom:
            secretKeyRef:
              name: access-key
              key: fs.oss.accessKeySecret
  accessModes:
    - ReadOnlyMany
---
apiVersion: data.fluid.io/v1alpha1
kind: JindoRuntime
metadata:
  name: oss-data
spec:
  replicas: 2
  tieredstore:
    levels:
      - mediumtype: SSD
        volumeType: emptyDir
        path: /mnt/ssd0/cache
        quota: 50Gi
        high: "0.95"
        low: "0.7"
  fuse:
    args:
      - -ometrics_port=-1
  master:
    nodeSelector:
      node.kubernetes.io/instance-type: ecs.g7.xlarge
  worker:
    nodeSelector:
      node.kubernetes.io/instance-type: ecs.g7.xlarge
```

Note: Replace oss://{bucket}/{path} in the Dataset CR with the model data location you recorded earlier, and replace {endpoint} with the OSS endpoint. For the OSS endpoints of different regions, see Access Domains and Data Centers [14].
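The cache sizing of this JindoRuntime can be checked with simple arithmetic: two worker replicas with a 50Gi SSD tier each give 100GiB, which matches the CACHE CAPACITY of 100.00GiB in the expected output of the next step. The sketch below also computes the watermark sizes, assuming that the quota applies per worker and that high and low are ratios of that quota at which cache eviction starts and stops; check the Fluid tiered-store documentation for your version before relying on that interpretation.

```python
# Worked arithmetic for the JindoRuntime in oss-jindo.yaml (values copied from it).
# Assumptions: `quota` is per worker replica, and `high`/`low` are watermark
# ratios of that quota (eviction starts above `high`, frees space down to `low`).
replicas = 2            # spec.replicas
quota_gib = 50          # tieredstore.levels[0].quota: 50Gi
high, low = 0.95, 0.7   # tieredstore.levels[0].high / .low

total_capacity_gib = replicas * quota_gib   # cache across all workers
high_mark_gib = quota_gib * high            # per-worker eviction threshold
low_mark_gib = quota_gib * low              # per-worker eviction target

model_gib = 3.14  # UFS TOTAL SIZE of bloom-560m in the expected output
fits_below_high = model_gib <= total_capacity_gib * high

print(f"total cache capacity: {total_capacity_gib} GiB")
print(f"per-worker watermarks: high {high_mark_gib:.1f} GiB, low {low_mark_gib:.1f} GiB")
print(f"model fits below the high watermark: {fits_below_high}")
```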

b) Run the following command to deploy the Dataset and JindoRuntime CRs:

```shell
kubectl create -f oss-jindo.yaml -n kserve-fluid-demo
```

c) Run the following command to check the deployment status of the Dataset and JindoRuntime:

```shell
kubectl get jindoruntime,dataset -n kserve-fluid-demo
```

Expected output:

```
NAME                                  MASTER PHASE   WORKER PHASE   FUSE PHASE   AGE
jindoruntime.data.fluid.io/oss-data   Ready          Ready          Ready        3m

NAME                             UFS TOTAL SIZE   CACHED   CACHE CAPACITY   CACHED PERCENTAGE   PHASE   AGE
dataset.data.fluid.io/oss-data   3.14GiB          0.00B    100.00GiB        0.0%                Bound   3m
```

The output shows that the PHASE of the Dataset is Bound and the FUSE PHASE of the JindoRuntime is Ready, which indicates that both have been deployed.
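If you script this setup, the same readiness check can be automated by reading the resources as JSON. This is a minimal sketch that assumes kubectl is on the PATH and configured for the data-plane cluster, and that the status fields are named phase (Dataset) and masterPhase, workerPhase, and fusePhase (JindoRuntime); confirm these names with kubectl get ... -o yaml on your Fluid version first. get_status and is_ready are hypothetical helper names.

```python
# Minimal sketch: reproduce the "Bound" / "Ready" check from the output above.
# Assumption: status field names below match your Fluid version.
import json
import subprocess

NAMESPACE = "kserve-fluid-demo"
NAME = "oss-data"


def get_status(kind: str, name: str = NAME, namespace: str = NAMESPACE) -> dict:
    """Return the .status object of a resource, read via kubectl as JSON."""
    out = subprocess.run(
        ["kubectl", "get", kind, name, "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out).get("status", {})


def is_ready(dataset_status: dict, runtime_status: dict) -> bool:
    """The Dataset must be Bound and every runtime component Ready."""
    runtime_ok = all(
        runtime_status.get(key) == "Ready"
        for key in ("masterPhase", "workerPhase", "fusePhase")
    )
    return dataset_status.get("phase") == "Bound" and runtime_ok
```

Usage against a live cluster: is_ready(get_status("dataset"), get_status("jindoruntime")).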

5. Prefetch data in Fluid to improve data access performance.

a) Save the following content as oss-dataload.yaml.

```yaml
apiVersion: data.fluid.io/v1alpha1
kind: DataLoad
metadata:
  name: oss-dataload
spec:
  dataset:
    name: oss-data
    namespace: kserve-fluid-demo
  target:
    - path: /bloom-560m
      replicas: 2
```

b) Run the following command to deploy the DataLoad and start prefetching:

```shell
kubectl create -f oss-dataload.yaml -n kserve-fluid-demo
```

c) Run the following command to check the prefetching progress:

```shell
kubectl get dataload -n kserve-fluid-demo
```

Expected output:

```
NAME           DATASET    PHASE      AGE   DURATION
oss-dataload   oss-data   Complete   1m    45s
```

The output shows that data prefetching took 45 seconds. Prefetching can take a while to complete.
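Because prefetching takes a while, a deployment script may want to wait for it instead of re-running kubectl get by hand. The sketch below polls the DataLoad phase; it assumes the phase is exposed at .status.phase with the terminal values Complete and Failed, which you should verify on your Fluid version. The phase reader and sleep function are injectable so the polling logic can be exercised without a cluster; all function names are hypothetical.

```python
# Minimal sketch: wait for the oss-dataload prefetch to finish.
# Assumption: DataLoad reports .status.phase, ending in "Complete" or "Failed".
import subprocess
import time
from typing import Callable


def kubectl_dataload_phase(name: str = "oss-dataload",
                           namespace: str = "kserve-fluid-demo") -> str:
    """Read .status.phase of a DataLoad using kubectl's jsonpath output."""
    return subprocess.run(
        ["kubectl", "get", "dataload", name, "-n", namespace,
         "-o", "jsonpath={.status.phase}"],
        check=True, capture_output=True, text=True,
    ).stdout.strip()


def wait_for_dataload(get_phase: Callable[[], str] = kubectl_dataload_phase,
                      timeout_s: float = 1800, interval_s: float = 10,
                      sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll until the phase is Complete; raise on Failed or on timeout."""
    deadline = time.monotonic() + timeout_s
    while True:
        phase = get_phase()
        if phase == "Complete":
            return phase
        if phase == "Failed":
            raise RuntimeError("DataLoad failed; inspect it with kubectl describe dataload")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"DataLoad still {phase!r} after {timeout_s}s")
        sleep(interval_s)
```

Against a live cluster, calling wait_for_dataload() blocks until the prefetch in this step has completed.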

1. Save the following content as oss-fluid-isvc.yaml.

```yaml
apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "fluid-bloom"
spec:
  predictor:
    timeout: 600
    minReplicas: 0
    nodeSelector:
      node.kubernetes.io/instance-type: ecs.g7.2xlarge
    containers:
      - name: kserve-container
        image: cheyang/kserve-fluid:bloom-gpu
        resources:
          limits:
            cpu: "3"
            memory: 8Gi
          requests:
            cpu: "3"
            memory: 8Gi
        env:
          - name: STORAGE_URI
            value: "pvc://oss-data/bloom-560m"
          - name: MODEL_NAME
            value: "bloom"
          # Set this parameter to True if GPU is used. Otherwise, set this parameter to False.
          - name: GPU_ENABLED
            value: "False"
```

Note: This example uses the cheyang/kserve-fluid:bloom-gpu sample image in the image field of the InferenceService. The image provides the interfaces for loading the model and serving inference. You can find the code for this sample image in the KServe open-source repository and customize it: https://github.com/kserve/kserve/tree/master/docs/samples/fluid/docker
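The STORAGE_URI value ties the inference service to the Fluid objects created earlier: Fluid exposes the bound Dataset oss-data as a PersistentVolumeClaim with the same name, and bloom-560m is the directory under which the Dataset mounts the model files (the name and path fields in oss-jindo.yaml). Because minReplicas is 0, the predictor can scale to zero, so a request that arrives after scale-down starts a new pod that loads the model from this volume. The hypothetical helper below only splits such a URI into its two parts; it is not KServe's own parser.

```python
# Hypothetical helper: split a pvc://{pvc-name}/{sub-path} URI as used in
# STORAGE_URI. In this example the PVC name is the Fluid Dataset name.
from urllib.parse import urlparse


def split_pvc_uri(uri: str) -> tuple[str, str]:
    """Return (pvc_name, sub_path) for a pvc:// URI."""
    parsed = urlparse(uri)
    if parsed.scheme != "pvc" or not parsed.netloc:
        raise ValueError(f"expected pvc://{{pvc-name}}/{{path}}, got {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")
```

For the configuration above, split_pvc_uri("pvc://oss-data/bloom-560m") yields ("oss-data", "bloom-560m"), that is, the Dataset name and its mount path.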

2. Run the following command to deploy the InferenceService AI model inference service:

```shell
kubectl create -f oss-fluid-isvc.yaml -n kserve-fluid-demo
```

3. Run the following command to view the deployment status of the AI model inference service:

```shell
kubectl get inferenceservice -n kserve-fluid-demo
```

Expected output:

```
NAME          URL                                                READY   PREV   LATEST   PREVROLLEDOUTREVISION   LATESTREADYREVISION           AGE
fluid-bloom   http://fluid-bloom.kserve-fluid-demo.example.com   True           100                              fluid-bloom-predictor-00001   2d
```

The READY field is True, which shows that the AI model inference service has been deployed.

1. Obtain the ASM ingress gateway address.

a) Log on to the ASM console. In the left-side navigation pane, click Service Mesh > Mesh Management.

b) On the Mesh Management page, click the name of the target instance, and click ASM Gateways > Ingress Gateway in the left-side navigation pane.

c) On the Ingress Gateway page, find the ASM ingress gateway named ingressgateway. In the Service Address section, view and obtain the service address of the ASM gateway.

2. Access the sample AI model inference service.

Run the following command to send a request to the sample bloom inference service. Replace {ASM gateway service address} with the ASM ingress gateway address that you obtained.

```shell
curl -v -H "Content-Type: application/json" -H "Host: fluid-bloom.kserve-fluid-demo.example.com" "http://{ASM gateway service address}:80/v1/models/bloom:predict" -d '{"prompt": "It was a dark and stormy night", "result_length": 50}'
```

Expected output:

```
* Trying xxx.xx.xx.xx:80...
* Connected to xxx.xx.xx.xx (xxx.xx.xx.xx) port 80 (#0)
> POST /v1/models/bloom:predict HTTP/1.1
> Host: fluid-bloom-predictor.kserve-fluid-demo.example.com
> User-Agent: curl/7.84.0
> Accept: */*
> Content-Type: application/json
> Content-Length: 65
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< content-length: 227
< content-type: application/json
< date: Thu, 20 Apr 2023 09:49:00 GMT
< server: istio-envoy
< x-envoy-upstream-service-time: 1142
<
{
  "result": "It was a dark and stormy night, and the wind was blowing in the\ndirection of the west. The wind was blowing in the direction of the\nwest, and the wind was blowing in the direction of the west. The\nwind was"
}
* Connection #0 to host xxx.xx.xx.xx left intact
```

As the expected output shows, the AI model inference service continued the sample prompt and returned the inference result.
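If you prefer to call the service from code, the same request can be sent with Python's standard library. This sketch assumes the gateway is reachable on port 80 and that the sample image accepts the prompt/result_length body and returns a result field, as in the curl example; build_predict_request and predict are hypothetical helper names. The Host header is what lets the ASM ingress gateway route the request to fluid-bloom, and the 600-second client timeout mirrors timeout: 600 in the InferenceService, since a request that triggers a cold start can take a long time.

```python
# Minimal sketch of the curl request above, using only the standard library.
import json
import urllib.request

SERVICE_HOST = "fluid-bloom.kserve-fluid-demo.example.com"


def build_predict_request(gateway: str, prompt: str, result_length: int = 50,
                          host: str = SERVICE_HOST,
                          model: str = "bloom") -> urllib.request.Request:
    """Build a POST to /v1/models/{model}:predict, routed by the Host header."""
    body = json.dumps({"prompt": prompt, "result_length": result_length}).encode()
    return urllib.request.Request(
        url=f"http://{gateway}:80/v1/models/{model}:predict",
        data=body,
        headers={"Content-Type": "application/json", "Host": host},
        method="POST",
    )


def predict(gateway: str, prompt: str, result_length: int = 50,
            timeout_s: float = 600) -> str:
    """Send the request and return the generated text from the "result" field."""
    req = build_predict_request(gateway, prompt, result_length)
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return json.loads(resp.read())["result"]
```

For example, predict("203.0.113.10", "It was a dark and stormy night") returns the generated text, where 203.0.113.10 stands in for your ASM gateway address.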

Our performance benchmark compares the scale-out time of KServe inference services that load models through KServe's OSS Storage Initializer with that of services that load models through Fluid, across models of different sizes. Specifically, we measure the time the service takes to scale out from 0 to 1 replica.

The test models we selected are bigscience/bloom-560m [15] and bigscience/bloom-7b1 [16].

The Fluid Runtime used is:

Other prerequisites:

Performance test command:

```shell
# Total time includes: pod initialization and running + download model (storage initializer) + load model + inference + network
curl --connect-timeout 3600 --max-time 3600 -o /dev/null -s -w 'Total: %{time_total}s\n' -H "Content-Type: application/json" -H "Host: ${SERVICE_HOSTNAME}" "http://${INGRESS_HOST}:${INGRESS_PORT}/v1/models/bloom:predict" -d '{"prompt": "It was a dark and stormy night", "result_length": 50}'
```
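The key condition in this benchmark is that each measurement starts from zero replicas; otherwise a warm pod answers the request and the model download and load steps disappear from the total. The sketch below captures that methodology around any request function. send and wait_until_scaled_to_zero are hypothetical placeholders you would implement yourself (for example, send could wrap the curl request in Python, and the wait could poll until no predictor pod is running). The article reports single totals, so taking the median of repeated runs is just one possible way to summarize them.

```python
# Minimal sketch of the cold-start measurement methodology (0 -> 1 replica).
# Both callables are hypothetical placeholders supplied by the caller.
import statistics
import time
from typing import Callable, List


def time_once(send: Callable[[], object]) -> float:
    """Return the wall-clock seconds of one call, like curl's %{time_total}."""
    start = time.perf_counter()
    send()
    return time.perf_counter() - start


def measure_cold_starts(send: Callable[[], object],
                        wait_until_scaled_to_zero: Callable[[], None],
                        runs: int = 3) -> List[float]:
    """Collect `runs` timings, returning the service to zero replicas each time."""
    timings = []
    for _ in range(runs):
        wait_until_scaled_to_zero()  # a warm pod would hide download and load time
        timings.append(time_once(send))
    return timings


def summarize(timings: List[float]) -> str:
    """Summarize repeated runs by their median."""
    return f"median {statistics.median(timings):.2f}s over {len(timings)} runs"
```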

Total time:

| Model Name | Model Size (Snapshot) | Machine Type | KServe + Storage Initializer | KServe + Fluid |
|---|---|---|---|---|
| bigscience/bloom-560m [15] | 3.14GB | ecs.g7.2xlarge (cpu: 8, memory: 32G) | total: 58.031551s (download: 33.866s, load: 5.016s) | total: 8.488353s (load: 2.349s) (2 workers) |
| bigscience/bloom-7b1 [16] | 26.35GB | ecs.g7.4xlarge (cpu: 16, memory: 64G) | total: 329.019987s (download: 228.440s, load: 71.964s) | total: 27.800123s (load: 12.084s) (3 workers) |

Total: the response time when the service scales out from 0 (no replica is available), including container scheduling, startup, model download, loading the model into GPU memory, model inference, and network latency.
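The relative gains in the table are easier to compare as ratios. The short calculation below uses only the numbers from the table and computes, for each model, the speedup of KServe + Fluid over KServe + Storage Initializer, the absolute time saved, and the share of the Storage Initializer total spent on the download step.

```python
# Worked arithmetic on the benchmark table; all numbers are copied from it.
BENCHMARK = {
    # model: (Storage Initializer total s, of which download s, Fluid total s)
    "bigscience/bloom-560m": (58.031551, 33.866, 8.488353),
    "bigscience/bloom-7b1": (329.019987, 228.440, 27.800123),
}


def compare(initializer_total: float, download: float, fluid_total: float):
    """Return (speedup, seconds saved, download share of the baseline)."""
    speedup = initializer_total / fluid_total
    saved = initializer_total - fluid_total
    download_share = download / initializer_total
    return speedup, saved, download_share


for model, row in BENCHMARK.items():
    speedup, saved, share = compare(*row)
    print(f"{model}: {speedup:.1f}x faster, {saved:.1f}s saved; "
          f"download was {share:.0%} of the Storage Initializer total")
```

For these two rows the speedup is roughly 6.8x for bloom-560m and 11.8x for bloom-7b1, and the download step alone accounts for about 58% and 69% of the Storage Initializer totals; that step does not appear in the Fluid column.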

In the context of LLMs, it is evident that:

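In these tests, Fluid reduced the cold-start time from about 58.0s to 8.5s for bloom-560m and from about 329.0s to 27.8s for bloom-7b1. This greatly improves the elastic scaling capability of KServe in container scenarios.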

While there is still a long way to go in improving and optimizing support for large models in existing cloud-native AI frameworks, steady progress is possible through continuous effort. The Alibaba Cloud Container Service team is committed to working with community partners to explore solutions that support LLM inference scenarios better and at a lower cost. Our aim is to provide standard, open solutions and productized capabilities. In the future, we will introduce cost-control methods, such as using the scaling rules of compute resources to trigger scaling of the data cache. We will also provide a method for hot-updating large models.

[1] Overview of Fluid Data Acceleration

https://www.alibabacloud.com/help/en/doc-detail/208335.html

[2] Create a Managed Kubernetes Cluster

https://www.alibabacloud.com/help/en/doc-detail/95108.html

[3] Create a Node Pool

https://www.alibabacloud.com/help/en/doc-detail/160490.html

[4] Create an ASM Instance

https://www.alibabacloud.com/help/en/doc-detail/147793.htm#task-2370657

[5] Add the Cluster to the ASM Instance

https://www.alibabacloud.com/help/en/doc-detail/148231.htm#task-2372122

[6] Access Istio Resources Through the KubeAPI of Clusters on the Data Plane

https://www.alibabacloud.com/help/en/doc-detail/431215.html

[7] Create an Ingress Gateway Service

https://www.alibabacloud.com/help/en/doc-detail/149546.htm#task-2372970

[8] Deploying Serverless Applications by Using Knative on ASM

https://www.alibabacloud.com/help/en/doc-detail/611862.html

[9] ASM Console

https://account.aliyun.com/login/login.htm?oauth_callback=https%3A%2F%2Fservicemesh.console.aliyun.com%2F&lang=zh

[10] Accelerate Online Application Data Access

https://www.alibabacloud.com/help/en/doc-detail/440265.html

[11] Accelerate Job Application Data Access

https://www.alibabacloud.com/help/en/doc-detail/456858.html

[12] Install Ossutil

https://www.alibabacloud.com/help/en/doc-detail/120075.htm#concept-303829

[13] Connect to a Kubernetes Cluster Through kubectl

https://www.alibabacloud.com/help/en/doc-detail/86378.html

[14] Access Domains and Data Centers

https://www.alibabacloud.com/help/en/doc-detail/31837.html

[15] bigscience/bloom-560m

https://huggingface.co/bigscience/bloom-560m

[16] bigscience/bloom-7b1

https://huggingface.co/bigscience/bloom-7b1
