The industry is evolving.

Traditional IT-based workloads are transitioning to DT (data technology)-based workloads, and as a result, services are gradually shifting from CPU-based to GPU-based computing. In particular, GPUs are becoming more commonly used for AI applications than for traditional service workloads.

Alibaba Cloud has studied the challenges faced by engineers working with AI workloads.

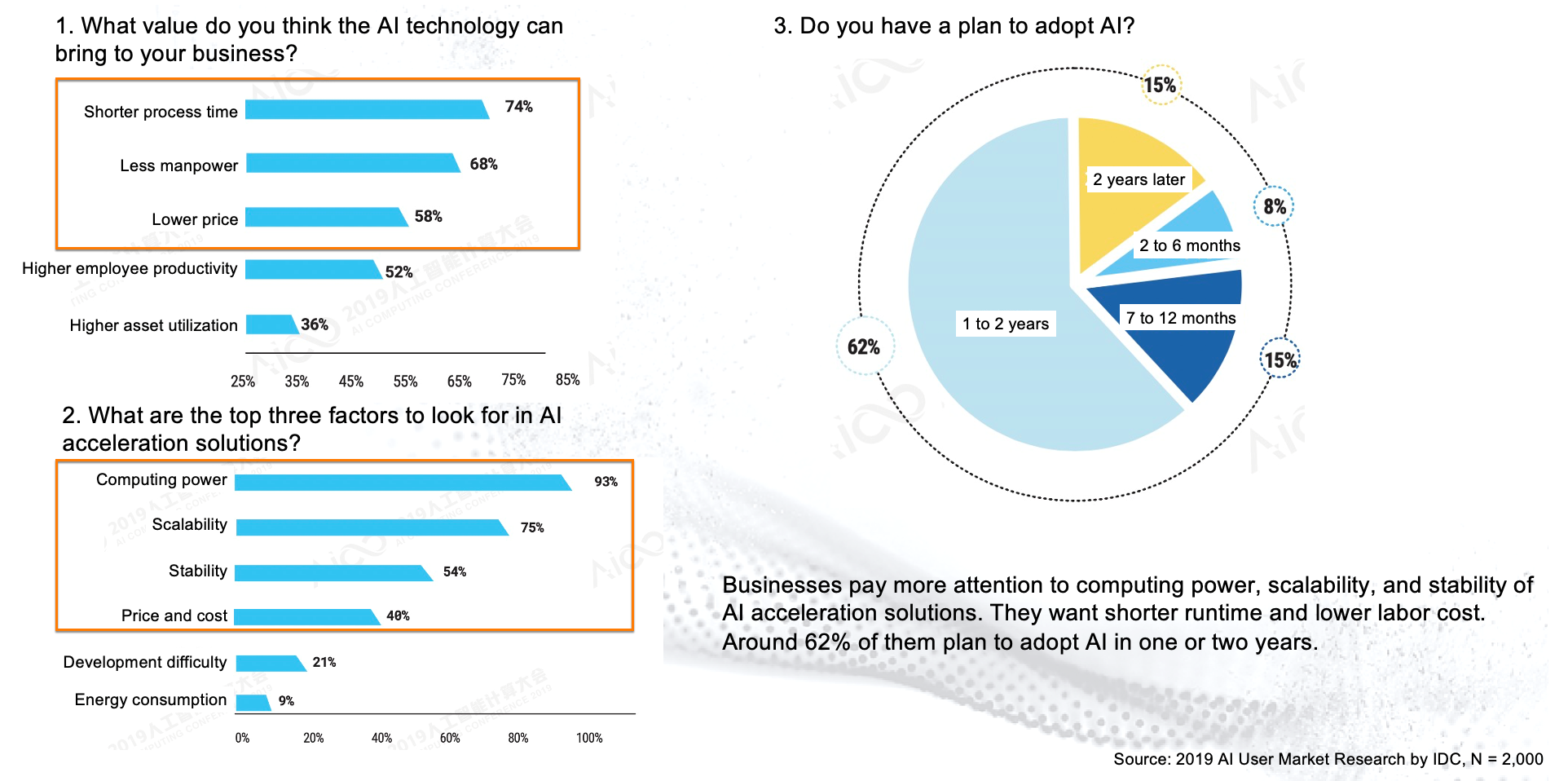

First, when asked what benefits AI can bring, respondents gave the following top answers: (1) shorter processing time, (2) reduced human resource requirements, and (3) cost savings.

Engineers were also asked which functions matter most in an AI solution. The top answers were (1) computing power, (2) scalability, and (3) stability and price.

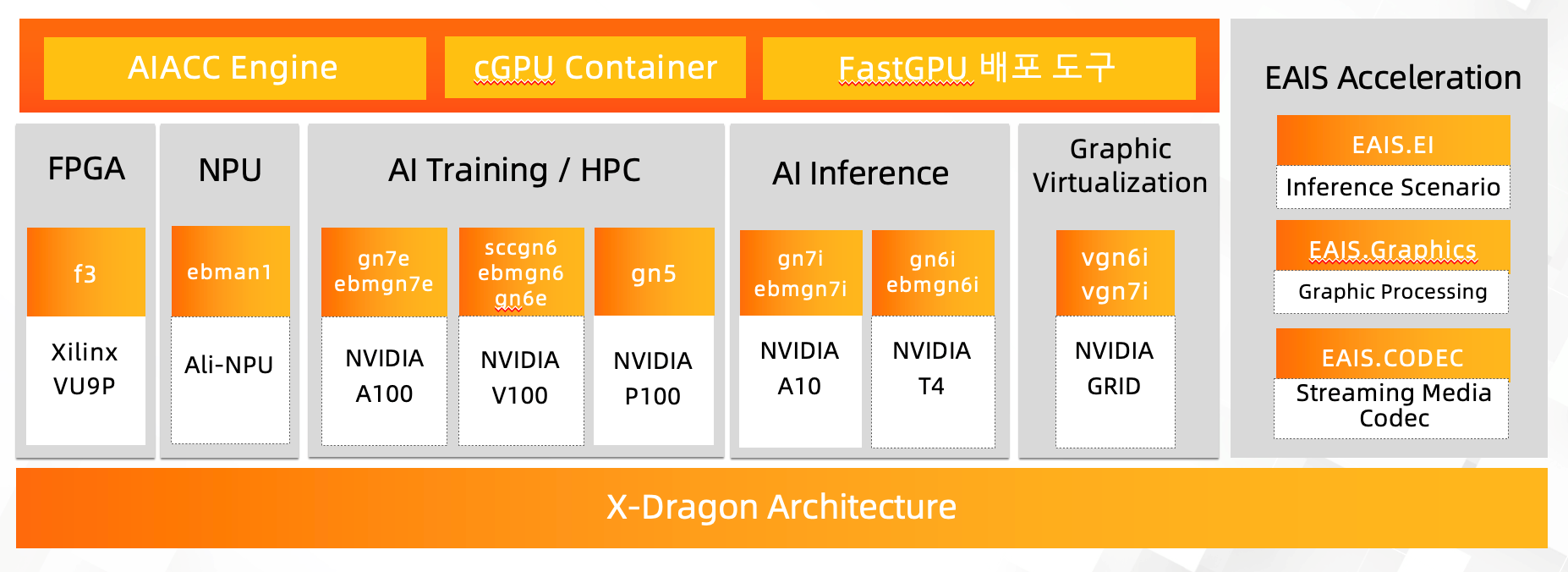

Built on its X-Dragon virtualization architecture, Alibaba Cloud provides software features that make efficient use of various types of GPU clusters and GPU resources.

Let's summarize the services that are worth focusing on.

Alibaba Cloud provides FPGA-based Elastic Compute Service (ECS) instances optimized for compute-intensive workloads such as artificial intelligence, machine learning, and data analysis. These instances are powered by Xilinx Virtex UltraScale+ FPGAs and are designed to provide high-performance, low-latency processing for complex algorithms and calculations.

By using FPGAs on Alibaba Cloud, users can achieve faster processing and lower latency, which improves application performance and can reduce cloud computing infrastructure costs.

Alibaba Cloud provides NPU-based Elastic Compute Service (ECS) instances optimized for deep learning workloads such as image and voice recognition, natural language processing, and recommendation systems. These instances are equipped with dedicated NPUs that perform matrix multiplication and other common neural network operations efficiently and with low latency.

By using NPUs on Alibaba Cloud, users can speed up deep learning workloads and reduce latency, improving application performance and lowering cloud computing costs. Alibaba Cloud also provides various pre-built deep learning models that can be deployed on NPU-based ECS instances, which makes it easy to get started with deep learning.

An AI-dedicated NVIDIA GPU cluster can be used to accelerate deep learning algorithms on GPUs.

The NVIDIA P100 and V100 are different generations of NVIDIA GPU accelerators, designed for different workloads and use cases.

The NVIDIA P100 was the first GPU based on the NVIDIA Pascal architecture and is designed for high-performance computing (HPC) workloads. It features up to 3584 CUDA cores and 16GB or 12GB of high-bandwidth memory (HBM2), providing up to 9.3 teraflops of single-precision floating-point performance.

The NVIDIA V100 is based on the NVIDIA Volta architecture, the generation that followed Pascal, and is designed for HPC and deep learning workloads. It features up to 5120 CUDA cores and 16GB or 32GB of HBM2 memory, providing up to 7.8 teraflops of double-precision floating-point performance or up to 15.7 teraflops of single-precision floating-point performance.

The newest GPU in this lineup is based on the NVIDIA Ampere architecture, the generation after Volta, and is designed for both HPC and AI workloads. It features up to 6912 CUDA cores and 40GB or 80GB of HBM2 memory, providing up to 9.7 teraflops of double-precision floating-point performance or up to 19.5 teraflops of single-precision floating-point performance. It also includes third-generation Tensor Cores that accelerate AI workloads such as deep learning and machine learning.

Overall, the main differences between these GPUs are performance, memory capacity, and specialized hardware features. The P100 is optimized for HPC workloads, while the newer V100 and Ampere-based GPU are optimized for both HPC and AI workloads, offering higher performance and more memory capacity than the P100.
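As a rough illustration, the peak single-precision figures quoted above can be compared directly. This is our own sketch and not an Alibaba Cloud or NVIDIA tool. It only normalizes the quoted "up to" numbers to the P100, and peak ratios do not predict real-world speedups. "Ampere GPU" is a placeholder label for the Ampere-based card described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    architecture: str
    cuda_cores: int
    max_memory_gb: int
    fp32_tflops: float  # peak single-precision figure quoted in this article


# "Up to" figures as quoted above; "Ampere GPU" is a placeholder label.
SPECS = [
    GpuSpec("P100", "Pascal", 3584, 16, 9.3),
    GpuSpec("V100", "Volta", 5120, 32, 15.7),
    GpuSpec("Ampere GPU", "Ampere", 6912, 80, 19.5),
]


def relative_fp32(specs, baseline="P100"):
    """Return each GPU's peak FP32 throughput relative to the baseline card."""
    base = next(s for s in specs if s.name == baseline)
    return {s.name: round(s.fp32_tflops / base.fp32_tflops, 2) for s in specs}


print(relative_fp32(SPECS))
```

Running it prints `{'P100': 1.0, 'V100': 1.69, 'Ampere GPU': 2.1}`. In other words, on paper the V100 offers about 1.7x and the Ampere GPU about 2.1x the P100's peak FP32 throughput.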

Using a relatively cost-effective A10/T4 cluster, you can perform tasks such as AI inference, rendering, and media encoding/decoding.

NVIDIA T4 and A10 are both GPUs designed for data center applications, but there are some important differences between them.

The NVIDIA T4 is based on the Turing architecture and is designed for a wide range of workloads, including artificial intelligence, machine learning, and data analysis. It features 16GB of GDDR6 memory and 320 Turing Tensor Cores designed to accelerate deep learning workloads.

The NVIDIA A10, on the other hand, is based on the Ampere architecture and is designed for mainstream AI and graphics workloads. It features 24GB of GDDR6 memory and 288 third-generation Tensor Cores that accelerate deep learning training and inference. The A10 also includes 72 dedicated RT (ray tracing) cores that accelerate real-time rendering and other graphics-intensive applications.

In terms of performance, the A10 outperforms the T4 on AI and deep learning workloads, particularly with larger models, and its larger memory capacity (24GB versus 16GB) makes it better suited to bigger workloads. The T4, however, is a versatile, low-power (70 W) GPU that can be used for a wide range of workloads beyond AI and deep learning.
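One practical way to choose between the two cards is to check whether a model's weights fit in memory. The sketch below is a back-of-the-envelope estimate under stated assumptions: 2 bytes per parameter (FP16), capacities treated as decimal GB, and an arbitrary 1.2x overhead factor that stands in for activations and framework memory. Real usage varies by framework and batch size. The function names are hypothetical.

```python
# Memory capacities quoted above, in GB.
GPU_MEMORY_GB = {"T4": 16, "A10": 24}


def weights_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate size of the model weights in GB (2 bytes per parameter for FP16)."""
    return num_params * bytes_per_param / 1e9


def smallest_fitting_gpu(num_params: float, bytes_per_param: int = 2, overhead: float = 1.2):
    """Return the smallest GPU that holds the weights times an assumed overhead factor, or None."""
    needed = weights_gb(num_params, bytes_per_param) * overhead
    for name, memory in sorted(GPU_MEMORY_GB.items(), key=lambda item: item[1]):
        if needed <= memory:
            return name
    return None  # does not fit on a single card of either type


print(smallest_fitting_gpu(3e9))   # T4
print(smallest_fitting_gpu(7e9))   # A10
print(smallest_fitting_gpu(13e9))  # None
```

A 7-billion-parameter FP16 model needs about 14GB for weights alone. With the assumed overhead it exceeds the T4's 16GB but fits the A10's 24GB.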

Alibaba Cloud provides various features for making efficient use of the GPU types described above. Below, we introduce the four most noteworthy ones.

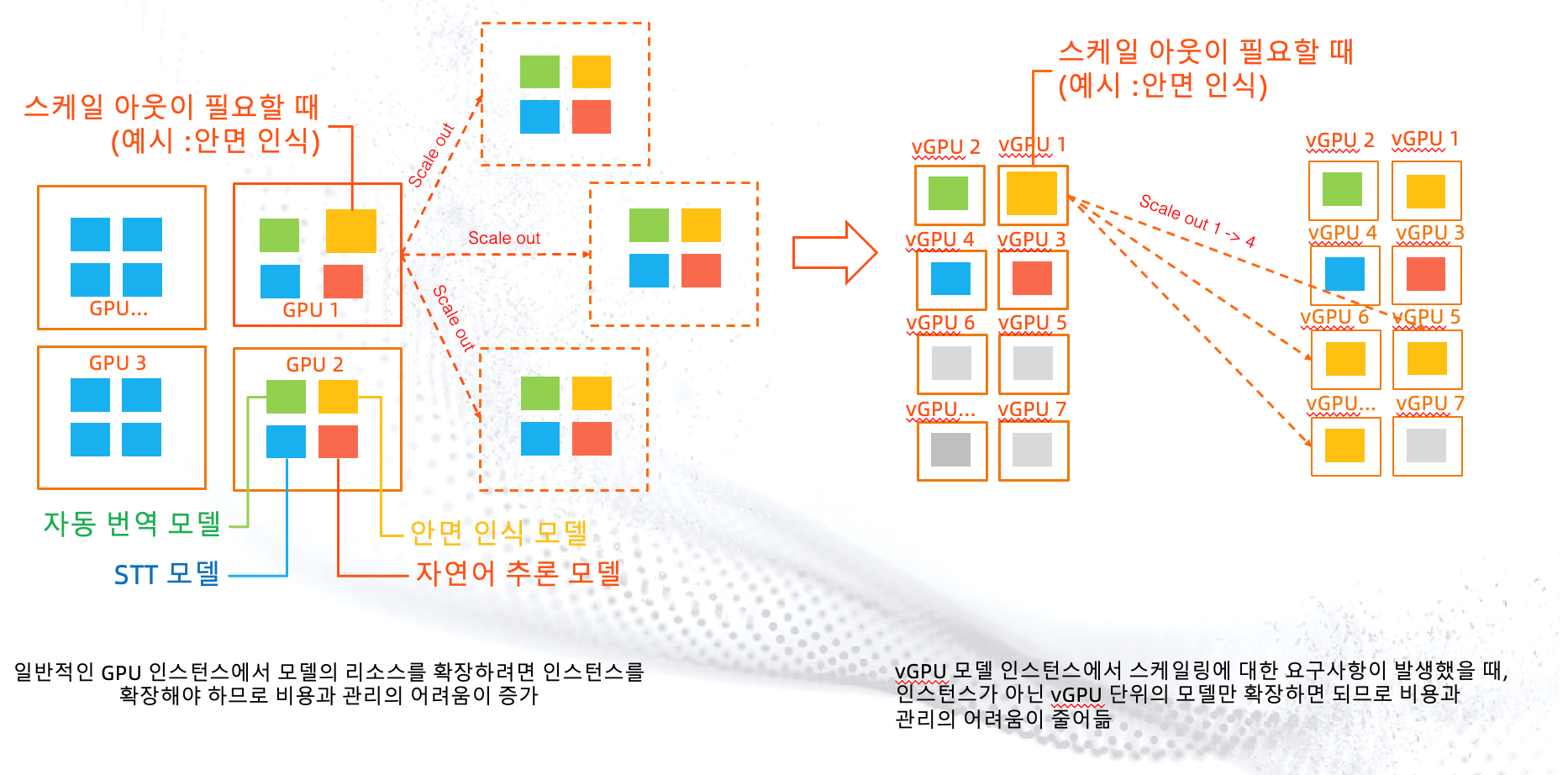

vGPU is an architecture based on NVIDIA GRID virtualization technology. It removes the 1:1 pairing between GPU cards and data scientists, allowing multiple data scientists to share a single GPU card.
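In NVIDIA's vGPU model, each virtual GPU receives a fixed slice of the physical card's frame buffer, called its profile. The number of data scientists a card can serve therefore follows from simple division. In the sketch below, the helper names are hypothetical, and the requirement that the profile divide the card evenly is a simplifying assumption. NVIDIA's supported-profile list for a given card is authoritative.

```python
def vgpus_per_card(card_memory_gb: int, profile_gb: int) -> int:
    """How many fixed-size vGPU slices fit on one physical GPU."""
    if profile_gb <= 0 or card_memory_gb % profile_gb:
        raise ValueError("profile size must evenly divide the card's memory")
    return card_memory_gb // profile_gb


def cards_needed(users: int, card_memory_gb: int, profile_gb: int) -> int:
    """Physical GPUs required so that every user gets one vGPU of the given profile."""
    per_card = vgpus_per_card(card_memory_gb, profile_gb)
    return -(-users // per_card)  # ceiling division


# Example: ten data scientists sharing 16GB cards with 4GB profiles.
print(vgpus_per_card(16, 4))    # 4
print(cards_needed(10, 16, 4))  # 3
```

Without vGPU, the same ten users would need ten cards under a 1:1 assignment.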

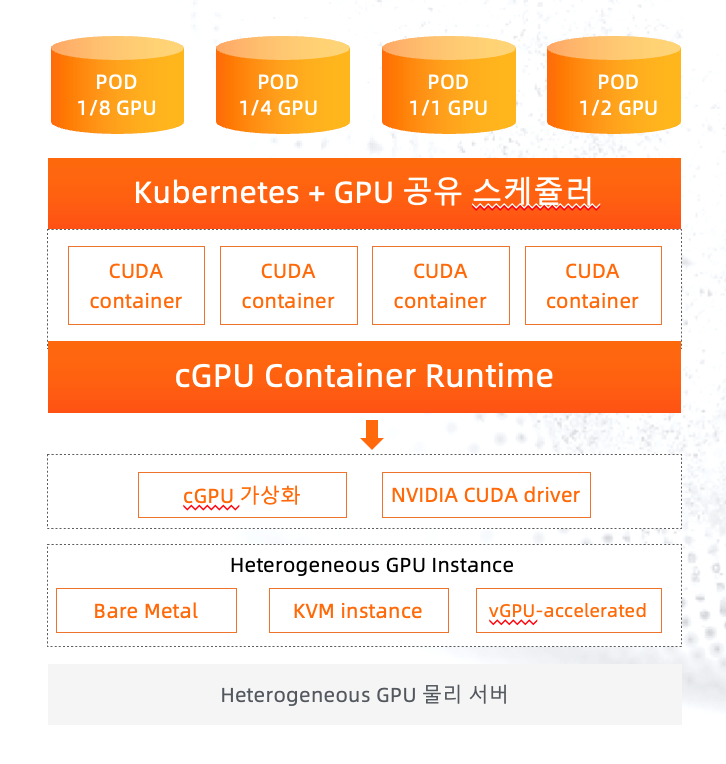

cGPU is a technology that virtualizes GPUs at the container level rather than the instance level. Developed by Alibaba Cloud on top of the CUDA driver stack, it can be used with Alibaba Cloud Container Service for Kubernetes (ACK).
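With GPU sharing in ACK, a container requests a slice of GPU memory instead of a whole card. The sketch below builds a Pod manifest of this kind in Python. We understand `aliyun.com/gpu-mem` (measured in GiB) to be the extended resource name ACK uses for shared GPU scheduling, but treat that as an assumption and verify it, along with any required node labels, against the current ACK documentation. The Pod and image names are placeholders.

```python
import json


def shared_gpu_pod(name: str, image: str, gpu_mem_gib: int) -> dict:
    """Build a Pod manifest that requests a slice of GPU memory (GiB) instead of a whole GPU."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Assumed ACK extended resource for cGPU memory sharing.
                    "resources": {"limits": {"aliyun.com/gpu-mem": gpu_mem_gib}},
                }
            ]
        },
    }


# Placeholder names; replace with a real image from your registry.
manifest = shared_gpu_pod("inference-demo", "my-registry/inference:latest", 4)
print(json.dumps(manifest, indent=2))
```

Because Kubernetes accepts JSON manifests, the printed output could be saved to a file and submitted with `kubectl apply -f <file>` on a cluster where cGPU is enabled. Several such Pods could then share one physical card.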

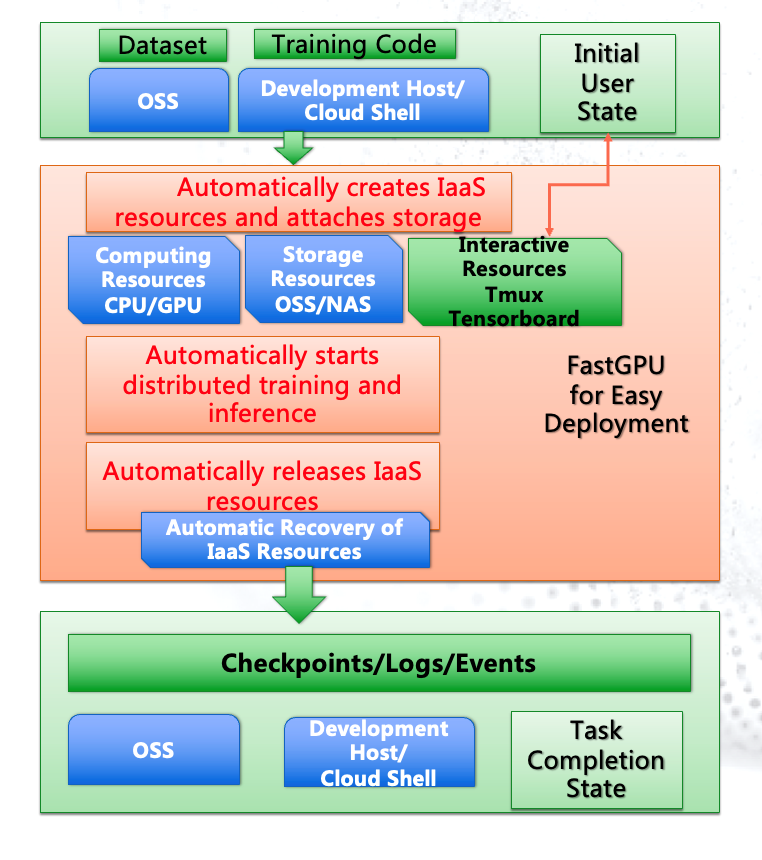

FastGPU is useful when you need to cluster multiple GPU instances for business workloads.

Previously, setting up a cluster of multiple GPU instances required a series of manual tasks, such as running commands like "ecluster create," connecting shared storage, and creating a single endpoint. These tasks could take anywhere from several days to weeks to complete.

With FastGPU, however, tasks such as GPU clustering, shared storage setup, and shell environment configuration are performed automatically, which can help accelerate your business.
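This article does not show FastGPU's own interface, so the following is only a hypothetical sketch of the kind of setup plan it automates. The plan creates N GPU instances, mounts shared storage and configures the shell environment on each one, and then exposes a single endpoint. All names (`build_plan`, the `/mnt/shared` mount point, node naming) are illustrative and are not FastGPU's API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ClusterPlan:
    name: str
    nodes: List[str]
    steps: List[Tuple[str, str]] = field(default_factory=list)


def build_plan(name: str, num_nodes: int, shared_mount: str = "/mnt/shared") -> ClusterPlan:
    """Hypothetical ordered setup steps that a tool like FastGPU would run for you."""
    if num_nodes < 1:
        raise ValueError("a cluster needs at least one node")
    plan = ClusterPlan(name, [f"{name}-{i}" for i in range(num_nodes)])
    # 1. Provision every GPU instance first.
    for node in plan.nodes:
        plan.steps.append((node, "create GPU instance"))
    # 2. Attach shared storage and prepare the shell environment on each node.
    for node in plan.nodes:
        plan.steps.append((node, f"mount shared storage at {shared_mount}"))
        plan.steps.append((node, "configure shell environment"))
    # 3. Expose a single endpoint (here, on the first node) for the whole cluster.
    plan.steps.append((plan.nodes[0], "expose cluster endpoint"))
    return plan


for node, action in build_plan("train", 2).steps:
    print(f"{node}: {action}")
```

The number of steps grows linearly with cluster size. This is why doing the same work by hand across many instances took days.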

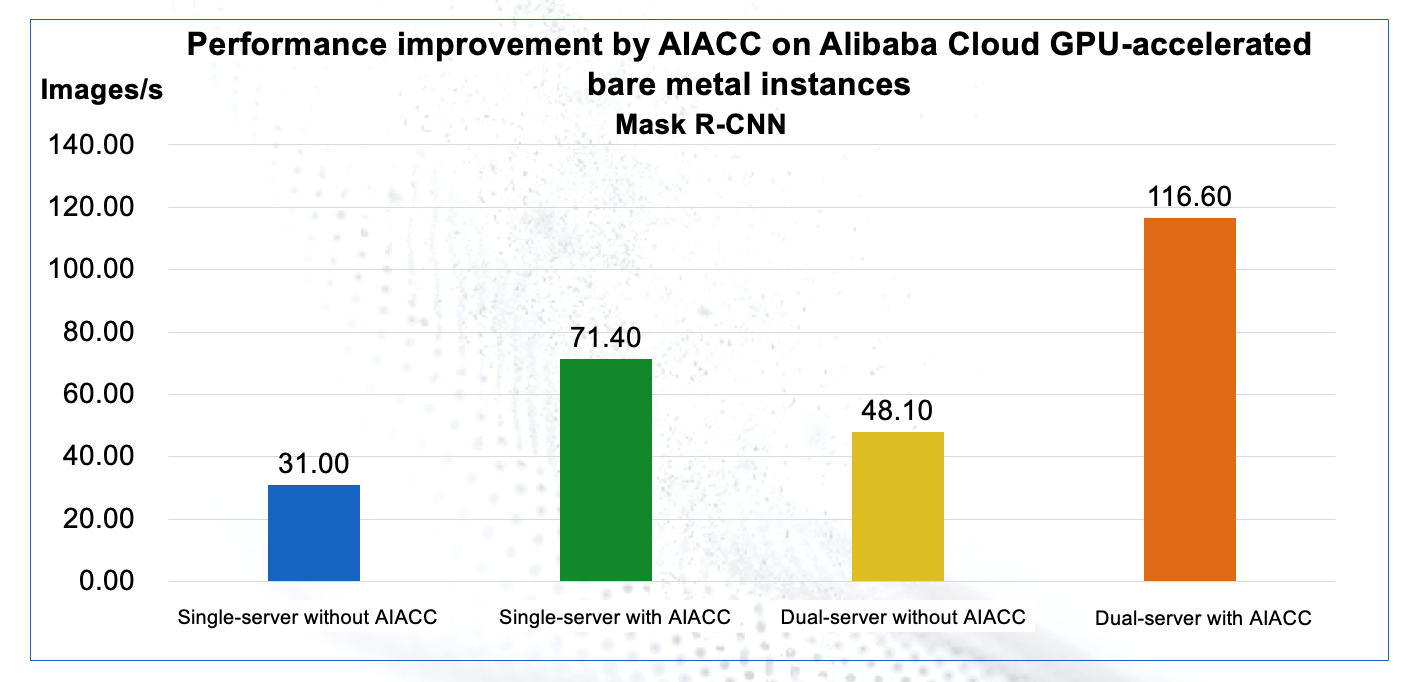

AIACC is a software architecture that uses GPUs to accelerate training and inference for deep learning workloads.

AIACC is easy to use and requires only a few configuration changes to the GPU cluster. The largest improvements come from running on multiple GPUs across instances rather than on a single instance with one card.
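Gains from multi-GPU training are usually judged by how throughput scales with the number of GPUs. The helper below is a generic sketch and is not part of AIACC. It computes speedup and scaling efficiency from measured throughput. The numbers in the example are made up for illustration and are not AIACC benchmark results.

```python
def speedup(throughput_1: float, throughput_n: float) -> float:
    """How many times faster the multi-GPU run is than the single-GPU run."""
    if throughput_1 <= 0:
        raise ValueError("single-GPU throughput must be positive")
    return throughput_n / throughput_1


def scaling_efficiency(throughput_1: float, throughput_n: float, n: int) -> float:
    """Fraction of ideal linear scaling achieved on n GPUs (1.0 = perfect)."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return speedup(throughput_1, throughput_n) / n


# Hypothetical measurements in images/second.
print(speedup(400, 2800))                # 7.0
print(scaling_efficiency(400, 2800, 8))  # 0.875
```

An efficiency close to 1.0 means communication between GPUs is not a bottleneck. Raising that number is what distributed training accelerators aim to do.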

Alibaba Cloud's GPU service is widely used across industries. Examples include VFX, the metaverse, and gaming for 3D rendering; enterprise businesses for AI deep learning and video/audio encoding and decoding; and machine learning for autonomous driving.

Recently, Alibaba Cloud has deployed a large number of instances with the latest GPU chipsets in the Seoul region. The Alibaba Cloud Korea team is committed to providing the best local services to enterprise customers in Korea.

If you have any further inquiries about Alibaba Cloud GPU service, please contact us at abckr@list.alibaba-inc.com.

More Posts by JJ Lim
