By Liu Dongdong, Technical Director of TAL AI Platform

Tomorrow Advanced Life (TAL) implements flexible resource scheduling through Alibaba Cloud's cloud-native architecture. Alibaba Cloud supports its AI platform with powerful technologies. This article is a summary of a speech delivered by Liu Dongdong, Technical Director of the TAL AI Platform, at the 2020 Apsara Conference.

In the AI era, enterprises face greater challenges in keeping their underlying IT resources abundant and agile. TAL leverages Alibaba Cloud's stable and elastic GPU cloud servers, its advanced GPU containerized sharing and isolation technologies, and its Kubernetes cluster management platform. Combined with a cloud-native architecture, these allow TAL to schedule resources flexibly and give its AI platform a strong technical foundation.

At the 2020 Apsara Conference, Liu Dongdong shared his understanding of AI cloud-native and the AI platform practice of TAL.

Hello everyone, I am Liu Dongdong, Technical Director of the TAL AI Platform. Today, I will deliver a speech entitled "A Brief Introduction to TAL AI Cloud-Native."

My speech is divided into four parts:

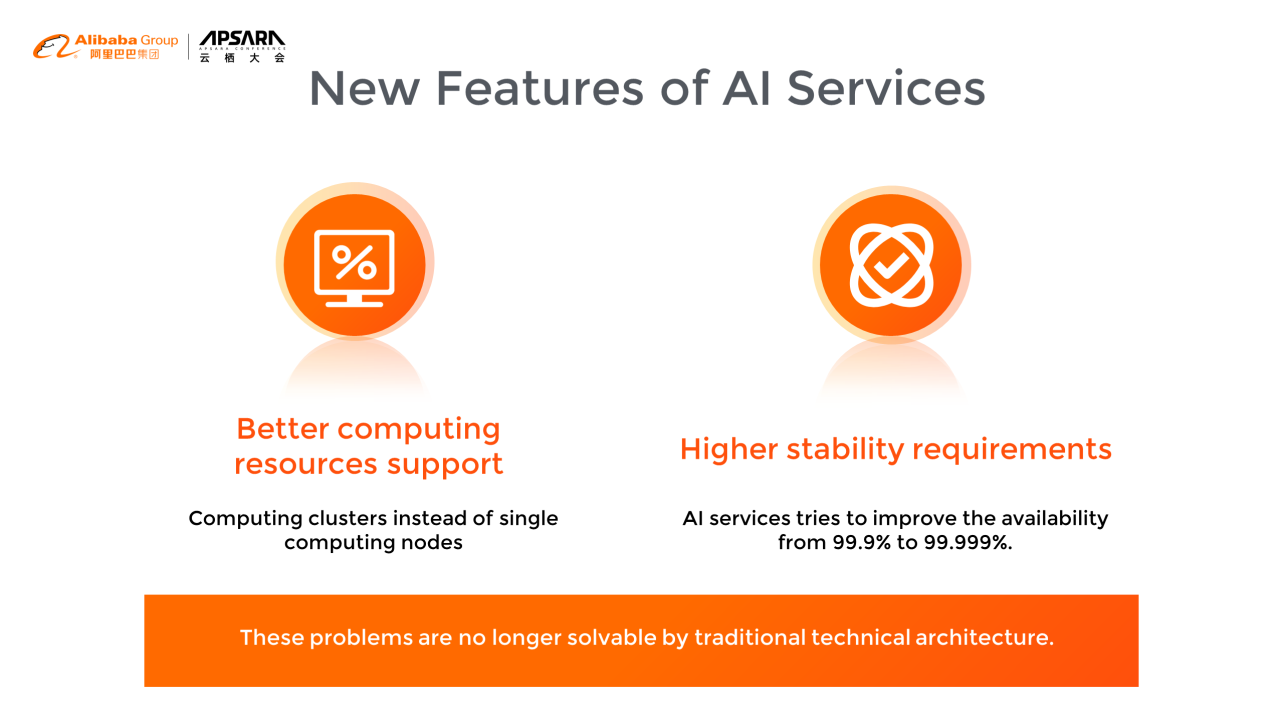

In the cloud-native era, what most distinguishes AI services is their need for greater computing resources and their higher stability requirements.

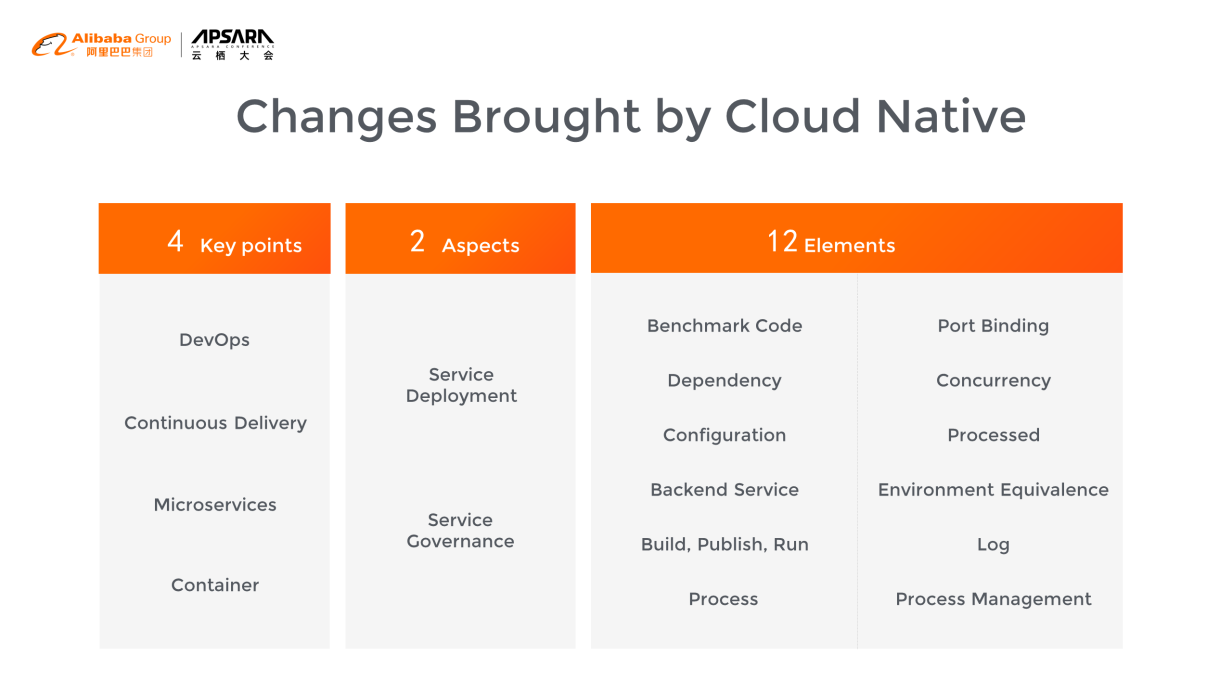

Services have evolved from single services to cluster services. The requirements for stability are also rising, from 99.9% to 99.999% availability. Traditional technical architecture can no longer solve these problems, so a new architecture is needed. That architecture is cloud-native architecture. To describe the changes brought by cloud-native, I will introduce four key points and two aspects.

The four key points are DevOps, continuous delivery, microservices, and containers. The two aspects are service deployment and service governance. In addition, 12 factors give an overall, systematic summary.

Today's focus is on service deployment and service governance. How can we manage both in the cloud-native era?

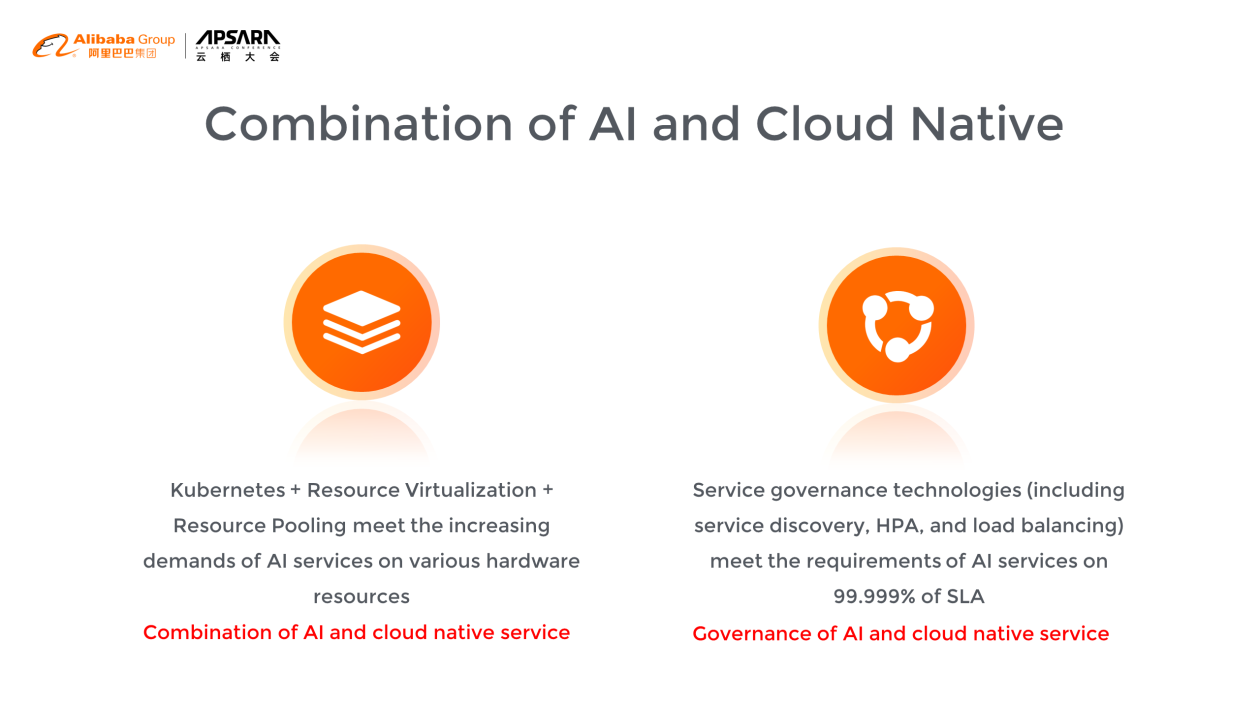

On the one hand, AI is combined with cloud-native service deployment, namely Kubernetes. Coupled with resource virtualization and resource pooling technologies, this meets the increasing demands of AI services for various hardware resources.

On the other hand, AI is combined organically with cloud-native service governance. Service governance technologies, such as service discovery, Horizontal Pod Autoscaler (HPA), and load balancing, are used to meet the 99.999% availability SLA required of AI services.
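To put the two availability targets in perspective, here is a small arithmetic sketch. It assumes a 365-day year and treats availability as a simple fraction of time; the function name is only illustrative.

```python
# Allowed downtime per year for a given availability target.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the yearly downtime budget, in minutes, for an availability target."""
    unavailable_fraction = 1.0 - availability_percent / 100.0
    return MINUTES_PER_YEAR * unavailable_fraction


for target in (99.9, 99.999):
    print(f"{target}% -> {allowed_downtime_minutes(target):.2f} minutes/year")
```

Under these assumptions, 99.9% leaves a budget of about 8.76 hours per year, while 99.999% leaves only about 5.26 minutes, which is why manual recovery is no longer enough.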

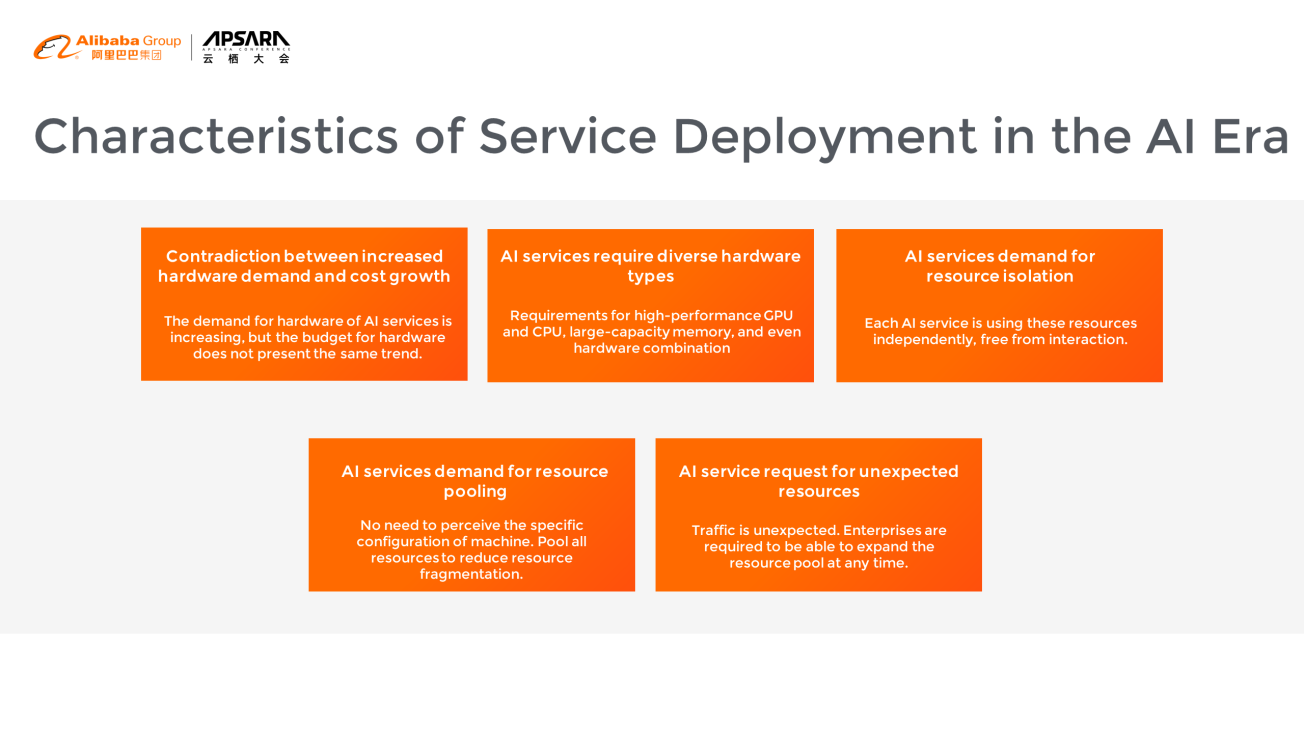

Firstly, there is a contradiction between increased hardware demand and cost growth. The demand for the hardware of AI services is increasing, but the hardware budget does not follow the same trend.

Secondly, AI services require diverse hardware types, such as high-performance GPUs and CPUs, large-capacity memory, and even a mix of these.

Thirdly, AI services demand resource isolation. Each AI service should be able to use its resources independently, without interfering with other services.

Fourthly, AI services demand resource pooling. AI services do not need to know the specific configuration of each machine. Once all of the resources are pooled, resource fragmentation can be reduced, and utilization can be improved.

Finally, AI services may need resources unexpectedly. Since traffic cannot be predicted, enterprises must be able to expand the resource pool at any time.

First, Docker virtualization technology is used to isolate resources. GPU sharing technology is used to pool GPU, memory, CPU, and other resources and manage all of them in a unified manner.

Finally, Kubernetes mechanisms, such as taints and tolerations, are used to deploy services flexibly.
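The following is a simplified sketch of how a toleration is matched against a taint, following the matching rules described in the Kubernetes documentation. It is not the scheduler's actual code, and the `hardware=gpu` taint is a hypothetical example rather than a key used by TAL.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if a single toleration matches a single taint."""
    # An empty effect on the toleration matches every effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    operator = toleration.get("operator", "Equal")
    key = toleration.get("key")
    if operator == "Exists":
        # An empty key with Exists matches every taint key.
        return not key or key == taint["key"]
    # operator == "Equal": key and value must both match.
    return key == taint["key"] and toleration.get("value") == taint.get("value")


def can_schedule(pod_tolerations: list, node_taints: list) -> bool:
    """A pod fits a node only if every blocking taint is tolerated."""
    blocking = [t for t in node_taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, taint) for tol in pod_tolerations) for taint in blocking)


# Hypothetical GPU node that only accepts pods tolerating hardware=gpu.
gpu_node_taints = [{"key": "hardware", "value": "gpu", "effect": "NoSchedule"}]
ocr_tolerations = [{"key": "hardware", "operator": "Equal",
                    "value": "gpu", "effect": "NoSchedule"}]

print(can_schedule(ocr_tolerations, gpu_node_taints))  # GPU service
print(can_schedule([], gpu_node_taints))               # CPU-only service
```

Tainting GPU nodes in this way keeps CPU-only services off expensive hardware, while services that declare the toleration remain free to land there.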

It's also recommended to purchase high-configuration machines, which could further reduce fragmentation.
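A toy first-fit placement illustrates why larger machines can reduce fragmentation. The request sizes and node capacities below are made-up numbers in arbitrary resource units, and real schedulers use more elaborate placement strategies.

```python
def first_fit(requests, node_capacities):
    """Place each request on the first node with enough free capacity.

    Returns (placed_count, free_capacity_per_node).
    """
    free = list(node_capacities)
    placed = 0
    for need in requests:
        for i, available in enumerate(free):
            if need <= available:
                free[i] = available - need
                placed += 1
                break
    return placed, free


requests = [5] * 6           # six services, each asking for 5 units (hypothetical)
small_nodes = [8, 8, 8, 8]   # 32 units spread over four small machines
large_nodes = [16, 16]       # the same 32 units on two larger machines

print(first_fit(requests, small_nodes))
print(first_fit(requests, large_nodes))
```

With the same total capacity, the four small nodes each strand 3 units and fit only four services, while the two large nodes fit all six.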

Cluster hardware monitoring is also necessary to make full use of the complex scheduling features of ECS. By doing so, we can cope with traffic peaks. The Cron in the following figure is a time-based scheduling task:

Now, I'd like to explain how the TAL AI platform solves these AI deployment problems.

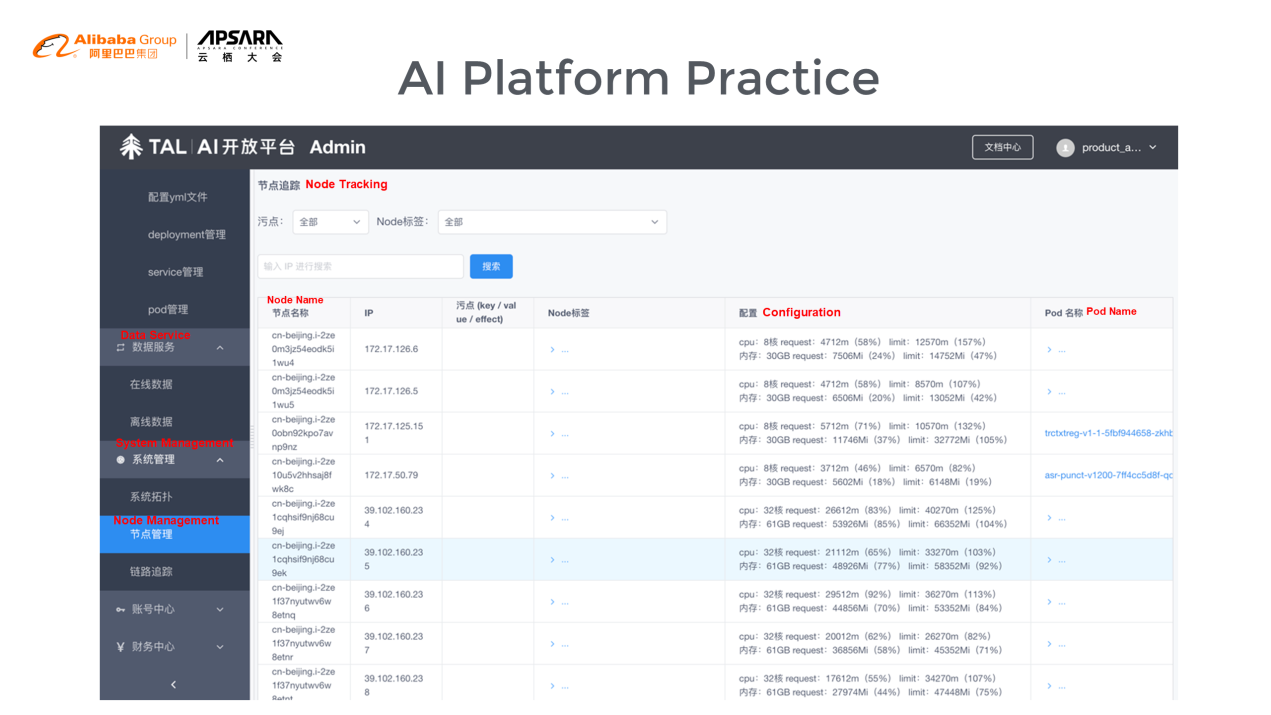

This page shows service management at the node level. It displays information about each server, including resource usage and the pods and nodes to be deployed.

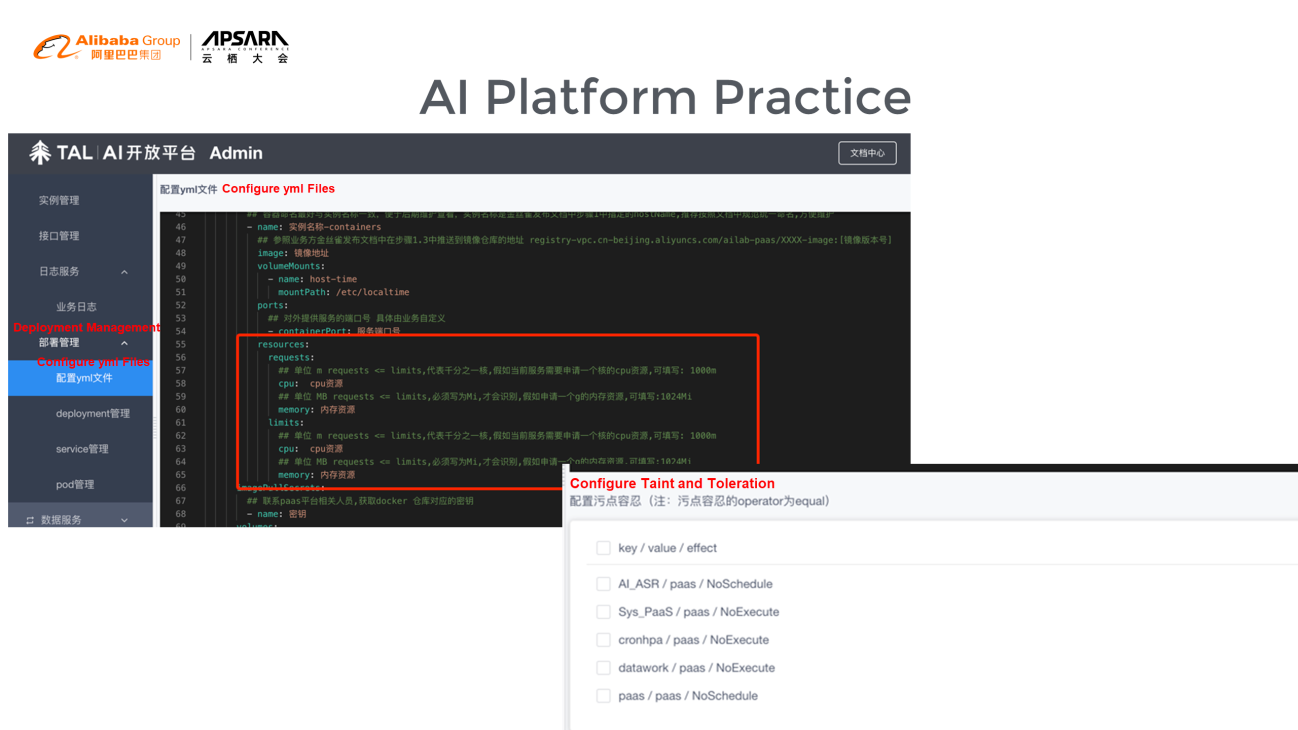

The next page is the service deployment page in the AI platform. The memory, CPU, and GPU usage of each pod can be precisely controlled by compressing files. In addition, mechanisms such as taints enable diversified deployment across servers.

According to comparative experiments, cloud-native deployment reduces costs by 65% compared with self-managed deployment. Moreover, as AI clusters grow, the benefits increase, both in cost savings and in handling temporary traffic spikes.
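As an illustration of per-pod resource control, the sketch below builds a Pod manifest as a plain Python dictionary. The service name, image, and taint key are hypothetical, and `nvidia.com/gpu` is the resource name exposed by the NVIDIA device plugin; a GPU-sharing setup would typically use a different, plugin-specific resource name.

```python
import json


def build_pod_manifest(name, image, cpu, memory, gpus=0, tolerations=None):
    """Build a Pod manifest as a plain dict (serializable to JSON for kubectl)."""
    limits = {"cpu": cpu, "memory": memory}
    if gpus:
        # Whole-GPU resource name from the NVIDIA device plugin (an assumption here).
        limits["nvidia.com/gpu"] = gpus
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                # Requests equal to limits place the pod in the Guaranteed QoS class.
                "resources": {"requests": dict(limits), "limits": limits},
            }],
            "tolerations": tolerations or [],
        },
    }


manifest = build_pod_manifest(
    name="ocr-service",                    # hypothetical service name
    image="registry.example.com/ocr:1.0",  # hypothetical image
    cpu="4", memory="8Gi", gpus=1,
    tolerations=[{"key": "hardware", "operator": "Equal",
                  "value": "gpu", "effect": "NoSchedule"}],
)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since Kubernetes accepts manifests in JSON as well as YAML.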

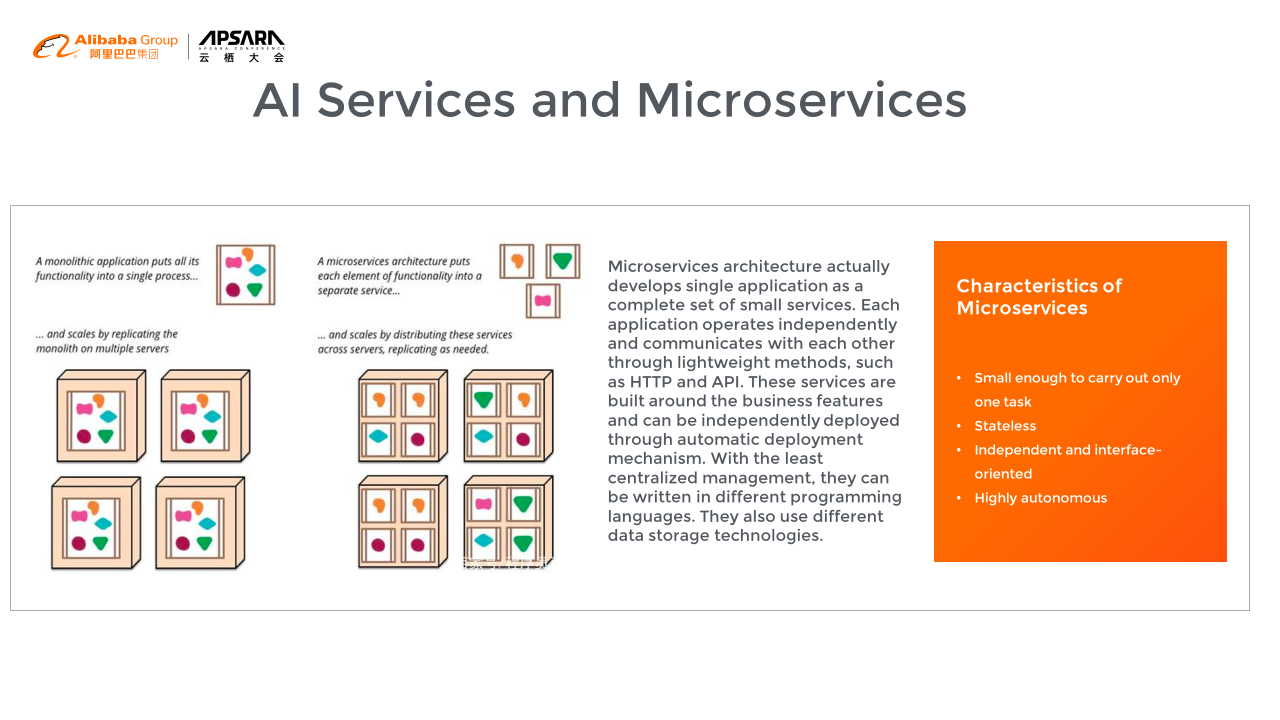

What are microservices? Microservices are an architectural style in which a single application is developed as a suite of small services. Each service runs independently and communicates with the others through lightweight mechanisms, such as HTTP APIs.

These services are built around business capabilities and can be centrally managed through automated deployment. They can also be written in different programming languages and use different storage resources.

What are the characteristics of microservices?

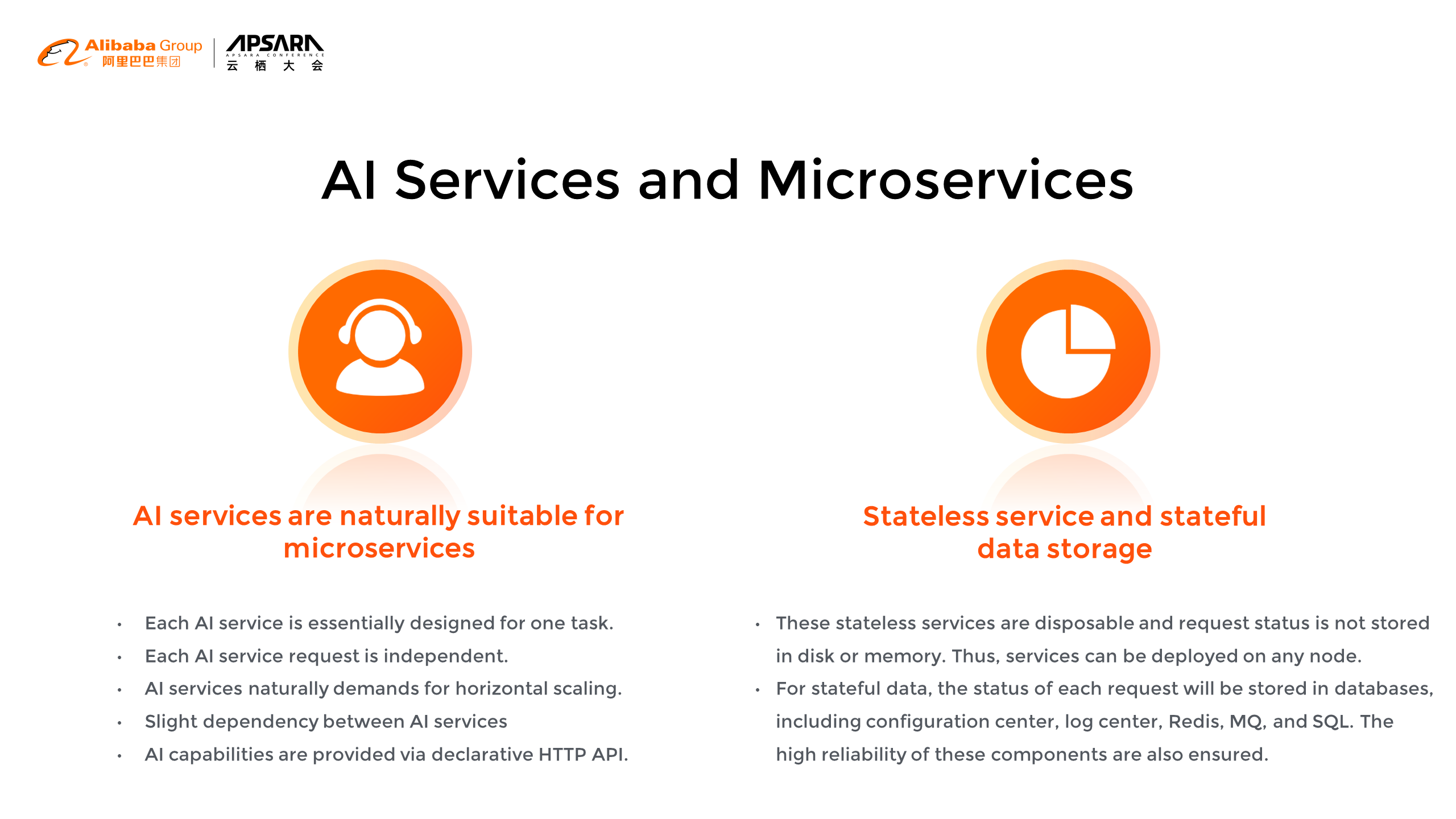

AI services are naturally suitable for microservices. Each microservice is designed for one task. For example, OCR only provides OCR services, and ASR mainly provides ASR services.

In addition, each AI service request is independent. One OCR request is unrelated to another. AI services naturally demand horizontal scaling because they consume vast resources, which makes scaling essential. AI services also have few dependencies on each other. For example, an OCR service has little need for an NLP service or other AI services.

All AI services can provide AI capabilities through declarative HTTP interfaces or APIs. However, not all AI services can be transformed into microservices directly. So, what are the solutions?

First, AI services need to be stateless. Stateless services are disposable and do not store request state on disk or in memory. Thus, they can be deployed on any node.

However, not all services can be stateless. For stateful services, the state of each request is stored in external components, such as the configuration center, log center, Redis, MQ, and SQL databases. The high reliability of these components must be ensured as well.
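A minimal sketch of this idea follows. The `InMemoryStore` class is a hypothetical stand-in for an external store such as Redis, and `AsrReplica` is an invented example service, not TAL's implementation. Because the replicas keep no session state of their own, any replica can serve any request.

```python
class InMemoryStore:
    """Stand-in for an external store such as Redis (hypothetical, for illustration)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def set(self, key, value):
        self._data[key] = value


class AsrReplica:
    """One replica of a hypothetical streaming ASR service.

    The replica holds no per-session state; everything lives in the shared store.
    """

    def __init__(self, store):
        self._store = store

    def handle_chunk(self, session_id: str, text_chunk: str) -> str:
        transcript = self._store.get(session_id, "")
        transcript = (transcript + " " + text_chunk).strip()
        self._store.set(session_id, transcript)
        return transcript


store = InMemoryStore()
replica_a, replica_b = AsrReplica(store), AsrReplica(store)

replica_a.handle_chunk("session-1", "hello")
# The next chunk of the same session can land on a different replica.
print(replica_b.handle_chunk("session-1", "world"))
```

The trade-off is that the external store becomes a shared dependency, which is why its reliability matters as much as that of the services themselves.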

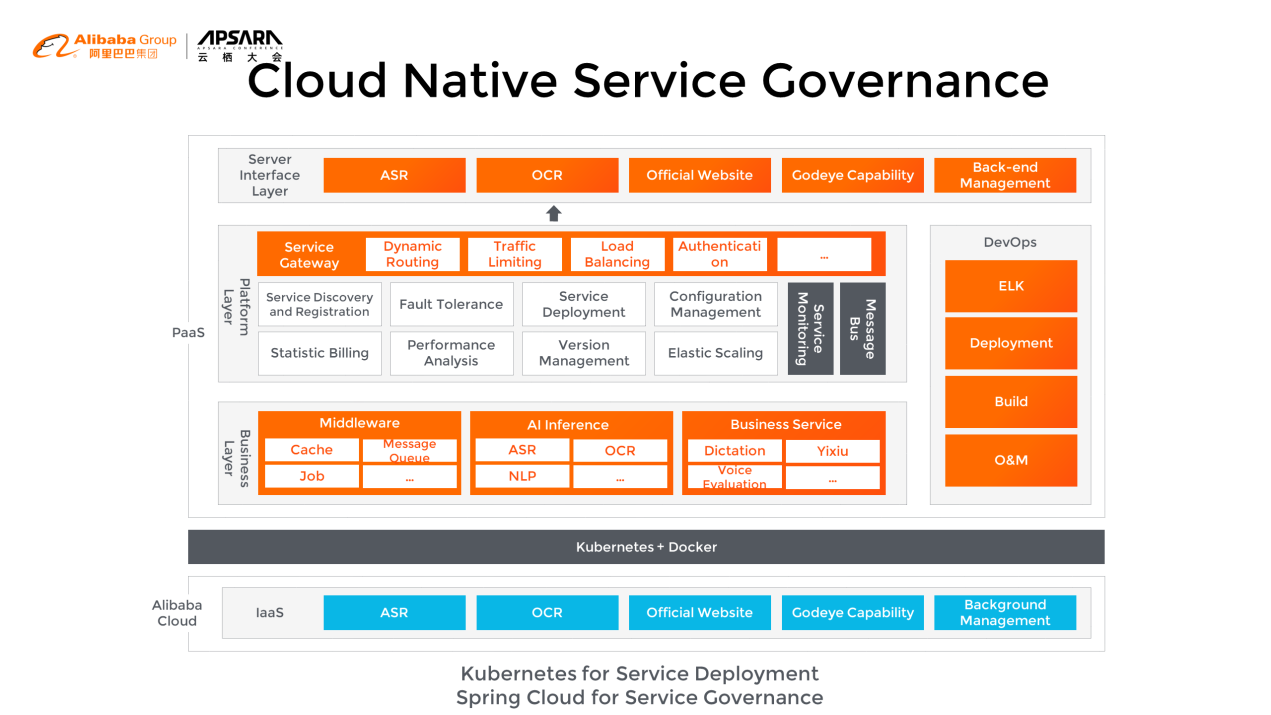

This is the overall architecture diagram of the TAL AI platform. The upper layer is the service interface layer, which provides AI capabilities for the external environment.

The most important part of the platform layer is the service gateway, which is mainly responsible for dynamic routing, traffic control, load balancing, and authentication. The platform layer also provides other functions, such as service discovery and registration, fault tolerance, configuration management, and elastic scaling.

Below is the business layer, which provides AI inference services. In the bottom layer, there are Kubernetes clusters provided by Alibaba Cloud. In short, Kubernetes is responsible for service deployment, and SpringCloud is responsible for service governance in the architecture.

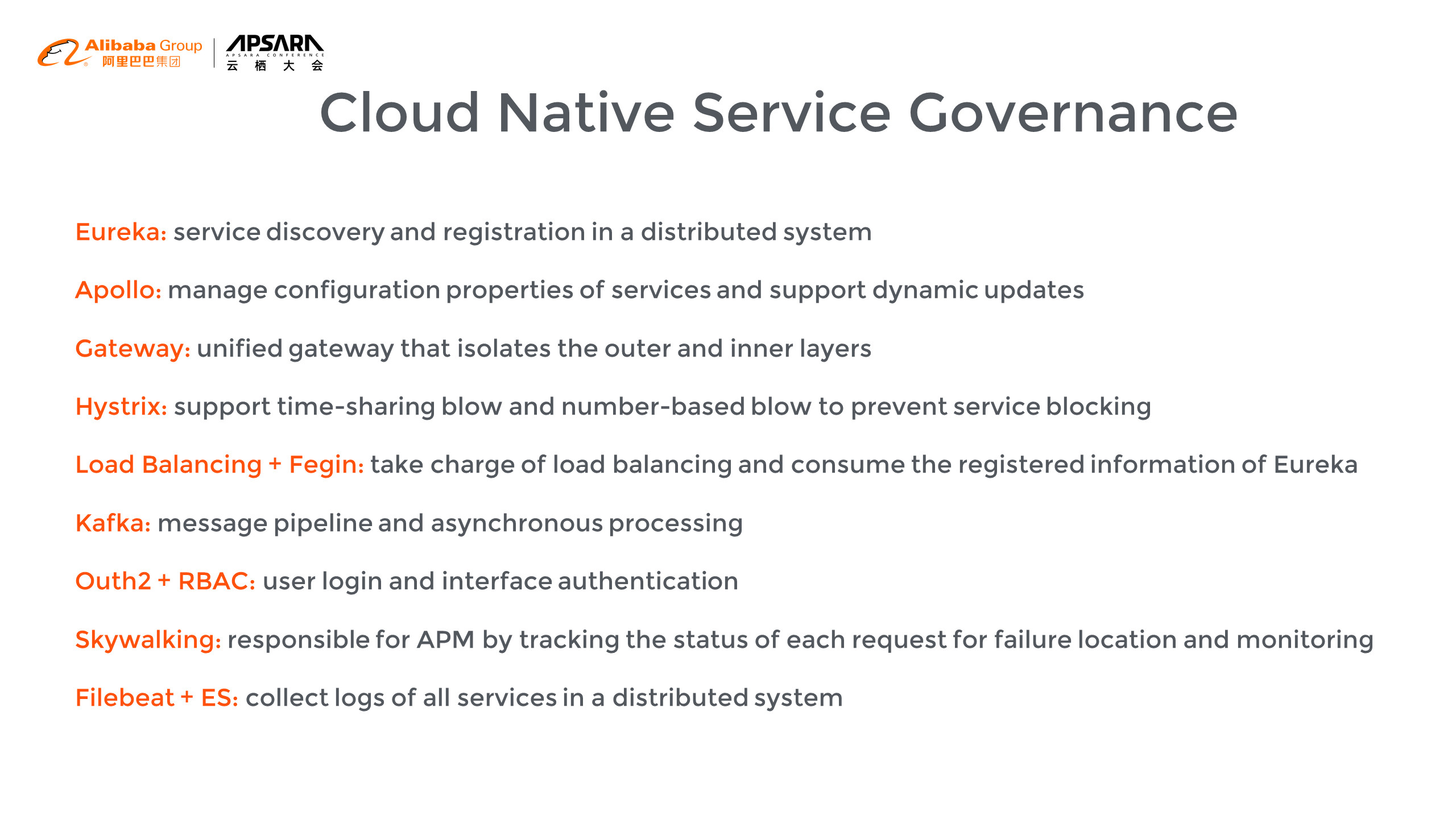

Eureka serves as the registry for service discovery and registration in the distributed system. The configuration center, Apollo, manages the configuration properties of the servers and supports dynamic updates. The gateway isolates the inner and outer layers. Hystrix mainly provides time-based and count-based circuit breaking to prevent services from being blocked.

The load balancer and Feign balance the overall traffic and consume the registration information held in Eureka. The message bus, Kafka, is an asynchronous processing component. Authentication is carried out through OAuth2 and RBAC, which manage authentication for user logins and interfaces and ensure security and reliability.

Tracing analysis uses SkyWalking to track the status of each request, which supports request locating and monitoring with an APM architecture. The log system collects logs from the entire cluster in a distributed manner through Filebeat and Elasticsearch (ES).
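To illustrate the count-based variant, here is a deliberately simplified circuit breaker. It is not Hystrix's algorithm, which works on rolling-window statistics; the class name, thresholds, and injectable clock are assumptions made for this sketch.

```python
import time


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures, then rejects calls
    until `reset_after` seconds have passed."""

    def __init__(self, max_failures=3, reset_after=5.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at = None

    def call(self, func, fallback):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                return fallback()      # open: fail fast without calling the service
            self._opened_at = None     # half-open: allow one trial call
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            return fallback()
        self._failures = 0             # a success closes the breaker again
        return result


def flaky_ocr():
    raise RuntimeError("OCR backend unavailable")  # simulated outage


breaker = CircuitBreaker(max_failures=2, reset_after=30.0)
for _ in range(3):
    print(breaker.call(flaky_ocr, fallback=lambda: "degraded response"))
```

Once the breaker is open, callers get the fallback immediately instead of queuing behind a failing backend, which is how this pattern keeps one blocked service from blocking its callers.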
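The interplay between a registry and client-side load balancing can be sketched as follows. The in-memory `Registry` is a stand-in for a real registry such as Eureka, round-robin is only one possible balancing rule, and the addresses are invented.

```python
class Registry:
    """Minimal in-memory service registry (a stand-in for a real registry)."""

    def __init__(self):
        self._instances = {}

    def register(self, service, address):
        self._instances.setdefault(service, []).append(address)

    def instances(self, service):
        return list(self._instances.get(service, []))


class RoundRobinClient:
    """Looks up instances in the registry and rotates through them."""

    def __init__(self, registry):
        self._registry = registry
        self._counters = {}

    def choose(self, service):
        instances = self._registry.instances(service)
        if not instances:
            raise LookupError(f"no instances registered for {service!r}")
        index = self._counters.get(service, 0)
        self._counters[service] = index + 1
        return instances[index % len(instances)]


registry = Registry()
registry.register("ocr", "10.0.0.1:8080")  # hypothetical addresses
registry.register("ocr", "10.0.0.2:8080")

client = RoundRobinClient(registry)
print([client.choose("ocr") for _ in range(4)])
```

Because the client reads the registry on every call, instances that register later are picked up without any change to the caller.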

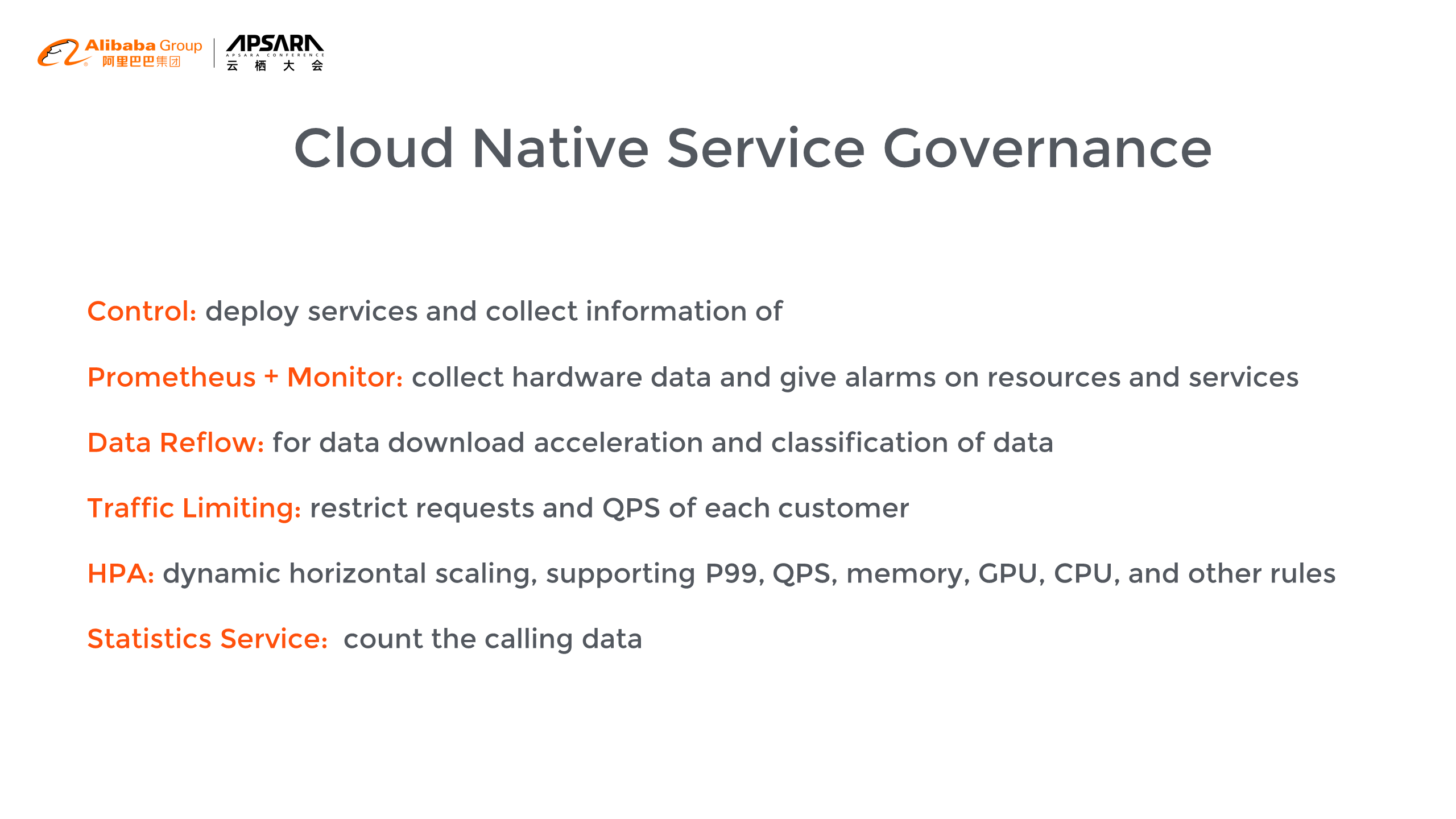

Other services have also been developed, such as deployment and control services. These services mainly communicate with Kubernetes and collect service deployment information and related hardware information from the Kubernetes clusters.

Based on Prometheus and Monitor, the monitoring system collects hardware data and handles alarms related to resources and services. The data service and data reflow are mainly used for data downloads and data interception in our inference scenarios. The traffic limiting service restricts the number of requests and the Queries Per Second (QPS) of each customer. HPA is the most important part. It supports scaling rules based on memory and CPU, as well as rules based on P99, QPS, GPU, and other related metrics. The statistics service is mainly used to count calls, such as the number of requests.
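Per-customer QPS limiting is commonly implemented with a token bucket. The sketch below shows that general technique under assumed limits; the talk does not say which algorithm the platform uses, and the class names and customer IDs are hypothetical.

```python
import time


class TokenBucket:
    """Allows `qps` requests per second on average, with bursts up to `burst`."""

    def __init__(self, qps, burst, clock=time.monotonic):
        self.qps = qps
        self.burst = burst
        self._clock = clock
        self._tokens = float(burst)
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, capped at the burst size.
        self._tokens = min(self.burst, self._tokens + (now - self._last) * self.qps)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False


class CustomerLimiter:
    """Keeps one bucket per customer so that limits are enforced independently."""

    def __init__(self, qps, burst, clock=time.monotonic):
        self._qps, self._burst, self._clock = qps, burst, clock
        self._buckets = {}

    def allow(self, customer_id) -> bool:
        bucket = self._buckets.get(customer_id)
        if bucket is None:
            bucket = self._buckets[customer_id] = TokenBucket(
                self._qps, self._burst, self._clock)
        return bucket.allow()


limiter = CustomerLimiter(qps=2, burst=2)
print([limiter.allow("customer-a") for _ in range(3)])  # third call exceeds the burst
print(limiter.allow("customer-b"))                      # other customers are unaffected
```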

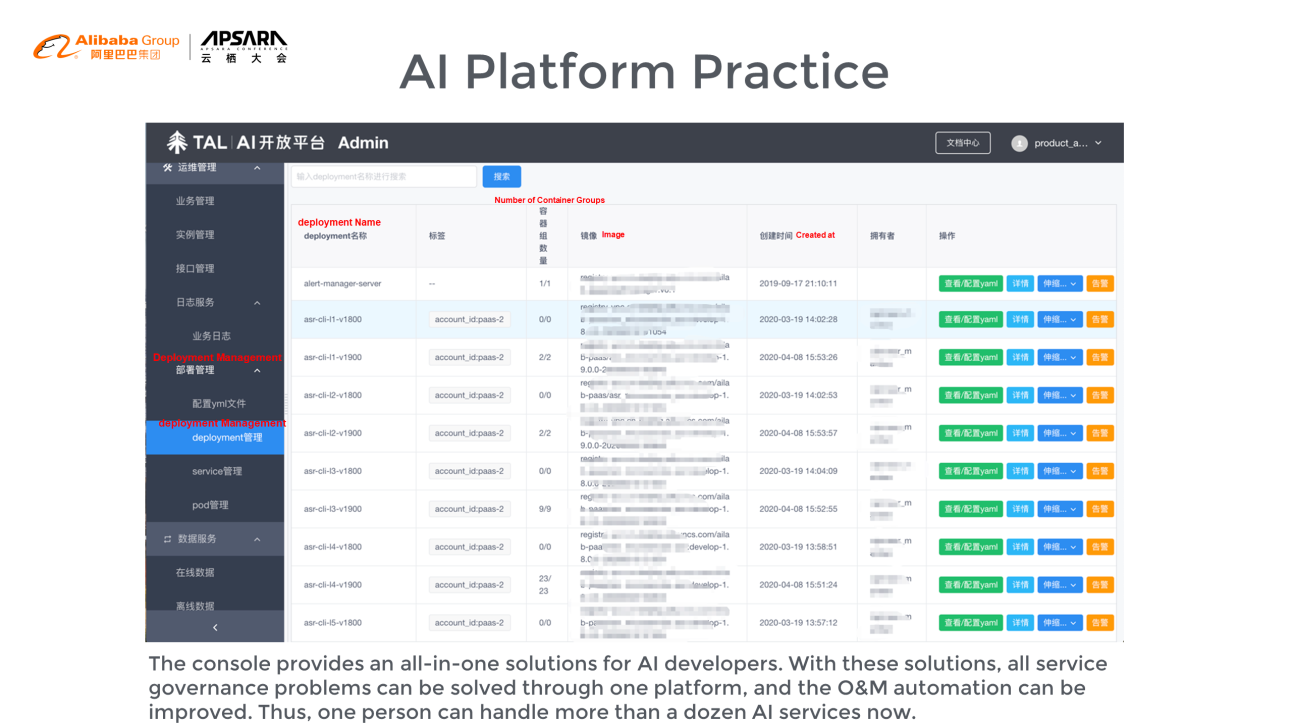

A unified console gives AI developers an all-in-one solution, so they can solve all of their service governance problems on one platform. This improves O&M automation. One person can now handle more than a dozen AI services.

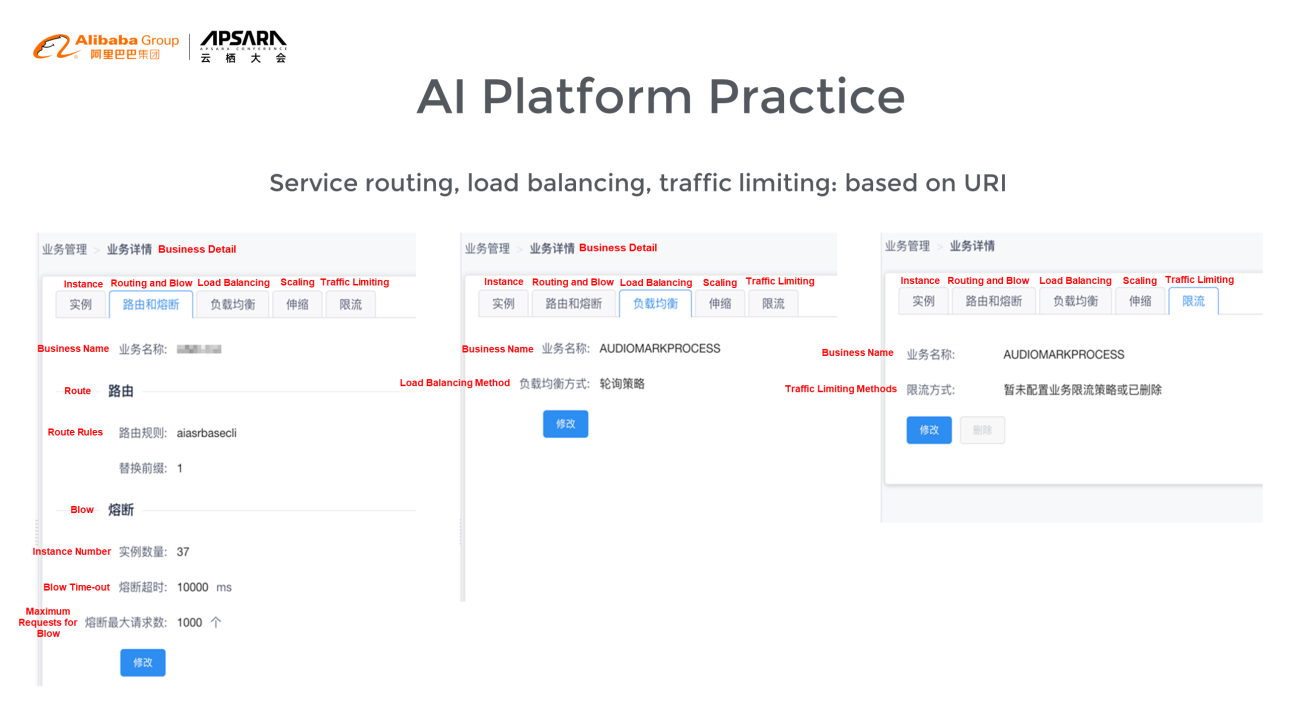

This figure shows the configuration pages of service routing, load balancing, and traffic limiting:

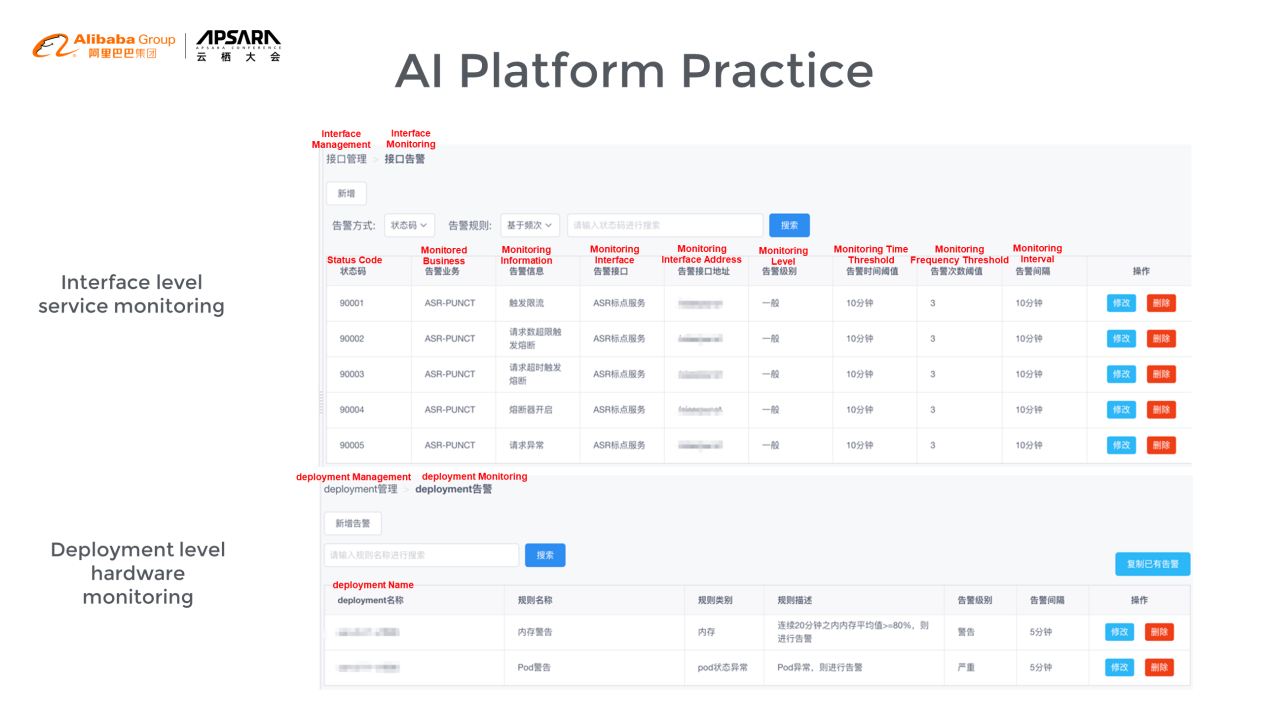

This figure shows the interface-level service monitoring and deployment-level hardware monitoring:

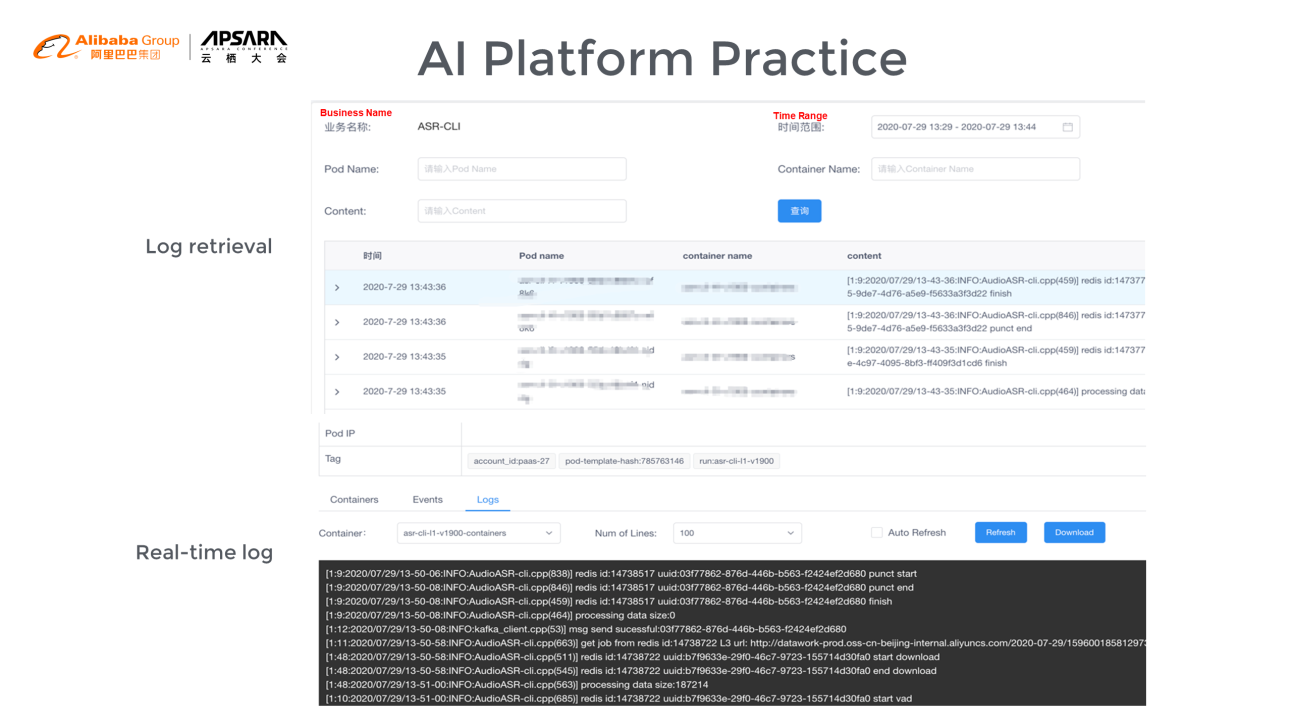

This page shows log retrieval and real-time logs:

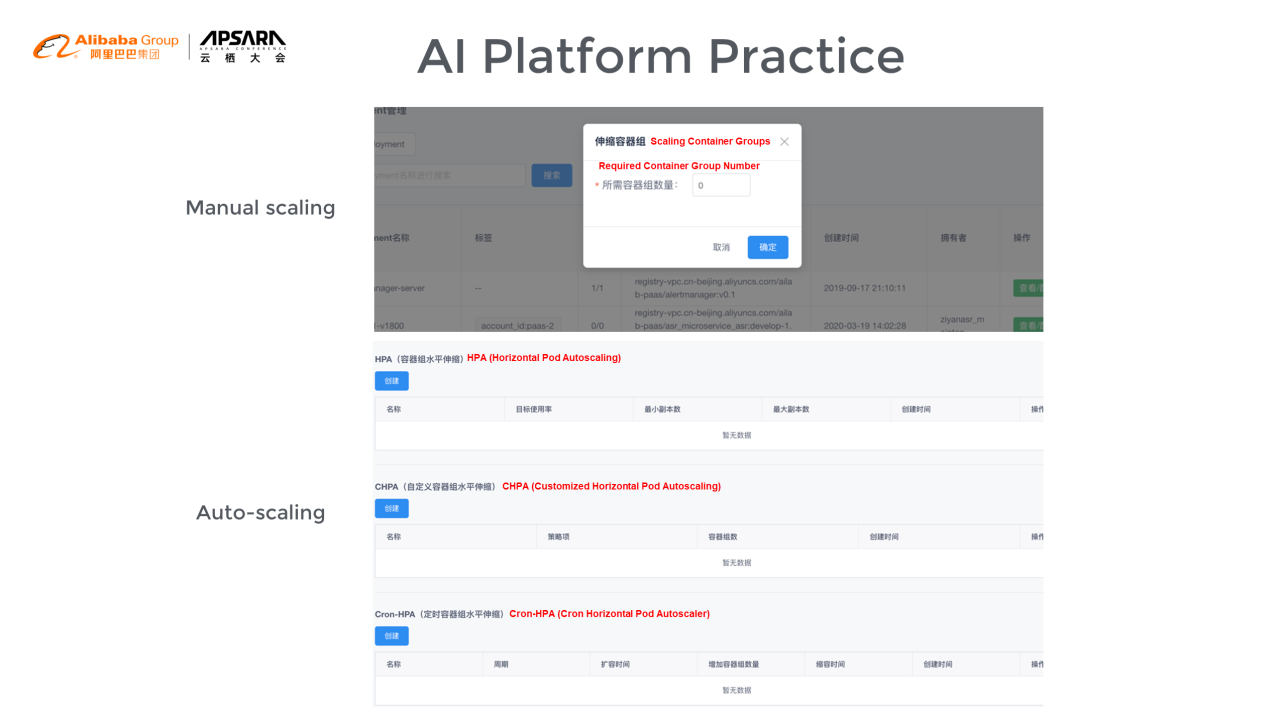

This page shows manual scaling and auto-scaling operations. Auto-scaling includes CPU-level and memory-level HPA, HPA based on response time, and timed HPA.
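For reference, the Kubernetes documentation describes the core HPA calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies that formula; the replica bounds and metric values are made-up examples, and the real controller handles further cases such as missing metrics and pods that are not ready.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the basic HPA formula and clamp the result to the allowed range."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: do not scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical example: 4 replicas, target of 60% CPU utilization.
print(desired_replicas(4, current_metric=90, target_metric=60))  # scale out
print(desired_replicas(4, current_metric=62, target_metric=60))  # within tolerance
print(desired_replicas(4, current_metric=30, target_metric=60))  # scale in
```

The same formula applies whether the metric is CPU, memory, QPS, or response time; only the metric source and the target value change.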

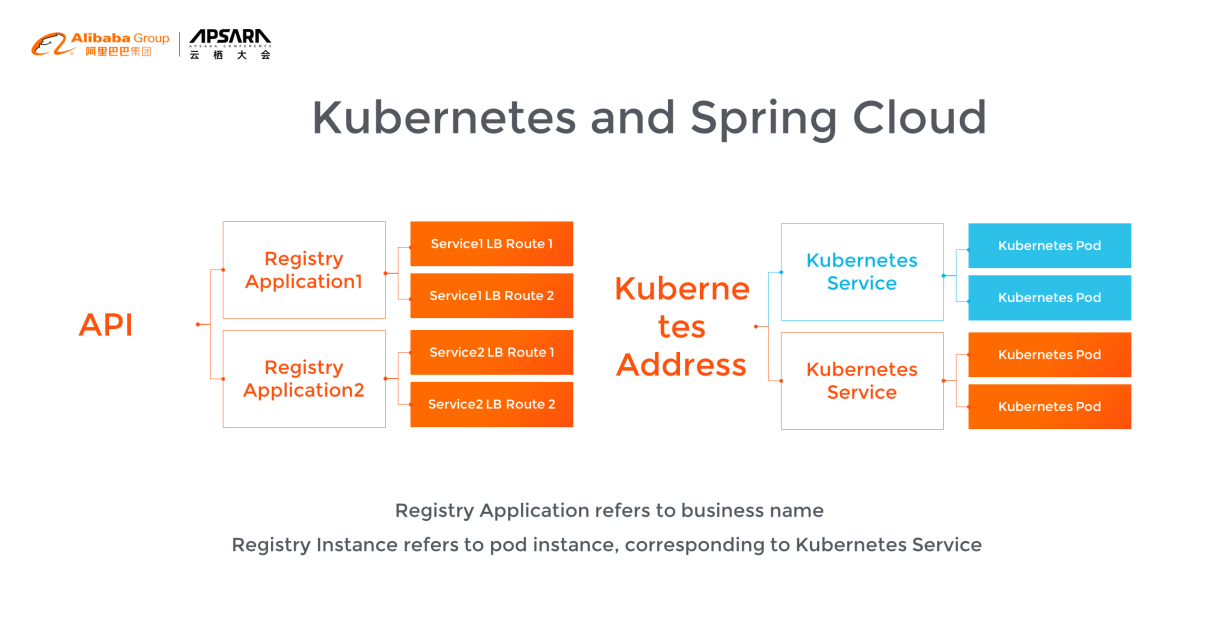

Now, let me introduce the organic combination of Kubernetes and SpringCloud.

As shown above, the left part is the diagram from the SpringCloud data center to Route, while the right part is from the Kubernetes Service to Pod.

The two diagrams are very similar in structure. How did we achieve that? Each SpringCloud application is bound to a Kubernetes Service. In other words, the load balancer (LB) address registered in SpringCloud is replaced with the address of the Kubernetes Service. This combines Kubernetes and SpringCloud at the route level and produces the final result.
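One way to picture this binding is a registration record whose host is the Kubernetes Service's cluster DNS name rather than an individual pod address. The sketch assumes the default cluster domain `cluster.local`; the record layout, application name, and namespace are hypothetical and do not reflect the registry's real schema.

```python
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Return the in-cluster DNS name of a Kubernetes Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def registration_record(app_name: str, k8s_service: str, namespace: str, port: int) -> dict:
    """Build a registry entry that points at the Service instead of a single pod."""
    return {
        "app": app_name,
        "host": service_dns_name(k8s_service, namespace),
        "port": port,
    }


# Hypothetical OCR application exposed by a Service named "ocr" in namespace "ai".
print(registration_record("OCR-SERVICE", "ocr", "ai", 8080))
```

With this arrangement, the registry routes a request to the application, and Kubernetes then balances it across the pods behind the Service, so pod churn never has to be reflected in the registry.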

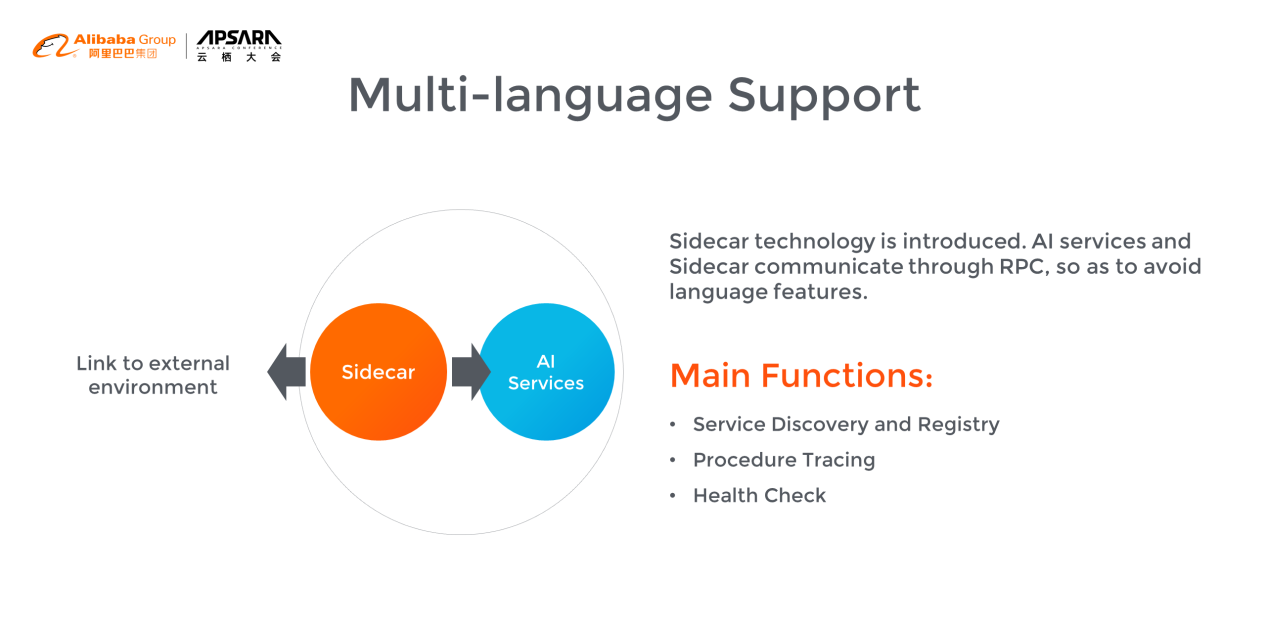

SpringCloud is a Java technology stack, while AI services are written in diverse languages, including C++, Java, and even PHP. To support multiple languages and avoid language-specific constraints, sidecar technology was introduced to communicate with AI services through RPC. The sidecar provides the following functions: application service discovery and registration, route tracing, tracing analysis, and health checks.
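The registration and health-check duties of a sidecar can be sketched as below. This is an illustration only: the registry is a plain dictionary, the health check is any callable, and the class is not the Spring Cloud sidecar itself. In practice, the check would be an RPC or HTTP probe against the co-located AI service.

```python
class Sidecar:
    """Registers a non-Java service on its behalf and keeps the
    registration in sync with the service's health."""

    def __init__(self, app_name, address, health_check, registry):
        self.app_name = app_name
        self.address = address
        self._health_check = health_check  # callable returning True when healthy
        self._registry = registry          # dict: app name -> set of addresses

    def sync(self) -> bool:
        """Run one health check and update the registry; return the health result."""
        try:
            healthy = bool(self._health_check())
        except Exception:
            healthy = False                # a failing probe counts as unhealthy
        instances = self._registry.setdefault(self.app_name, set())
        if healthy:
            instances.add(self.address)
        else:
            instances.discard(self.address)
        return healthy


registry = {}
state = {"up": True}  # simulated health of a hypothetical C++ ASR service
sidecar = Sidecar("asr", "10.0.0.5:9000", lambda: state["up"], registry)

sidecar.sync()
print(registry)   # the service is registered while healthy
state["up"] = False
sidecar.sync()
print(registry)   # and removed once the health check fails
```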

Thank you very much for listening to my speech!
