By Alex, Alibaba Cloud Community Blog author

Currently, there's huge excitement in the data science community as a growing number of technology companies compete to release machine learning products. For example, the iPhone X launch created a lot of buzz with features such as Face ID, Animoji, and augmented reality, all of which rely on machine learning. If you are a data scientist, you may wonder how to build such systems. Core ML, Apple's machine learning framework for developers, answers this question well. Core ML works across Apple's platforms, from iPhone to Apple TV and Apple Watch.

Furthermore, the new A11 Bionic chip includes a Neural Engine, which is dedicated hardware for machine learning tasks. It also includes Apple's first custom-designed graphics processing unit (GPU), and it powers the latest iPhone models. Together, these open up a whole new range of machine learning possibilities on Apple devices and are likely to spur creativity, innovation, and productivity. Core ML marks such a turning point that it deserves a closer look. This article examines how the framework works and why it is becoming so important, explains how to convert and integrate a model, and discusses the framework's merits and drawbacks.

Source: Apple.com

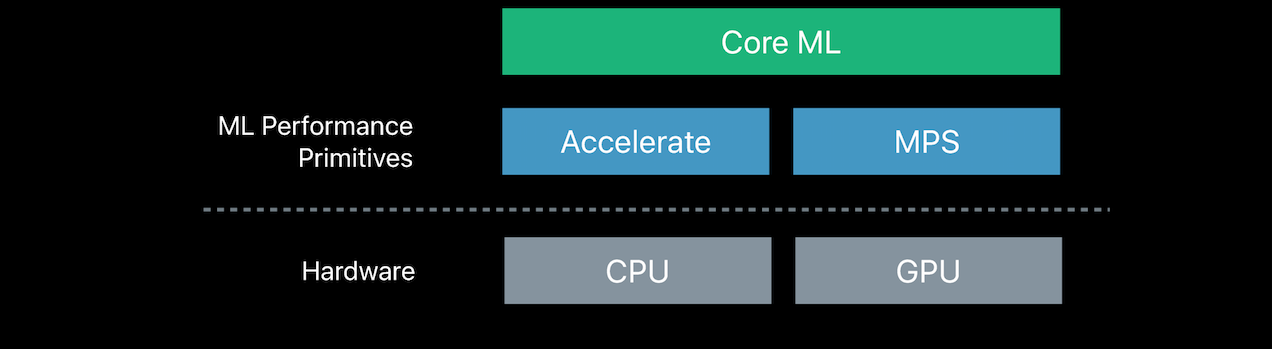

Simply put, the Core ML framework enables developers to integrate trained machine learning models into iOS applications. Core ML runs on both the CPU and the GPU, building on Apple's lower-level Accelerate and Metal frameworks. Because the models run on the device itself, data can be analyzed locally without being sent to a server. The trade-off is that on-device models are generally more limited in size and complexity than cloud-based ones.

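Before Core ML, Apple provided two main machine learning libraries for its devices:

1) Accelerate and Basic Neural Network Subroutines (BNNS), optimized for the CPU.

2) Metal Performance Shaders (MPS), optimized for the GPU.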

The two frameworks were optimized for different hardware, one for the CPU and the other for the GPU. This choice matters. Depending on the model, inference can sometimes be faster on the CPU, whereas training is generally faster on the GPU. However, many developers found these frameworks confusing to use. Because they are closely tied to the hardware, they are also not easy to program.

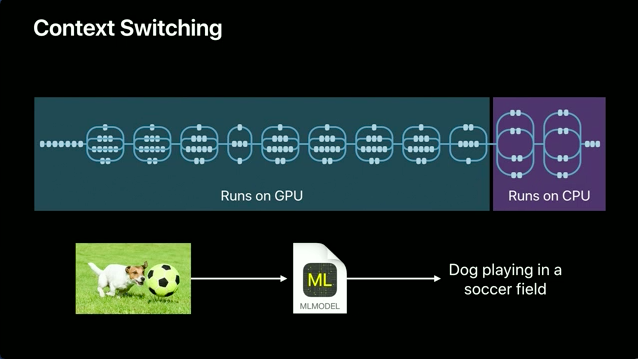

Both libraries are still available, and Core ML is a higher-level abstraction built on top of them. Its interface is easier to work with, and you do not have to choose between the CPU and the GPU yourself. Typically, memory-intensive workloads such as natural language processing run on the CPU. Compute-intensive workloads such as image processing and recognition run on the GPU. Core ML switches between the two automatically and takes the specific needs of your app into account.

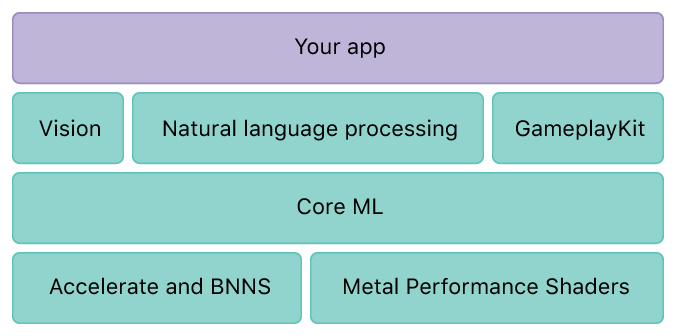

The following three libraries are built on top of Core ML and provide much of its functionality:

1) Vision: This library supports face detection, feature detection, and the classification of image and video scenes. It is based on computer vision techniques and high-performance image processing.

2) Foundation (NLP): This library incorporates the tools to enable natural language processing in iOS apps.

3) GameplayKit: This kit uses learned decision trees for game logic and other artificial intelligence needs.

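Apple has done a great deal of work to make these libraries easy to integrate into apps. Placing them in the Core ML architecture produces a layered structure. Your app sits on top of Vision, Foundation (NLP), and GameplayKit. These libraries rely on Core ML, which in turn runs on Accelerate and BNNS and on Metal Performance Shaders.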

Integrating Core ML into this structure makes iOS applications more modular and scalable. Because the architecture is layered, you can work at whichever level suits your app. For more information about these libraries, refer to Apple's developer documentation.

Now, let's learn some basic practical concepts of Core ML.

The following are the requirements for setting up a simple Core ML project.

1) Operating system: macOS Sierra (10.12) or later.

2) Programming language: Python 2.7 for Mac, plus pip. Install pip from the command line:

```shell
sudo easy_install pip
```

3) Core ML Tools (coremltools): This package converts machine learning models trained with Python frameworks into a format that Core ML can read. Install it with the following command:

```shell
sudo pip install -U coremltools
```

4) Xcode 9: Apple's development environment for building iOS applications, available from Apple's developer website. Sign in with your Apple ID to download it.

Verify your identity using the six-digit code sent to your Apple device. After verification, you receive a link to download Xcode.
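You can check the requirements above with a script before you start. The following is a minimal sketch written for Python 3, unlike the Python 2.7 listed above. It compares the macOS version reported by `platform.mac_ver()` against Sierra (10.12) and reports whether `coremltools` can be found without importing it. The helper names `parse_version`, `meets_minimum`, and `check_environment` are hypothetical and exist only for this illustration.

```python
import platform
import sys
from importlib.util import find_spec
from typing import Tuple

MIN_MACOS = (10, 12)  # macOS Sierra, the minimum listed above


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted version string such as '10.13.6' into (10, 13, 6)."""
    return tuple(int(part) for part in text.split(".") if part.isdigit())


def meets_minimum(version: str, minimum: Tuple[int, ...]) -> bool:
    """Compare versions as tuples; an empty or unparsable string fails the check."""
    parsed = parse_version(version)
    return bool(parsed) and parsed >= minimum


def check_environment() -> dict:
    """Report the requirement checks; mac_ver() returns '' on non-macOS systems."""
    mac_version = platform.mac_ver()[0]
    return {
        "macos_ok": meets_minimum(mac_version, MIN_MACOS),
        "python": ".".join(str(part) for part in sys.version_info[:3]),
        # find_spec locates the package without importing it
        "coremltools_installed": find_spec("coremltools") is not None,
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

On a non-Mac system, `platform.mac_ver()` returns an empty version string. The check then reports `macos_ok: False` instead of raising an error.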

Now, let's look at how to convert trained machine learning models to Core ML standards.

Converting a trained machine learning model transforms it into a format that Core ML can use. Apple provides Core ML Tools for this purpose. Third-party converters, such as the MXNet and TensorFlow converters, also work well. You can even build your own converter, as long as its output follows the Core ML model format.

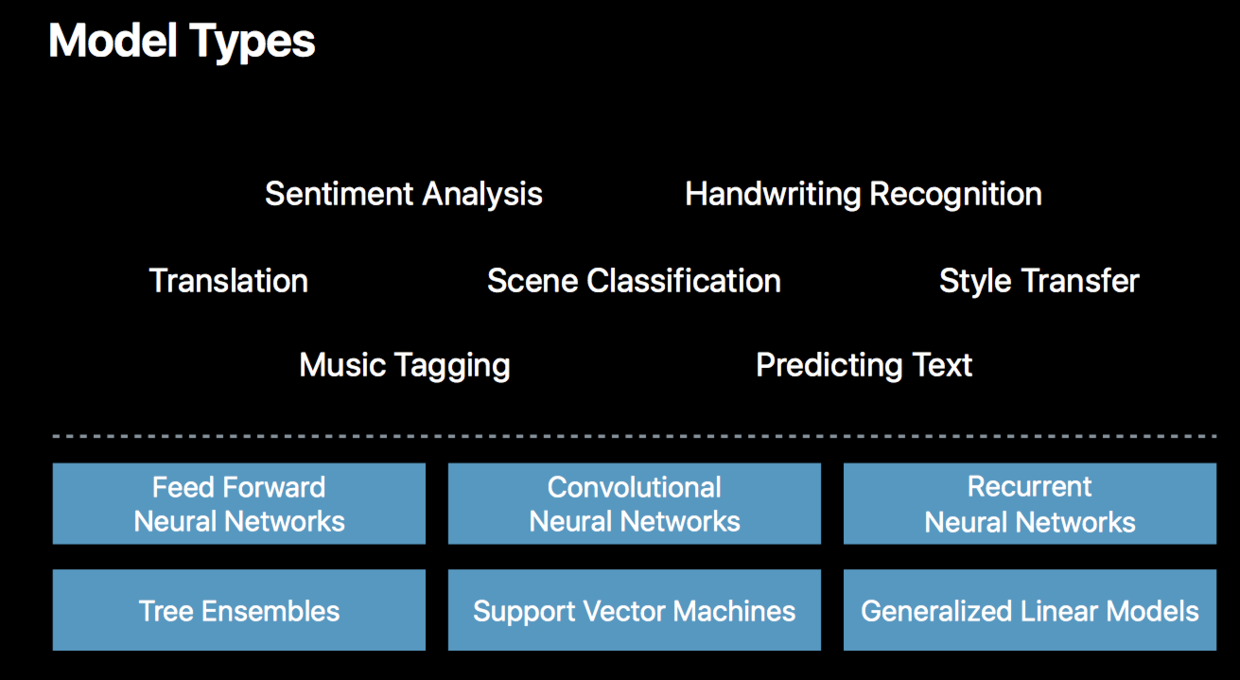

Core ML Tools, written in Python, converts a wide range of model types into a format that Core ML understands. The following table, based on Apple's documentation, lists the model types and third-party frameworks that Core ML Tools supports.

Table 1: Third-party frameworks and ML models compatible with Core ML Tools

| Model type | Supported models | Supported frameworks |
|---|---|---|
| Neural networks | Feedforward, convolutional, recurrent | Caffe v1, Keras 1.2.2+ |
| Tree ensembles | Random forests, boosted trees, decision trees | scikit-learn 0.18, XGBoost 0.6 |
| Support vector machines | Scalar regression, multiclass classification | scikit-learn 0.18, LIBSVM 3.22 |
| Generalized linear models | Linear regression, logistic regression | scikit-learn 0.18 |
| Feature engineering | Sparse vectorization, dense vectorization, categorical processing | scikit-learn 0.18 |
| Pipeline models | Sequentially chained models | scikit-learn 0.18 |

If your model was trained with one of the frameworks listed in Table 1, convert it with the corresponding converter's `convert` method. Then save the result in the Core ML model format (`.mlmodel`). For example, to convert a Caffe model (`.caffemodel`), pass it to the `coremltools.converters.caffe.convert` method as shown below.

```python
import coremltools

# Convert a trained Caffe model. Recent coremltools releases no longer
# include the Caffe converter, so this requires an older release.
coreml_model = coremltools.converters.caffe.convert('my_caffe_model.caffemodel')
```

Save the converted model in the Core ML format:

```python
# MLModel.save writes the converted model to disk as an .mlmodel file
coreml_model.save('my_model.mlmodel')
```

For some model types, you may need to supply additional information about the inputs, outputs, and labels. For others, you may need to declare image input names, types, and formats. Each converter has its own documentation describing these options, and the Core ML Tools package documentation provides further information.

If Core ML Tools doesn't support your model type, you can write your own converter. This involves translating your model's inputs, outputs, and architecture into the Core ML model format. You must define every layer of the architecture and how each layer connects to the others. The Core ML Tools package includes examples of such conversions, including conversions of third-party framework model types to the Core ML format.
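The sketch below illustrates what it means to define every layer and its connections, using plain Python. It is not the Core ML model format and does not use Core ML Tools. The `Layer` class and the `validate_graph` function are hypothetical. The sketch assumes only that each layer consumes and produces named tensors. It checks that every layer's inputs come from the model inputs or from an earlier layer, which is the kind of consistency a hand-written converter must guarantee.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Layer:
    """Hypothetical layer description: a name, a type, and named input/output tensors."""
    name: str
    kind: str
    inputs: List[str]
    outputs: List[str]


def validate_graph(model_inputs: List[str], layers: List[Layer],
                   model_outputs: List[str]) -> List[str]:
    """Return a list of problems; an empty list means every connection resolves.

    Layers are checked in the order given, so each layer may only consume
    tensors from the model inputs or from layers listed before it.
    """
    available = set(model_inputs)
    problems = []
    for layer in layers:
        for tensor in layer.inputs:
            if tensor not in available:
                problems.append(f"{layer.name}: input '{tensor}' is not produced earlier")
        available.update(layer.outputs)
    for tensor in model_outputs:
        if tensor not in available:
            problems.append(f"model output '{tensor}' is never produced")
    return problems


# Example: a tiny classifier described layer by layer
layers = [
    Layer("conv1", "convolution", ["image"], ["conv1_out"]),
    Layer("relu1", "relu", ["conv1_out"], ["relu1_out"]),
    Layer("prob", "softmax", ["relu1_out"], ["class_probs"]),
]
print(validate_graph(["image"], layers, ["class_probs"]))  # → []
```

Checking the layers in order mirrors the requirement that each layer's inputs already exist when the layer runs. Reordering or dropping a layer shows up immediately as a reported problem.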

There are numerous ways to train a machine learning model. This article uses MXNet, a library that accelerates the creation of large-scale deep neural networks and mathematical computations. The library supports the following scenarios:

To run the converter, install Python 2.7 and use macOS El Capitan (10.11) or later.

Install the MXNet framework and the conversion tool with the following command:

```shell
pip install mxnet-to-coreml
```

After installing the tools, you can convert models trained with MXNet for use in Core ML. For example, consider a model that takes an image and predicts the location where it was taken.

All MXNet models consist of two parts: a model definition (a JSON file) and the trained parameters (a binary file).

The location detection model in this example has three files: the model definition (JSON), the parameters (binary), and a text file listing the geographic cells. Training used Google's S2 Geometry Library to divide the map into cells. As a result, each line of the text file contains three fields: the S2 token, the latitude, and the longitude (for example, `8644b554 29.1835189632 -96.8277835622`). The iOS app only needs the coordinates.
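Because the app needs only the coordinates, it has to extract the latitude and longitude from each line of the text file. The sketch below shows that parsing step in Python for clarity. In the iOS app itself, it would be written in Swift. The sketch assumes, as in the example line above, that each line holds a whitespace-separated S2 token, latitude, and longitude. It also assumes that each line serves as one class label. `GridCell`, `parse_grid_line`, `load_grid_cells`, and `coordinates_for_label` are hypothetical names.

```python
from typing import List, NamedTuple, Tuple


class GridCell(NamedTuple):
    token: str        # Google S2 cell token, e.g. "8644b554"
    latitude: float
    longitude: float


def parse_grid_line(line: str) -> GridCell:
    """Split one 'token latitude longitude' line into its three fields."""
    token, lat, lon = line.split()
    return GridCell(token, float(lat), float(lon))


def load_grid_cells(text: str) -> List[GridCell]:
    """Parse every non-empty line of the grid file."""
    return [parse_grid_line(line) for line in text.splitlines() if line.strip()]


def coordinates_for_label(label: str) -> Tuple[float, float]:
    """Keep only what the app needs: (latitude, longitude) for a predicted label."""
    cell = parse_grid_line(label)
    return cell.latitude, cell.longitude


sample = "8644b554 29.1835189632 -96.8277835622\n"
print(load_grid_cells(sample))
print(coordinates_for_label(sample.strip()))
```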

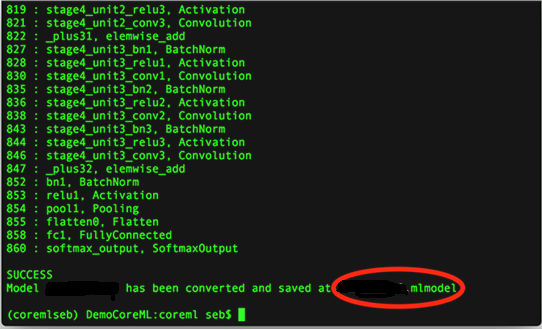

Once everything is set up, run the command below.

```shell
mxnet_coreml_converter.py --model-prefix='RN101-5k500' --epoch=12 --input-shape='{"data":"3,224,224"}' --mode=classifier --pre-processing-arguments='{"image_input_names":"data"}' --class-labels grids.txt --output-file="RN1015k500.mlmodel"
```

The converter then translates the MXNet model into its Core ML equivalent and reports SUCCESS. Import the generated file into your Xcode project.
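JSON arguments such as `--input-shape` are easy to mis-quote in a shell. As a minimal sketch, you can assemble the same command as an argument list in Python and let `json.dumps` produce the JSON values. The sketch also computes the file names that MXNet's checkpoint convention uses for a given prefix and epoch: `<prefix>-symbol.json` and `<prefix>-<epoch as four digits>.params`. This assumes the converter loads the model through MXNet's standard checkpoint files. The helper names are hypothetical, and the flags are copied from the command above.

```python
import json
import shlex
from typing import List, Tuple


def checkpoint_files(prefix: str, epoch: int) -> Tuple[str, str]:
    """MXNet checkpoint naming: <prefix>-symbol.json and <prefix>-<epoch:04d>.params."""
    return f"{prefix}-symbol.json", f"{prefix}-{epoch:04d}.params"


def converter_args(prefix: str, epoch: int, input_shape: dict,
                   labels_file: str, output_file: str) -> List[str]:
    """Build the converter invocation shown above as a list of arguments."""
    compact = {"separators": (",", ":")}  # match the compact JSON used in the article
    return [
        "mxnet_coreml_converter.py",
        f"--model-prefix={prefix}",
        f"--epoch={epoch}",
        "--input-shape=" + json.dumps(input_shape, **compact),
        "--mode=classifier",
        "--pre-processing-arguments=" + json.dumps({"image_input_names": "data"}, **compact),
        "--class-labels", labels_file,
        f"--output-file={output_file}",
    ]


args = converter_args("RN101-5k500", 12, {"data": "3,224,224"},
                      "grids.txt", "RN1015k500.mlmodel")
print(checkpoint_files("RN101-5k500", 12))
print(" ".join(shlex.quote(arg) for arg in args))
```

If the converter script is executable and on your `PATH`, you can pass the list to `subprocess.run(args, check=True)` so that no shell quoting is involved.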

The next steps require Xcode. Open the converted file in Xcode to check its properties, such as its size, name, and parameters.

In Xcode, drag and drop the file into your project and select the Target Membership checkbox for your app. Next, test the app on a physical device or in the Xcode simulator. To run the app on a physical device, sign it with your development team account. Finally, build the app and run it. That's it!

Getting started with Core ML is as easy as integrating a model into your mobile application. However, trained models can take up a large share of a device's storage. For neural networks, you can reduce model size by lowering the precision of the weights. For example, converting them to half precision (16-bit floating point) roughly halves the storage the weights need. For any model type, you can also keep the app itself small by storing models in the cloud. The app then downloads them at runtime instead of bundling them.

However, half precision reduces both the accuracy of floating-point values and the range of values that can be represented.
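Python's `struct` module can pack IEEE 754 half-precision floats with the `e` format, which makes the trade-off between size and precision easy to see. This sketch is not Core ML Tools' weight conversion. It only shows what happens to individual weight values. Storage drops from 4 bytes to 2 bytes per value, and most values lose some precision. Values beyond the largest finite half-precision number (65504) cannot be represented at all. The helper names are hypothetical.

```python
import struct
from typing import List


def pack_single(weights: List[float]) -> bytes:
    """Store weights as 32-bit floats (4 bytes each)."""
    return struct.pack(f"<{len(weights)}f", *weights)


def pack_half(weights: List[float]) -> bytes:
    """Store weights as 16-bit half-precision floats (2 bytes each)."""
    return struct.pack(f"<{len(weights)}e", *weights)


def unpack_half(data: bytes) -> List[float]:
    """Read half-precision floats back as Python floats."""
    return list(struct.unpack(f"<{len(data) // 2}e", data))


weights = [0.1, -1.5, 3.14159]
print(len(pack_single(weights)), len(pack_half(weights)))  # → 12 6
print(unpack_half(pack_half(weights)))  # 0.1 and 3.14159 come back slightly changed

try:
    pack_half([70000.0])  # larger than the maximum half-precision value, 65504
except OverflowError as err:
    print("out of range:", err)
```

A value such as -1.5 survives exactly because it fits in the 10-bit mantissa. Values like 0.1 are rounded, which is the accuracy loss described above.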

If you plan to run MXNet on Alibaba Cloud, you can use the cloud to train and optimize models at scale before exporting them to Core ML for your iOS application. Machine learning lets applications learn behavior from data rather than being explicitly programmed: feeding training data to a machine learning algorithm produces a trained model. Core ML simplifies integrating such models into iOS apps. Although inference runs on the device, the cloud remains valuable. It can aggregate output from many devices, run large-scale analytics, and optimize models.

With a framework such as Vision, it is straightforward to build useful features, including face detection, facial landmark detection, object detection, text detection, and barcode detection. Similarly, the natural language APIs make it easy to add text analysis, such as identifying the language of a piece of text.

