By Yizheng

Previously, we looked at how Alibaba Cloud Table Store implements high reliability and availability. This article will describe how Table Store can implement cross-region disaster tolerance.

Although the concepts of disaster tolerance and high availability overlap somewhat, their application scenarios and related technology systems differ in many ways, so this article covers disaster tolerance separately. Disaster tolerance adds a further layer of protection on top of high cluster availability, guarding against rare but critical failures. We recommend that you read the previous article about high availability first to understand how Table Store ensures high reliability and high availability.

We will first describe some background knowledge and related scenarios as well as two important features to implement database disaster tolerance: data synchronization and switching. Then we will explain how Table Store implements these features and how we use these features to implement servitization, so that users can easily and flexibly set up disaster tolerance scenarios, provide higher availability, or optimize latency for end users in different regions by using the remote multi-active mechanism.

Table Store is a distributed NoSQL database developed by Alibaba, and has been in use at Alibaba for years. It has incorporated many disaster tolerance scenarios and related experience. This article also shows our views on how to use distributed NoSQL systems to implement disaster tolerance as well as our lessons and experiences along the way.

Although disaster tolerance and high availability address different issues, disaster tolerance does increase system availability. Disaster tolerance usually deals with rare but severe failures, such as power or network outages in IDCs, earthquakes, fires, and human-caused software and hardware unavailability. These failures are beyond the scope of many high availability designs.

Disaster tolerance has two critical indicators: RTO and RPO. RTO is the targeted duration of time within which a business process must be restored after a disaster. RPO is the maximum targeted period in which data might be lost due to a major failure. Today many businesses have very high availability requirements: RTO is usually less than one or two minutes, and RPO should be as small as possible (usually in seconds; zero RPO in financial scenarios). In cases where RTO and RPO requirements cannot both be guaranteed, it is advised to find reasonable trade-offs between data consistency and availability.
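As a small worked example of the two indicators, the sketch below computes the RTO and RPO actually achieved in one failover and compares them with targets. The class name, field names, and timestamps are hypothetical and only illustrate the definitions above.

```python
from dataclasses import dataclass


@dataclass
class FailoverRecord:
    """Timestamps (in seconds) observed during one hypothetical failover."""
    last_synced_write_at: float  # newest write that had reached the standby
    failure_at: float            # moment the active cluster failed
    service_restored_at: float   # moment the standby started serving

    def achieved_rpo(self) -> float:
        # Writes accepted after the last synced write are the ones at risk.
        return max(0.0, self.failure_at - self.last_synced_write_at)

    def achieved_rto(self) -> float:
        # Time during which the business process was unavailable.
        return self.service_restored_at - self.failure_at


def meets_targets(record: FailoverRecord, rto_target: float, rpo_target: float) -> bool:
    return (record.achieved_rto() <= rto_target
            and record.achieved_rpo() <= rpo_target)


record = FailoverRecord(last_synced_write_at=98.5, failure_at=100.0,
                        service_restored_at=190.0)
print(record.achieved_rpo(), record.achieved_rto())
print(meets_targets(record, rto_target=120.0, rpo_target=2.0))
```

In this made-up drill, up to 1.5 seconds of writes are at risk and service is restored after 90 seconds, which satisfies a two-minute RTO target and a two-second RPO target.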

Disaster tolerance is similar to fault tolerance. Both prevent disruption or data loss through redundancy, that is, by keeping one or two standby copies of data and computing resources in different regions so that natural disasters or human-caused failures do not disrupt service. Most traditional disaster tolerance methods use cold backup. However, cold backup provides an RPO and RTO that no longer meet current business requirements, and it lacks reliability.

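That's why disaster tolerance design today adopts hot backup and hot switching, which can be implemented in two ways: by using one distributed system that spreads multiple copies of data across different regions, or by using two (or more) separate systems that are kept in step through data synchronization and switched between when a failure occurs.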

This corresponds to the first method described above: use one distributed system and spread multiple copies of data across different regions to implement disaster tolerance. We will take Table Store as an example and describe how to implement this disaster tolerance method and the scenarios it applies to.

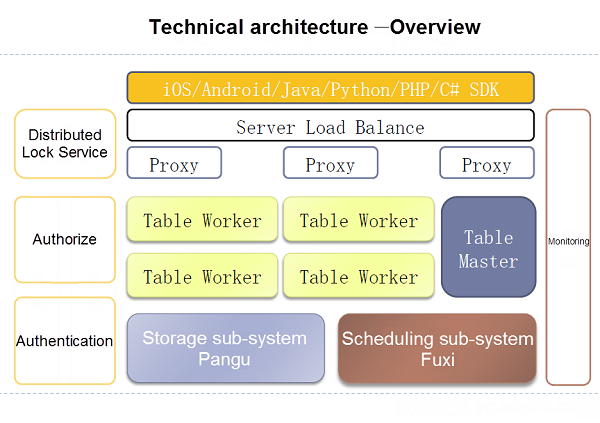

The following figure is a Table Store architecture diagram. Under the service layer of Table Store is a distributed storage system called Pangu, which provides multiple copies and strong consistency to ensure data reliability. Pangu is able to distribute multiple copies in different availability zones. Specifically, we have distributed three copies in three different availability zones. In each availability zone, we have deployed the Pangu master and chunkserver as well as the Table Store front-end and back-end roles. Logically, the whole system across the three availability zones is still one cluster. Thanks to automatic failover, a failure in any single availability zone does not affect services.

This mode is called Three Availability Zones in One City, and it will be available on the public cloud. It can tolerate the failure of any one of the three availability zones, but it cannot handle a failure that affects the whole city, because the three copies are distributed within the same city rather than across cities or a larger region. Why keep the three copies in one city? First, the three copies are intended to guarantee strong consistency, and too much distance increases network latency for data writes and significantly reduces performance. Second, a system using this model exists as a single whole, and distributing it fully across regions would amount to building a global database for global disaster tolerance. That is a different product form and is outside the scope of this article.

This is the second disaster tolerance method described previously: use two (or more) different systems to implement disaster tolerance through data synchronization and switching. This method can be divided into the active-standby model and the active-active/multi-active model. Examples of the active-standby model are dual clusters in the same city and three data centers across two regions; examples of the active-active/multi-active model are the active-active mechanism across regions and the multi-active mechanism across regions (units).

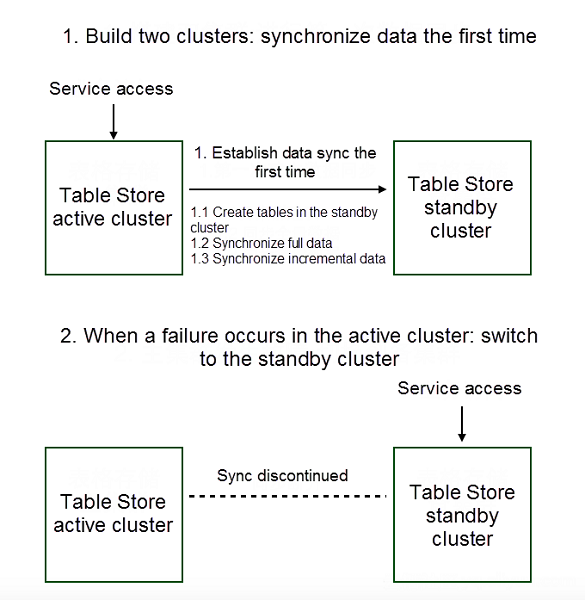

This section uses the active-standby model in Table Store as an example to demonstrate the whole process:

As shown in the preceding process, the two most important steps in this disaster tolerance plan are data synchronization and switching. When the two clusters are initially set up, data synchronization consists of a full synchronization followed by real-time incremental synchronization. After switching, the standby cluster begins to provide services. Once the active cluster recovers, the synchronization relationship is re-established so that services can eventually switch back to the active cluster.

Note that the data synchronization in this step is asynchronous. A write is acknowledged to the client as soon as it has been written into the active cluster, at which point the data has not yet been synchronized to the standby cluster. Asynchronous synchronization can therefore cause data consistency problems. Any potential impact of this inconsistency on the business should be identified in advance, and measures to deal with it should be in place.
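The effect of asynchronous synchronization can be illustrated with a toy model. The sketch below is not Table Store code: `ActiveCluster`, `put`, and `ship` are hypothetical names, and the standby is modeled as a plain dictionary. It only shows that a write can be acknowledged while it is still waiting to be shipped.

```python
from collections import deque


class ActiveCluster:
    """Toy active cluster that replicates asynchronously (illustrative only)."""

    def __init__(self):
        self.data = {}
        self.pending = deque()  # acknowledged changes not yet on the standby

    def put(self, key, value):
        self.data[key] = value
        self.pending.append((key, value))
        return "OK"  # acknowledged before the standby has seen the change

    def ship(self, standby, max_changes):
        """Send up to max_changes pending changes to the standby, in order."""
        shipped = 0
        while self.pending and shipped < max_changes:
            key, value = self.pending.popleft()
            standby[key] = value
            shipped += 1
        return shipped


active = ActiveCluster()
standby = {}
for key, value in [("a", 1), ("b", 2), ("c", 3)]:
    active.put(key, value)

active.ship(standby, max_changes=2)  # the failure happens before "c" is shipped
unsynced = [key for key, _ in active.pending]
print(standby, unsynced)
```

If switching is triggered at this point, the standby serves `a` and `b` but not `c`, even though the client was told that all three writes succeeded.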

Specifically, assume that a portion of data has not yet been synchronized to the standby cluster when switching is triggered in the event of a failure:

When the original active cluster recovers, the following options are available:

Now, let's take a brief look at switching. The purpose of switching is to make the application layer access the standby system instead of continuing to access the failed system. Therefore, there must be a point between the application layer and the server side that notices the switch so that another system will be used. We can consider the following methods (for your reference only):

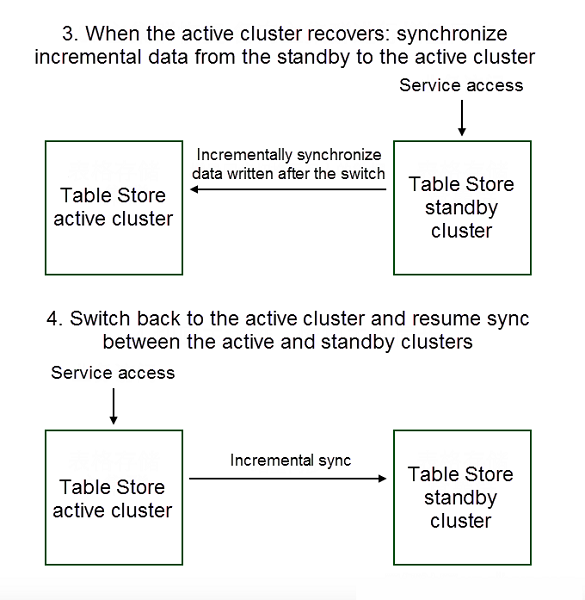

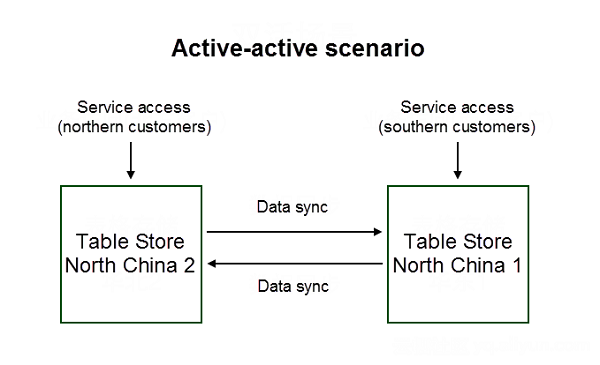

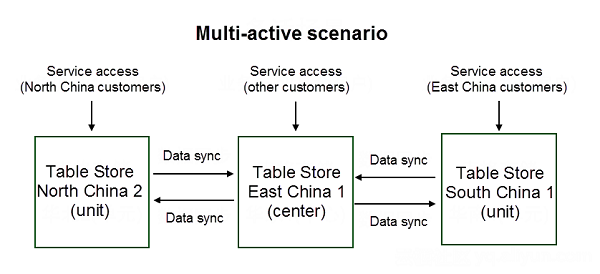

The following diagram shows the procedures in an active-active/multi-active scenario:

Now, we will look at an active-active model example and explain its differences with the active-standby model:

The multi-active model is similar to the active-active model, but it involves the concepts of the center and units. The cross-regional multi-active architecture is also called the unitized architecture. Data written into a unit is synchronized to the center, which then synchronizes that unit's data to the other units so that the center and all the units hold the complete data.

In the active-active/multi-active model, the business layer must restrict a record to being modified on only one node, because data inconsistency will occur if several nodes write the same data. From the perspective of the business layer, this may mean that the information about one user or device can only be modified on a specific node. If a service targets end users, the unitized architecture can reduce latency and optimize the end user experience, especially in some global services.
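One way the business layer might enforce the single-writer rule is to give every user a home unit and accept writes only there. The sketch below assumes a hash-based default mapping plus an override table for switching; the unit names and the functions `home_unit` and `accept_write` are hypothetical, and a real service may well use an explicit routing table instead.

```python
import hashlib

UNITS = ["unit-a", "unit-b", "unit-c"]  # hypothetical unit names


def home_unit(user_id, overrides=None):
    """Return the only unit allowed to modify this user's records."""
    if overrides and user_id in overrides:
        return overrides[user_id]  # rule changed by the business layer
    # A stable hash keeps the mapping identical on every node and process.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return UNITS[int.from_bytes(digest[:8], "big") % len(UNITS)]


def accept_write(user_id, local_unit, overrides=None):
    """A unit accepts a write only if it is the user's home unit."""
    return home_unit(user_id, overrides) == local_unit


owner = home_unit("user-42")
accepting = [unit for unit in UNITS if accept_write("user-42", unit)]
print(owner, accepting)
```

Exactly one unit accepts writes for a given user, and passing an override such as `{"user-42": "unit-b"}` moves that user to another unit.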

Another consideration is to avoid cyclical data synchronization. Otherwise, data will flow in a circle, and one record will be continuously synchronized on each node. Cyclical replication can be avoided by attaching information to data which identifies which node the data has been written to. When data has been identified as having already been written to a node, the synchronization will stop.
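The loop-avoidance idea can be sketched as follows, assuming each change carries the set of node names it has already been written to. The `Node` class and its methods are hypothetical and are not the Table Store implementation; the three nodes are connected in a ring so that a change would circulate forever without the check.

```python
class Node:
    """Toy replication node that tags changes with the nodes already written."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.peers = []   # nodes this node synchronizes to
        self.applied = 0  # how many changes were written here
        self.dropped = 0  # how many changes were recognized as already written

    def local_write(self, key, value):
        self._apply_and_forward(key, value, seen=frozenset())

    def receive(self, key, value, seen):
        if self.name in seen:
            self.dropped += 1  # already written here: stop synchronizing
            return
        self._apply_and_forward(key, value, seen)

    def _apply_and_forward(self, key, value, seen):
        self.data[key] = value
        self.applied += 1
        seen = seen | {self.name}  # attach this node to the change
        for peer in self.peers:
            peer.receive(key, value, seen)


a, b, c = Node("a"), Node("b"), Node("c")
a.peers, b.peers, c.peers = [b], [c], [a]  # ring: a -> b -> c -> a

a.local_write("k", "v")
print(a.applied, b.applied, c.applied, a.dropped)
```

The change reaches every node once, and when it arrives back at the node where it was first written it is dropped instead of being sent around the ring again.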

The active-active/multi-active model supports very flexible switching. For example, assume we have a set of end-user services. Since the business layer controls which units an end user can access, the business layer can change the rules to move users from one node to another. Note that the services have to take into account data inconsistency caused by asynchronous data synchronization. Normally, the data synchronization RPO is measured in seconds or less. If the services restrict data writes or monitor data synchronization during switching, data inconsistency can be avoided in non-failure scenarios. The services also need to consider whether data inconsistency can be tolerated or somehow fixed.

From the disaster tolerance scenarios described above, we can see that incremental data synchronization is key to constructing a disaster tolerance plan. This section focuses on how Table Store implements incremental data synchronization. In addition to incremental data synchronization, many other features are needed to construct a comprehensive disaster tolerance plan, such as table meta synchronization, full data synchronization, and switch-related features. These features are very complicated, and are not covered in this section.

Real-time incremental database synchronization is usually referred to as replication. Replication rests on the premise that if two databases apply the same changes in the same order, they end up in the same state. For example, MySQL ships its binlog from the active database to the standby databases, which apply the same changes in sequence. Replication in Table Store adopts a similar method.
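The premise behind replication can be checked with a few lines of code. The change format and the `apply_changes` function below are hypothetical; they stand in for any ordered change log such as a binlog.

```python
def apply_changes(changes):
    """Apply an ordered list of (op, key, value) changes to an empty table."""
    table = {}
    for op, key, value in changes:
        if op == "put":
            table[key] = value
        elif op == "delete":
            table.pop(key, None)
    return table


log = [
    ("put", "a", 1),
    ("put", "b", 5),
    ("put", "a", 2),
    ("delete", "b", None),
]

active = apply_changes(log)
standby = apply_changes(log)                    # same changes, same order
reordered = apply_changes(list(reversed(log)))  # same changes, wrong order
print(active, standby, reordered)
```

Both databases converge to the same state only when the order is preserved, which is why a replication mechanism has to ship changes in log order.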

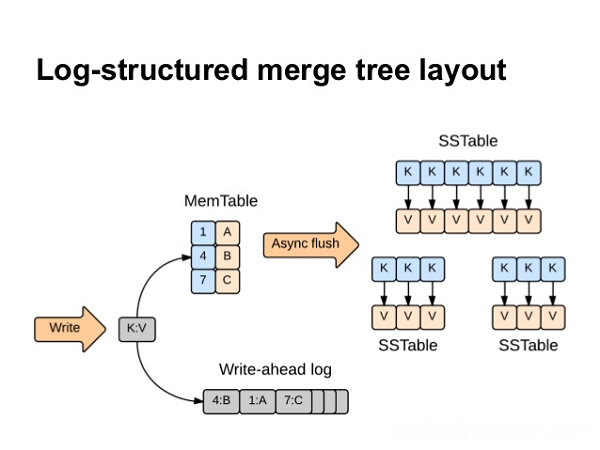

Let's first take a look at Table Store's writing process. Table Store uses the log-structured merge-tree layout, as shown in the following figure (the figure was obtained from the Internet):

The Write-ahead log in the figure is also called the commitlog. An update on a piece of data is first written into the commitlog to get persisted and then into the MemTable in memory, which is regularly flushed into new data files. The back-end regularly compacts data files into a large data file and cleans junk data.

Each table shard in Table Store has a separate commitlog, where any changes made will be appended in sequence to ensure the persistence of written data. When a node encounters a failure and the MemTable has not been flushed into a new data file, failover occurs and the shard is loaded on another node. At this point, the MemTable can be recovered simply by replaying a part of the commitlog, avoiding loss of written data.
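The write path and the recovery step can be sketched with a toy shard. This is a simplified model under stated assumptions, not Table Store's implementation: the `Shard` class is hypothetical, the commitlog and data files are plain in-memory lists standing in for durable storage, and compaction is omitted.

```python
class Shard:
    """Toy LSM shard: commitlog first, then MemTable, flushed to data files."""

    def __init__(self):
        self.commitlog = []    # stands in for the durable, append-only log
        self.data_files = []   # stands in for durable, immutable data files
        self.flushed_upto = 0  # log entries already covered by data files
        self.memtable = {}     # in memory only; lost when the node fails

    def put(self, key, value):
        self.commitlog.append((key, value))  # persist the change first
        self.memtable[key] = value           # then update the MemTable

    def flush(self):
        self.data_files.append(dict(self.memtable))
        self.flushed_upto = len(self.commitlog)
        self.memtable = {}

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        for data_file in reversed(self.data_files):  # newest file first
            if key in data_file:
                return data_file[key]
        return None

    def fail_and_recover(self):
        """Simulate failover: the MemTable is lost, then rebuilt by replay."""
        self.memtable = {}
        for key, value in self.commitlog[self.flushed_upto:]:
            self.memtable[key] = value


shard = Shard()
shard.put("a", 1)
shard.flush()
shard.put("b", 2)
shard.put("a", 3)
shard.fail_and_recover()
print(shard.get("a"), shard.get("b"))
```

Only the part of the commitlog written after the last flush has to be replayed, which is why recovery after failover is quick.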

The commitlog is a redo log that records every specific change to a shard in sequence. Values that are undetermined at write time (such as values in an auto-incrementing column or timestamps that have not been customized) become deterministic values in the commitlog. Replication in Table Store is implemented by replaying the commitlog. Compared with standalone databases, however, the situation in Table Store is considerably more complicated. First, a table in Table Store has many shards, each with a separate commitlog. Shards can also be split and merged, so the shard lineage forms a directed acyclic graph. Second, as a large-scale distributed NoSQL storage system, Table Store can host many tables, which may have several GB of data written per second. This requires the data synchronization modules to be distributed as well.

Currently, Replication functions as a Table Worker module on the Table Store service side. For each table shard with Replication enabled, this module in Table Worker sends newly written shard data to the target end in the same order as in the commitlog. Then, this module also records the checkpoint for the purpose of failover, generates the replication configuration for the new shard after that shard is split and merged, and performs other replication-related actions. This module is also used to control Replication traffic, monitor the RPO indicator and perform some other operations.
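The checkpoint mechanism described above can be sketched as follows. `ShardReplicator`, `run_once`, and `lag` are hypothetical names, the commitlog is a plain list, and the target end is modeled as a callable; traffic control and shard split/merge handling are left out.

```python
class ShardReplicator:
    """Toy per-shard replicator that ships commitlog entries in order."""

    def __init__(self, commitlog, send, checkpoint=0):
        self.commitlog = commitlog    # ordered changes of one shard
        self.send = send              # callable delivering one entry to the target
        self.checkpoint = checkpoint  # number of entries already delivered

    def run_once(self, batch_size):
        batch = self.commitlog[self.checkpoint:self.checkpoint + batch_size]
        for entry in batch:
            self.send(entry)           # same order as in the commitlog
        self.checkpoint += len(batch)  # recorded for use after failover
        return len(batch)

    def lag(self):
        """Entries not yet delivered; a rough stand-in for an RPO indicator."""
        return len(self.commitlog) - self.checkpoint


commitlog = [("put", "k%d" % i, i) for i in range(5)]
target = []

first = ShardReplicator(commitlog, target.append)
first.run_once(batch_size=3)
lag_before_failover = first.lag()

# Failover: a new replicator on another node resumes from the saved checkpoint.
second = ShardReplicator(commitlog, target.append, checkpoint=first.checkpoint)
second.run_once(batch_size=10)
print(lag_before_failover, second.lag(), target == commitlog)
```

Because the new replicator starts from the recorded checkpoint, the target receives every entry exactly once and in the original order in this simplified run. A real system would also have to handle a failure between sending a batch and recording the checkpoint.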

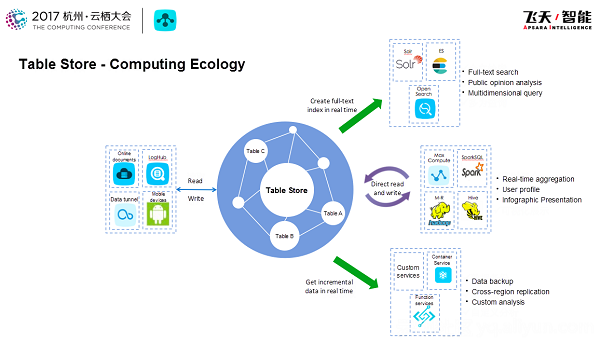

As mentioned above, incremental data synchronization is key to implementing disaster tolerance. In fact, incremental data is useful in a variety of other scenarios as well, such as real-time ETL of incremental data, offline import into MaxCompute/Spark for analysis, and real-time import into Elasticsearch to provide full-text search. The following diagram shows how Table Store is connected to various computing ecosystems. Incremental data tunnels play an important role in this process. On one hand, in order to make incremental data synchronization available on its own, we developed the Stream feature and provided a set of Stream APIs to read incremental data from tables.

On the other hand, we also want to move the replication feature out of the system's internals. Currently the Replication module sits inside the Table Worker and is responsible for many operations, such as managing the synchronization checkpoints and processing a wide range of data synchronization logic. Because this module is highly coupled with other system features, it is not flexible enough to meet many disaster tolerance scenarios and customized needs. Meanwhile, the Stream feature is publicly available. So we eventually decided to implement Replication based on Stream and began our development.

Implementing Replication based on Stream has two benefits. On one hand, the Replication module can be moved out of the system's internals, turned into a microservice, and decoupled from other modules. On the other hand, this set of Replication modules can be offered as a service and act as a data platform that provides users with flexible customization options. Users can even implement Replication themselves based on the libraries that we provide, or use Function Compute to develop serverless replication.

Based on the experience and practices in Table Store disaster tolerance scenarios, this article described disaster tolerance implementation methods in different scenarios and the most important feature of the Table Store disaster tolerance scenario: incremental data synchronization. This article also briefly explained the Stream feature in Table Store and the servitization of disaster tolerance and incremental data synchronization based on Stream.

How Table Store Implements High Reliability and High Availability
