By He Xiaoling and Weng Caizhi

Flink has continuously worked to optimize execution efficiency. In most jobs, especially batch jobs, transferring data between tasks over the network (called data shuffle) is costly. Normally, data moving from an upstream task to a downstream task goes through serialization, disk I/O, socket I/O, and deserialization. Transferring the same data in memory only requires passing an eight-byte pointer, which takes a few CPU cycles.
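To make the cost difference concrete, here is a minimal Python sketch (not Flink code). `pickle` stands in for Flink's serializers, the record fields are made up for illustration, and the disk and socket steps are omitted. It only contrasts copying a record through bytes with handing over a reference.

```python
import pickle

# A hypothetical record; the field names are illustrative only.
record = {"ss_sold_time_sk": 28800, "ss_store_sk": 7, "ss_quantity": 3}

# Network-style handoff: the upstream side serializes, the downstream side
# deserializes. The result is an equal but separate copy of the record.
wire_bytes = pickle.dumps(record)
received_copy = pickle.loads(wire_bytes)

# In-memory handoff: the downstream operator receives a reference to the
# very same object, so nothing is encoded, copied, or decoded.
received_ref = record

print("bytes on the wire:", len(wire_bytes))
print("copy is a new object:", received_copy is not record)
print("reference is the same object:", received_ref is record)
```

The serialized path does work proportional to the record size on both sides, while the in-memory path does a constant amount of work regardless of size.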

In earlier versions, Flink used the operator chaining mechanism to merge adjacent single-input operators with the same parallelism into one task, eliminating unnecessary network transmission between them. However, multi-input operators such as join still incur additional data shuffles. Moreover, operator chaining cannot optimize the data transfer between source nodes and multi-input operators, which is where the largest shuffle volume occurs.

Flink 1.12 introduced the multiple-input operator and source chaining optimizations for scenarios that operator chaining cannot cover. These optimizations eliminate most of the redundant shuffles in Flink jobs and further improve execution efficiency. Using a SQL job as an example, this article explains both optimizations and shows the results achieved by Flink 1.12 on the TPC-DS test set.

We take TPC-DS q96 as an example to describe in detail how redundant shuffles are eliminated. The following SQL statement joins several tables and counts the orders that meet specific conditions.

    select count(*)
    from store_sales
        ,household_demographics
        ,time_dim, store
    where ss_sold_time_sk = time_dim.t_time_sk
        and ss_hdemo_sk = household_demographics.hd_demo_sk
        and ss_store_sk = s_store_sk
        and time_dim.t_hour = 8
        and time_dim.t_minute >= 30
        and household_demographics.hd_dep_count = 5
        and store.s_store_name = 'ese'

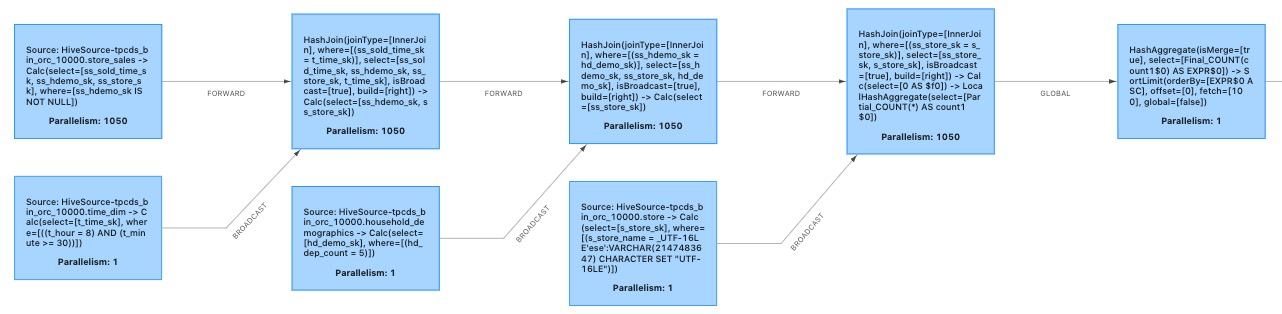

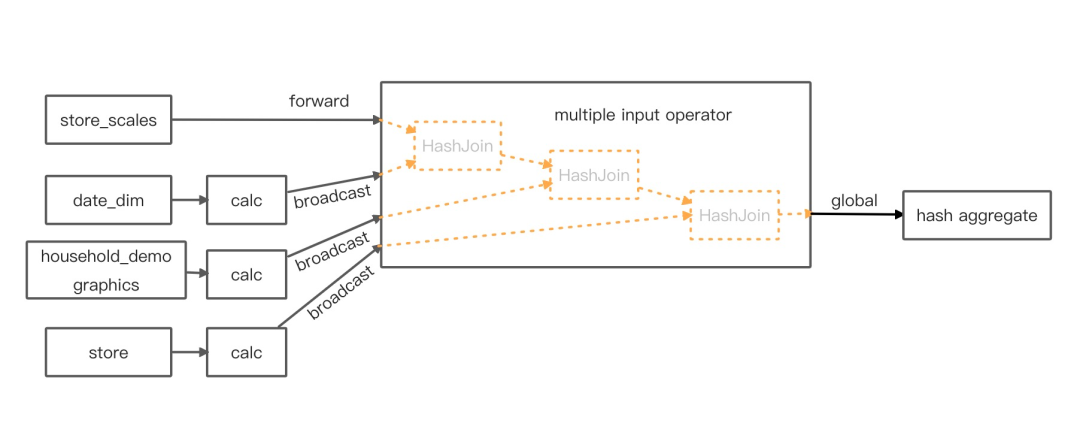

Figure 1: Initial execution plan

Some operators have requirements on the distribution of their input data. For example, a hash join requires that rows whose join keys have the same hash value arrive at the same parallel instance. Therefore, data may need to be rearranged when it is transferred between operators. Like the shuffle process in MapReduce, a Flink shuffle organizes the intermediate results produced by upstream tasks and sends them to the downstream tasks that need them. In some cases, however, the output of the upstream task already meets the distribution requirement (for example, several consecutive hash joins with the same join key), so no rearrangement is needed. The resulting shuffle is redundant and appears as a forward shuffle in the execution plan.
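The following Python sketch illustrates why a shuffle is redundant when the data is already distributed as required. It is a toy model, not Flink's partitioner: `hash_shuffle`, the parallelism of 4, and the integer keys are assumptions made for illustration.

```python
PARALLELISM = 4  # assumed number of parallel instances

def hash_shuffle(partitions, key_of, parallelism=PARALLELISM):
    """Send every row to the instance chosen by hash(key) % parallelism."""
    out = [[] for _ in range(parallelism)]
    for part in partitions:
        for row in part:
            out[hash(key_of(row)) % parallelism].append(row)
    return out

def join_key(row):
    return row[0]

# Rows are (join_key, payload) pairs that all start on one instance.
rows = [(k, f"v{k}") for k in range(20)]

# First shuffle: establishes the hash distribution the first join needs.
after_first = hash_shuffle([rows], join_key)

# A second join on the same key would ask for the same distribution again.
after_second = hash_shuffle(after_first, join_key)

# No row changes its instance, so the second shuffle did no useful work.
print(after_second == after_first)
```

Because every row already sits on the instance its key hashes to, the second shuffle moves nothing, which is the situation the plan reports as a forward shuffle.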

The hash join operator in Figure 1 is a special variant called broadcast hash join. Take store_sales join time_dim as an example. Because the time_dim table is small, its full data is sent to every parallel instance of the hash join through a broadcast shuffle. Each instance can then accept any rows of the store_sales table without affecting the correctness of the join result, and the hash join also becomes more efficient. As a result, the network transmission between the store_sales table and the join operator becomes a redundant shuffle as well. Similarly, the shuffles between the join operators are unnecessary.

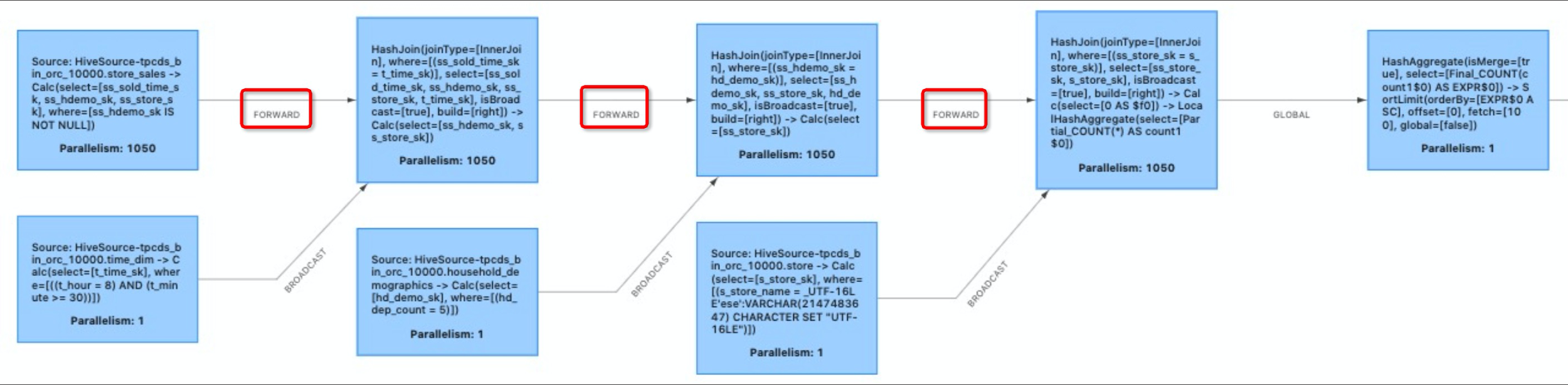

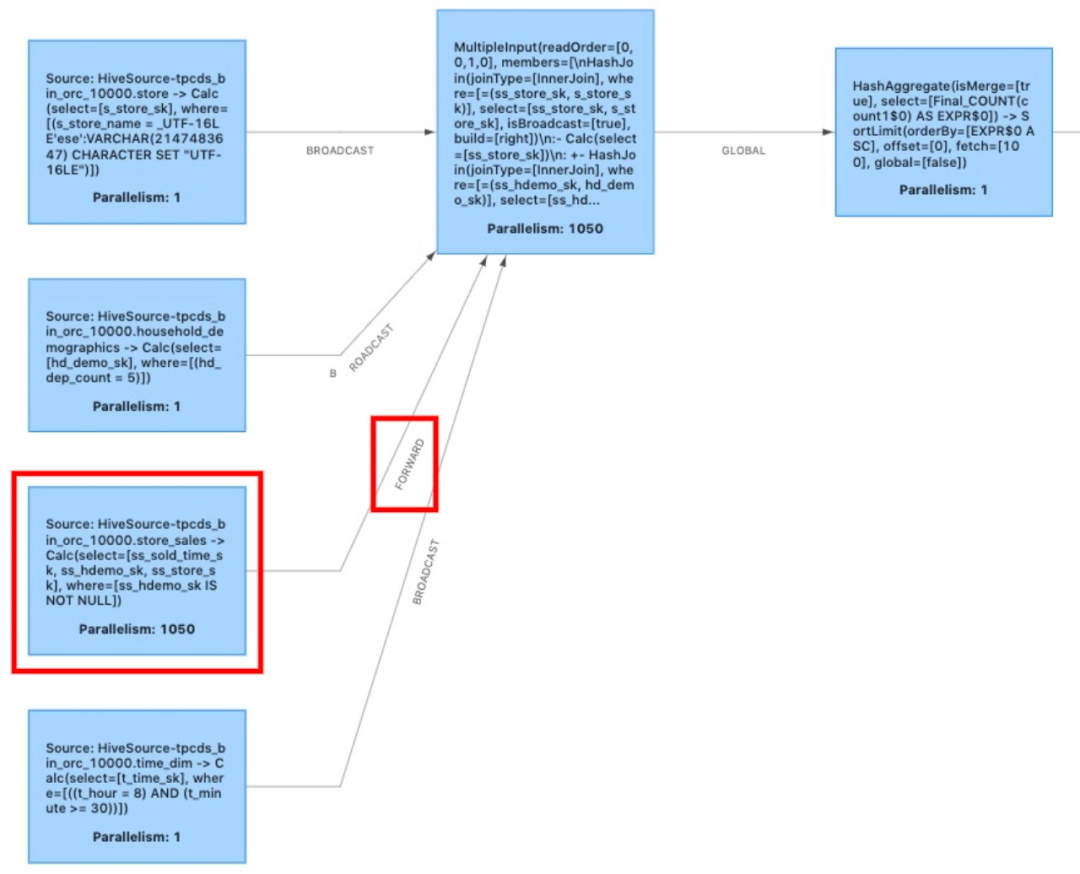

Figure 2: Redundant shuffles (marked in the red box)
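The broadcast hash join argument can be checked with a small Python sketch. The tables, their contents, and the `hash_join` helper are made up for illustration and are not Flink's implementation. The large table is split arbitrarily, the small table is copied to every instance, and the combined result matches a join done in one place.

```python
def hash_join(probe_rows, build_rows, probe_key, build_key):
    """Build a hash table on the small side, then probe it with the large side."""
    table = {}
    for b in build_rows:
        table.setdefault(build_key(b), []).append(b)
    return [(p, b) for p in probe_rows for b in table.get(probe_key(p), [])]

# Toy data: store_sales rows are (sale_id, ss_sold_time_sk),
# time_dim rows are (t_time_sk, label).
store_sales = [(sale_id, sale_id % 6) for sale_id in range(12)]
time_dim = [(0, "08:30"), (1, "08:45"), (2, "09:00")]

def sold_time_key(sale):
    return sale[1]

def time_key(t):
    return t[0]

# The large table is split round-robin across 3 instances, ignoring the join key.
instances = [store_sales[i::3] for i in range(3)]

# Broadcast: every instance receives the full time_dim table.
distributed = []
for local_sales in instances:
    distributed.extend(hash_join(local_sales, time_dim, sold_time_key, time_key))

# Reference result computed on a single instance.
single = hash_join(store_sales, time_dim, sold_time_key, time_key)

print(sorted(distributed) == sorted(single))
```

Since each instance holds the complete small table, it does not matter which instance a store_sales row lands on, so the large table never needs to be redistributed by key.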

Redundant shuffles also occur in scenarios other than hash join and broadcast hash join. Examples include a hash aggregate followed by a hash join whose join key is the same as the group key, and multiple hash aggregates where the group keys of one contain those of another. We do not discuss these cases in detail here.

Flink introduced the operator chaining mechanism early on to eliminate unnecessary forward shuffles. This mechanism merges adjacent single-input operators with the same parallelism into one task and runs them together in the same thread. Operator chaining is already at work in Figure 1. Without it, the operators separated by "->" in the three source nodes that perform a broadcast shuffle would be split into several tasks, resulting in redundant data shuffles. Figure 3 shows the execution plan when operator chaining is disabled.

Figure 3: Execution plan when operator chaining is disabled

Reducing data transmission between TaskManagers (TMs) over the network and through files, and merging chained operators into a single task, is a very effective optimization. It reduces thread switching, message serialization and deserialization, and data exchange in buffers, lowering latency while improving overall throughput. However, operator chaining places strict restrictions on which operators can be merged. One of them is that "the indegree of the downstream operator is 1," which means the downstream operator can only have one input. This excludes multi-input operators such as join.
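The in-degree restriction can be illustrated with a simplified Python check. This is a sketch, not Flink's actual chaining logic, which applies additional conditions. The `Edge` type, the `can_chain` function, and the operator names are hypothetical, and only the three conditions mentioned in this article are modeled: a forward connection, equal parallelism, and a downstream in-degree of 1.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Edge:
    src: str
    dst: str
    shuffle: str  # "forward", "hash", or "broadcast"

def can_chain(edge, edges, parallelism):
    """Simplified rule: forward edge, same parallelism, single-input downstream."""
    in_degree = sum(1 for e in edges if e.dst == edge.dst)
    return (
        edge.shuffle == "forward"
        and parallelism[edge.src] == parallelism[edge.dst]
        and in_degree == 1
    )

# A fragment loosely modeled on the q96 plan (names are illustrative).
edges = [
    Edge("source(time_dim)", "calc(time_dim)", "forward"),
    Edge("calc(time_dim)", "join1", "broadcast"),
    Edge("source(store_sales)", "join1", "forward"),
]
parallelism = {
    "source(time_dim)": 4,
    "calc(time_dim)": 4,
    "source(store_sales)": 4,
    "join1": 4,
}

for e in edges:
    print(f"{e.src} -> {e.dst}: chainable = {can_chain(e, edges, parallelism)}")
```

The source-to-calc edge qualifies, but the forward edge from store_sales into the join does not, because the join has two inputs. That gap is what the rest of this article addresses.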

Following the idea behind operator chaining, we can introduce a new optimization mechanism that meets the following conditions:

1) It can merge multi-input operators; and

2) The merged operator supports multiple inputs.

We can then run multi-input operators connected by forward shuffles in the same task and eliminate the unnecessary shuffles. The Flink community noticed the shortcomings of operator chaining early on. Flink 1.11 introduced MultipleInputTransformation in the streaming API layer, together with the corresponding MultipleInputStreamTask. These APIs meet the second condition above. Flink 1.12 builds on them with a new operator in the SQL layer, the multiple-input operator, which meets the first condition. For more information, see the FLIP documentation (reference 1 at the end of this article).

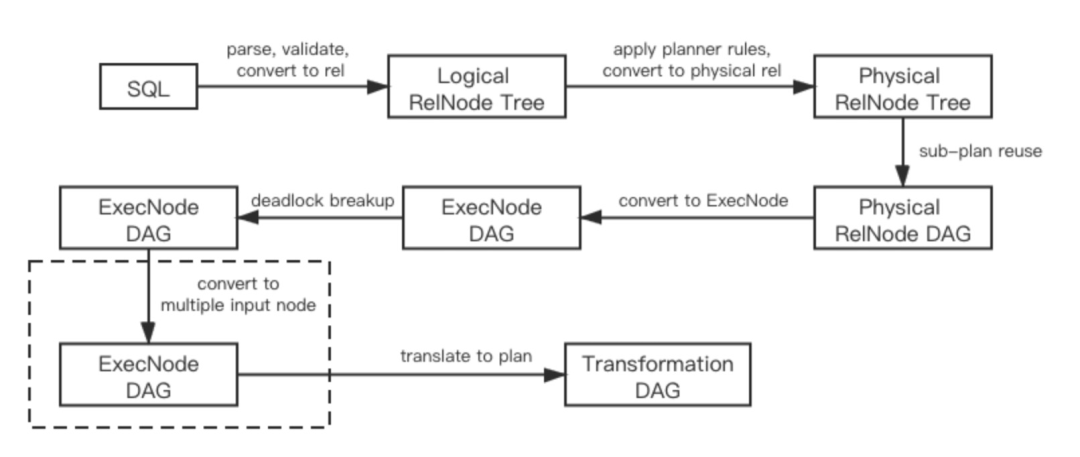

The multiple-input operator is a pluggable optimization and the last optimization step in the table layer. It traverses the generated execution plan and merges adjacent operators that are not separated by an exchange into a multiple-input operator. Figure 4 shows how the multiple-input operator changes the original SQL optimization steps.

Figure 4: Optimization steps after multiple-input operators are added
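The merging step can be sketched in Python as grouping operators that are connected without an exchange in between. This is a toy model and not the planner's real algorithm. The operator names, the edge labels, and the `group_without_exchange` and `external_inputs` helpers are assumptions. Sources are left out of the merge on purpose, since a multiple-input operator does not absorb them.

```python
def group_without_exchange(operators, edges):
    """Union operators linked by forward edges (no exchange in between)."""
    parent = {op: op for op in operators}

    def find(op):
        while parent[op] != op:
            parent[op] = parent[parent[op]]  # path compression
            op = parent[op]
        return op

    for src, dst, kind in edges:
        # Only merge non-source operators connected by a forward edge.
        if kind == "forward" and src in parent and dst in parent:
            parent[find(src)] = find(dst)

    groups = {}
    for op in operators:
        groups.setdefault(find(op), []).append(op)
    return sorted(sorted(g) for g in groups.values())

def external_inputs(group, edges):
    """Inputs of the merged operator: edges entering the group from outside."""
    members = set(group)
    return [src for src, dst, _ in edges if dst in members and src not in members]

# Illustrative q96-like plan: three joins in a row, then an aggregate.
operators = ["join1", "join2", "join3", "global_agg"]
edges = [
    ("store_sales", "join1", "forward"),
    ("time_dim", "join1", "broadcast"),
    ("join1", "join2", "forward"),
    ("household_demographics", "join2", "broadcast"),
    ("join2", "join3", "forward"),
    ("store", "join3", "broadcast"),
    ("join3", "global_agg", "hash"),
]

groups = group_without_exchange(operators, edges)
merged = groups[1]
print(groups)
print(external_inputs(merged, edges))
```

In this model the three joins collapse into one group with four external inputs, while the aggregate stays separate because a hash exchange sits in front of it.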

Why not modify the existing operator chaining instead of looking for another solution? Besides doing the work of operator chaining, the multiple-input operator also needs to assign a priority to each input. Some multi-input operators (such as hash join and nested loop join) have strict restrictions on the order in which their inputs are read, and a deadlock may occur if the inputs are not ordered properly. Since input priority information is only available on operators in the table layer, it is more appropriate to introduce the optimization there.
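The input ordering requirement can be shown with a toy hash join in Python. This is a sketch under assumptions: the `(priority, role, rows)` tuple layout and the `run_hash_join` function are invented for illustration, and the sketch shows only the read order, not the deadlock itself.

```python
def run_hash_join(inputs):
    """Read inputs in priority order; a lower priority value is read first.

    Each input is a (priority, role, rows) tuple, where role is "build" or
    "probe" and rows are (key, value) pairs.
    """
    hash_table = {}
    output = []
    read_order = []
    for _priority, role, rows in sorted(inputs, key=lambda item: item[0]):
        read_order.append(role)
        for key, value in rows:
            if role == "build":
                # The build side must be fully loaded before any probing.
                hash_table.setdefault(key, []).append(value)
            else:
                output.extend((key, value, b) for b in hash_table.get(key, []))
    return read_order, output

inputs = [
    (1, "probe", [(1, "sale-a"), (2, "sale-b")]),  # large side, read second
    (0, "build", [(1, "08:30")]),                  # small side, read first
]

read_order, output = run_hash_join(inputs)
print(read_order)
print(output)
```

If the probe side were consumed before the build side finished, the join would have to buffer or block those rows. Inside a merged operator with several joins, that kind of blocking is what can lead to a deadlock, which is why each input carries a priority.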

Note that a multiple-input operator differs from operator chaining, which manages several separate operators. A multiple-input operator is one large operator whose internals behave like a black box from the outside. Its internal structure is reflected in the operator name. When running a job that contains a multiple-input operator, users can tell from the operator name which operators were merged and what topology they form.

Figure 5 shows the operator topology after the multiple-input optimization and a perspective view of the multiple-input operator. Once the redundant shuffles between them are removed, the three hash join operators in the figure can run in the same task. Operator chaining cannot handle this multi-input case, so the hash join operators run inside the multiple-input operator, which manages the input order of each operator and the calling relationships between them.

Figure 5: Operator topology after the multiple-input optimization

Building and running the multiple-input operator is a complex process. For more details, see the design documentation (reference 2 at the end of this article).

After the multiple-input operator optimization, the execution plan in figure 1 transforms into the plan in figure 6. After the operator chaining optimization, the execution plan in figure 3 transforms into the plan in figure 6.

Figure 6: Execution plan after the multiple-input operator optimization

The forward shuffle from the store_sales table (marked in the red box) in Figure 6 indicates that there is still room for optimization. In most jobs, data read directly from the source has not yet been filtered or reduced by operators such as join, so this shuffle carries the largest data volume. Take TPC-DS q96 on 10 TB of data as an example. Without further optimization, the task containing the store_sales source table sends 1.03 TB of data over the network. After a single join filters the data, the volume drops sharply to 16.5 GB. Eliminating the forward shuffle of the source table would therefore greatly improve overall job execution efficiency.
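A quick back-of-the-envelope calculation in Python puts the two volumes side by side. The only assumption is the unit conversion (1 TB taken as 1000 GB). Using 1024 instead changes the result only slightly.

```python
GB_PER_TB = 1000  # assumption: decimal units

source_shuffle_gb = 1.03 * GB_PER_TB  # store_sales data sent over the network
after_first_join_gb = 16.5            # volume remaining after one join

remaining_fraction = after_first_join_gb / source_shuffle_gb
reduction_factor = source_shuffle_gb / after_first_join_gb

print(f"remaining after the first join: {remaining_fraction:.1%}")
print(f"the source shuffle is about {reduction_factor:.0f}x larger")
```

Under this assumption, less than 2% of the data survives the first join, so nearly all of the network traffic in this part of the plan comes from the source shuffle.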

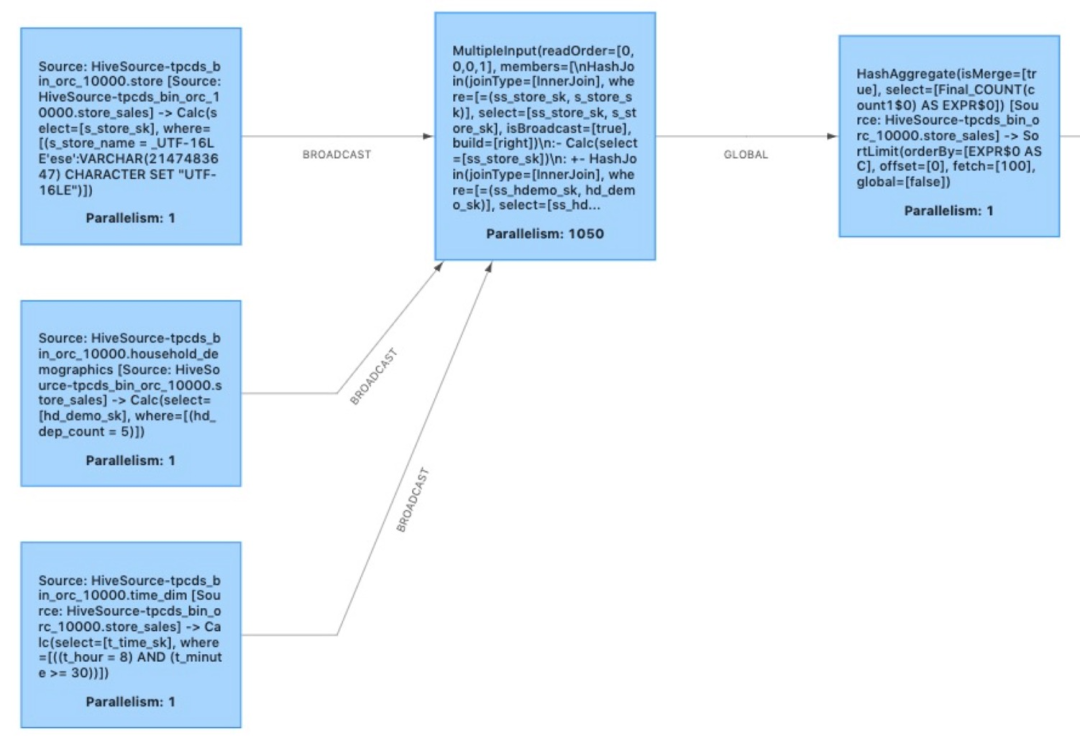

Unfortunately, the multiple-input operator cannot cover the source shuffle scenario because a source, unlike other operators, has no inputs. Flink 1.12 therefore adds a new source chaining capability to operator chaining, which merges sources that are not separated from downstream operators by a shuffle into the operator chain. This eliminates the forward shuffles between sources and downstream operators.

Currently, source chaining is only available for FLIP-27 sources and multiple-input operators. However, this is enough to cover the optimization scenarios described in this article.

Figure 7 shows the final optimization solution combining the multiple-input operator with source chaining.

Figure 7: Optimized execution plan

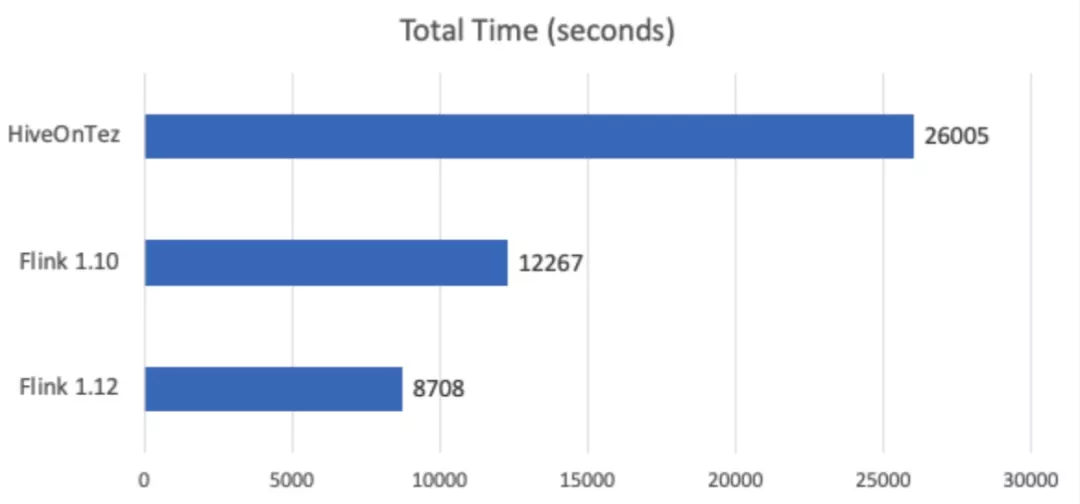

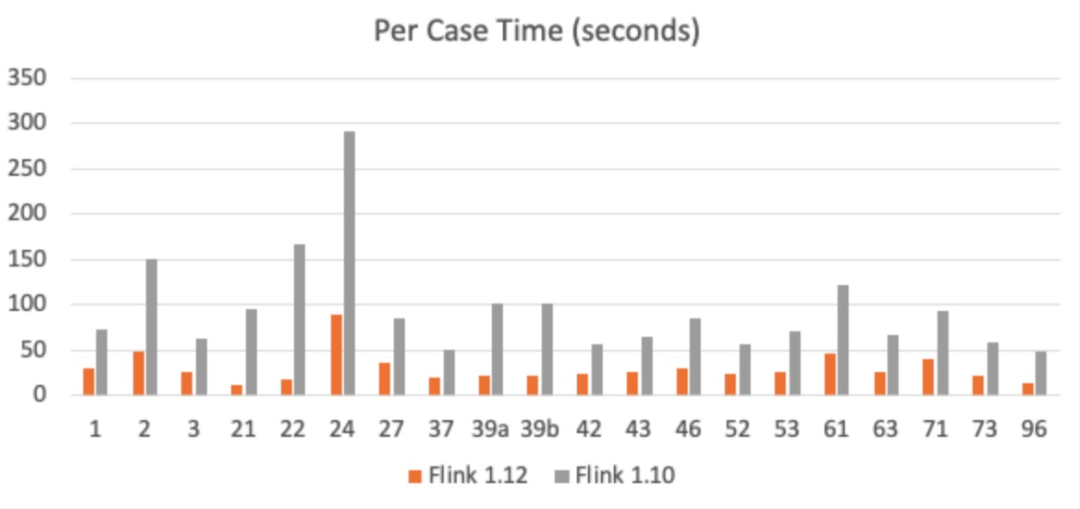

The multiple-input operator and source chaining bring significant gains for most jobs, especially batch jobs. We used the TPC-DS test set to measure the overall performance of Flink 1.12. Compared with the 12,267 s taken by Flink 1.10, Flink 1.12 takes only 8,708 s in total, which shortens the running time by nearly 30%.

Figure 8: TPC-DS test set total time comparison

Figure 9: Time comparison of TPC-DS test points
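The "nearly 30%" figure follows directly from the two totals reported above, as this short Python calculation shows. It uses only the numbers stated in this article.

```python
flink_1_10_seconds = 12267  # total TPC-DS time reported for Flink 1.10
flink_1_12_seconds = 8708   # total TPC-DS time reported for Flink 1.12

saved_seconds = flink_1_10_seconds - flink_1_12_seconds
reduction = saved_seconds / flink_1_10_seconds
speedup = flink_1_10_seconds / flink_1_12_seconds

print(f"time saved: {saved_seconds} s")
print(f"running time reduction: {reduction:.1%}")
print(f"speedup: {speedup:.2f}x")
```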

The TPC-DS results show that source chaining and the multiple-input operator significantly improve performance. The overall framework is complete, and the common batch operators already support the inference logic for eliminating redundant exchanges. More batch operators and more refined inference algorithms will be supported in the future.

Although shuffles in streaming jobs do not write data to disk as batch jobs do, converting network transmission into in-memory transmission still brings a considerable performance improvement. Support for source chaining and the multiple-input operator in streaming jobs is therefore also worth looking forward to. However, a lot of work remains before this optimization can be applied to streaming jobs. For example, the inference logic for eliminating redundant exchanges is not yet supported on streaming operators, and some operators need to be refactored to remove the requirement that their input data be in binary format. This is why the optimizations are not yet available for streaming jobs. We will complete this work step by step in future versions, and we hope more people from the community will join us to implement more optimizations as soon as possible.

1) https://cwiki.apache.org/confluence/display/FLINK/FLIP-92%3A+Add+N-Ary+Stream+Operator+in+Flink

2) https://docs.google.com/document/d/1qKVohV12qn-bM51cBZ8Hcgp31ntwClxjoiNBUOqVHsI/
