By Su Shu, Senior Engineer of Tencent's Data Platform department; edited by Lu Peijie (Flink community volunteer)

Apache Flink is a widely used stream and batch computing engine in the big data field. The data lake is a new technical architecture that has gained momentum in the cloud era, which has led to the rise of solutions based on Iceberg, Hudi, and Delta. Iceberg currently supports writing data from Flink into Iceberg tables through the DataStream API and the Table API, and provides integration with Apache Flink 1.11.x.

This article describes how Tencent's Big Data department built a real-time data warehouse based on Apache Flink and Apache Iceberg. It covers the following topics:

1) Background and pain points

2) Introduction to Apache Iceberg

3) Real-time data warehouse construction with Flink and Iceberg

4) Future plan

Figure 1 shows some of the major internal applications that use the platform. Among them, WeChat Applets and WeChat Video generate PB-level or even EB-level data per day or per month.

Figure 1

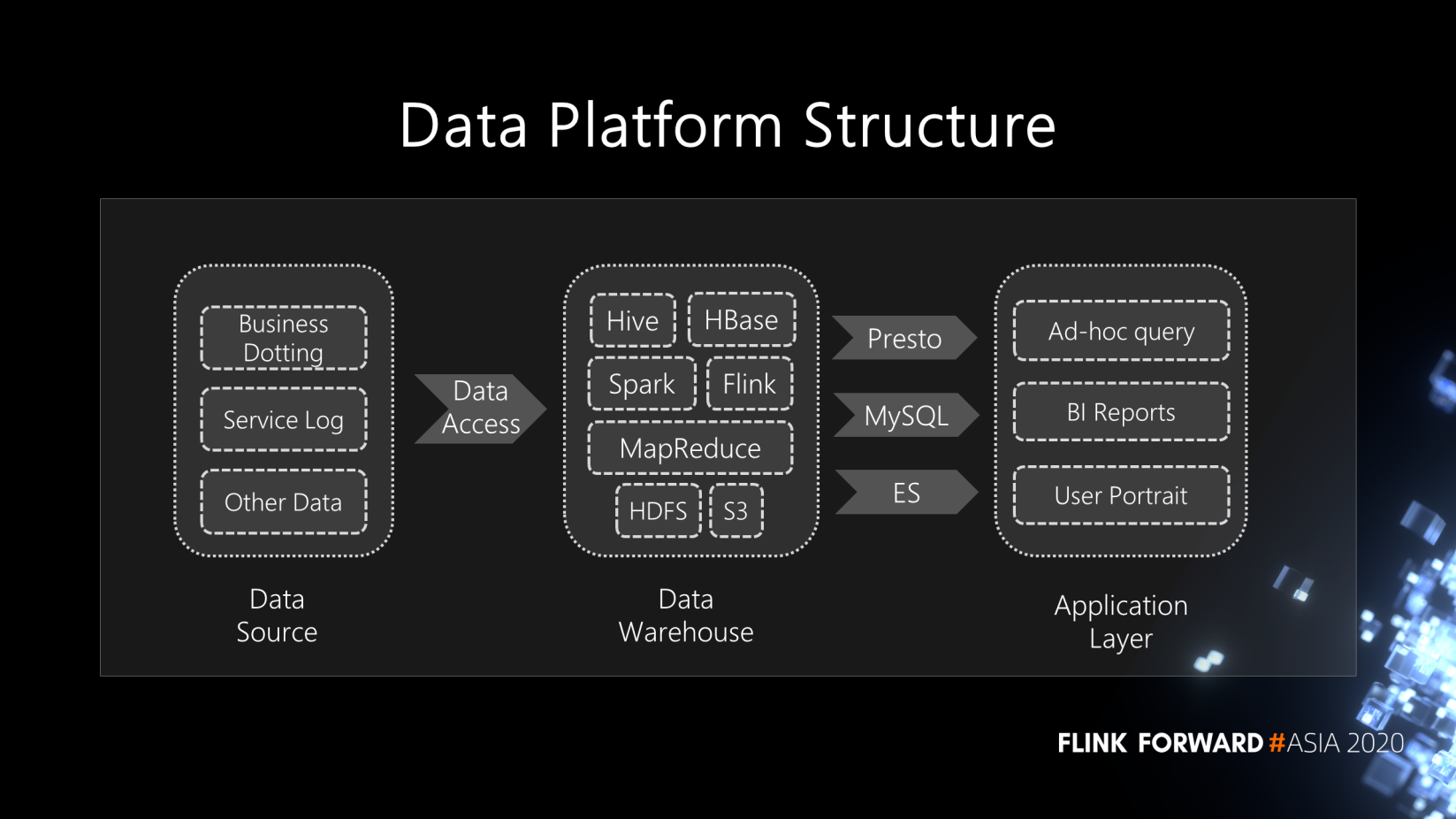

Users of these applications often use the architecture in figure 2 to build their own data analysis platforms.

On the business side, teams such as Tencent Kandian or WeChat Video usually collect front-end tracking (event) data and service log data. This data is ingested into the data warehouse or the real-time computing engine through message-oriented middleware (Kafka/RocketMQ) or data synchronization services (Flume/NiFi/DataX).

A data warehouse includes various big data components, such as Hive, HBase, HDFS, and S3, and computing engines such as MapReduce, Spark, and Flink. Users build a big data storage and processing platform based on their needs. After the data is processed and analyzed on the platform, the results are saved to relational and non-relational databases that support fast queries, such as MySQL and Elasticsearch. At the application layer, users can then develop BI reports and user profiles based on this data, or run interactive queries with OLAP tools such as Presto.

Figure 2

Throughout this process, offline scheduling systems are often used to run Spark analysis tasks and data input, data output, or ETL jobs periodically (T+1 or every few hours). As a result, offline data processing inevitably introduces latency, which can range from hours to days for both data ingestion and intermediate analysis. For scenarios with real-time requirements, a separate real-time processing pipeline is also built, for example, a stream processing system based on Flink and Kafka.

Generally, the data warehouse architecture has many components, which significantly increases the complexity of the entire architecture and the cost of O&M.

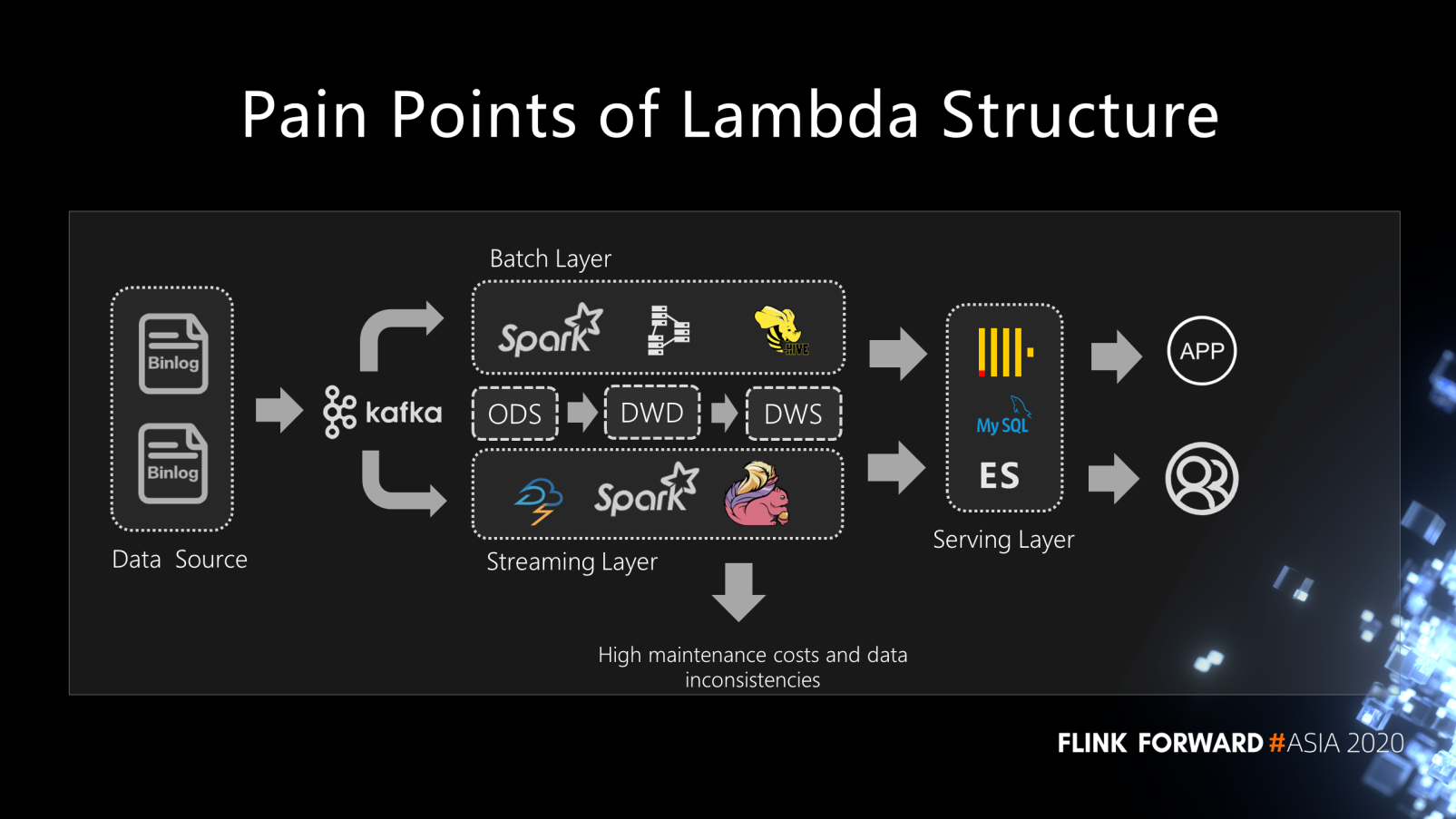

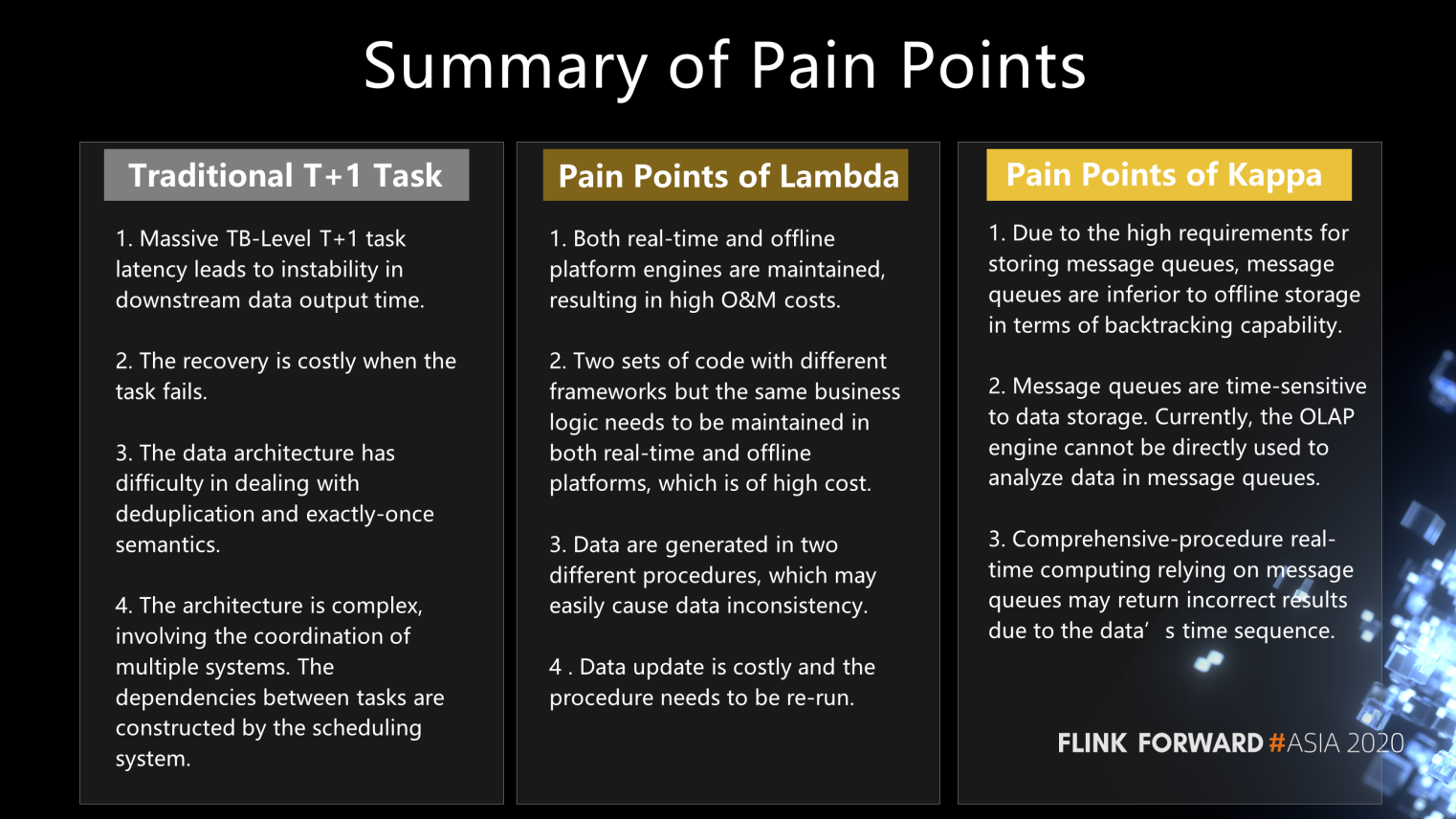

The following figure shows the lambda architecture, which many companies have used or are still using. The lambda architecture divides the data warehouse into an offline layer and a real-time layer, with two independent data processing pipelines: batch processing and stream processing. As a result, the same data is processed at least twice, and the same business logic has to be developed twice. Since the lambda architecture is well known, the following focuses on the pain points we encountered when using it to build a data warehouse.

Figure 3

For example, when computing user-related metrics in real time, the data is sent to the real-time layer so that the current PV and UV can be displayed in real time. However, to understand the user growth trend, the data of the past day must be computed, which requires a batch scheduling task: for example, a Spark task scheduled at two or three o'clock the next morning to process the whole day's data again.

Because the two pipelines process the same data at different times, their results may be inconsistent. If one or more records are updated, the entire offline analysis pipeline must be rerun, which makes data updates costly. Furthermore, maintaining both the offline and the real-time computing platforms results in high development and O&M costs.

The kappa architecture was developed to solve these problems of the lambda architecture.

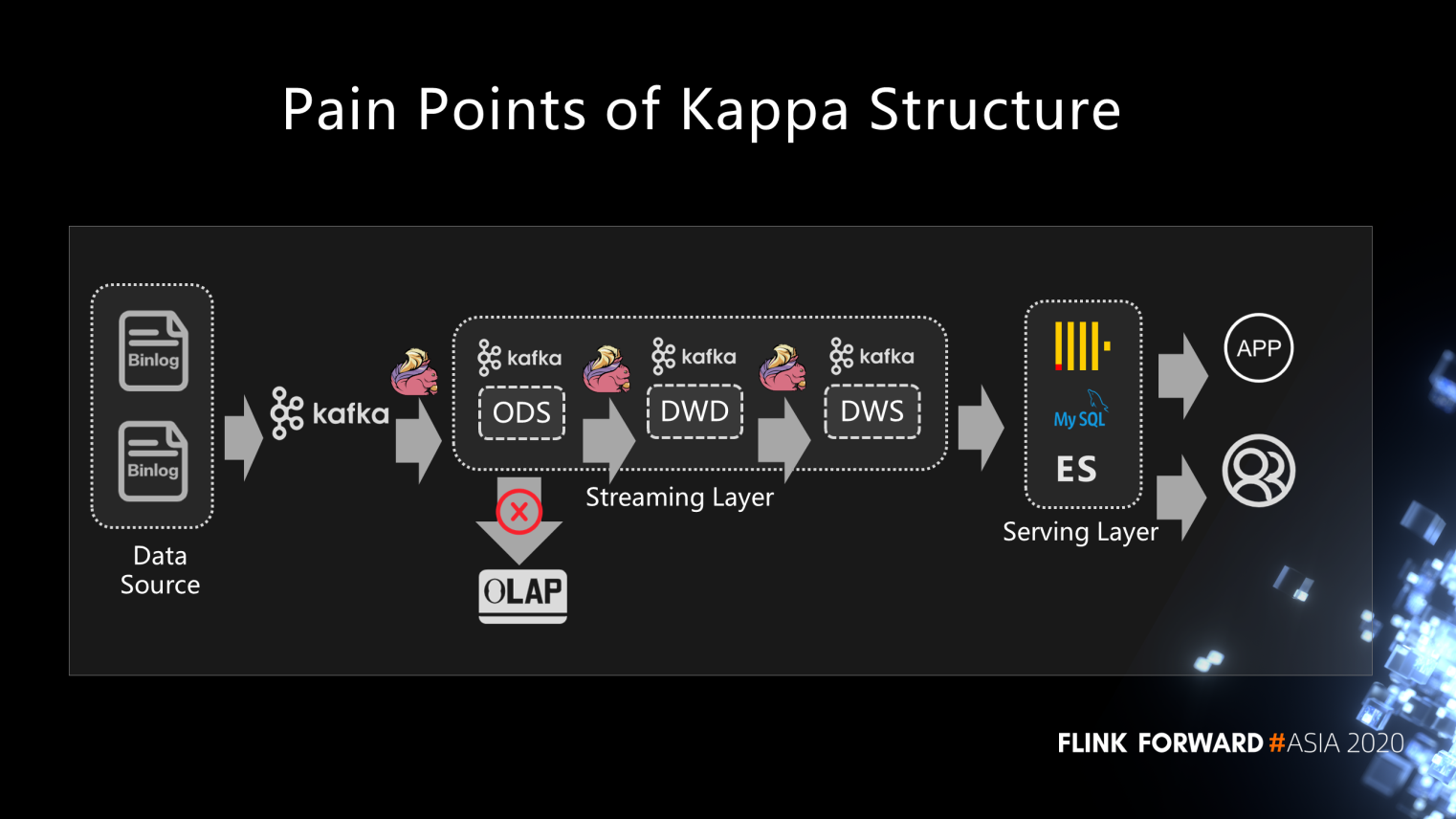

As figure 4 shows, the kappa architecture uses message queues as the intermediate layer and connects the entire pipeline with Flink. This solves the high O&M and development costs that the lambda architecture incurs by using different engines for its offline and real-time processing layers. However, the kappa architecture also has some pain points.

Is there an architecture that meets both real-time and offline computing requirements, reduces O&M and development costs, and resolves some of the pain points of building a kappa architecture on message queues? The answer is yes, as the following sections describe.

Figure 4

Figure 5

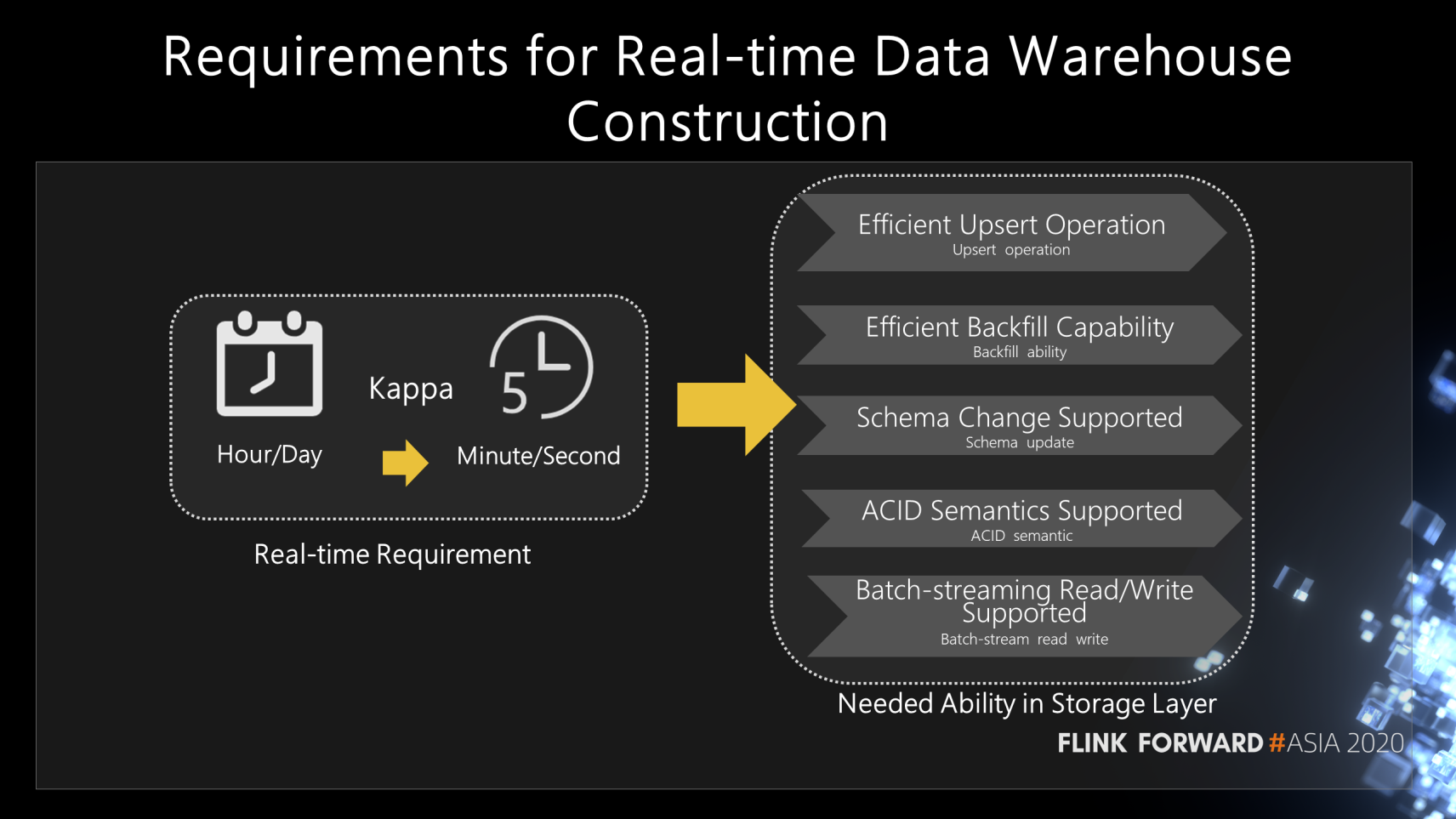

Is there a storage technology that supports efficient data backfill and updates, unified stream and batch reads and writes, and data access with latency at the minute or even second level?

Such a technology is urgently needed for building real-time data warehouses (figure 6). In fact, upgrading the kappa architecture in this way can solve some of its problems. The following sections focus on a popular data lake technology: Iceberg.

Figure 6

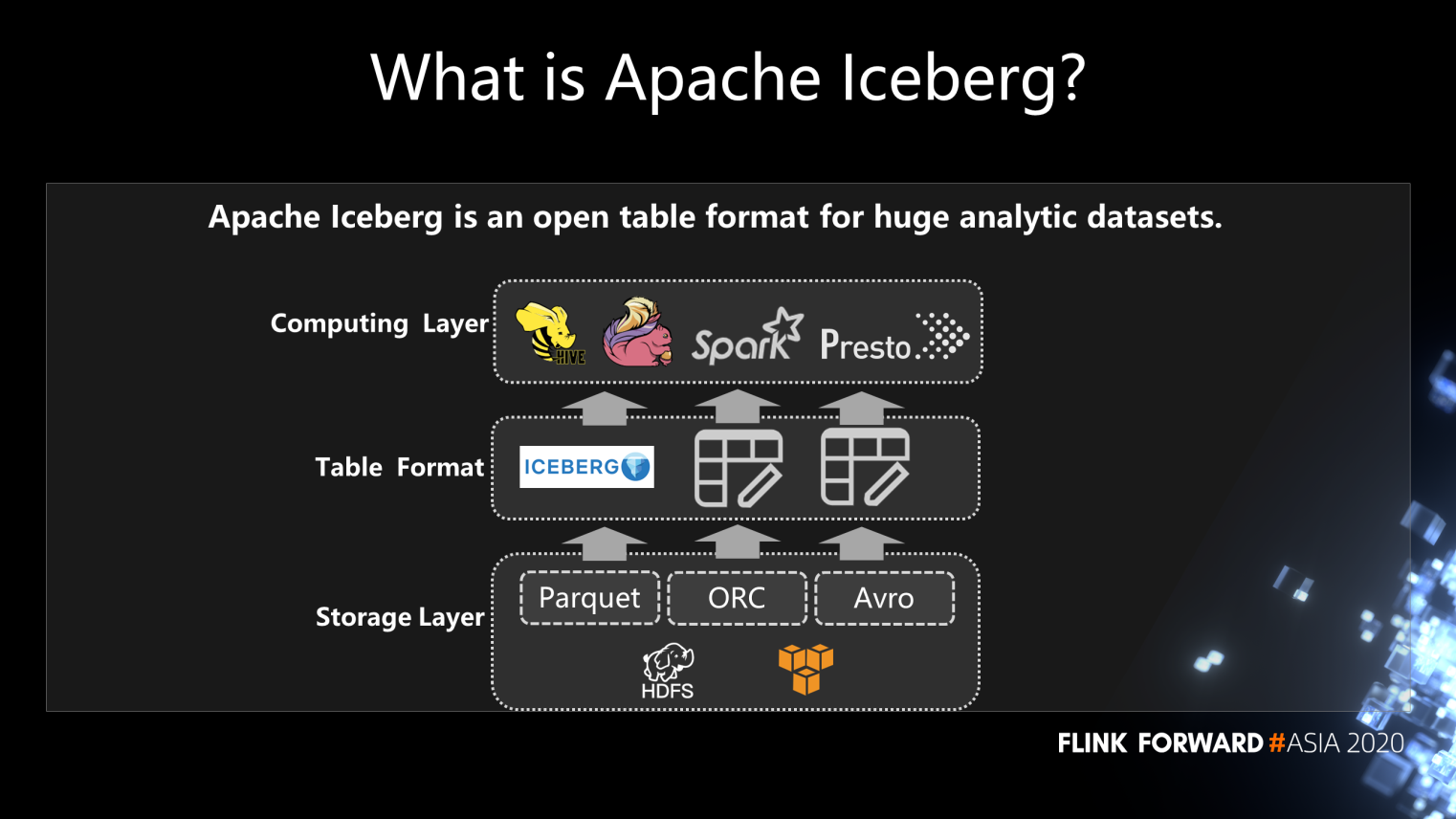

Officially, Iceberg is described as follows:

Apache Iceberg is an open table format for huge analytic datasets. Iceberg adds tables to Presto and Spark that use a high-performance format that works just like a SQL table.

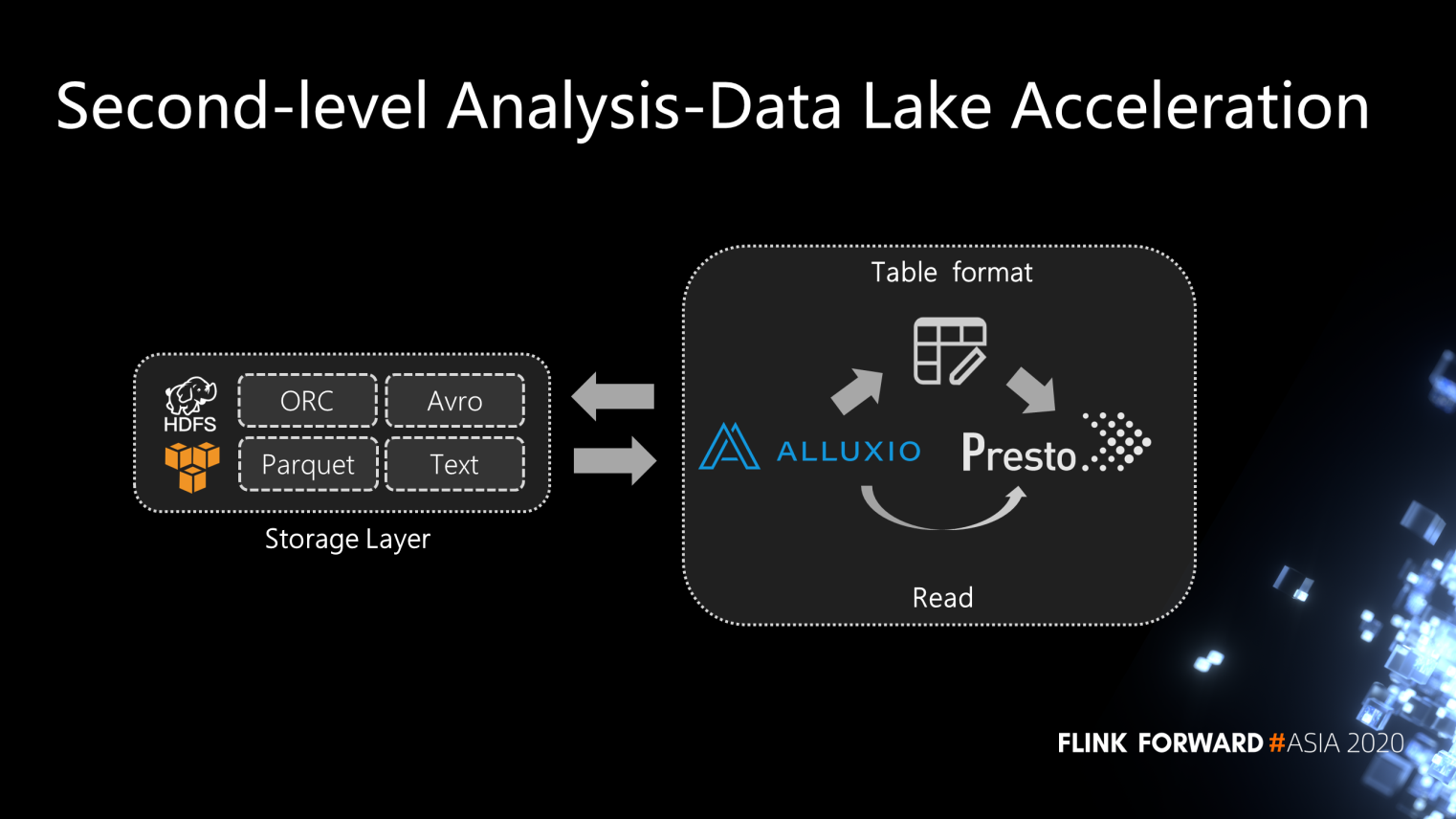

In other words, Iceberg is a table format. It can be understood simply as a middle layer between the computing layer (Flink, Spark) and the storage layer (files in ORC, Parquet, or Avro format). Data is written into Iceberg through Flink or Spark, and the table is then accessed through Spark, Flink, Presto, and other engines.

Figure 7

Designed for analyzing massive data, Iceberg is defined as a table format, which sits between the computing and storage layers.

Downward, a table format manages the files in the storage system; upward, it provides interfaces to the computing layer. Files in the storage system are organized in a specific way. Take a Hive table as an example: its data is stored in HDFS and organized into partitions, while information such as the data storage format, the compression format, and the HDFS directory of the data is kept in the Hive Metastore. The information stored in the Metastore can be regarded as a file organization format.

A well-designed file organization format such as Iceberg lets the upper computing layer access files on disk more efficiently and perform operations such as list, rename, and search.

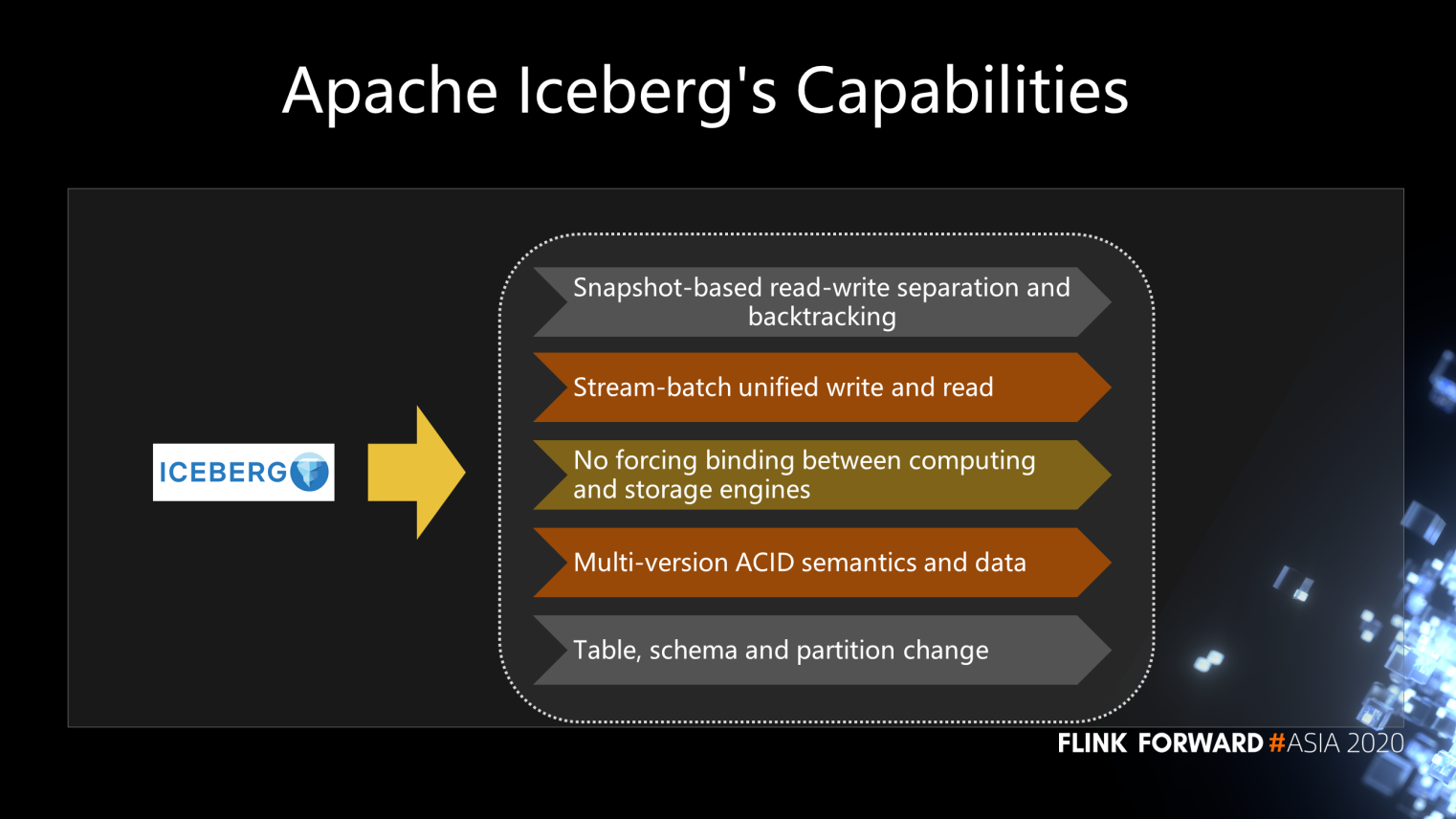

Iceberg currently supports three file formats: Parquet, Avro, and ORC. As figure 7 shows, files in HDFS and S3 can be stored in row-based or columnar format, which we will discuss in detail later. Figure 8 summarizes the features of Iceberg itself. These capabilities are essential for building a real-time data warehouse with Iceberg.

Figure 8

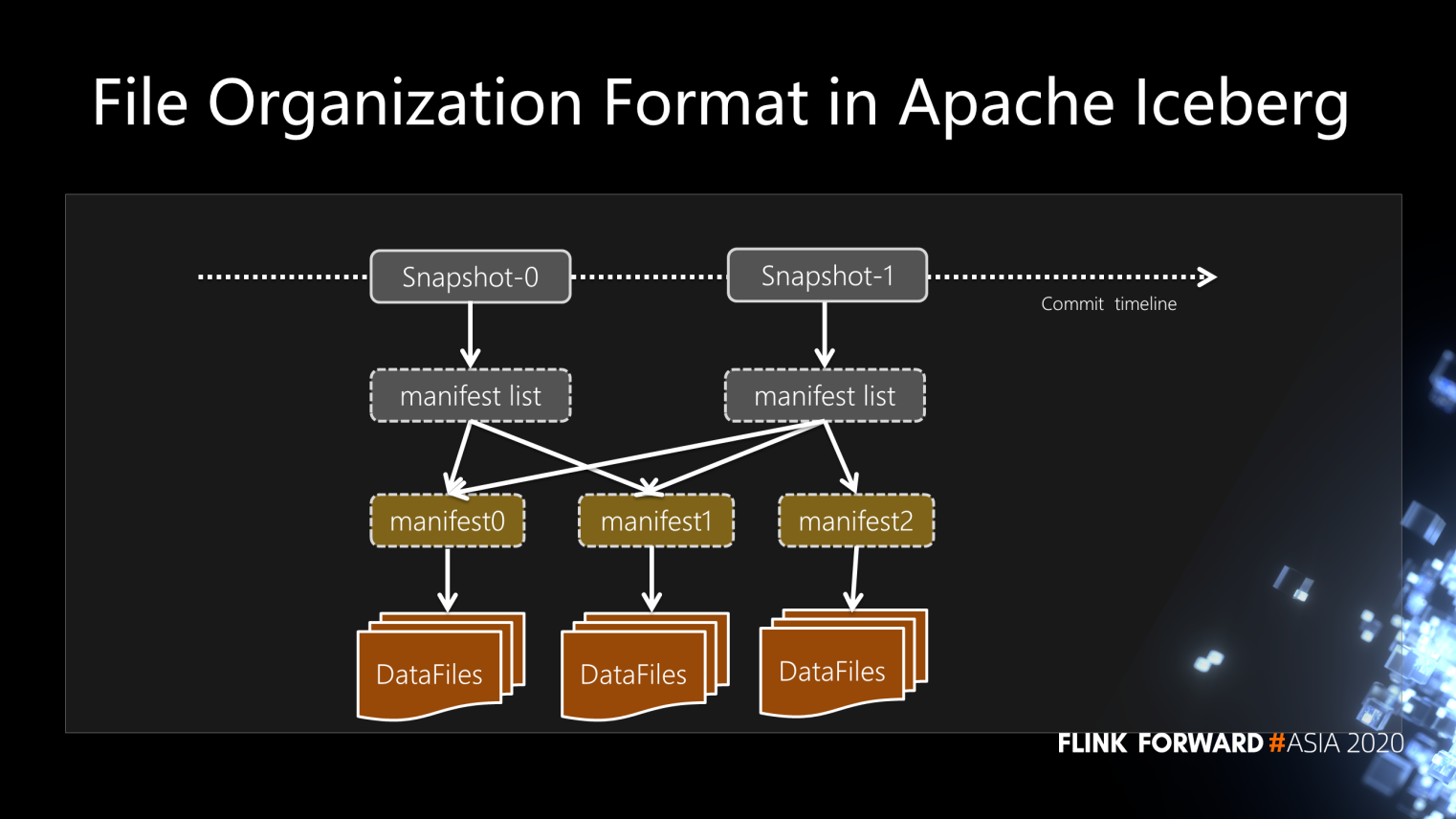

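The following figure shows Iceberg's overall file organization. From top to bottom, a snapshot points to a manifest list, the manifest list points to manifest files, and each manifest file tracks a set of data files.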

The figure also shows that Snapshot-1 contains all the data of Snapshot-0, while the data newly added in Snapshot-1 is tracked only by manifest2. This provides good support for incremental reads.
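To make this layout concrete, the following Python sketch models snapshots and manifests in a highly simplified way. It is not Iceberg's implementation: `Manifest`, `Snapshot`, `append`, `all_files`, and `added_files` are hypothetical names, and the manifest list is folded into the snapshot object. It shows how a child snapshot can expose all of its parent's data by reusing the parent's manifests, while an incremental read only needs to look at the new manifest.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Manifest:
    """Simplified manifest file: a named, immutable list of data file paths."""
    name: str
    data_files: Tuple[str, ...]


@dataclass(frozen=True)
class Snapshot:
    """Simplified snapshot: its manifests stand in for the manifest list."""
    snapshot_id: int
    parent_id: Optional[int]
    manifests: Tuple[Manifest, ...]


def append(parent: Optional[Snapshot], snapshot_id: int,
           manifest_name: str, new_files: List[str]) -> Snapshot:
    """Create a child snapshot that reuses every parent manifest and adds one new manifest."""
    inherited = parent.manifests if parent else ()
    new_manifest = Manifest(manifest_name, tuple(new_files))
    return Snapshot(snapshot_id, parent.snapshot_id if parent else None,
                    inherited + (new_manifest,))


def all_files(snapshot: Snapshot) -> List[str]:
    """Full read: every data file reachable from the snapshot."""
    return [f for m in snapshot.manifests for f in m.data_files]


def added_files(parent: Optional[Snapshot], child: Snapshot) -> List[str]:
    """Incremental read: only files in manifests the parent does not already have."""
    known = set(parent.manifests) if parent else set()
    return [f for m in child.manifests if m not in known for f in m.data_files]


s0 = append(None, 0, "manifest1", ["a.parquet", "b.parquet"])
s1 = append(s0, 1, "manifest2", ["c.parquet"])
print(all_files(s1))        # ['a.parquet', 'b.parquet', 'c.parquet']
print(added_files(s0, s1))  # ['c.parquet']
```

Because manifests are immutable and shared, creating Snapshot-1 does not copy or rewrite anything that Snapshot-0 already tracks.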

Figure 9

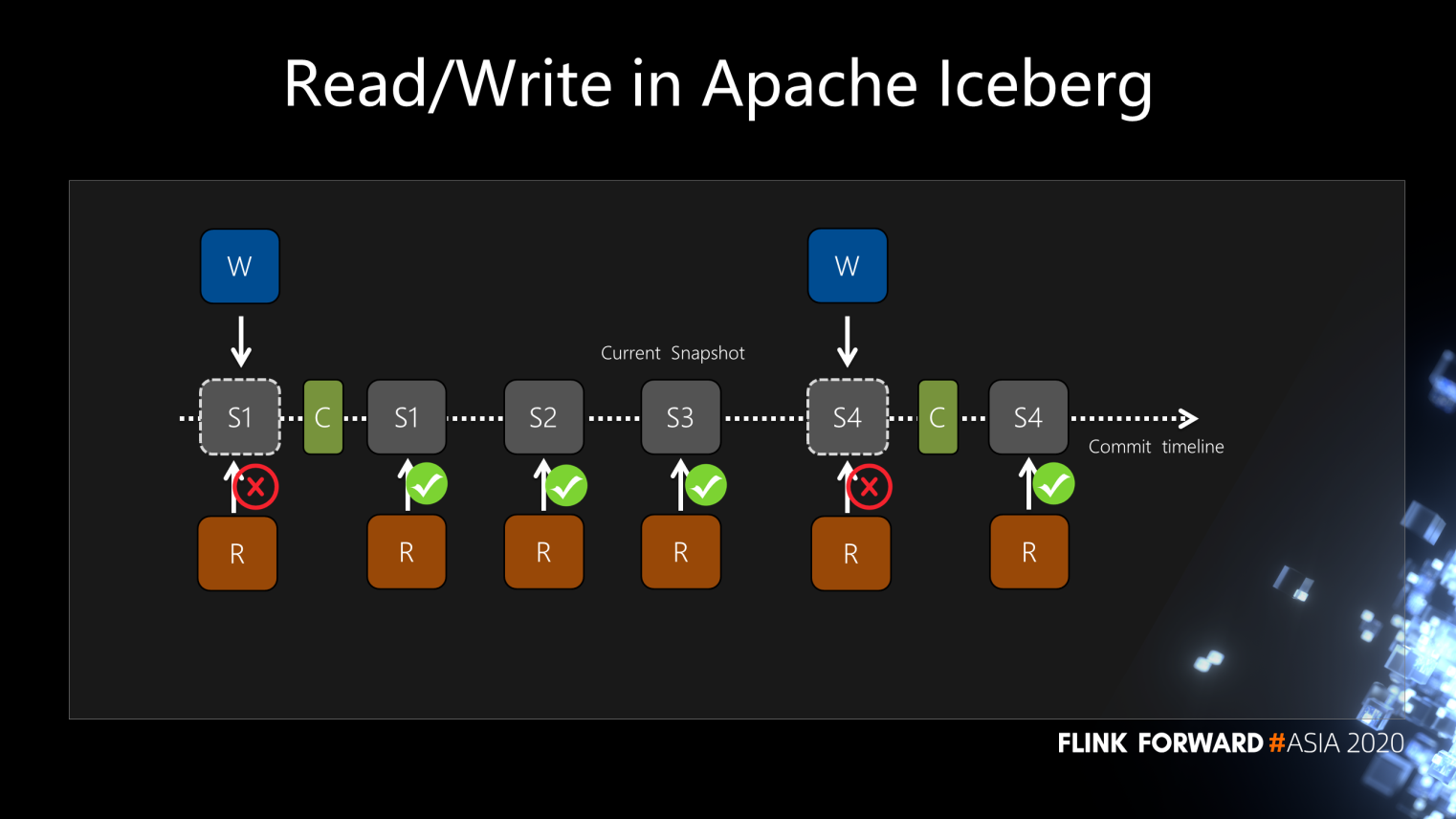

First, consider writes. The dashed S1 box in figure 10 indicates that Snapshot-1 has not been committed yet, so it is not readable: users can read a snapshot only after it has been committed. The same applies to Snapshot-2 and Snapshot-3.

Read-write separation is an important feature of Iceberg: writing Snapshot-4 does not affect reads of Snapshot-2 and Snapshot-3 at all. This is one of the most important capabilities for building real-time data warehouses.

Figure 10

Reads can also be concurrent. The data of S1, S2, and S3 can be read at the same time, which makes it possible to go back and read the data of Snapshot-2 or Snapshot-3. When Snapshot-4 has been written, a commit is performed, and Snapshot-4 becomes readable, as the solid box in figure 10 indicates. By default, reading a table returns the snapshot that the Current Snapshot pointer points to, but reads of earlier snapshots are not affected.
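The visibility rules above can be illustrated with a toy Python model. `ToyTable` and its `write`, `commit`, and `read` methods are hypothetical and only mimic the behavior described here: a write stages a snapshot that no reader can see, a commit only moves the current-snapshot pointer, and any committed snapshot can still be read. Concurrent writers are deliberately ignored; real Iceberg resolves them with optimistic concurrency when committing.

```python
from typing import Dict, List, Optional


class ToyTable:
    """Toy model of snapshot visibility; not Iceberg's implementation."""

    def __init__(self) -> None:
        self._committed: Dict[int, List[str]] = {}  # snapshot id -> visible files
        self._pending: Dict[int, List[str]] = {}    # written but not yet committed
        self.current_id: Optional[int] = None       # the "Current Snapshot" pointer
        self._next_id = 1

    def write(self, files: List[str]) -> int:
        """Stage a new snapshot on top of the current one (the dashed box)."""
        base = self._committed.get(self.current_id, [])
        sid = self._next_id
        self._next_id += 1
        self._pending[sid] = base + list(files)
        return sid

    def commit(self, sid: int) -> None:
        """Publish a staged snapshot by moving the current pointer (the solid box)."""
        self._committed[sid] = self._pending.pop(sid)
        self.current_id = sid

    def read(self, sid: Optional[int] = None) -> List[str]:
        """Read the current snapshot by default, or any committed one (time travel)."""
        target = self.current_id if sid is None else sid
        if target not in self._committed:
            raise KeyError(f"snapshot {target} is not committed")
        return list(self._committed[target])


t = ToyTable()
for name in ["f1", "f2", "f3"]:  # Snapshot-1 .. Snapshot-3
    t.commit(t.write([name]))
s4 = t.write(["f4"])             # Snapshot-4 staged, not yet visible
print(t.read())                  # ['f1', 'f2', 'f3']
print(t.read(2))                 # ['f1', 'f2']
t.commit(s4)
print(t.read())                  # ['f1', 'f2', 'f3', 'f4']
```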

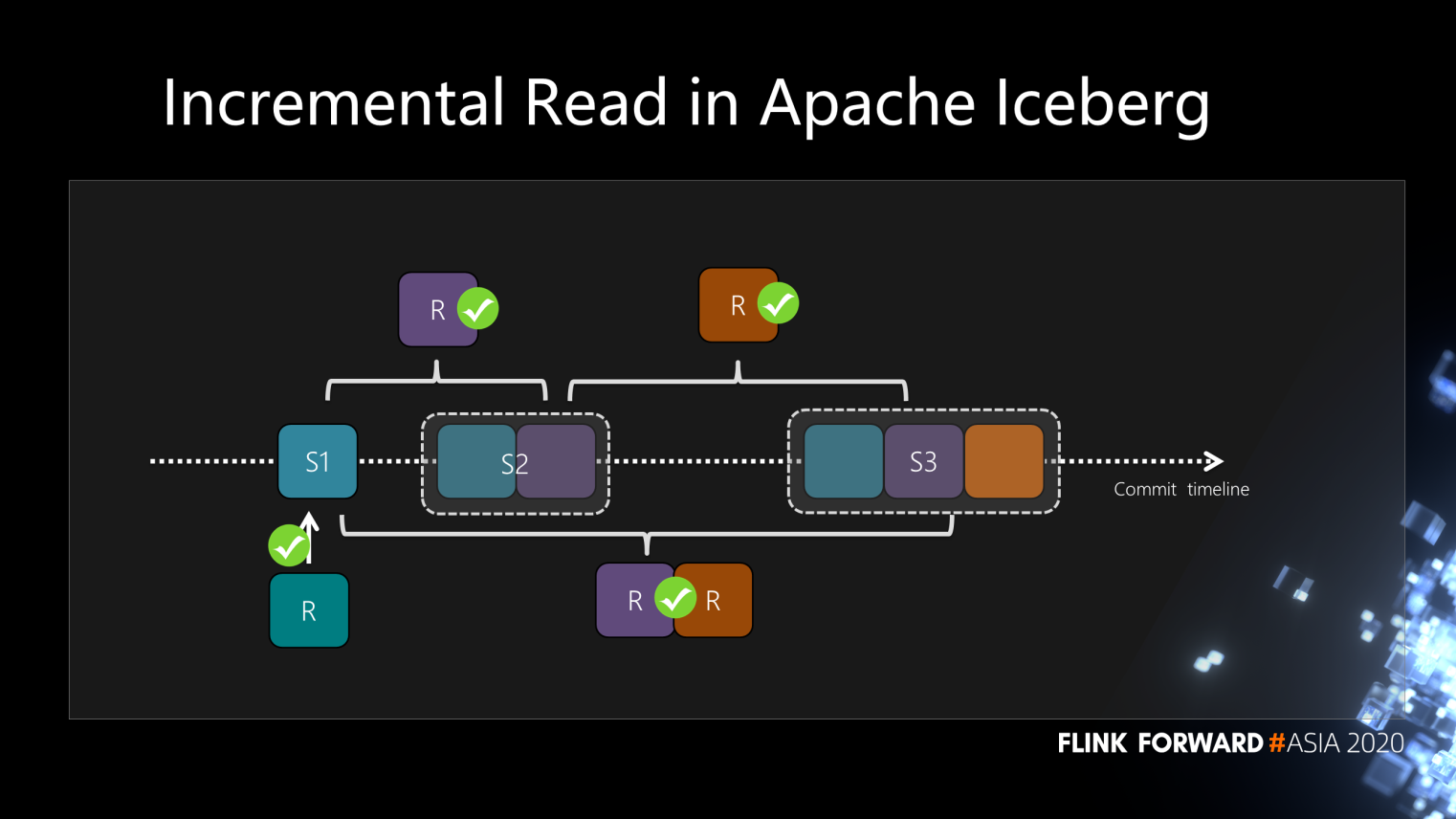

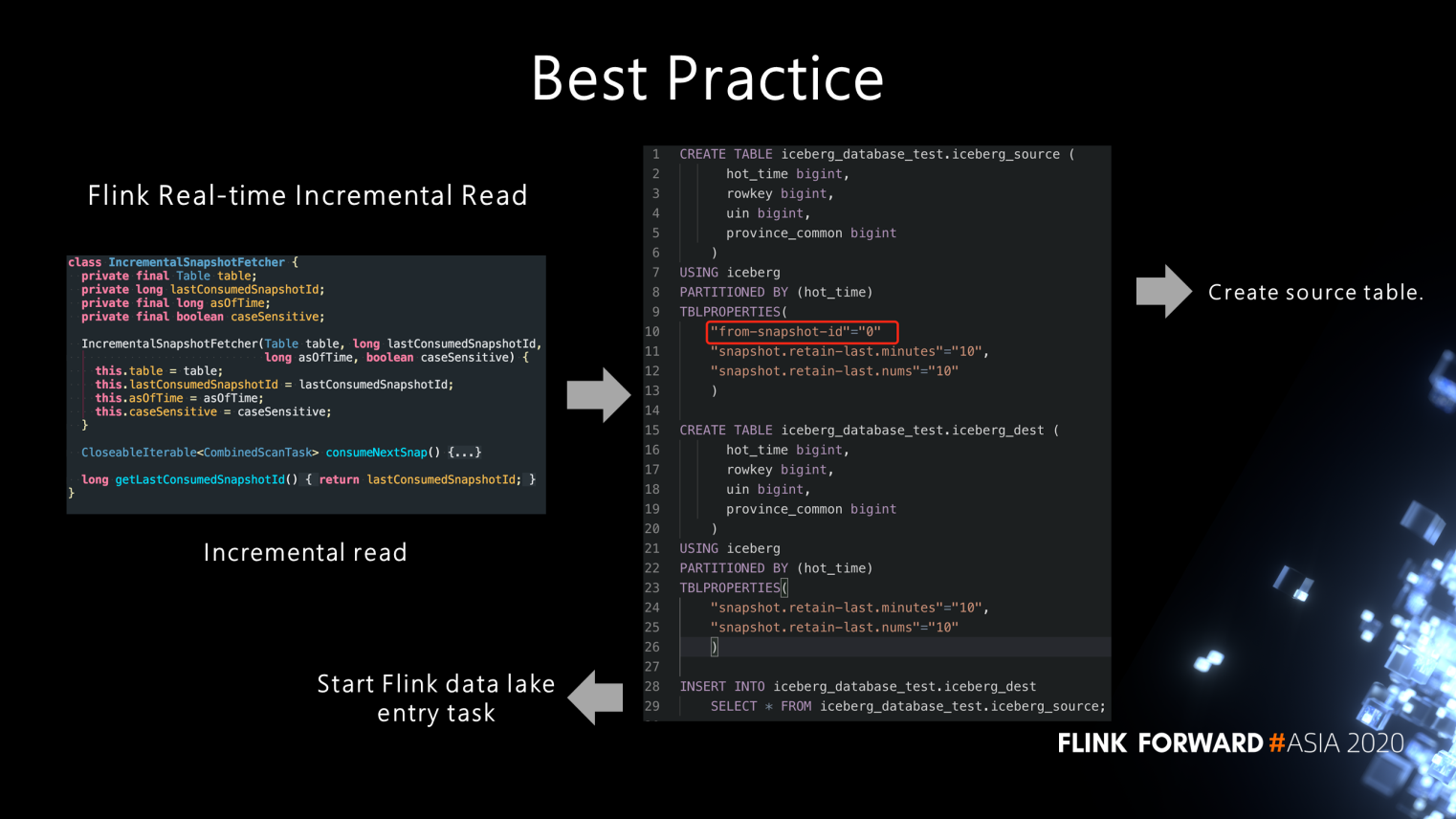

This section describes incremental reads in Iceberg. Suppose a read is first based on the committed Snapshot-1, and then Snapshot-2 is committed. Each snapshot contains all the data of the previous snapshot, so reading the full data every time would be very costly for the computing engines across the end-to-end pipeline.

If only the newly added data is needed, Iceberg's snapshot traceback mechanism can be used to read only the incremental data between Snapshot-1 and Snapshot-2, shown in purple in figure 11.

Figure 11

Similarly, for S3, only the data colored in yellow, that is, the incremental data from S1 to S3, needs to be read. We have implemented this incremental read internally in a Streaming Reader based on the Flink source, and it is already running in production. Here is the key point: since Iceberg already provides read-write separation, concurrent reads, and incremental reads, connecting Iceberg with Flink also requires an Iceberg Sink.
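An incremental read between two snapshots can be sketched as a walk along the snapshot lineage. In the following Python sketch, the snapshot log format and `incremental_files` are assumptions made for illustration; in the open-source Iceberg Java API, a comparable append-only scan is exposed by `TableScan.appendsBetween(fromSnapshotId, toSnapshotId)`.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical snapshot log: snapshot id -> (parent snapshot id, files added by it).
SnapshotLog = Dict[int, Tuple[Optional[int], List[str]]]


def incremental_files(log: SnapshotLog, from_id: int, to_id: int) -> List[str]:
    """Return the files added after `from_id`, up to and including `to_id`.

    Walks the parent chain back from `to_id` and stops at `from_id`, so only the
    increment is read instead of every file visible in `to_id`.
    """
    increments: List[List[str]] = []
    sid: Optional[int] = to_id
    while sid != from_id:
        if sid is None:
            raise ValueError(f"snapshot {from_id} is not an ancestor of {to_id}")
        parent_id, added = log[sid]
        increments.append(added)
        sid = parent_id
    # The walk collected increments newest-first; emit them in commit order.
    return [f for added in reversed(increments) for f in added]


log: SnapshotLog = {1: (None, ["a", "b"]), 2: (1, ["c"]), 3: (2, ["d", "e"])}
print(incremental_files(log, 1, 2))  # ['c']
print(incremental_files(log, 1, 3))  # ['c', 'd', 'e']
```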

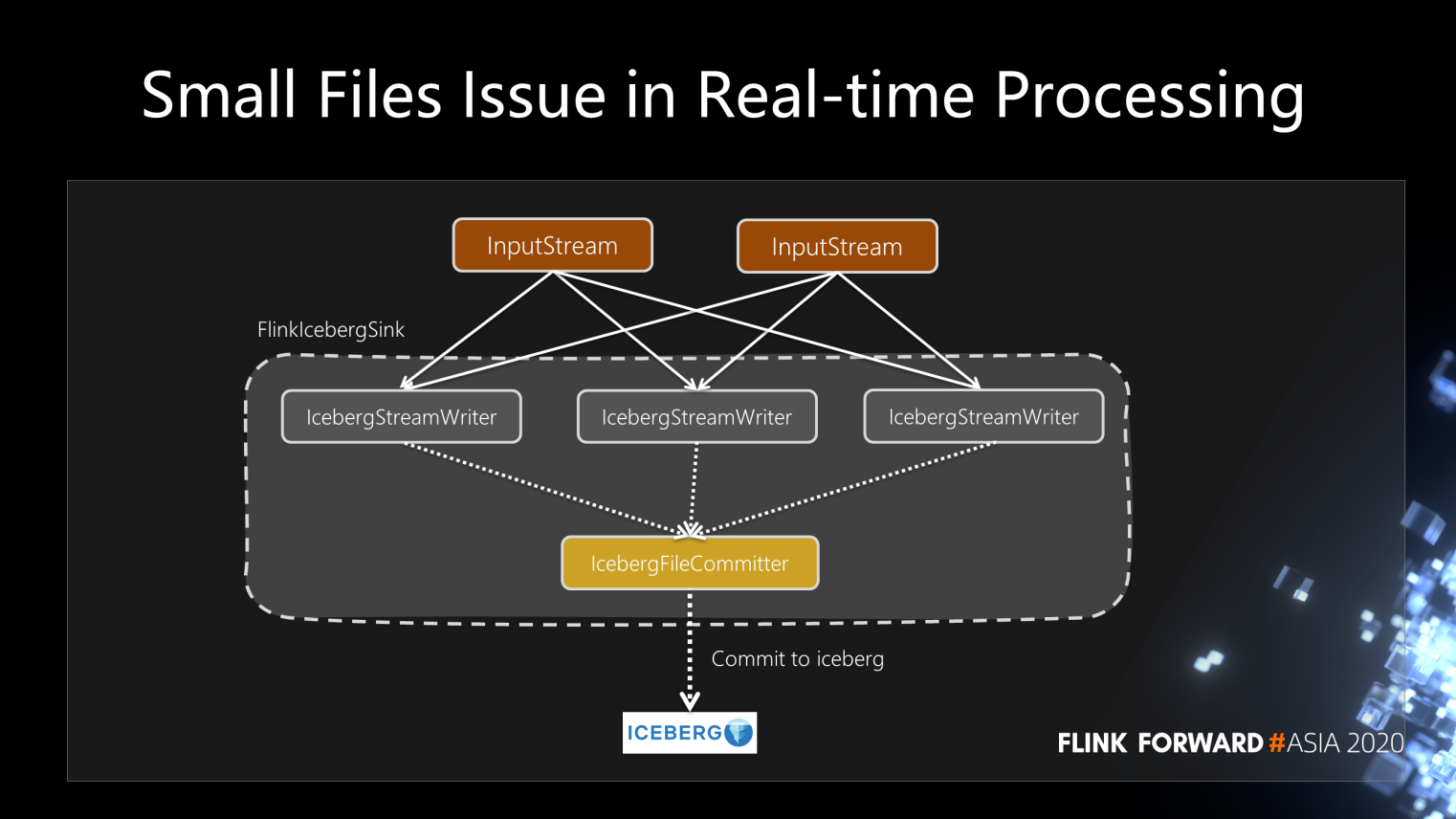

The community has refactored the Flink Iceberg Sink and implemented a global committer. Our architecture is consistent with the community's. The dashed box in figure 12 shows the Flink Iceberg Sink.

The sink consists of multiple IcebergStreamWriters and a single IcebergFileCommitter. When upstream data arrives at an IcebergStreamWriter, the writer writes it into DataFiles.

Figure 12

When a writer finishes writing the current batch of small DataFiles, it sends a message to IcebergFileCommitter indicating that the DataFiles are ready to be committed. After IcebergFileCommitter receives these messages, it commits the DataFiles in a single operation.

The commit itself only modifies metadata: it turns data that has already been written to disk from invisible to visible. Therefore, Iceberg needs only one commit to complete this transition.
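The writer/committer split can be sketched in Python as follows. `ToyStreamWriter`, `ToyFileCommitter`, and `run_checkpoint` are hypothetical stand-ins for IcebergStreamWriter and IcebergFileCommitter, and each writer is assumed to close one DataFile per cycle. In the open-source sink this hand-off is tied to Flink checkpoints, which is why the sketch groups it by checkpoint ID: however many writers there are, each cycle ends with one commit and therefore one new snapshot.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToyStreamWriter:
    """Hypothetical stand-in for one IcebergStreamWriter subtask."""
    subtask: int
    buffered: List[str] = field(default_factory=list)
    file_seq: int = 0

    def write(self, record: str) -> None:
        self.buffered.append(record)

    def flush(self, checkpoint_id: int) -> List[str]:
        """Close the current DataFile and report its path to the committer."""
        if not self.buffered:
            return []
        self.file_seq += 1
        self.buffered.clear()  # records are now "on disk" but not yet visible
        return [f"ckp{checkpoint_id}-writer{self.subtask}-{self.file_seq}.parquet"]


@dataclass
class ToyFileCommitter:
    """Hypothetical stand-in for the single IcebergFileCommitter (parallelism 1)."""
    snapshots: List[List[str]] = field(default_factory=list)

    def commit(self, data_files: List[str]) -> None:
        """One metadata-only commit makes the whole batch visible at once."""
        if data_files:
            self.snapshots.append(sorted(data_files))


def run_checkpoint(writers: List[ToyStreamWriter], committer: ToyFileCommitter,
                   checkpoint_id: int) -> None:
    """Collect the files from every writer, then commit them together."""
    ready: List[str] = []
    for writer in writers:
        ready.extend(writer.flush(checkpoint_id))
    committer.commit(ready)


writers = [ToyStreamWriter(i) for i in range(3)]
committer = ToyFileCommitter()
for i, record in enumerate(["r1", "r2", "r3", "r4"]):
    writers[i % 3].write(record)
run_checkpoint(writers, committer, checkpoint_id=1)
print(len(committer.snapshots))  # 1: three DataFiles, one snapshot
```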

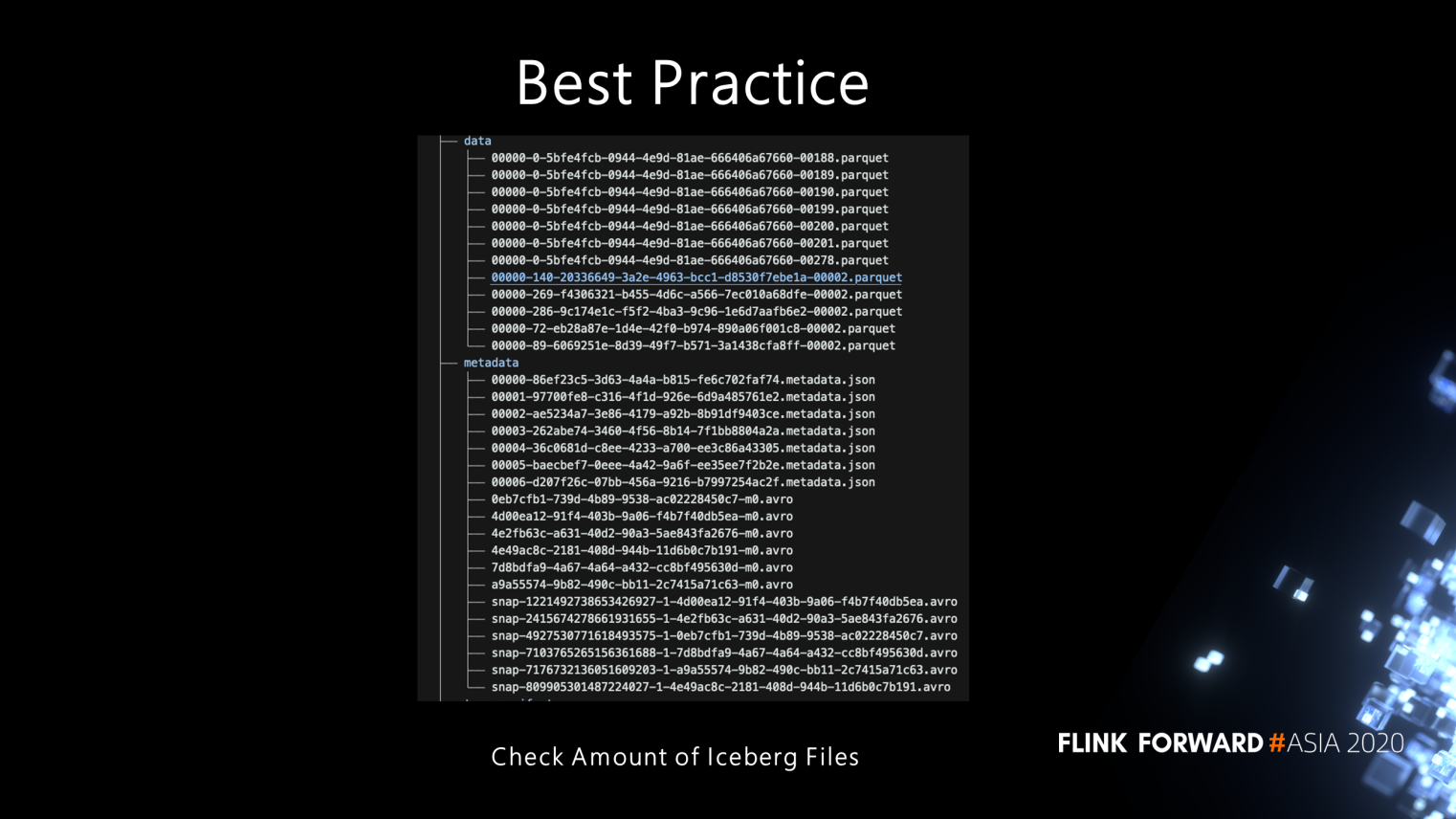

Flink real-time jobs often run in clusters for long periods. To keep the data fresh, the Iceberg commit is usually performed every 30 seconds or every minute. With one commit per minute, a Flink job that runs for a day performs 1,440 commits, and the number grows much larger if the job runs for a month. The shorter the commit interval, the more snapshots are generated, and a streaming job produces a large number of small files as it runs.
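The arithmetic behind these numbers is easy to check. The sketch below counts commits for a given run time and commit interval, and adds a lower bound on data files under the assumption (not stated in the article) that every writer subtask closes at least one file per commit. The writer parallelism of 10 in the last line is an illustrative value.

```python
from typing import Tuple


def commits_and_min_files(run_hours: int, commit_interval_s: int,
                          writer_parallelism: int) -> Tuple[int, int]:
    """Commits in `run_hours`, plus a lower bound on data files produced.

    Assumption: every writer subtask closes at least one data file per commit.
    """
    commits = run_hours * 3600 // commit_interval_s
    return commits, commits * writer_parallelism


print(commits_and_min_files(24, 60, 1))       # (1440, 1440): one day, 1-minute commits
print(commits_and_min_files(24, 30, 1))       # (2880, 2880): one day, 30-second commits
print(commits_and_min_files(24 * 30, 60, 1))  # (43200, 43200): a 30-day month
print(commits_and_min_files(24, 60, 10))      # (1440, 14400): 10 writer subtasks
```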

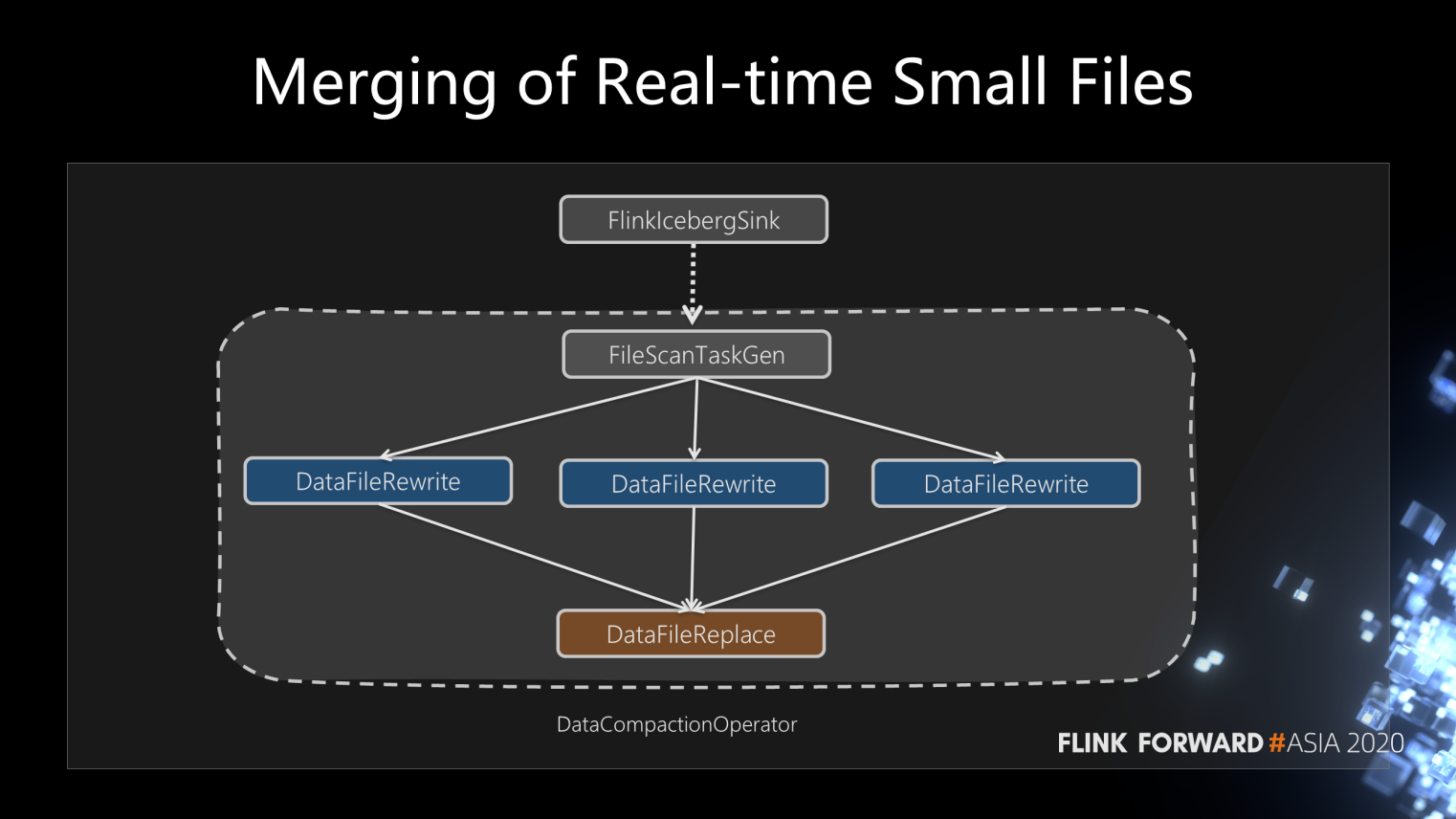

If this problem is not solved, the Iceberg Sink on Flink is not usable in practice. Internally, we implemented an operator called DataCompactionOperator that runs alongside the Flink Sink. Each time a commit completes, a message is sent to the downstream FileScanTaskGen to notify it that the commit has finished.

Figure 13

FileScanTaskGen contains the logic for generating file merging tasks based on the user's configuration or the characteristics of the files currently on disk. It sends the list of files that need to be merged to DataFileRewrite. Because merging files takes time, the files are distributed asynchronously to multiple rewrite operator subtasks.

As with the commit described above, the rewritten files must be committed as a new Iceberg snapshot. Because this is also an Iceberg commit, it runs in a single-parallelism operator, just like the commit operation.
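The compaction chain described above (commit notification, FileScanTaskGen, parallel DataFileRewrite subtasks, and a single-parallelism commit) can be sketched in Python. All function names and size thresholds are hypothetical, and the file rewrite is only simulated. Grouping uses first-fit-decreasing bin packing, a common choice for this kind of planning that is not necessarily what Tencent's FileScanTaskGen does; a thread pool stands in for the parallel rewrite subtasks, and the final list replacement stands in for the single commit.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

DataFile = Tuple[str, int]  # (path, size in bytes)


def plan_groups(files: List[DataFile], small_file_bytes: int,
                target_bytes: int) -> List[List[DataFile]]:
    """FileScanTaskGen-like planning: bin-pack small files into groups of at most target_bytes."""
    small = sorted((f for f in files if f[1] < small_file_bytes),
                   key=lambda f: f[1], reverse=True)
    groups: List[List[DataFile]] = []
    used: List[int] = []
    for f in small:
        for i in range(len(groups)):  # first fit: the first group with room
            if used[i] + f[1] <= target_bytes:
                groups[i].append(f)
                used[i] += f[1]
                break
        else:  # no group had room: open a new one
            groups.append([f])
            used.append(f[1])
    # Rewriting a single file on its own gains nothing, so drop such groups.
    return [g for g in groups if len(g) > 1]


def rewrite(group: List[DataFile], index: int) -> DataFile:
    """DataFileRewrite-like step; here the merge is only simulated."""
    return (f"merged-{index}.parquet", sum(size for _, size in group))


def compact(files: List[DataFile], small_file_bytes: int, target_bytes: int,
            parallelism: int = 4) -> List[DataFile]:
    """Plan, rewrite groups in parallel, then apply one commit for all of them."""
    groups = plan_groups(files, small_file_bytes, target_bytes)
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        merged = list(pool.map(rewrite, groups, range(len(groups))))
    # Single commit: every rewritten input is swapped for the outputs in one step.
    replaced = {f for g in groups for f in g}
    return [f for f in files if f not in replaced] + merged


files = [("a", 10), ("b", 20), ("c", 30), ("d", 500), ("e", 40)]
print(compact(files, small_file_bytes=100, target_bytes=64))
# [('d', 500), ('merged-0.parquet', 60), ('merged-1.parquet', 40)]
```

Keeping the swap in one place mirrors the single-parallelism commit: the rewrites can run concurrently, but the table only ever moves from the old file set to the new one in a single step.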

In the end-to-end pipeline, small files are currently merged through commit operations, so if a commit is blocked, the upstream write operations are affected. We will address this problem later, and we have created a design doc in the Iceberg community to discuss the merging-related work.

As described above, Iceberg supports read-write separation, concurrent reads, incremental reads, small file merging, and latency from seconds to minutes. Based on these advantages, we use Iceberg to build a Flink-based real-time data warehouse architecture that features an end-to-end real-time pipeline and unified stream and batch processing.

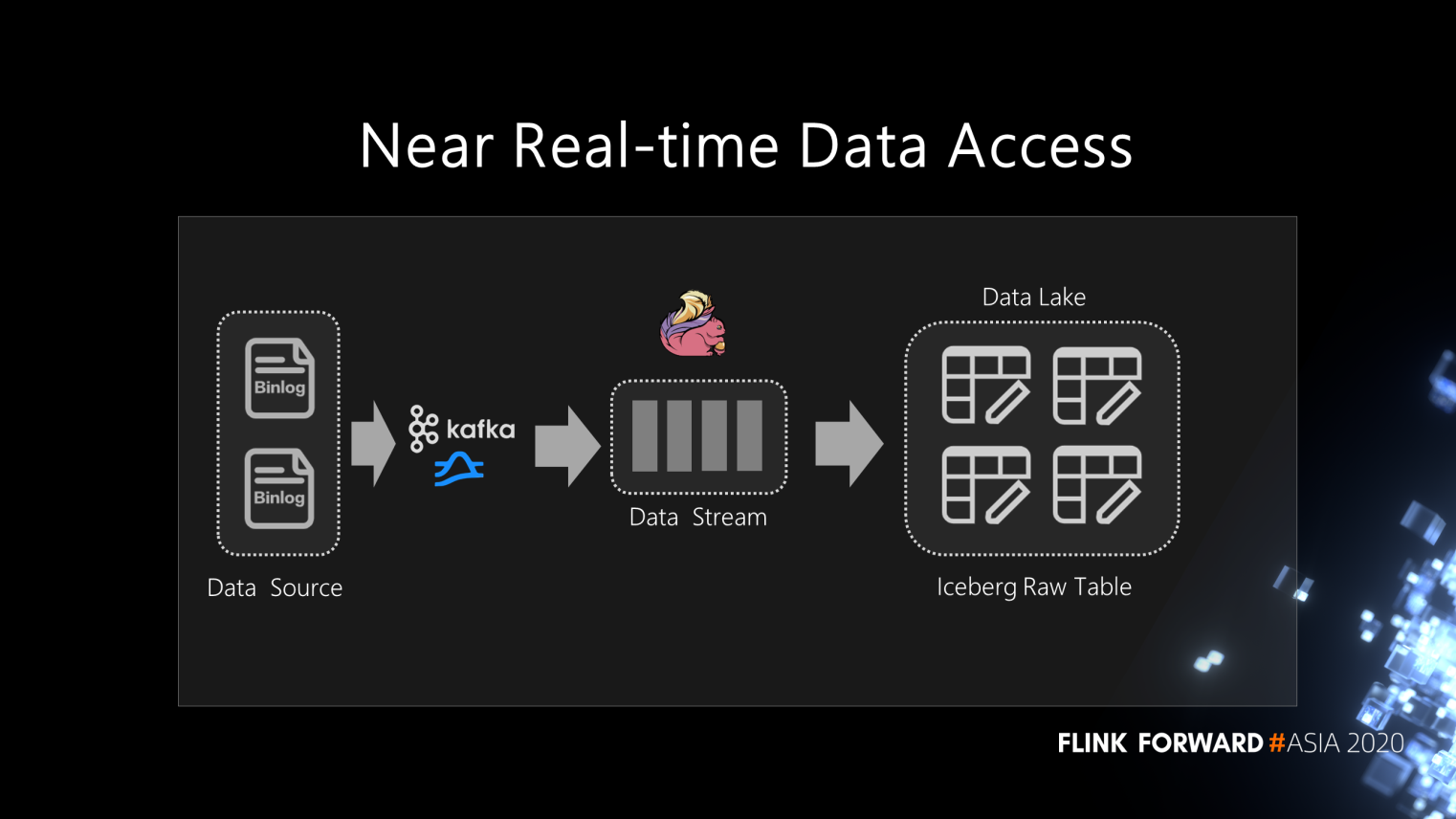

As the following figure shows, each Iceberg commit changes the visibility of data, turning it from invisible to visible. This makes near-real-time data ingestion possible.

Figure 14

Previously, data ingestion was typically done offline. For example, a scheduled Spark offline job pulls and extracts data and then writes it into a Hive table, which results in relatively high latency. With the Iceberg table format, near-real-time data ingestion can be achieved with Flink or Spark Streaming.

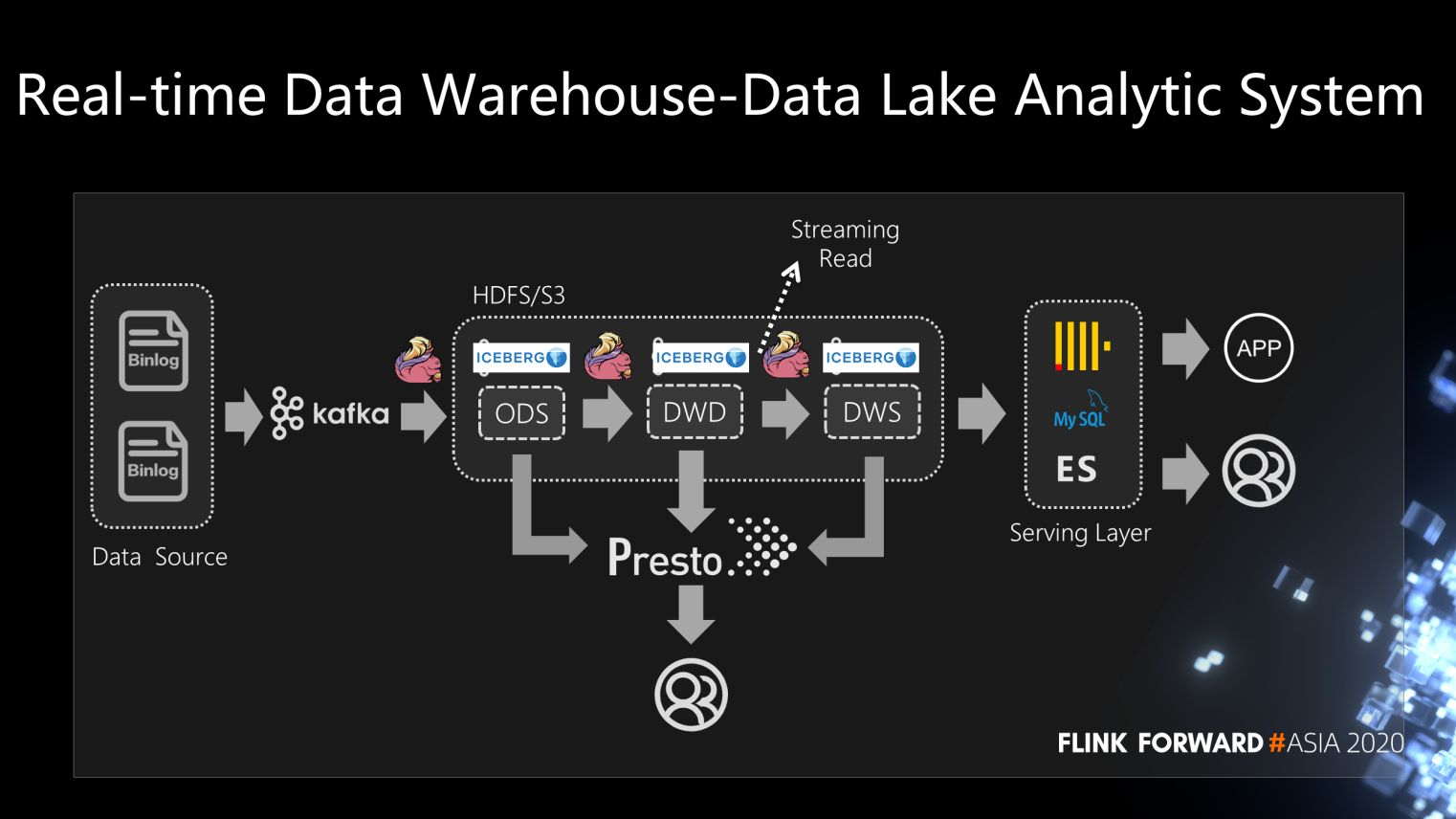

With these capabilities in mind, let's revisit the kappa architecture discussed earlier and its pain points. Since Iceberg is an excellent table format that supports both a Streaming Reader and a Streaming Sink, can it replace Kafka?

Iceberg's bottom layer relies on cheap storage such as HDFS or S3. In addition, Iceberg supports the columnar formats Parquet and ORC as well as the row-based format Avro. Columnar storage enables basic OLAP optimizations, so computation can be performed directly on the intermediate layer. For example, predicate push-down, the most basic OLAP optimization, combined with Iceberg's snapshot-based Streaming Reader, can greatly reduce the day-level or hour-level latency of offline tasks and turn them into a near-real-time data lake analytics system.
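Predicate push-down pays off because Iceberg manifests record per-column lower and upper bounds for each data file, so a scan can skip files before opening them. The following Python sketch shows that pruning step for a single integer column; `FileStats` and `prune` are hypothetical names, and real scan planning also handles nulls, other types, and partition values.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FileStats:
    """Hypothetical per-file statistics for one integer column."""
    path: str
    lower: int  # smallest value of the column in this file
    upper: int  # largest value of the column in this file


def prune(files: List[FileStats], lo: int, hi: int) -> List[str]:
    """Keep only files whose [lower, upper] range can overlap the predicate lo <= x <= hi."""
    return [f.path for f in files if f.upper >= lo and f.lower <= hi]


files = [FileStats("f1", 0, 99), FileStats("f2", 100, 199), FileStats("f3", 200, 299)]
print(prune(files, 150, 210))  # ['f2', 'f3']: f1 is skipped without being opened
```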

Figure 15

In the intermediate processing layer, users can run simple queries with Presto. Because Iceberg supports streaming reads, Flink can also connect directly to the intermediate layer to run batch or stream computing tasks, whose intermediate results are further computed and then output downstream.

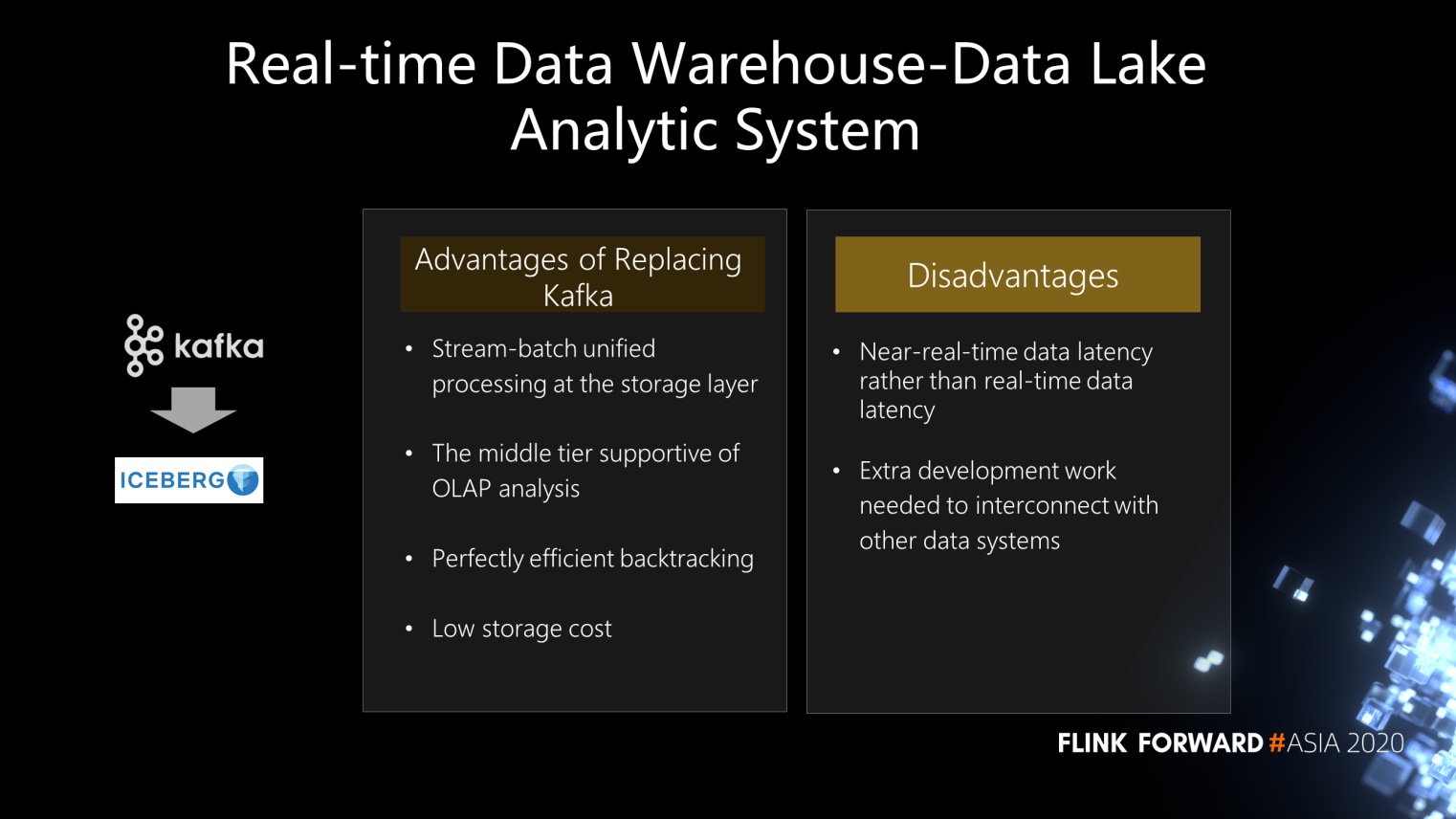

In general, the main advantages of replacing Kafka with Iceberg are as follows:

Of course, there are also some disadvantages, such as:

Figure 16

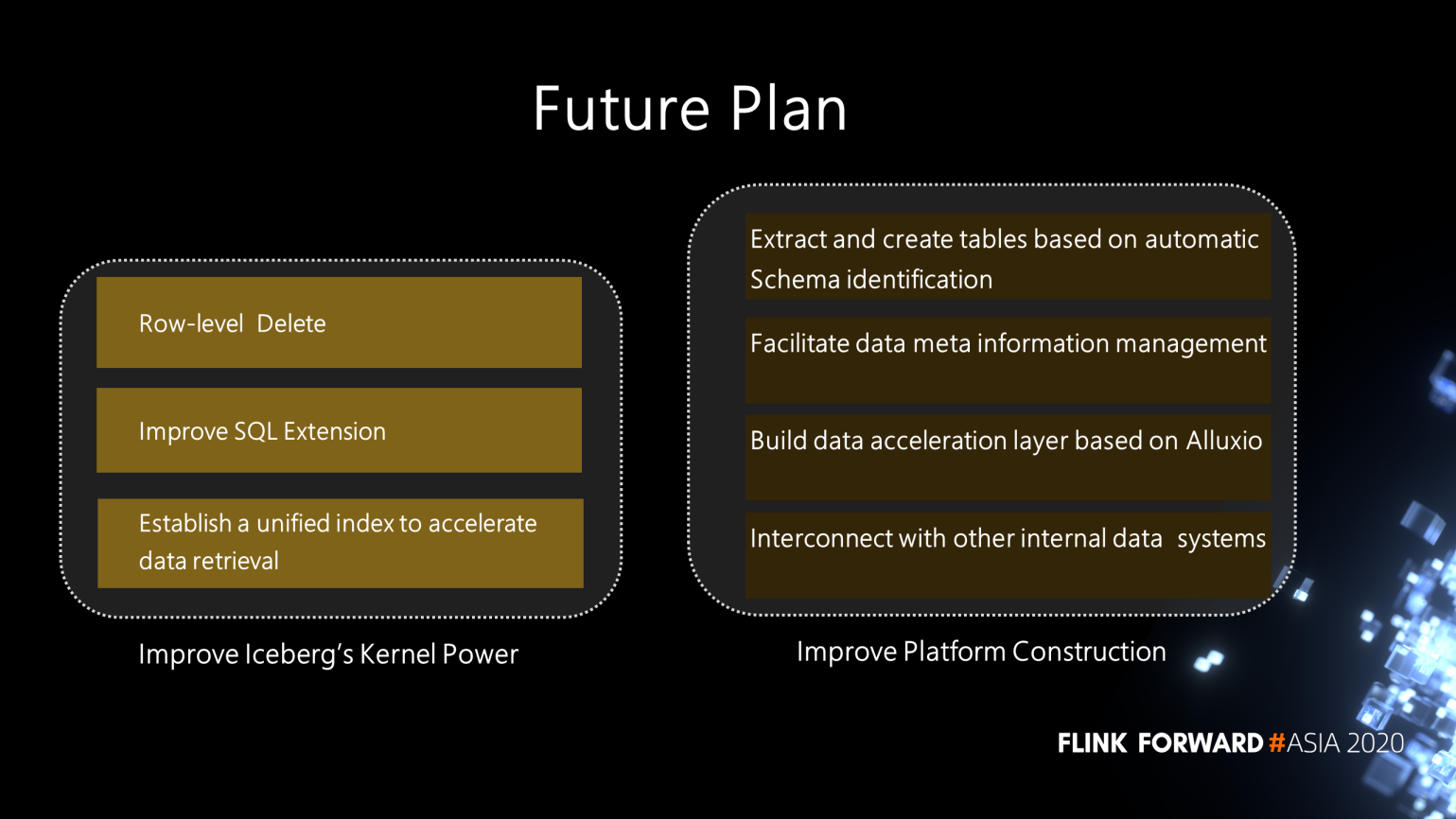

Iceberg stores all data files in HDFS, but HDFS reads and writes cannot fully meet the requirements of second-level analysis scenarios. Next, we plan to support Alluxio as a cache at the bottom layer of Iceberg to accelerate the data lake. This architecture is part of our future plans.

Figure 17

As figure 18 shows, Tencent has implemented full SQL support for Iceberg. Users can set small file merging parameters in the table properties, such as the number of snapshots that triggers a merge. A Flink job that writes data into the lake can then be started directly with an INSERT statement. The job keeps running, and the backend DataFiles are automatically merged in the background.

Figure 18

The following figure shows the data files and the corresponding metadata files in Iceberg. Because the open-source Iceberg Flink Sink in the community does not provide file merging, even a small streaming job causes the number of files in the related directory to surge after it has run for a while.

Figure 19

With real-time small file merging for Iceberg, the number of files can be kept at a stable level.
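The effect of merging on file count can be sketched with a small simulation. The `merge_every` parameter plays the role of the table property for how many snapshots trigger a merge; the article does not give that property's actual name, and the one-output-file-per-merge behavior is a simplifying assumption.

```python
from typing import Optional


def live_file_count(commits: int, files_per_commit: int,
                    merge_every: Optional[int] = None) -> int:
    """Number of live data files after `commits` commits.

    Assumption: when merging is enabled, every `merge_every` commits all files
    added since the previous merge are rewritten into one file.
    """
    live = 0
    unmerged = 0
    for n in range(1, commits + 1):
        live += files_per_commit
        unmerged += files_per_commit
        if merge_every and n % merge_every == 0:
            live = live - unmerged + 1
            unmerged = 0
    return live


print(live_file_count(1440, 10))                  # 14400: one day, no merging
print(live_file_count(1440, 10, merge_every=10))  # 144: merge every 10 snapshots
```

Under these assumptions the file count still grows with the data volume, but by one file per merge cycle instead of by every small file that each commit produces.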

To read data incrementally in real time, users can specify the snapshot to consume from in Iceberg's table properties. Each time the Flink job starts, it then reads only the data added after that snapshot.
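This consumption pattern can be sketched as a polling generator that starts from a given snapshot and emits only the files committed after the last snapshot it consumed. `stream_new_files` and the `(snapshot_id, added_files)` listing are hypothetical. For comparison, the open-source Iceberg Flink source exposes similar behavior through read options such as `streaming`, `monitor-interval`, and `start-snapshot-id` rather than through table properties.

```python
from typing import Callable, Iterator, List, Optional, Tuple

Listing = List[Tuple[int, List[str]]]  # (snapshot_id, added files), in commit order


def stream_new_files(list_snapshots: Callable[[], Listing],
                     start_snapshot_id: Optional[int], polls: int) -> Iterator[List[str]]:
    """Each poll yields the files added by snapshots committed after the last consumed one."""
    last = start_snapshot_id
    for _ in range(polls):
        snapshots = list_snapshots()
        ids = [sid for sid, _ in snapshots]
        # If the starting snapshot is unknown (None or expired), start from the beginning.
        begin = ids.index(last) + 1 if last in ids else 0
        batch: List[str] = []
        for sid, added in snapshots[begin:]:
            batch.extend(added)
            last = sid
        yield batch


table: Listing = [(1, ["a"]), (2, ["b"])]
reader = stream_new_files(lambda: list(table), start_snapshot_id=1, polls=3)
print(next(reader))  # ['b']: snapshot 1 is the starting point, so only 2 is new
table.append((3, ["c", "d"]))
print(next(reader))  # ['c', 'd']
print(next(reader))  # []: nothing committed since the previous poll
```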

Figure 20

In this example, file merging is enabled, and a Flink sink job that writes data into the lake is then started with SQL.

Users increasingly prefer to handle all kinds of jobs with SQL. In practice, however, real-time small file merging is suitable only for online Flink jobs, where the number and size of files generated in each commit cycle are not very large.

However, after a job has run for a long time, the table may accumulate thousands of files. In that case, merging them online within the real-time job is inappropriate, because it may hurt the timeliness of the online job. Instead, users can use the SQL extension to merge small files, delete leftover files, and expire snapshots.
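The maintenance performed through the SQL extension can be illustrated with a small Python sketch of snapshot expiration and leftover (orphan) file detection. The function names and the snapshot tuple format are hypothetical; the retention rule mirrors the `expireOlderThan` and `retainLast` options of Iceberg's `ExpireSnapshots` API, but the code is not that API.

```python
from typing import List, Tuple

# (snapshot_id, commit timestamp in ms, data files referenced by the snapshot)
SnapshotEntry = Tuple[int, int, List[str]]


def expire_snapshots(snapshots: List[SnapshotEntry], older_than_ms: int,
                     retain_last: int) -> Tuple[List[SnapshotEntry], List[str]]:
    """Drop snapshots older than the cutoff, but always keep the newest `retain_last`.

    Returns the kept snapshots and the data files that no kept snapshot references,
    which are therefore safe to delete.
    """
    first_retained = max(len(snapshots) - retain_last, 0)
    kept = [s for i, s in enumerate(snapshots)
            if i >= first_retained or s[1] >= older_than_ms]
    live = {f for _, _, files in kept for f in files}
    everything = {f for _, _, files in snapshots for f in files}
    return kept, sorted(everything - live)


def orphan_files(storage_listing: List[str], snapshots: List[SnapshotEntry]) -> List[str]:
    """Files present in storage but referenced by no snapshot (e.g. left by failed writes)."""
    referenced = {f for _, _, files in snapshots for f in files}
    return sorted(set(storage_listing) - referenced)


# Snapshot 3 is the result of a merge that replaced a, b, and c with m.
history = [(1, 1000, ["a", "b"]), (2, 2000, ["a", "b", "c"]), (3, 3000, ["m"])]
kept, deletable = expire_snapshots(history, older_than_ms=2500, retain_last=1)
print([s[0] for s in kept], deletable)  # [3] ['a', 'b', 'c']
```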

Internally, we have long used the SQL extension to manage Iceberg's data and its metadata on disk. We will continue to add functions to the SQL extension to improve Iceberg's usability and user experience.

Figure 21

Figure 22

To improve the Iceberg kernel, we will prioritize the features above.

In terms of platform construction, we will:
