By Liu Tianyuan, Product Manager of DataWorks

This article is a part of the One-stop Big Data Development and Governance DataWorks Use Collection.

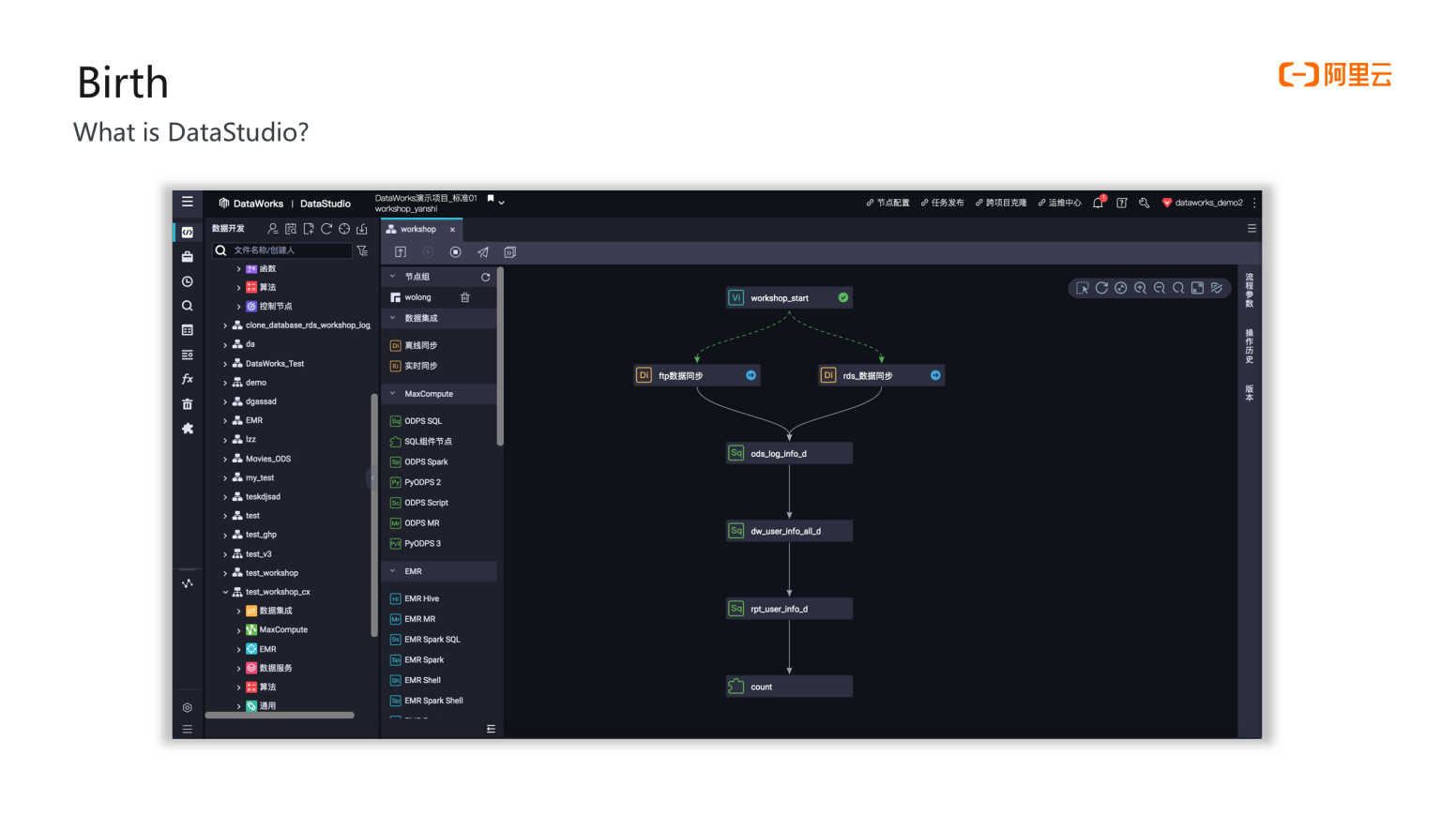

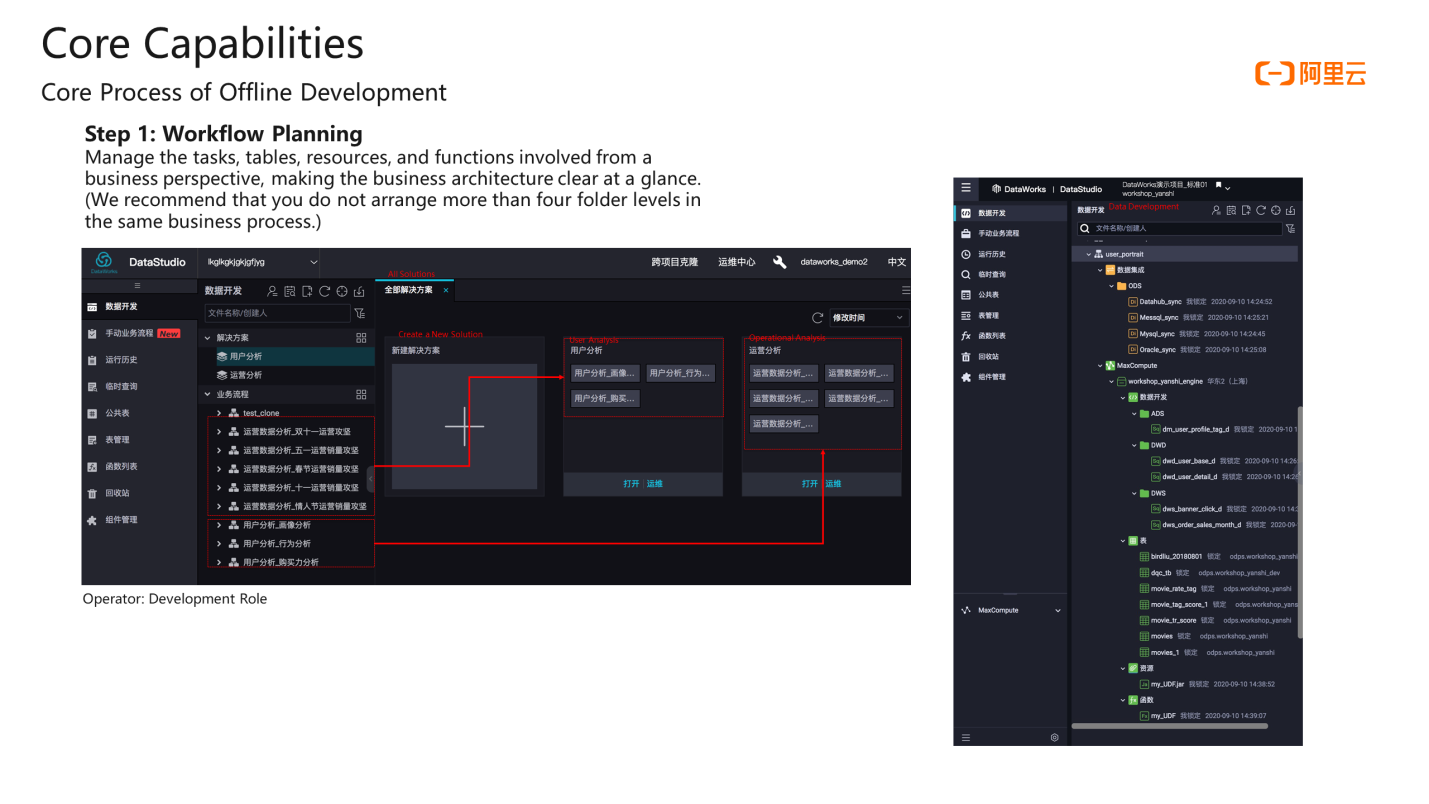

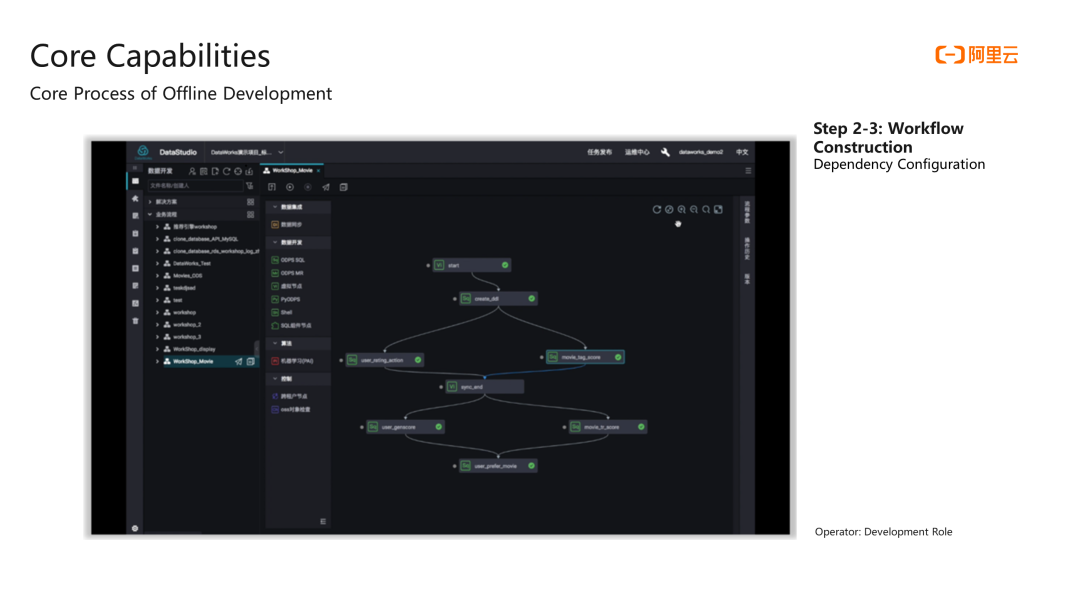

The following figure shows the development interface of DataWorks. In the center of DataStudio is a workflow, which contains synchronization tasks and SQL tasks. You can drag other nodes, such as EMR nodes or other types of engine nodes, based on the different needs of the enterprise. When facing a disparate data source or heterogeneous computing engines, developers can perform data development in the form of drag and drop and build workflows that schedule tasks offline or in real-time.

Before 2009, Alibaba Group adopted Oracle and used the primitive Crontab for scheduling. It is conceivable that this scheduling method is very unrealistic as the number of tasks and data increases. In August 2009, the predecessor of DataWorks, Skynet, was released. Skynet is a simple tool that supports configuring scheduling cycles.

DataWorks has also gone through multiple iterations, including the launch of the Alibaba Group moon landing program and the development of a data middle platform. In 2015, DataWorks entered the public cloud and Alibaba Cloud Apsara Stack marketplace in the form of D+ and began to serve governments and enterprises. The Digital Plus platform was released in 2016. The Digital Plus brand brought the powerful combination of DataWorks and MaxCompute to the market. In 2017, 2018, and 2020, DataWorks was internationalized and upgraded from version 2.0 to 3.0.

Now, DataWorks has become a powerful big data production scheduling tool that can support multiple engines, multiple instances, and cross-region scheduling.

Open-source users understand when an open-source cluster is built, data can be uploaded and calculated through basic command lines. When building a big data system, the task workflow will be large. At this time, some open-source scheduling tools, such as Azkaban, OOZIE, and AirFlow, need to be used to help users schedule and run tasks in the entire workflow. However, these open-source scheduling tools will maintain one or more or dozens of configuration files to realize the dependency relationship between tasks. This is a high threshold for technical support personnel but also low efficiency and error-prone.

DataWorks is valuable when facing the bottleneck of open-source scheduling.

Theoretically, the enterprise can undertake the data requirements of the entire enterprise to do calculations with the big data engine. However, when the business personnel and non-technical personnel want to access the data, there will be a threshold. At this time, enterprises data developers and frontend or backend development personnel need to develop a series of tools for business or non-technical personnel so they can use the data and perform visual analysis and queries.

DataWorks has lowered the threshold. As long as the business personnel can write SQL, they can query the calculated data. In addition, the maximum value of DataWorks is that scheduling does not require users to continue writing scripts or editing many configuration files. Users only need to visualize the development, configuration dependencies, and scheduling cycle. Then, the enterprise's entire data system can be built easily.

DataWorks also has advantages in reducing costs and improving efficiency.

First of all, it is fast for non-technical personnel to get started. Users can master the data development and production process within two hours after completing the Alibaba Cloud Help Center Workshop. Enterprises no longer need to set up a tool development team to develop the entire scheduling system, nor do they need to maintain the stability of the entire scheduling system.

The visual development interface provided by DataWorks, such as the SQL intelligent editor, can improve the development efficiency for data analysts and developers.

DataWorks comes with a standardized process for task development and release, which can help enterprises establish internal data production mechanism specifications and make their data systems run more stably.

Finally, a more sustainable task management model is implemented. Alibaba automatically schedules millions of tasks every day. These tasks are completed through the DataWorks scheduling system. All in all, the stability of DataWorks is a very important cornerstone to ensure the data output of the group.

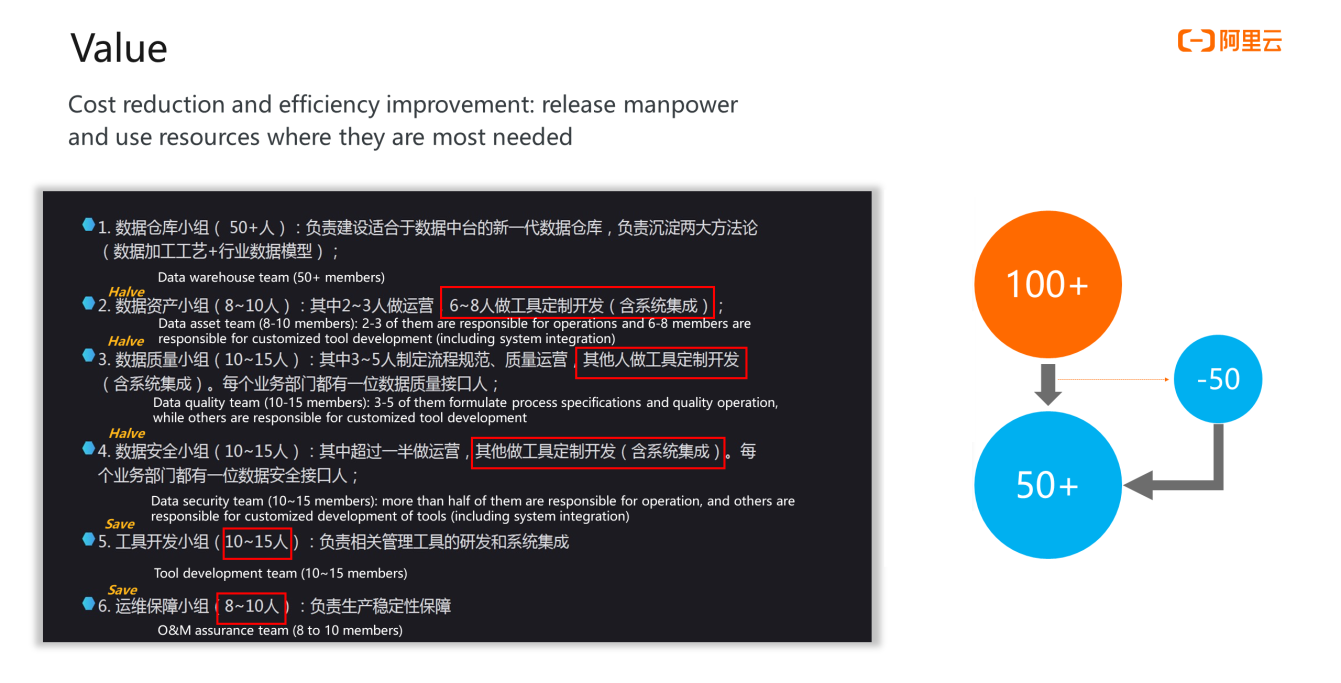

The value brought by DataWorks can be interpreted by referring to the following data systems of large and medium-sized enterprises to reduce costs and improve efficiency. The data system of the enterprise includes a data warehouse team with more than 50 people. Some members of the asset, quality, safety, tool development, and operation and maintenance teams are responsible for tool development. Moreover, the tool development and operation and maintenance teams are also responsible for tool-related work. In this case, if an enterprise chooses to use DataWorks, the staff size of the entire data system can be reduced from more than 100 to more than 50. The additional 50 people can focus on data business development to help the enterprise build a better data system, release manpower, and use resources where they are most needed.

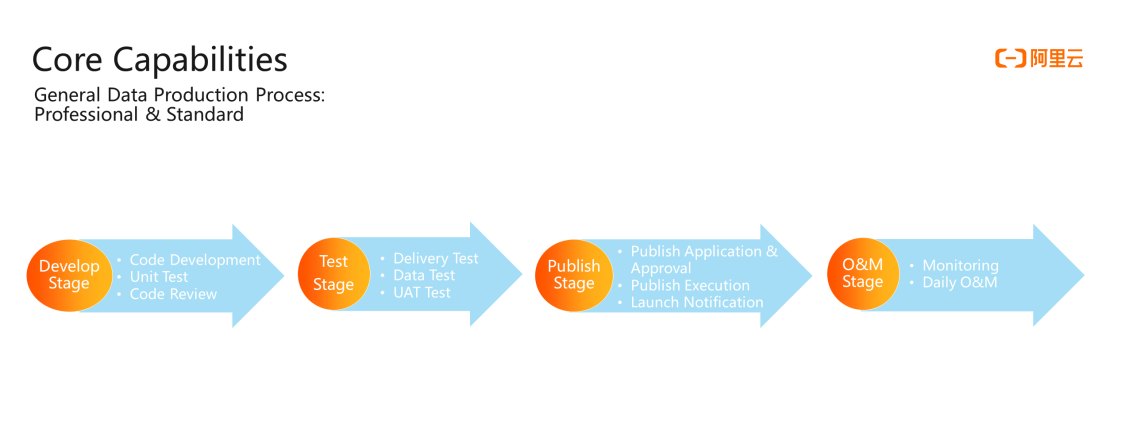

From a macro perspective - First, synchronize the data to the big data computing engine and define your data standards, specifications, and data models. Then, you can produce data, including offline or real-time data. After the code development is approved, it will be released to the production environment for regular production scheduling and data output. The output data can be used for a BI report presentation or API to support the operation of the entire enterprise application.

From a microscopic perspective - After the developers receive the business requirements, they will develop the code according to the scenario. After the development, they will perform self-testing first and then the testing phase. During the testing phase, data product managers and specialized testers will be involved to conduct delivery testing, data testing, and UAT testing of the developed code. At this stage, the test content will be detailed, including checking whether the table naming and field naming conform to the specification and whether the proportion of null, duplicate, and invalid values of the data meets the requirements. At the same time, it will also check the correctness of the output, whether the output data is useful for the business and whether the code performance is acceptable.

After the test is completed, the code is ready to go online. At this time, a third-party person familiar with the entire enterprise data system and data business is needed to review the code. If there is no impact on the business and useful data is produced, the code can be released and executed.

Finally, we have reached the O&M stage. The most basic responsibility of the person responsible for the task is to ensure that the code runs on time and successfully every day. If there is any problem, you need to check the log to find the cause and solve the problem. At this time, an operation and maintenance platform is needed to carry out task operation and maintenance.

How does DataStudio help enterprises complete professional standard development?

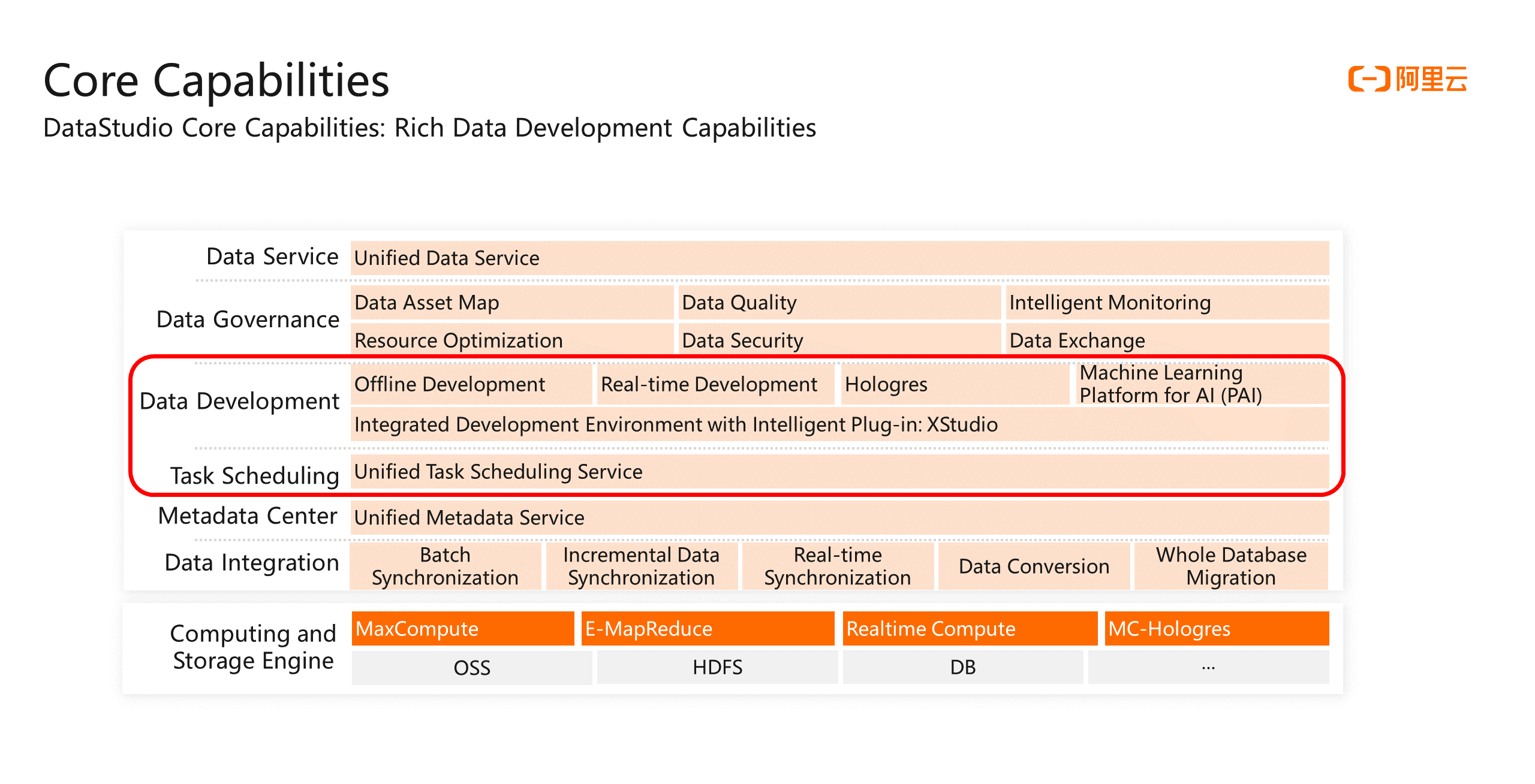

DataWorks supports various development modes, such as offline development, real-time development, interactive analytics, and machine learning. This is also the core development capability of DataWorks. After the task is developed, users can publish the task to the production environment scheduling system and make the DataWorks scheduling system automatically schedule the task.

DataWorks internalizes a standardized development process, which is the DataWorks standard mode space. This mode space corresponds to two computing engines. The user selects MaxCompute, EMR, or other engines.

Data developers only need to carry out code development, workflow configuration, and smoke testing in the development environment and submit them after the test is correct. An administrator or O&M is required to review the code before publishing it to the production environment. If the audit believes the developer's code will not affect the stability of the system, will not cause failures, and will meet the business requirements, the supervisor or O&M deployment personnel can perform the release and publish the task to the production environment for cycle scheduling.

The first step is to plan the workflow. After you enter DataStudio, users need to plan the workflow. The workflow is a new product concept after DataWorks 2.0. It helps users manage logical abstract concepts, such as task tables and function resources related to a business. Currently, we recommend creating a workflow from a business perspective, such as creating tables, resources, and functions dedicated to managing user portraits or creating a workflow dedicated to managing operational activities.

After creating a workflow, users can create various synchronization tasks and computing tasks in the workflow and string these tasks into a workflow from top to bottom. The management of workflows is flexible. Users can use a separate process to produce all the data of the DWS layer and use another workflow to produce all the data of the DWD layer.

All synchronization tasks at the ODS layer are also placed in a separate workflow. How to use them depends on the user scenarios.

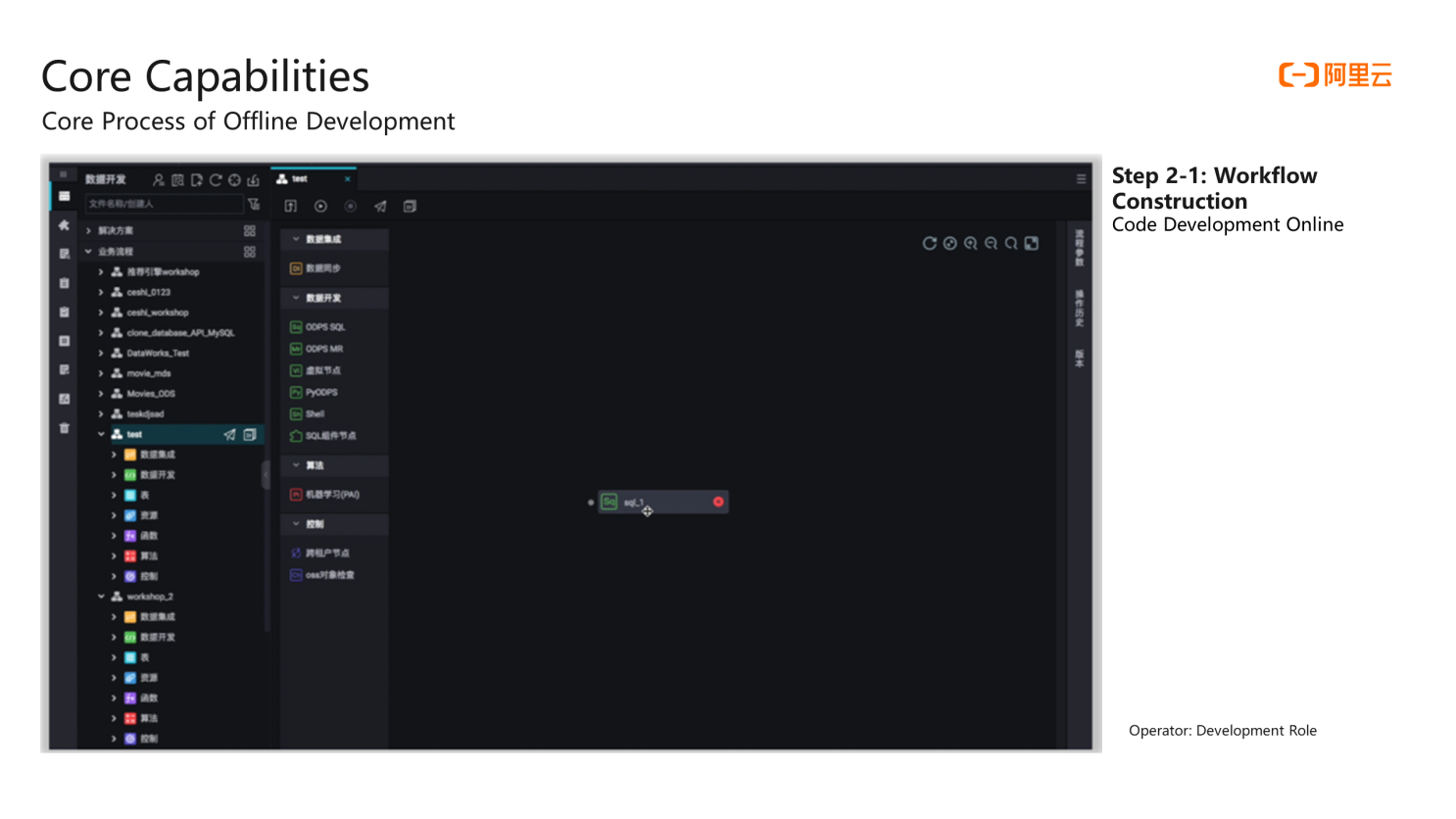

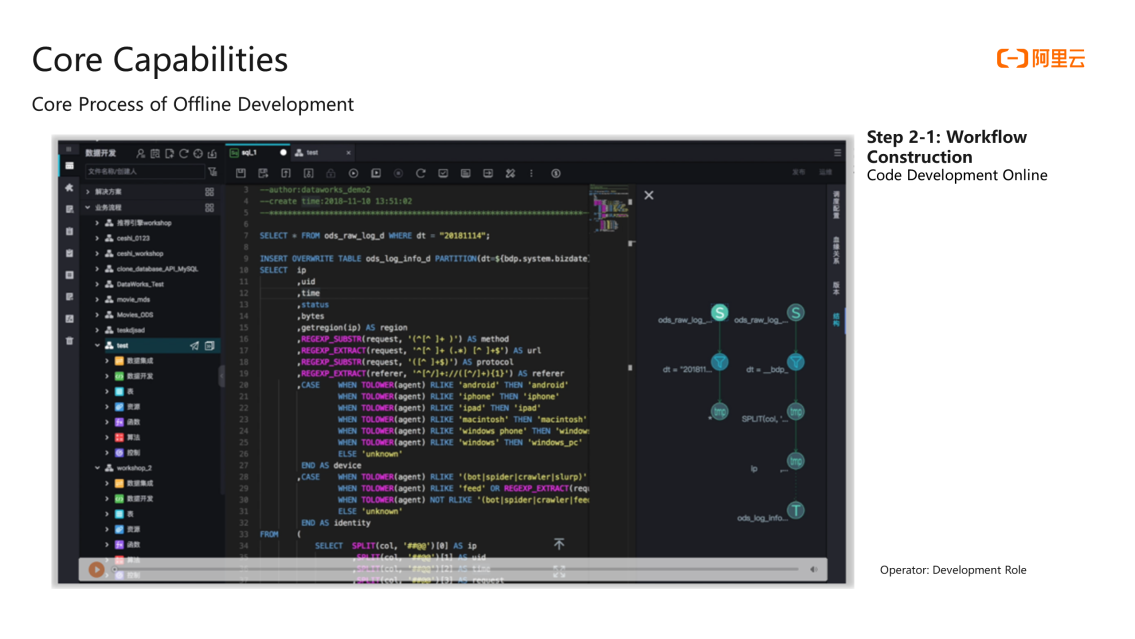

The second step is to build the workflow. You can create an SQL task by dragging a node to the workflow. Then, you can edit the SQL task by double-clicking the node. First, copy a piece of code with the correct syntax but no formatting. After clicking the formatting button, it will become an indented form. When writing SQL, users will also have keyword association and keyword highlighting effects to help understand SQL. At the same time, there will be a DAG graph on the right to help users locate SQL keywords. This function is practical when SQL is long.

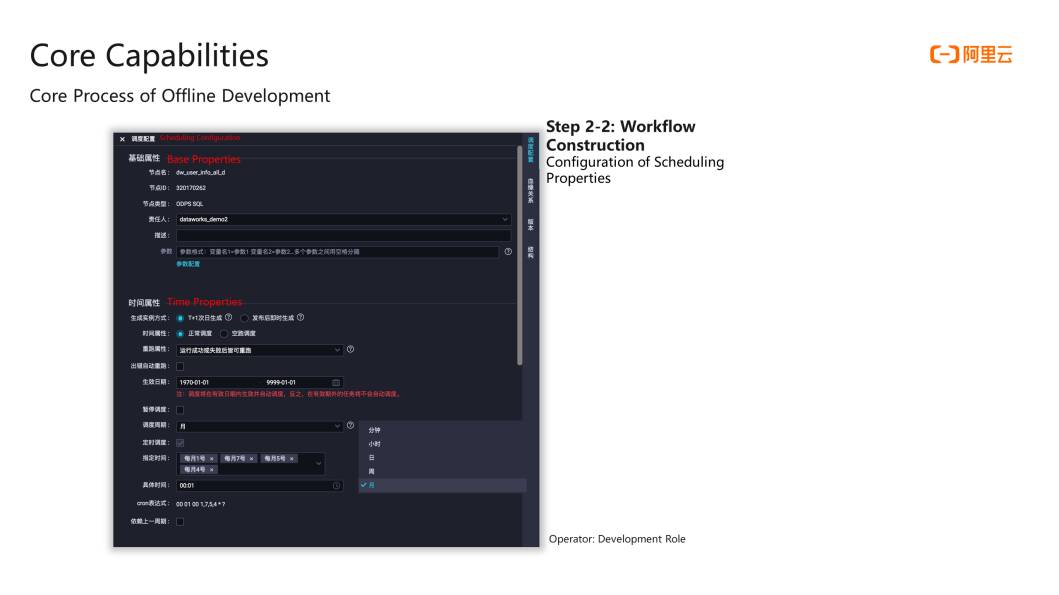

After you write SQL, you can configure the scheduling of this node.

First of all, users should be concerned about whether the current node is scheduled once a month or once a day. The scheduling cycle here is the same as Linux and Chrome Table. Users can set their running cycle for any node. Users can also set the rerun mechanism after the node task fails to run.

After you have configured the scheduling of multiple tasks, you can configure the dependencies between each task. DataWorks has a variety of ways to configure dependencies. The simplest one is the connection method. Users only need to configure the dependencies with a simple connection of a mouse. DataWorks also allows users to configure dependencies by parsing the lineage of upstream and downstream output data.

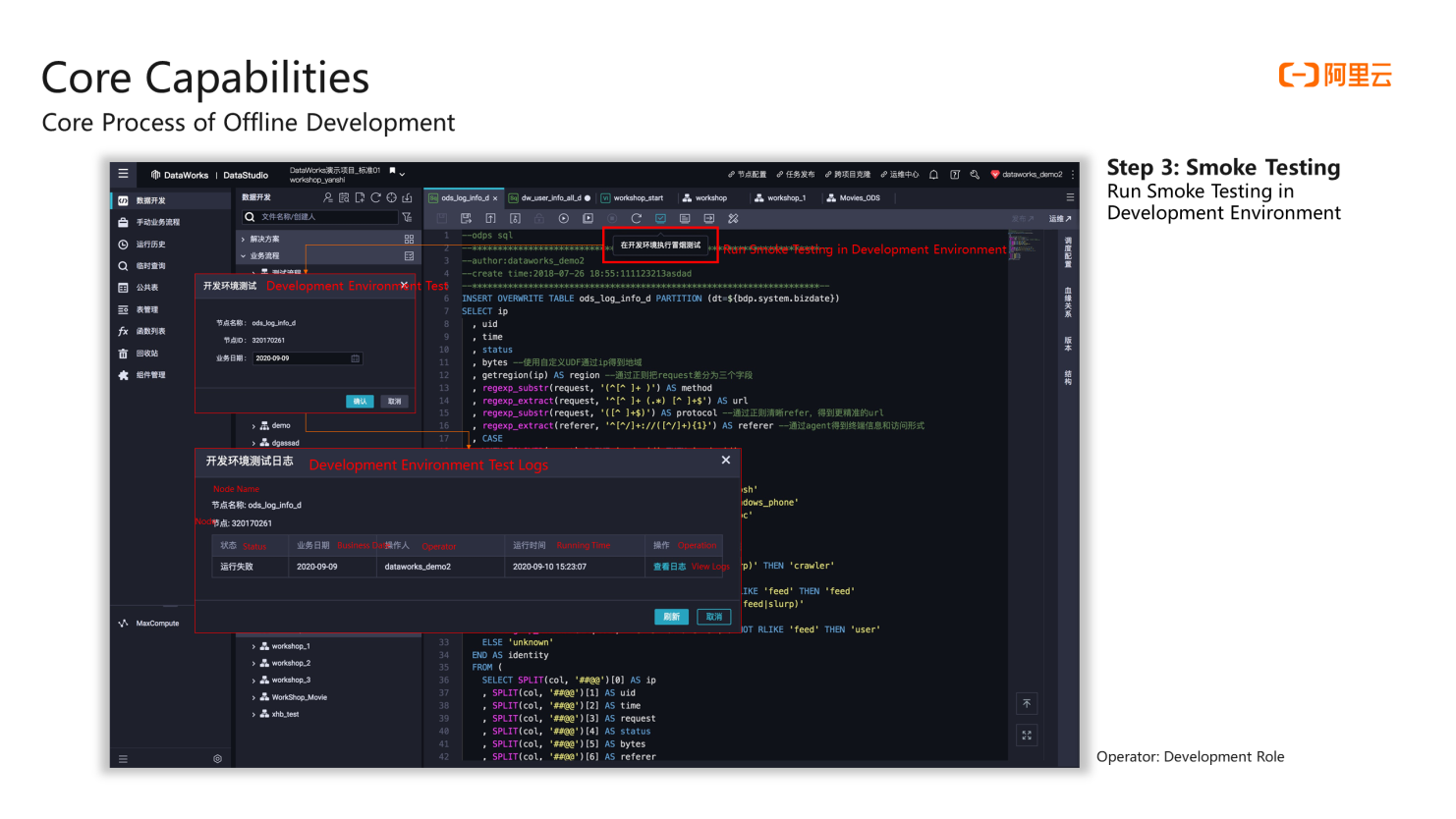

After the configuration is complete, commit each node and proceed to the next step. This step requires users to enter the development interface one by one and then click the development environment to execute the smoke testing. At this time, users will be allowed to select a business date. The log of this smoke testing can be viewed on the page. If the task is successfully executed, it will be displayed. If it fails, the reason for the failure can be viewed.

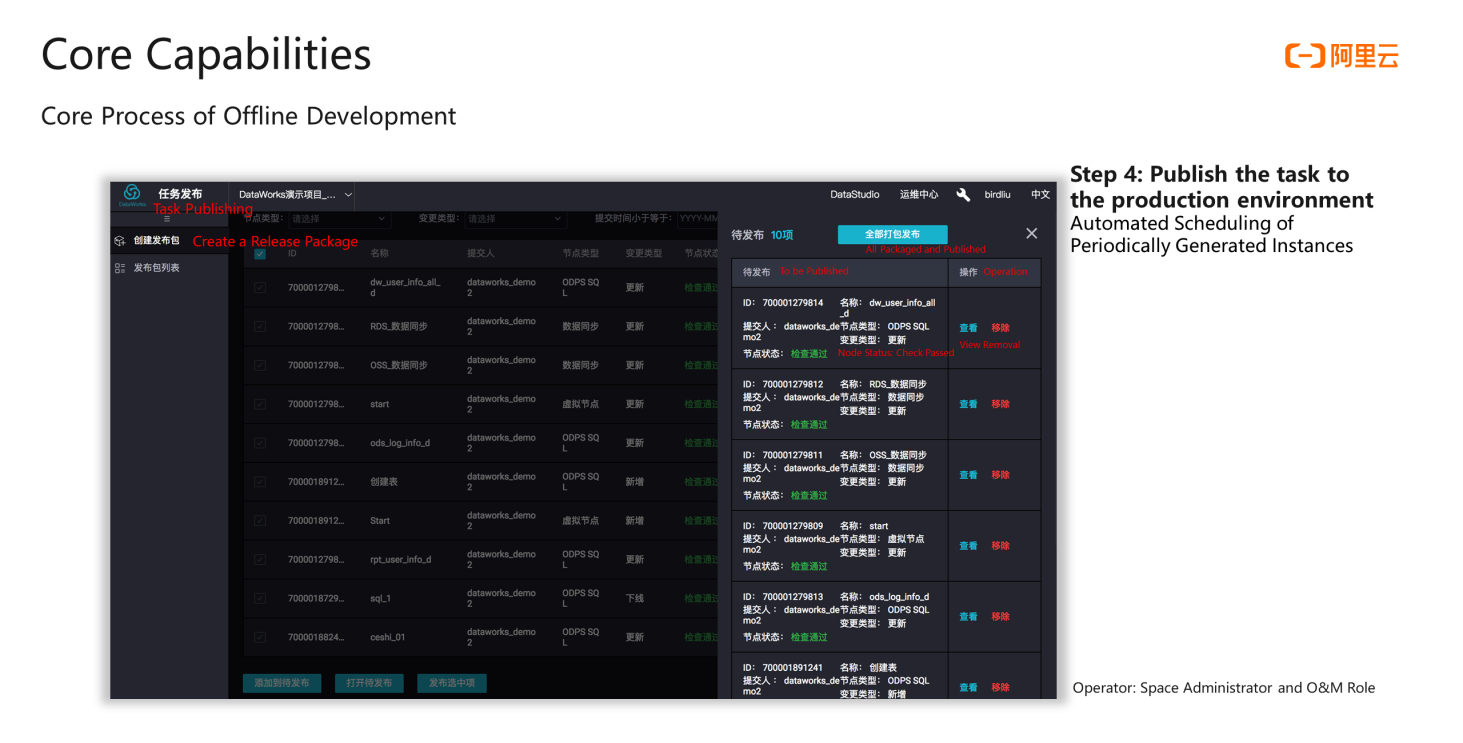

After each task performs smoke testing in the development environment and succeeds, users can notify the O&M or administrator to go to the task release page in the upper-right corner to review and publish the task (as shown in the following figure):

This page will show all the tasks submitted by the developer, and you can click to compare the code differences before and after the two releases. If the administrator judges the code to be released has no effect on the online environment at this time, and the released code also meets the business requirements, you can click all packaged and release. The tasks you wrote will be released to production.

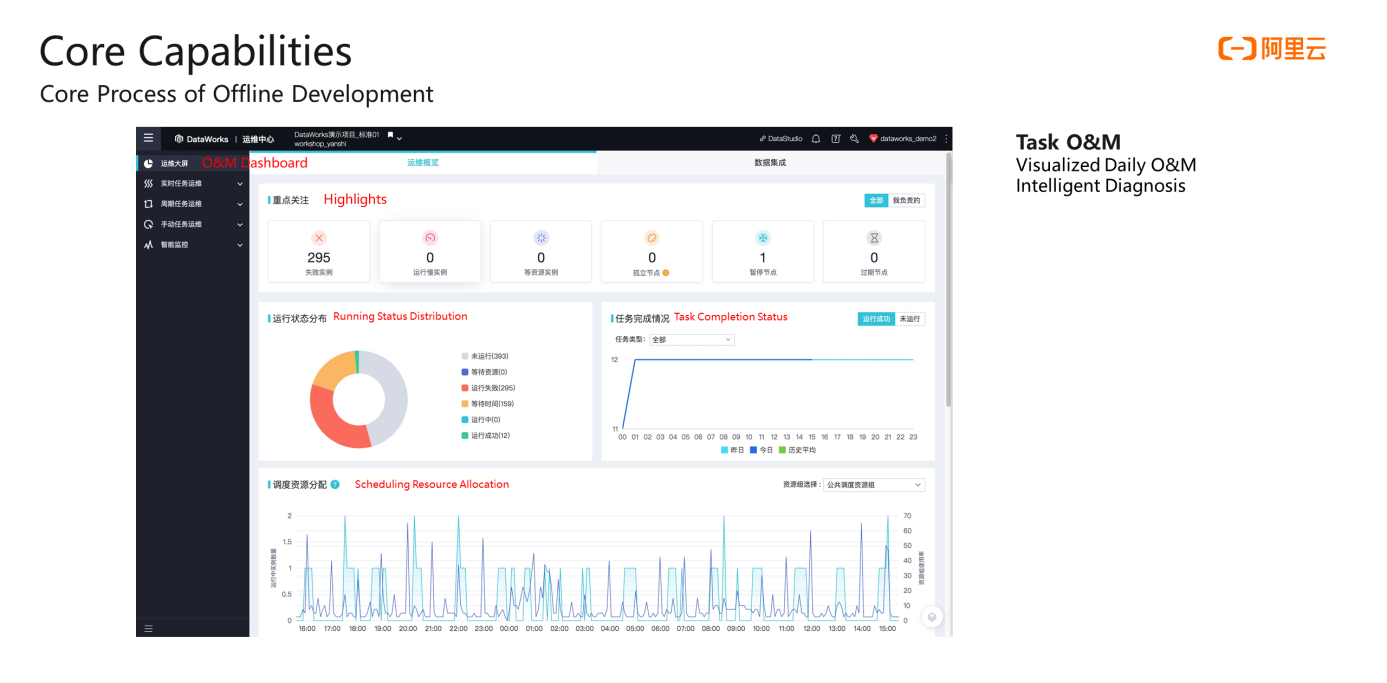

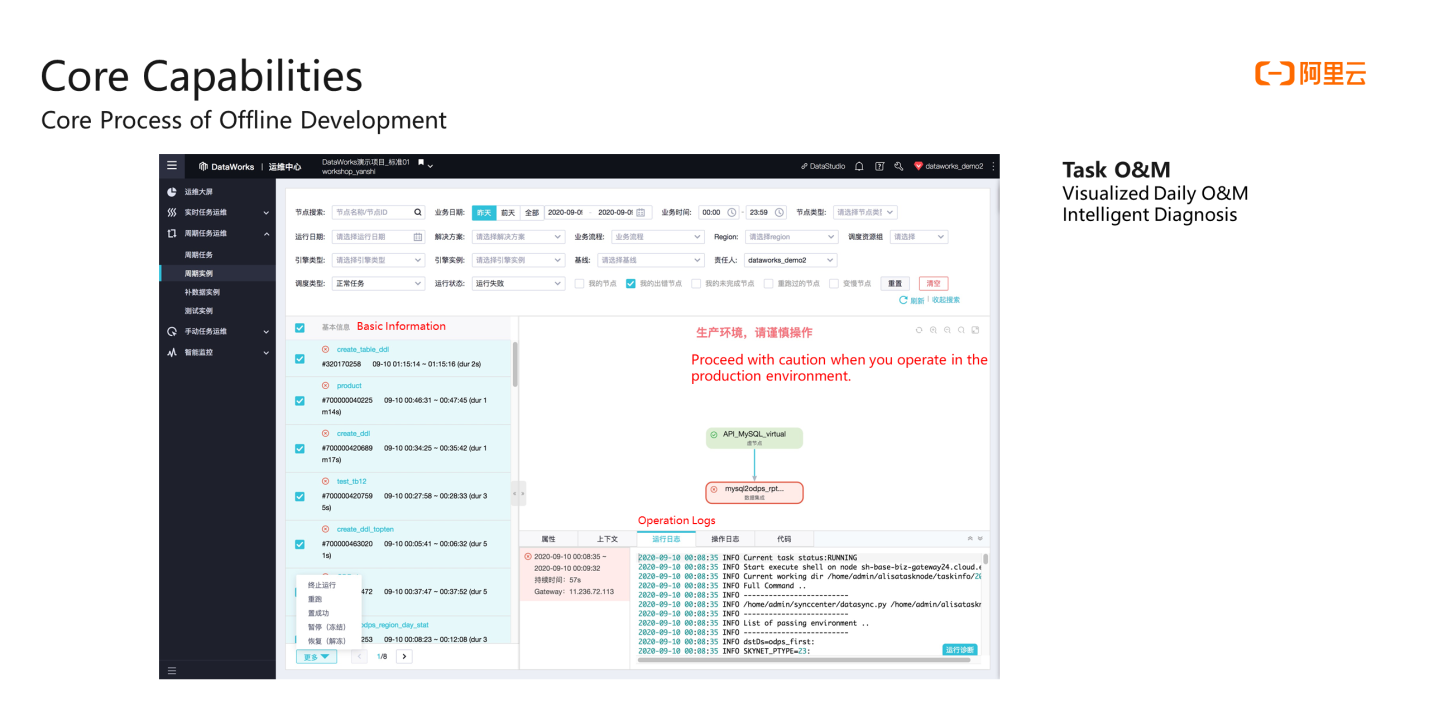

After you release it to the production environment, you can perform daily O&M. In the O&M center of DataWorks, you can complete all daily O&M operations and diagnose the running status. Users can see the daily operation on the homepage dashboard of the operation and maintenance center, such as how many tasks have been successful today and how many tasks have failed. At the same time, you can also see the comparison between today's and yesterday's tasks and the comparison with the historical average.

When a task is slow or fails, you can view the problem in logs:

You can view all information by entering the recurring instance menu. You can click to view a task. You can see the overall log of the task operation and diagnose the operation on the right-side view.

Running the diagnostic function starts with dependency checking. First, check whether all tasks that are dependent upstream of the tasks have been completed. If all the tasks have been completed, check whether the scheduled time of the current task is up. Then, check whether the scheduling resources of DataWorks are sufficient. Finally, check the cause of the task execution error. The operation diagnosis has covered most of the causes of task errors and can translate them into Chinese, which more intuitively tells the user the cause of the current task failure. In theory, these errors should be found and avoided in the development stage and should not be generated in the production environment.

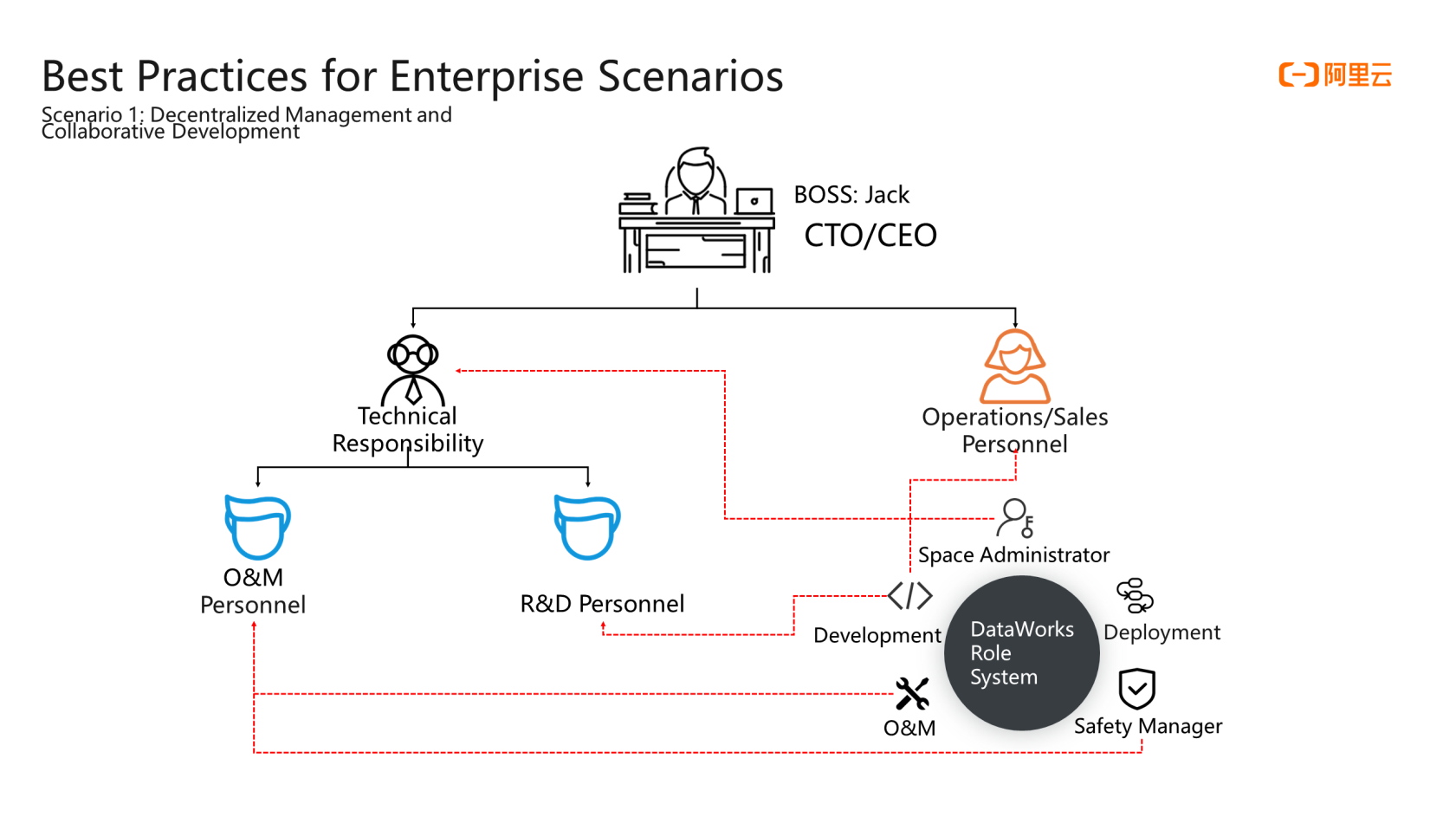

The main account of Alibaba Cloud is all the wealth of an enterprise on Alibaba Cloud. Generally, this main account will be in the hands of the CEO or CTO. How can others reasonably log on to Alibaba Cloud to use big data products?

DataWorks has a built-in role permission system, including the space administrator, development, O&M, deployment, security administrator, and model designer roles. The leader of the enterprise data team generally will be granted the role of space administrator. Developers and sales personnel can be assigned the development role. O&M personnel can be assigned the O&M or security administrator role. Developers are only responsible for writing code and building development processes, workflows, configuring dependencies, smoke testing code, and publishing applications. However, the main responsibilities of operation and maintenance personnel are more biased towards resource management, such as configuring data sources or releasing the reviewed code execution online.

Implementing such decentralized management is simple and does not require particularly complicated operations. You can go directly to member management on the workspace management page in the upper right corner of the DataWorks space. After the roles are assigned, you can use the preceding DataWorks development mode to perform standardized data production and development.

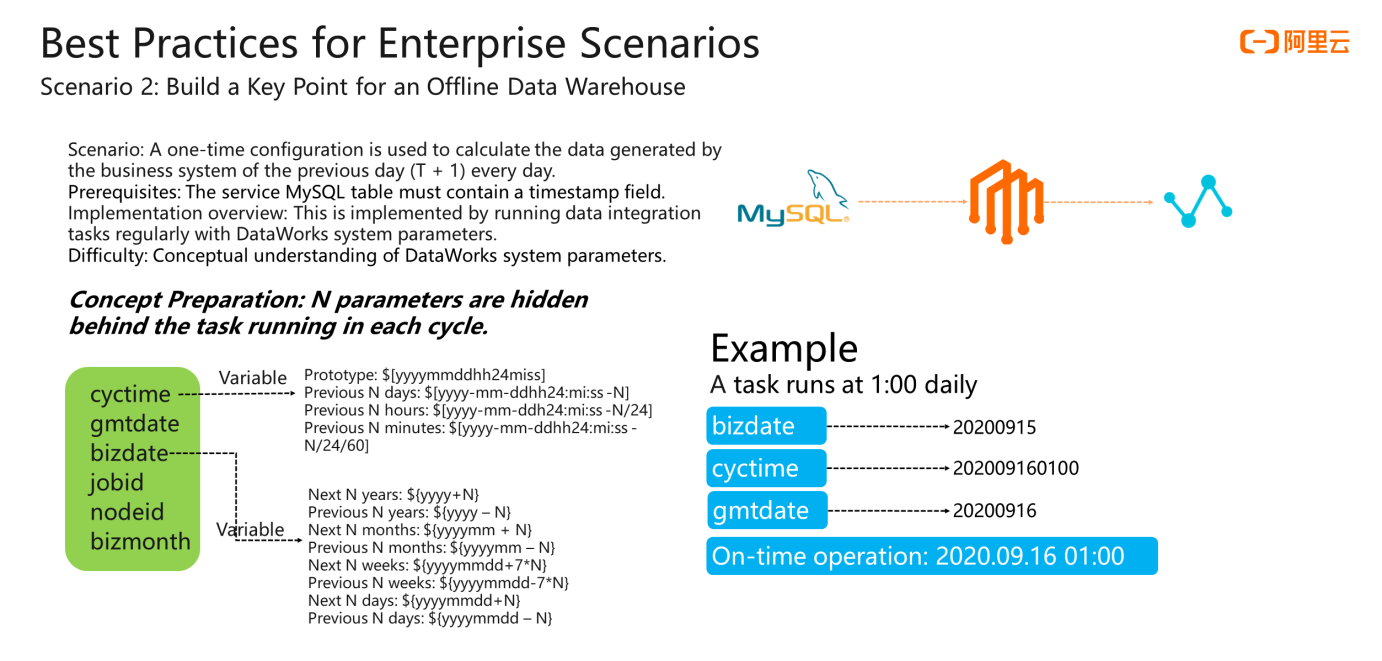

This is the most basic configuration of a basic and important offline development scenario. First, look at the requirements and objectives of the scenario.

First, you need to understand the basic concept before introducing the configuration. Each recurring task runs behind multiple hidden parameters. In this case, the main focus is on Cyctime and Bizdate.

Bizdate refers to the business date. For example, today is September 16, and today the system parses the data from yesterday, which is September 15. Then, Bizdate will be parsed to September 15. If the instance runs today, Cyctime time will be parsed to September 16. It can be accurate down to hours, minutes, and seconds.

For example, if the running cycle of a scheduled task is 1:00 a.m. every day, then the running time on September 16, 2020, is 1:00. When this instance is generated, its time is already clear. It is 202009160100. This is a string that can be accurate down to hours, minutes, and seconds. The Bizdate parameter is parsed as its business date, which is the 20200915 of the previous day. The Gmtdate parameter is the actual running date of this task. If the task runs on time on the 16th, it is 20200916. If the task instance is delayed to run on the 18th for some reason, neither Bizdate nor Cyctime will change, but Gmtdate will become 20200918.

Cyctime and Bizdate can also be transformed. Bizdate can add or subtract N days. Cyctime can also add or subtract N hours or N days or only display hours or months, which can be changed through the flexible transformation of parameters.

After introducing the basic concepts, we will go back to the scenarios and goals of users. The user wants to calculate the data generated by the business system of the previous day by using a one-time configuration.

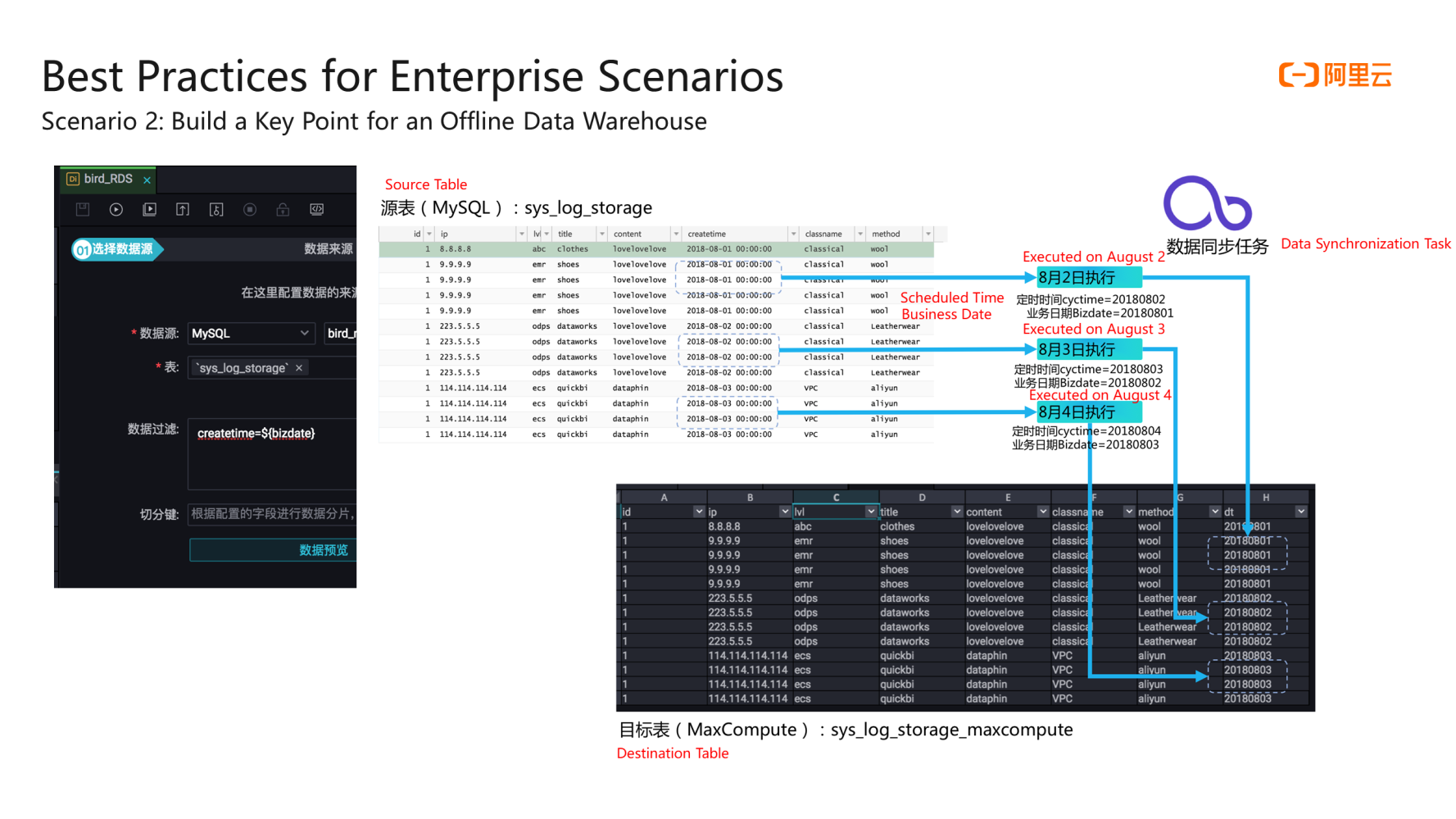

The first step is to synchronize the data from the business system. First, you need to create a synchronization task. The data source is MySQL, and the destination is MaxCompute (ODPS). The data warehouse on the data source side is more important. If the timestamp field of the MySQL table is Createtime, the Bizdate parameter can be used to filter. Bizdate should also be written in its partition for the MaxCompute table on the destination side. The meaning of this configuration is that when the task is run on each day, the data record value whose MySQL timestamp is equal to that day (Bizdate) is retrieved, and these data records are written to the partition of the MaxCompute table on that day.

If a synchronization task is configured and executed on August 2, 2018, the date parsed at this time is 20180801, and the Bizdate is the 20180801 for the original MySQL table, which corresponds to the filter condition. The data whose value in the Createtime column is 20180801 is extracted by the filter condition and written to the 20180801 partition in the MaxCompute table.

Synchronization tasks can be performed this way, like computing tasks. After the data extraction is completed, the user will calculate the data extracted every day through the calculation task of each day. Similarly, SQL code can also retrieve system parameters (Bizdate and others).

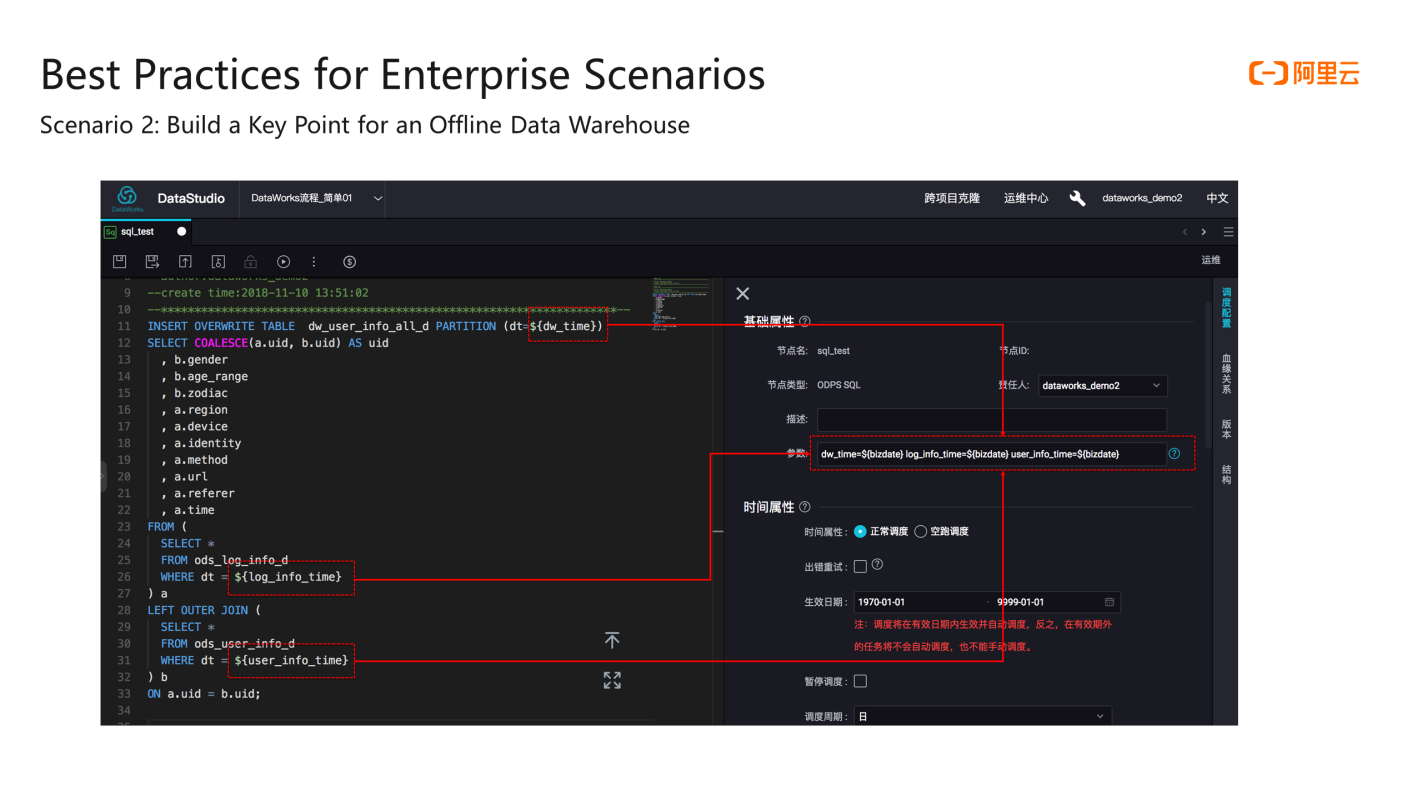

The SQL statement in the preceding figure shows the partitions added to the ods_log_info_d and ods_user_info_d tables are joined every day. Then, it is written to the latest partition of the dw_user_info_all_d every day. The parameter name can be defined according to the scenario in the code.

In the scheduling configuration on the right side, you can assign a value to each custom parameter. In the configuration, you can see that the three custom parameters dw_time, log_info_time, and user_info_time used on the left side SQL statement are all assigned to the right side system parameters. If it passes a smoke testing or is submitted to production for testing, the three parameters on the left side will be parsed to the date of the business running on that day when the data is replenished or scheduled to run. This way, the entire offline data development scenario is implemented.

An Introduction and Best Practice of DataWorks Data Governance

1,349 posts | 478 followers

FollowAlibaba Cloud MaxCompute - March 2, 2020

Alibaba Clouder - February 11, 2021

Alibaba Cloud Native - August 14, 2024

Alibaba Cloud Community - March 4, 2022

Alibaba Cloud Indonesia - January 12, 2024

Alibaba Cloud Community - March 8, 2022

1,349 posts | 478 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Quick BI

Quick BI

A new generation of business Intelligence services on the cloud

Learn MoreMore Posts by Alibaba Cloud Community