By Meng Shuo, Product Expert of Alibaba Cloud Intelligence

How can we accelerate data science with distributed Python on the cloud? If you already work with the data science stack (NumPy, pandas, or scikit-learn) but are limited by the computing performance of your platform, MaxCompute, as described in this article, lets you use parallel and distributed computing to speed up your work. In other words, if you know NumPy, pandas, and scikit-learn, you will not find it difficult to use the distributed Python capabilities of MaxCompute.

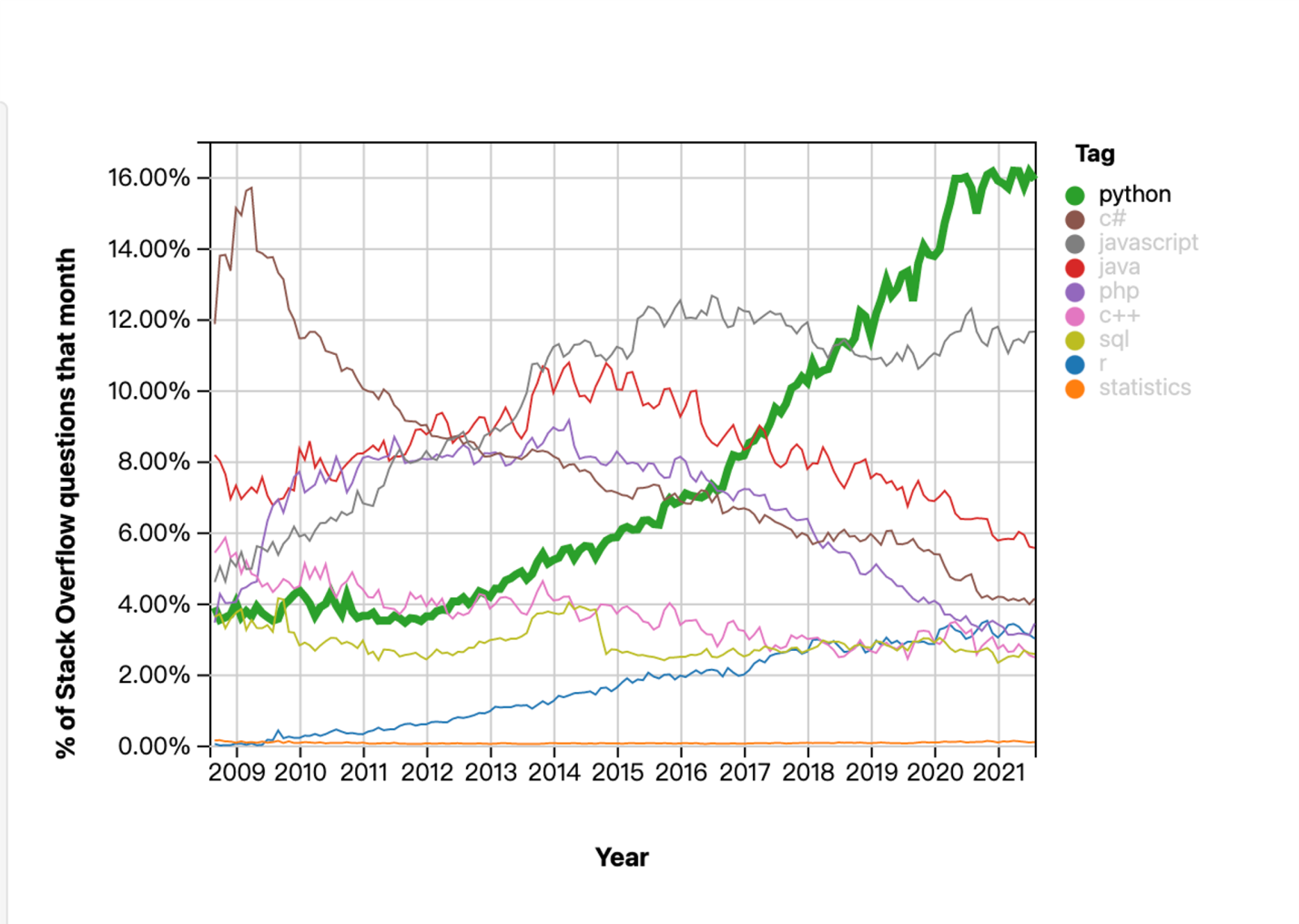

Python has grown to become the dominant language in data analytics and general programming.

The following figure, based on Stack Overflow statistics, shows the popularity trends of Python, C#, JavaScript, Java, PHP, C++, SQL, and R from 2009 to 2021. Python is clearly on the rise, especially in data analysis and data science, where it is nearly the top programming language. However, in data analysis, data science, and machine learning, the programming language is not the only thing that matters.

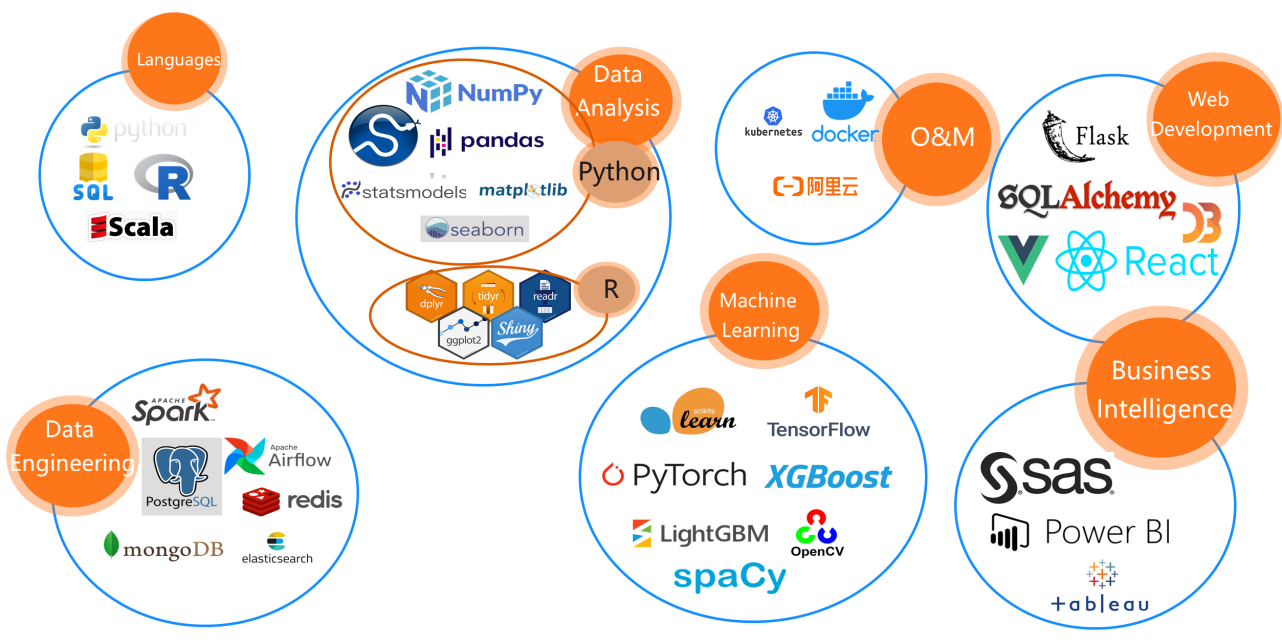

The programming language is only one aspect of data science. Python is one option, but some data analysts use SQL (the traditional analysis language), R, or the functional programming language Scala. The second aspect is the libraries used for data analysis, such as NumPy and pandas, plus visualization libraries. Clusters that run Python also need an O&M stack, for example, Docker or Kubernetes. The early stages of data analysis and data science involve data cleaning and extract, transform, and load (ETL) processes. Cleaning usually takes more than one or two steps, so a workflow is needed to orchestrate the overall ETL process; popular components here include Spark and the workflow scheduler Airflow. Storage is needed to hold the final results, typically a PostgreSQL database or the in-memory database Redis, which is connected to an external BI tool to display them. There are also machine learning frameworks, such as TensorFlow and PyTorch. For web development, Flask is used to build web applications quickly. Finally, there is business intelligence software, including BI tools such as Tableau and Power BI and SaaS software, which is widely used in data science.

This is a fairly complete overview of the data science technology stack. Starting from the programming language, we find that data science on large-scale data requires considering every layer of this stack.

MaxCompute is a cloud data warehouse in SaaS mode that is compatible with Python.

PyODPS is the Python SDK for MaxCompute. It provides basic operations on MaxCompute objects and a DataFrame framework for analyzing data on MaxCompute. (A DataFrame is a two-dimensional table structure in which data records can be added, retrieved, updated, and deleted.)

SQL and DataFrame tasks submitted through PyODPS are converted into MaxCompute SQL and executed in a distributed manner. If a third-party library can be called from a user-defined function (UDF) inside SQL, it can also run in a distributed manner.
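To make the idea of converting DataFrame operations into SQL concrete, here is a toy sketch. `ToyFrame`, its methods, and the table name `pyodps_iris` are hypothetical and are not the PyODPS API; the sketch only shows that operations can be recorded lazily and compiled into one SQL statement, which a distributed SQL engine can then execute.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ToyFrame:
    """Hypothetical lazy DataFrame: records operations instead of running them."""
    table: str
    columns: Optional[List[str]] = None
    predicates: List[str] = field(default_factory=list)

    def select(self, *cols: str) -> "ToyFrame":
        # Projection: return a new frame; nothing is computed yet.
        return ToyFrame(self.table, list(cols), list(self.predicates))

    def filter(self, predicate: str) -> "ToyFrame":
        # Selection: accumulate predicates, combined later with AND.
        return ToyFrame(self.table, self.columns, self.predicates + [predicate])

    def to_sql(self) -> str:
        # Compile the recorded operations into a single SQL statement.
        # Simplification: the order of select/filter calls is not tracked.
        cols = ", ".join(self.columns) if self.columns else "*"
        sql = f"SELECT {cols} FROM {self.table}"
        if self.predicates:
            sql += " WHERE " + " AND ".join(f"({p})" for p in self.predicates)
        return sql


query = ToyFrame("pyodps_iris").filter("sepal_length > 5").select("name", "sepal_width")
print(query.to_sql())
# SELECT name, sepal_width FROM pyodps_iris WHERE (sepal_length > 5)
```

Deferring execution this way is what lets the whole pipeline run where the data lives instead of pulling rows to the client.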

Some tasks must be split into subtasks before they can run in a distributed manner in Python. For example, native Python has no distributed capability for large-scale vector computing. For such cases, we recommend MaxCompute Mars, a framework that splits Python tasks into subtasks and runs them in a distributed manner.

MaxCompute also supports third-party Python packages. The steps are as follows:

1. Determine which third-party packages you need.
2. Find all dependencies of those packages.
3. Download the packages and their dependencies from PyPI.
4. Upload the packages as MaxCompute Resource objects. The resources can then be referenced when you create functions, so custom functions can import the third-party packages.
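Step 2, finding all dependencies, can be partly automated with the standard library's `importlib.metadata`, as in the minimal sketch below. It assumes the packages are already installed locally, skips optional "extra" dependencies, and does not evaluate other environment markers such as `python_version`. The helper names are hypothetical, and the wheels you finally download must still match the Python version and platform on which MaxCompute runs your code.

```python
import re
from importlib import metadata


def requirement_name(requirement: str) -> str:
    """Return the distribution name from a string like 'scipy>=1.0; python_version>"3"'."""
    match = re.match(r"\s*([A-Za-z0-9][A-Za-z0-9._-]*)", requirement)
    if match is None:
        raise ValueError(f"cannot parse requirement: {requirement!r}")
    return match.group(1)


def all_dependencies(package: str) -> set:
    """Collect the transitive dependencies of a locally installed package (simplified)."""
    seen = set()
    pending = [package]
    while pending:
        name = pending.pop()
        try:
            requirements = metadata.requires(name) or []
        except metadata.PackageNotFoundError:
            continue  # not installed locally: keep the name but do not expand it
        for req in requirements:
            # Skip optional dependencies guarded by an "extra" marker.
            _, _, marker = req.partition(";")
            if "extra" in marker:
                continue
            dep = requirement_name(req)
            if dep not in seen:
                seen.add(dep)
                pending.append(dep)
    seen.discard(package)
    return seen
```

For example, `sorted(all_dependencies("scikit-learn"))` lists what else would have to be downloaded alongside scikit-learn, provided it is installed in the local environment.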

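For example, the following function imports scikit-learn, SciPy, and NumPy, so it can run on MaxCompute only after those packages have been uploaded as resources:

```python
def test(x):
    # Imports sit inside the function so that they are resolved where the
    # function actually runs, against the uploaded package resources.
    from sklearn import datasets, svm
    from scipy import misc  # misc.face() exists only in older SciPy releases
    import numpy as np  # imported only to check that NumPy is available

    # Train a small linear SVM on the bundled iris dataset.
    iris = datasets.load_iris()
    clf = svm.LinearSVC()
    clf.fit(iris.data, iris.target)

    # A flower with short, narrow petals should be predicted as class 0 (setosa).
    pred = clf.predict([[5.0, 3.6, 1.3, 0.25]])
    assert pred[0] == 0

    # Check that SciPy's bundled sample image can be loaded.
    assert misc.face().shape is not None

    # Return the input unchanged; the function only verifies the dependencies.
    return x
```

It was originally called MatrixandArray.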

Tips: Scientific computing is about computers processing data. The tools have evolved from Excel to databases (MySQL) to Hadoop, Spark, and MaxCompute. The data volume has grown enormously, but the computing model has not changed: two-dimensional tables, projection, sharding, aggregation, filtering and sorting, relational algebra, and set theory are all still required. The basic data structure of scientific computing, however, is not a two-dimensional table. For example, although a picture has two dimensions, each pixel is not a single number but several values (RGB plus an alpha transparency channel).
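The pixel example can be made concrete with NumPy. The sketch below contrasts a two-dimensional table with a tiny RGBA image; the sizes and pixel values are arbitrary illustrations.

```python
import numpy as np

# A 2-D table: every cell holds one scalar (rows = records, columns = fields).
table = np.array([[1.0, 2.0, 3.0],
                  [4.0, 5.0, 6.0]])

# A tiny 2x3 RGBA image: two spatial dimensions, but every pixel is itself a
# vector of 4 values (red, green, blue, alpha), so the array is 3-D.
height, width, channels = 2, 3, 4
image = np.zeros((height, width, channels), dtype=np.uint8)
image[0, 0] = [255, 0, 0, 255]  # opaque red pixel in the top-left corner
image[..., 3] = 255             # make every pixel fully opaque

print(table.ndim, table.shape)  # 2 (2, 3)
print(image.ndim, image.shape)  # 3 (2, 3, 4)

# Forcing the image into a table needs one row per pixel and one column per channel.
pixels_as_table = image.reshape(-1, channels)
print(pixels_as_table.shape)    # (6, 4)
```

Flattening works, but it discards the spatial layout that image operations rely on, which is why scientific computing libraries work with n-dimensional arrays rather than tables.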

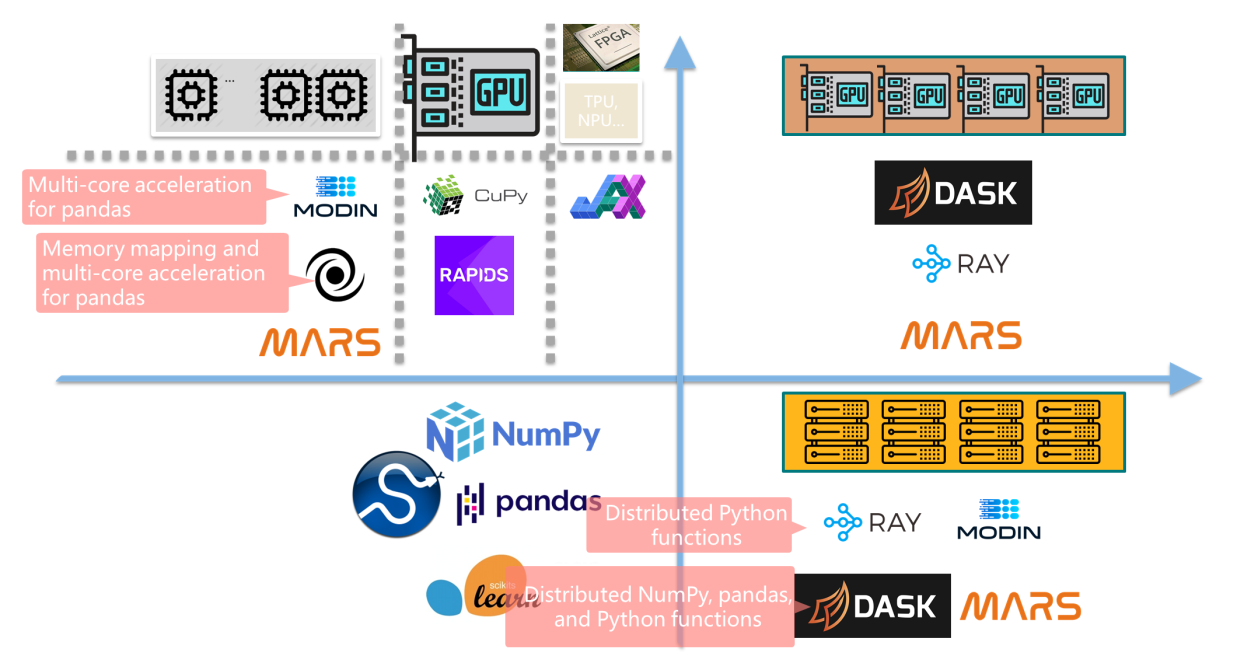

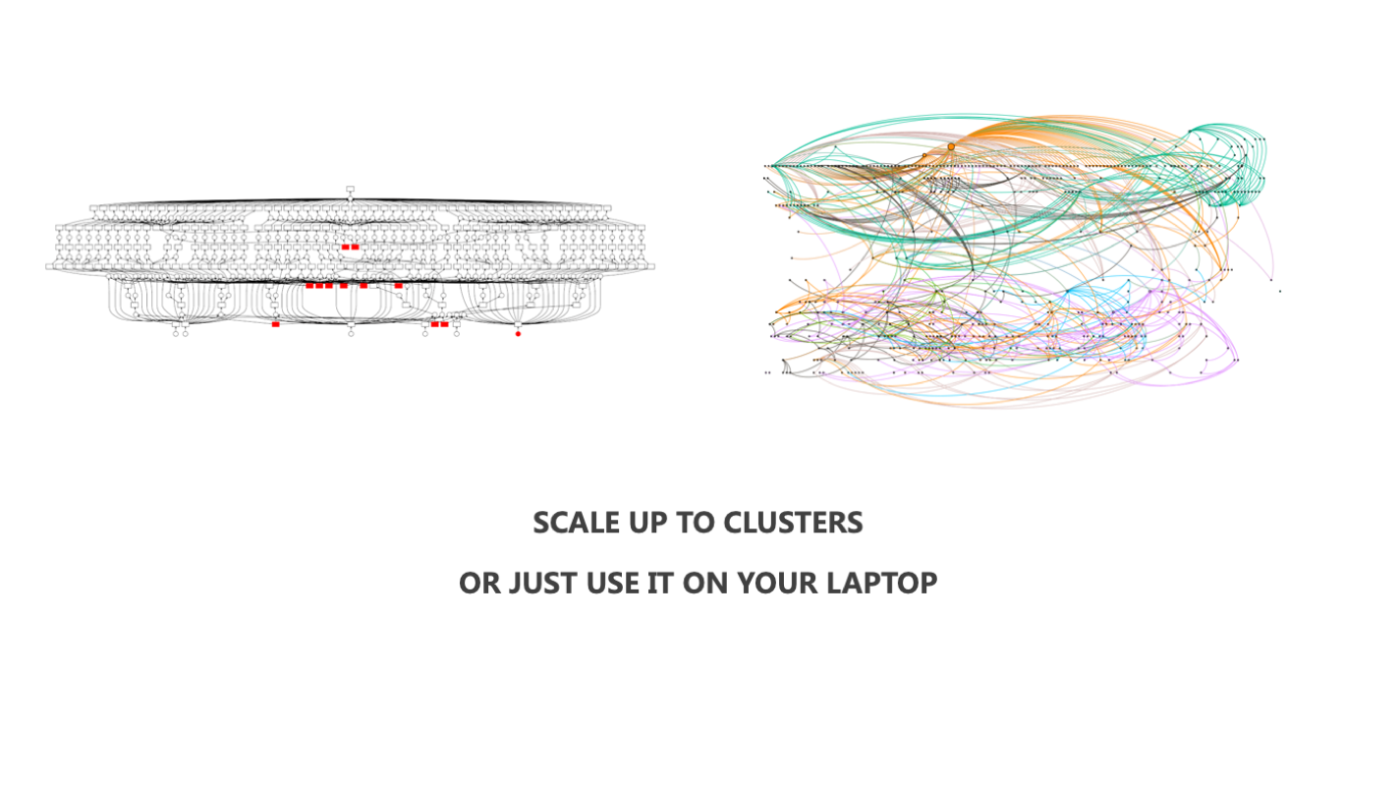

Methods based on Dask or MaxCompute Mars support both scale-up and scale-out. The lower left of the figure below represents doing data science by running Python libraries on a single machine. Scale-up (vertical scaling), the traditional approach of supercomputing, increases the hardware capability of a single machine, for example, by using multiple cores. Today, every computer or server has more than one core, and many also have GPUs, TPUs, NPUs, or other deep learning hardware, so Python running on such hardware can be accelerated. Technologies here include Modin, which accelerates pandas on multiple cores. The lower right shows frameworks for distributed Python (scale-out). For example, Ray is an open-source distributed framework, originally developed at UC Berkeley and widely used at Ant Group. Mars can run on Ray, which acts as a scheduling service for the Python ecosystem, much as Kubernetes does for containers. Dask and Mars also provide distributed Python, but the best approach combines scale-up and scale-out: work is distributed across nodes, and the hardware of each node is fully used. Mars can be deployed both on large-scale clusters and on a single machine with GPUs. The following figure shows how to accelerate data science:

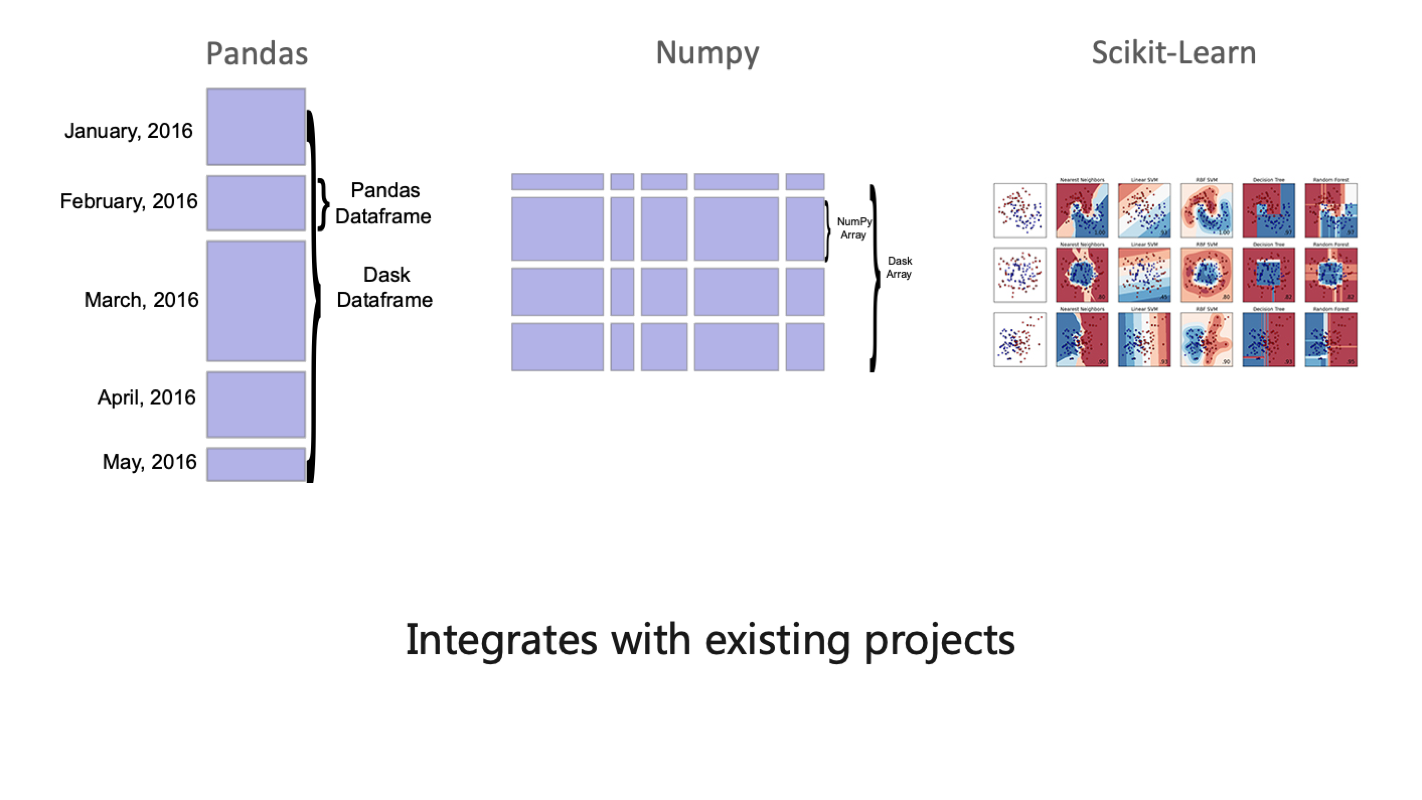
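Scale-up on a single machine can be sketched with the standard library and NumPy: split an array into chunks, process the chunks on several threads, and combine the partial results. This is a minimal illustration, not how Modin, Dask, or Mars are implemented. Any speedup relies on NumPy releasing the GIL inside many array operations such as `np.dot`; correctness does not depend on it. In a scale-out framework, the same chunks would be sent to different machines instead of different threads.

```python
import os
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def chunked_sum_of_squares(data: np.ndarray, n_chunks: int) -> float:
    """Scale-up sketch: split one array into chunks and use several cores on one machine."""
    chunks = np.array_split(data, n_chunks)
    workers = min(n_chunks, os.cpu_count() or 1)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # "Map" step: each thread computes the sum of squares of one chunk.
        partials = list(pool.map(lambda c: float(np.dot(c, c)), chunks))
    # "Reduce" step: combine the partial results.
    return sum(partials)


data = np.arange(1000, dtype=np.float64)
print(np.isclose(chunked_sum_of_squares(data, n_chunks=8), np.dot(data, data)))  # True
```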

In essence, Mars is designed to distribute the Python data science libraries: it can split DataFrame (pandas), NumPy, and scikit-learn workloads into pieces that run in parallel.

The idea is to split a large-scale task into small tasks for distributed computing, with the framework doing the splitting. First, the client submits a task. Then, Mars splits the task into subtasks and builds a directed acyclic graph (DAG) from them. Finally, the subtasks are executed and the results are collected.
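The split, DAG, execute, and collect cycle can be illustrated with a toy example that uses the standard library's `graphlib`. This is a hypothetical sketch, not Mars's scheduler or API: a "mean of a vector" job is tiled into one task per chunk plus a reduce task, and the graph is executed serially in dependency order. A real framework would dispatch every ready node to a worker in parallel.

```python
from graphlib import TopologicalSorter

import numpy as np


def make_partial(start, stop):
    """Map task for one chunk: returns (sum, count) and needs no upstream results."""
    def partial(data, results):
        return data[start:stop].sum(), stop - start
    return partial


def make_reduce(partial_keys):
    """Reduce task: combines the partial (sum, count) pairs into a mean."""
    def reduce_task(data, results):
        total = sum(results[k][0] for k in partial_keys)
        count = sum(results[k][1] for k in partial_keys)
        return total / count
    return reduce_task


def tile_mean(n_rows, chunk_size):
    """Split a 'mean of a vector' job into chunk tasks plus a reduce task, and build the DAG."""
    tasks, graph = {}, {}
    for i, start in enumerate(range(0, n_rows, chunk_size)):
        key = f"partial-{i}"
        tasks[key] = make_partial(start, min(start + chunk_size, n_rows))
        graph[key] = set()  # no predecessors
    partial_keys = set(graph)
    tasks["mean"] = make_reduce(partial_keys)
    graph["mean"] = partial_keys  # the reduce node depends on every chunk
    return tasks, graph


def execute(tasks, graph, data):
    """Run tasks serially in dependency order and collect every result."""
    results = {}
    for key in TopologicalSorter(graph).static_order():
        results[key] = tasks[key](data, results)
    return results


data = np.arange(10, dtype=float)
tasks, graph = tile_mean(len(data), chunk_size=4)
print(sorted(graph["mean"]))                # ['partial-0', 'partial-1', 'partial-2']
print(execute(tasks, graph, data)["mean"])  # 4.5
```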

Mars integrates some third-party Python packages, including mainstream machine learning and deep learning libraries. The following demo shows how to use Mars for intelligent recommendation, with the LightGBM classification algorithm deciding whether to send discounts to certain users.

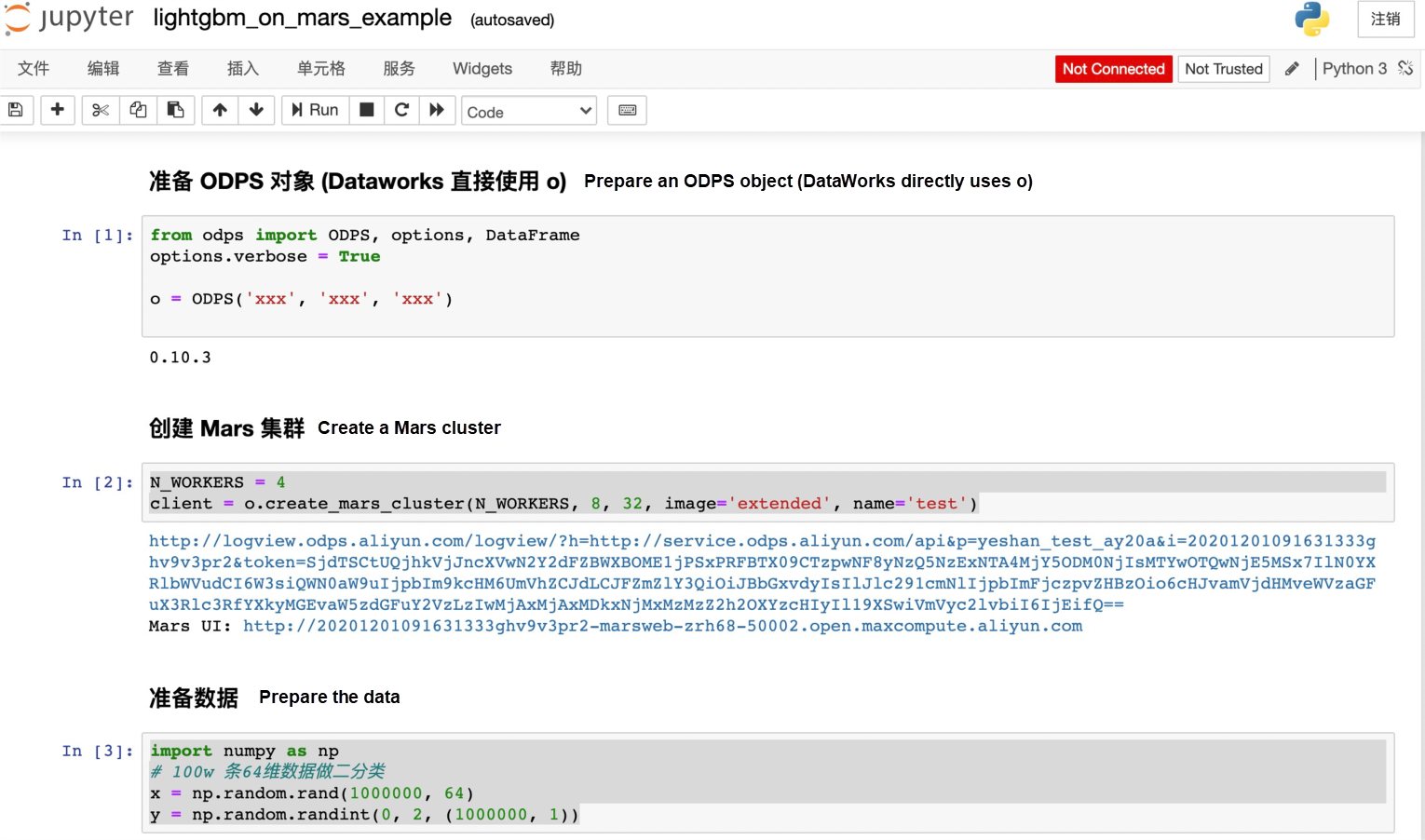

The main step in the first figure is to connect to MaxCompute with an AccessKey (AK), a project name, and an endpoint. Next, create a 4-node cluster in which each node has an 8-core CPU and 32 GB of memory, apply the extension package, and generate training data with 64-dimensional features describing 1 million users.

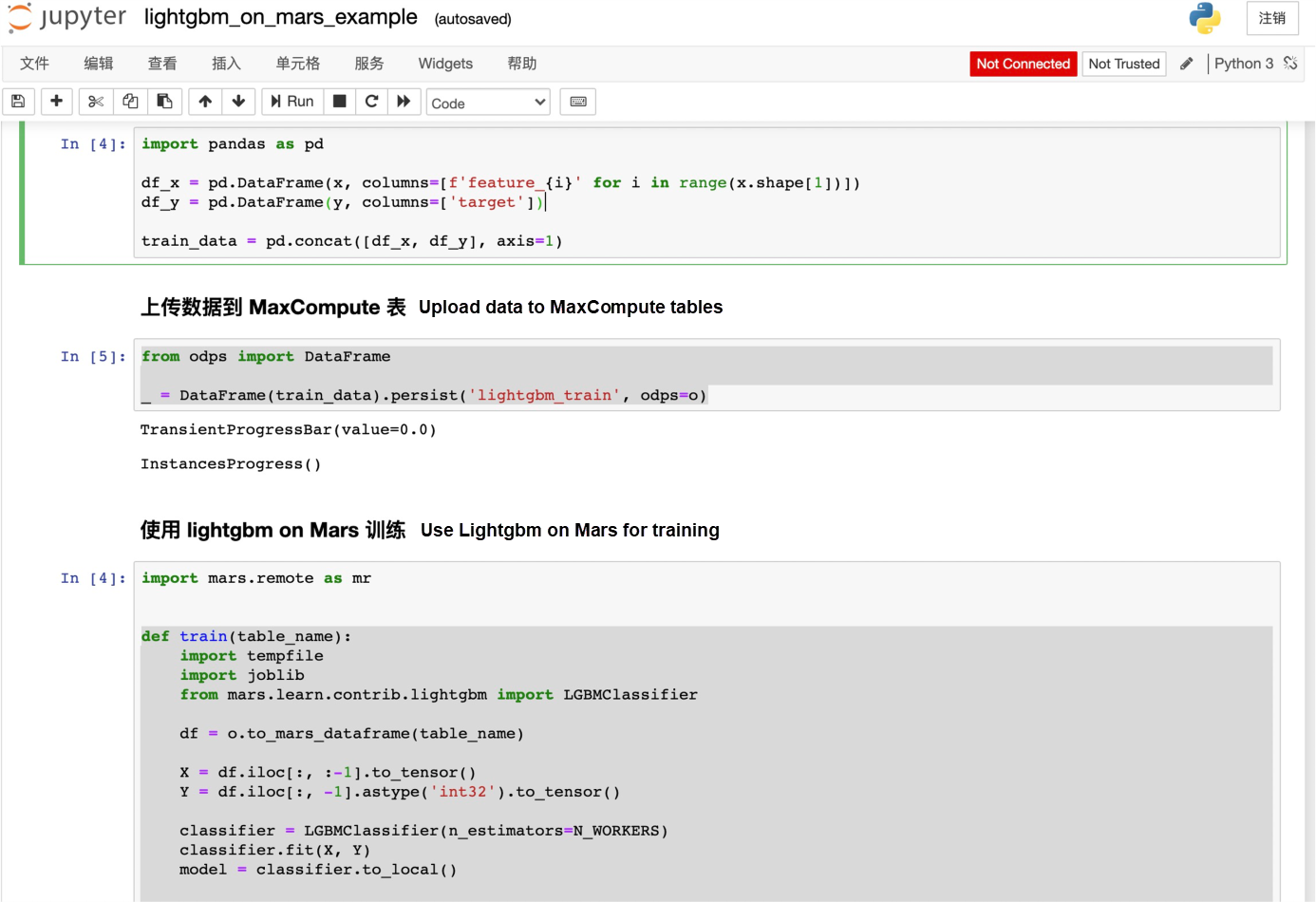

Train the model with the LightGBM binary classification algorithm:

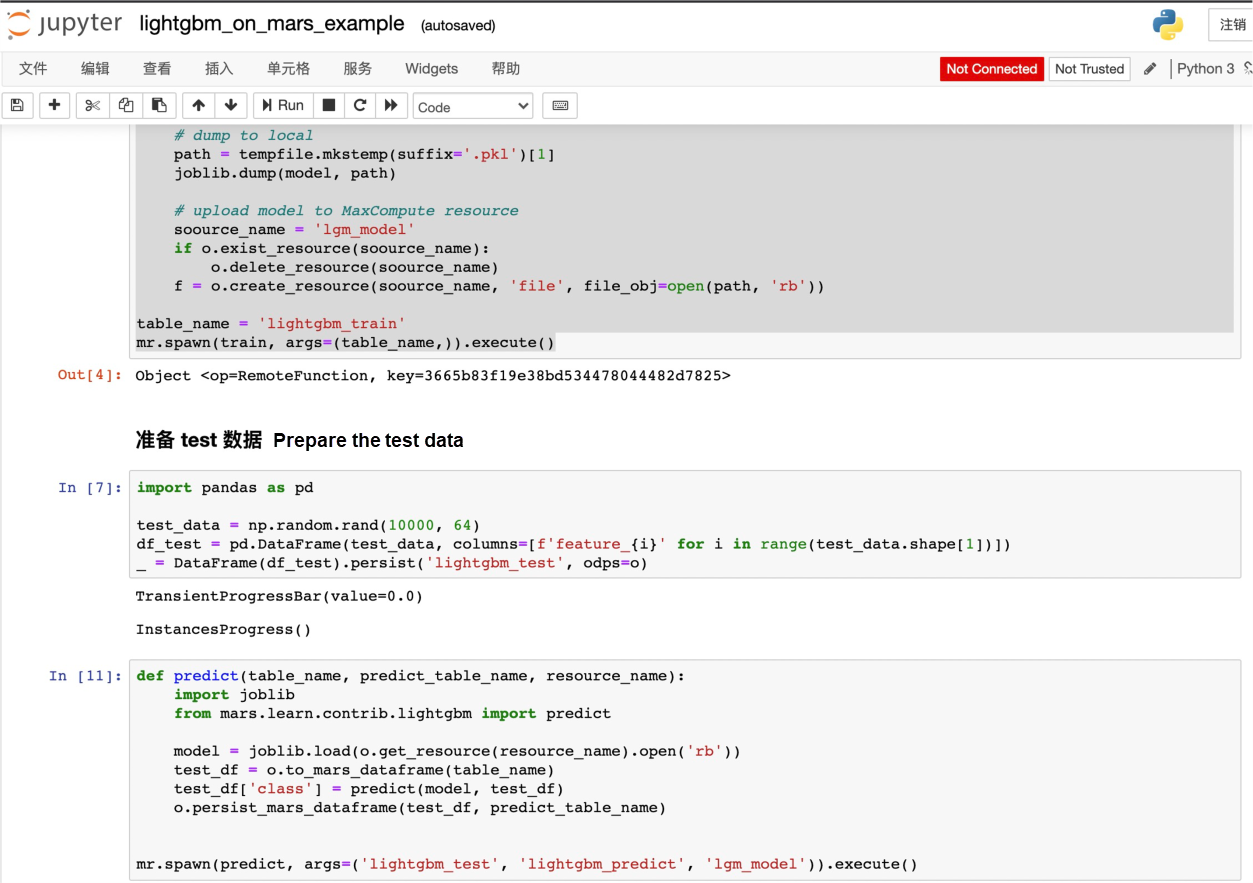

Upload the model to MaxCompute as a resource object with create resource, and prepare the test set data.

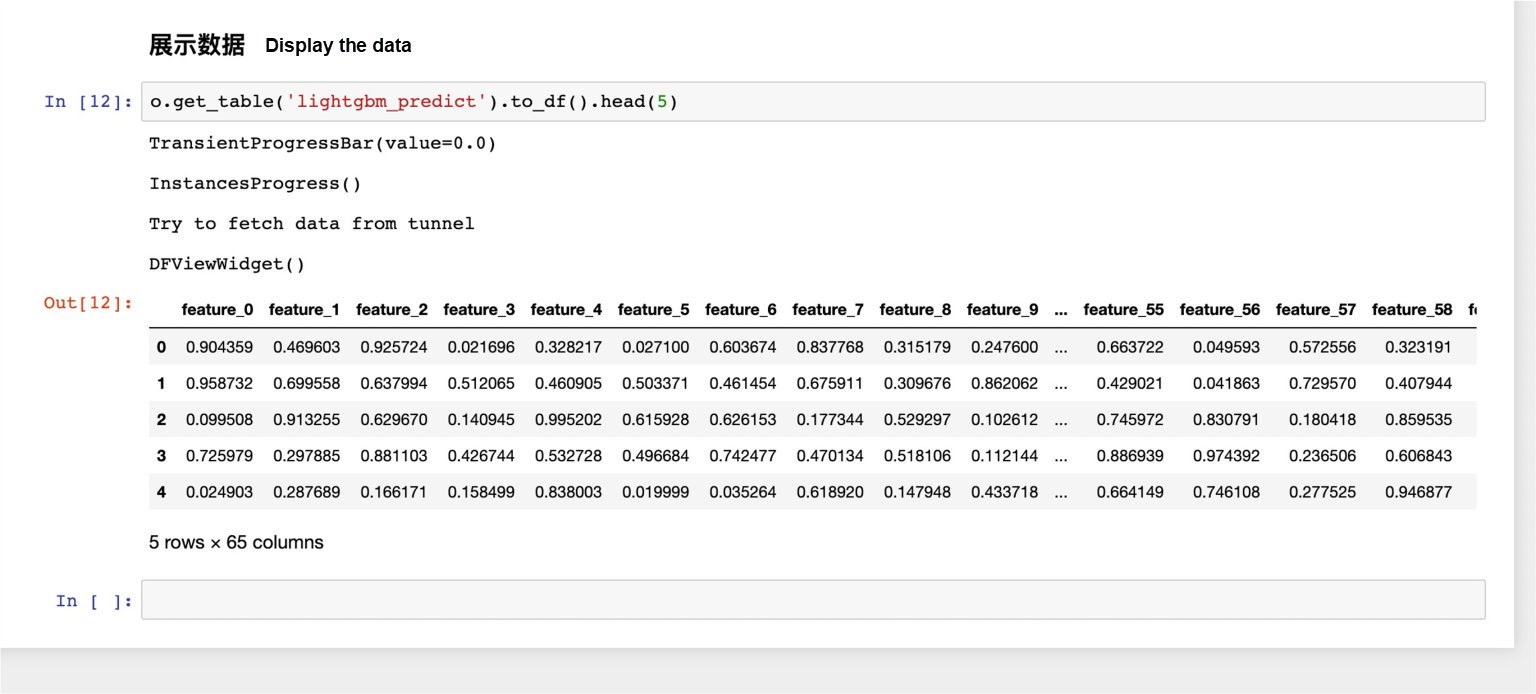

Use the test set to validate the model and obtain the classification results.
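The workflow of the demo (generate data, train a binary classifier, hold out a test set, validate, serialize the model) can be reproduced locally at small scale. In the sketch below, LightGBM is deliberately replaced by a plain NumPy logistic regression so that the code is self-contained; the data is synthetic with a hypothetical labeling rule, and 2,000 users stand in for 1 million. It uses neither Mars nor MaxCompute, and the upload of the serialized model as a resource is not shown.

```python
import pickle

import numpy as np

rng = np.random.default_rng(seed=0)

# Synthetic stand-in for the demo data: 64 features per user, scaled down from
# 1 million users to 2,000. The labeling rule below is hypothetical.
n_users, n_features = 2_000, 64
X = rng.normal(size=(n_users, n_features))
true_w = rng.normal(size=n_features)
noise = rng.normal(scale=0.5, size=n_users)
y = (X @ true_w + noise > 0).astype(float)  # 1.0 = send a discount

# Hold out the last 20% of users as the test set.
split = int(0.8 * n_users)
X_train, X_test = X[:split], X[split:]
y_train, y_test = y[:split], y[split:]


def sigmoid(z):
    # Clip to avoid overflow in np.exp for very large |z|.
    return 1.0 / (1.0 + np.exp(-np.clip(z, -500, 500)))


def train_logistic_regression(X, y, lr=0.1, epochs=500):
    """Binary classifier fitted by batch gradient descent on the log loss."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        error = sigmoid(X @ w + b) - y  # gradient of the log loss w.r.t. the logits
        w -= lr * (X.T @ error) / len(y)
        b -= lr * error.mean()
    return w, b


w, b = train_logistic_regression(X_train, y_train)

# Validate on the held-out test set.
y_pred = (sigmoid(X_test @ w + b) > 0.5).astype(float)
accuracy = float(np.mean(y_pred == y_test))
print(f"test accuracy: {accuracy:.3f}")

# Serialize the trained parameters; bytes like these are what would be
# uploaded as a resource.
model_bytes = pickle.dumps({"w": w, "b": b})
```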
