By Zichou

This presentation offers an overview of canary release practices from a DevOps perspective, focusing on four main aspects:

First, the problems to be solved by canary release.

Second, four typical scenarios of canary release.

Third, how to integrate canary release into the application development process, that is, to integrate canary release with DevOps work.

Fourth, a demonstration of how to use tools to implement the external traffic canary scenario.

In the early days of software development in industries such as finance and telecommunications, the concept of canary release did not exist. Updates were mostly deployed to production in full, after lengthy testing that covered the entire system rather than individual microservices. The goal was to make the business as secure and free of risk as possible by the time it reached production, because once it did, rolling back or withdrawing a release was costly and difficult.

Therefore, in traditional software development, developers bear a heavy mental burden in ensuring that the risk is low enough once a new version enters production.

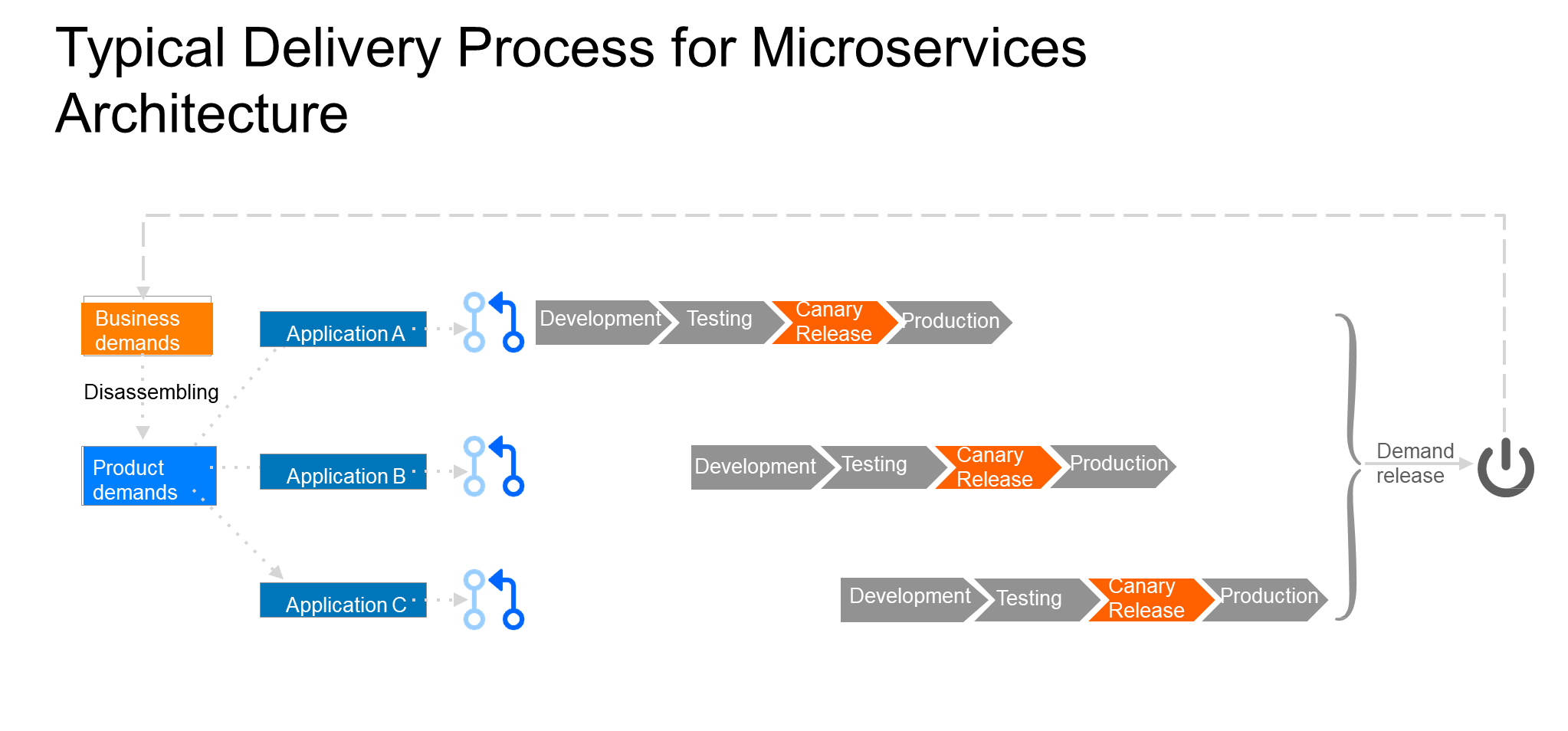

In a microservices architecture, most developers work on web applications, and the way their requirements are delivered has changed significantly. Delivery units have become smaller: each delivery usually contains one or a few small requirements. These changes typically touch only a few applications, which do not need to be deployed simultaneously and can be deployed on demand, as shown in the following figure:

Suppose that after the requirements from the business side are decomposed, three applications need to be modified. Each application goes through code submission, development testing, and production deployment. Along the way there is also a canary release phase, in which some traffic is directed to the new version in advance to check for issues before full deployment. If no problems are found, the application is fully deployed. Once all three applications are deployed, the feature is switched on and the new business capability becomes visible to users.

Compared to traditional software development, especially in industries like finance and telecommunications, the biggest difference in typical requirement delivery under microservices architecture is the ability to perform real validation on a small scale. Delivery units are smaller, which helps in controlling risks effectively.

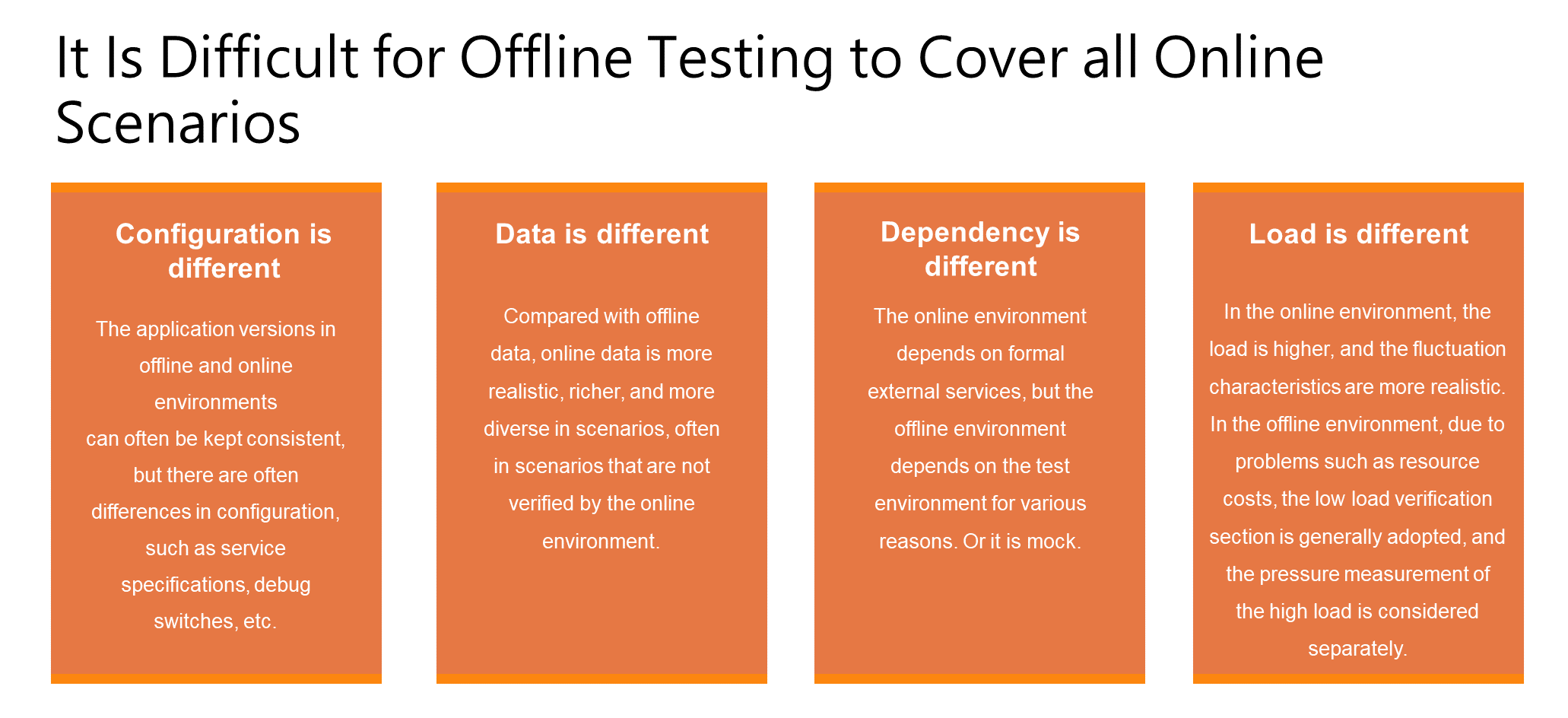

It is difficult for offline testing to cover all online scenarios. Even a well-designed test environment still differs from production. Simply put, offline and online environments differ in at least four aspects.

First, the configuration is different. Keeping application versions consistent between offline and online environments is not difficult, but there are often differences in configuration, such as service specifications and debugging switches.

Second, the data is different. Online data is more realistic and richer, and the scenarios are more diverse. For example, in network development, even if various scenarios are simulated offline, problems may still occur online.

Third, the dependencies are different. Some external services cannot be reached from the offline environment and must be replaced with simulated interfaces; for example, certain financial software relies on interfaces provided by the People's Bank of China.

Fourth, the load is different. Online loads are higher and exhibit more realistic fluctuations. For example, at certain times, there may be a sudden surge in traffic, resulting in different peak and valley values of traffic. In offline environments, considering resource costs, the workload is generally lower, and stress testing for high loads is typically considered separately. During stress testing, the coverage of scenarios may also be reduced compared to normal testing.

Because of these differences, offline testing can hardly cover every scenario found online. We need canary release or online testing to make up for the shortcomings of offline testing, and fundamentally to reduce the mental burden on developers.

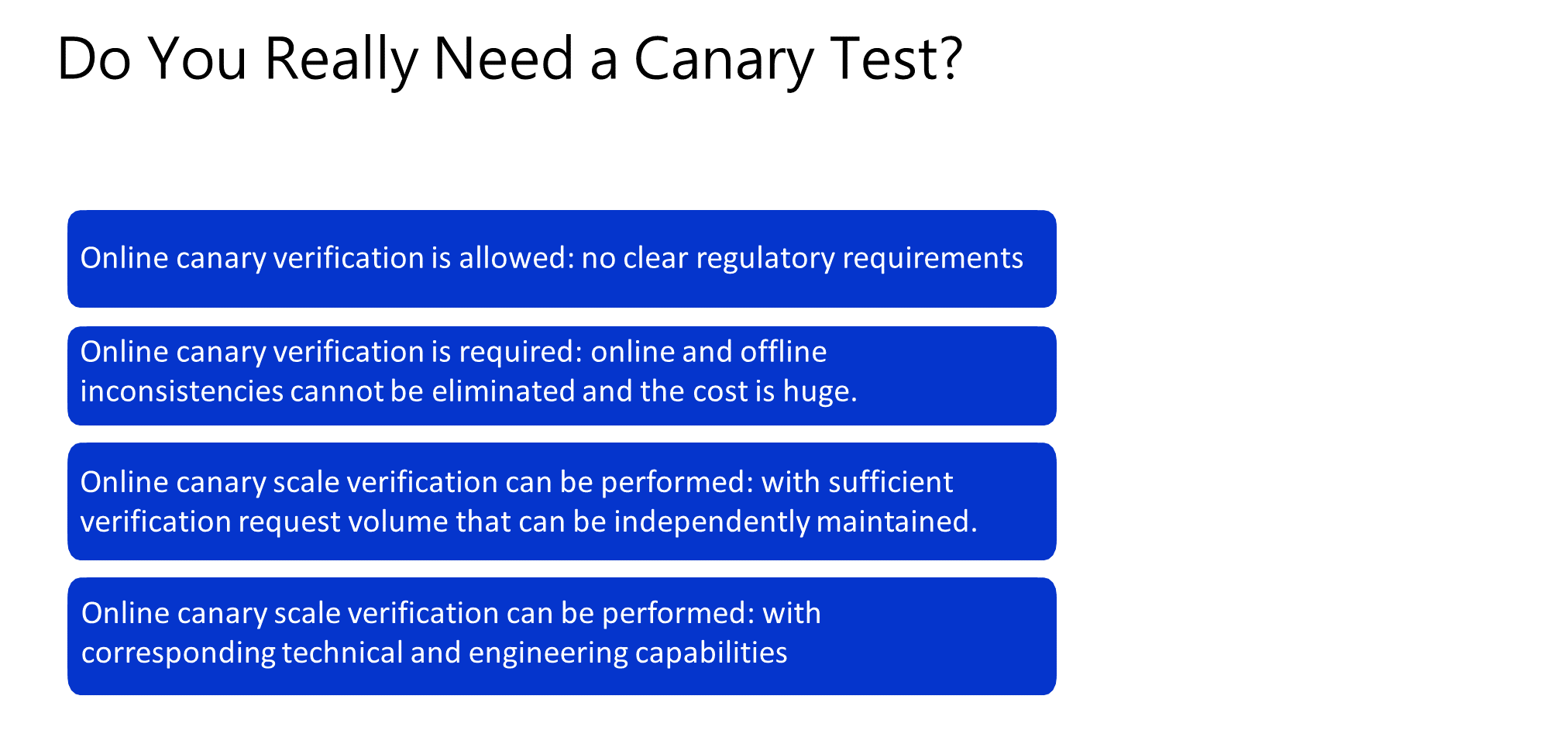

However, whether canary testing is necessary still requires careful consideration of business scenarios and other factors.

First, whether online canary validation is allowed at all varies; for instance, certain industries with strict regulatory requirements cannot use online canary validation.

Second, whether we really need online canary verification. Inconsistencies between online and offline environments can never be fully eliminated, and canary verification itself is costly. If, weighed against that cost, the risk of skipping canary verification is acceptable, canary verification may be skipped.

Third, whether we have the conditions for online canary verification, that is, whether the canary version can be operated independently. In addition, if the service receives very few requests and page views, canary verification is relatively expensive for what it reveals, and it may not be needed.

Fourth, even when we can perform online canary verification, we also need the corresponding engineering capabilities and tools.

There are four common scenarios of canary release, namely, simple batch, external traffic canary, external + internal traffic canary, and full-link (traffic + data) canary. The four scenarios are progressive.

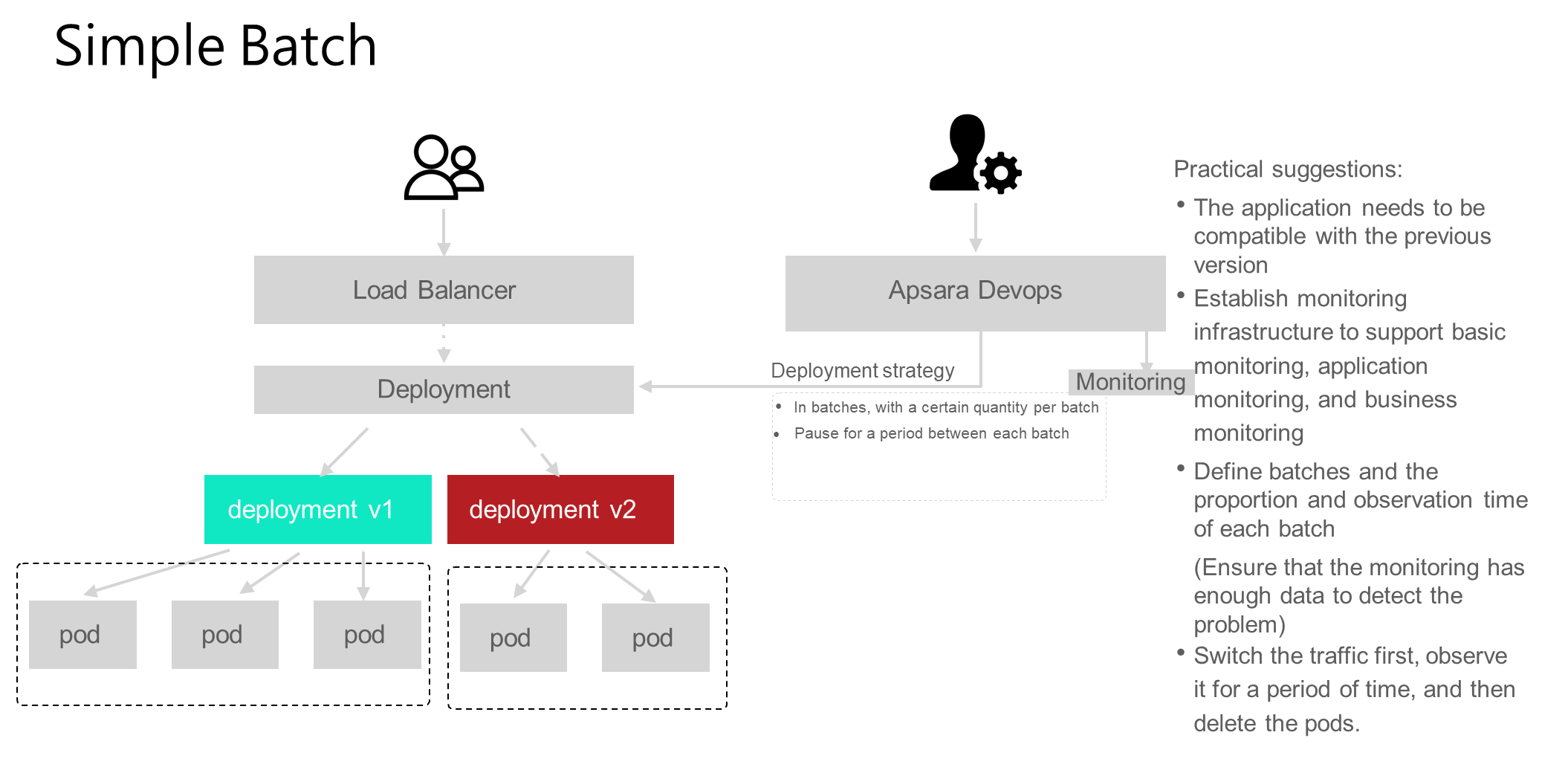

Simple batch deployment does not route traffic by any request characteristic: the new and old versions simply run side by side in the environment, and requests are randomly routed to either one. For example, if a service is backed by 10 pods and traffic is evenly distributed, each pod receives about 10% of the traffic, so replacing one pod with the new version sends roughly 10% of requests to it. Simple batch deployment has two characteristics. First, it deploys in batches, and each batch receives a certain share of traffic. Second, there is a period of observation and validation between batches. Observation tools between batches are therefore essential: while two versions of the application coexist, you must use them to check whether the application runs normally and whether there is a risk of failure.
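To make the 10% figure concrete, here is a minimal simulation. It assumes each request goes to a pod chosen uniformly at random, which only approximates how a Kubernetes Service spreads load; the function name, seed, and request count are illustrative.

```python
import random
from collections import Counter


def simulate_random_routing(pod_versions, n_requests, seed=0):
    """Send each request to a uniformly random pod; return the traffic share per version."""
    rng = random.Random(seed)
    hits = Counter(rng.choice(pod_versions) for _ in range(n_requests))
    return {version: count / n_requests for version, count in hits.items()}


# First batch: 1 of 10 pods runs the new version v2.
pods = ["v1"] * 9 + ["v2"]
shares = simulate_random_routing(pods, 100_000)
print(shares)  # v2 receives close to 10% of requests
```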

Simple batch is the simplest canary release mode. It ignores traffic routing and simply runs two versions online. It still has some requirements. First, the new version must be backward compatible: while v1 and v2 coexist, v2 must be compatible with v1. Otherwise, when a user's requests hit v1 first and then v2, inconsistencies will occur. Second, monitoring, including infrastructure, application, and business monitoring, strengthens validation during canary releases. The more comprehensive the monitoring, the more confident we can be that the new version is problem-free. Finally, the number of batches, the proportion of each batch, and the observation time must be clearly defined so that monitoring can collect enough data to surface problems. Therefore, allow enough observation time between batches.
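The batch plan described above (number of batches, size of each batch, observation window, and a health check between batches) can be sketched as a small loop. This is a hypothetical model rather than any tool's API: Batch, run_batches, and the healthy callback are illustrative names, and the callback stands in for the infrastructure, application, and business monitoring mentioned above.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Batch:
    pods_to_update: int   # additional pods switched to the new version in this batch
    observe_seconds: int  # observation window before the next batch


def run_batches(total_pods: int, plan: List[Batch],
                healthy: Callable[[int], bool]) -> int:
    """Roll out batch by batch and stop at the first failed observation.

    `healthy(updated)` is a hypothetical hook for checking monitoring data
    after the observation window. Returns how many pods run the new version
    when the rollout stops.
    """
    updated = 0
    for batch in plan:
        updated = min(total_pods, updated + batch.pods_to_update)
        # A real pipeline would wait batch.observe_seconds here while metrics accumulate.
        if not healthy(updated):
            print(f"stop: problem found with {updated}/{total_pods} pods updated")
            return updated
        print(f"{updated}/{total_pods} pods updated, observed {batch.observe_seconds}s")
    return updated
```

For example, with plan = [Batch(1, 600), Batch(4, 600), Batch(5, 0)], run_batches(10, plan, lambda n: n < 5) stops after the second batch and returns 5, leaving the remaining pods on the old version.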

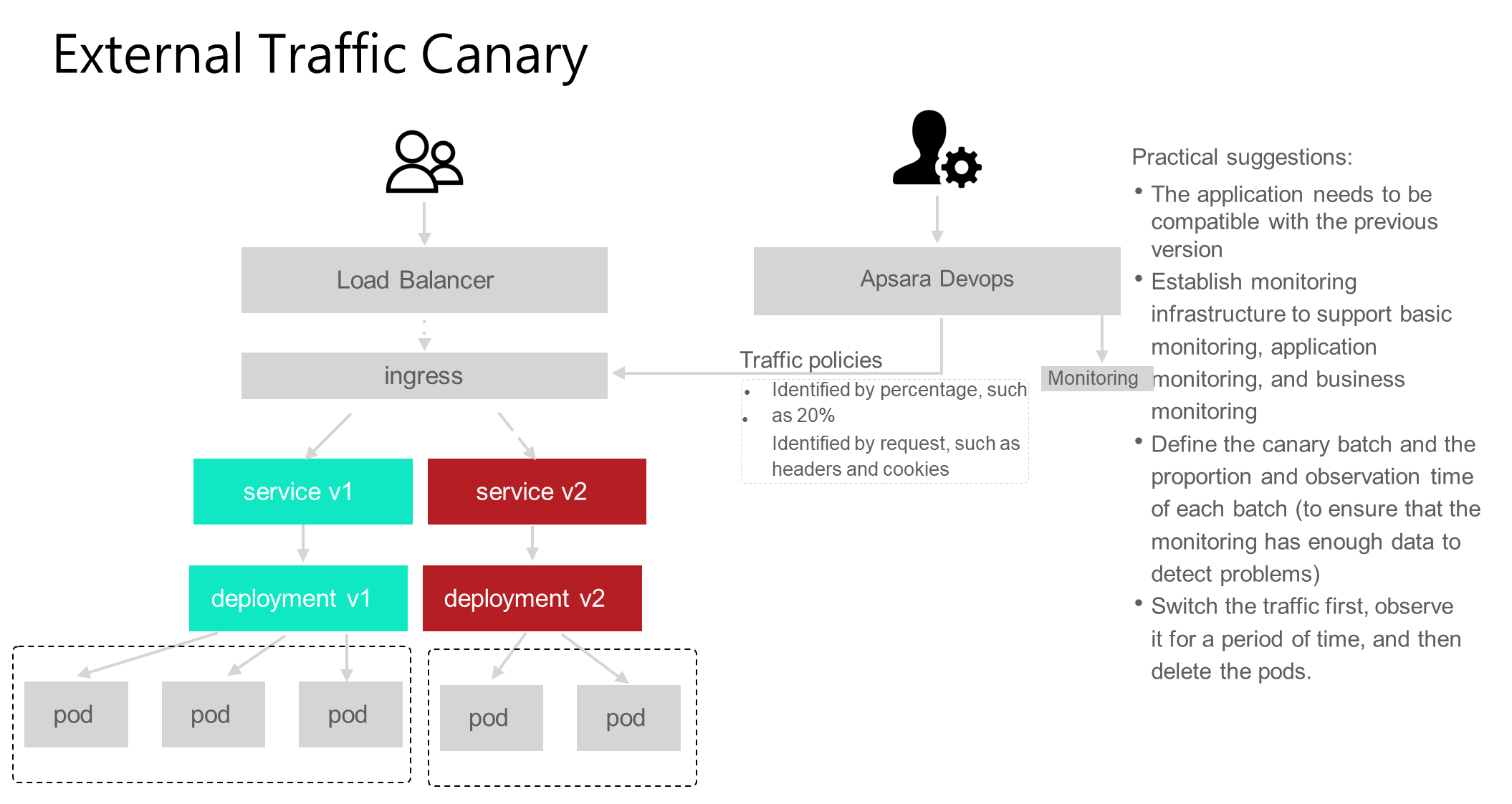

The biggest issue with simple batch deployment is that it cannot control which traffic reaches the new version. If the new version is of poor quality, randomly selected online users are likely to be affected. To address this, we need to isolate canary traffic, routing only specific traffic to the canary service to minimize the impact on normal traffic. This leads to the next scenario: external traffic canary.

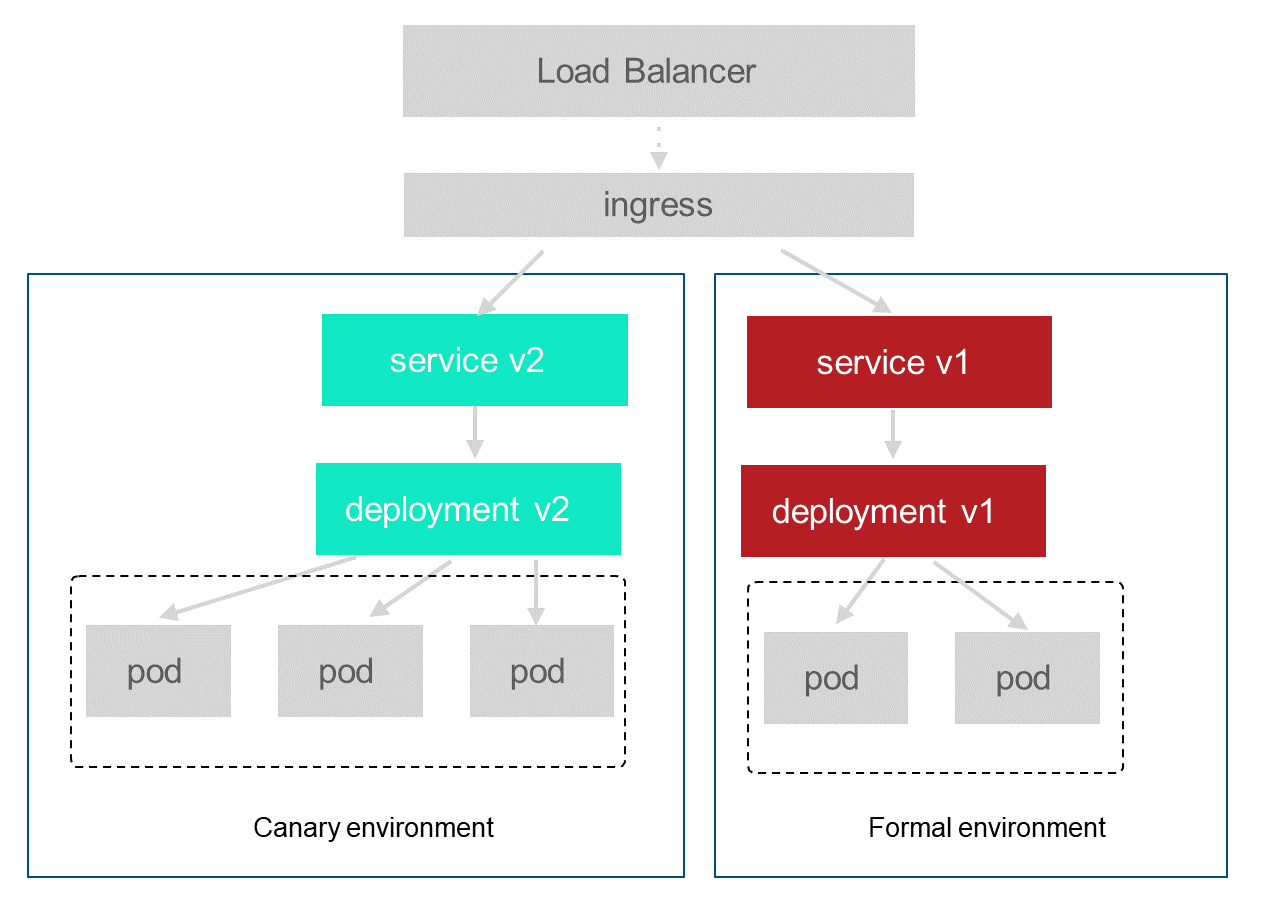

For workloads on Kubernetes, after traffic enters through an ingress, a specific identifier controls which application version it reaches. The identifier can be a percentage, such as 20% of traffic entering service v1 and 80% entering service v2. It can also be a request characteristic, such as routing requests with a specified header to service v2 and all other traffic to service v1. The biggest difference between external traffic canary and simple batch is that traffic is distinguished at the entrance. For example, when traffic is batched by request identifier, only requests with specified headers, cookies, or region characteristics enter the canary version. External traffic canary scenarios also have requirements. First, ensure compatibility with the previous version, because this scenario places no constraints on calls between internal services. Second, there must be a monitoring system, and the batches and observation times must be clearly defined. Finally, we recommend switching traffic before clearing the workload, with a period of observation in between: after traffic is cut from the canary to the official version, observe for a while before deleting the canary workload.
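The two kinds of identifiers can be combined into one routing decision. The sketch below assumes header rules are checked before the percentage, which mirrors the precedence documented for the NGINX Ingress Controller's canary annotations (canary-by-header, then canary-by-cookie, then canary-weight). The function itself is hypothetical and only models the decision; the env/grey header comes from the demo later in this article, and header keys are assumed to be lowercase.

```python
import random
from typing import Mapping, Optional


def route_at_ingress(headers: Mapping[str, str],
                     canary_header: str = "env",
                     canary_value: str = "grey",
                     canary_weight: int = 0,
                     rng: Optional[random.Random] = None) -> str:
    """Return "canary" or "stable" for one incoming request.

    Requests carrying the canary header value always go to the canary.
    Other requests go to the canary with probability canary_weight / 100.
    """
    if headers.get(canary_header) == canary_value:
        return "canary"
    rng = rng or random.Random()
    if rng.randrange(100) < canary_weight:
        return "canary"
    return "stable"
```

Checking the header first means testers can always reach the canary explicitly, even while the weight is still 0.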

The problem with external traffic canary scenarios is that routing by identifier happens only at the ingress. Once a request moves into calls between internal services, routes can no longer be distinguished by the traffic identifier.

Another unavoidable question in external traffic canary scenarios is how to set the canary identifier that controls ingress traffic distribution. Several common approaches are as follows:

First, use client attributes. This is a common approach, for example identifying the client's carrier (China Mobile, China Telecom, or China Unicom), geographical location (Zhejiang, Jiangsu, or Shanghai), or browser version. For example, traffic from China Mobile users in a specific province can be routed to the canary version as the canary user set. This makes the canary characteristics very clear and simplifies the observation of canary user data.

Second, hash customer IDs into groups. This more dispersed method avoids bias toward a particular user group: a canary group made up only of clients in Zhejiang Province or on China Mobile may encounter issues that are not present with other carriers, which skews the results.

Third, carry the canary identifier in a cookie, header, or query parameter. This is commonly supported by Ingress; for example, an online canary service can use a cookie to route requests to the canary environment.

Fourth, use designated identifiers: prepare specific identifiers in advance and distribute them to selected users for verification.
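The four approaches can be expressed as predicates that decide whether a request belongs to the canary user set. All constants below (provinces, carrier, percentage, and designated IDs) are hypothetical. The hash-based strategy uses SHA-256 rather than Python's built-in hash(), because hash() of a string is randomized per process and would reassign customers on every restart.

```python
import hashlib
from typing import Mapping, Optional, Set

# Hypothetical canary settings for illustration only.
CANARY_PROVINCES = {"Zhejiang"}
CANARY_CARRIERS = {"China Mobile"}
CANARY_PERCENT = 10
DESIGNATED_IDS: Set[str] = {"tester-001", "tester-002"}


def by_client_attributes(province: str, carrier: str) -> bool:
    """Strategy 1: a clearly defined user set, e.g. one carrier in one province."""
    return province in CANARY_PROVINCES and carrier in CANARY_CARRIERS


def by_customer_hash(customer_id: str, percent: int = CANARY_PERCENT) -> bool:
    """Strategy 2: a stable hash of the customer ID mapped into 100 buckets."""
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < percent


def by_request_marker(headers: Mapping[str, str], cookies: Mapping[str, str],
                      query: Mapping[str, str], key: str = "env",
                      value: str = "grey") -> bool:
    """Strategy 3: the identifier travels in a header, cookie, or query parameter."""
    return value in (headers.get(key), cookies.get(key), query.get(key))


def by_designated_id(user_id: Optional[str]) -> bool:
    """Strategy 4: identifiers prepared in advance and handed to chosen users."""
    return user_id in DESIGNATED_IDS
```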

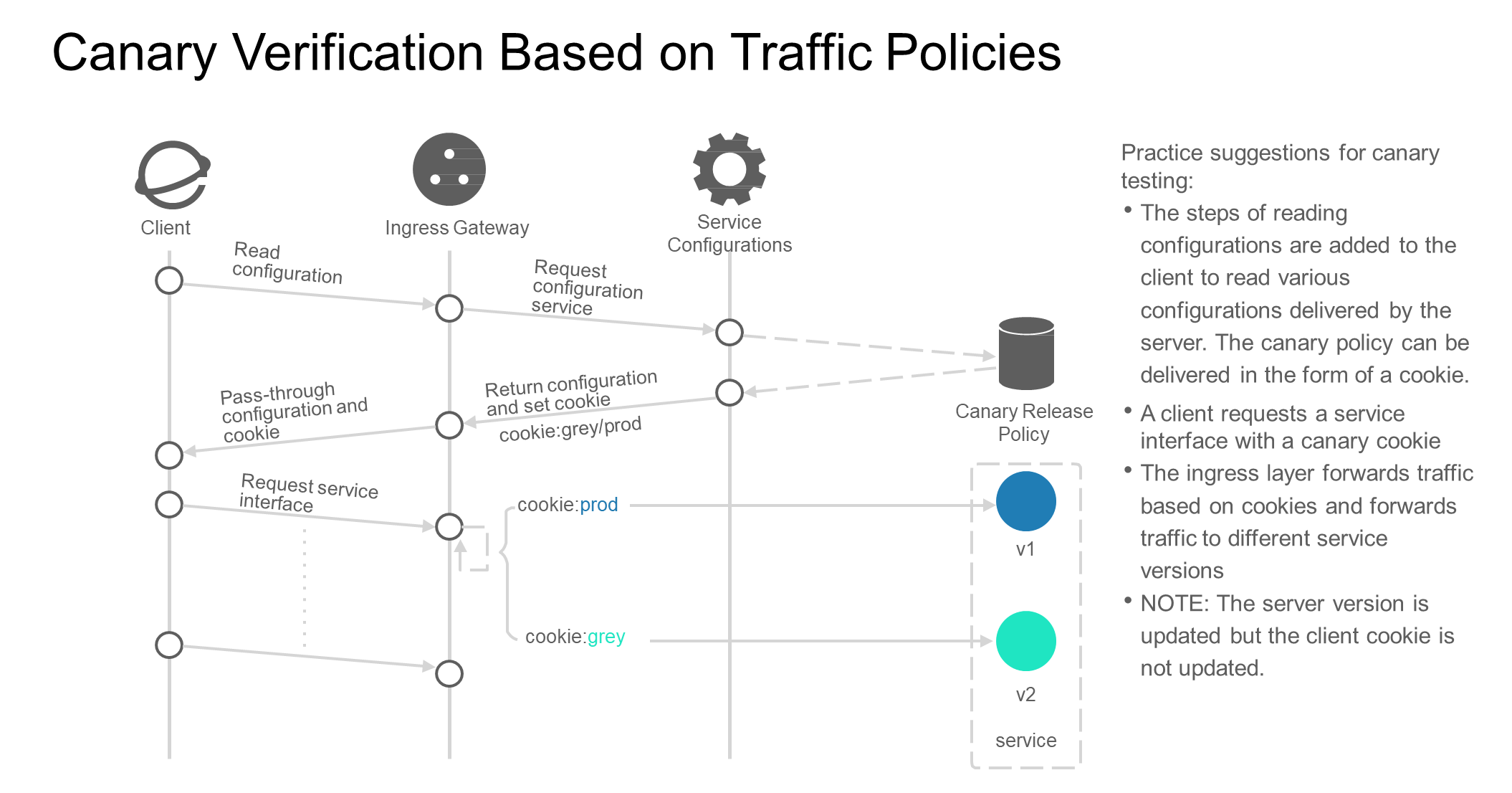

After you set the canary identifier, the process of canary verification is as follows:

The client reads the canary policy, which returns a configuration such as env: grey or another canary identifier. When the client calls a service interface, requests that carry the canary identifier are routed to the new version of the service, and requests that do not carry it are routed to the old version. This implements canary verification.
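A minimal sketch of this flow, assuming the policy reaches the client as a small JSON document and the identifier is the env: grey header used in the demo. The policy format and all function names are hypothetical.

```python
import json
from typing import Dict


def read_canary_policy(raw: str) -> Dict[str, str]:
    """Parse the canary policy returned to the client, e.g. '{"env": "grey"}'."""
    return json.loads(raw)


def build_request_headers(policy: Dict[str, str],
                          base: Dict[str, str]) -> Dict[str, str]:
    """Client side: copy the canary identifier from the policy into outgoing headers."""
    headers = dict(base)
    if policy.get("env") == "grey":
        headers["env"] = "grey"
    return headers


def choose_version(headers: Dict[str, str]) -> str:
    """Server side: requests carrying the identifier go to the new version."""
    return "new" if headers.get("env") == "grey" else "old"
```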

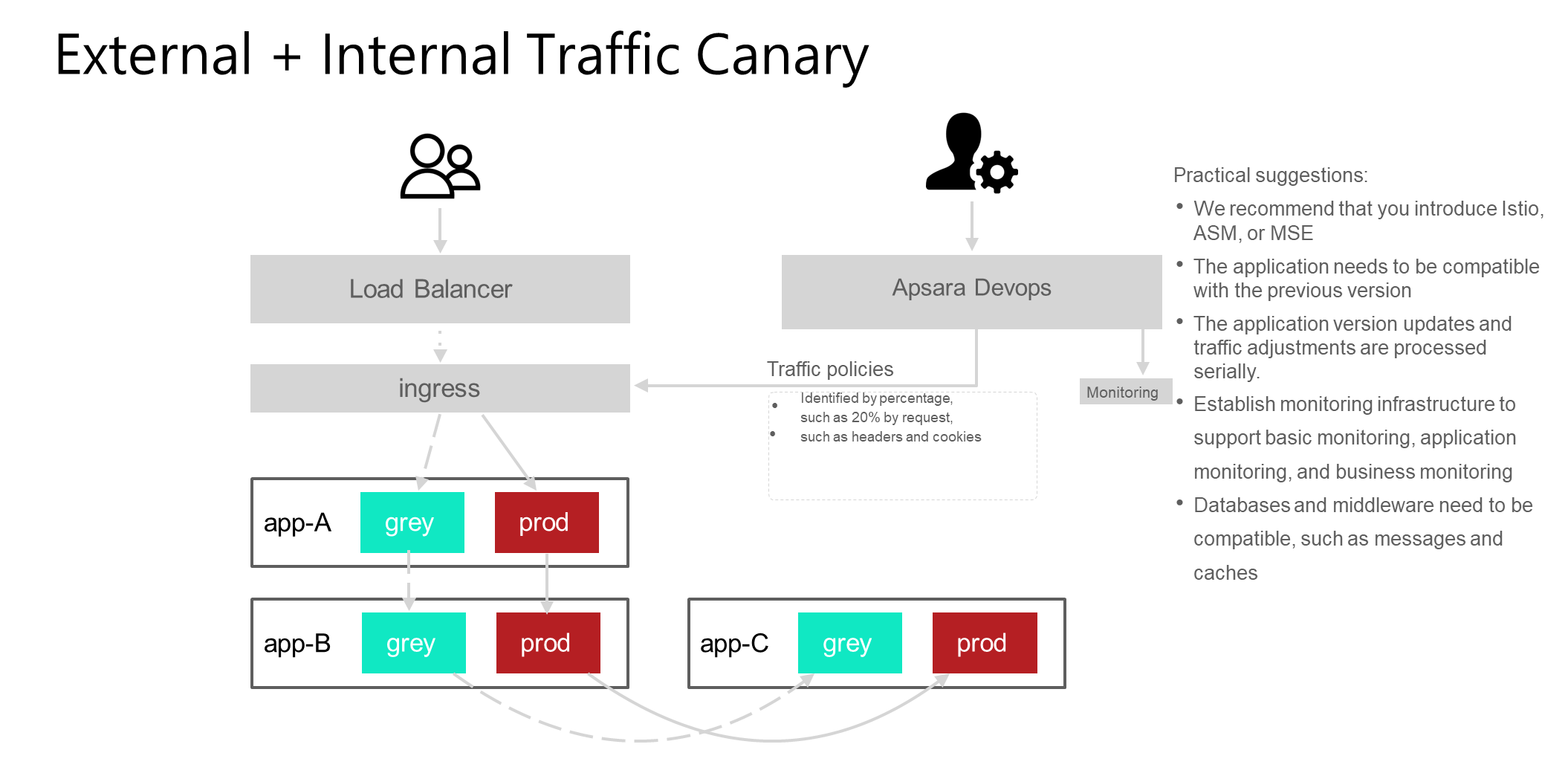

The external traffic canary scenario described above cannot handle traffic between the microservices inside an application. In such cases, external + internal traffic canary is needed.

First, the entrance is the same as in the previous scenario: when traffic enters, the traffic policy attaches the canary identifier. Inside the application, there is also a routing policy for internal traffic. For example, grey traffic flows to the grey versions of app-B and app-C, while prod traffic is routed to the prod versions of the other apps. The actual traffic policy will be more complex: if app-C has only one version, both grey and prod traffic will be routed to that version.
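The routing rule above, including the fallback when an application has only one version, can be sketched as follows. The deployment table and function names are hypothetical. In practice a service mesh such as Istio expresses this with header-matching routes in a VirtualService and version subsets in a DestinationRule; the sketch models only the decision, not that configuration.

```python
from typing import Dict, List, Set

# Hypothetical deployment state: versions each app currently runs.
DEPLOYED: Dict[str, Set[str]] = {
    "app-A": {"prod", "grey"},
    "app-B": {"prod", "grey"},
    "app-C": {"prod"},  # only one version deployed
}


def resolve_version(app: str, tag: str) -> str:
    """Pick the version of `app` for a request carrying `tag` ("grey" or "prod")."""
    versions = DEPLOYED[app]
    if len(versions) == 1:
        # Only one version exists, so grey and prod traffic both go to it.
        return next(iter(versions))
    if tag in versions:
        return tag
    return "prod"


def call_chain(tag: str, chain: List[str]) -> List[str]:
    """Resolve every hop of a call chain with the same propagated tag."""
    return [f"{app}:{resolve_version(app, tag)}" for app in chain]
```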

This raises a question: how to support internal traffic canary with as few application changes as possible. You can use tools such as Istio, ASM, and MSE to route internal RPC traffic.

Second, compatibility between versions is crucial, because in addition to application calls, data, messages, and other factors are not differentiated in this scenario.

Third, application version updates and traffic adjustments are often processed serially. For example, some teams want to control the deployment order of applications, and the order may differ each time. Applications with dependencies must then be deployed in the correct order. For example, if A depends on B and C, A should be deployed only after B and C have been updated. If the order is wrong and traffic reaches the new A before B and C are updated, unexpected risks arise. This places a heavy mental burden on developers. A different approach is to delay the entry of canary validation traffic: first deploy the canary environments for A, B, and C simultaneously, and only then introduce canary traffic. Separating traffic adjustment from version updates removes the ordering dependencies during application updates.
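If updates and traffic are kept serial, the deployment order is a topological sort of the dependency graph. A sketch using Python's standard graphlib module (Python 3.9+) with the A, B, C example from the text:

```python
from graphlib import TopologicalSorter

# Each key lists the services it depends on: A depends on B and C.
dependencies = {"A": {"B", "C"}, "B": set(), "C": set()}

# static_order() yields every node after all of its dependencies.
order = list(TopologicalSorter(dependencies).static_order())
print(order)  # B and C (in either order) come before A
```

Decoupling traffic from version updates, as suggested above, removes the need to compute and enforce this order at all.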

Fourth, monitoring is the same as in the previous canary scenarios.

Fifth, databases and middleware need to be compatible. If the canary and production versions of application C use the same cache, they may interfere with each other. Similarly, if they consume the same messages, messages produced by the canary environment may be consumed by production. Whether this remains compatible must be considered. At this point, canary release has essentially been implemented on the traffic side, except for middleware, while the data side has not been handled.
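One common mitigation for the message problem is to tag each message with the environment that produced it and have consumers skip messages from the other environment. The sketch below is hypothetical and broker-agnostic: it assumes each environment subscribes with its own consumer group, so both see every message; with a shared consumer group, a message filtered out by the wrong consumer would never be processed.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Message:
    body: str
    env: str = "prod"  # hypothetical environment tag set by the producer


@dataclass
class Consumer:
    env: str  # "prod" or "grey"
    received: List[str] = field(default_factory=list)

    def on_message(self, msg: Message) -> None:
        # Process only messages produced by this consumer's own environment.
        if msg.env == self.env:
            self.received.append(msg.body)


def deliver(topic: List[Message], consumers: List[Consumer]) -> None:
    """Deliver every message on a shared topic to every consumer (one group each)."""
    for msg in topic:
        for consumer in consumers:
            consumer.on_message(msg)
```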

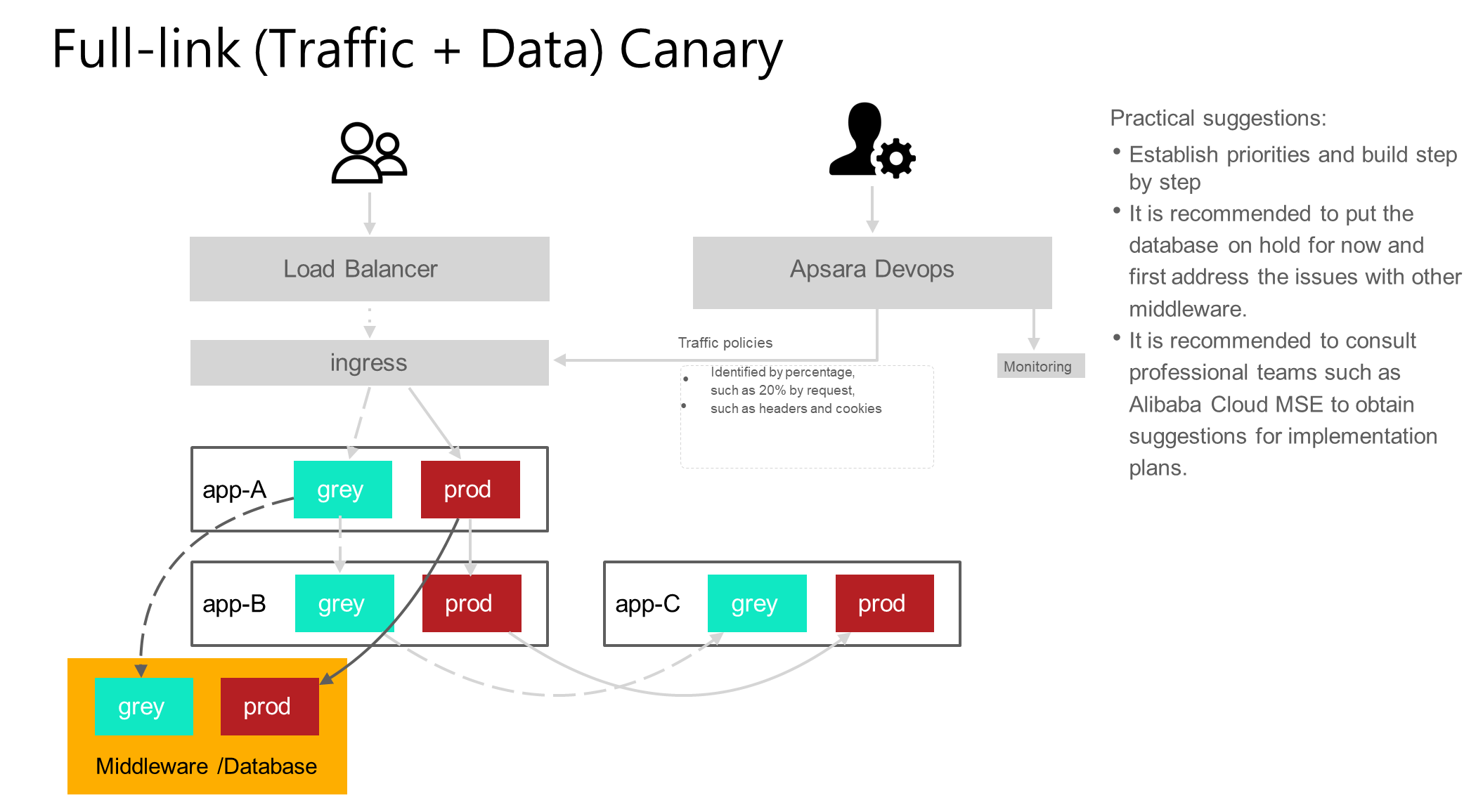

This scenario handles canary release of both traffic and data. It covers not only full-link traffic canary but also middleware (such as messages and cache) and databases, all of which must recognize the traffic identifier. It relies heavily on middleware support and requires strong engineering capabilities from the team.

For this scenario, our suggestion is, first, build gradually according to priority; second, solve middleware problems outside the database first; third, try to seek professional technical teams, such as the MSE team, to obtain implementation solutions.

The four canary release scenarios progress from simple to complex. Simple batch is the simplest and has the lowest cost.

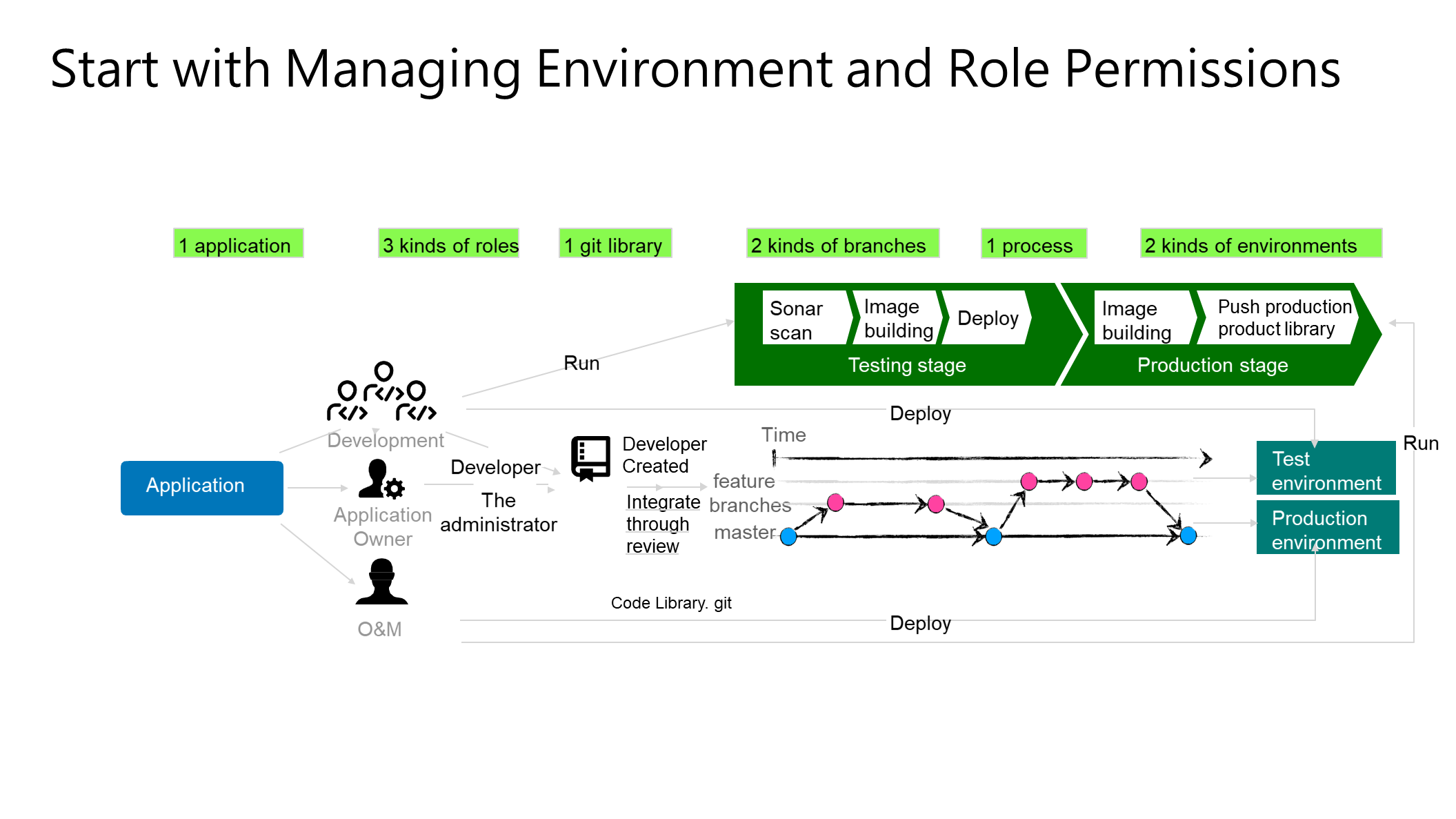

Next, we look at how to embed canary release in the application R&D process, that is, how to make it a fixed part of that process.

First, we recommend treating the canary environment as an independent environment, isolated from the formal environment, to reduce the complexity of environment management and permission control. Second, define different roles for applications, such as development and O&M, and give each role different environment permissions.
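With the canary treated as its own environment, role permissions can be written as a simple table keyed by role and environment. The roles, environments, and actions below are hypothetical examples of such a split, not a specific product's permission model.

```python
from typing import Dict, Set

# Hypothetical role -> environment -> allowed actions.
PERMISSIONS: Dict[str, Dict[str, Set[str]]] = {
    "developer": {"test": {"deploy", "view"}, "canary": {"view"}, "prod": {"view"}},
    "ops": {"test": {"view"}, "canary": {"deploy", "view"}, "prod": {"deploy", "view"}},
}


def can(role: str, env: str, action: str) -> bool:
    """Return True if `role` may perform `action` in environment `env`."""
    return action in PERMISSIONS.get(role, {}).get(env, set())
```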

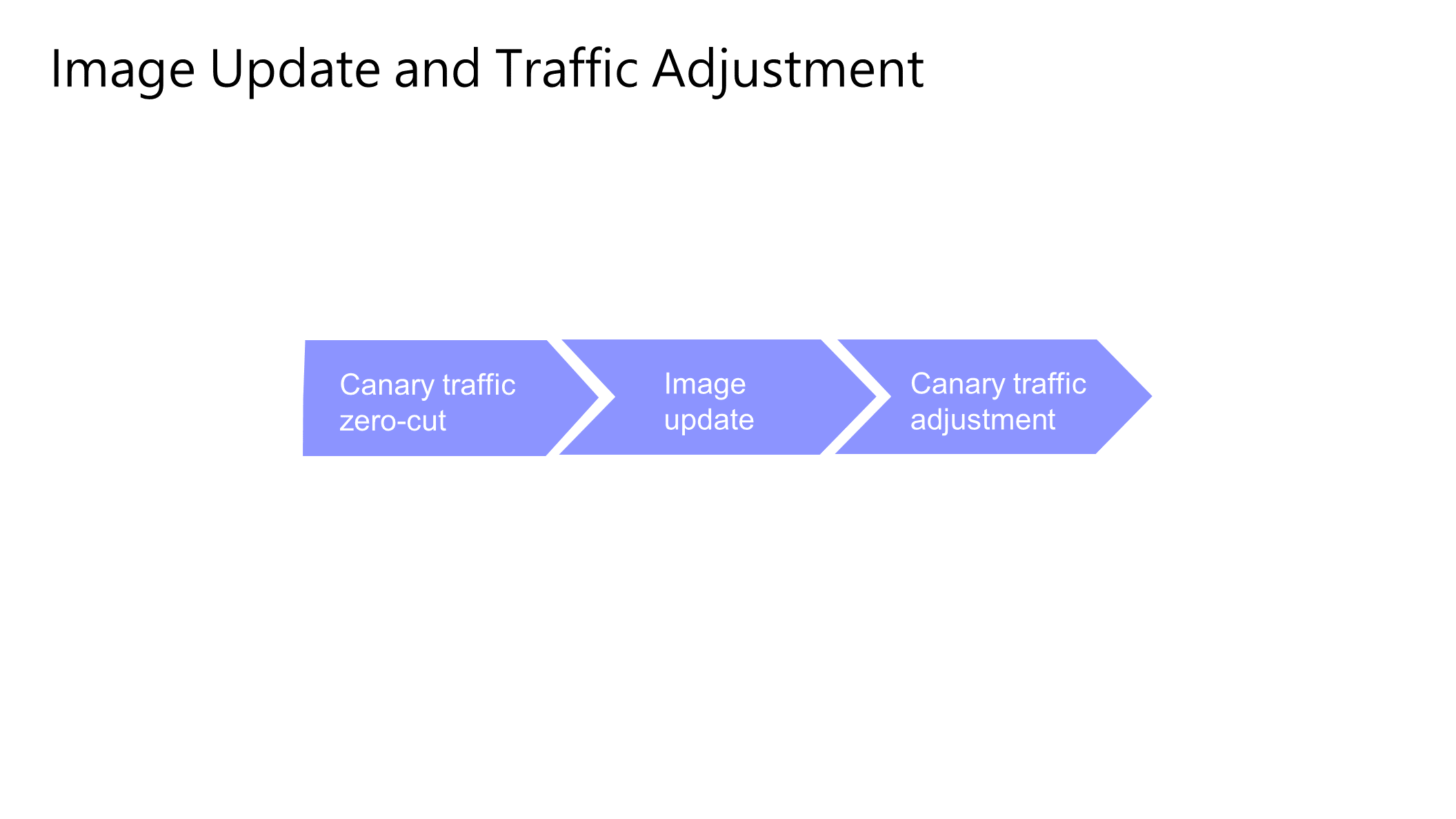

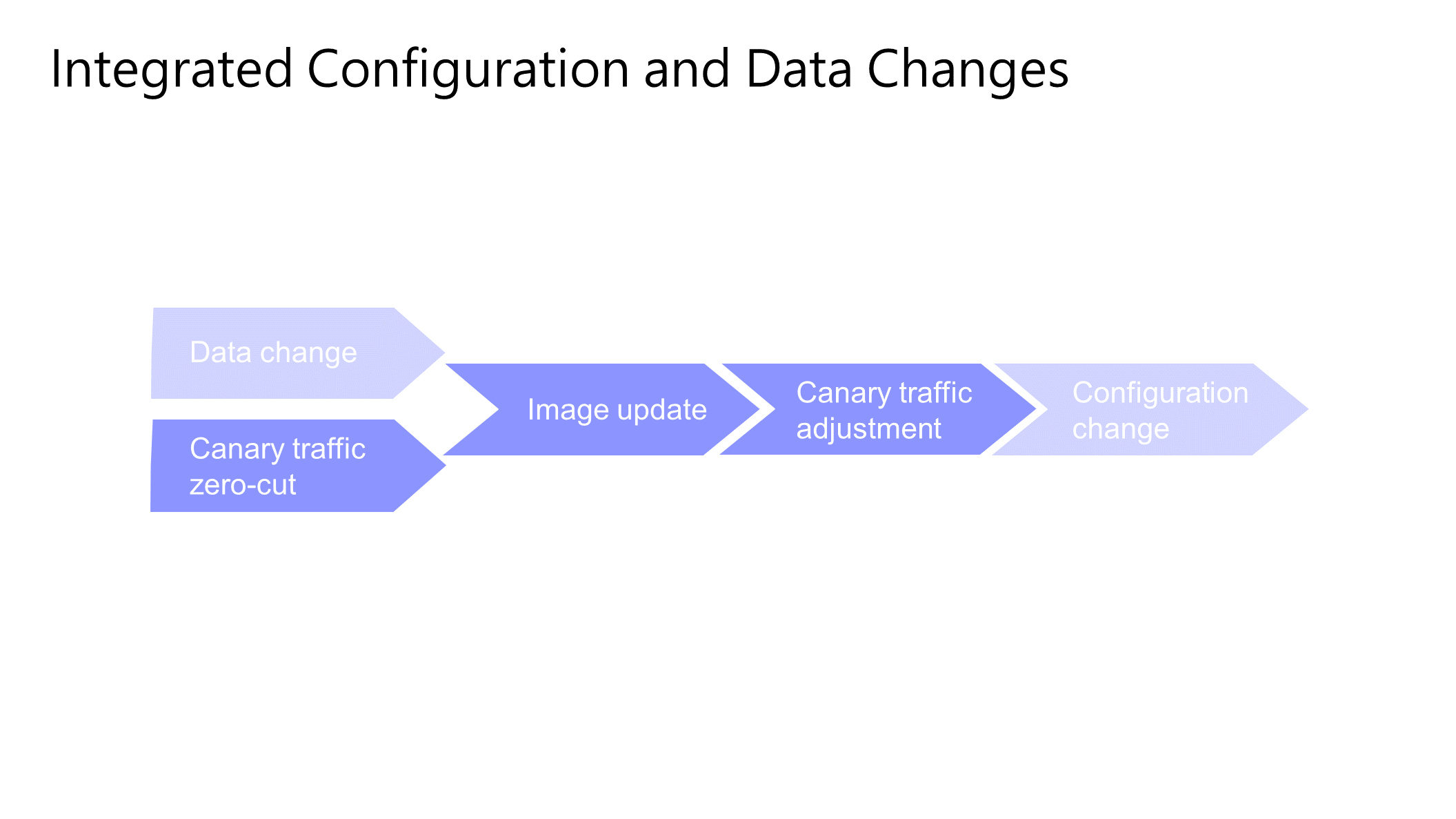

We recommend separating the image update and traffic adjustment into different steps: cut canary traffic to zero before the image update, then adjust the canary traffic after the update. The advantage is that you do not need to worry about service availability while the canary images are being updated, and all of them can be updated at once in the spirit of batch delivery, which avoids complex deployment-order scheduling.

If configuration changes and data changes have a critical impact on the canary release, they must be integrated into the process.

In general, data changes are completed before application deployment, or even before the entire process starts. Data changes can also be made within the R&D process, for example through a dedicated data change order. Configuration changes are completed before and after the canary traffic adjustment. In this way, configuration and data changes are integrated to ensure that the entire environment is complete before canary verification. In past canary verification cases, many processes performed only image updates, although many other preparations, such as database and configuration operations, were also needed. These operations are often scattered across different places, which hurts canary verification in two ways: the process has low automation and efficiency, and it is prone to risks such as misoperation. As a result, even if the image update is complete, missing data or configuration changes can still cause failures.
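The recommended sequence (drain canary traffic, update every canary image in one batch, then ramp traffic back up) can be sketched as below. CanaryEnv and release are hypothetical names; traffic is modeled as a single weight for simplicity, and with header-based routing, setting it to zero corresponds to temporarily disabling the canary route.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CanaryEnv:
    images: Dict[str, str]  # app -> image tag in the canary environment
    weight: int = 0         # percentage of traffic sent to the canary
    log: List[str] = field(default_factory=list)

    def set_weight(self, weight: int) -> None:
        self.weight = weight
        self.log.append(f"weight={weight}")

    def update_images(self, new_images: Dict[str, str]) -> None:
        # With no canary traffic in flight, update order does not matter,
        # so every app is updated in one batch.
        if self.weight != 0:
            raise RuntimeError("cut canary traffic to zero before updating images")
        self.images.update(new_images)
        self.log.append("images=" + ",".join(sorted(new_images)))


def release(env: CanaryEnv, new_images: Dict[str, str], steps: List[int]) -> None:
    env.set_weight(0)              # 1. drain canary traffic
    env.update_images(new_images)  # 2. update all canary images at once
    for w in steps:                # 3. ramp canary traffic back up
        env.set_weight(w)
```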

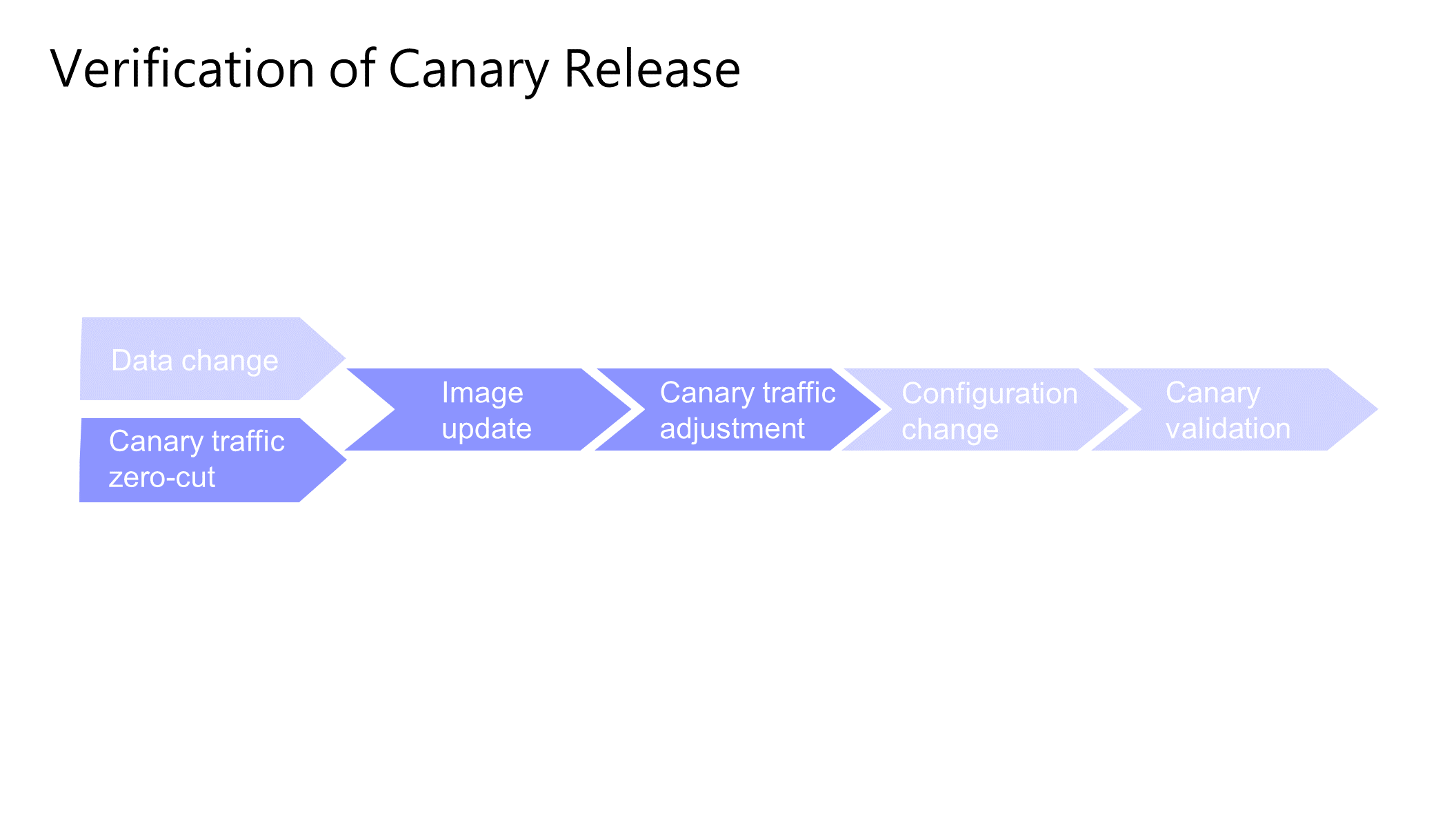

After the canary release is completed, add a validation node. It can run simple, general-purpose automated verification, or use a manual checkpoint for human confirmation. This ensures that the canary has been validated before proceeding to the production phase.
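A validation node can combine an automated comparison against the production baseline with an optional manual checkpoint. The metrics, thresholds, and function below are illustrative assumptions, not recommended values or a specific tool's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Metrics:
    requests: int
    errors: int
    p99_ms: float

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def validate_canary(canary: Metrics, baseline: Metrics,
                    min_requests: int = 1000,
                    max_error_delta: float = 0.005,
                    max_latency_ratio: float = 1.2,
                    manual_approval: Optional[bool] = None) -> bool:
    """Automated checks first, then an optional human checkpoint.

    manual_approval is None when no manual checkpoint is configured.
    """
    if canary.requests < min_requests:
        return False  # not enough data to judge yet
    if canary.error_rate - baseline.error_rate > max_error_delta:
        return False
    if canary.p99_ms > baseline.p99_ms * max_latency_ratio:
        return False
    return manual_approval is not False
```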

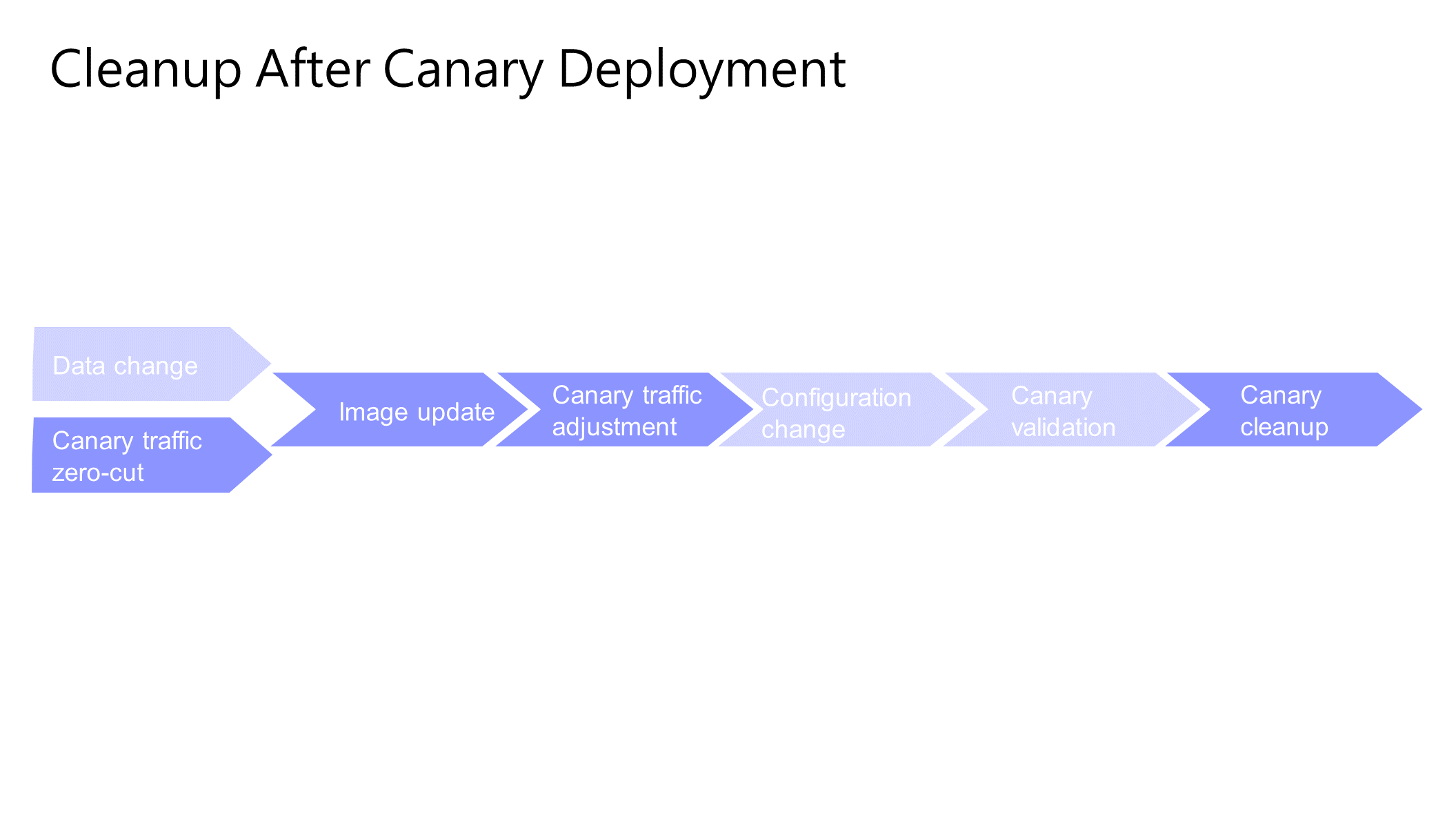

Cleanup after successful canary validation has two main goals: reducing load and resource consumption in the canary environment, and avoiding the risk of some traffic still entering the canary environment. Of course, this depends on the actual situation. In many cases, the canary environment is not removed completely but scaled down to one replica, so that it can still be used for verification when other services are released through the canary.
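The cleanup order recommended earlier (cut traffic first, observe, then shrink or remove the workload) can be sketched as follows. Canary and clean_up are hypothetical names, and keeping one replica corresponds to the scaled-down option described above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Canary:
    weight: int    # percentage of traffic still routed to the canary
    replicas: int
    actions: List[str] = field(default_factory=list)


def clean_up(canary: Canary, keep_one_replica: bool,
             traffic_drained_ok: bool) -> Canary:
    """Cut traffic first, then shrink the workload if observation looks fine."""
    canary.weight = 0
    canary.actions.append("traffic -> 0%")
    # In practice, wait for an observation window here before touching the workload.
    if not traffic_drained_ok:
        canary.actions.append("hold: investigate before removing workload")
        return canary
    canary.replicas = 1 if keep_one_replica else 0
    canary.actions.append(f"replicas -> {canary.replicas}")
    return canary
```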

The demo is based on the Kubernetes ingress canary release scenario.

First, the entry domain name is configured with two routing policies. One is the canary policy: requests carrying the env: grey header enter the canary Ingress and then the canary Service and Deployment, which together form an independent canary environment. Requests without this identifier enter the Ingress, Service, and Deployment of the formal environment, which together form the formal environment. For demonstration purposes, each environment has only one Deployment.

To move from development to production, access conditions are set first: deployment to production can begin only after they have been verified in the preceding test phase. Second, the image is built from the master branch. Then, the change must pass the O&M approval node; if it is not approved, the whole process stops. Next, the canary environment is deployed and canary validation is performed. If canary validation fails, the process does not proceed to production and no further canary changes are made; instead, the canary is cleaned up directly. If canary validation passes, production deployment, canary cleanup, and change closure are performed in sequence. Closing the change signifies that all preceding steps have succeeded. Canary cleanup therefore occurs in two cases: after production deployment is completed, and after canary validation fails. Next, let's enter the real environment to show the whole process.
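The control flow of this pipeline, including the two paths that end in canary cleanup, can be modeled as below. Step names are hypothetical labels, not Alibaba Cloud Flow YAML syntax; each check is a callback that returns True on success.

```python
from typing import Callable, Dict, List


def run_pipeline(checks: Dict[str, Callable[[], bool]]) -> List[str]:
    """Model of the demo's release flow; returns the steps that were executed."""
    steps: List[str] = []
    # Gates before any canary change: access conditions, build, O&M approval.
    for gate in ("access_conditions", "build_master_image", "ops_approval"):
        steps.append(gate)
        if not checks[gate]():
            steps.append("stop")
            return steps
    steps += ["deploy_canary", "canary_validation"]
    if not checks["canary_validation"]():
        steps.append("clean_up_canary")  # failure path: production is untouched
        return steps
    steps += ["deploy_production", "clean_up_canary", "close_change"]
    return steps
```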

Q1: How to implement full-link canary release?

A1: Full-link canary can be approached in layers. First, consider whether the external traffic scenario meets the requirements, and then expand to external and internal RPC traffic. Only if the actual requirements still cannot be met should you consider building a full-link canary. However, full-link canary is expensive. You can first learn about Alibaba Cloud MSE and other products and solutions that specialize in full-link canary, and then combine them with community solutions such as Istio. Because middleware covers a lot of ground and depends heavily on your own technology stack, there is no standard implementation. If your stack is Java or Go, MSE is a good choice.

Q2: Assume that there are ten microservices in a system, and only four microservices A, B, C, and F are released in this version. How can we ensure that the internal calls are up to date?

A2: You can make a simple adjustment to the current example. In the current process, the canary environment is cleaned up at the end, but this is not necessary. If the canary environment is retained and uses a different service cluster from the production environment, the canary environment will always hold the latest versions of all 10 microservices. When A, B, C, and F are updated in the canary environment, internal calls still go to services in the canary environment. Inter-service calls resolve to services in the same namespace through Kubernetes service discovery instead of crossing namespaces, which ensures that the canary namespace calls canary services rather than production services. If service discovery is not based on Kubernetes and an independent registry is used, the registry must also provide namespaces that distinguish the canary and production environments.
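The namespace behavior can be illustrated with a small model. Inside a cluster, a pod's DNS search path makes an unqualified Service name resolve to the Service in the pod's own namespace (assuming the default cluster domain cluster.local). The namespace contents and function names are hypothetical.

```python
from typing import Dict

# Hypothetical: image version each namespace runs for each Service.
DEPLOYMENTS: Dict[str, Dict[str, str]] = {
    "canary": {"service-a": "v2", "service-b": "v2", "service-d": "v1"},
    "prod": {"service-a": "v1", "service-b": "v1", "service-d": "v1"},
}


def resolve(service: str, caller_namespace: str,
            cluster_domain: str = "cluster.local") -> str:
    """FQDN that an unqualified Service name resolves to from caller_namespace."""
    return f"{service}.{caller_namespace}.svc.{cluster_domain}"


def called_version(caller_namespace: str, target: str) -> str:
    """Version actually reached when a pod calls `target` by its short name."""
    namespace = resolve(target, caller_namespace).split(".")[1]
    return DEPLOYMENTS[namespace][target]
```

Services that were not changed in this release (service-d here) simply run the production version inside the canary namespace, so calls never need to leave it.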

Q3: How do I handle databases during canary release?

A3: This issue is more complex. First, we recommend minimizing database differences between the canary and production environments, or handling them during the canary release so that both stay compatible, because differences at the database level make canary release very difficult and error-prone. Second, database changes do not have to be integrated into the R&D process, and in most cases should not be. If there is a database change, it must be completed in advance, because database changes are riskier than application or configuration changes.

Q4: Should customers share the same application, or should each be assigned a different namespace?

A4: It depends on whether customers have a tenant-like concept. In Apsara DevOps, for example, there are many customer enterprises, each with its own enterprise ID, and data between enterprises is logically isolated. If customers are logically isolated in this way, there is no need to assign different namespaces. If stronger isolation is required, data isolation is generally considered first.

Q5: Can Function Compute and the canary release be implemented together?

A5: Yes. You can add the Function Compute steps to the existing R&D process.

Q6: Where are canary policies stored?

A6: Apsara DevOps currently stores them in the system itself, that is, in its own environment configuration. They can also be stored in a code repository, an IaC repository, or a configuration center. However, it may be more appropriate to store them with the application's environment, because the canary policy is defined when the environment is defined.

Q7: Are canary release and production environment data isolated?

A7: In principle, they are not isolated. Even when isolation is required, it is logical isolation.

Q8: How do I handle the redundant data generated by canary release?

A8: There is no good general solution at present. Data problems can only be handled by your own data-layer logic, such as canary data markers. When test data is marked this way, it can be cleaned up and recovered. If redundant data is actually generated, it can only be repaired afterward.
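A minimal in-memory sketch of the marker approach, assuming a hypothetical boolean marker written with every row created by canary traffic; in a real database this would be a column plus a DELETE filtered on it.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Row:
    id: int
    payload: str
    canary: bool = False  # hypothetical marker set for rows written by canary traffic


def write(rows: List[Row], row_id: int, payload: str, request_env: str) -> None:
    """Store a row, marking it when the request came through the canary."""
    rows.append(Row(row_id, payload, canary=(request_env == "grey")))


def purge_canary_rows(rows: List[Row]) -> List[Row]:
    """Drop rows written by canary traffic; production rows are kept."""
    return [r for r in rows if not r.canary]
```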

Q9: If there is a ZooKeeper registry center, is the header information added to the registry center?

A9: It depends on the microservice architecture. If all services go through ZooKeeper, we recommend implementing the canary routing in ZooKeeper. If not, it can simply be done in Kubernetes.

Q10: Is the platform heavily dependent on the code?

A10: The dependence on code is low. The key point is whether the RPC and traffic layers use a common standard protocol. If Dubbo or a similar framework is used, it has its own requirements, and a required registry center also has some influence. We recommend avoiding such dependencies as much as possible; the final demo scenario has none. There is, of course, a dependency on infrastructure, namely YAML orchestration based on Kubernetes, although other methods can achieve similar effects.

Q11: Do you recommend a registry center or Kubernetes?

A11: There are no fixed requirements. From a personal perspective, if Kubernetes is sufficient, you can use Kubernetes alone without introducing a registry center. However, if there are other requirements or if you need to interact with services in different environments, a registry center can be utilized. This is because the registry center offers richer features and is not tightly coupled with Kubernetes.

Q12: What are the differences between the YAML syntax of Flow and Jenkinsfiles?

A12: The differences are significant. A Jenkinsfile is based on Groovy syntax, while Flow's YAML is closer to GitHub Actions.

Q13: How can a build be triggered only for specific environments?

A13: This was briefly shown in the demo. We used condition statements to control whether pipeline steps run. For example, environment cleanup runs only when its conditions are met and is skipped otherwise. Selective builds can be implemented the same way.

Q14: I used to use Nacos, is it necessary to replace it with Kubernetes?

A14: If it does not affect the work, there is no need to replace it specially.
