By Huaiyuan Wang and Juntao Ji

Apache RocketMQ is a cloud-native messaging and streaming platform that simplifies the process of creating event-driven applications. As Apache RocketMQ has evolved over the years, substantial code has been written to leverage multi-core processors and improve program efficiency through concurrency. As a result, managing concurrent performance has become critical. Locks ensure that multiple threads synchronize safely when they access shared resources, but although they are essential for mutual exclusion in multi-core systems, they also pose optimization challenges. As concurrent systems become more complex internally, deploying effective lock management strategies is key to maintaining performance.

Therefore, during the Google Summer of Code (GSoC) 2024, the Apache RocketMQ open-source community proposed a highly challenging project: "GSOC-269: Optimizing Lock Mechanisms in Apache RocketMQ". The project aims to optimize lock behavior to enhance the performance of Apache RocketMQ and reduce resource consumption. Through this project, we put forward the ABS lock (Adaptive Backoff Spin Lock). The idea of the ABS lock is to provide a set of backoff strategies for lightweight spin locks, so that spinning stays low-cost and bounded and the lock adapts to varying levels of resource contention.

The acronym ABS was chosen because the design idea bears some similarity to the anti-lock braking system (ABS) in vehicles. In early versions, the spin lock would spin continuously. When the critical section was large, this generated a large number of unnecessary spins, similar to wheels "locking up" during braking, and resource utilization rose sharply. To prevent this, the community replaced the spin lock with a mutex to avoid wasting resources when the critical section was large. However, the blocking and waking mechanism of the mutex then hurt the response time of message sending for smaller messages and resulted in higher CPU consumption.

Spin locks perform well in terms of response time (RT) and CPU utilization when the critical section is small. Their main drawback is that they waste resources under contention. Therefore, we decided to optimize the spin lock to reduce unnecessary resource consumption, so as to retain its advantages and make it suitable for both high and low contention scenarios. In practice, we have verified that adjusting the lock strategy affects the message sending performance of Apache RocketMQ and can yield significant performance improvements.

The ABS lock also addresses the lock selection dilemma faced by open-source users. When delivering messages, Apache RocketMQ offers two locking mechanisms: a spin lock (based on compareAndSet) and a ReentrantLock mutex. However, there is no documentation that analyzes which scenarios suit each one. We therefore integrated the two mechanisms through the ABS lock, so that users no longer need to choose a locking mechanism for their current scenario. The ABS lock adjusts its parameters and behavior dynamically based on runtime conditions, such as the level of lock contention and the number of threads contending for the same resource. This minimizes the overhead associated with lock acquisition and release, especially in high-contention scenarios. By monitoring system performance indicators in real time, the ABS lock can switch between different locking strategies.

We have now implemented this adaptive locking mechanism (the ABS lock), and experimental results show that in each scenario it matches the better of using a mutex alone or a spin lock alone. This article describes how the locking mechanisms in Apache RocketMQ evolved and what the ABS lock optimization achieves.

Before delving into the main content of the article, it is necessary to introduce some concepts that may be frequently used in this article: critical section, mutex, and spin lock. Understanding these concepts will help you better grasp the optimization ideas presented in this article.

The critical section is a segment of code that is accessed exclusively by a thread. In other words, if one thread is currently accessing this segment of code, other threads can only wait until the current thread has left the segment of code before they can enter, thus ensuring thread safety. The size of a critical section is generally influenced by various factors. For instance, in this article, the size of the critical section during message sending may be affected by the size of the message body.

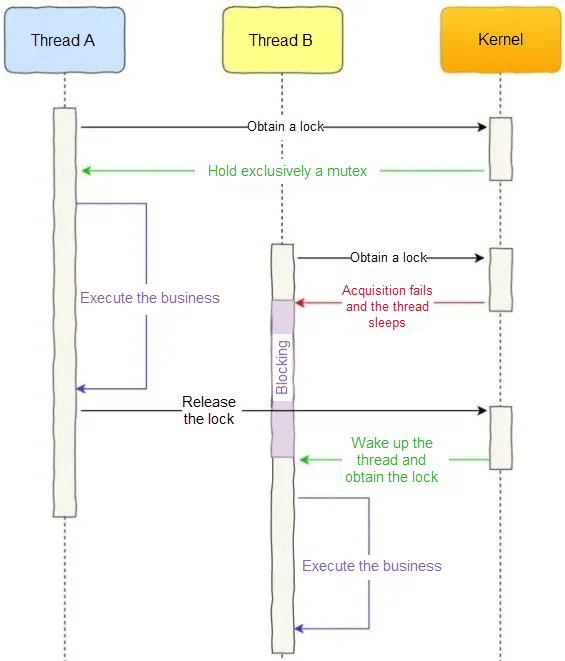

A mutex is an exclusive lock. Once Thread A successfully acquires the mutex, it holds the lock exclusively. Until Thread A releases the lock, any attempt by Thread B to acquire it will fail, and Thread B blocks at the locking code and yields the CPU to other threads.

The blocking behavior when a mutex acquisition fails is managed by the operating system kernel. When a thread fails to lock, the kernel puts the thread to sleep and wakes it up when the lock becomes available, allowing the thread to continue execution once it acquires the lock. For a detailed illustration of how mutexes behave, please refer to the figure below.

The performance overhead associated with mutexes is primarily the cost of two thread context switches.

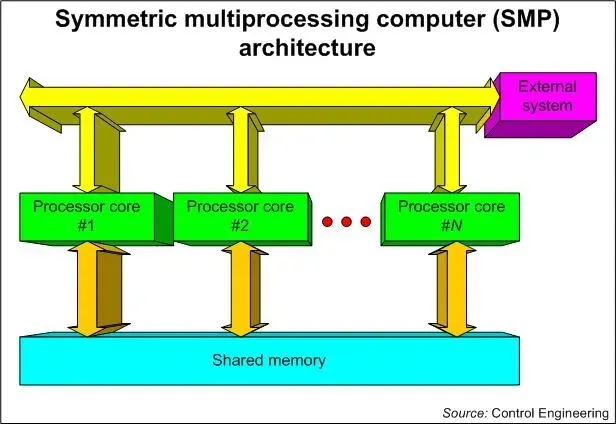

Spin locks use the atomic CAS (compare-and-swap) operation provided by the CPU, exposed in Java as compareAndSet, to complete lock and unlock operations in user mode without actively causing thread context switching. Therefore, compared with mutexes, spin locks are faster and have lower overhead. The main difference between the two is how they respond when lock acquisition fails: a mutex responds with thread switching, whereas a spin lock responds with busy waiting.
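To make the busy-waiting behavior concrete, here is a minimal sketch of a CAS-based spin lock in Java. The class names `SimpleSpinLock` and `SimpleSpinLockDemo` are illustrative assumptions and are not taken from the Apache RocketMQ code base.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Illustrative sketch only: a minimal CAS-based spin lock (hypothetical class name).
class SimpleSpinLock {
    // false = free, true = held
    private final AtomicBoolean held = new AtomicBoolean(false);

    void lock() {
        // Busy-wait in user mode until the CAS from "free" to "held" succeeds.
        while (!held.compareAndSet(false, true)) {
            Thread.onSpinWait(); // spin hint to the CPU (Java 9+); no context switch is requested
        }
    }

    void unlock() {
        held.set(false);
    }
}

class SimpleSpinLockDemo {
    private static int counter;

    // Several threads increment a shared counter inside the critical section.
    static int run(int threads, int incrementsPerThread) {
        counter = 0;
        SimpleSpinLock lock = new SimpleSpinLock();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    lock.lock();
                    try {
                        counter++; // critical section
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter;
    }

    public static void main(String[] args) {
        System.out.println(run(4, 10_000));
    }
}
```

Because a failed `compareAndSet` simply retries, a waiting thread never sleeps; this is what keeps latency low for short critical sections and what burns CPU for long ones.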

This section describes the evolution of lock mechanisms in Apache RocketMQ. Spin locks and mutexes have their own advantages and disadvantages: spin locks are lightweight and have low context switching overhead, making them suitable for short-term waiting situations; mutexes, on the other hand, conserve resources and thus are better suited for scenarios where the lock is held for extended periods.

The following sections will introduce how to optimize the locking mechanisms to benefit from the best features of both spin locks and mutexes.

In the early stages, to minimize the resource consumption caused by thread context switching, Apache RocketMQ opted for spin locks. As Apache RocketMQ evolved, the concurrent pressure increased significantly, leading to excessive and unnecessary spins when the critical section was large. This led to a sharp increase in the resource utilization of brokers. Therefore, Apache RocketMQ later replaced spin locks with mutexes to avoid the waste of resources when the critical section was large.

However, for smaller messages, the blocking and waking mechanism of mutexes can negatively impact RT and cause higher CPU overhead due to context switching. Spin locks have better performance in RT and CPU utilization when the critical section is small. This dilemma of choosing the correct locking mechanism has been a long-standing challenge for us. To this day, these two types of locks are still retained in Apache RocketMQ and are controlled by one switch: useReentrantLockWhenPutMessage.

This means that users need to decide on a lock type themselves when starting the broker: spin locks if the message body is small and the sending TPS is not high, or mutexes for larger message bodies or highly contended scenarios.
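The effect of such a switch can be sketched as follows. Only the flag name `useReentrantLockWhenPutMessage` comes from the text above; the interface and class names are hypothetical and do not reproduce the actual broker classes.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical abstraction over the two lock types used when putting a message.
interface SendLock {
    void lock();
    void unlock();
}

// Spin-based variant: busy-waits on a CAS.
class SpinSendLock implements SendLock {
    private final AtomicBoolean held = new AtomicBoolean(false);

    public void lock() {
        while (!held.compareAndSet(false, true)) {
            Thread.onSpinWait();
        }
    }

    public void unlock() {
        held.set(false);
    }
}

// Mutex variant: blocks the thread when the lock is unavailable.
class MutexSendLock implements SendLock {
    private final ReentrantLock delegate = new ReentrantLock();

    public void lock() {
        delegate.lock();
    }

    public void unlock() {
        delegate.unlock();
    }
}

class SendLockFactory {
    // The choice is fixed once at startup from the switch value.
    static SendLock create(boolean useReentrantLockWhenPutMessage) {
        return useReentrantLockWhenPutMessage ? new MutexSendLock() : new SpinSendLock();
    }
}
```

The point of the sketch is that the decision is static: once the broker has started, the lock type no longer follows changes in message size or contention.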

To solve this problem, we started optimizing the lock mechanism. We wanted a lock that combines the advantages of spin locks and mutexes and suits both high and low contention scenarios. We ultimately decided to modify the spin lock mechanism by introducing a special spin lock that maintains good lock behavior across various contention levels. Since a plain spin lock spins indefinitely until it is acquired, unnecessary spins occur when critical sections are large, which consumes significant CPU resources. To benefit from the advantages of spin locks, we need to limit the number of unnecessary spins when the critical section is large, thereby avoiding excessive spinning and maximizing performance in scenarios with larger critical sections.

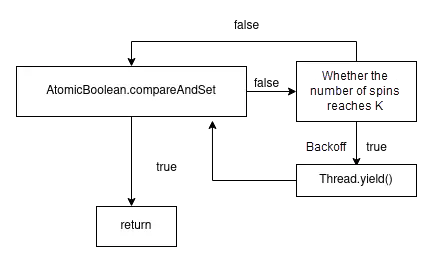

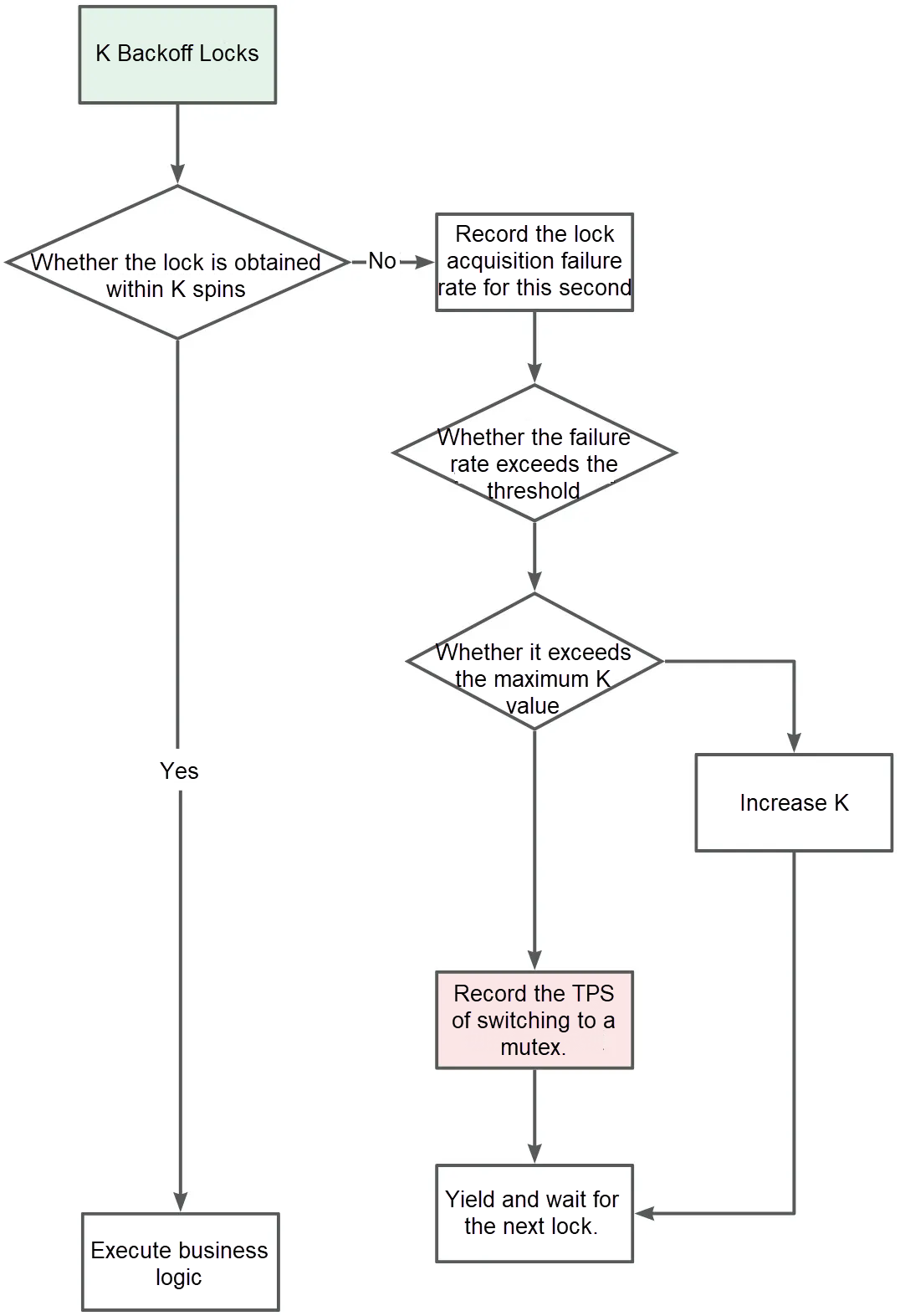

Finally, by modeling the behavior of spin locks, we proposed a k-backoff spin lock: if the lock is not acquired after k spins, Thread.yield() is executed to hand control of the CPU back to the operating system. This behavior avoids the unnecessary context switching associated with mutexes and also prevents the CPU overhead caused by infinite spinning in high-pressure scenarios.

The behavior strikes a balance between spinning and CPU context switching, reducing system pressure and minimizing resource consumption.
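The k-backoff idea can be sketched in a few lines of Java. This is a simplified illustration under the description above, not the code merged into Apache RocketMQ; the class names are hypothetical, and resetting the spin counter after each yield is an assumption.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Illustrative sketch of a k-backoff spin lock (hypothetical class name).
class KBackoffSpinLock {
    private final AtomicBoolean held = new AtomicBoolean(false);
    private final int k; // maximum consecutive failed spins before backing off

    KBackoffSpinLock(int k) {
        if (k < 1) {
            throw new IllegalArgumentException("k must be at least 1");
        }
        this.k = k;
    }

    void lock() {
        int spins = 0;
        while (!held.compareAndSet(false, true)) {
            if (++spins >= k) {
                Thread.yield(); // back off: offer the CPU to the scheduler
                spins = 0;      // assumption: start a fresh round of k spins
            }
        }
    }

    void unlock() {
        held.set(false);
    }
}

class KBackoffSpinLockDemo {
    private static int counter;

    static int run(int k, int threads, int incrementsPerThread) {
        counter = 0;
        KBackoffSpinLock lock = new KBackoffSpinLock(k);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    lock.lock();
                    try {
                        counter++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter;
    }

    public static void main(String[] args) {
        System.out.println(run(1_000, 4, 10_000));
    }
}
```

A small k makes the lock yield early, which saves CPU under heavy contention; a large k behaves almost like a plain spin lock.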

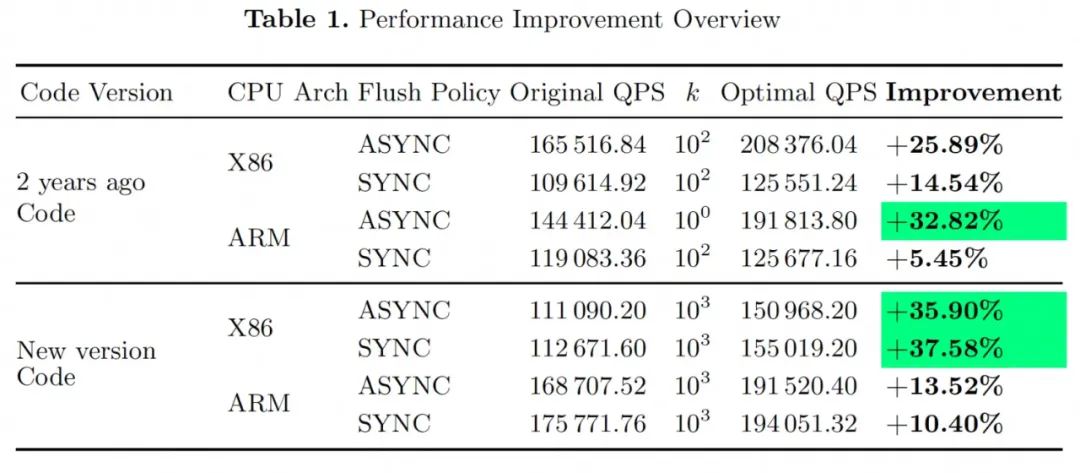

At the recent FM 24 conference, Juntao Ji shared the theoretical modeling and experimental validation of the k-backoff spin lock [3]. With the k-backoff spin lock in place, we can find the local optimal point of system performance and achieve the maximum TPS. The results are shown in the following table.

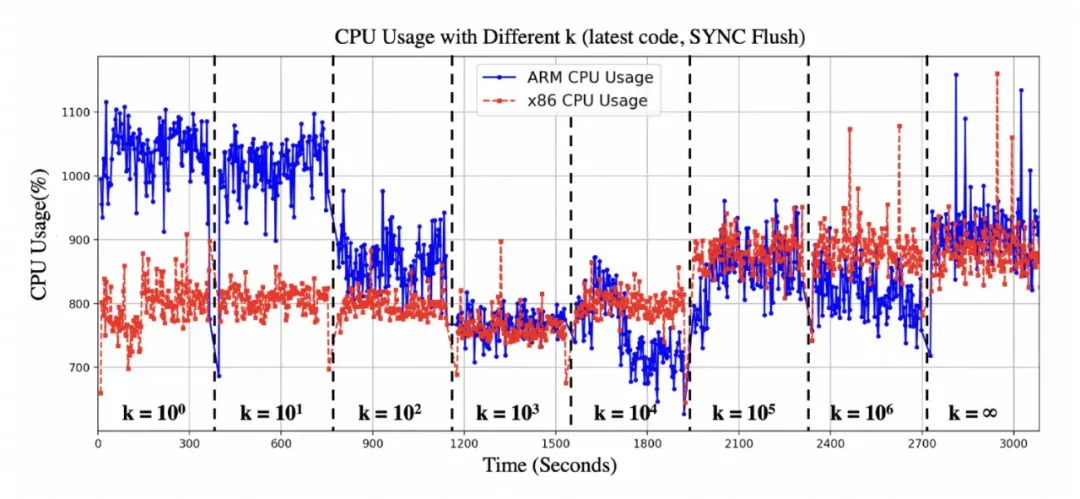

Take synchronous disk flushing on the X86 architecture as an example. The experimental results show that when k = 10^3, the sending speed reaches its peak (155019.20) and CPU utilization reaches its lowest point. This indicates that the backoff strategy saves CPU resources: the broker sustains higher throughput at lower CPU utilization, which suggests that the performance bottleneck has moved elsewhere, possibly to the disk. The table above also shows that, with the same k (10^3) and configuration parameters (latest code, SYNC flushing mode), the performance of Apache RocketMQ improves by 10.4% on the ARM CPU architecture. In addition, as shown in the figure above, when k = 10^3, CPU utilization decreases significantly, from over 1000% on average to around 750%. The reduction in resource consumption suggests that alleviating other system bottlenecks may result in more significant performance improvements.

The effectiveness of the k-backoff spin lock has been demonstrated above. However, one issue remains: we find that when the critical section is large enough, the resource consumption of spin locks is still far higher than that of mutexes because the lock cannot be acquired even after multiple backoffs. In this case, the k value has a negative impact, as it ultimately cannot eliminate the cost of context switching and also causes time consumption due to spinning.

Therefore, we decided to dynamically adjust the k value for further optimization. When the critical section is large, k is adaptively increased. Once the resource consumption reaches the same level as that of the mutex (k reaches its adaptive maximum), the spin lock becomes unsuitable for this scenario. At this point, we switch to using a mutex. This is the core concept behind our final implementation, the ABS lock.

We conducted theoretical analyses of various locking mechanisms to determine the scenarios they are best suited for and then performed extensive experimental testing in those scenarios to identify the optimal locking mechanisms for multiple scenarios. Ultimately, we dynamically switched to the optimal locking mechanism based on runtime conditions such as the number of contending threads, TPS, message size, and critical section size.

We have demonstrated above that controlling the number of spins has a positive effect on performance, but the best k value varies across systems. Therefore, we need an adaptive strategy for the number of spins (k).

In short, the adaptive strategy moves k from fewer spins to more spins as contention for the critical section increases or the critical section grows. As k gradually increases, so does the probability of acquiring the lock before the thread backs off. However, once the number of spins reaches a certain order of magnitude, the overhead of spinning exceeds that of a thread context switch. Spin locks are then no longer suitable, so the lock degrades into a mutex.

Based on experiments, we set the adaptive maximum for the number of spins k to 10,000. We found that when the number of spins exceeds 10,000, the optimization benefit under high contention drops significantly, and the spinning cost even exceeds that of a single CPU context switch. Therefore, at this point, we switch to the blocking wait mechanism of a mutex.

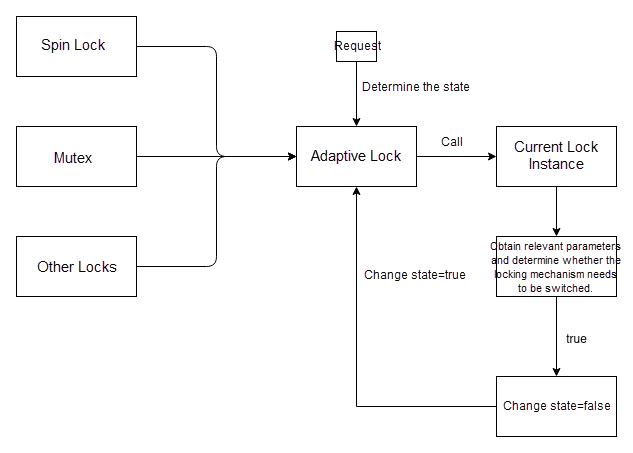

To adaptively adjust the k value, we propose a closed-loop workflow, as shown in the following figure.

The figure shows that we mainly measure the success rate of acquiring the lock through spinning under the current k value. If the success rate of acquiring the lock under the current k value is not high enough, we incrementally increase the k value to boost the probability of lock acquisition. But if increasing the k value does not effectively enhance the success rate, then switching to a mutex would yield greater benefits.

Switching to a mutex indicates potentially high burst traffic that leads to intense lock contention. However, mutexes are not suitable for low-contention scenarios, so we also need to decide when to switch back from the mutex to the spin lock. Therefore, we record the request rate at the moment the spin lock is switched to a mutex. When the overall request rate falls below 80% of the recorded value, we switch back to a spin lock.
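The closed loop described above can be sketched as a small decision policy that is evaluated once per sampling window. The maximum k of 10,000 and the 80% switch-back rule come from the text; the initial k, the step size, the target success rate, and the class and method names are assumptions made only for illustration. The sketch also simplifies "increasing k no longer helps" to "k has reached its maximum".

```java
// Illustrative sketch of the adaptive decision loop (hypothetical names and thresholds).
class AbsLockPolicy {
    enum Mode { SPIN, MUTEX }

    static final int MAX_K = 10_000;               // adaptive maximum stated in the article
    static final double SWITCH_BACK_RATIO = 0.8;   // 80% rule stated in the article
    static final int INITIAL_K = 1_000;            // assumption
    static final int K_STEP = 1_000;               // assumption
    static final double TARGET_SUCCESS_RATE = 0.9; // assumption

    private Mode mode = Mode.SPIN;
    private int k = INITIAL_K;
    private double requestRateAtSwitch;

    // Called once per sampling window with the measured spin success rate
    // (fraction of acquisitions that succeeded within k spins) and the request rate.
    void onWindow(double spinSuccessRate, double requestRate) {
        if (mode == Mode.SPIN) {
            if (spinSuccessRate >= TARGET_SUCCESS_RATE) {
                return; // the current k is good enough
            }
            if (k < MAX_K) {
                k = Math.min(MAX_K, k + K_STEP); // spin longer before backing off
            } else {
                mode = Mode.MUTEX;               // spinning no longer pays off
                requestRateAtSwitch = requestRate;
            }
        } else if (requestRate < SWITCH_BACK_RATIO * requestRateAtSwitch) {
            mode = Mode.SPIN;                    // the burst is over, return to spinning
            k = INITIAL_K;
        }
    }

    Mode mode() {
        return mode;
    }

    int k() {
        return k;
    }
}
```

For example, with these assumed values, nine consecutive windows with a low success rate raise k from 1,000 to 10,000, a tenth one switches to the mutex, and the policy returns to spinning once the request rate drops below 80% of the rate recorded at the switch.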

To verify the correctness and performance optimization effects of the ABS lock, we conducted multiple experiments, including performance testing and chaos fault testing. This section will describe the specific experimental designs and results.

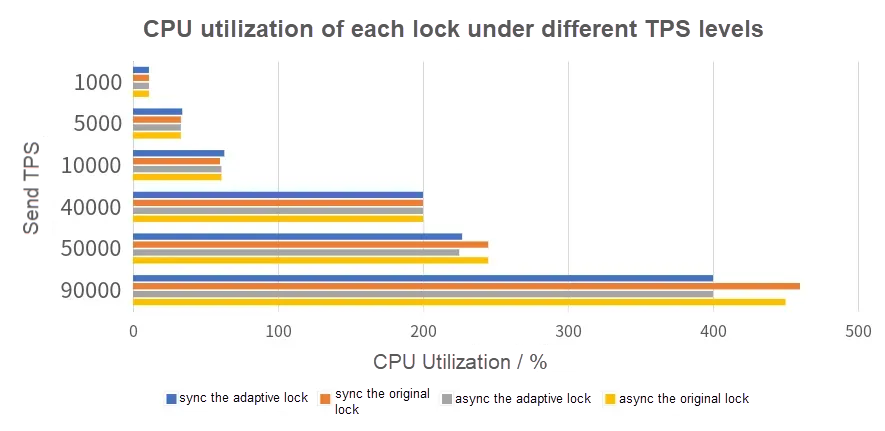

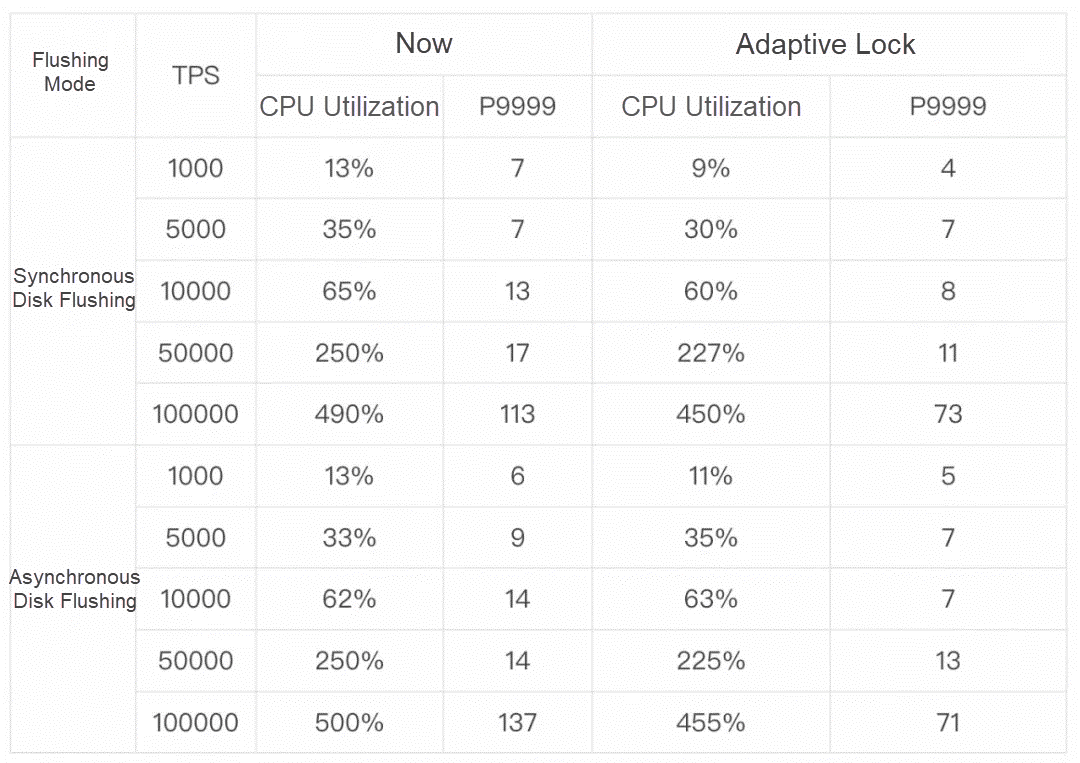

(1) Optimization of CPU utilization and latency

When the message body size is 1 KB, we record the CPU utilization under different message sending rates. The results are shown in the following figure.

The results indicate that as the message sending rate increases, the adaptive lock shows significant advantages and effectively reduces CPU utilization.

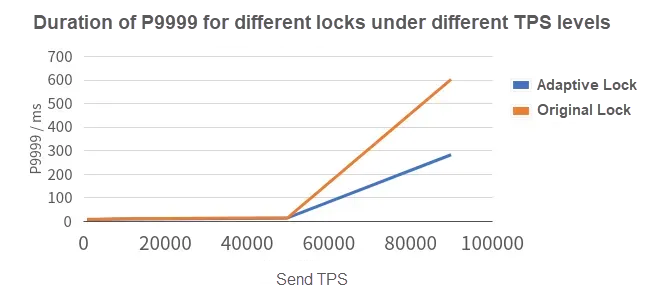

In addition, we record P9999 during message sending. P9999 represents the 99.99th percentile of send latencies for tail requests, reflecting the slowest request times during this period. The results are shown in the following figure.
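For readers unfamiliar with the metric, the following sketch shows one common way to compute such a percentile from raw latency samples, using the nearest-rank method. It illustrates the definition only; the class and method names are hypothetical, and it is not the measurement code used in the experiments.

```java
import java.util.Arrays;

// Illustrative nearest-rank percentile over latency samples (hypothetical helper).
class LatencyPercentile {
    // Returns the smallest sample such that at least numerator/denominator
    // of all samples are less than or equal to it.
    static long percentile(long[] latencies, long numerator, long denominator) {
        if (latencies.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        if (numerator <= 0 || denominator <= 0 || numerator > denominator) {
            throw new IllegalArgumentException("fraction must be in (0, 1]");
        }
        long[] sorted = latencies.clone();
        Arrays.sort(sorted);
        // rank = ceil(n * numerator / denominator), in integer arithmetic
        long rank = (sorted.length * numerator + denominator - 1) / denominator;
        return sorted[(int) rank - 1];
    }

    // P9999 is the 99.99th percentile.
    static long p9999(long[] latencies) {
        return percentile(latencies, 9_999, 10_000);
    }
}
```

With 10,000 samples, P9999 is the second-largest latency, which is why it is so sensitive to the few requests that lose the lock race repeatedly.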

As can be seen, under different TPS, the adaptive lock improves P9999 performance, effectively reducing the latency caused by lock contention during message sending.

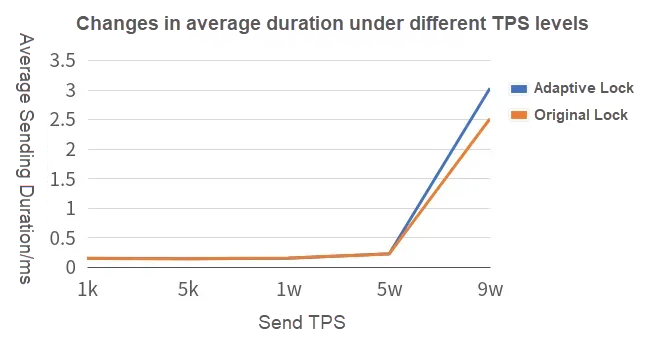

However, tail requests generally represent an extreme scenario with intense contention. Some messages may undergo multiple lock acquisition attempts without success, thus leading to this long-tail effect. We also tested the average sending latency under different TPS, and found that when the contention is extremely intense, the ABS lock will increase the average latency by about 0.5 ms due to its inherent spinning. When using the lock, specific scenarios need to be taken into account to determine whether to enable the adaptive lock under high pressure.

We also conducted experiments for scenarios with even smaller message bodies. The following table presents the complete test results.

(2) Maximum performance improvement

In addition, to calculate the performance improvement brought about by the adaptive lock, we also tested the maximum performance of the broker. The results are shown in the following table.

| CPU Arch | Flush Policy | Original QPS | Optimal QPS | Improvement |
|---|---|---|---|---|
| X86 | ASYNC | 176312.35 | 184214.98 | +4.47% |
| X86 | SYNC | 177403.12 | 187215.47 | +5.56% |
| ARM | ASYNC | 185321.49 | 206431.82 | +11.44% |
| ARM | SYNC | 188312.17 | 212314.43 | +12.85% |

According to this table, we can conclude that the adaptive lock can find the optimal performance point in multiple scenarios at the same time, effectively maximizing performance.

(3) Summary

From all the above experimental data, we can draw the following conclusions:

In software engineering, the concept of "embrace failure" acknowledges that errors and anomalies are a normal part of the process, rather than something to be entirely avoided. For critical systems like Apache RocketMQ, the pressures encountered in production environments far exceed those in idealized laboratory tests. To address this, we adopted chaos engineering practices, which involve proactively exploring the limits and vulnerabilities of the system.

The core objective of chaos engineering is to enhance the robustness and fault tolerance of the system. It involves deliberately introducing failures in real operations, such as network delays and resource constraints, to observe how the system responds to unexpected conditions. The aim is to confirm whether the system can remain stable in uncertain and complex real-world scenarios and whether it can continue to operate efficiently when confronted with real-world issues.

After the introduction of the adaptive locking mechanism in Apache RocketMQ, rigorous chaos engineering experiments were conducted, including but not limited to simulating communication failures between distributed nodes and peak load situations. The aim was to verify the performance and recovery capability of the new mechanism under stress. Only by enduring these intense stress tests can a system prove its ability to maintain high availability in dynamic environments, confirming its maturity and reliability.

To sum up, through chaos engineering, we put Apache RocketMQ through real-world simulations to measure its true strength in achieving high availability. Through such fault tolerance testing, we aim to demonstrate that our adjustments to the locking mechanism do not affect the correctness of data writing.

(1) Configurations

Our chaos testing validation environment is set up as follows:

The configuration of each of the aforementioned five machines is as follows: Processor: 8 vCPUs, Memory: 16 GiB, Instance Type: ecs.c7.2xlarge, Public Network Bandwidth: 5 Mbps, Internal Network Bandwidth: 5 Mbps / Maximum 10 Gbps.

During the test, we set up several random test scenarios as follows. Each scenario lasts for at least 30 seconds, followed by a recovery period of 30 seconds before injecting the next fault.

Each scenario is tested at least 5 times, with each test lasting at least 60 minutes.

(2) Conclusion

For all the scenarios mentioned above, the total duration of the chaos testing is at least: 2 (number of scenarios) × 5 (number of tests per scenario) × 60 minutes (duration of a single test) = 600 minutes.

Given that each fault injection lasts 30 seconds and each recovery period also lasts 30 seconds, one injection cycle takes 1 minute, so faults are injected at least 600 times (600 minutes / 1 minute per cycle = 600). In practice, the number of injections and their total duration are much higher than these calculated minimums.
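The lower bounds above follow from simple arithmetic, which can be written out as a short sketch. The class and method names are hypothetical; the input values are the ones stated in this section.

```java
// Illustrative arithmetic for the chaos-test lower bounds (hypothetical helper).
class ChaosTestBudget {
    // Minimum total test time in minutes.
    static int totalMinutes(int scenarios, int testsPerScenario, int minutesPerTest) {
        return scenarios * testsPerScenario * minutesPerTest;
    }

    // Minimum number of fault injections: one injection per (fault + recovery) cycle.
    static int minInjections(int totalMinutes, int faultSeconds, int recoverySeconds) {
        return totalMinutes * 60 / (faultSeconds + recoverySeconds);
    }

    public static void main(String[] args) {
        int minutes = totalMinutes(2, 5, 60);
        System.out.println(minutes + " minutes, " + minInjections(minutes, 30, 30) + " injections");
    }
}
```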

In these documented test results, there is no message loss in Apache RocketMQ, and the data is strongly consistent before and after fault injections.

This article reviews the evolution of the Apache RocketMQ locking mechanism and describes the design and implementation of the adaptive backoff spin lock mechanism (ABS lock). As concurrent systems become more and more complex, deploying effective lock management strategies is the key to maintaining performance. Therefore, we aim to further explore the potential of performance optimization in this field and push the boundaries of what can be achieved.

In the future, we plan to combine the ABS lock with various distributed locking mechanisms and other advanced concepts, and then implement and performance-test the result. We expect to reach lock consensus among multiple local endpoints with a single communication, thereby reducing unnecessary network communication and lowering total bus resource consumption. Our current work focuses on the locking mechanism used during message sending; these distributed extensions have not yet been implemented or tested. Some distributed locking mechanisms that can serve as references are described below.

Allocate different delay slots to each client, causing them to retry their requests after a delay. This avoids a large number of unnecessary collisions on the bus.

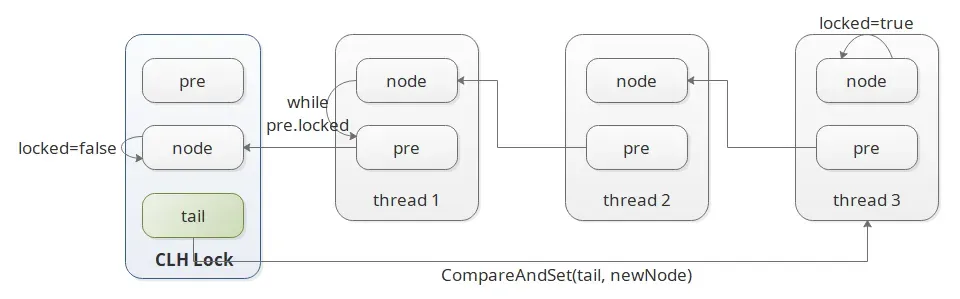

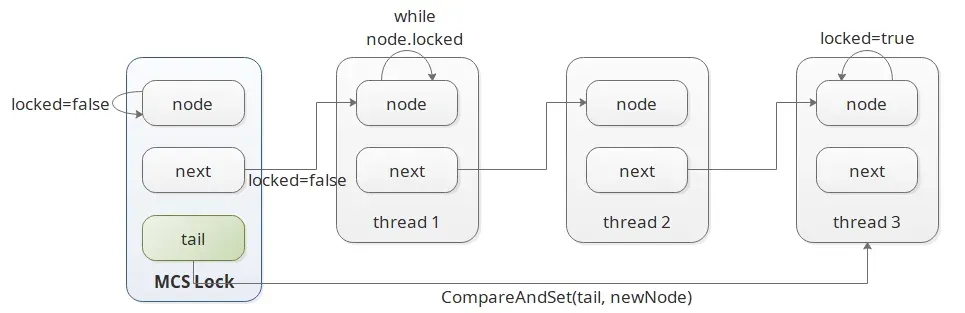

The CLH lock updates its state by continuously reading the value of the preceding node.
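As a reference point, the textbook form of a CLH queue lock can be sketched as follows. This is a generic illustration of the technique with hypothetical class names, not code from Apache RocketMQ.

```java
import java.util.concurrent.atomic.AtomicReference;

// Illustrative textbook-style CLH queue lock (hypothetical class name).
class ClhLock {
    private static final class Node {
        volatile boolean locked;
    }

    // The tail starts at a dummy node that is already released.
    private final AtomicReference<Node> tail = new AtomicReference<>(new Node());
    private final ThreadLocal<Node> myNode = ThreadLocal.withInitial(Node::new);
    private final ThreadLocal<Node> myPred = new ThreadLocal<>();

    void lock() {
        Node node = myNode.get();
        node.locked = true;              // announce that this thread wants the lock
        Node pred = tail.getAndSet(node); // enqueue atomically and learn the predecessor
        myPred.set(pred);
        while (pred.locked) {            // spin on the predecessor's state
            Thread.onSpinWait();
        }
    }

    void unlock() {
        Node node = myNode.get();
        node.locked = false;             // the successor, if any, sees this and proceeds
        myNode.set(myPred.get());        // reuse the predecessor's node next time
    }
}

class ClhLockDemo {
    private static int counter;

    static int run(int threads, int incrementsPerThread) {
        counter = 0;
        ClhLock lock = new ClhLock();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    lock.lock();
                    try {
                        counter++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter;
    }
}
```

Threads acquire the lock in the order in which they joined the queue, and each waiter reads only its predecessor's flag instead of a single shared variable.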

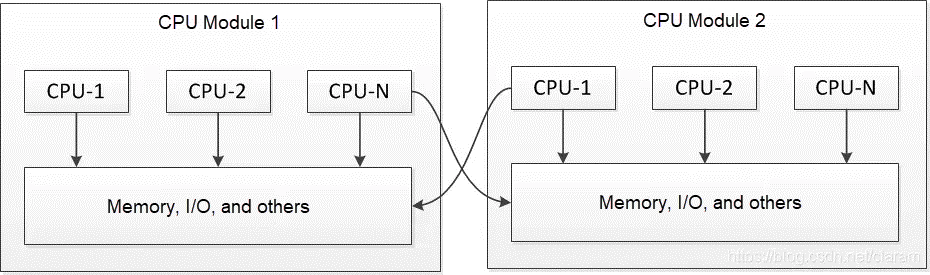

The MCS lock is similar to the CLH lock, but each waiting thread spins locally on a flag in its own node, and the releasing thread updates the state of the next node. This addresses a weakness of the CLH lock on NUMA (Non-Uniform Memory Access) architectures, where the predecessor's state that a waiter keeps reading may reside in distant memory.
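For comparison with the CLH lock, a textbook-style MCS lock can be sketched as follows. Again, this is a generic illustration with hypothetical class names rather than code from Apache RocketMQ.

```java
import java.util.concurrent.atomic.AtomicReference;

// Illustrative textbook-style MCS queue lock (hypothetical class name).
class McsLock {
    private static final class Node {
        volatile boolean locked;
        volatile Node next;
    }

    private final AtomicReference<Node> tail = new AtomicReference<>(null);
    private final ThreadLocal<Node> myNode = ThreadLocal.withInitial(Node::new);

    void lock() {
        Node node = myNode.get();
        Node pred = tail.getAndSet(node);
        if (pred != null) {
            node.locked = true;   // must be set before the predecessor can see this node
            pred.next = node;     // link behind the predecessor
            while (node.locked) { // spin on this thread's own node
                Thread.onSpinWait();
            }
        }
    }

    void unlock() {
        Node node = myNode.get();
        if (node.next == null) {
            if (tail.compareAndSet(node, null)) {
                return;           // nobody is waiting
            }
            while (node.next == null) {
                Thread.onSpinWait(); // a successor is enqueued but has not linked itself yet
            }
        }
        node.next.locked = false; // hand the lock to the successor
        node.next = null;         // reset the node for reuse
    }
}

class McsLockDemo {
    private static int counter;

    static int run(int threads, int incrementsPerThread) {
        counter = 0;
        McsLock lock = new McsLock();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    lock.lock();
                    try {
                        counter++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter;
    }
}
```

The design difference from CLH is where the spinning happens: here each thread waits on its own node, and the previous holder writes to that node when it releases the lock.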

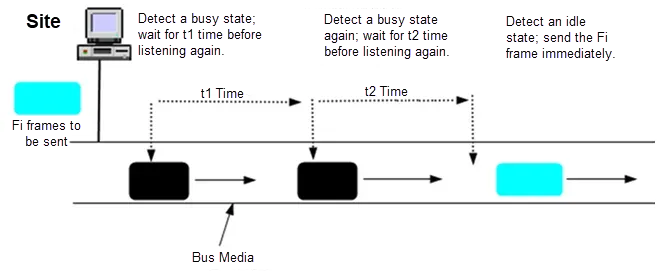

P-persistent CSMA (Persistent Carrier Sense Multiple Access) is a network communication protocol concept that is often used to describe collision avoidance strategies in distributed environments such as wireless local area networks (Wi-Fi). This concept originates from the CSMA/CD (Carrier Sense Multiple Access with Collision Detection) protocol, but it introduces a more persistent listening mechanism.

1. Basic process: Each device (node) listens continuously to check whether the channel is idle before sending data. If the channel is busy, the node waits for a while before attempting again.

2. P-counter: Upon detecting a busy channel, the node will not immediately return to the waiting state, but start a P (persistent period) counter. After the counter finishes, the node attempts to send again.

3. Collision handling: If multiple nodes start sending at the same time and lead to a collision on the channel, all nodes involved in the collision will notice it and increment their respective P counters before retrying. This allows packets to have a better chance of getting through over a longer period, increasing overall efficiency.

4. Recovery phase: When the P counter reaches zero and the channel remains busy, the node enters a recovery phase, selecting a new random delay time before attempting to send again.

P-persistent CSMA reduces the number of unnecessary collision occurrences through this design, but it may lead to long idle waiting times during network congestion.

[1] Apache RocketMQ

https://github.com/apache/rocketmq

[2] OpenMessaging OpenChaos

https://github.com/openmessaging/openchaos

[3] Ji, Juntao & Gu, Yinyou & Fu, Yubao & Lin, Qingshan. (2024). Beyond the Bottleneck: Enhancing High-Concurrency Systems with Lock Tuning. 10.1007/978-3-031-71177-0_20.

[4] Chaos Engineering

https://en.wikipedia.org/wiki/Chaos_engineering

[5] T. E. Anderson, “The performance of spin lock alternatives for shared-memory multiprocessors,” in IEEE Transactions on Parallel and Distributed Systems, vol. 1, no. 1, pp. 6-16, Jan. 1990, doi: 10.1109/71.80120

[6] Y. Woo, S. Kim, C. Kim and E. Seo, “Catnap: A Backoff Scheme for Kernel Spinlocks in Many-Core Systems,” in IEEE Access, vol. 8, pp. 29842-29856, 2020, doi: 10.1109/ACCESS.2020.2970998

[7] L. Li, P. Wagner, A. Mayer, T. Wild and A. Herkersdorf, “A non-intrusive, operating system independent spinlock profiler for embedded multicore systems,” Design, Automation & Test in Europe Conference & Exhibition (DATE), 2017, Lausanne, Switzerland, 2017, pp. 322-325, doi: 10.23919/DATE.2017.7927009.

[8] OpenMessaging benchmark

https://github.com/openmessaging/benchmark
