By Hanxie

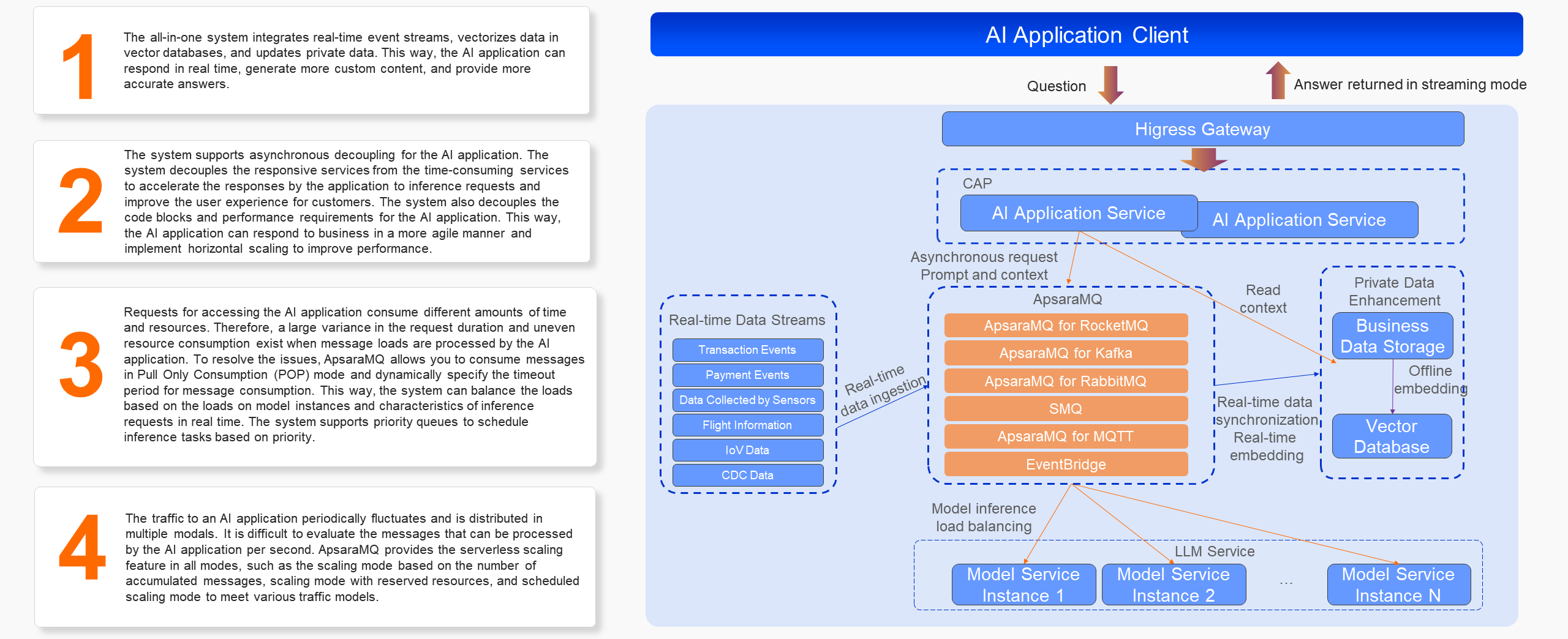

AI applications face many challenges on the way to commercialization. They are expected to deliver services more efficiently, return more timely and accurate results, and provide a more user-friendly experience. Traditional architectures that support only synchronous interaction struggle to meet these requirements. This article describes how event-driven architectures can address these challenges.

Before we delve into the combination of event-driven architectures and AI, let's review the current state of AI applications.

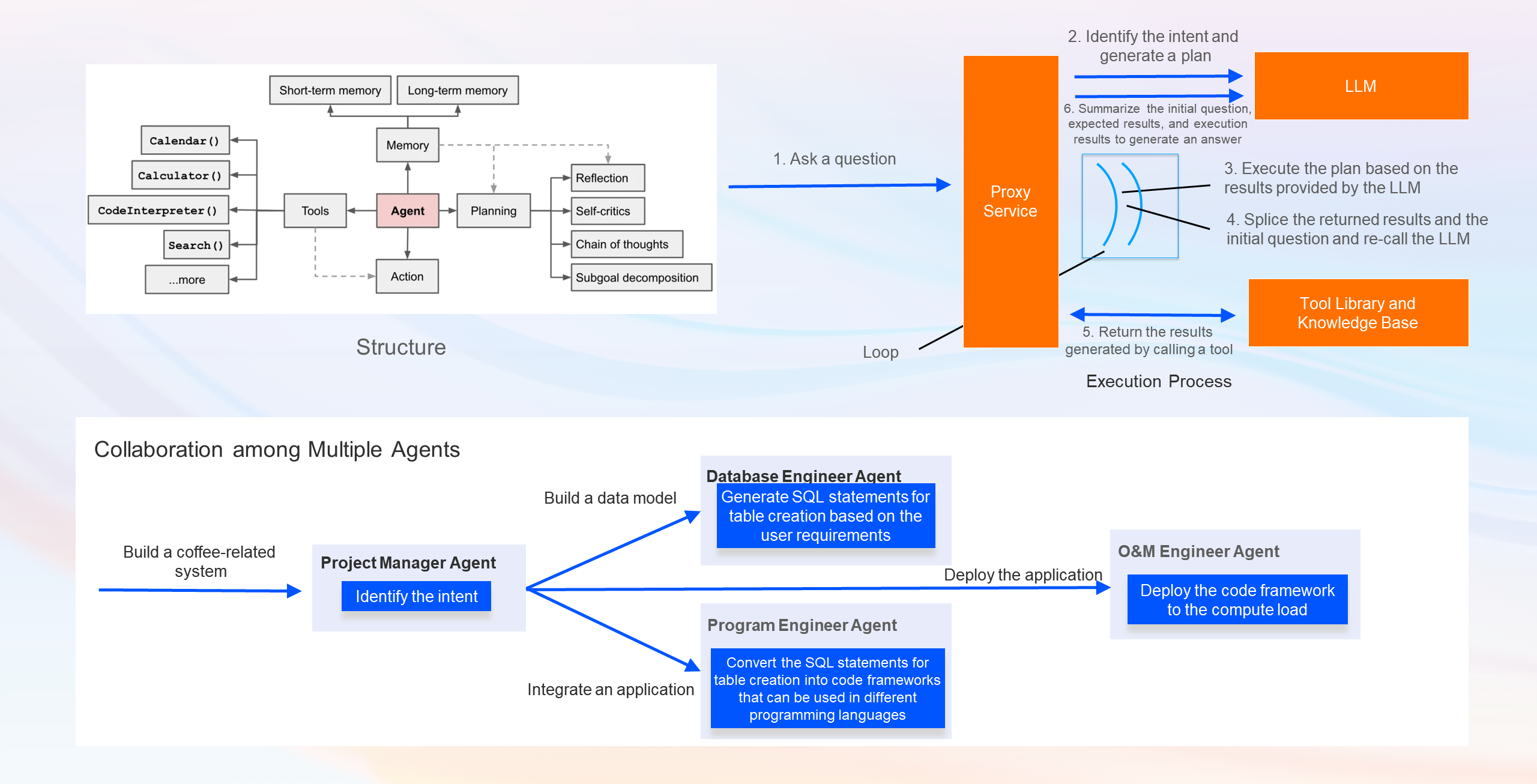

Based on the application architectures, AI applications can be roughly divided into the following three categories:

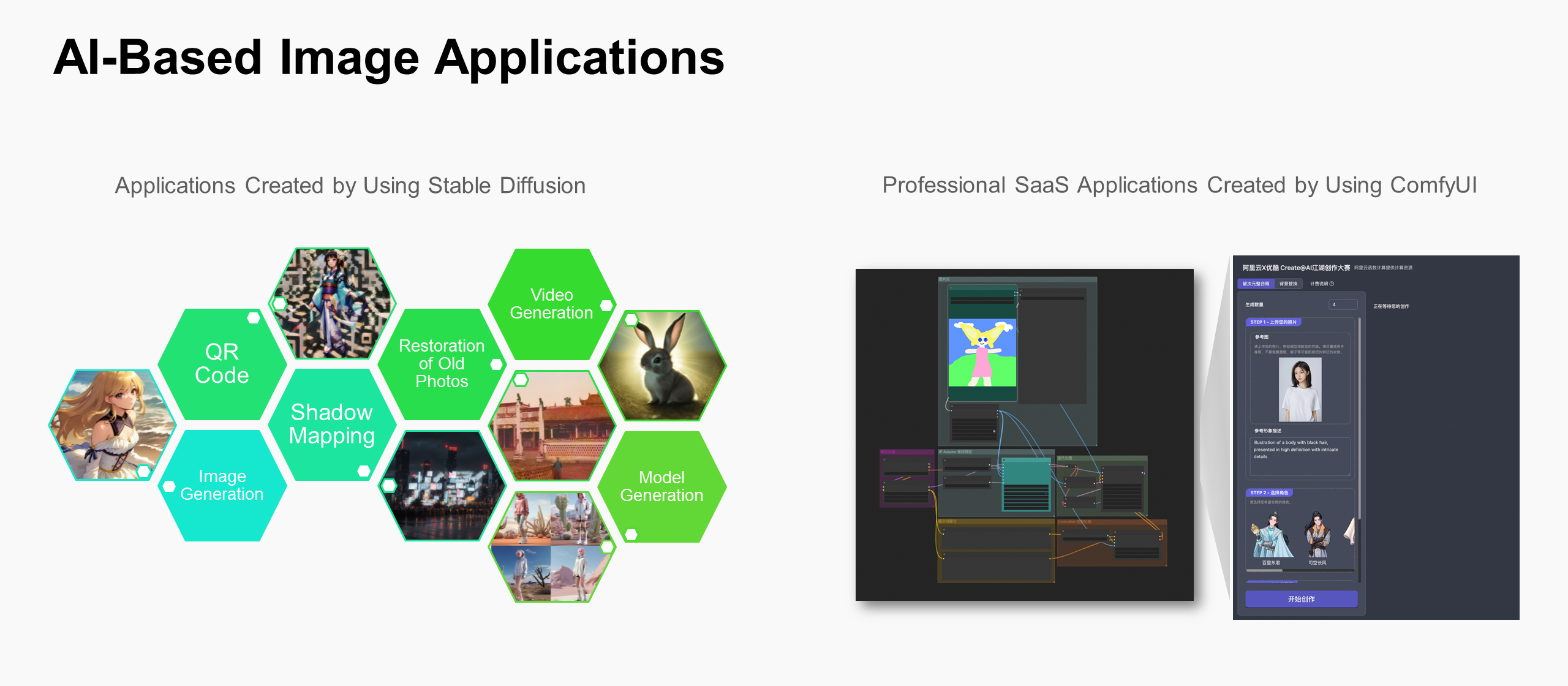

(1) Extension applications developed based on foundation models, such as ChatGPT (text generation), Stable Diffusion (image generation), and CosyVoice (speech generation). In most cases, such applications provide relatively atomic services with model capabilities as the core.

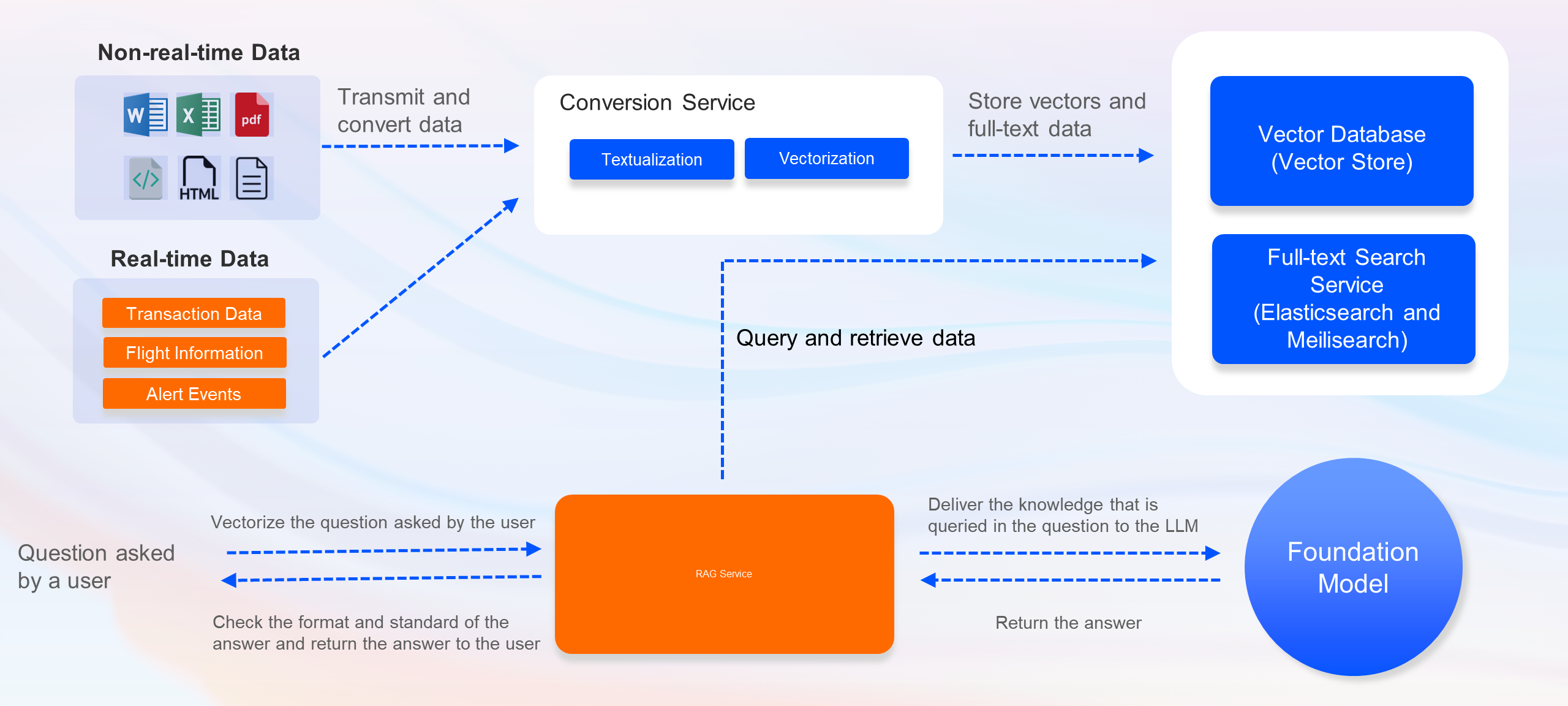

(2) Intelligent applications based on knowledge bases, such as Langchain ChatChat. Such applications use large language models (LLMs) as the core, are built on retrieval-augmented generation (RAG), and apply to a wide range of business scenarios.

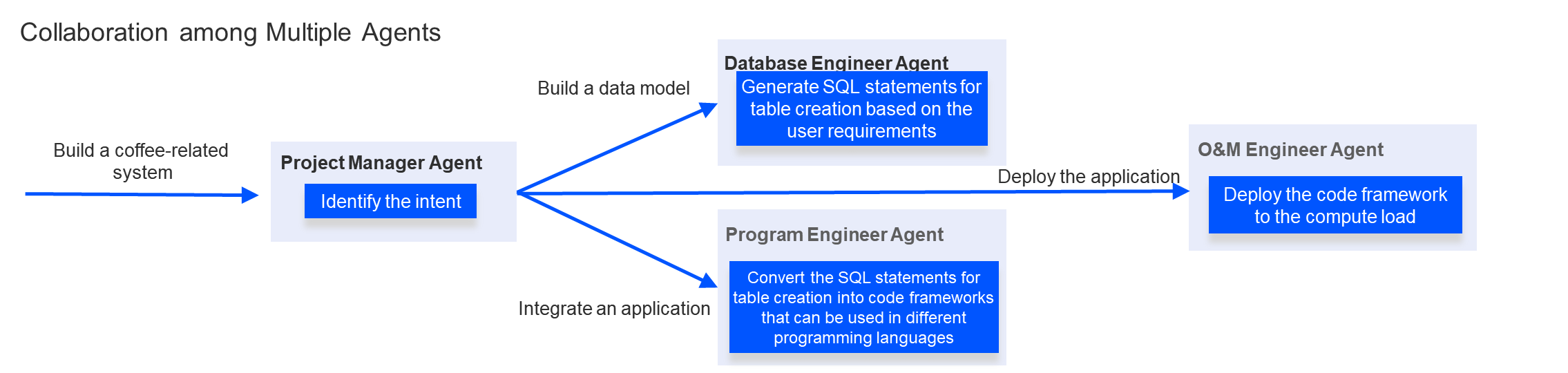

(3) Agent applications that use LLMs as the interaction center. Such applications can connect to external applications or services by calling tools and work in complex forms, such as collaboration among multiple agents. Agent applications are the most creative applications for enterprises.

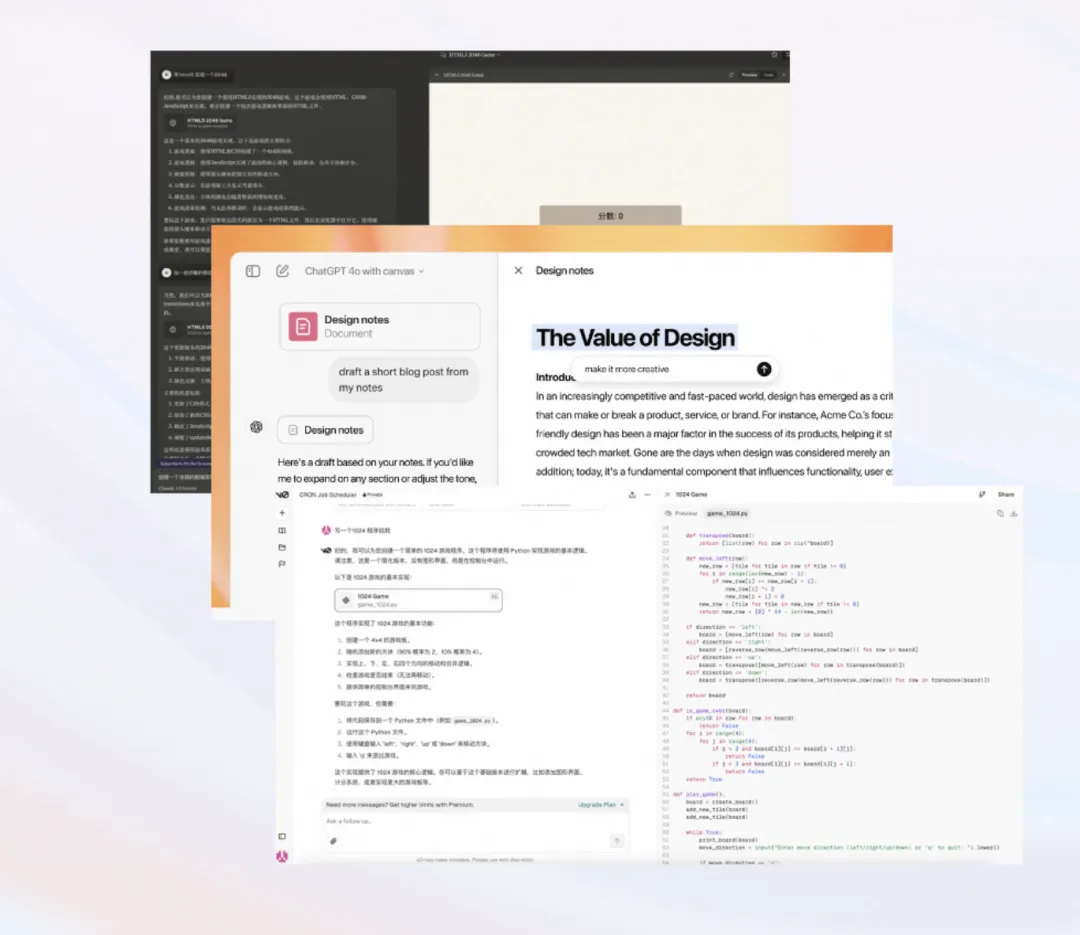

The word "native" implies a broadly shared understanding of a concept. When we talk about native mobile applications, we immediately think of applications that run on mobile devices. When we talk about cloud-native applications, many developers immediately think of containerized applications. However, when we talk about AI-native applications, apart from a few leading examples such as ChatGPT and Midjourney, we cannot yet define them as clearly as we define native mobile applications. No firm conclusion can be drawn today. We can only infer the direction of AI-native from what we observe, hoping to gradually form a more concrete picture of it.

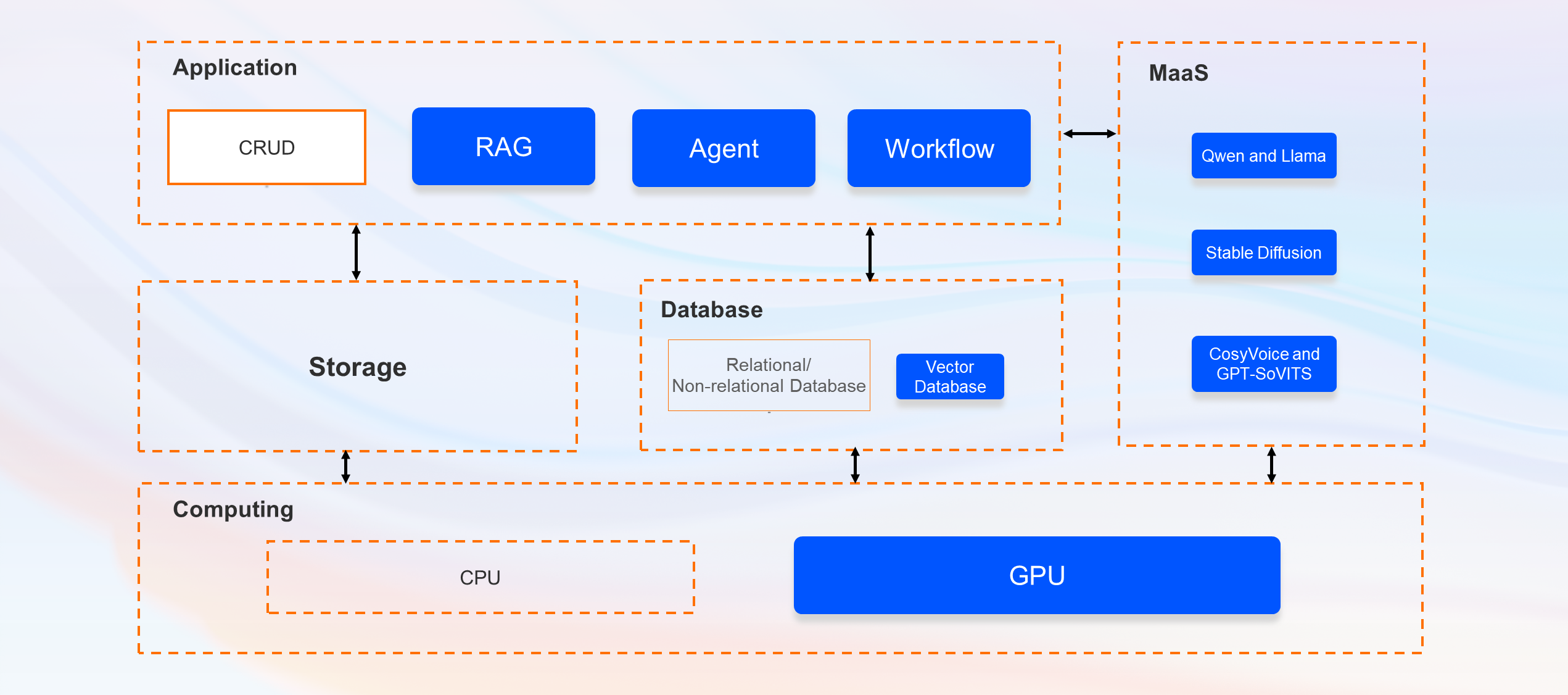

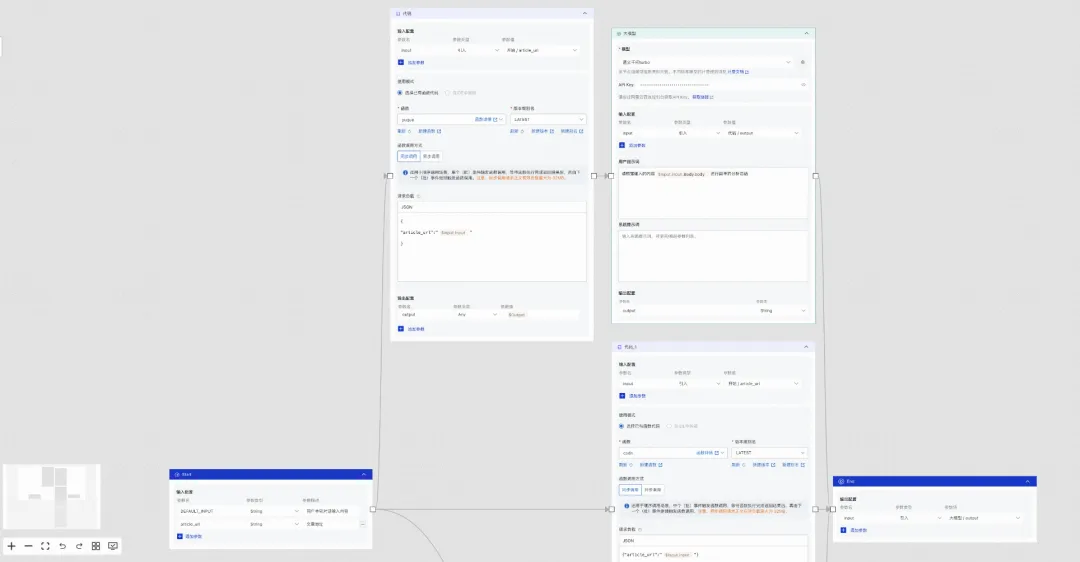

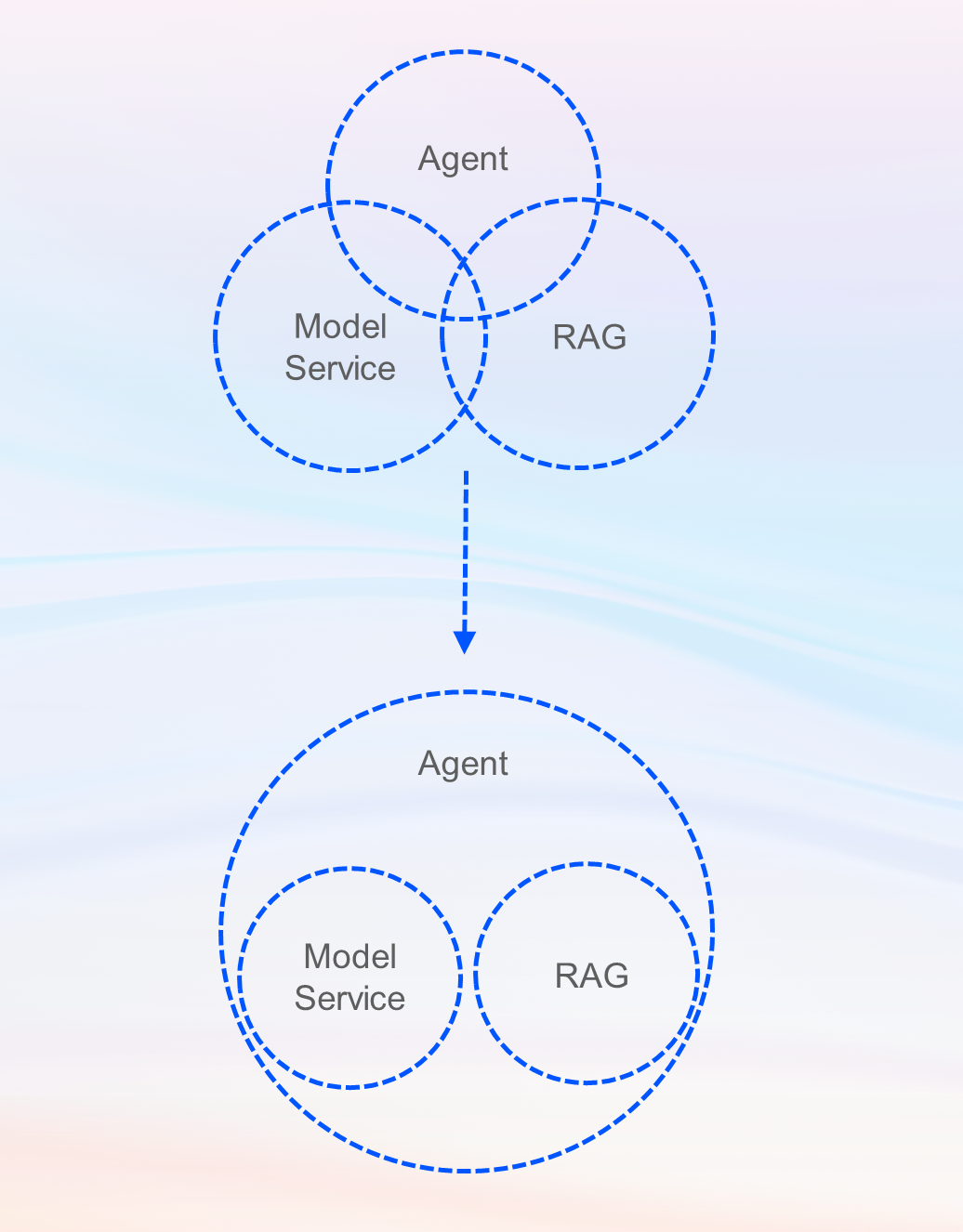

Adding AI capabilities changes application architectures significantly. Programming paradigms such as RAG and agents come into play, and traditional workflows change once AI nodes are added to them.

Judging from how the products of well-known AI enterprises have evolved, AI applications in the three categories above are partly integrated and partly separate. They are gradually evolving into applications in which an agent controls and manages the other applications in a centralized manner, such as OpenAI Canvas, Claude Artifacts, and Vercel v0. Such applications share common features, including an intelligent kernel, multi-modal capabilities, and a language user interface (LUI).

From another perspective, users will pay for AI-native applications only if these applications provide a better user experience. On their own, foundation model capabilities and multi-modal capabilities improve the user experience only in specific scenarios, and in some respects the experience they provide is even inferior to that of traditional applications. Therefore, we need to integrate conversational interaction, AI models, and multi-modal capabilities to create applications that provide a better user experience than traditional applications do.

We use the event-driven architecture because the sequential architecture may fail to meet business requirements in practice, and the event-driven architecture handles those cases better. The following scenarios illustrate why.

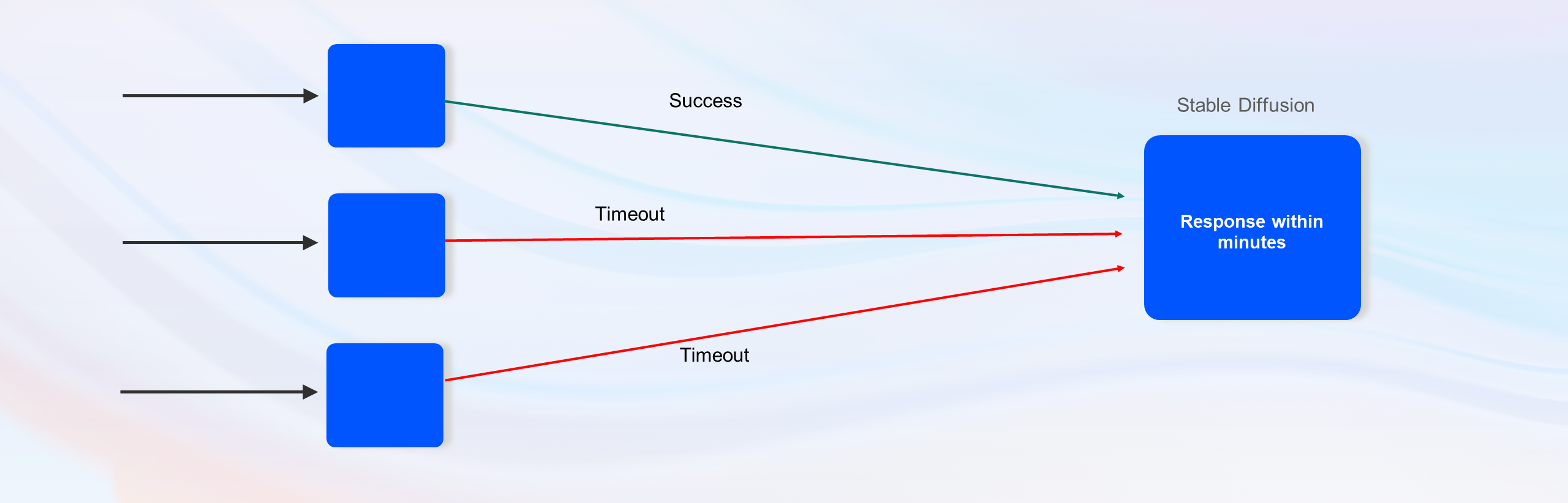

Model inference takes much longer than a call to a traditional network service. For example, if you use Stable Diffusion to generate an image from text, generation still takes seconds even after the service is optimized by using algorithms. If the number of concurrent requests is large, the server may go down. The synthesis of speech or virtual avatars may even take minutes. In these cases, the sequential architecture is clearly unsuitable. The event-driven architecture can respond to user requests quickly and call inference services as the business requires. This protects the user experience and reduces the risk of system downtime.
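The decoupling described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `fake_inference` is a hypothetical stand-in for a slow model call, and `MAX_CONCURRENT_INFERENCES` is an assumed capacity limit, not a value from the original architecture. The point is that accepting a request only enqueues it, while a fixed number of workers drain the queue so that a burst of requests cannot overwhelm the inference backend.

```python
import asyncio
import time

MAX_CONCURRENT_INFERENCES = 2  # assumed backend capacity, illustrative only


async def fake_inference(prompt: str, seconds: float) -> str:
    """Hypothetical stand-in for a slow model call such as text-to-image."""
    await asyncio.sleep(seconds)
    return f"image-for:{prompt}"


async def worker(queue: asyncio.Queue, results: dict) -> None:
    """Process queued requests one at a time so the backend is never flooded."""
    while True:
        request_id, prompt = await queue.get()
        results[request_id] = await fake_inference(prompt, seconds=0.05)
        queue.task_done()


async def main():
    queue: asyncio.Queue = asyncio.Queue()
    results: dict = {}
    workers = [asyncio.create_task(worker(queue, results))
               for _ in range(MAX_CONCURRENT_INFERENCES)]

    # Accepting a request only enqueues it, so this loop returns quickly
    # even though each inference takes much longer.
    start = time.perf_counter()
    for i in range(10):
        queue.put_nowait((i, f"prompt-{i}"))
    accept_latency = time.perf_counter() - start

    await queue.join()  # wait until every queued request has been processed
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return accept_latency, results


accept_latency, results = asyncio.run(main())
print(f"accepted 10 requests in {accept_latency * 1000:.2f} ms")
print(f"completed {len(results)} inferences")
```

In a real deployment the in-process queue would be replaced by a managed message queue, and the number of workers would follow the available GPU capacity.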

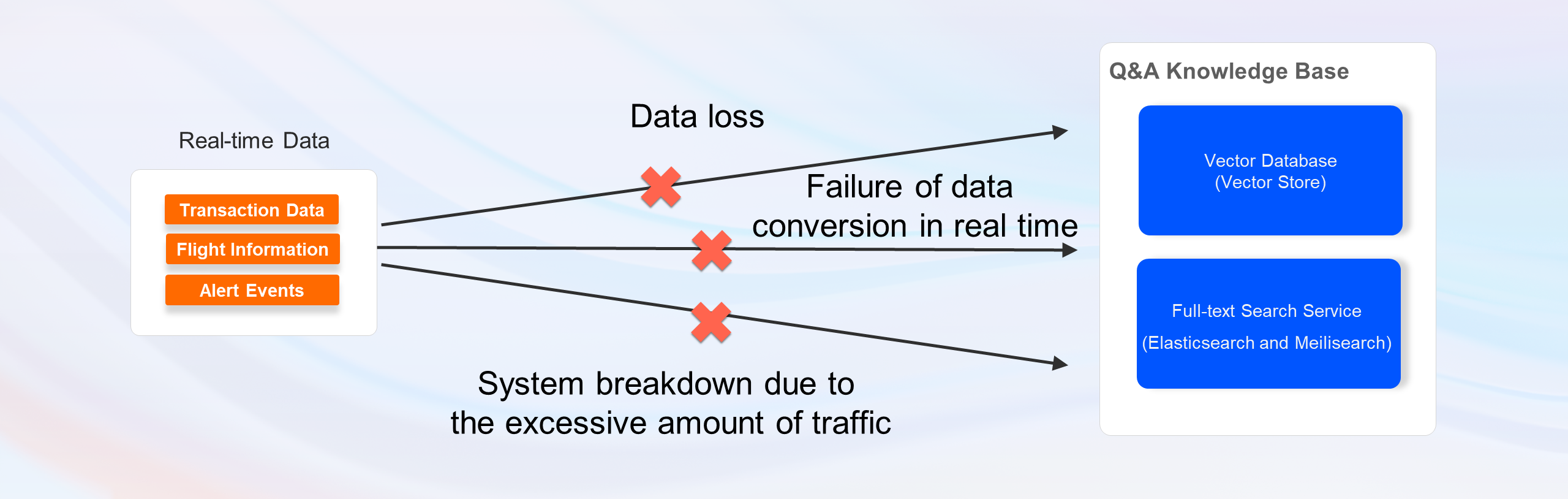

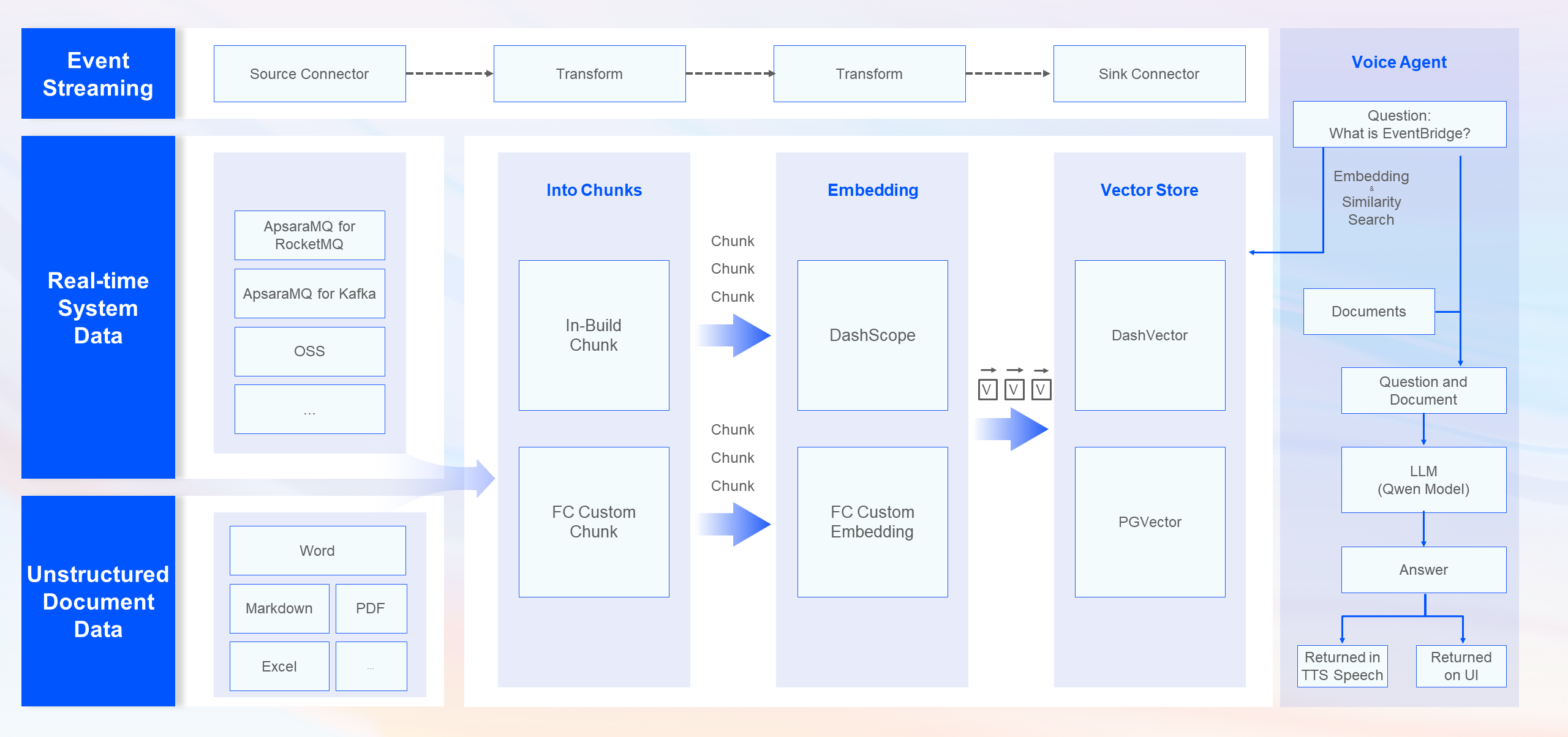

In an intelligent conversational search system, the quality of the generated results depends on the data in the system. The timeliness and accuracy of data retrieved for conversational search greatly affect the user experience of the intelligent conversational search system. In the system architecture, conversational search and data updates are separated. It is impractical to manually update large amounts of data. We can update data in an efficient manner by configuring scheduled tasks and building workflows that are used to update data in knowledge bases. The event-driven architecture has obvious advantages in this scenario.

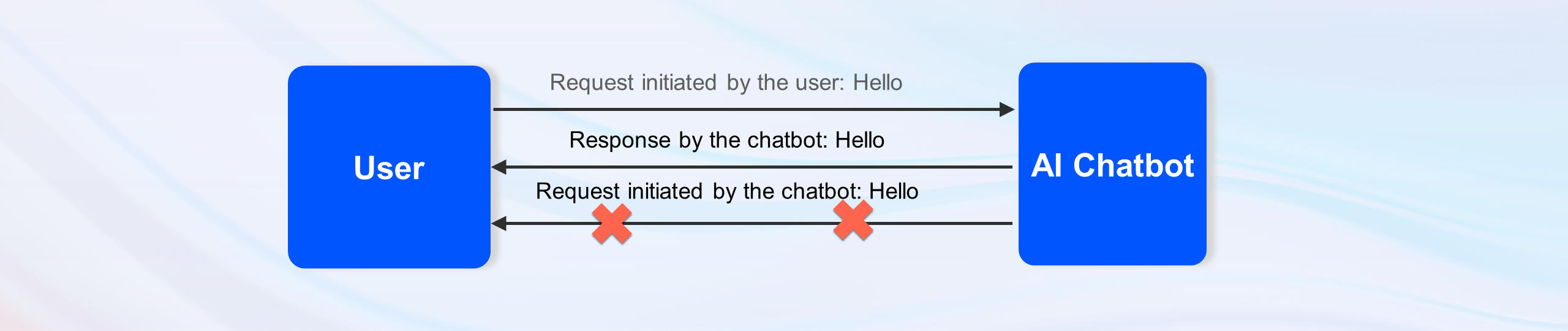

In conversational search scenarios, services that behave more like a human leave a better impression on users and open up more business opportunities. The traditional Q&A application architecture is relatively rigid. With message queues, AI applications can transmit information and notify users efficiently. The AI chatbot can identify user intent and proactively communicate with users, which helps retain them.

I will share some practices for creating AI-native applications by using event-driven architectures.

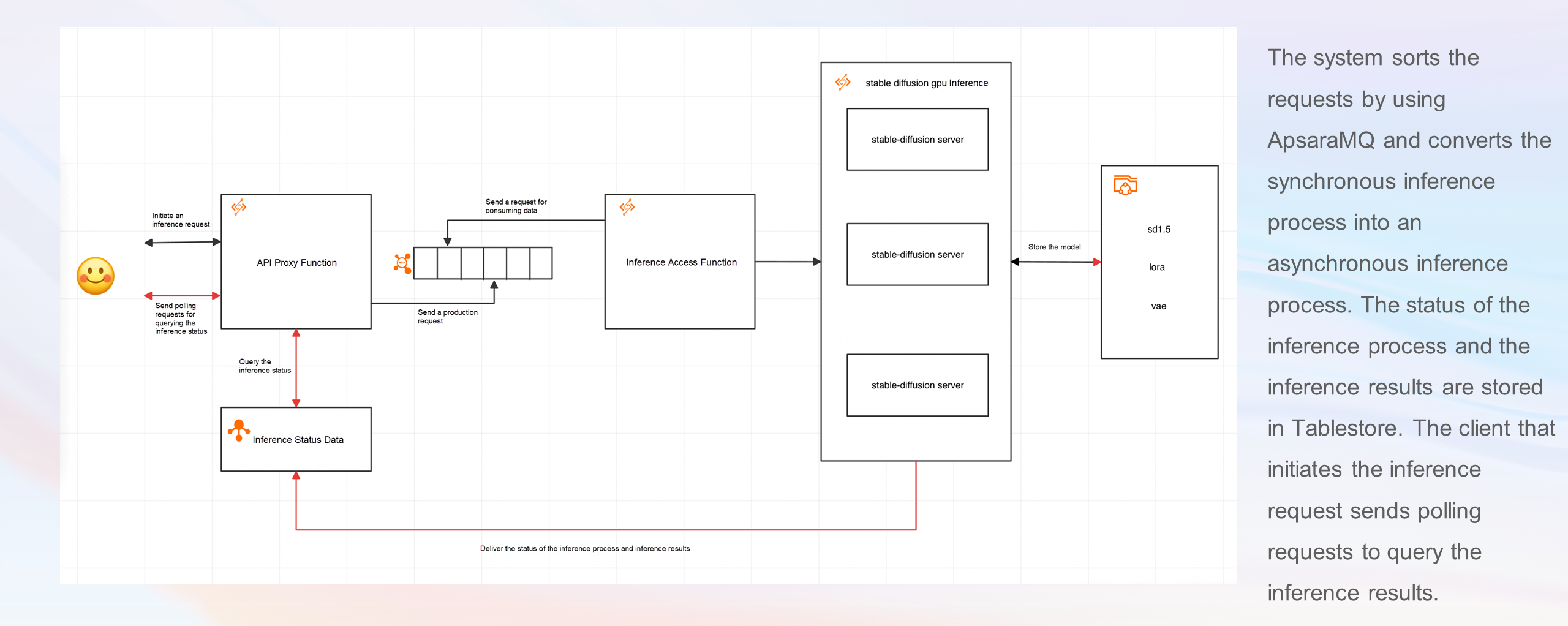

We talked about the issues that may occur when users call the Stable Diffusion text-to-image model. To resolve these issues, we adopt an event-driven architecture in which Function Compute and Simple Message Queue (SMQ, formerly MNS) form an asynchronous inference pipeline for Stable Diffusion. When a user sends a text-to-image request, the request reaches the API proxy function through the Function Compute gateway. The API proxy function tags and authenticates the request, sends it to the SMQ queue, and then records the request metadata and inference information in Tablestore. The inference function consumes tasks from the queue, schedules a GPU instance to start Stable Diffusion, returns the image generation result, and updates the request status after the image is generated. The client page polls for the request status and keeps the user informed of the inference progress.
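The flow above can be approximated in a single Python process. The sketch below is not the actual Function Compute, SMQ, or Tablestore code: a `queue.Queue` stands in for the SMQ queue, a dictionary stands in for Tablestore, and `VALID_TOKENS`, `api_proxy`, `inference_worker`, and `poll` are hypothetical names chosen for illustration. One deliberate difference: the sketch records the metadata before enqueuing the task, so that the in-process worker can never see a task whose status record does not exist yet.

```python
import queue
import threading
import time
import uuid

task_queue = queue.Queue()      # stand-in for the SMQ queue
status_table = {}               # stand-in for Tablestore
VALID_TOKENS = {"demo-token"}   # hypothetical credentials


def api_proxy(prompt, token):
    """Authenticate and tag the request, record it, enqueue it, return at once."""
    if token not in VALID_TOKENS:
        raise PermissionError("invalid token")
    request_id = str(uuid.uuid4())  # the tag that identifies this request
    status_table[request_id] = {"status": "QUEUED", "prompt": prompt, "result": None}
    task_queue.put({"id": request_id, "prompt": prompt})
    return request_id


def inference_worker():
    """Consume tasks, run the (simulated) inference, and update the status."""
    while True:
        task = task_queue.get()
        if task is None:  # sentinel used to stop the worker
            break
        record = status_table[task["id"]]
        record["status"] = "RUNNING"
        time.sleep(0.05)  # simulated image generation
        record["result"] = f"image://{task['id']}.png"
        record["status"] = "SUCCEEDED"  # set last so pollers see a complete record


def poll(request_id, interval=0.01, timeout=2.0):
    """Client-side polling: check the status until the task has finished."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        record = status_table[request_id]
        if record["status"] == "SUCCEEDED":
            return record
        time.sleep(interval)
    raise TimeoutError(request_id)


worker = threading.Thread(target=inference_worker, daemon=True)
worker.start()
rid = api_proxy("a lighthouse at dusk", token="demo-token")
final = poll(rid)
task_queue.put(None)
worker.join()
print(final["status"], final["result"])
```

Because `api_proxy` returns as soon as the task is queued, the caller gets a request ID immediately and the slow work happens out of band.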

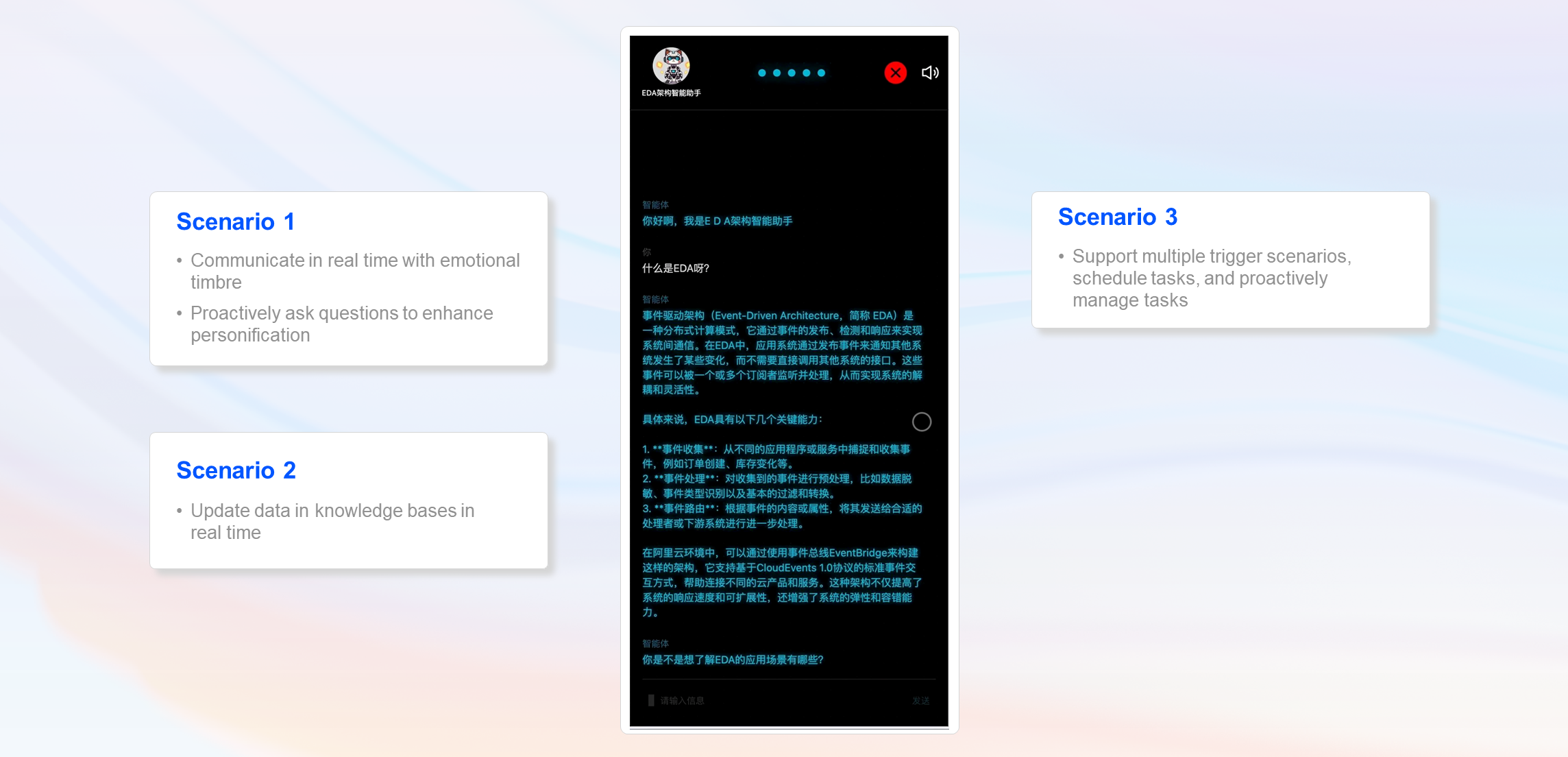

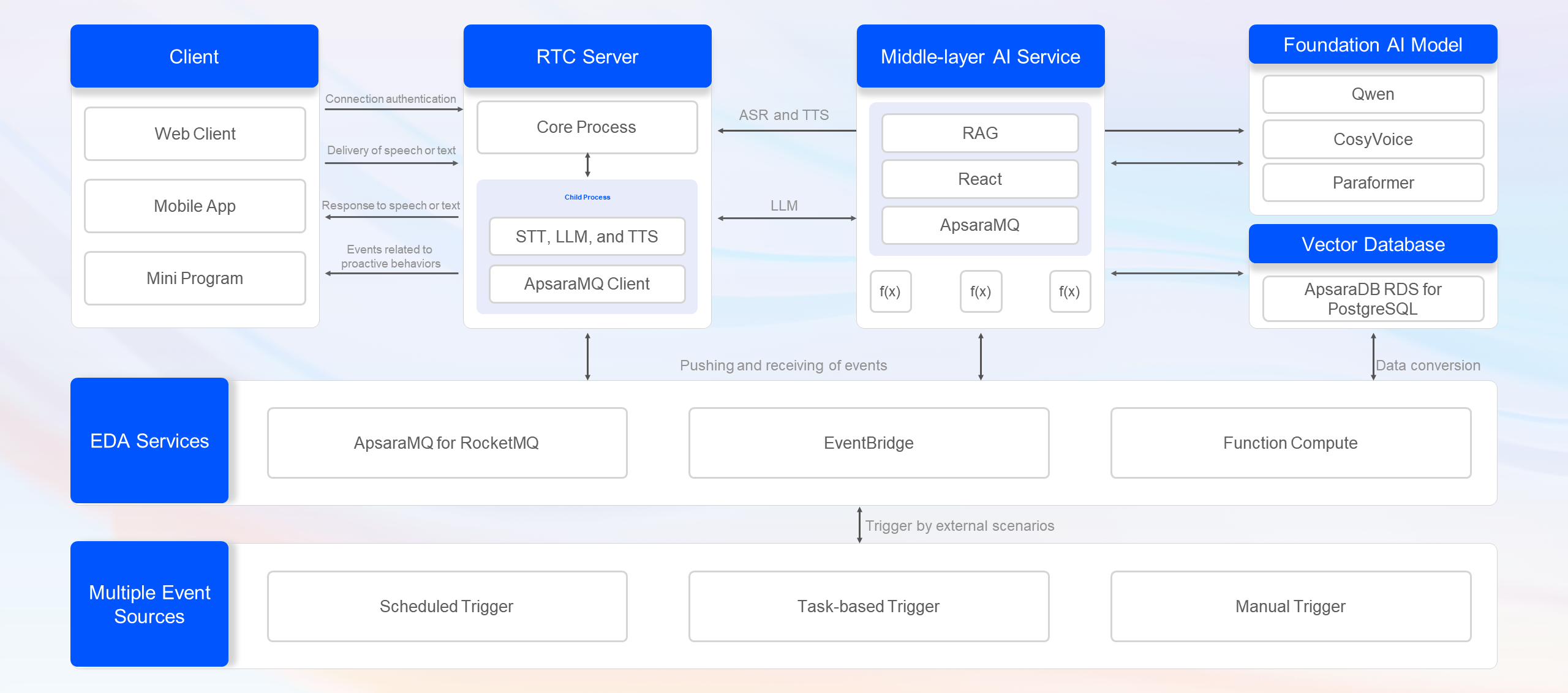

This is a relatively complex application. Users can talk to the intelligent conversational search service by voice in real time and receive questions that the service asks proactively.

The application uses an event-driven architecture. An ApsaraMQ for RocketMQ client is installed on the Real-Time Communication (RTC) server, and the RTC server subscribes to a topic that serves as the central topic in ApsaraMQ for RocketMQ. A scheduled task analyzes user intent, generates messages, and sends the messages to the topic. The ApsaraMQ for RocketMQ client then consumes the messages and proactively asks the user questions.
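A minimal sketch of this publish and subscribe flow is shown below. It does not use the ApsaraMQ for RocketMQ SDK: the `Topic` class is a tiny in-memory stand-in, `analyze_intent` is a toy keyword rule where a real system would presumably call a model, and the session data and question texts are made up for illustration.

```python
from typing import Callable, Dict, List, Optional


class Topic:
    """In-memory stand-in for a message topic (not a real RocketMQ client)."""

    def __init__(self) -> None:
        self._handlers: List[Callable[[dict], None]] = []

    def subscribe(self, handler: Callable[[dict], None]) -> None:
        self._handlers.append(handler)

    def publish(self, message: dict) -> None:
        for handler in self._handlers:
            handler(message)


def analyze_intent(history: List[str]) -> Optional[str]:
    """Toy keyword rule standing in for model-based intent analysis."""
    text = " ".join(history).lower()
    if "price" in text or "cost" in text:
        return "pricing"
    if "error" in text or "fail" in text:
        return "troubleshooting"
    return None


QUESTIONS: Dict[str, str] = {
    "pricing": "Would you like a cost estimate for your workload?",
    "troubleshooting": "Do you want me to walk through the error with you?",
}


def scheduled_intent_task(sessions: Dict[str, List[str]], topic: Topic) -> int:
    """Run on a schedule: publish one message per user with a detected intent."""
    published = 0
    for user_id, history in sessions.items():
        intent = analyze_intent(history)
        if intent is not None:
            topic.publish({"user_id": user_id, "intent": intent})
            published += 1
    return published


outbox: List[tuple] = []  # questions the RTC server would speak to users


def rtc_consumer(message: dict) -> None:
    """Subscriber on the RTC server: turn a message into a proactive question."""
    outbox.append((message["user_id"], QUESTIONS[message["intent"]]))


center_topic = Topic()
center_topic.subscribe(rtc_consumer)
sessions = {
    "alice": ["How much does the GPU instance cost?"],
    "bob": ["Thanks, that is all."],
    "carol": ["My deployment keeps failing with an error."],
}
published = scheduled_intent_task(sessions, center_topic)
for user_id, question in outbox:
    print(user_id, "->", question)
```

The producer and the consumer only share the topic, so the intent analysis can be rescheduled or replaced without touching the RTC server.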

The knowledge base is automatically updated based on the change data capture (CDC) policy. The automatic update process is triggered by a condition such as importing data from an external system data source or uploading documents to Object Storage Service (OSS). The data is split into chunks, vectorized, and stored in a vector database and a full-text search database.
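The update path (split, vectorize, store in two indexes) can be sketched as an event handler. Everything here is an assumption made for illustration: `embed` is a toy hashed bag-of-words vector rather than a real embedding model, the two dictionaries stand in for the vector database and the full-text search database, and `on_document_changed` is a hypothetical handler name for the event raised when a document is imported or uploaded.

```python
import hashlib
import math
import re
from collections import defaultdict

DIM = 16                           # toy embedding size
chunk_texts = {}                   # chunk_id -> text
vector_store = {}                  # stand-in for the vector database
fulltext_index = defaultdict(set)  # stand-in for the full-text search database


def split_into_chunks(text, max_words=8):
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text):
    """Toy hashed bag-of-words vector, L2-normalized (not a real model)."""
    vec = [0.0] * DIM
    for token in tokenize(text):
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def on_document_changed(doc_id, text):
    """Event handler: re-index one document in both stores, return chunk count."""
    # Drop chunks from the previous version of this document.
    for chunk_id in [c for c in chunk_texts if c.startswith(doc_id + "#")]:
        del chunk_texts[chunk_id]
        del vector_store[chunk_id]
        for ids in fulltext_index.values():
            ids.discard(chunk_id)
    # Split, vectorize, and store the new version.
    chunks = split_into_chunks(text)
    for n, chunk in enumerate(chunks):
        chunk_id = f"{doc_id}#{n}"
        chunk_texts[chunk_id] = chunk
        vector_store[chunk_id] = embed(chunk)
        for token in tokenize(chunk):
            fulltext_index[token].add(chunk_id)
    return len(chunks)


count = on_document_changed(
    "faq",
    "ApsaraMQ for RocketMQ delivers messages to consumers that subscribe to a topic",
)
print(count, sorted(vector_store))
```

Re-running the handler for the same document ID replaces its old chunks, which is what keeps the knowledge base consistent when the source data changes.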

Finally, I want to share a set of solutions with AI application developers. Select foundation model services such as Ollama, ComfyUI, CosyVoice, and Embedding in Cloud Application Platform (CAP) of Alibaba Cloud and host the model services in CAP. Use services such as ApsaraMQ for RocketMQ, ApsaraMQ for Kafka, SMQ, and EventBridge to build data stream pipelines and a message center. Use a framework such as Spring AI Alibaba to develop backend services on your local computer to implement RAG and agent capabilities. Use the Next.js framework to build the frontend interface. Then, deploy the frontend and backend services to CAP by using Serverless Devs, and tune and access the services. Finally, use cloud-native gateways to protect the services in production. For long-term O&M of the knowledge base or agent, use Application Real-Time Monitoring Service (ARMS) to monitor service metrics.

This article is derived from the topic shared at the Salon for Cloud-native and Open Source Developers during the AI Application Engineering Session held in Hangzhou, China.
