By Yanhong

As LLMs mature and become useful in more scenarios, a growing number of enterprises are integrating them into their products and services. LLMs are impressive at processing natural language, but their internal mechanisms remain unclear. This lack of transparency creates risks for downstream applications and makes LLM applications harder to deploy with confidence. Understanding and interpreting LLMs is therefore essential to explaining their behavior, limits, and social impact. Observability for LLM applications supplies the data that interpretability work needs. It also helps researchers and developers identify unexpected biases and risks and improve performance.

Python has become the main programming language of the AI era. Popular LLM projects and SDKs, such as LangChain, LlamaIndex, Dify, Prompt flow, OpenAI, and DashScope, are developed in Python. To improve the observability of Python applications, and of LLM applications written in Python in particular, Alibaba Cloud provides the Application Real-Time Monitoring Service (ARMS) agent for Python. The agent is designed to make it easier for enterprises to deploy LLM applications.

This article describes how to install an ARMS agent for a Python application. This article also describes the features and compatibility of the ARMS agent. In this example, an LLM application is created for testing.

In this example, a sample LLM application is created in the following scenario to help you gain a better understanding of the ARMS agent for Python:

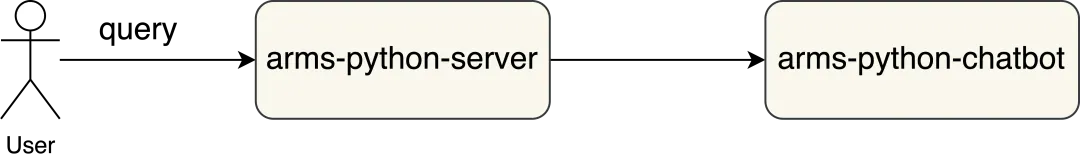

An enterprise integrates the intelligent Q&A feature into its search service during a service upgrade. The following figure shows the service architecture.

After a user initiates a Q&A query to the server, the server calls the chatbot to obtain the result. The chatbot receives the query and returns the result by using retrieval-augmented generation (RAG).
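To make the RAG step concrete, the following is a toy sketch of the retrieve-then-generate flow. It is not the chatbot used in this example. The sample client code at the end of this article uses a LangChain fake LLM instead. In the sketch, the keyword-overlap retriever stands in for vector search, and `call_llm` is a hypothetical callable that represents the model.

```python
"""Toy retrieval-augmented generation (RAG) flow: retrieve passages, then prompt the model.

Illustration only: the keyword-overlap retriever and the call_llm callable are
hypothetical stand-ins, not the chatbot used in this article.
"""
import re
from typing import Callable, List, Set


def tokenize(text: str) -> Set[str]:
    # Lowercase word tokens; punctuation is ignored.
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    # Rank documents by the number of words they share with the query (stand-in for vector search).
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: List[str]) -> str:
    # Put the retrieved passages before the question so the model can ground its answer.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {query}\nAnswer:"


def answer(query: str, documents: List[str], call_llm: Callable[[str], str]) -> str:
    # Retrieval step, then generation step.
    context = retrieve(query, documents)
    return call_llm(build_prompt(query, context))
```

In a real deployment, each of these steps (retrieval, prompt construction, model call) becomes a separate operation in the trace. This is the kind of call chain the agent is meant to make visible.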

To observe the LLM application, the enterprise installs the ARMS agent for Python in the application. The following section describes how to install the ARMS agent for Python.

Installation methods

In this example, the Python application is deployed in Container Service for Kubernetes (ACK). For more information about how to install the ARMS agent for Python manually, visit https://www.alibabacloud.com/help/en/arms/application-monitoring/user-guide/start-monitoring-python-applications/

Prerequisites:

• An ACK cluster is created. You can create an ACK dedicated cluster, ACK managed cluster, or ACK Serverless cluster based on your business requirements.

• A namespace is created in the cluster. For more information, see Manage namespaces and resource quotas. In this example, a namespace named arms-demo is used.

• The versions of Python and of the frameworks that your application uses meet the compatibility requirements. For more information, see Compatibility requirements of the ARMS agent for Python.

1. Download the agent installer from the Python Package Index (PyPI).

pip3 install aliyun-bootstrap

2. Install the ARMS agent for Python by using aliyun-bootstrap.

aliyun-bootstrap -a install

3. Start the application by using the ARMS agent for Python.

aliyun-instrument python app.py

4. Build an image. Use the following sample Dockerfiles as a reference when you modify your own Dockerfile.

Dockerfile before modification:

# Use the base image for Python 3.10.
FROM docker.m.daocloud.io/python:3.10
# Specify the working directory.
WORKDIR /app
# Copy the requirements.txt file to the working directory.
COPY requirements.txt .
# Install dependencies by using pip.
RUN pip install --no-cache-dir -r requirements.txt
COPY ./app.py /app/app.py
# Expose port 8000 of the container.
EXPOSE 8000
CMD ["python","app.py"]

Modified Dockerfile:

# Use the base image for Python 3.10.
FROM docker.m.daocloud.io/python:3.10
# Specify the working directory.
WORKDIR /app
# Copy the requirements.txt file to the working directory.
COPY requirements.txt .
# Install dependencies by using pip.
RUN pip install --no-cache-dir -r requirements.txt
######################### Install the ARMS agent for Python ###############################
RUN pip3 install aliyun-bootstrap && aliyun-bootstrap -a install
##########################################################
COPY ./app.py /app/app.py
# Expose port 8000 of the container.
EXPOSE 8000
######################### Start the application by using the ARMS agent ###################
CMD ["aliyun-instrument","python","app.py"]

Notes:

1. If you use uvicorn to start the application, we recommend that you replace the uvicorn command with a gunicorn command that uses the uvicorn worker class. Example:

Original command:

uvicorn app:app --workers 4 --host 0.0.0.0 --port 8000

Modified command:

gunicorn -w 4 -k uvicorn.workers.UvicornWorker -b 0.0.0.0:8000 app:app

2. If the application uses gevent and applies monkey patching, for example:

from gevent import monkey
monkey.patch_all()

In this case, set the GEVENT_ENABLE environment variable to true:

GEVENT_ENABLE=true

• To monitor applications in an ACK Serverless cluster or in an ACK cluster connected to Elastic Container Instance, you must first authorize the cluster to access ARMS on the Cloud Resource Access Authorization page. Then, restart all pods of the ack-onepilot component.

• To monitor an application deployed in an ACK cluster in which ARMS Addon Token does not exist, perform the following operations to manually authorize the ACK cluster to access ARMS. If ARMS Addon Token exists, go to Step 4.

Perform the following steps to check whether ARMS Addon Token exists in a cluster:

a. Log on to the ACK console. In the left-side navigation pane, click Clusters. On the Clusters page, find the cluster in which the application is deployed and click its name to go to the cluster details page.

b. In the left-side navigation pane of the cluster details page, choose Configurations > Secrets. At the top of the Secrets page, select kube-system from the Namespace drop-down list and check whether addon.arms.token is displayed.

Note: If ARMS Addon Token exists in a cluster, ARMS performs password-free authorization on the cluster. ARMS Addon Token may not exist in ACK managed clusters that run specific Kubernetes versions. We recommend that you check whether an ACK managed cluster has ARMS Addon Token before you use ARMS to monitor applications in the cluster. If a cluster does not have ARMS Addon Token, you must manually authorize the cluster to access ARMS as follows:

After you install the ack-onepilot component, you must enter the AccessKey ID and AccessKey secret of your Alibaba Cloud account in the configuration file of the ack-onepilot component.

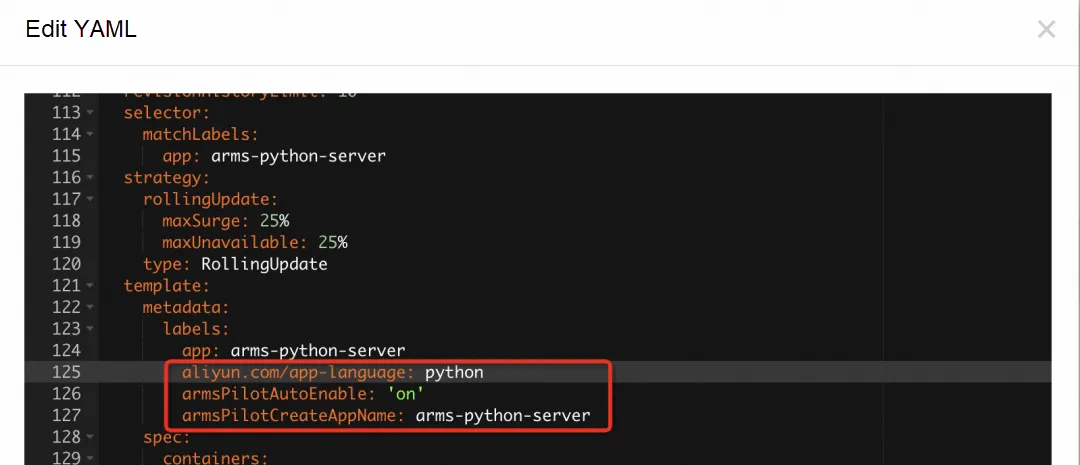
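Steps a and b above check for the ARMS Addon Token secret in the console. If you prefer to script the check, the following is a minimal sketch that wraps `kubectl get secret`. It assumes that kubectl is installed and configured for the target cluster. The `run` parameter is injectable so the logic can be tested without a cluster. If kubectl is missing, `subprocess.run` raises `FileNotFoundError`.

```python
"""Check whether the ARMS Addon Token secret exists in the kube-system namespace.

Sketch only: assumes kubectl is on PATH and points at the cluster to be monitored.
"""
import subprocess
from typing import Callable, List

SECRET_NAME = "addon.arms.token"
NAMESPACE = "kube-system"


def build_command(name: str = SECRET_NAME, namespace: str = NAMESPACE) -> List[str]:
    # "-o name" prints only "secret/<name>" when the secret exists.
    return ["kubectl", "get", "secret", name, "-n", namespace, "-o", "name"]


def arms_addon_token_exists(run: Callable = subprocess.run) -> bool:
    # kubectl exits with a non-zero status (NotFound) when the secret does not exist.
    result = run(build_command(), capture_output=True, text=True)
    return result.returncode == 0


if __name__ == "__main__":
    if arms_addon_token_exists():
        print("ARMS Addon Token found: password-free authorization applies.")
    else:
        print("ARMS Addon Token not found: authorize the cluster manually.")
```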

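To enable monitoring, add the following labels to the pod template (spec.template.metadata.labels) of the application's Deployment:

labels:
  aliyun.com/app-language: python # Required. Specify that the application is developed in Python.
  armsPilotAutoEnable: 'on'
  armsPilotCreateAppName: "<your-deployment-name>" # Specify the display name of the application in ARMS.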
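If you generate manifests programmatically, the labels can be added to the pod template as a plain dictionary edit. The following is a minimal sketch. The helper name `enable_arms` is hypothetical, and the manifest is assumed to be already loaded into a dict, for example with PyYAML's `yaml.safe_load`.

```python
"""Add the ARMS monitoring labels to the pod template of a Deployment manifest (sketch)."""
import copy
from typing import Any, Dict


def enable_arms(deployment: Dict[str, Any], app_name: str) -> Dict[str, Any]:
    # Work on a copy so the caller's manifest stays unchanged.
    patched = copy.deepcopy(deployment)
    # The labels belong on spec.template.metadata.labels (the pods), not only on the Deployment itself.
    labels = (
        patched.setdefault("spec", {})
        .setdefault("template", {})
        .setdefault("metadata", {})
        .setdefault("labels", {})
    )
    labels.update({
        "aliyun.com/app-language": "python",
        "armsPilotAutoEnable": "on",  # Must be the string "on", not a boolean.
        "armsPilotCreateAppName": app_name,
    })
    return patched
```

The value `'on'` is quoted in the YAML for a reason. Under YAML 1.1 rules, which PyYAML follows, an unquoted `on` is parsed as a boolean, but Kubernetes label values must be strings.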

The following YAML template creates the Deployments and Services used in this example and enables application monitoring for both applications:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: arms-python-client
  name: arms-python-client
  namespace: arms-demo
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: arms-python-client
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: arms-python-client
        aliyun.com/app-language: python # Required. Specify that the application is developed in Python.
        armsPilotAutoEnable: 'on'
        armsPilotCreateAppName: "arms-python-client" # Specify the display name of the application in ARMS.
    spec:
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/arms-default/python-agent:arms-python-client
        imagePullPolicy: Always
        name: client
        resources:
          requests:
            cpu: 250m
            memory: 300Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: arms-python-server
  name: arms-python-server
  namespace: arms-demo
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: arms-python-server
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: arms-python-server
        aliyun.com/app-language: python # Required. Specify that the application is developed in Python.
        armsPilotAutoEnable: 'on'
        armsPilotCreateAppName: "arms-python-server" # Specify the display name of the application in ARMS.
    spec:
      containers:
      - env:
        - name: CLIENT_URL
          value: 'http://arms-python-client-svc:8000'
        image: registry.cn-hangzhou.aliyuncs.com/arms-default/python-agent:arms-python-server
        imagePullPolicy: Always
        name: server
        resources:
          requests:
            cpu: 250m
            memory: 300Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: arms-python-server
  name: arms-python-server-svc
  namespace: arms-demo
spec:
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: http
    port: 8000
    protocol: TCP
    targetPort: 8000
  selector:
    app: arms-python-server
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  name: arms-python-client-svc
  namespace: arms-demo
spec:
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: http
    port: 8000
    protocol: TCP
    targetPort: 8000
  selector:
    app: arms-python-client
  sessionAffinity: None
  type: ClusterIP

After the application is automatically redeployed, wait 1 to 2 minutes. In the left-side navigation pane of the ARMS console, choose Application Monitoring > Application List. On the Application List page, find the application that you created and click its name to view its metrics. For more information, see View monitoring details (new).
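Metrics and traces appear only after the application receives requests. The following minimal sketch sends a few requests to the demo server. It assumes that the Service has been forwarded to your machine, for example with `kubectl port-forward -n arms-demo svc/arms-python-server-svc 8000:8000`. The function names are illustrative.

```python
"""Generate a little traffic against the demo server so that traces show up in ARMS (sketch)."""
import urllib.request
from typing import Callable, List


def fetch(url: str, timeout: float = 10.0) -> int:
    # Return the HTTP status code of a single GET request.
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.status


def generate_traffic(url: str, count: int, get: Callable[[str], int] = fetch) -> List[int]:
    statuses = []
    for _ in range(count):
        try:
            statuses.append(get(url))
        except OSError:
            # Connection and HTTP errors (urllib's URLError/HTTPError subclass OSError) are
            # recorded as -1 instead of stopping the loop.
            statuses.append(-1)
    return statuses


if __name__ == "__main__":
    print(generate_traffic("http://127.0.0.1:8000/", 20))
```

Each successful request to `/` passes through the server and then the client, so each one should produce a trace that spans both applications.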

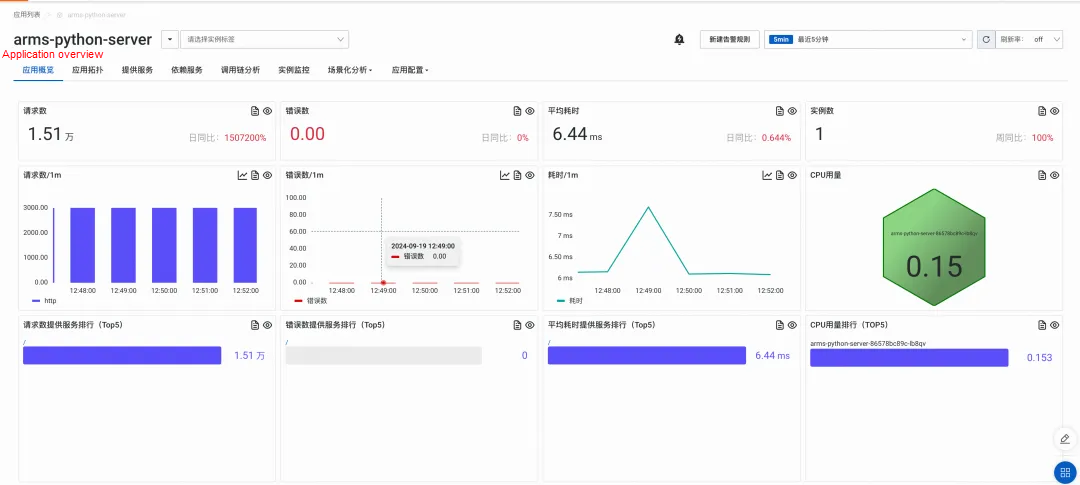

After the application is monitored by ARMS, you can view the information about the application on the application details page in the ARMS console. This section describes the features that you can use.

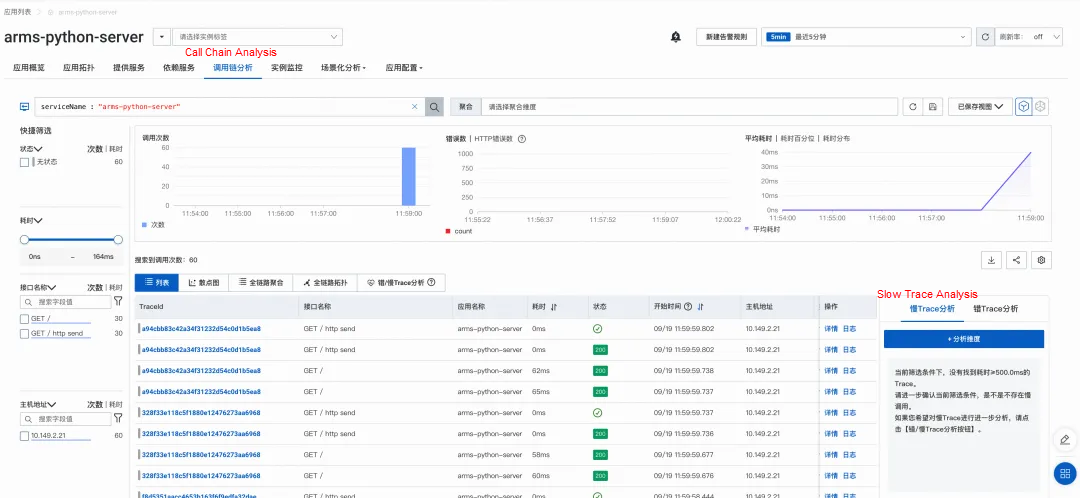

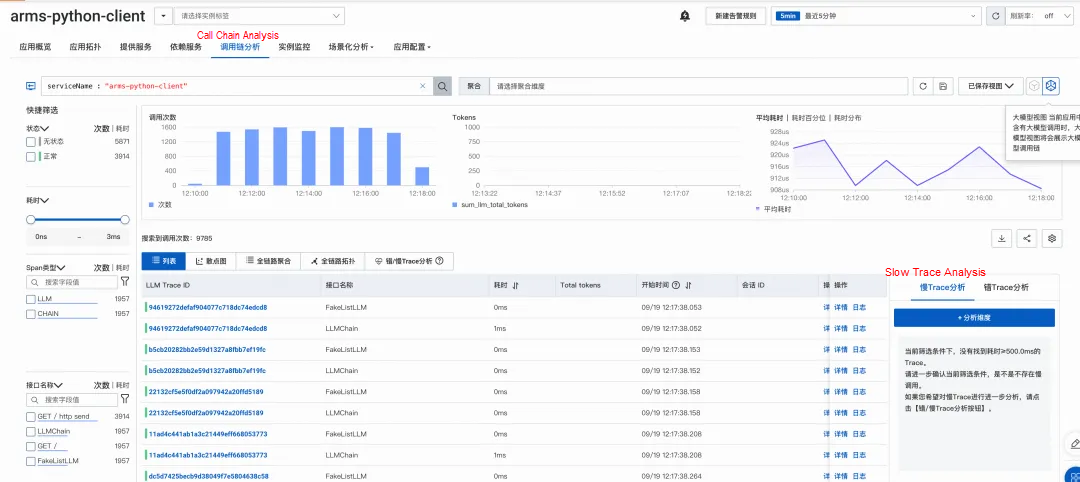

On the Trace Explorer tab, you can combine filter conditions and aggregation dimensions for real-time analysis, and use failed or slow traces to troubleshoot failed or slow calls in your application.
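Conceptually, this kind of analysis means filtering spans by a condition and then grouping the matches by a dimension. The sketch below shows that idea on in-memory records. The `Span` shape and the threshold are hypothetical simplifications, not the ARMS data model or query language.

```python
"""Filter failed or slow spans, then aggregate them by span name (conceptual sketch)."""
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Span:
    # Hypothetical, simplified span record.
    trace_id: str
    name: str
    duration_ms: float
    error: bool


def problem_spans(spans: Iterable[Span], slow_ms: float) -> List[Span]:
    # Filter condition: the span failed OR took at least slow_ms milliseconds.
    return [s for s in spans if s.error or s.duration_ms >= slow_ms]


def count_by_name(spans: Iterable[Span]) -> Dict[str, int]:
    # Aggregation dimension: span name.
    counts: Dict[str, int] = defaultdict(int)
    for s in spans:
        counts[s.name] += 1
    return dict(counts)
```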

The following figure shows an example of the trace details.

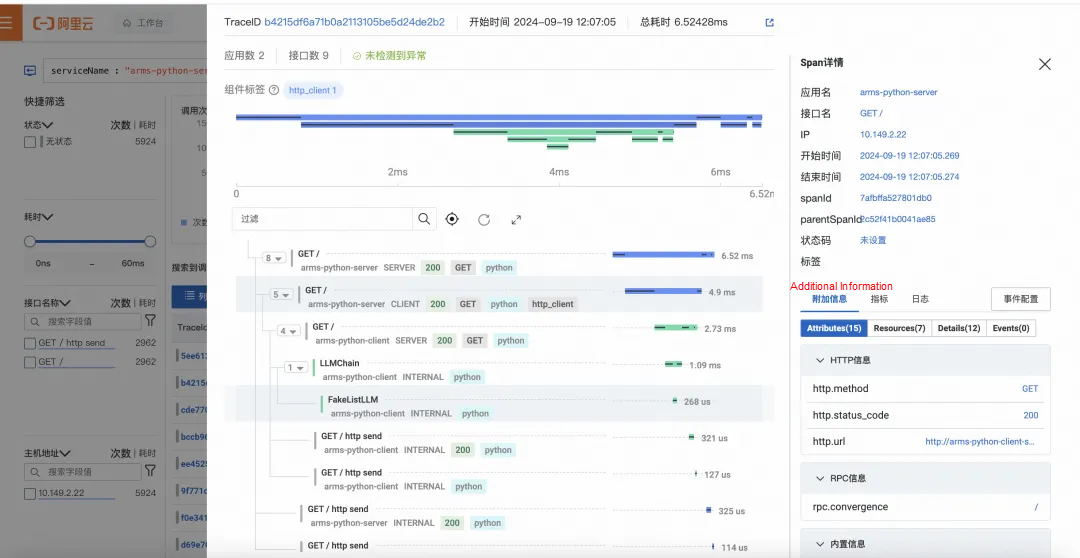

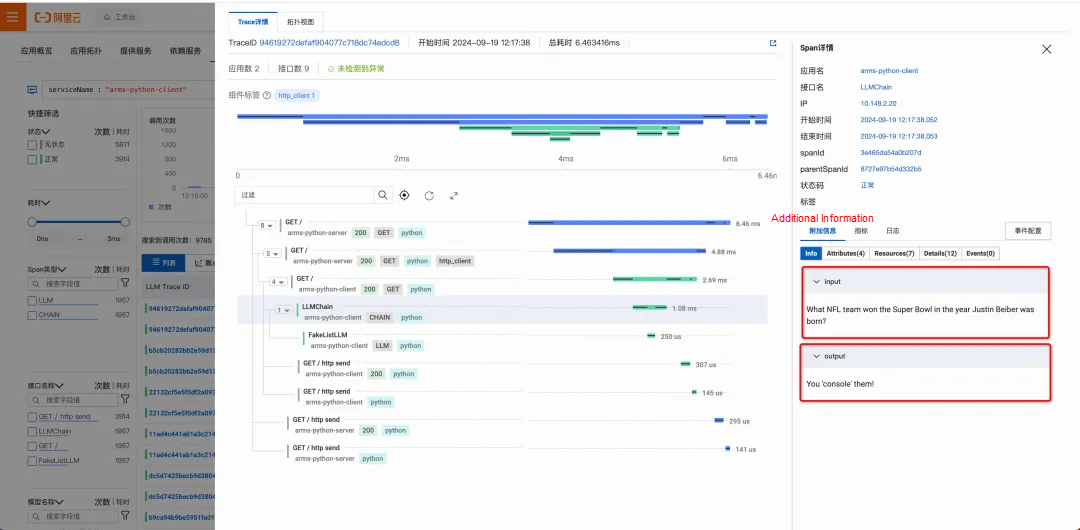

For LLM applications, the new edition of TraceView provides an LLM-specific view in which you can visually analyze the inputs and outputs of different operation types, as well as token consumption.
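Token consumption per operation type is essentially a sum over span attributes. The following sketch illustrates the calculation. It assumes attribute names from the OpenTelemetry GenAI semantic conventions (`gen_ai.operation.name`, `gen_ai.usage.input_tokens`, `gen_ai.usage.output_tokens`). ARMS may record token usage under different names.

```python
"""Sum LLM token usage per operation type from span attributes (sketch).

Assumption: attribute names follow the OpenTelemetry GenAI semantic conventions;
the ARMS agent may use different attribute names.
"""
from collections import Counter
from typing import Any, Dict, Iterable, Mapping

OP = "gen_ai.operation.name"
IN = "gen_ai.usage.input_tokens"
OUT = "gen_ai.usage.output_tokens"


def tokens_by_operation(span_attrs: Iterable[Mapping[str, Any]]) -> Dict[str, Dict[str, int]]:
    inputs: Counter = Counter()
    outputs: Counter = Counter()
    for attrs in span_attrs:
        # Spans without an operation name are grouped under "unknown"; missing counts are 0.
        op = str(attrs.get(OP, "unknown"))
        inputs[op] += int(attrs.get(IN, 0))
        outputs[op] += int(attrs.get(OUT, 0))
    return {
        op: {"input": inputs[op], "output": outputs[op], "total": inputs[op] + outputs[op]}
        for op in sorted(set(inputs) | set(outputs))
    }
```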

On the Trace Explorer tab, click the LLM view icon in the upper-right corner.

The following figure shows an example of the trace details of an LLM application.

Application overview

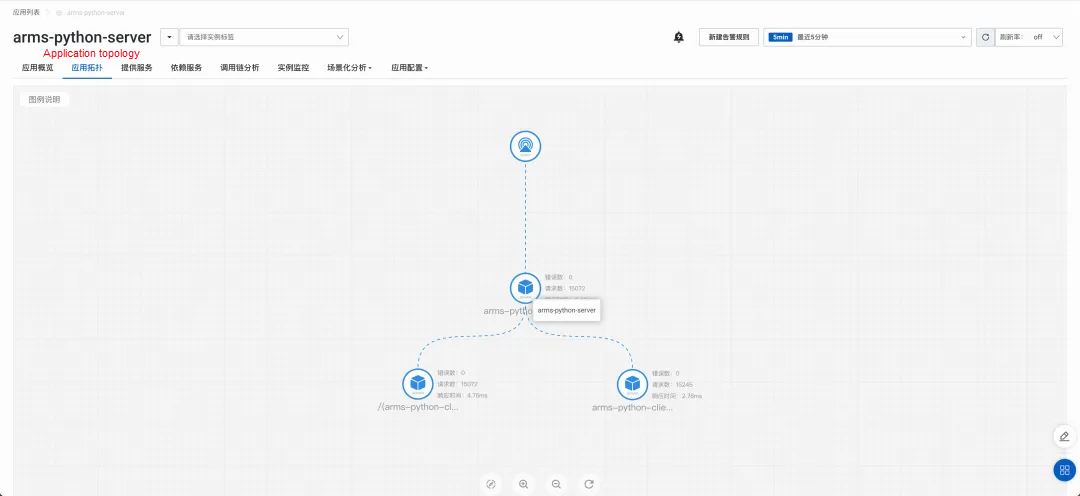

Application topology

You can configure alerting for your application. When an alert is triggered, notifications are sent to the specified contacts or DingTalk group chats through the notification methods that you configure, so that issues can be resolved promptly. For more information, see Application Monitoring Alert Rules.
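An alert rule is, at its core, a condition evaluated over recent metric windows. The following sketch uses a hypothetical rule: fire when the error rate exceeds a threshold in each of the last N one-minute windows. It illustrates the idea only and does not show how ARMS evaluates its alert rules.

```python
"""Evaluate a hypothetical error-rate alert condition over per-minute metrics (sketch)."""
from dataclasses import dataclass
from typing import Sequence


@dataclass
class MinuteStats:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        # A minute with no requests counts as a 0% error rate.
        return self.errors / self.requests if self.requests else 0.0


def should_alert(window: Sequence[MinuteStats], threshold: float, consecutive: int) -> bool:
    # Not enough data (or an invalid rule) never fires.
    if consecutive <= 0 or len(window) < consecutive:
        return False
    # Fire only if every one of the most recent `consecutive` minutes breaches the threshold.
    return all(m.error_rate > threshold for m in window[-consecutive:])
```

Requiring several consecutive breaching windows is a common way to avoid alerting on a single noisy minute.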

The ARMS agent for Python is compatible with Python 3.8 and later.
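A quick way to confirm the interpreter requirement at container start-up is a version check such as the following sketch. It covers only the Python version. Framework versions must still be checked against the compatibility list.

```python
"""Fail fast if the interpreter is older than Python 3.8 (sketch)."""
import sys

MIN_VERSION = (3, 8)


def is_supported(version_info=sys.version_info) -> bool:
    # Compare only (major, minor).
    return tuple(version_info[:2]) >= MIN_VERSION


if __name__ == "__main__":
    if not is_supported():
        raise SystemExit(f"Python {sys.version.split()[0]} is older than 3.8")
    print("Python version is supported.")
```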

arms-python-server file (app.py):

import os
from concurrent import futures
from logging import getLogger

import requests
import uvicorn
from fastapi import FastAPI
from opentelemetry import trace

tracer = trace.get_tracer(__name__)
_logger = getLogger(__name__)

app = FastAPI()


def call_requests():
    # Set CALL_URL to the URL of the server; otherwise the default URL below is used.
    call_url = os.environ.get("CALL_URL") or "https://www.aliyun.com"
    response = requests.get(call_url, timeout=10)
    response.raise_for_status()  # Raise an exception if an HTTP error status code is returned.
    print(f"response code: {response.status_code} - {response.text}")


def call_client():
    _logger.warning("calling client")
    # CLIENT_URL is set in the Deployment to the URL of the client-side application.
    call_url = os.environ.get("CLIENT_URL") or "https://www.aliyun.com"
    response = requests.get(call_url, timeout=10)
    return response.text


@app.get("/")
def call():
    # A synchronous handler: FastAPI runs it in a worker thread, so the blocking
    # future.result() call below does not block the event loop.
    with tracer.start_as_current_span("parent") as root_span:
        root_span.set_attribute("parent.value", "parent")
        with futures.ThreadPoolExecutor(max_workers=2) as executor:
            with tracer.start_as_current_span("ThreadPoolExecutorTest") as span:
                span.set_attribute("future.value", "ThreadPoolExecutorTest")
                # Call the client from a pool thread to exercise trace propagation across threads.
                future = executor.submit(call_client)
                future.result()
    return {"data": "call"}


if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8000)

arms-python-client file (app.py):

import uvicorn
from fastapi import FastAPI
from langchain.chains import LLMChain
# With LangChain 0.1 and later, FakeListLLM is also available from langchain_community.llms.
from langchain.llms.fake import FakeListLLM
from langchain.prompts import PromptTemplate

app = FastAPI()

# A fake LLM that returns the listed responses in order, so no model endpoint or API key is needed.
llm = FakeListLLM(responses=["I'll callback later.", "You 'console' them!"])

template = """Question: {question}

Answer: Let's think step by step."""

prompt = PromptTemplate(template=template, input_variables=["question"])
llm_chain = LLMChain(prompt=prompt, llm=llm)

question = "What NFL team won the Super Bowl in the year Justin Bieber was born?"


@app.get("/")
def call_langchain():
    # Run the chain and return the (fake) model output.
    res = llm_chain.run(question)
    return {"data": res}


if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8000)
