This post traces the evolution of Apache Flink, a technology that has redefined real-time data processing. It is adapted from a presentation by Feng Wang, Head of Open Data Platform at Alibaba Cloud and Apache Flink committer, at Flink Forward Asia Singapore 2025, and examines how businesses are using Apache Flink to move from traditional real-time data analytics to real-time AI applications. We will look at Flink's decade-long journey, its central role in modern data architectures, its synergy with Lakehouse environments, and its potential for powering real-time AI agents and applications.

Apache Flink's journey began in 2009 as Stratosphere, a research project at the Technical University of Berlin in Germany. Five years later, the core team founded data Artisans and contributed the project to the Apache Software Foundation, where it was renamed Flink. Five years after that, Alibaba acquired data Artisans, which was rebranded as Ververica, and invested significant resources to make Flink the de facto standard for stream processing in real-time data analytics. Innovation continues with recent projects such as Flink CDC (Change Data Capture) for real-time data integration and Apache Paimon, a real-time data lake format that originated in the Flink community and is now an independent Apache project. The formal release of Flink 2.0 opens a new chapter for the community and sets the stage for its next decade.

From a technical standpoint, Flink occupies a central position within the modern data architecture and ecosystem.

It acts as the backbone, driving real-time data streams from operational systems to analytical systems. Flink efficiently captures diverse real-time events from databases and message queues, performs sophisticated stream processing, and then seamlessly syncs the results to data lakes and data warehouses. Over the past decade, Apache Flink's architecture has evolved to be completely cloud-native, simplifying deployment for developers in cloud environments. Consequently, most cloud vendors, including Alibaba Cloud, now offer managed services for Apache Flink, making it more accessible to enterprises globally.

This end-to-end flow, from operational events to analytical stores, is what allows businesses to draw immediate insights from constantly changing data.
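To make the operational-to-analytical flow more concrete, here is a minimal Python sketch of how a changelog, the kind of stream CDC produces from a database, can be replayed into an up-to-date table keyed by primary key. The four change kinds mirror the short strings of Flink's `RowKind` values (`+I`, `-U`, `+U`, `-D`). The `Change` type, the `apply_changelog` function, and the sample order rows are hypothetical illustrations, not Flink or Flink CDC APIs.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable

# Change kinds modeled on Flink's RowKind short strings:
# +I insert, -U update-before, +U update-after, -D delete.
INSERT, UPDATE_BEFORE, UPDATE_AFTER, DELETE = "+I", "-U", "+U", "-D"


@dataclass(frozen=True)
class Change:
    kind: str             # one of the four change kinds above
    key: Any              # primary key of the source row
    row: Dict[str, Any]   # column values carried by the change


def apply_changelog(changes: Iterable[Change]) -> Dict[Any, Dict[str, Any]]:
    """Replay a changelog into a materialized table keyed by primary key."""
    table: Dict[Any, Dict[str, Any]] = {}
    for change in changes:
        if change.kind in (INSERT, UPDATE_AFTER):
            table[change.key] = dict(change.row)   # upsert the new row image
        elif change.kind in (UPDATE_BEFORE, DELETE):
            table.pop(change.key, None)            # retract the old row image
        else:
            raise ValueError(f"unknown change kind: {change.kind}")
    return table


orders = [
    Change(INSERT, 1, {"status": "created", "amount": 30}),
    Change(INSERT, 2, {"status": "created", "amount": 12}),
    Change(UPDATE_BEFORE, 1, {"status": "created", "amount": 30}),
    Change(UPDATE_AFTER, 1, {"status": "paid", "amount": 30}),
    Change(DELETE, 2, {"status": "created", "amount": 12}),
]
print(apply_changelog(orders))  # {1: {'status': 'paid', 'amount': 30}}
```

Downstream, the same materialized result is what a data lake or warehouse table ends up holding once the changelog has been synced into it.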

One of the most significant advancements in data architecture is the rise of the Lakehouse. This new generation of data platform combines the best features of data lakes and data warehouses, offering both flexibility and structured data management. Many Lakehouse workloads still run in offline or batch mode, yet demand for real-time analysis on the Lakehouse keeps growing, and batch pipelines alone cannot deliver that freshness. This is where Apache Flink becomes indispensable: by combining Flink's stream processing with a Lakehouse architecture, enterprises can build end-to-end real-time solutions.

Apache Iceberg, currently the mainstream data lake format, is powerful but was not designed for real-time operations because of its batch-oriented nature. To address this gap, the Flink community incubated Apache Paimon (originally Flink Table Store) in 2022. Paimon is designed specifically for real-time data updates in a Lakehouse architecture, which makes it a streaming-oriented format. Together, Apache Flink and Apache Paimon create a new paradigm, the Streaming Lakehouse: an architecture built on streaming technology that enables end-to-end real-time data pipelines with minute-level timeliness, giving businesses far greater agility and responsiveness.
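Minute-level timeliness follows from how streaming writes typically become visible in a lake table format. Records are written continuously, but readers only see them once a commit publishes a new snapshot, and with Flink sinks for formats such as Paimon those commits are typically tied to checkpoints. The plain-Python sketch below models that idea under simplifying assumptions. `SnapshotTable` and its methods are hypothetical, and the sketch ignores file layout, compaction, and failure recovery.

```python
from typing import Dict, List


class SnapshotTable:
    """Toy primary-key table whose writes become visible only on commit."""

    def __init__(self) -> None:
        self._pending: Dict[str, int] = {}             # written, not yet committed
        self._snapshots: List[Dict[str, int]] = [{}]   # committed, immutable versions

    def write(self, key: str, value: int) -> None:
        # Later writes to the same key overwrite earlier ones (upsert semantics).
        self._pending[key] = value

    def commit(self) -> int:
        """Called when a checkpoint completes: publish pending writes as a snapshot."""
        latest = dict(self._snapshots[-1])
        latest.update(self._pending)
        self._snapshots.append(latest)
        self._pending.clear()
        return len(self._snapshots) - 1                # id of the new snapshot

    def read(self) -> Dict[str, int]:
        # Readers only ever see the latest committed snapshot.
        return dict(self._snapshots[-1])


table = SnapshotTable()
table.write("sku-1", 5)
table.write("sku-2", 3)
print(table.read())   # {} (nothing committed yet)
table.commit()        # e.g. a checkpoint completes
table.write("sku-1", 4)
print(table.read())   # {'sku-1': 5, 'sku-2': 3}
table.commit()
print(table.read())   # {'sku-1': 4, 'sku-2': 3}
```

In this model the freshness a reader observes is bounded by roughly one commit interval. With a checkpoint interval of about a minute, that yields the minute-level latency described above.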

The rapid growth and widespread adoption of Apache Flink, which have made it the de facto standard for stream processing globally, stem from more than its excellent technical design and continuous innovation. Fundamentally, Flink has unlocked the true value of real-time data, enabling enterprises to significantly improve business efficiency and make business decisions in real time. In many industries, management and marketing teams now make immediate decisions based on real-time BI reports and dashboards that Apache Flink keeps continuously updated. E-commerce companies build real-time recommendation systems on Flink to push relevant products to customers, increasing engagement and transactions, and financial systems rely on Flink for real-time fraud detection.

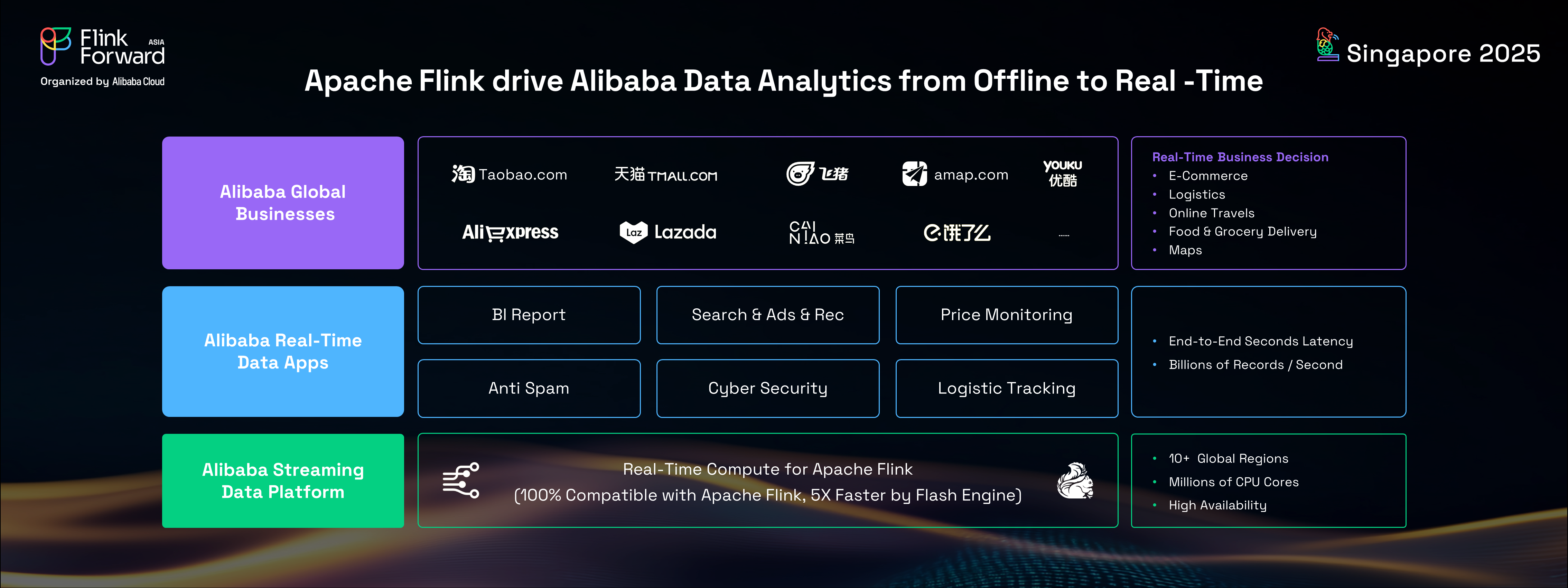

Alibaba, a major contributor to and one of the largest users of Apache Flink, provides a compelling example of how a real-time data platform can be built with Flink and its ecosystem. Since 2016, Alibaba has been building its unified streaming data platform on Apache Flink, upgrading all its businesses and data applications from offline to real-time analytics.

This includes global e-commerce platforms such as Tmall, Taobao, AliExpress, and Lazada, as well as logistics operations like Cainiao and online travel services. During peak events such as the Double Eleven (11-11) global online shopping festival, Alibaba's streaming data platform runs on millions of CPU cores and handles billions of records per second. To support such immense real-time workloads, Alibaba's stream processing team developed an enhanced version of Apache Flink called Flash, a next-generation vectorized stream processing engine. Flash is 100% compatible with Apache Flink and keeps the same API and distributed runtime framework. It is significantly faster because its core components were rewritten in C++ using a vectorized execution model.
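The difference between the classic row-at-a-time model and a vectorized model can be illustrated in a few lines. The Python sketch below is only a conceptual model, not Flash's C++ implementation. The row-based version runs a filter and a projection once per record. The vectorized version stores a batch as columns and evaluates each operator over a whole column at once. In a native engine, that column-at-a-time loop is what typically enables SIMD instructions and cache-friendly memory access. The column names and values are made up.

```python
from typing import Dict, List

Row = Dict[str, float]
ColumnBatch = Dict[str, List[float]]


def process_rows(rows: List[Row]) -> List[float]:
    """Row-at-a-time: each record passes through filter and projection individually."""
    out = []
    for row in rows:
        if row["price"] > 10.0:                    # filter
            out.append(row["price"] * row["qty"])  # projection
    return out


def process_batch(batch: ColumnBatch) -> List[float]:
    """Vectorized: evaluate each operator over whole columns of a batch."""
    price, qty = batch["price"], batch["qty"]
    # Step 1: compute a selection mask over the entire price column.
    mask = [p > 10.0 for p in price]
    # Step 2: apply the projection only at the selected positions.
    return [p * q for p, q, keep in zip(price, qty, mask) if keep]


rows = [{"price": 12.0, "qty": 2.0}, {"price": 8.0, "qty": 5.0}, {"price": 20.0, "qty": 1.0}]
batch = {"price": [r["price"] for r in rows], "qty": [r["qty"] for r in rows]}
assert process_rows(rows) == process_batch(batch) == [24.0, 20.0]
```

Both functions return the same result, which reflects the compatibility goal: the execution model changes, but the query semantics do not. Python here only shows the shape of the computation. The actual speedup comes from native, columnar code.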

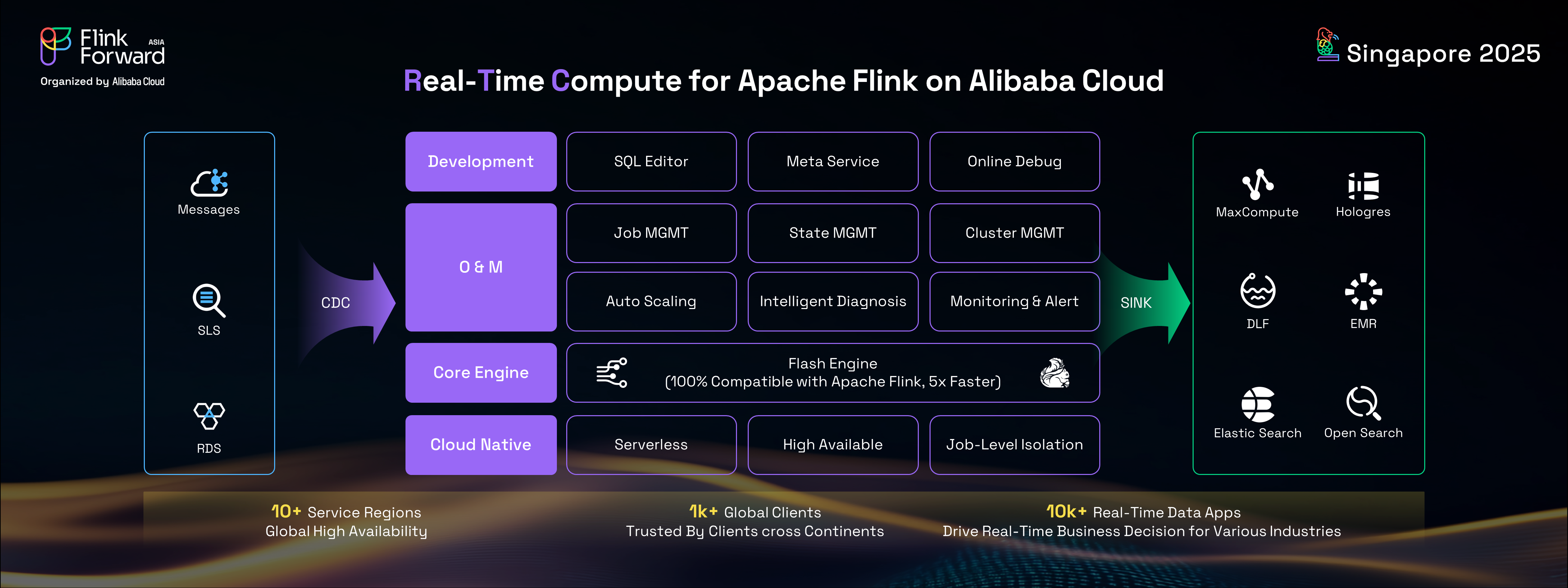

To extend the benefits of this cutting-edge streaming technology beyond Alibaba Group, the entire streaming data platform was migrated to Alibaba Cloud, offering a managed service for Apache Flink known as Real-Time Compute (RTC).

RTC is a comprehensive, one-stop real-time data platform that includes a web SQL interface, a meta-service, online debugging, and operations and management tools for Flink jobs in the cloud, all powered by the core Flash engine. To date, RTC serves over 1,000 customers in more than 10 countries and regions, running over 10,000 Flink jobs that drive real-time business decisions for customers across many industries on Alibaba Cloud.

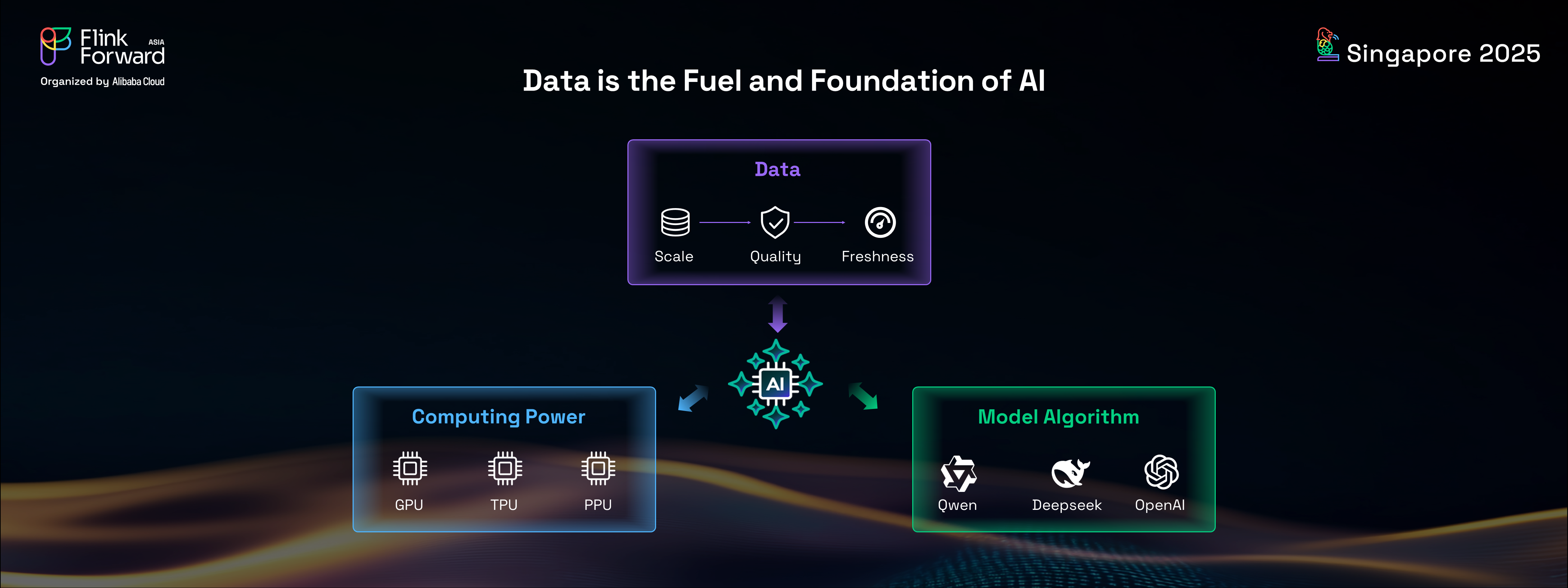

While Apache Flink has firmly established itself as the de facto standard for real-time data analytics globally, its future extends far beyond this domain. In the current era of artificial intelligence, Flink is poised to empower AI systems and applications with real-time capabilities. It is widely acknowledged that computing power, model algorithms, and data are the three foundational pillars driving the evolution of AI technology.

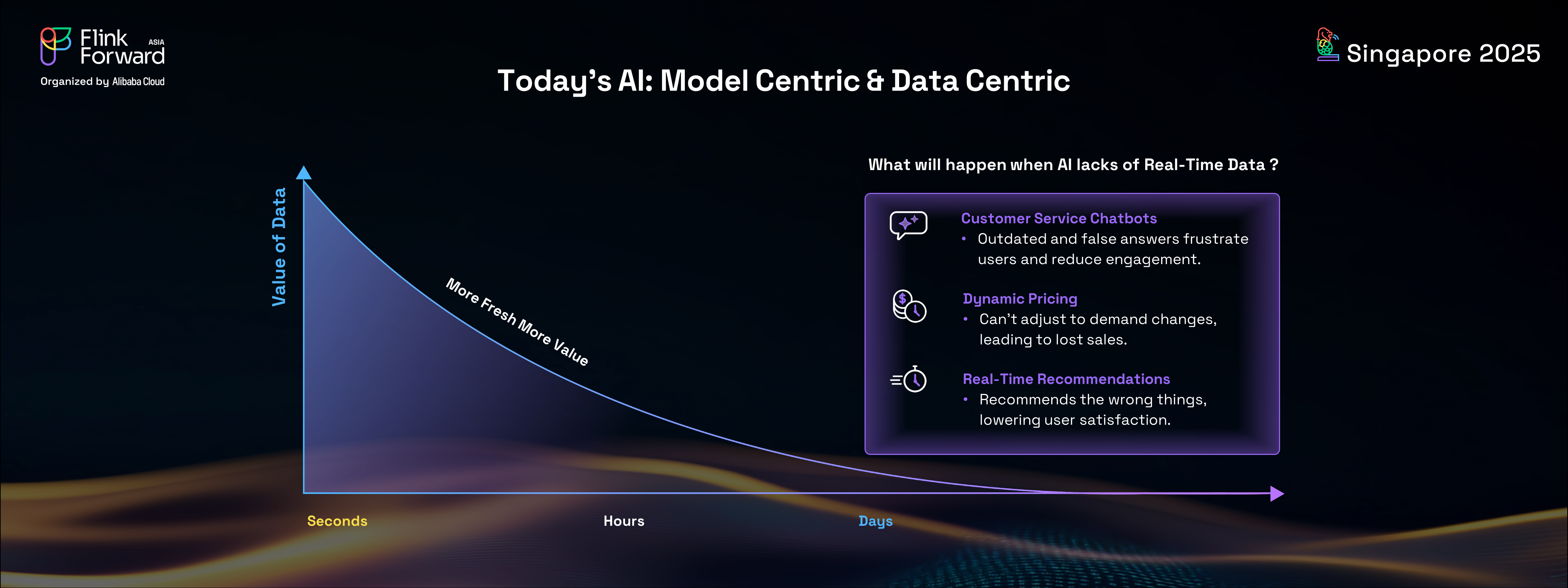

Among these, data plays a particularly crucial role. Scaling laws describe how AI models become more capable as their number of parameters grows, and modern AI models are growing in size, becoming multimodal, and demanding ever-larger training datasets. Yet the supply of public data online is limited, so future progress will hinge less on data volume and more on data quality and freshness.

As noted earlier, the fresher the data, the higher its value, and this principle holds especially true in the AI world. Consider what happens when an AI system or agent lacks fresh data. A customer service chatbot would struggle to give accurate answers without the latest information on customer profiles, orders, or query context. Likewise, a recommendation system would fail to deliver relevant suggestions if it cannot access real-time insights into customer behavior, actions, or interests. These examples show that real-time capability is an inherent characteristic of effective AI systems.

In the future, all AI applications will need to be real-time, providing customers with immediate and dynamic experiences.

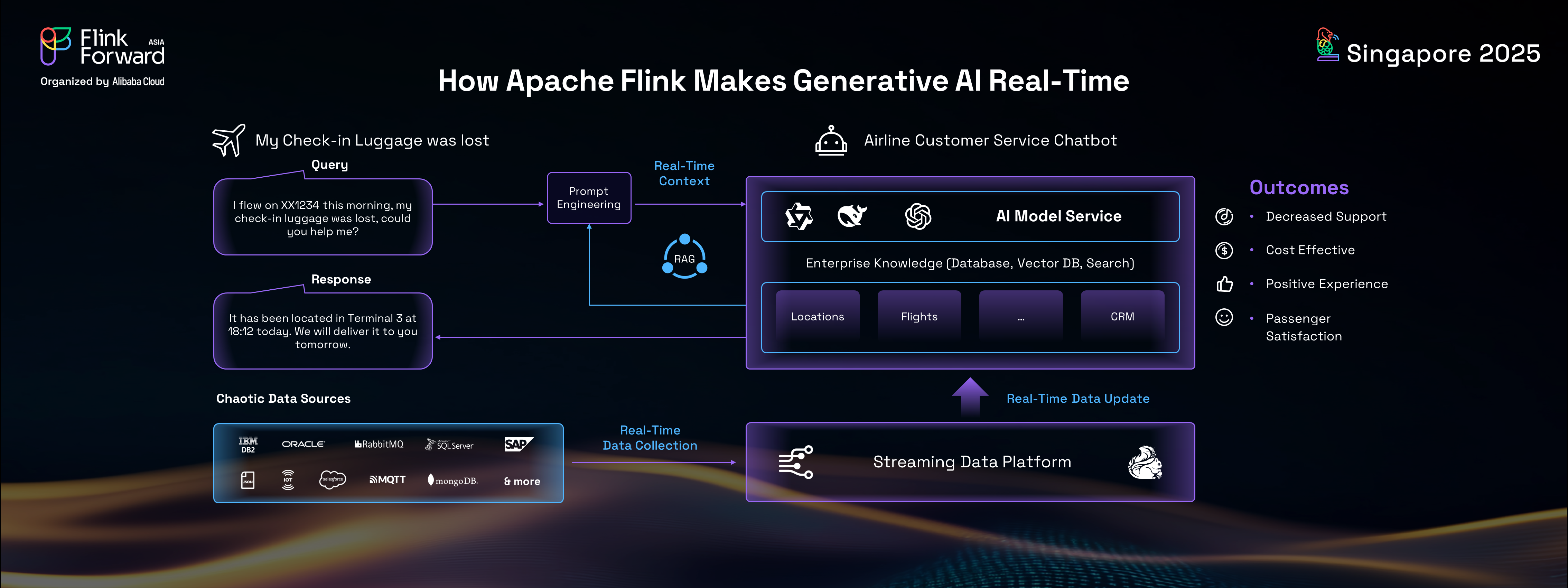

Apache Flink is uniquely positioned to make current generative AI systems real-time.

Take the example of an airline customer service chatbot. If a passenger's luggage is lost, a traditional chatbot without real-time data might be unable to provide accurate information. However, by building a robust streaming data platform powered by Flink behind the AI system, real-time data from various sources can be collected and fed into the AI, ensuring the chatbot is always updated and can deliver precise answers. This demonstrates how Flink can transform generative AI systems, enhancing user experience and accuracy.

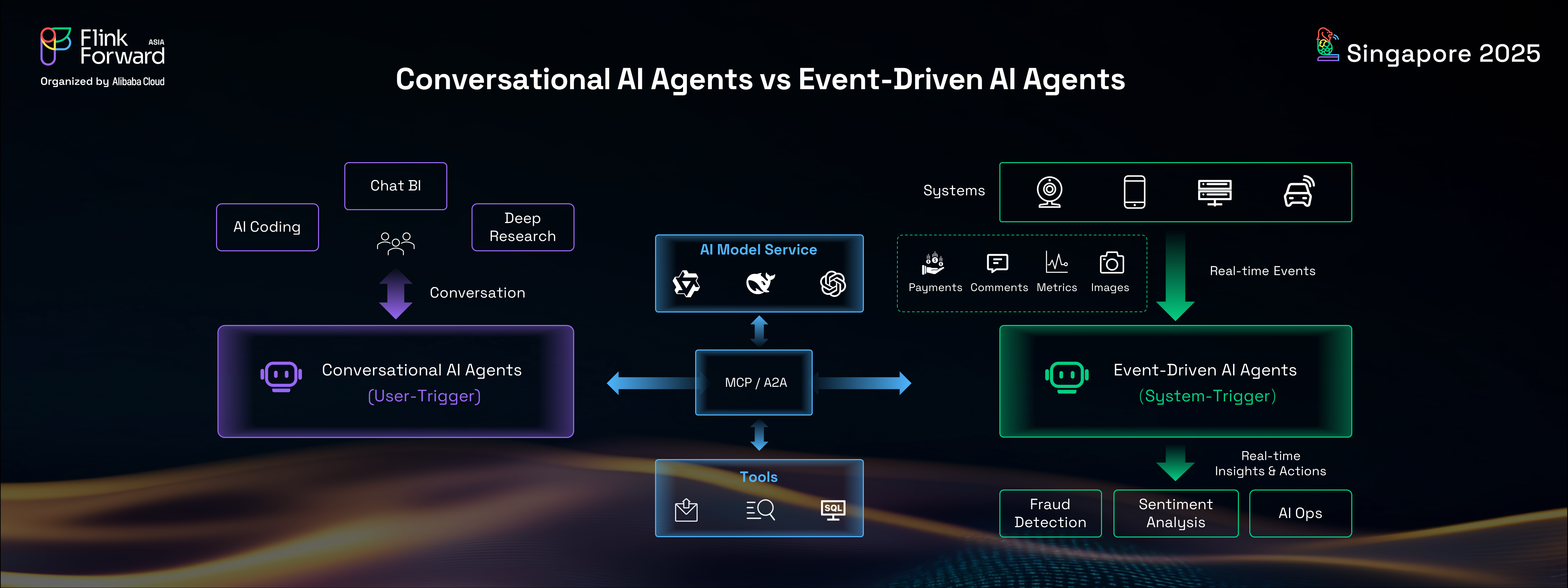

Another rapidly emerging trend in the AI landscape is the rise of AI agents, or Agentic AI. Although many types of AI agents exist, they fall into two main categories: conversational AI agents and event-driven AI agents. Conversational AI agents, such as AI coding tools, chat BI, and deep research assistants, are triggered by human users who proactively ask questions and receive answers. Event-driven AI agents, by contrast, are triggered by systems: they react to real-time events emitted from sources such as cars, mobile phones, machines, PCs, or servers, deliver intelligent insights, and take timely actions, operating in a reactive rather than proactive mode. Examples include fraud detection agents, sentiment analysis agents, and AIOps agents.

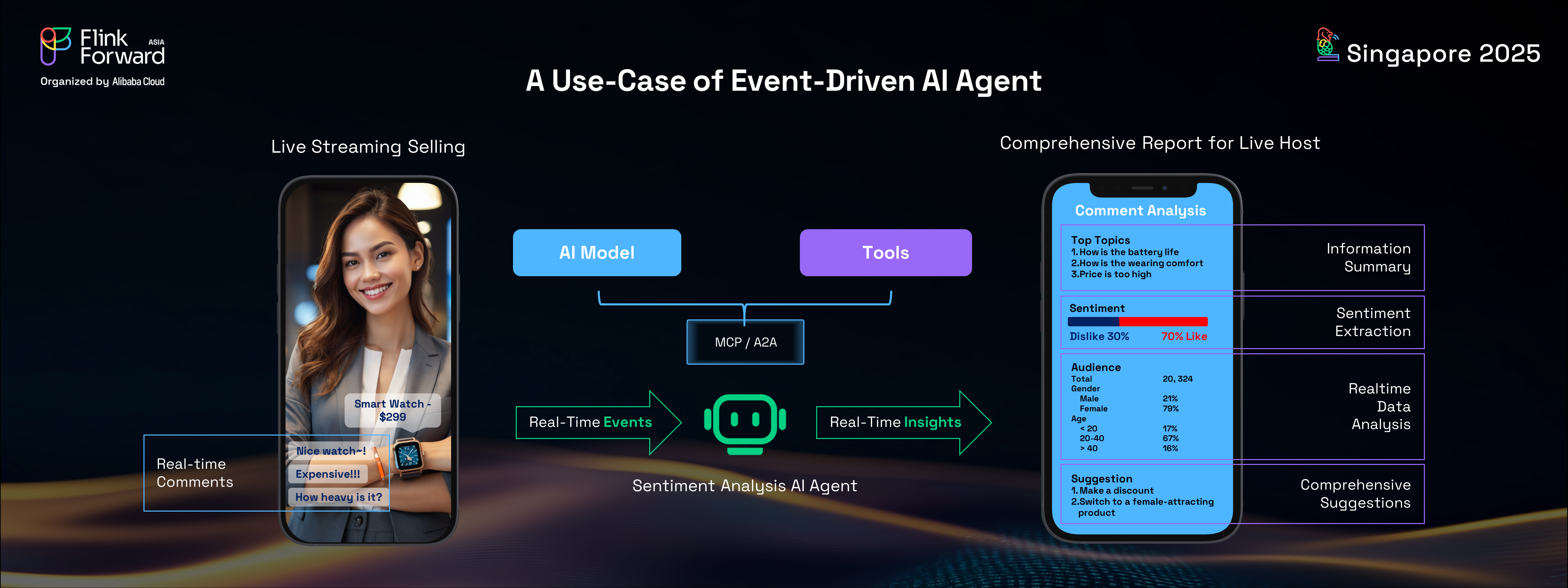

Consider a concrete use case: live streaming selling.

In this popular e-commerce trend, audiences send a continuous stream of messages, comments, and questions that refresh rapidly on screen. It's nearly impossible for a live host to keep up with or read all these messages, let alone derive insights from the audience's feedback. However, by deploying a sentiment analysis agent powered by Flink, the live host can receive a real-time, comprehensive dashboard. This dashboard would not only display traditional statistical reports, such as audience demographics by location and age, but also provide real-time sentiment analysis, indicating audience preferences, topics of discussion, and even offering dynamic suggestions. This real-time feedback loop enables the live host to adapt their strategies dynamically, capturing more audience attention and driving more transactions.
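At its core, the dashboard described above is a windowed aggregation over the comment stream. Below is a minimal Python sketch of a tumbling-window sentiment summary. The `score_sentiment` function is a hypothetical keyword stand-in for what would, in the agent described here, be a call to a language model. The 60-second window size, the word lists, and the sample comments are assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

WINDOW_SECONDS = 60  # assumed tumbling-window size

# Hypothetical keyword lexicon standing in for an LLM-based sentiment model.
POSITIVE = {"love", "great", "buy", "want"}
NEGATIVE = {"expensive", "bad", "slow", "fake"}


def score_sentiment(text: str) -> str:
    words = set(text.lower().split())
    pos, neg = len(words & POSITIVE), len(words & NEGATIVE)
    if pos > neg:
        return "positive"
    if neg > pos:
        return "negative"
    return "neutral"


def summarize(comments: Iterable[Tuple[int, str]]) -> Dict[int, Counter]:
    """Group (event_time_seconds, text) comments into tumbling windows and count sentiment."""
    windows: Dict[int, Counter] = defaultdict(Counter)
    for ts, text in comments:
        window_start = ts - ts % WINDOW_SECONDS  # align to the window boundary
        windows[window_start][score_sentiment(text)] += 1
    return dict(windows)


stream = [
    (3, "love this color"),
    (17, "too expensive"),
    (42, "want to buy two"),
    (65, "shipping is slow"),
]
for start, counts in sorted(summarize(stream).items()):
    print(start, dict(counts))
# 0 {'positive': 2, 'negative': 1}
# 60 {'negative': 1}
```

This sketch processes the sample in one pass. A Flink job would express the same logic with event-time tumbling windows and watermarks, so that comments arriving late or out of order are still assigned to the correct window.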

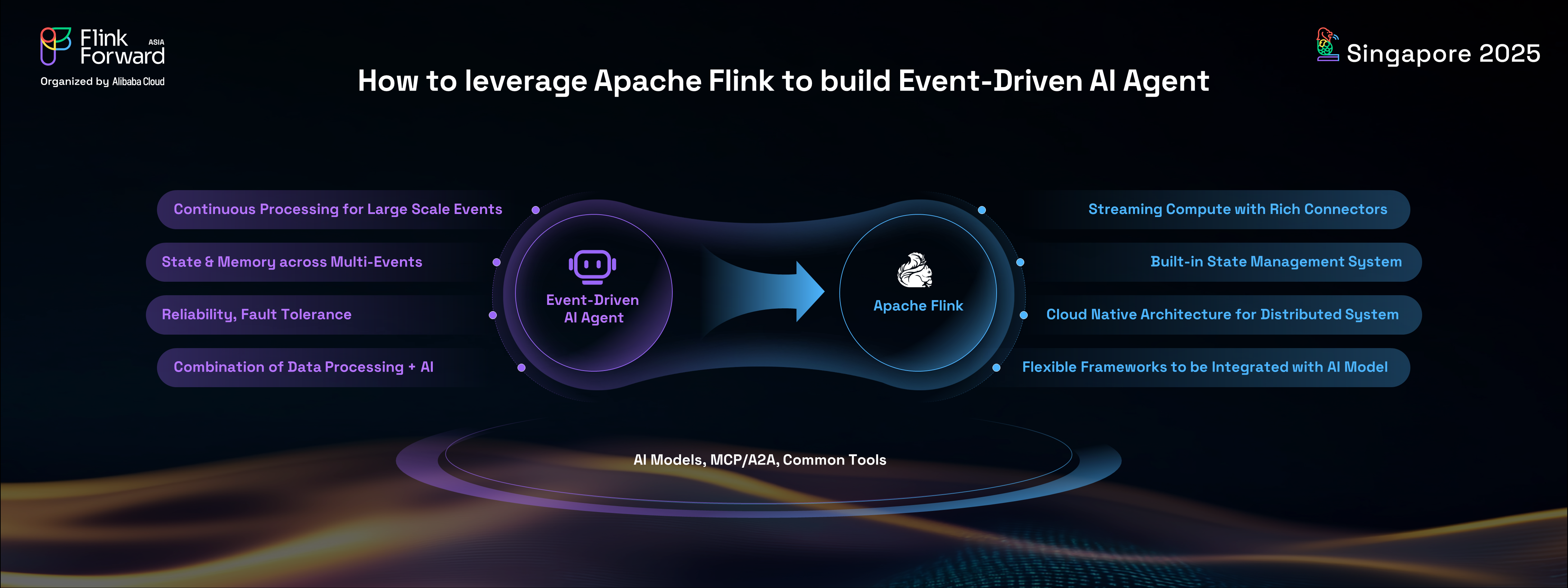

Building such event-driven AI agents requires a powerful distributed runtime framework.

Beyond core AI models, the Model Context Protocol (MCP), and general tools, these agents need robust capabilities for continuously processing large-scale events, collecting events efficiently, and managing state, or AI memory, across multiple events. Reliability and fault tolerance are also paramount for such online distributed systems. Given its strengths as a proven event-driven data processor, Apache Flink is an ideal choice for building the real-time runtime framework behind event-driven AI agents.
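The requirement for AI memory across multiple events maps naturally onto keyed state. Each key, for example a user or a device, has its own state, which the agent consults when the next event for that key arrives. The sketch below mimics this pattern in plain Python with a bounded per-key history. `KeyedMemory`, `max_events`, and the event strings are hypothetical. A real Flink job would keep this state in a state backend so that checkpoints can restore it after a failure.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List


class KeyedMemory:
    """Bounded per-key event history, mimicking keyed state in a stream processor."""

    def __init__(self, max_events: int = 3) -> None:
        self._state: Dict[str, Deque[str]] = defaultdict(lambda: deque(maxlen=max_events))

    def on_event(self, key: str, event: str) -> List[str]:
        """Record an event and return the context the agent would reason over."""
        history = self._state[key]
        history.append(event)  # the oldest event is evicted once maxlen is reached
        return list(history)


memory = KeyedMemory(max_events=3)
for key, event in [
    ("user-a", "viewed phone"),
    ("user-b", "opened app"),
    ("user-a", "added case to cart"),
    ("user-a", "asked about delivery"),
    ("user-a", "abandoned cart"),
]:
    context = memory.on_event(key, event)

print(context)  # ['added case to cart', 'asked about delivery', 'abandoned cart']
```

Keeping memory per key means events for different users never mix. It also lets a stream processor partition the work, sending all events for a key to the same parallel instance.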

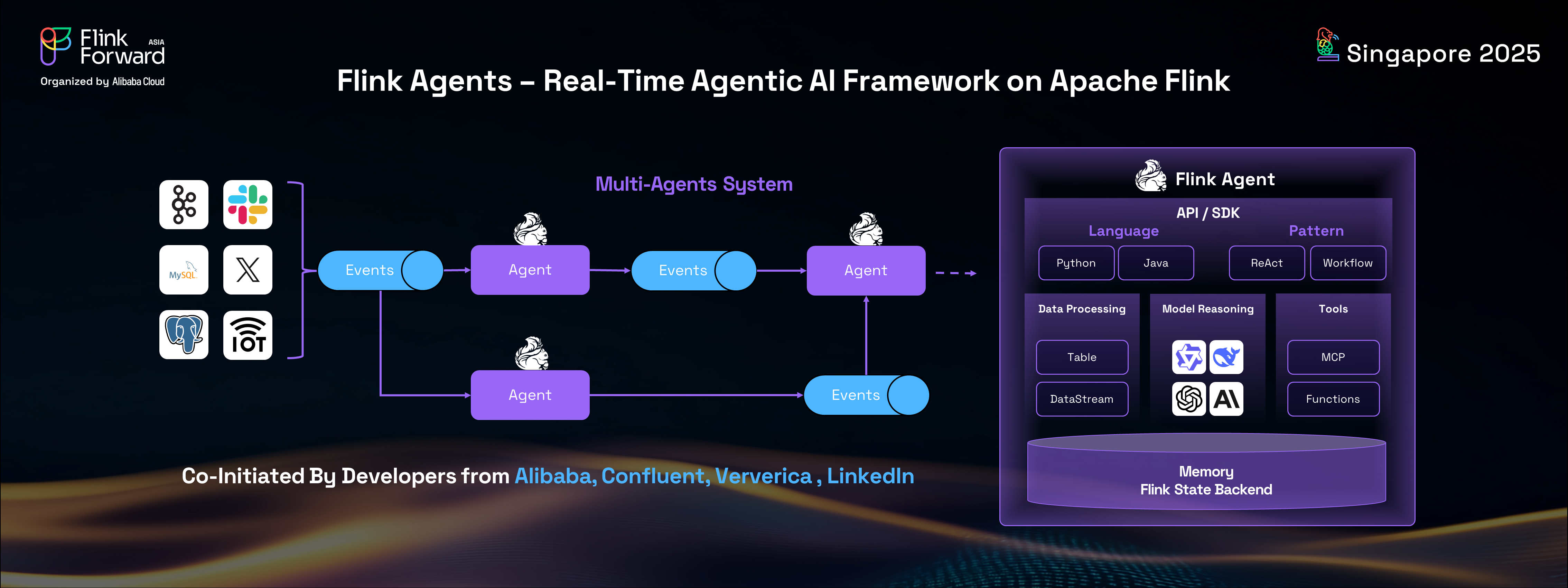

To simplify the development of event-driven AI agents on Apache Flink, developers from Alibaba, Confluent, Ververica, and LinkedIn have co-initiated an open-source project called Flink Agents.

This project aims to build an agentic AI framework on top of Apache Flink, tailored specifically for event-driven AI agents. The framework offers both Java and Python APIs and supports both static workflow and ReAct patterns for building AI agents. Using Flink's rich connectors, CDC, and stream processing capabilities, Flink Agents makes it easy for developers to collect and handle real-time events and deliver immediate insights and suggestions to users. Flink's stateful streaming engine, with its built-in state backend and state management system, serves as a robust AI memory across multiple events, further solidifying its role in this domain.
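For readers unfamiliar with the ReAct pattern mentioned above, the loop below shows its general shape. The model alternates between reasoning about the current transcript, choosing a tool action, and observing the result, until it produces a final answer. This is a generic sketch, not the Flink Agents API. The scripted `fake_model`, the `lookup_order` tool, and the transcript format are all hypothetical stand-ins for an LLM call and real tools.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tool registry: tool name -> function taking one string argument.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: f"order {order_id} shipped yesterday",
}


def fake_model(transcript: List[str]) -> Tuple[str, str]:
    """Scripted stand-in for an LLM: returns (action, argument)."""
    if not any(line.startswith("Observation:") for line in transcript):
        return "lookup_order", "A123"  # first step: gather facts with a tool
    return "final_answer", "Your order A123 shipped yesterday."


def react(question: str, max_steps: int = 5) -> str:
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        action, arg = fake_model(transcript)  # reason and pick an action
        if action == "final_answer":
            return arg
        observation = TOOLS[action](arg)      # act
        transcript.append(f"Action: {action}({arg})")
        transcript.append(f"Observation: {observation}")  # observe
    return "Stopped: step limit reached."


print(react("Where is my order A123?"))  # Your order A123 shipped yesterday.
```

The `max_steps` bound keeps a misbehaving model from looping forever. In an event-driven setting, the loop would be triggered by an incoming event rather than by a user's question.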

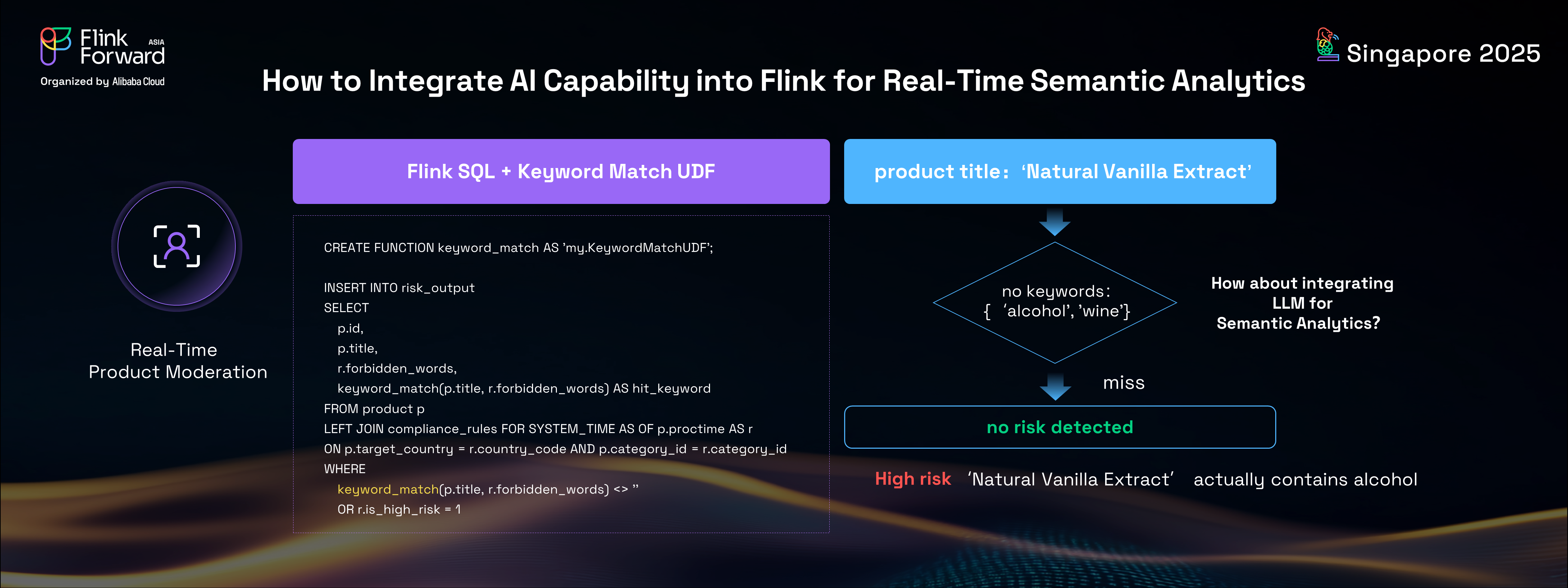

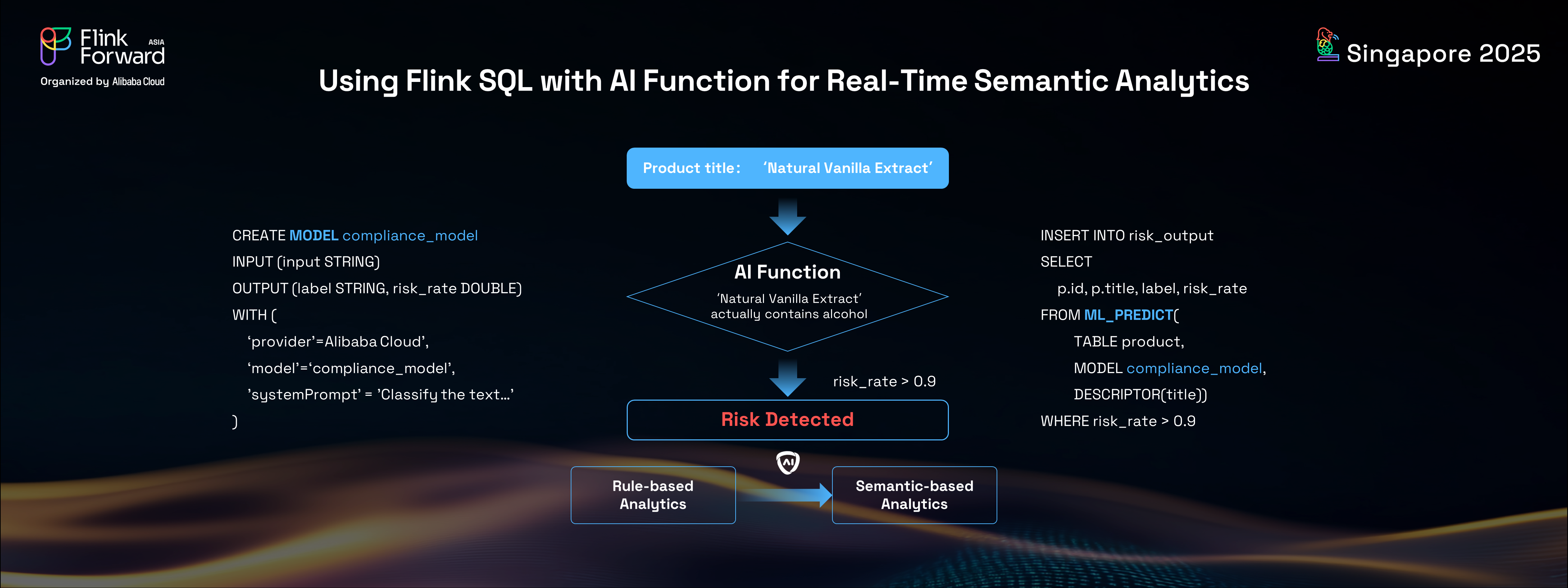

In the final part of this discussion, we explore the integration of powerful AI capabilities, particularly generative AI, into Apache Flink. With these capabilities, Flink can perform real-time semantic analytics, opening up new possibilities for intelligent data processing. Consider a concrete use case: product moderation on an e-commerce platform. Every product must pass strict moderation before it is listed to ensure compliance. Traditionally, this meant writing Flink SQL that joins the product publishing stream with a compliance rules table and uses string matching functions to check product titles for prohibited keywords. This rule-based approach works, but it is limited: it only flags titles that contain an explicitly listed keyword.
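The traditional rule-based check can be sketched as follows. In Flink SQL, the check is a join between the product stream and a rules table plus a string-matching predicate. The plain-Python version below reproduces the same logic with a hypothetical in-memory `RULES` table and made-up product titles. The third product illustrates the weakness: its title describes a counterfeit item without using any listed keyword.

```python
from typing import Dict, List

# Hypothetical compliance rules table: category -> prohibited keywords.
RULES: Dict[str, List[str]] = {
    "health": ["miracle cure", "guaranteed weight loss"],
    "electronics": ["replica", "counterfeit"],
}


def violations(product: Dict[str, str]) -> List[str]:
    """Return the prohibited keywords found in the product title (case-insensitive)."""
    title = product["title"].lower()
    return [kw for kw in RULES.get(product["category"], []) if kw in title]


products = [
    {"id": "p1", "category": "health", "title": "Miracle Cure Herbal Tea"},
    {"id": "p2", "category": "electronics", "title": "Replica Smartwatch Pro"},
    # Semantically a counterfeit listing, but no keyword matches.
    {"id": "p3", "category": "electronics", "title": "Same-as-original brand watch, 1:1 copy"},
]
for p in products:
    print(p["id"], violations(p))
# p1 ['miracle cure']
# p2 ['replica']
# p3 []
```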

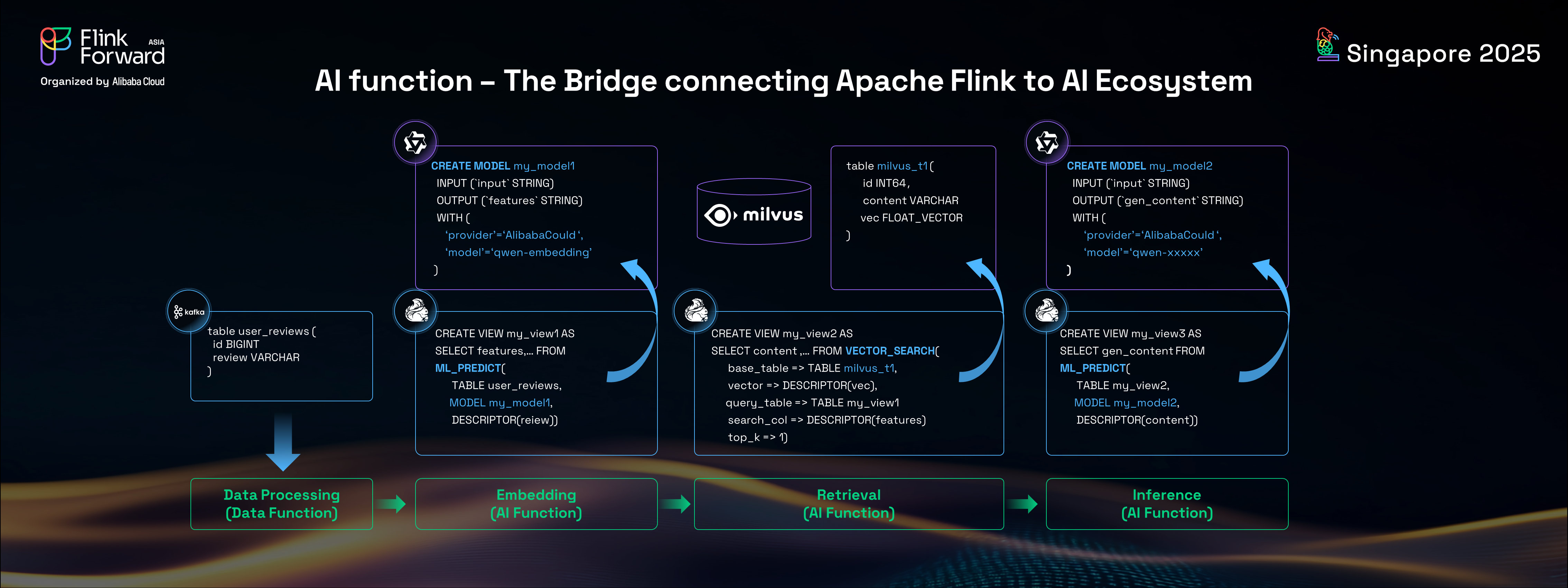

In the current AI era, there is a growing preference for intelligent, semantics-based solutions. By integrating generative AI capabilities, specifically large language models (LLMs), into Apache Flink, Flink users can perform real-time semantic analytics with unprecedented ease. To support this, Flink 2.0 introduces a new feature: AI functions in Flink SQL.

This feature lets Flink users call AI language model services and perform vector searches directly within Flink SQL statements, alongside traditional SQL data processing. Developers can define an AI model in Flink SQL, specifying options such as the model name, endpoint, API key, and system prompt. Models compatible with the OpenAI protocol will be supported.
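Because the supported models follow the OpenAI protocol, each inference call amounts to an HTTP request in the chat-completions format. The request is built from the model options listed above (endpoint, API key, model name, system prompt) and the value being processed. The Python sketch below only constructs such a request, so its shape is visible. It does not show how Flink implements its AI functions. `ModelOptions` and `build_request` are hypothetical helpers, and the endpoint URL, key, model name, and prompt are placeholders.

```python
import json
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class ModelOptions:
    # Mirrors the options named in the text; the values used below are placeholders.
    endpoint: str
    api_key: str
    model: str
    system_prompt: str


def build_request(opts: ModelOptions, user_input: str) -> Tuple[str, Dict[str, str], str]:
    """Return (url, headers, json_body) for an OpenAI-style chat completions call."""
    headers = {
        "Authorization": f"Bearer {opts.api_key}",
        "Content-Type": "application/json",
    }
    body = {
        "model": opts.model,
        "messages": [
            {"role": "system", "content": opts.system_prompt},
            {"role": "user", "content": user_input},
        ],
    }
    return opts.endpoint, headers, json.dumps(body)


opts = ModelOptions(
    endpoint="https://example.com/v1/chat/completions",  # placeholder endpoint
    api_key="YOUR_API_KEY",
    model="your-model-name",
    system_prompt="Classify the product title as COMPLIANT or NON_COMPLIANT.",
)
url, headers, body = build_request(opts, "Replica Smartwatch Pro")
print(url)
print(json.loads(body)["messages"][1])
```

Sending the request would be a single POST with any HTTP client. In Flink SQL, the same configuration lives in the model definition, and the engine makes the calls for each row being processed.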

Developers can then call these AI model services for real-time streaming inference directly within ordinary Flink SQL. For RAG (Retrieval-Augmented Generation) solutions, developers can also compute embeddings and perform vector searches in real time within SQL statements. This Flink AI SQL capability combines traditional relational algebra with generative AI, enabling real-time semantic analytics on both structured and unstructured data.

Returning to the product moderation example, the new Flink AI solution solves the same problem in a more advanced way. By defining a model and calling the AI function ML_PREDICT in Flink SQL, we can semantically analyze product titles to check for compliance. This semantics-based approach produces better results than traditional rule-based methods and can even detect non-compliant products whose titles contain no explicitly prohibited keywords.
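The vector-search step of a RAG pipeline comes down to ranking stored embeddings by their similarity to a query embedding. The sketch below shows brute-force top-k retrieval by cosine similarity in plain Python. The three-dimensional vectors and policy snippets are toy assumptions standing in for real model-generated embeddings. A production system would typically query an approximate nearest-neighbor index in a vector store rather than scan every entry.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k documents whose embeddings are most similar to the query."""
    scored = [(doc, cosine(query, vec)) for doc, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings" for compliance-policy snippets.
index = {
    "no counterfeit goods": [0.9, 0.1, 0.0],
    "no medical claims": [0.0, 0.2, 0.9],
    "no replica brands": [0.8, 0.3, 0.1],
}
query = [0.85, 0.2, 0.05]  # pretend embedding of "1:1 copy brand watch"
for doc, score in top_k(query, index):
    print(f"{doc}: {score:.3f}")
```

The retrieved snippets would then go into the model prompt as context. Retrieving the relevant policies first, rather than sending every rule, keeps prompts small and grounded.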

In summary, Apache Flink is not only thriving in real-time data analytics but is also becoming a critical component of the real-time infrastructure for current AI systems and applications. While most AI models today are trained offline and updated slowly over days, weeks, or even months, the future holds a different promise. I believe that in the future, most powerful AI models will be trained and updated in real-time, allowing AI systems to learn continuously, much like humans. At that juncture, Apache Flink, as a real-time computing engine, will play an even more significant role in the AI world, empowering AI models, systems, and applications to reach their full potential. Thank you.
