With the advent of Docker and Kubernetes, a large monolithic application can be split into multiple independently deployed microservices, each packaged and run in its own container. These services communicate with each other to complete a functional module. The benefits of the microservices model and containerized deployment are clear: looser coupling between services, easier development and maintenance, and more efficient use of computing resources. The microservices model also has disadvantages:

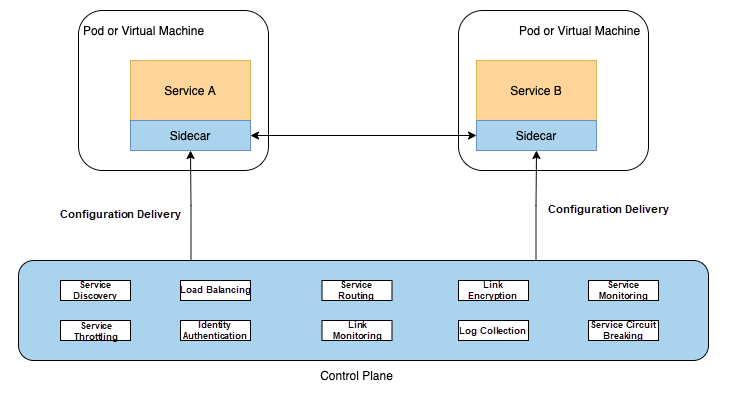

Service Mesh was created to solve these pain points. Take the classic sidecar mode as an example. Service Mesh injects a sidecar container into each business pod to proxy and govern its traffic. The governance capability of the framework moves into the sidecar and is decoupled from the business system, which makes unified traffic control and monitoring across languages and protocols straightforward. By stripping the governance capability out of the SDK and running it as an independent process, Service Mesh removes the strong dependence on the SDK. Developers can focus on the business while the basic framework capability sinks into the infrastructure, as shown in the following figure (from Dubbo’s official website):

The classic sidecar mesh deployment architecture has many advantages, such as reduced SDK coupling and low intrusion into business code. However, the additional proxy layer brings the following problems:

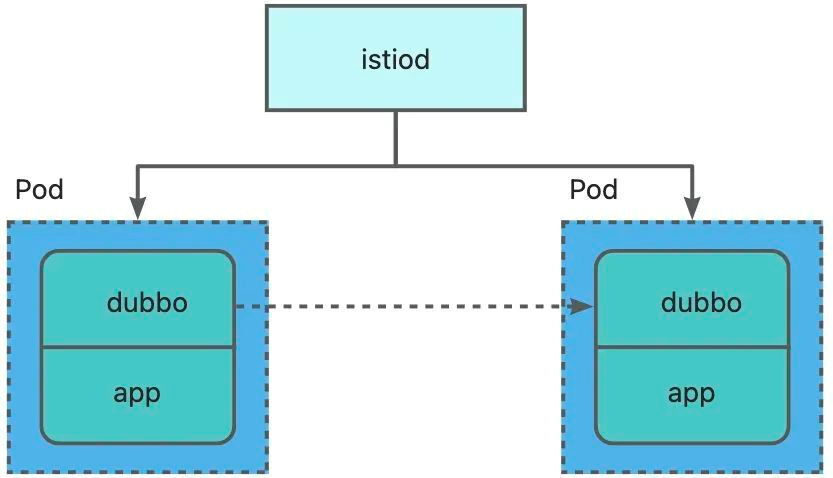

Proxyless Service Mesh was created to solve these pain points. A traditional Service Mesh intercepts all business network traffic through the proxy, and the proxy watches the configuration resources issued by the control plane to steer traffic as required. Take Istio as an example. In Proxyless mode, the application communicates directly with Istiod, the control plane process. Istiod watches Kubernetes resources (such as Service and Endpoint) and distributes them to the RPC frameworks through the xDS protocol. The RPC framework then routes requests itself, which provides capabilities such as service discovery and service governance.

The Dubbo community is the first community in China to explore Proxyless Service Mesh. Compared with the sidecar-based Service Mesh, the Proxyless mode has a lower cost and is a better choice for small and medium-sized enterprises. Dubbo 3.1 supports the Proxyless mode by parsing the xDS protocol. xDS is the generic name for a family of discovery services. Applications can dynamically obtain Listener, Route, Cluster, Endpoint, and Secret configurations through the xDS APIs.

Based on the Proxyless mode, Dubbo communicates directly with the control plane to implement unified traffic control, service governance, observability, and security. This avoids the performance loss and deployment complexity caused by the sidecar mode.
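To make the resource kinds concrete, here is a minimal sketch that maps each discovery service named above to the type URL used by the Envoy v3 xDS API. The enum `XdsResourceType` and its `fromTypeUrl` helper are hypothetical names chosen for illustration; they are not classes from Dubbo.

```java
import java.util.Arrays;
import java.util.Optional;

/** Hypothetical enum (not a Dubbo class): xDS resource kinds and their Envoy v3 type URLs. */
enum XdsResourceType {
    LISTENER("LDS", "type.googleapis.com/envoy.config.listener.v3.Listener"),
    ROUTE("RDS", "type.googleapis.com/envoy.config.route.v3.RouteConfiguration"),
    CLUSTER("CDS", "type.googleapis.com/envoy.config.cluster.v3.Cluster"),
    ENDPOINT("EDS", "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment"),
    SECRET("SDS", "type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.Secret");

    private final String api;
    private final String typeUrl;

    XdsResourceType(String api, String typeUrl) {
        this.api = api;
        this.typeUrl = typeUrl;
    }

    /** Short name of the discovery service, for example "LDS". */
    String api() {
        return api;
    }

    /** Type URL carried in discovery requests and responses. */
    String typeUrl() {
        return typeUrl;
    }

    /** Finds the resource kind for the type URL of a discovery response, if it is known. */
    static Optional<XdsResourceType> fromTypeUrl(String typeUrl) {
        return Arrays.stream(values())
                .filter(t -> t.typeUrl.equals(typeUrl))
                .findFirst();
    }
}
```

A client can use such a lookup to route an incoming response to the matching LDS, RDS, or EDS handler.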

```plantuml
@startuml
' ====== Adjust style ===============
' Single state definition example: state uncommitted #70CFF5 ##Black
' "hide footbox" hides the participant boxes at the bottom of the sequence diagram.
' "autoactivate on" activates participants automatically.
skinparam sequence {
  ArrowColor black
  LifeLineBorderColor black
  LifeLineBackgroundColor #70CFF5
  ParticipantBorderColor black
  ParticipantBackgroundColor #70CFF5
}

' ====== Define process ===============
activate ControlPlane
activate DubboRegistry
autonumber 1
ControlPlane <-> DubboRegistry : config pull and push
activate XdsServiceDiscoveryFactory
activate XdsServiceDiscovery
activate PilotExchanger
DubboRegistry -> XdsServiceDiscoveryFactory : request
XdsServiceDiscoveryFactory --> DubboRegistry : get registry configuration
XdsServiceDiscoveryFactory -> XdsChannel : return the list information (if the data has not been imported, it is not visible)
XdsServiceDiscoveryFactory -> XdsServiceDiscovery : init xDS service discovery
XdsServiceDiscovery -> PilotExchanger : init PilotExchanger

alt PilotExchanger
  PilotExchanger -> XdsChannel : init XdsChannel
  XdsChannel --> PilotExchanger : return
  PilotExchanger -> PilotExchanger : get cert pair
  PilotExchanger -> PilotExchanger : init ldsProtocol
  PilotExchanger -> PilotExchanger : init rdsProtocol
  PilotExchanger -> PilotExchanger : init edsProtocol
end

alt PilotExchanger
  XdsServiceDiscovery --> XdsServiceDiscovery : parse xDS protocol
  XdsServiceDiscovery --> XdsServiceDiscovery : init node info based on EDS
  XdsServiceDiscovery --> XdsServiceDiscovery : write the SLB and routing rules of RDS and CDS into the running information of the node
  XdsServiceDiscovery --> XdsServiceDiscovery : send back to the service introspection framework to build the invoker
end

deactivate ControlPlane
deactivate XdsServiceDiscovery
deactivate XdsServiceDiscoveryFactory
@enduml
```

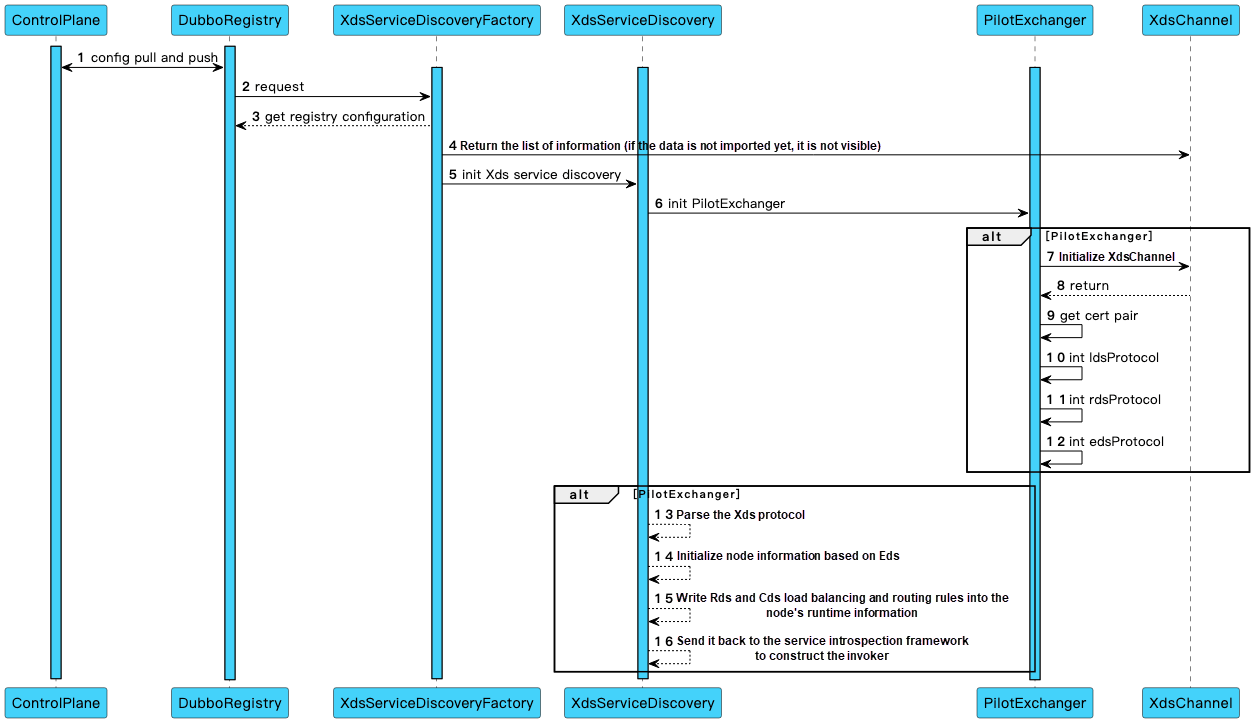

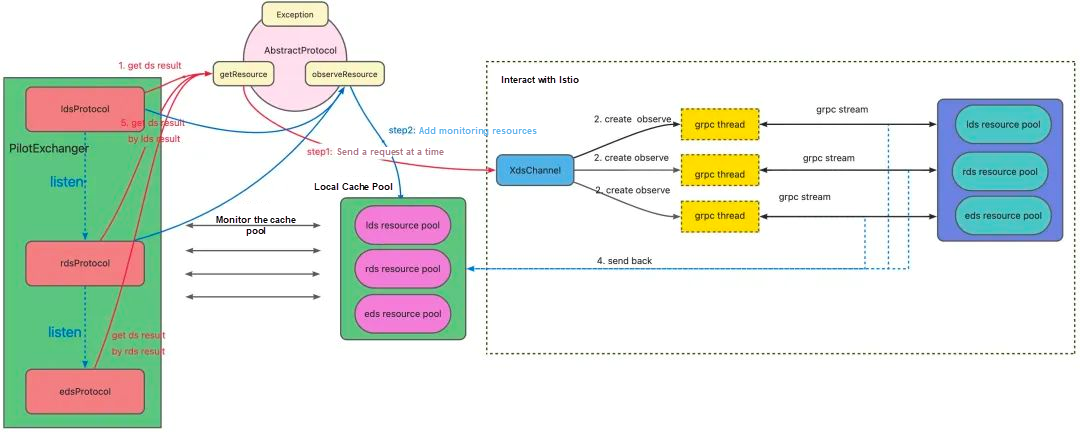

On the whole, the interaction sequence between the Istio control plane and Dubbo is shown in the diagram above. The main xDS processing logic in Dubbo lives in PilotExchanger and in the implementations of the individual xDS protocols (LDS, RDS, CDS, and EDS). PilotExchanger is responsible for chaining them together, and it contains three main pieces of logic:

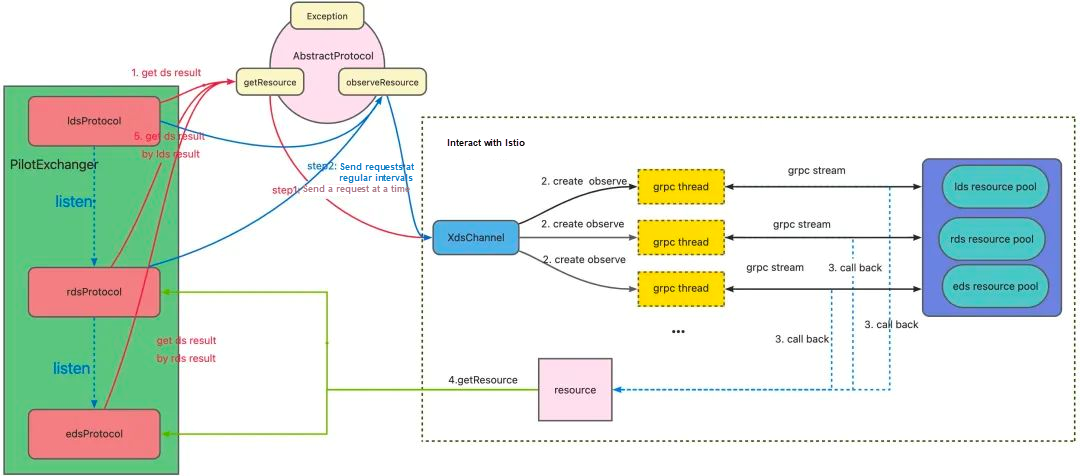

Take LDS and RDS as an example. PilotExchanger invokes the getResource() method of LDS, which establishes a connection with Istio, sends the request, and parses the response from Istio. The parsed resource is then used as the input parameter when RDS invokes its own getResource() method to send a request to Istio. When LDS changes, the observeResource() method of LDS triggers an update of both LDS and RDS. The same relationship holds between RDS and EDS. The existing interactions are listed below. The preceding process corresponds to the red line in the diagram.
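The chaining between LDS and RDS can be illustrated with a small, self-contained sketch. `FakeProtocol` and `ChainedExchanger` are hypothetical stand-ins for the protocol classes and PilotExchanger, the control plane is simulated by a plain function, and `listener-0` is an invented listener name. The sketch only shows the data flow, not Dubbo's actual implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Consumer;
import java.util.function.Function;

/** Hypothetical stand-in for one xDS protocol (LDS or RDS); not Dubbo's actual class. */
class FakeProtocol<T> {
    private final Function<Set<String>, T> controlPlane; // simulates the round trip to Istio
    private final List<Consumer<T>> observers = new ArrayList<>();
    private Set<String> watched = Set.of();

    FakeProtocol(Function<Set<String>, T> controlPlane) {
        this.controlPlane = controlPlane;
    }

    /** Sends the resource names to the simulated control plane and returns the parsed result. */
    T getResource(Set<String> names) {
        watched = names;
        return controlPlane.apply(names);
    }

    /** Registers a callback that fires when the simulated control plane reports a change. */
    void observeResource(Set<String> names, Consumer<T> consumer) {
        watched = names;
        observers.add(consumer);
    }

    /** Test hook: re-reads the watched names and notifies every observer. */
    void simulateChange() {
        T latest = controlPlane.apply(watched);
        observers.forEach(o -> o.accept(latest));
    }
}

/** Chains LDS into RDS in the way the text describes for PilotExchanger. */
class ChainedExchanger {
    private Map<String, String> routes; // route name -> target cluster

    ChainedExchanger(FakeProtocol<Set<String>> lds, FakeProtocol<Map<String, String>> rds) {
        Set<String> listenerNames = Set.of("listener-0"); // hypothetical listener name
        // First fetch: the parsed LDS result is the input of the RDS request.
        this.routes = rds.getResource(lds.getResource(listenerNames));
        // Later LDS changes trigger a new RDS fetch.
        lds.observeResource(listenerNames, routeNames -> this.routes = rds.getResource(routeNames));
    }

    Map<String, String> routes() {
        return routes;
    }
}
```

The same pattern would repeat one level down, with the RDS result feeding the EDS request.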

After successfully obtaining resources for the first time, each DS keeps sending requests to Istio through a scheduled task, parses the responses, and stays in sync with Istio. This is how the control plane exercises traffic control, service governance, and observability. The process corresponds to the blue line in the preceding diagram.
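The scheduled-task approach can be sketched with the JDK's `ScheduledExecutorService`. `PollingWatcher` is a hypothetical class, the `fetcher` function stands in for the request to Istio, and the polling period is an assumed tuning knob. This illustrates the polling pattern only and is not Dubbo's code.

```java
import java.util.Map;
import java.util.Set;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;
import java.util.function.Function;

/** Hypothetical sketch: polls a resource fetcher on a schedule and reports only real changes. */
class PollingWatcher<T> implements AutoCloseable {
    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    private final Function<Set<String>, Map<String, T>> fetcher; // simulates the request to Istio
    private final Set<String> names;
    private final Consumer<Map<String, T>> onChange;
    private volatile Map<String, T> last;

    PollingWatcher(Function<Set<String>, Map<String, T>> fetcher,
                   Set<String> names,
                   Consumer<Map<String, T>> onChange) {
        this.fetcher = fetcher;
        this.names = names;
        this.onChange = onChange;
        this.last = fetcher.apply(names); // initial fetch
    }

    /** One polling round: fetch, compare with the previous result, notify on a difference. */
    void pollOnce() {
        Map<String, T> current = fetcher.apply(names);
        if (!current.equals(last)) {
            last = current;
            onChange.accept(current);
        }
    }

    /** Starts the periodic task with the given delay between rounds. */
    void start(long periodMillis) {
        scheduler.scheduleWithFixedDelay(this::pollOnce, periodMillis, periodMillis, TimeUnit.MILLISECONDS);
    }

    @Override
    public void close() {
        scheduler.shutdownNow();
    }
}
```

Every round costs a full request and response even when nothing has changed, which is one reason a push-based persistent connection is attractive.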

The Dubbo Proxyless mode has been validated and proven reliable. However, the existing implementation has the following problems:

The transformed interaction logic is shown below:

Currently, Dubbo uses three resource types: LDS, RDS, and EDS. Within one process, the resources watched for each of the three types correspond to the watch list that Istio caches for that process. We therefore design a local resource cache pool for each of the three types. When Dubbo needs a resource, it queries the cache pool first and returns the result directly on a hit. On a miss, Dubbo aggregates the resource names already in the local cache pool with the newly requested ones and sends the aggregated list to Istio, which updates its watch list accordingly. The cache pool is shown below, where the key is the name of a single resource and T is the result type returned by the respective DS.

```java
protected Map<String, T> resourcesMap = new ConcurrentHashMap<>();
```

After the cache pool is built, a structure or container that monitors the cache pool is required. Here, we design it in the form of a Map:

```java
protected Map<Set<String>, List<Consumer<Map<String, T>>>> consumerObserveMap = new ConcurrentHashMap<>();
```

The key is the set of resources to be monitored, and the value is a List, which allows repeated subscriptions to the same resources. Each item in the List is a Consumer (introduced in JDK 8), which can be used to pass a function or behavior. Its input parameter is a Map whose keys are the individual monitored resources, so the values can be easily retrieved from the cache pool. As mentioned above, PilotExchanger connects the entire process, and the update relationship between different DSs can be passed along through consumers. The following code shows how observeResource of LDS is used to monitor changes:

```java
// Listener registration.
void observeResource(Set<String> resourceNames, Consumer<Map<String, T>> consumer, boolean isReConnect);

// Observe LDS updates.
ldsProtocol.observeResource(ldsResourcesName, (newListener) -> {
    // The LDS data has changed.
    if (!newListener.equals(listenerResult)) {
        // Update the LDS data.
        this.listenerResult = newListener;
        // Trigger the RDS listener.
        if (isRdsObserve.get()) {
            createRouteObserve();
        }
    }
}, false);
```

After the stream mode is changed to a persistent connection, we need to store the consumer behavior in the local cache pool. After receiving a push request from Dubbo, Istio refreshes its cached resource list and returns a response. The response returned by Istio is the aggregated result. After Dubbo receives it, Dubbo splits the response into finer-grained resources and pushes them to the corresponding Dubbo applications to notify them of the change.
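The cache pool and the listener map described in this section can be sketched together in one small class. `ResourcePoolSketch`, its `controlPlane` function (a simulated Istio round trip), and the private `slice` helper are hypothetical names introduced for illustration. The field names `resourcesMap` and `consumerObserveMap` follow the article, but the logic is an assumption about how the pieces fit, not the Dubbo source.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;
import java.util.function.Function;

/** Hypothetical sketch of a cache pool plus listener map; not Dubbo's actual class. */
class ResourcePoolSketch<T> {
    private final Map<String, T> resourcesMap = new ConcurrentHashMap<>();
    private final Map<Set<String>, List<Consumer<Map<String, T>>>> consumerObserveMap =
            new ConcurrentHashMap<>();
    private final Function<Set<String>, Map<String, T>> controlPlane; // simulated Istio round trip

    ResourcePoolSketch(Function<Set<String>, Map<String, T>> controlPlane) {
        this.controlPlane = controlPlane;
    }

    /** Cache-first lookup; on a miss, sends the cached names plus the new ones. */
    synchronized Map<String, T> getResource(Set<String> names) {
        if (!resourcesMap.keySet().containsAll(names)) {
            Set<String> aggregated = new HashSet<>(resourcesMap.keySet());
            aggregated.addAll(names);
            onResponse(controlPlane.apply(aggregated));
        }
        return slice(names, resourcesMap);
    }

    /** Registers a consumer; the List value allows repeated subscriptions to one key. */
    void observeResource(Set<String> names, Consumer<Map<String, T>> consumer) {
        consumerObserveMap.computeIfAbsent(Set.copyOf(names), k -> new CopyOnWriteArrayList<>())
                .add(consumer);
    }

    /** Handles an aggregated response: refresh the pool, then notify each subscription. */
    void onResponse(Map<String, T> aggregated) {
        resourcesMap.putAll(aggregated);
        consumerObserveMap.forEach((names, consumers) -> {
            Map<String, T> part = slice(names, aggregated);
            if (!part.isEmpty()) {
                consumers.forEach(c -> c.accept(part));
            }
        });
    }

    /** Copies only the requested names, skipping those absent from the source. */
    private static <V> Map<String, V> slice(Set<String> names, Map<String, V> source) {
        Map<String, V> result = new HashMap<>();
        for (String name : names) {
            V value = source.get(name);
            if (value != null) {
                result.put(name, value);
            }
        }
        return result;
    }
}
```

Sending the aggregated name set matters because, in the state-of-the-world variant of xDS, the names in each request replace the previous subscription for that resource type rather than adding to it.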

Pitfalls

When Dubbo sends a request to Istio for the first time, the getResource() method is invoked to query the data in the cache pool. If the data is missing, Dubbo will aggregate resources before requesting data from Istio. Then, Istio will return the corresponding result to Dubbo. We have two implementation solutions for processing responses from Istio:

Both solutions are feasible. The main difference is whether callers need to be aware of getResource when invoking onNext to send data to Istio. On balance, solution 2 is selected. After Dubbo establishes a connection with Istio, Istio pushes its watched resource list to Dubbo. Dubbo parses the response, splits the data by monitoring application, refreshes the data in the local cache pool, and sends an ACK response to Istio. The process is listed below:
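As a rough illustration of the final ACK step: in the xDS protocol, a client acknowledges a response by sending a new request that echoes the response's version and nonce, and rejects it (NACK) by echoing the nonce while keeping the last accepted version and attaching an error. The classes below are simplified, hypothetical stand-ins for the DiscoveryResponse and DiscoveryRequest messages, not the generated Envoy classes that Dubbo uses.

```java
import java.util.Set;

/** Simplified, hypothetical stand-in for an xDS DiscoveryResponse. */
final class SimpleResponse {
    final String typeUrl;
    final String versionInfo;
    final String nonce;

    SimpleResponse(String typeUrl, String versionInfo, String nonce) {
        this.typeUrl = typeUrl;
        this.versionInfo = versionInfo;
        this.nonce = nonce;
    }
}

/** Simplified, hypothetical stand-in for an xDS DiscoveryRequest. */
final class SimpleRequest {
    final String typeUrl;
    final String versionInfo;
    final String responseNonce;
    final Set<String> resourceNames;
    final String errorDetail; // null for an ACK

    SimpleRequest(String typeUrl, String versionInfo, String responseNonce,
                  Set<String> resourceNames, String errorDetail) {
        this.typeUrl = typeUrl;
        this.versionInfo = versionInfo;
        this.responseNonce = responseNonce;
        this.resourceNames = resourceNames;
        this.errorDetail = errorDetail;
    }
}

/** Builds ACK and NACK requests while tracking the last accepted version. */
final class AckBuilder {
    private String lastAcceptedVersion = "";

    /** ACK: echo the version and nonce of the response that was applied. */
    SimpleRequest ack(SimpleResponse response, Set<String> watched) {
        lastAcceptedVersion = response.versionInfo;
        return new SimpleRequest(response.typeUrl, response.versionInfo, response.nonce, watched, null);
    }

    /** NACK: echo the nonce, keep the last accepted version, and attach an error. */
    SimpleRequest nack(SimpleResponse response, Set<String> watched, String error) {
        return new SimpleRequest(response.typeUrl, lastAcceptedVersion, response.nonce, watched, error);
    }
}
```

In this sketch the watched resource names are passed in explicitly; how the real request is populated is up to the implementation.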


Some of the key code is listed below:

```java
public class ResponseObserver implements XXX {
    ...
    public void onNext(DiscoveryResponse value) {
        // Receive data from Istio and split it into individual resources.
        Map<String, T> newResult = decodeDiscoveryResponse(value);
        // Data currently in the local cache pool.
        Map<String, T> oldResource = resourcesMap;
        // Notify the listeners, then refresh the cache pool data.
        discoveryResponseListener(oldResource, newResult);
        resourcesMap = newResult;
        // Send the ACK to Istio.
        requestObserver.onNext(buildDiscoveryRequest(Collections.emptySet(), value));
    }
    ...
    public void discoveryResponseListener(Map<String, T> oldResult,
                                          Map<String, T> newResult) {
        // Compare the new data with the resources in the cache pool and
        // pick out the resources that are not present in both.
        ...
        for (Map.Entry<Set<String>, List<Consumer<Map<String, T>>>> entry : consumerObserveMap.entrySet()) {
            // Skip this entry if the local cache pool does not contain it.
            ...
            // Aggregate the resources this listener subscribed to.
            // Collectors.toMap rejects null values, so names missing from newResult are filtered out.
            Map<String, T> dsResultMap = entry.getKey()
                    .stream()
                    .filter(newResult::containsKey)
                    .collect(Collectors.toMap(k -> k, k -> newResult.get(k)));
            // Push the refreshed data to the listeners.
            entry.getValue().forEach(o -> o.accept(dsResultMap));
        }
    }
}

// Implemented separately by LDS, RDS, and EDS.
protected abstract Map<String, T> decodeDiscoveryResponse(DiscoveryResponse response);
```

Pitfalls

Concurrency conflicts may occur in the listener consumerObserveMap and the cache pool resourcesMap. For resourcesMap, since the put operation is concentrated in the getResource() method, we can use a pessimistic lock to lock the corresponding resources to avoid concurrent monitoring of resources.

There are put, remove, and traverse operations on consumerObserveMap. A read-write lock avoids conflicts among them: the traverse operation takes the read lock, and the put and remove operations take the write lock. In summary, resourcesMap uses a pessimistic lock to avoid concurrency conflicts. The consumerObserveMap involves the following operation scenarios:
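The locking scheme for these operations can be sketched with the JDK's `ReentrantReadWriteLock`. `LockedObserverMap` and its method names are hypothetical and only illustrate the read-lock and write-lock split described above.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.locks.ReentrantReadWriteLock;
import java.util.function.Consumer;

/** Hypothetical sketch: a listener map guarded by a read-write lock. */
class LockedObserverMap<T> {
    private final Map<Set<String>, List<Consumer<Map<String, T>>>> consumerObserveMap = new HashMap<>();
    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();

    /** put: takes the write lock because it mutates the map. */
    void put(Set<String> names, Consumer<Map<String, T>> consumer) {
        lock.writeLock().lock();
        try {
            consumerObserveMap.computeIfAbsent(Set.copyOf(names), k -> new ArrayList<>()).add(consumer);
        } finally {
            lock.writeLock().unlock();
        }
    }

    /** remove: takes the write lock because it mutates the map. */
    void remove(Set<String> names) {
        lock.writeLock().lock();
        try {
            consumerObserveMap.remove(names);
        } finally {
            lock.writeLock().unlock();
        }
    }

    /** traverse: takes the read lock, so several traversals may run in parallel. */
    void traverse(Map<String, T> data) {
        lock.readLock().lock();
        try {
            consumerObserveMap.values().forEach(list -> list.forEach(c -> c.accept(data)));
        } finally {
            lock.readLock().unlock();
        }
    }
}
```

One caveat of this design: `ReentrantReadWriteLock` does not support upgrading a read lock to a write lock, so a consumer that calls `put` or `remove` from inside `traverse` on the same thread would block forever.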

Pitfalls

If a disconnection occurs, we only need a scheduled task that periodically contacts Istio and tries to obtain a certificate. If the certificate is obtained, the connection to Istio is considered restored. Dubbo then aggregates the local resources to request data from Istio, parses the response, refreshes the local cache pool data, and cancels the scheduled task.
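A minimal sketch of this reconnect loop follows. `ReconnectTask`, the `certProbe` supplier (returns true once a certificate can be obtained), and the `resubscribe` callback (re-sends the aggregated local resources) are hypothetical names. Shutting down the scheduler is one simple way to disable the task and is an assumption, not necessarily what Dubbo does.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.BooleanSupplier;

/** Hypothetical sketch: retries until a certificate is obtained, then resubscribes once. */
class ReconnectTask implements AutoCloseable {
    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    private final BooleanSupplier certProbe; // true once a certificate can be obtained
    private final Runnable resubscribe;      // re-sends the aggregated local resources

    ReconnectTask(BooleanSupplier certProbe, Runnable resubscribe) {
        this.certProbe = certProbe;
        this.resubscribe = resubscribe;
    }

    /** One attempt; returns true when the connection is considered restored. */
    boolean attempt() {
        if (!certProbe.getAsBoolean()) {
            return false; // still disconnected, the next scheduled round retries
        }
        resubscribe.run();
        // Shutting down cancels the periodic task, so no further attempts run.
        scheduler.shutdown();
        return true;
    }

    /** Starts retrying with the given delay between attempts. */
    void start(long periodMillis) {
        scheduler.scheduleWithFixedDelay(this::attempt, 0, periodMillis, TimeUnit.MILLISECONDS);
    }

    @Override
    public void close() {
        scheduler.shutdownNow();
    }
}
```

Because the scheduler is shut down after success, each disconnection would use a fresh `ReconnectTask` instance.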

Pitfalls

During this rework, the author was often at a loss and struggled to locate bugs. In addition to the pitfalls mentioned above, other pitfalls include (but are not limited to):

Proxyless Service Mesh has clear advantages and broad market prospects. Dubbo has provided Proxyless Service Mesh capabilities since the 3.1.0 release. In the future, the Dubbo community will work closely with business users to solve more pain points in real production environments and improve its service mesh capabilities.
