The COVID-19 pandemic has reshaped the digital lifestyle. Amid today's aggressive push for digital transformation and efficiency, Serverless is poised to become the new computing paradigm for the cloud, freeing developers from heavy manual resource management and performance optimization and sparking a new revolution in cloud productivity.

However, Serverless is often tricky to implement. Migrating legacy projects to Serverless without interrupting the business, providing complete development, debugging, and diagnostic tools for Serverless architectures, and actually using Serverless to cut costs are all persistent challenges. This is especially true when Serverless is implemented at scale in core scenarios. As a result, enterprises need best practices for the large-scale application of Serverless in core scenarios.

With a total transaction volume of 498.2 billion yuan (US$74.1 billion) and a peak order creation rate of 583,000 orders per second, Tmall set another record during the 2020 Double 11 Global Shopping Festival. During the same event, Alibaba Cloud also achieved one of the first large-scale implementations of Serverless in core business scenarios in China, withstanding the world's largest traffic flood and setting a milestone for Serverless adoption.

Elastic scaling is a natural attribute of Serverless, but it depends on extremely fast cold starts. In non-core business scenarios, a few milliseconds of extra latency has almost no effect on the business. In core business scenarios, however, a latency of more than 500 milliseconds can hurt the user experience. Serverless uses lightweight virtualization technologies to keep reducing cold start time (to under 200 milliseconds in some scenarios). However, that figure applies only to an idealized, standalone scenario. In core business scenarios, users not only run their own business logic but also depend on back-end services such as middleware, databases, and storage. Connections to these services must be established when the instance starts, which inevitably lengthens the cold start, often into the range of seconds. For core online business scenarios, a cold start measured in seconds is unacceptable.

Serverless lets developers focus on business code, written as functions, and launch that code on the cloud easily and quickly. In reality, however, the requirements of core businesses pull developers back down from the cloud, and they have to face questions such as: How do we test? How do we roll out with grayscale releases? How do we handle business disaster recovery? How do we control permissions? After working through these questions, developers often become discouraged, realizing that getting a function to run normally is only a small part of taking a core business live, and that the road to go-live is still as long as the Yangtze River.

Core online businesses are not run by isolated, independent functions. They need to connect to storage, middleware, and back-end data services, fetch data, compute on it, and return the results to the user. A traditional middleware client must connect to the network and go through a series of initialization steps, such as establishing connections, which often slows function startup. In Serverless scenarios, instances are short-lived and numerous, which leads to frequent connection setup and a large total number of connections. Therefore, to migrate core applications to Serverless, it is essential to optimize the network connectivity of clients for the middleware commonly used by online applications, and to monitor call-chain data so that SREs (Site Reliability Engineers) can better evaluate each function's downstream middleware dependencies.
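One widely used way to soften the connection cost described above is to create a middleware client once per instance, outside the request handler, and reuse it on every warm invocation. The Python sketch below illustrates that pattern under stated assumptions: `handler(event, context)` only mimics a typical FaaS entry point, and `FakeConnection` and the `db://example` address are hypothetical stand-ins for a real middleware or database client. It is not Function Compute's own API.

```python
import time


class FakeConnection:
    """Hypothetical stand-in for a middleware/database client with slow setup."""

    opened = 0  # counts how many connections were actually established

    def __init__(self, dsn: str) -> None:
        time.sleep(0.01)  # simulate the handshake/auth cost paid at connect time
        FakeConnection.opened += 1
        self.dsn = dsn

    def query(self, key: str) -> str:
        return f"value-for-{key}"


# Module-level cache: it lives as long as the function instance, so warm
# invocations on the same instance reuse the connection instead of rebuilding it.
_conn = None


def get_connection() -> FakeConnection:
    global _conn
    if _conn is None:  # only the first (cold) invocation pays the setup cost
        _conn = FakeConnection("db://example")  # hypothetical address
    return _conn


def handler(event: dict, context=None) -> str:
    """Hypothetical FaaS entry point; the signature is an assumption."""
    return get_connection().query(event["key"])
```

Only the first call on an instance pays the setup cost; later calls on the same instance reuse `_conn`. A production version would also need reconnect-on-failure logic, because the cached client may sit idle between invocations.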

Most users' core business applications adopt a microservices architecture, so many users approach the problems of core business applications from a microservices perspective. For example, users need to inspect the indicators of the business system in great detail, checking not only business indicators but also the resource indicators of the system the business runs on. However, there is no concept of machine resources in a Serverless scenario, so how can these metrics be exposed? Is exposing only the request error rate and concurrency enough to meet the needs of the business side? Business needs go far beyond that. Ultimately, observability determines whether the business trusts your technology platform, and good observability is an important prerequisite for winning users' trust.

When an online problem occurs in a core business, it must be investigated immediately. The first step of an investigation is to preserve the scene and then log in to debug. However, since there is no concept of a machine in a Serverless scenario, logging in to a machine is very difficult with existing Serverless technology. This is not the only obstacle: there are also concerns about vendor lock-in.

The pain points above mainly concern the developer experience. Most core application developers are still on the fence about Serverless, and there is no shortage of skepticism that "FaaS is only suitable for small business scenarios and non-core business scenarios."

The consulting firm O'Reilly released a Serverless usage survey in December 2019 showing that 40% of respondents' organizations had already adopted Serverless. In October 2020, the China Academy of Information and Communications Technology (CAICT) released the "China Cloud-Native User Research Report," which stated, "Serverless technology is growing significantly in numbers, with nearly 30% of users already using it in production environments." In 2020, more enterprises chose to join the Serverless camp and were eagerly awaiting more cases of Serverless scaling to core scenarios.

Faced with the steady growth in the number of Serverless developers, Alibaba set the strategy "Build a Serverless Double 11" at the beginning of the year. The aim was not simply to withstand traffic and hit peaks, but to effectively reduce costs, improve resource utilization, and build Alibaba Cloud Serverless into a more secure, stable, and friendly cloud product that helps users realize greater business value.

Unlike the previous 11 Double 11 festivals, this year Alibaba went fully cloud-native on its digital-native business operating system, building on last year's migration of the Tmall Double 11 core systems to the cloud. The underlying hard-core technology upgrade brought surging power and extreme performance, and this was the first time Serverless was implemented in the core scenarios of Double 11.

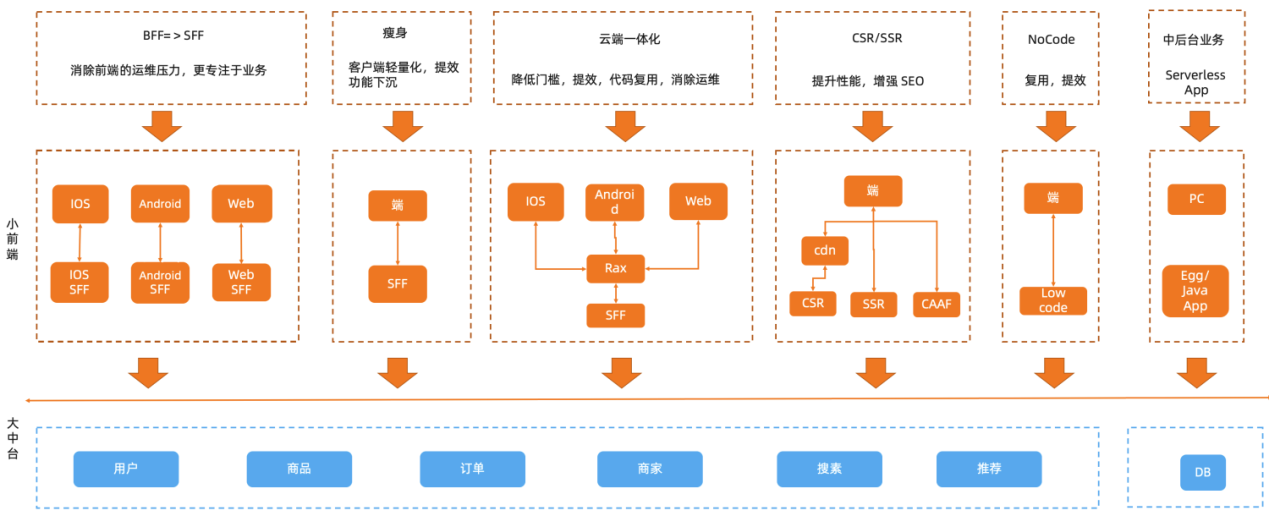

For the 2020 Double 11 Global Shopping Festival, Alibaba Group's frontend fully embraced cloud-native Serverless: a dozen BUs, including Taobao, Fliggy, AutoNavi, CBU, ICBU, Youku, and Kaola, worked with Alibaba Cloud to implement a cloud-based integrated R&D model built around a Node.js FaaS online service architecture. While ensuring stability and high resource utilization, this upgraded R&D model was applied to the key marketing and shopping scenarios of multiple BUs during this year's Double 11. The cloud-based all-in-one R&D model supported by frontend FaaS delivered an average efficiency improvement of 38.89%. Relying on the convenience and reliability of Serverless, Taobao, Tmall, Fliggy, and other Double 11 venue pages quickly adopted server-side rendering (SSR) to improve the user page experience. Beyond supporting the promotion itself, day-to-day elastic scaling also reduced computing costs by 30% compared with previous years.

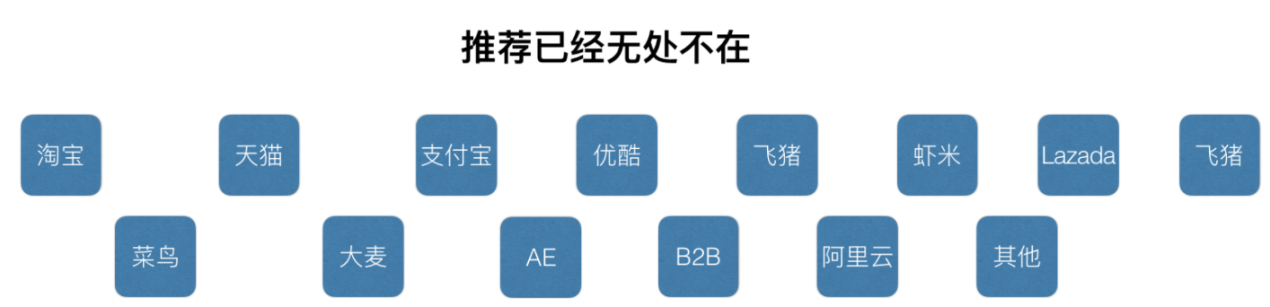

The elastic scaling capability of Serverless is the main reason it was chosen for the personalized recommendation business scenario. Operating and maintaining thousands of heterogeneous applications has long been a pain point in this scenario. By offloading operations and maintenance to Serverless, developers can focus on algorithm innovation for the business. The scenario now covers almost all of Alibaba's apps, including Taobao, Tmall, Alipay, Youku, and Fliggy, which leaves more room to optimize machine resource utilization. Through intelligent scheduling, machine resource utilization reached 60% at peak time.

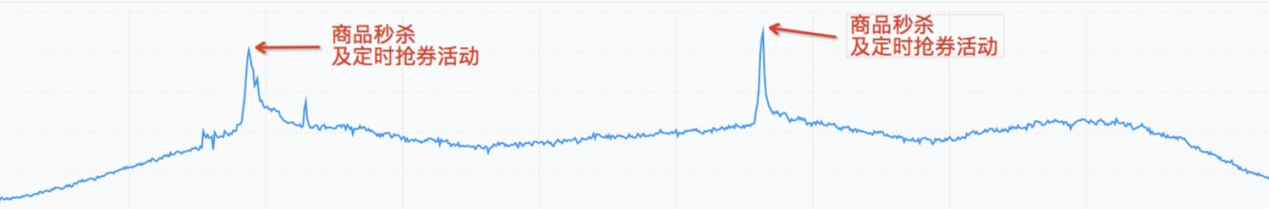

In 2020, Century Lianhua ran its Double 11 operations on the elastic scaling of Alibaba Cloud Function Compute (FC), applying it to scenarios such as SSR for promotion venue pages, online flash sales, coupon grabbing, industry shopping guides, and data center computing. Peak business QPS increased by more than 230% compared with the 2019 Double 11 Global Shopping Festival, R&D and delivery efficiency increased by more than 30%, and elastic resource costs fell by more than 40%.

Many more Serverless scenarios remain to be explored by developers in other industries. Overall, FaaS delivered a very impressive report card this year, not only taking over part of the core business during the Double 11 Global Shopping Festival but also setting new traffic records and helping the business handle traffic floods of millions of QPS.

So how did Alibaba Cloud overcome the pain points of implementing Serverless in the industry?

In a major Serverless 2.0 upgrade in 2019, Alibaba Cloud Function Compute (FC) was the first to support reserved mode.

Why did Alibaba Cloud take the lead on this? The Alibaba Cloud Serverless Team kept exploring the needs of real businesses. The pay-as-you-go model, while very attractive, suffered from long cold starts that shut out core online businesses. Alibaba Cloud therefore analyzed what core online businesses demand: low latency and guaranteed resource elasticity. How could both be delivered?

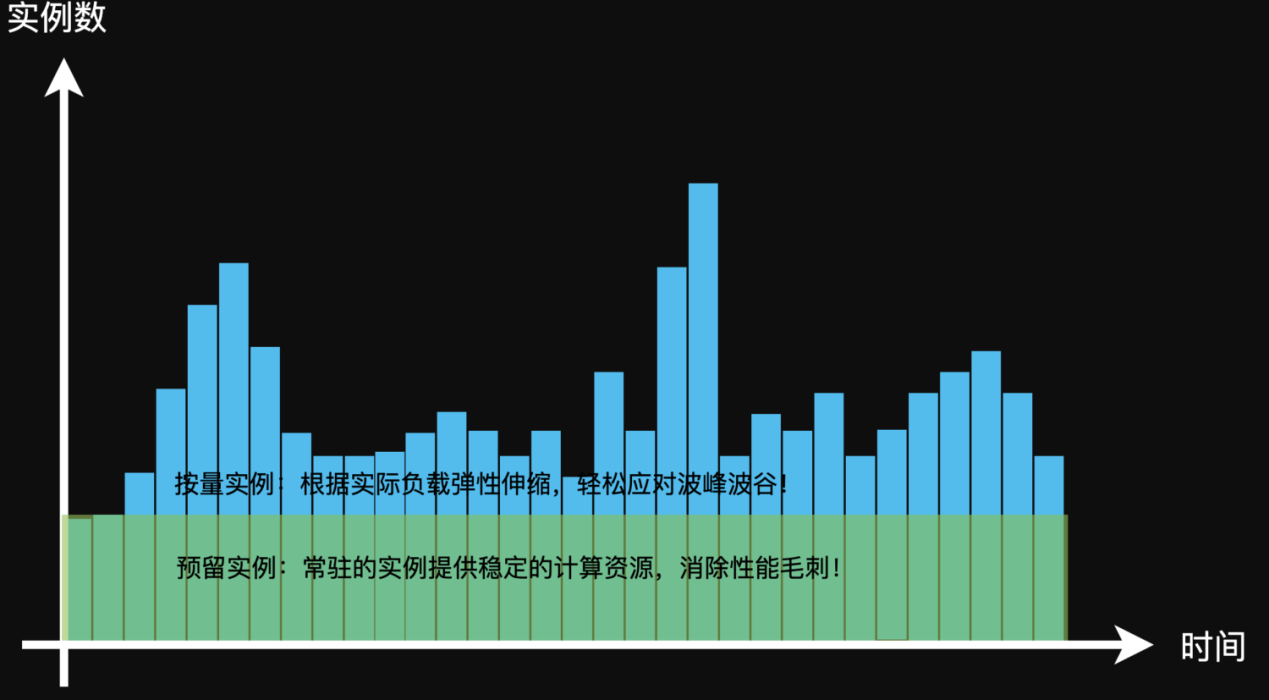

The figure above shows a very typical business traffic curve. Reserved mode covers the fixed baseline at the bottom, while elastic scaling covers bursts. Two methods are available for scaling out burst resources: scale-out by resource metrics and scale-out by request concurrency. For example, you can set the CPU scale-out threshold to 60%. When an instance's CPU usage reaches the threshold, scale-out is triggered. New requests are not routed to the new instance immediately; they are sent to it only once it is ready, which avoids a cold start. Likewise, if you set only a concurrency threshold (for example, 30 concurrent requests per instance), scale-out is triggered in the same way when that condition is met. If both metrics are set, scale-out is triggered by whichever condition is met first.
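The scale-out rules in the paragraph above can be sketched in Python. This is an illustration under assumptions, not Function Compute's actual scheduler: the CPU rule uses the proportional target-tracking formula popularized by the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × utilization / target)), which is a swapped-in technique, and `ScalingPolicy`, `desired_instances`, `Instance`, and `routable` are hypothetical names. The 60% CPU and 30-requests-per-instance values come from the example above.

```python
from dataclasses import dataclass
from typing import List, Optional


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


@dataclass
class ScalingPolicy:
    reserved: int                             # always-warm baseline (reserved mode)
    cpu_target_pct: Optional[int] = None      # e.g. 60 -> keep average CPU near 60%
    concurrency_target: Optional[int] = None  # e.g. 30 concurrent requests per instance


def desired_instances(policy: ScalingPolicy, current: int,
                      avg_cpu_pct: int, in_flight: int) -> int:
    """How many instances should exist; never fewer than the reserved baseline."""
    candidates = [policy.reserved]
    if policy.cpu_target_pct is not None:
        # Assumed HPA-style target tracking: grow in proportion to the CPU overshoot.
        candidates.append(ceil_div(current * avg_cpu_pct, policy.cpu_target_pct))
    if policy.concurrency_target is not None:
        # Enough instances that none exceeds its per-instance concurrency target.
        candidates.append(ceil_div(in_flight, policy.concurrency_target))
    # The max means whichever metric crosses its threshold first drives scale-out.
    return max(candidates)


@dataclass
class Instance:
    name: str
    ready: bool  # False while the runtime and its connections are still starting


def routable(instances: List[Instance]) -> List[str]:
    """Route only to ready instances, so no request waits on a cold start."""
    return [i.name for i in instances if i.ready]
```

Utilization is kept in integer percent and rounded up with integer division, so the instance count is free of floating-point surprises. Taking the maximum of the per-metric targets is what lets whichever condition is met first trigger scale-out, and the reserved count acts as a floor that is never scaled away.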

With these scaling methods, Alibaba Cloud Function Compute solves the Serverless cold start problem and can support latency-sensitive businesses.

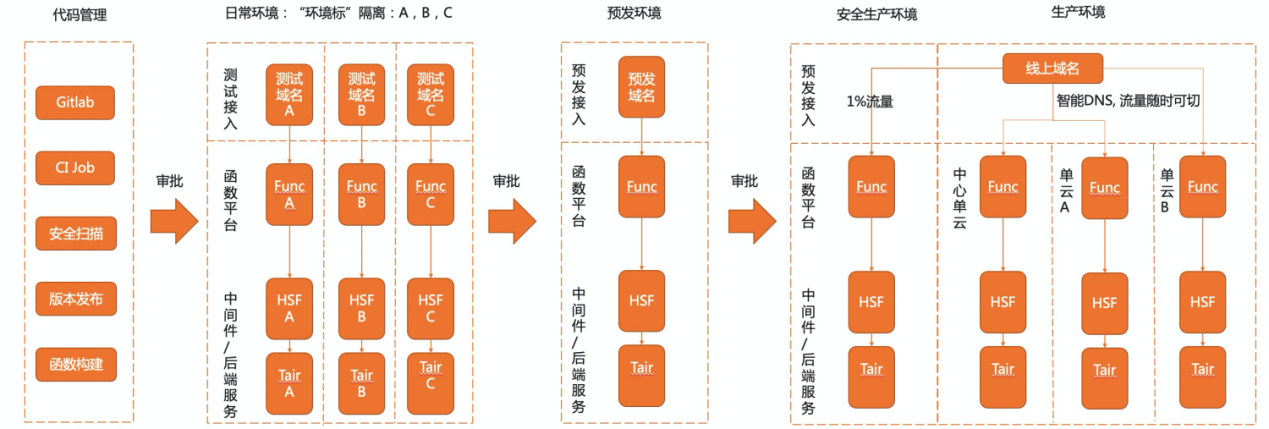

"Increased efficiency" is supposed to be the advantage of Serverless, but for core applications. "Fast" means "risky," and users need to go through several processes, such as CI testing, daily testing, pre-release testing, and grayscale deployment, to ensure the quality of functions. These processes are the stumbling blocks that prevent core applications from using FaaS. To address this problem, Alibaba Cloud Function Compute's strategy is to be integrated, combining the advantages of the R&D platform with Alibaba Cloud Function Compute. This is designed to meet the CI/CD process of users, but also enjoy the dividends of Serverless, helping users to cross the gap of using FaaS. The Alibaba Group exposed the standard OpenAPI and each core application of the R&D platform for integration. After the Double 11 business R&D verification, R&D efficiency greatly improved by 38.89%. On the public cloud, we integrated with the cloud efficiency platform to combine the R&D process with FaaS to help businesses outside the group and improve human efficiency.

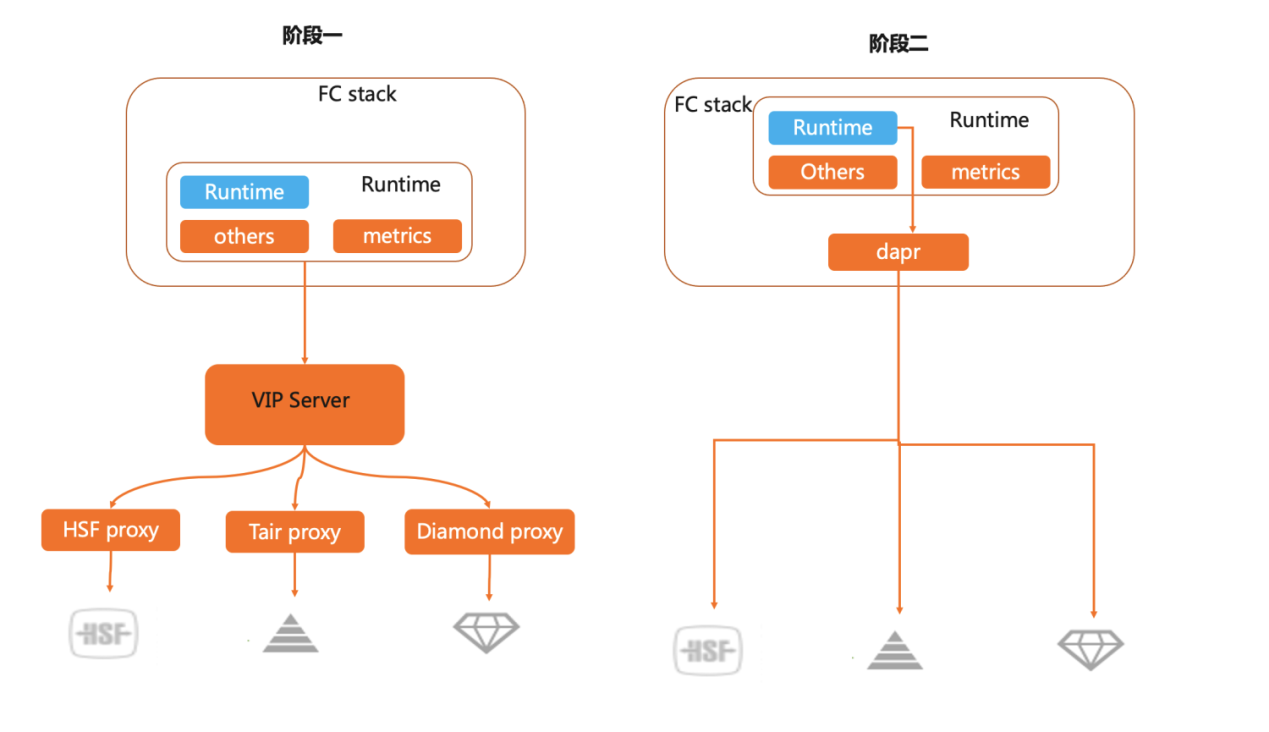

Once a core application uses Function Compute, how does it work with middleware? Traditional application development bundles various middleware SDKs into the package that goes online, but for Serverless functions, code package size is a critical weakness because it directly affects cold start time. Alibaba Cloud Function Compute addressed this in two stages. In the first stage, it built a middleware proxy that opens up access to the middleware: the function talks to the proxy over a single protocol, so the middleware SDK no longer needs to be bundled into the function package. In the second stage, middleware capabilities sank further into the platform, and control-type requirements such as command delivery, traffic management, and configuration pulling came onto the agenda. During this period, Alibaba Cloud embraced the open source component Dapr, using a sidecar to offload the cost of middleware interaction. These solutions are built on Function Compute's Custom Runtime and Custom Container features.
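To make the sidecar idea concrete, the Python sketch below builds requests against Dapr's public HTTP state API, so the function needs only the standard library's HTTP client instead of a bundled middleware SDK. It is a hedged illustration, not the Function Compute integration itself: it assumes a Dapr sidecar running next to the function on `localhost`, a state store component named `statestore` (a hypothetical name), and Dapr's default HTTP port 3500 unless `DAPR_HTTP_PORT` is set.

```python
import json
import os
import urllib.request

# Dapr exposes its HTTP port to the app via DAPR_HTTP_PORT; 3500 is Dapr's default.
DAPR_PORT = os.environ.get("DAPR_HTTP_PORT", "3500")
BASE = f"http://localhost:{DAPR_PORT}/v1.0"


def save_state_request(store: str, key: str, value) -> urllib.request.Request:
    """Build a request that saves one key/value pair through the sidecar."""
    body = json.dumps([{"key": key, "value": value}]).encode("utf-8")
    return urllib.request.Request(
        f"{BASE}/state/{store}",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def get_state_request(store: str, key: str) -> urllib.request.Request:
    """Build a request that reads one key through the sidecar."""
    return urllib.request.Request(f"{BASE}/state/{store}/{key}", method="GET")


def send(req: urllib.request.Request):
    """Perform the HTTP round trip; an empty body (e.g. 204) yields None."""
    with urllib.request.urlopen(req, timeout=2) as resp:
        raw = resp.read()
    return json.loads(raw) if raw else None
```

Calling `send(save_state_request("statestore", "order-1", {"qty": 2}))` performs the actual round trip to the sidecar; splitting request construction from sending is a design choice of this sketch that keeps it testable. Whatever store sits behind the sidecar (Redis, a database, and so on) is configured in Dapr rather than in the function code.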

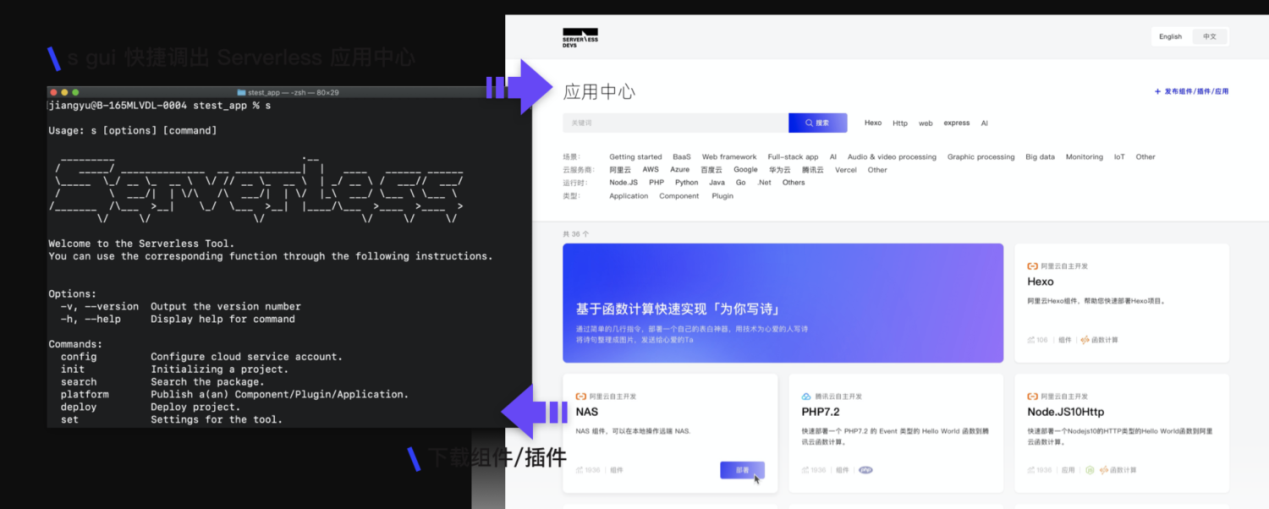

Remote debugging, log viewing, tracing, resource utilization monitoring, and a complete peripheral toolchain are essential for developers. Alibaba Cloud Function Compute worked on these areas in parallel. It integrates with Tracing/ARMS to provide clear end-to-end tracing, and with SLS to provide comprehensive business data monitoring that the business can customize as needed. It also exposes resource utilization through the open source Prometheus and supports remote debugging through WebIDE. In addition, Serverless-devs, a new open source project from Alibaba Cloud, is a vendor-neutral developer tool that helps developers double their development and O&M efficiency under the Serverless architecture. With it, you can quickly create, develop, test, publish, and deploy applications within a project, achieving end-to-end project management.

Serverless had many early pain points, so how is Alibaba Cloud able to offer Serverless solutions to all industries?

First, Alibaba Cloud provides the most complete Serverless product matrix among cloud vendors, including Function Compute, Serverless App Engine (SAE), Serverless Kubernetes (ASK) for container orchestration, and Elastic Container Instance (ECI) for container instances. For example, for event-triggered scenarios, Function Compute provides rich event source integration and extreme elasticity, scaling within 100 milliseconds. For microservice applications, SAE requires zero code changes, allowing microservices to enjoy the benefits of Serverless.

Second, Serverless is a fast-growing field, and Alibaba Cloud keeps expanding the boundaries of its Serverless products. For example, Function Compute supports container images, prepaid mode, concurrent execution of multiple requests within a single instance, and other industry-first features that solve Serverless challenges such as latency spikes caused by cold starts, greatly expanding the scenarios where Function Compute can be applied.

Finally, Alibaba's rich variety of business scenarios helps further refine Serverless in practice. This year, the core business scenarios of Alibaba, Kaola, Fliggy, and AutoNavi all used Alibaba Cloud Function Compute and successfully handled the peak of the Double 11 Global Shopping Festival.

"The greatest radicalization of the productivity of labor, and the greater skill, skill and judgment in the use of labor, seem to be the result of the division of labor." This is a quote from "The Wealth of Nations," emphasizing the stakes of "division of labor."

The same principle applies to the software industry. As traditional industries have entered the Internet era, the market has grown larger and the division of labor finer. The era of physical machine hosting has passed: it was replaced by the mature IaaS layer, followed by container services, which are now the industry standard. What will the next decade of technology look like? The answer is Serverless, which makes up for R&D personnel's lack of experience in resource budgeting and operations and maintenance (O&M). When facing a business traffic flood, most developers can handle it with ease, which greatly lowers the technical barrier to R&D. At the same time, R&D efficiency improves, and tools for online alerting, traffic observation, and more are readily available, making O&M-free R&D easy to achieve. Serverless represents a finer-grained division of labor: business developers no longer need to focus on the underlying O&M and can concentrate on business innovation instead, greatly improving labor productivity. This is the "Smith's theorem" effect, and it is the underlying reason Serverless will become an inevitable trend.

Today, the entire cloud product system is moving toward Serverless, with more than 70% of products already offered in Serverless form. Serverless products such as Object Storage, messaging middleware, API Gateway, and table storage are already well known to developers. Over the next decade, Serverless will redefine the programming model of the cloud and reshape the way enterprises innovate.
